凯斯西储(CWRU)数据集解读并将数据集划分为10分类(含代码)

凯斯西储大学轴承故障数据集官方网址:https://engineering.case.edu/bearingdatacenter/download-data-file

官方数据集整理版(不用挨个下了):

链接:https://pan.baidu.com/s/1RAmPFmxdsLhs_hhMMnmklA 提取码:fr5r

处理好的十分类数据集(含源文件与代码):

链接:https://pan.baidu.com/s/1_3C7e2tAb_bQbl4FcV0EMQ 提取码:7tna

十分类数据集文件说明:

1)100、108~等十个数据文件是从官方的数据集摘出来的

2)之后用matlab(classes10code.m)处理为层c10signals.mat文件

3) 再用python(datasetSample.py)将其处理为十分类数据c10classes.mat

c10classes.mat文件中包含打乱顺序的训练集(900×2048)、测试集(300×2048)

训练集(900×2048): 900个样本,每个样本包含2048个数据点。

4) 十分类数据集所含内容为如下图片:

凯斯西储数据集解读:

数据格式:轴承故障数据文件为Matlab格式

每个文件包含风扇和驱动端振动数据,以及电机转速,文件中文件变量命名如下:

DE - drive end accelerometer data 驱动端振动数据

FE - fan end accelerometer data 风扇端振动数据

BA - base accelerometer data 基座振动数据

time - time series data 时间序列数据

RPM- rpm during testing 单位转每分钟 除以60则为转频

数据采集频率分别为:数据集A:在12Khz采样频率下的驱动端轴承故障数据

数据集B:在48Khz采样频率下的驱动端轴承故障数据

数据集C:在12Khz采样频率下的风扇端轴承故障数据

数据集D:以及正常的轴承数据(采样频率应该是48k的)

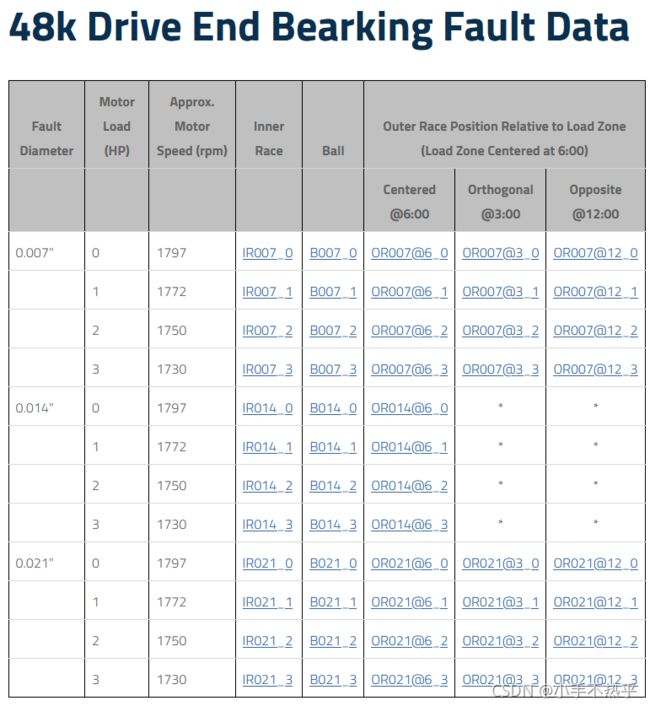

数据集B解读:在48Khz采样频率下的驱动端轴承故障直径又分为0.007英寸、0.014英寸、0.028英寸三种类别,每种故障下负载又分为0马力、1马力、2马力、3马力。在每种故障的每种马力下有轴承内圈故障、轴承滚动体故障、轴承外环故障(由于轴承外环位置一般比较固定,因此外环故障又分为3点钟、6点钟和12点钟三种类别)。

在48Khz采样频率下的驱动端轴承故障数据如图:

注:每个文件打开后,如IR007_1文件(48Khz的驱动端内环轴承故障数据下)打开后还会包含 BA:基座;DE:驱动端,FE:风扇端,RPM:转速四个数据,个人理解应该是在 驱动端轴承故障的情况下 包含BA,DE,FE三个不同位置传感器采集到的数据。

matlab代码:

clc;

clear all;

close all;

drive_100 = load('100.mat');

drive_108 = load('108.mat');

drive_121 = load('121.mat');

drive_133 = load('133.mat');

drive_172 = load('172.mat');

drive_188 = load('188.mat');

drive_200 = load('200.mat');

drive_212 = load('212.mat');

drive_225 = load('225.mat');

drive_237 = load('237.mat');

% de_100 = drive_100.X100_DE_time(1:121048);

de_100 = drive_100.X100_DE_time(1:4:484192);

de_108 = drive_108.X108_DE_time(1:121048);

de_121 = drive_121.X121_DE_time(1:121048);

de_133 = drive_133.X133_DE_time(1:121048);

de_172 = drive_172.X172_DE_time(1:121048);

de_188 = drive_188.X188_DE_time(1:121048);

de_200 = drive_200.X200_DE_time(1:121048);

de_212 = drive_212.X212_DE_time(1:121048);

de_225 = drive_225.X225_DE_time(1:121048);

de_237 = drive_237.X237_DE_time(1:121048);

de_signals = [de_100,de_108,de_121,de_133,de_172,de_188,de_200,de_212,de_225,de_237];

signals = de_signals.';

save('c10signals.mat','signals');

whos('-file','c10signals.mat')python:

import numpy as np

import scipy.io as scio

from random import shuffle

def normalize(data):

'''(0,1)normalization

:param data : the object which is a 1*2048 vector to be normalized

'''

s= (data-min(data)) / (max(data)-min(data))

return s

def cut_samples(org_signals):

''' get original signals to 10*120*2048 samples, meanwhile normalize these samples

:param org_signals :a 10* 121048 matrix of ten original signals

'''

results=np.zeros(shape=(10,120,2048))

temporary_s=np.zeros(shape=(120,2048))

for i in range(10):

s=org_signals[i]

for x in range(120):

temporary_s[x]=s[1000*x:2048+1000*x]

temporary_s[x]=normalize(temporary_s[x]) #顺道对每个样本归一化

results[i]=temporary_s

return results

def make_datasets(org_samples):

'''输入10*120*2048的原始样本,输出带标签的训练集(占75%)和测试集(占25%)'''

train_x=np.zeros(shape=(10,90,2048))

train_y=np.zeros(shape=(10,90,10))

test_x=np.zeros(shape=(10,30,2048))

test_y=np.zeros(shape=(10,30,10))

for i in range(10):

s=org_samples[i]

# 打乱顺序

index_s = [a for a in range(len(s))]

shuffle(index_s)

s=s[index_s]

# 对每种类型都划分训练集和测试集

train_x[i]=s[:90]

test_x[i]=s[90:120]

# 填写标签

label = np.zeros(shape=(10,))

label[i] = 1

train_y[i, :] = label

test_y[i, :] = label

#将十种类型的训练集和测试集分别合并并打乱

x1 = train_x[0]

y1 = train_y[0]

x2 = test_x[0]

y2 = test_y[0]

for i in range(9):

x1 = np.row_stack((x1, train_x[i + 1]))

x2 = np.row_stack((x2, test_x[i + 1]))

y1 = np.row_stack((y1, train_y[i + 1]))

y2 = np.row_stack((y2, test_y[i + 1]))

index_x1= [i for i in range(len(x1))]

index_x2= [i for i in range(len(x2))]

shuffle(index_x1)

shuffle(index_x2)

x1=x1[index_x1]

y1=y1[index_x1]

x2=x2[index_x2]

y2=y2[index_x2]

return x1, y1, x2, y2 #分别代表:训练集样本,训练集标签,测试集样本,测试集标签

def get_timesteps(samples):

''' get timesteps of train_x and test_X to 10*120*31*128

:param samples : a matrix need cut to 31*128

'''

s1 = np.zeros(shape=(31, 128))

s2 = np.zeros(shape=(len(samples), 31, 128))

for i in range(len(samples)):

sample = samples[i]

for a in range(31):

s1[a]= sample[64*a:128+64*a]

s2[i]=s1

return s2

# 读取原始数据,处理后保存

dataFile= 'G://study of machine learing//deep learning//LSTM//datasets//十个原始信号.mat'

data=scio.loadmat(dataFile)

org_signals=data['signals']

org_samples=cut_samples(org_signals)

train_x, train_y, test_x, test_y=make_datasets(org_samples)

train_x= get_timesteps(train_x)

test_x= get_timesteps(test_x)

saveFile = 'G://study of machine learing//deep learning//LSTM//datasets//datasets.mat'

scio.savemat(saveFile, {'train_x':train_x, 'train_y':train_y, 'test_x':test_x, 'test_y':test_y})

引用:

利用python整理凯斯西储大学(CWRU)轴承数据,制作数据集_Victor`Wu的博客-CSDN博客