1.8 Installing and Testing the Dahua Camera SDK

Each industrial camera vendor ships its own SDK, which you can download from the vendor's official website. I use a Dahua camera.

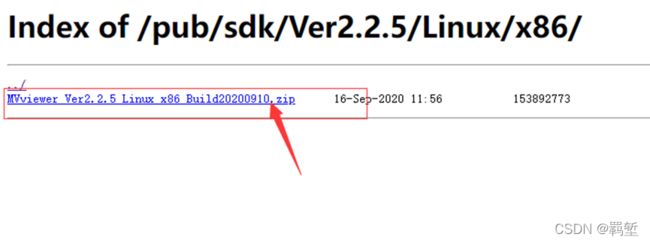

Downloading the SDK:

Official download site: http://download.huaraytech.com/pub/sdk/

I used version 2.2.5 for Linux x86.

Click the link on that page to download it.

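Installation:

Extract the .run file from the downloaded archive, make it executable, and run it to install the software.

Extract the archive: unzip [name]

Change the file permissions: chmod u+x [name] (this adds execute permission for the file's owner)

Run the installer to start the installation: sudo ./[name]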
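`chmod u+x` sets only the owner's execute bit and leaves the rest of the file mode unchanged. For reference, the same operation can be written with C++17 `std::filesystem`; this is a small sketch, not part of the Dahua SDK, and `makeOwnerExecutable` / `isOwnerExecutable` are hypothetical helper names.

```cpp
#include <filesystem>
#include <system_error>

namespace fs = std::filesystem;

// Hypothetical helper: equivalent of `chmod u+x <path>`.
// Adds the owner-execute bit and keeps every other permission bit as it was.
bool makeOwnerExecutable(const fs::path& file)
{
    std::error_code ec;
    fs::permissions(file, fs::perms::owner_exec, fs::perm_options::add, ec);
    return !ec;
}

// Returns true if the owner-execute bit is set on `file`.
bool isOwnerExecutable(const fs::path& file)
{
    std::error_code ec;
    const fs::file_status st = fs::status(file, ec);
    return !ec && (st.permissions() & fs::perms::owner_exec) != fs::perms::none;
}
```

Using `perm_options::add` rather than `replace` is what matches `u+x`; `replace` would behave like an absolute mode such as `chmod 700`.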

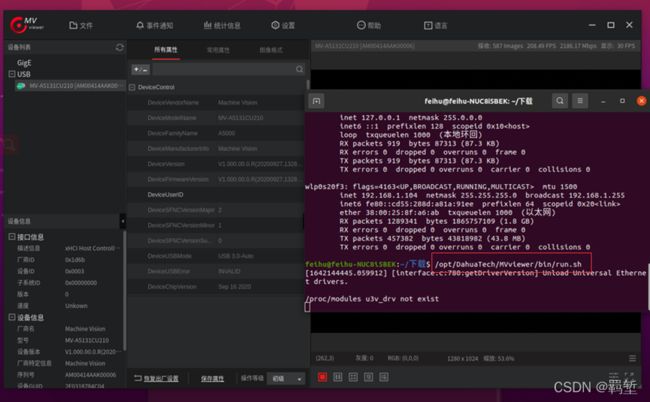

Launching the software: find its .sh script and run it from a terminal by entering the script's path.

Default installation directory: /opt/DahuaTech/MVviewer
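If MVviewer does not start, a first check is whether the installer produced the expected layout. The sketch below assumes the layout used in this article (`bin/run.sh` and `lib/` under `/opt/DahuaTech/MVviewer`); other SDK versions may lay things out differently, and `missingSdkEntries` is a hypothetical helper.

```cpp
#include <filesystem>
#include <string>
#include <system_error>
#include <vector>

namespace fs = std::filesystem;

// Hypothetical helper: returns the expected SDK entries that are missing under
// `root`. The expected entries are the ones this article relies on.
std::vector<std::string> missingSdkEntries(const fs::path& root = "/opt/DahuaTech/MVviewer")
{
    const std::vector<std::string> expected = {"bin/run.sh", "lib"};
    std::vector<std::string> missing;
    std::error_code ec;
    for (const std::string& rel : expected) {
        // exists() with an error_code returns false instead of throwing
        if (!fs::exists(root / rel, ec)) {
            missing.push_back(rel);
        }
    }
    return missing;
}
```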

Testing:

Launch the Dahua software:

/opt/DahuaTech/MVviewer/bin/run.sh

If MVviewer opens, the SDK is installed correctly.

Using the Dahua camera in Qt

Create a new Qt project.

Add the OpenCV headers and libraries:

Add the following to the .pro file:

```
INCLUDEPATH += /usr/local/include/ \
               /usr/local/include/opencv4/ \
               /usr/local/include/opencv4/opencv2

LIBS += /usr/local/lib/lib*
```

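Add the Dahua headers and shared libraries:

The SDK installed earlier is located at /opt/DahuaTech/MVviewer (in the file manager: Other Locations > Computer > /opt/DahuaTech/MVviewer).

Copy the include directory (headers) and the lib directory (shared libraries) from the Dahua directory into the Qt project folder.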

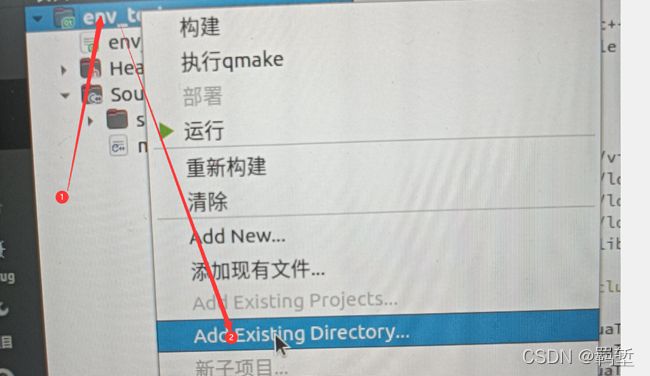

Add these two directories to the Qt project:

Configure the Dahua environment in the .pro file by adding the following:

```
# Headers copied into the project folder ($$PWD is the directory of the .pro file)
INCLUDEPATH += $$PWD/include

LIBS += -L/opt/DahuaTech/MVviewer/lib/ -lMVSDK
LIBS += -L/opt/DahuaTech/MVviewer/lib/ -lImageConvert
LIBS += -L/opt/DahuaTech/MVviewer/lib/ -lVideoRender
LIBS += -L/opt/DahuaTech/MVviewer/lib/GenICam/bin/Linux64_x64/ -lGCBase_gcc421_v3_0 -lGenApi_gcc421_v3_0 -lLog_gcc421_v3_0
LIBS += -L/opt/DahuaTech/MVviewer/lib/GenICam/bin/Linux64_x64/ -llog4cpp_gcc421_v3_0 -lNodeMapData_gcc421_v3_0 -lXmlParser_gcc421_v3_0
LIBS += -L/opt/DahuaTech/MVviewer/lib/GenICam/bin/Linux64_x64/ -lMathParser_gcc421_v3_0
```
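Each `-L<dir> -l<name>` pair tells the linker to look for `lib<name>.so` (or `lib<name>.a`) in `<dir>`, so an error such as `cannot find -lMVSDK` usually means the file is not where the .pro file says. A minimal sketch for checking this; `findMissingLibs` is a hypothetical helper and only looks for the shared-library (`.so`) form.

```cpp
#include <filesystem>
#include <string>
#include <system_error>
#include <utility>
#include <vector>

namespace fs = std::filesystem;

// Hypothetical helper: for each (directory, name) pair taken from a
// "-L<dir> -l<name>" entry, check whether <dir>/lib<name>.so exists.
// Returns the names whose shared library could not be found.
std::vector<std::string> findMissingLibs(
    const std::vector<std::pair<fs::path, std::string>>& libs)
{
    std::vector<std::string> missing;
    std::error_code ec;
    for (const auto& [dir, name] : libs) {
        const fs::path so = dir / ("lib" + name + ".so");
        if (!fs::exists(so, ec)) {
            missing.push_back(name);
        }
    }
    return missing;
}
```

For the configuration above, the list would contain entries such as `{"/opt/DahuaTech/MVviewer/lib", "MVSDK"}` and `{"/opt/DahuaTech/MVviewer/lib/GenICam/bin/Linux64_x64", "GCBase_gcc421_v3_0"}`.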

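Add the test code

Copy the Camera and src folders that I normally use into the project folder and add them to the Qt project (if you don't have them, ask me for them, or simply test with the code below).

The test project consists of three files: video.h, video.cpp and main.cpp.

main function (main.cpp):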

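```cpp
#include <cstdio>
#include <iostream>
#include <opencv2/opencv.hpp>
#include "video.h"

using namespace Dahua::GenICam;
using namespace Dahua::Infra;
using namespace std;
using namespace cv;

int main()
{
    // Create the industrial camera wrapper
    Video v;

    if (!v.videoCheck())
    {
        printf("videoCheck failed!\n");
        return 0;
    }

    if (!v.videoOpen())
    {
        printf("videoOpen failed!\n");
        return 0;
    }

    // Set the trigger mode: switch to continuous acquisition
    // (CameraChangeTrig defaults to software trigger)
    Video::ETrigType type = Video::ETrigType::trigContinous;
    v.CameraChangeTrig(type);

    // Create the stream source
    if (!v.videoStart())
    {
        printf("videoStart failed!\n");
        return 0;
    }

    // Start grabbing; frames are only delivered after this call
    v.startGrabbing();

    Mat src;
    while (true)
    {
        if (v.getFrame(src))
        {
            imshow("img", src);
        }
        else
        {
            printf("getFrame failed!\n");
            break;
        }

        // Quit when 'q' is pressed
        if (waitKey(1) == 'q')
        {
            break;
        }
    }

    // Return only after the loop; ~Video() stops the stream and disconnects
    cout << "Hello World!" << endl;
    return 0;
}
```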
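The important detail in this loop is that `return 0;` sits after the loop; inside the loop it would end the program after the first frame. The sketch below reproduces only that control flow, with hypothetical `GrabFn`/`KeyFn` callbacks standing in for `Video::getFrame()` and `cv::waitKey(1)`, so it can run without a camera.

```cpp
#include <cstddef>
#include <functional>

// Hypothetical stand-ins for Video::getFrame() and cv::waitKey(1).
using GrabFn = std::function<bool()>;  // returns false when grabbing fails
using KeyFn  = std::function<int()>;   // returns the key pressed, or -1

// Runs the grab/display loop and returns how many frames were processed.
// The loop ends when grabbing fails or the user presses 'q'.
std::size_t runGrabLoop(const GrabFn& grab, const KeyFn& key)
{
    std::size_t frames = 0;
    while (true) {
        if (!grab()) {
            break;         // corresponds to "getFrame failed!"
        }
        ++frames;          // imshow() would go here
        if (key() == 'q') {
            break;         // user asked to quit
        }
    }
    return frames;         // cleanup and return happen after the loop
}
```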

video.h

```cpp
#ifndef VIDEO_H
#define VIDEO_H

#include <cstdint>
#include <cstdio>
#include <cstring>
#include <iostream>
#include <new>
#include <opencv2/opencv.hpp>

#include "GenICam/System.h"
#include "Media/VideoRender.h"
#include "Media/ImageConvert.h"
#include "GenICam/Camera.h"
#include "GenICam/GigE/GigECamera.h"
#include "GenICam/GigE/GigEInterface.h"
#include "Infra/PrintLog.h"
#include "Memory/SharedPtr.h"

using namespace Dahua::GenICam;
using namespace Dahua::Infra;
using namespace Dahua::Memory;
using namespace std;
using namespace cv;

// Pixel formats handled by the image conversion library
// (not referenced by video.cpp in this article)
static uint32_t gFormatTransferTbl[] =
{
    // Mono Format
    gvspPixelMono1p,
    gvspPixelMono8,
    gvspPixelMono10,
    gvspPixelMono10Packed,
    gvspPixelMono12,
    gvspPixelMono12Packed,

    // Bayer Format
    gvspPixelBayRG8,
    gvspPixelBayGB8,
    gvspPixelBayBG8,
    gvspPixelBayRG10,
    gvspPixelBayGB10,
    gvspPixelBayBG10,
    gvspPixelBayRG12,
    gvspPixelBayGB12,
    gvspPixelBayBG12,
    gvspPixelBayRG10Packed,
    gvspPixelBayGB10Packed,
    gvspPixelBayBG10Packed,
    gvspPixelBayRG12Packed,
    gvspPixelBayGB12Packed,
    gvspPixelBayBG12Packed,
    gvspPixelBayRG16,
    gvspPixelBayGB16,
    gvspPixelBayBG16,
    gvspPixelBayRG10p,
    gvspPixelBayRG12p,
    gvspPixelMono1c,

    // RGB Format
    gvspPixelRGB8,
    gvspPixelBGR8,

    // YUV Format
    gvspPixelYUV411_8_UYYVYY,
    gvspPixelYUV422_8_UYVY,
    gvspPixelYUV422_8,
    gvspPixelYUV8_UYV,
};

#define gFormatTransferTblLen (sizeof(gFormatTransferTbl) / sizeof(gFormatTransferTbl[0]))

class Video
{
public:
    Video() {}

    ~Video()
    {
        videoStopStream(); // stop the stream
        videoClose();      // disconnect from the camera
    }

    // Trigger modes
    enum ETrigType
    {
        trigContinous = 0, // continuous acquisition
        trigSoftware = 1,  // software trigger
        trigLine = 2,      // external (hardware) trigger
    };

    bool videoCheck();                                        // discover cameras
    bool videoOpen();                                         // connect to the camera
    void CameraChangeTrig(ETrigType trigType = trigSoftware); // set trigger mode (software trigger by default)
    void ExecuteSoftTrig();                                   // fire one software trigger
    bool videoStart();                                        // create the stream source
    bool getFrame(Mat &img);                                  // grab one frame as a BGR cv::Mat
    bool convertToRGB24(Mat &img);                            // convert to an OpenCV format (declared only, not implemented in video.cpp)
    void videoStopStream();                                   // stop grabbing
    void videoClose();                                        // disconnect the camera
    void startGrabbing();                                     // start grabbing frames
    void SetExposeTime(double exp);                           // set exposure time
    void SetAdjustPlus(double adj);                           // set gain (GainRaw)
    void setBufferSize(int nSize);                            // set the number of stream buffers
    void setBalanceRatio(double dRedBalanceRatio, double dGreenBalanceRatio, double dBlueBalanceRatio); // manual white balance
    void setResolution(int height = 720, int width = 1280);  // set resolution
    void setROI(int64_t nX, int64_t nY, int64_t nWidth, int64_t nHeight); // set region of interest
    void setBinning();                                        // enable XY binning
    bool loadSetting(int mode);                               // load UserSet1 (mode 0) or UserSet2 (mode 1)
    void setFrameRate(double rate = 210);                     // set frame rate

    ICameraPtr m_pCamera; // camera object

private:
    TVector<ICameraPtr> m_vCameraPtrList; // discovered cameras
    IStreamSourcePtr m_pStreamSource;     // stream source
};

// Holds the BGR24 conversion output of one frame together with its metadata
class FrameBuffer
{
private:
    uint8_t *Buffer_;
    int Width_;
    int Height_;
    int PaddingX_;
    int PaddingY_;
    int DataSize_;
    int PixelFormat_;
    uint64_t TimeStamp_;
    uint64_t BlockId_;

public:
    FrameBuffer(Dahua::GenICam::CFrame const &frame)
        : Buffer_(NULL), Width_(0), Height_(0), PaddingX_(0), PaddingY_(0),
          DataSize_(0), PixelFormat_(0), TimeStamp_(0), BlockId_(0)
    {
        if (frame.getImageSize() > 0)
        {
            // getFrame() always converts to BGR24, so allocate 3 bytes per pixel
            // for every pixel format (a Mono8-sized buffer would be too small)
            Buffer_ = new (std::nothrow) uint8_t[frame.getImageWidth() * frame.getImageHeight() * 3];
            if (Buffer_)
            {
                Width_ = frame.getImageWidth();
                Height_ = frame.getImageHeight();
                PaddingX_ = frame.getImagePadddingX(); // spelling as in the SDK's CFrame API
                PaddingY_ = frame.getImagePadddingY();
                DataSize_ = frame.getImageSize();
                PixelFormat_ = frame.getImagePixelFormat();
                BlockId_ = frame.getBlockId();
            }
        }
    }

    ~FrameBuffer()
    {
        delete[] Buffer_; // deleting NULL is a no-op
    }

    // The class owns a raw buffer, so copying it would free the memory twice
    FrameBuffer(const FrameBuffer &) = delete;
    FrameBuffer &operator=(const FrameBuffer &) = delete;

    bool Valid() { return NULL != Buffer_; }
    int Width() { return Width_; }
    int Height() { return Height_; }
    int PaddingX() { return PaddingX_; }
    int PaddingY() { return PaddingY_; }
    int DataSize() { return DataSize_; }
    uint64_t PixelFormat() { return PixelFormat_; }
    uint64_t TimeStamp() { return TimeStamp_; }
    uint64_t BlockId() { return BlockId_; }
    uint8_t *bufPtr() { return Buffer_; }

    void setWidth(uint32_t iWidth) { Width_ = iWidth; }
    void setHeight(uint32_t iHeight) { Height_ = iHeight; }
    void setPaddingX(uint32_t iPaddingX) { PaddingX_ = iPaddingX; }
    void setPaddingY(uint32_t iPaddingY) { PaddingY_ = iPaddingY; }
    void setDataSize(int dataSize) { DataSize_ = dataSize; }
    void setPixelFormat(uint32_t pixelFormat) { PixelFormat_ = pixelFormat; }
    void setTimeStamp(uint64_t timeStamp) { TimeStamp_ = timeStamp; }
};

#endif
```
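`FrameBuffer` manages its memory with raw `new[]`/`delete[]`, which is why `Buffer_` has to start as `NULL` for the destructor to be safe. As a sketch only (hypothetical `BgrBuffer`, not part of the SDK), `std::vector` removes that concern and makes the sizing rule explicit: BGR24 output needs 3 bytes per pixel whatever the camera's pixel format is.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical RAII replacement for FrameBuffer's storage: the vector owns the
// memory, so there is no uninitialized pointer and no manual delete[].
class BgrBuffer
{
public:
    BgrBuffer(int width, int height)
        : width_(width > 0 ? width : 0),
          height_(height > 0 ? height : 0),
          data_(static_cast<std::size_t>(width_) * height_ * 3)  // 3 bytes per BGR24 pixel
    {}

    bool valid() const { return !data_.empty(); }
    int width() const { return width_; }
    int height() const { return height_; }
    std::size_t size() const { return data_.size(); }
    std::uint8_t* data() { return data_.data(); }

private:
    int width_;   // declared before data_, so initialized before it
    int height_;
    std::vector<std::uint8_t> data_;
};
```

With OpenCV, such a buffer would be wrapped the same way `getFrame()` does it, as `cv::Mat(height, width, CV_8UC3, data)` followed by `.clone()`.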

video.cpp

```cpp
#include "video.h"

// Set the number of buffers of the stream source
void Video::setBufferSize(int nSize)
{
    // Reuse the stream source if videoStart() has already created one
    if (NULL == m_pStreamSource)
    {
        m_pStreamSource = CSystem::getInstance().createStreamSource(m_pCamera);
    }
    if (NULL == m_pStreamSource)
    {
        printf("create a SourceStream failed!\n");
        return;
    }
    m_pStreamSource->setBufferCount(nSize);
}

// Load a user set stored on the camera: mode 0 = UserSet1, mode 1 = UserSet2
bool Video::loadSetting(int mode)
{
    CEnumNode nodeUserSelect(m_pCamera, "UserSetSelector");
    if (mode == 0)
    {
        if (!nodeUserSelect.setValueBySymbol("UserSet1"))
        {
            cout << "set UserSetSelector failed!" << endl;
            return false;
        }
    }
    else if (mode == 1)
    {
        if (!nodeUserSelect.setValueBySymbol("UserSet2"))
        {
            cout << "set UserSetSelector failed!" << endl;
            return false;
        }
    }

    CCmdNode nodeUserSetLoad(m_pCamera, "UserSetLoad");
    if (!nodeUserSetLoad.execute())
    {
        cout << "set UserSetLoad failed!" << endl;
        return false;
    }
    return true;
}

// Discover cameras and select the first one
bool Video::videoCheck()
{
    CSystem &systemObj = CSystem::getInstance();
    if (!systemObj.discovery(m_vCameraPtrList))
    {
        printf("discovery fail.\n");
        return false;
    }

    // Print basic camera info (key, vendor, model, serial number)
    for (int i = 0; i < (int)m_vCameraPtrList.size(); i++)
    {
        ICameraPtr cameraSptr = m_vCameraPtrList[i];
        printf("Camera[%d] Info :\n", i);
        printf(" key = [%s]\n", cameraSptr->getKey());
        printf(" vendor name = [%s]\n", cameraSptr->getVendorName());
        printf(" model = [%s]\n", cameraSptr->getModelName());
        printf(" serial number = [%s]\n", cameraSptr->getSerialNumber());
    }

    if (m_vCameraPtrList.size() < 1)
    {
        printf("no camera.\n");
        return false;
    }

    // Use the first camera in the list; all later operations
    // (open, close, exposure, ...) apply to this camera
    m_pCamera = m_vCameraPtrList[0];
    return true;
}

// Connect to the selected camera
bool Video::videoOpen()
{
    if (NULL == m_pCamera)
    {
        printf("connect camera fail. No camera.\n");
        return false;
    }
    if (m_pCamera->isConnected())
    {
        printf("camera is already connected.\n");
        return false;
    }
    if (!m_pCamera->connect())
    {
        printf("connect camera fail.\n");
        return false;
    }
    return true;
}

// Disconnect from the camera (also called from ~Video)
void Video::videoClose()
{
    if (NULL == m_pCamera)
    {
        printf("disconnect camera fail. No camera.\n");
        return;
    }
    if (!m_pCamera->isConnected())
    {
        printf("camera is already disconnected.\n");
        return;
    }
    if (!m_pCamera->disConnect())
    {
        printf("disconnect camera fail.\n");
    }
}

// Create the stream source (grabbing itself is started by startGrabbing())
bool Video::videoStart()
{
    if (m_pStreamSource != NULL)
    {
        return true;
    }
    if (NULL == m_pCamera)
    {
        printf("start camera fail. No camera.\n");
        return false;
    }
    m_pStreamSource = CSystem::getInstance().createStreamSource(m_pCamera);
    if (NULL == m_pStreamSource)
    {
        printf("Create stream source failed.\n");
        return false;
    }
    return true;
}

// Start grabbing with a single buffer
// (this overrides any value set earlier with setBufferSize())
void Video::startGrabbing()
{
    if (NULL == m_pStreamSource)
    {
        printf("startGrabbing fail. Call videoStart() first.\n");
        return;
    }
    m_pStreamSource->setBufferCount(1);
    m_pStreamSource->startGrabbing();
}

// Set the trigger mode
void Video::CameraChangeTrig(ETrigType trigType)
{
    if (NULL == m_pCamera)
    {
        printf("Change Trig fail. No camera or camera is not connected.\n");
        return;
    }

    if (trigContinous == trigType)
    {
        // Continuous acquisition: turn the trigger mode off
        CEnumNode nodeTriggerMode(m_pCamera, "TriggerMode");
        if (!nodeTriggerMode.isValid())
        {
            printf("get TriggerMode node fail.\n");
            return;
        }
        if (!nodeTriggerMode.setValueBySymbol("Off"))
        {
            printf("set TriggerMode value = Off fail.\n");
            return;
        }
    }
    else if (trigSoftware == trigType)
    {
        // Trigger source: software
        CEnumNode nodeTriggerSource(m_pCamera, "TriggerSource");
        if (!nodeTriggerSource.isValid())
        {
            printf("get TriggerSource node fail.\n");
            return;
        }
        if (!nodeTriggerSource.setValueBySymbol("Software"))
        {
            printf("set TriggerSource value = Software fail.\n");
            return;
        }

        // Trigger selector: start of frame
        CEnumNode nodeTriggerSelector(m_pCamera, "TriggerSelector");
        if (!nodeTriggerSelector.isValid())
        {
            printf("get TriggerSelector node fail.\n");
            return;
        }
        if (!nodeTriggerSelector.setValueBySymbol("FrameStart"))
        {
            printf("set TriggerSelector value = FrameStart fail.\n");
            return;
        }

        // Trigger mode: on
        CEnumNode nodeTriggerMode(m_pCamera, "TriggerMode");
        if (!nodeTriggerMode.isValid())
        {
            printf("get TriggerMode node fail.\n");
            return;
        }
        if (!nodeTriggerMode.setValueBySymbol("On"))
        {
            printf("set TriggerMode value = On fail.\n");
            return;
        }
    }
    else if (trigLine == trigType)
    {
        // Trigger source: Line1
        CEnumNode nodeTriggerSource(m_pCamera, "TriggerSource");
        if (!nodeTriggerSource.isValid())
        {
            printf("get TriggerSource node fail.\n");
            return;
        }
        if (!nodeTriggerSource.setValueBySymbol("Line1"))
        {
            printf("set TriggerSource value = Line1 fail.\n");
            return;
        }

        // Trigger selector: start of frame
        CEnumNode nodeTriggerSelector(m_pCamera, "TriggerSelector");
        if (!nodeTriggerSelector.isValid())
        {
            printf("get TriggerSelector node fail.\n");
            return;
        }
        if (!nodeTriggerSelector.setValueBySymbol("FrameStart"))
        {
            printf("set TriggerSelector value = FrameStart fail.\n");
            return;
        }

        // Trigger mode: on
        CEnumNode nodeTriggerMode(m_pCamera, "TriggerMode");
        if (!nodeTriggerMode.isValid())
        {
            printf("get TriggerMode node fail.\n");
            return;
        }
        if (!nodeTriggerMode.setValueBySymbol("On"))
        {
            printf("set TriggerMode value = On fail.\n");
            return;
        }

        // Trigger on the rising edge (use "FallingEdge" for the falling edge)
        CEnumNode nodeTriggerActivation(m_pCamera, "TriggerActivation");
        if (!nodeTriggerActivation.isValid())
        {
            printf("get TriggerActivation node fail.\n");
            return;
        }
        if (!nodeTriggerActivation.setValueBySymbol("RisingEdge"))
        {
            printf("set TriggerActivation value = RisingEdge fail.\n");
            return;
        }
    }
}

// Fire one software trigger (requires trigSoftware mode)
void Video::ExecuteSoftTrig()
{
    if (NULL == m_pCamera)
    {
        printf("ExecuteSoftTrig fail. No camera or camera is not connected.\n");
        return;
    }

    CCmdNode nodeTriggerSoftware(m_pCamera, "TriggerSoftware");
    if (!nodeTriggerSoftware.isValid())
    {
        printf("get TriggerSoftware node fail.\n");
        return;
    }
    if (!nodeTriggerSoftware.execute())
    {
        printf("set TriggerSoftware fail.\n");
        return;
    }
}

// Stop grabbing
void Video::videoStopStream()
{
    if (m_pStreamSource == NULL)
    {
        printf("no stream source, nothing to stop.\n");
        return;
    }
    if (!m_pStreamSource->stopGrabbing())
    {
        printf("stopGrabbing fail.\n");
    }
}

// Grab one frame and convert it to a BGR cv::Mat
bool Video::getFrame(Mat &img)
{
    if (NULL == m_pStreamSource)
    {
        printf("getFrame fail. No stream source.\n");
        return false;
    }

    CFrame frame, frameClone;
    // Wait up to 300 ms for a frame
    if (!m_pStreamSource->getFrame(frame, 300))
    {
        printf("getFrame fail.\n");
        return false; // ~Video() stops the stream and disconnects the camera
    }

    // Check that the frame is valid
    if (!frame.valid())
    {
        printf("frame is invalid!\n");
        return false;
    }

    frameClone = frame.clone();
    TSharedPtr<FrameBuffer> PtrFrameBuffer(new FrameBuffer(frameClone));
    if (!PtrFrameBuffer || !PtrFrameBuffer->Valid())
    {
        printf("create PtrFrameBuffer failed!\n");
        return false;
    }

    // Copy the raw image into a writable buffer for the converter
    uint8_t *pSrcData = new (std::nothrow) uint8_t[frameClone.getImageSize()];
    if (!pSrcData)
    {
        printf("new pSrcData failed!\n");
        return false;
    }
    memcpy(pSrcData, frameClone.getImage(), frameClone.getImageSize());

    int dstDataSize = 0;
    IMGCNV_SOpenParam openParam;
    openParam.width = PtrFrameBuffer->Width();
    openParam.height = PtrFrameBuffer->Height();
    openParam.paddingX = PtrFrameBuffer->PaddingX();
    openParam.paddingY = PtrFrameBuffer->PaddingY();
    openParam.dataSize = PtrFrameBuffer->DataSize();
    openParam.pixelForamt = PtrFrameBuffer->PixelFormat(); // field name as spelled in the SDK header

    IMGCNV_EErr status = IMGCNV_ConvertToBGR24(pSrcData, &openParam, PtrFrameBuffer->bufPtr(), &dstDataSize);
    delete[] pSrcData;
    if (IMGCNV_SUCCESS != status)
    {
        printf("IMGCNV_ConvertToBGR24 failed!\n");
        return false;
    }

    // Wrap the BGR24 data in a cv::Mat and deep-copy it,
    // because the buffer is freed together with PtrFrameBuffer
    img = Mat(PtrFrameBuffer->Height(), PtrFrameBuffer->Width(), CV_8UC3, PtrFrameBuffer->bufPtr()).clone();
    frameClone.reset();
    return true;
}

// Manual white balance: turn off auto white balance and set the R/G/B ratios
void Video::setBalanceRatio(double dRedBalanceRatio, double dGreenBalanceRatio, double dBlueBalanceRatio)
{
    bool bRet;
    IAnalogControlPtr sptrAnalogControl = CSystem::getInstance().createAnalogControl(m_pCamera);
    if (NULL == sptrAnalogControl)
    {
        printf("create a IAnalogControlPtr failed!\n");
        return;
    }

    // Turn off auto white balance
    CEnumNode enumNode = sptrAnalogControl->balanceWhiteAuto();
    if (!enumNode.isReadable())
    {
        printf("balanceRatio not support.\n");
        return;
    }
    bRet = enumNode.setValueBySymbol("Off");
    if (!bRet)
    {
        printf("set balanceWhiteAuto Off fail.\n");
        return;
    }

    // Red
    enumNode = sptrAnalogControl->balanceRatioSelector();
    if (!enumNode.setValueBySymbol("Red"))
    {
        printf("set red balanceRatioSelector fail.\n");
        return;
    }
    CDoubleNode doubleNode = sptrAnalogControl->balanceRatio();
    if (!doubleNode.setValue(dRedBalanceRatio))
    {
        printf("set red balanceRatio fail.\n");
        return;
    }

    // Green
    enumNode = sptrAnalogControl->balanceRatioSelector();
    if (!enumNode.setValueBySymbol("Green"))
    {
        printf("set green balanceRatioSelector fail.\n");
        return;
    }
    doubleNode = sptrAnalogControl->balanceRatio();
    if (!doubleNode.setValue(dGreenBalanceRatio))
    {
        printf("set green balanceRatio fail.\n");
        return;
    }

    // Blue
    enumNode = sptrAnalogControl->balanceRatioSelector();
    if (!enumNode.setValueBySymbol("Blue"))
    {
        printf("set blue balanceRatioSelector fail.\n");
        return;
    }
    doubleNode = sptrAnalogControl->balanceRatio();
    if (!doubleNode.setValue(dBlueBalanceRatio))
    {
        printf("set blue balanceRatio fail.\n");
        return;
    }
}

// Set the exposure time (GenICam ExposureTime, normally in microseconds)
void Video::SetExposeTime(double exp)
{
    IAcquisitionControlPtr sptrAcquisitionControl = CSystem::getInstance().createAcquisitionControl(m_pCamera);
    if (NULL == sptrAcquisitionControl)
    {
        printf("create a IAcquisitionControlPtr failed!\n");
        return;
    }

    CEnumNode eNode = sptrAcquisitionControl->exposureAuto();
    uint64 getValue;
    if (!eNode.getValue(getValue))
    {
        printf("get ExposureAuto value failed!\n");
        return;
    }
    // If auto exposure is enabled, turn it off so the manual value takes effect
    if (getValue)
    {
        if (!eNode.setValueBySymbol("Off"))
        {
            printf("close autoExposure failed!\n");
            return;
        }
    }

    CDoubleNode dNode = sptrAcquisitionControl->exposureTime();
    if (!dNode.setValue(exp))
    {
        printf("set exposure failed!\n");
        return;
    }
}

// Set the gain through the GainRaw node
void Video::SetAdjustPlus(double adj)
{
    if (NULL == m_pCamera)
    {
        printf("Set GainRaw fail. No camera or camera is not connected.\n");
        return;
    }

    CDoubleNode nodeGainRaw(m_pCamera, "GainRaw");
    if (!nodeGainRaw.isValid())
    {
        printf("get GainRaw node fail.\n");
        return;
    }
    if (!nodeGainRaw.isAvailable())
    {
        printf("GainRaw is not available.\n");
        return;
    }
    if (!nodeGainRaw.setValue(adj))
    {
        printf("set GainRaw value = %f fail.\n", adj);
        return;
    }
}

// Set the image resolution
void Video::setResolution(int height, int width)
{
    IImageFormatControlPtr sptrImageFormatControl = CSystem::getInstance().createImageFormatControl(m_pCamera);
    if (NULL == sptrImageFormatControl)
    {
        printf("create a IImageFormatControlPtr failed!\n");
        return;
    }

    CIntNode intNode = sptrImageFormatControl->width(); // width node (was height() by mistake)
    if (!intNode.setValue(width))
    {
        printf("set width fail.\n");
        return;
    }

    intNode = sptrImageFormatControl->height();
    if (!intNode.setValue(height))
    {
        printf("set height fail.\n");
        return;
    }
}

// Set the region of interest (size first, then offset)
void Video::setROI(int64_t nX, int64_t nY, int64_t nWidth, int64_t nHeight)
{
    CIntNode nodeWidth(m_pCamera, "Width");
    if (!nodeWidth.setValue(nWidth))
    {
        printf("set width fail.\n");
        return;
    }

    CIntNode nodeHeight(m_pCamera, "Height");
    if (!nodeHeight.setValue(nHeight))
    {
        printf("set Height fail.\n");
        return;
    }

    CIntNode OffsetX(m_pCamera, "OffsetX");
    if (!OffsetX.setValue(nX))
    {
        printf("set OffsetX fail.\n");
        return;
    }

    CIntNode OffsetY(m_pCamera, "OffsetY");
    if (!OffsetY.setValue(nY))
    {
        printf("set OffsetY fail.\n");
        return;
    }

    // Alternative: the same four nodes are also reachable through
    // CSystem::getInstance().createImageFormatControl(m_pCamera) and its
    // width(), height(), offsetX() and offsetY() accessors.
}

// Enable XY binning
void Video::setBinning()
{
    CEnumNodePtr ptrParam(new CEnumNode(m_pCamera, "Binning"));
    if (ptrParam)
    {
        if (!ptrParam->isReadable())
        {
            printf("binning not support.\n");
            return;
        }
        if (!ptrParam->setValueBySymbol("XY"))
        {
            printf("set Binning XY fail.\n");
            return;
        }
        // To turn binning off again: ptrParam->setValueBySymbol("Off")
    }
}

// Enable and set the acquisition frame rate
void Video::setFrameRate(double rate)
{
    IAcquisitionControlPtr sptAcquisitionControl = CSystem::getInstance().createAcquisitionControl(m_pCamera);
    if (NULL == sptAcquisitionControl)
    {
        printf("create a IAcquisitionControlPtr failed!\n");
        return;
    }

    CBoolNode booleanNode = sptAcquisitionControl->acquisitionFrameRateEnable();
    if (!booleanNode.setValue(true))
    {
        printf("set acquisitionFrameRateEnable fail.\n");
        return;
    }

    CDoubleNode doubleNode = sptAcquisitionControl->acquisitionFrameRate();
    if (!doubleNode.setValue(rate))
    {
        printf("set acquisitionFrameRate fail.\n");
        return;
    }
}
```
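`CameraChangeTrig` writes a fixed sequence of GenICam enumeration nodes for each trigger mode. Writing that sequence down as data makes the three modes easy to compare. The sketch below only builds the list of (node, symbol) pairs, using the node names and symbols from `video.cpp`, and leaves out the actual SDK calls so it runs without a camera; `TrigType`, `NodeSetting` and `triggerSettings` are hypothetical names.

```cpp
#include <string>
#include <utility>
#include <vector>

enum class TrigType { Continuous, Software, Line };

using NodeSetting = std::pair<std::string, std::string>;  // (node name, symbol)

// Returns, in order, the node writes that CameraChangeTrig() performs.
std::vector<NodeSetting> triggerSettings(TrigType type)
{
    switch (type) {
    case TrigType::Continuous:
        return {{"TriggerMode", "Off"}};
    case TrigType::Software:
        return {{"TriggerSource", "Software"},
                {"TriggerSelector", "FrameStart"},
                {"TriggerMode", "On"}};
    case TrigType::Line:
        return {{"TriggerSource", "Line1"},
                {"TriggerSelector", "FrameStart"},
                {"TriggerMode", "On"},
                {"TriggerActivation", "RisingEdge"}};
    }
    return {};
}
```

Applied to a camera, each pair would become `CEnumNode node(m_pCamera, name); node.setValueBySymbol(symbol);`, stopping at the first failure, which is what the original function does step by step.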

Plug in the Dahua camera and run the program. If the device information is printed, the setup works.
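The device information printed here includes each camera's serial number, while `videoCheck` always picks the first camera in the discovery list. With several cameras connected, the discovery order may not be stable, so selecting by serial number can be more robust. This sketch shows only the selection logic, with a hypothetical `CameraInfo` struct in place of `ICameraPtr`; with the SDK you would compare against `getSerialNumber()` instead.

```cpp
#include <cstddef>
#include <optional>
#include <string>
#include <vector>

// Hypothetical stand-in for the fields printed by videoCheck().
struct CameraInfo {
    std::string key;
    std::string vendor;
    std::string model;
    std::string serial;
};

// Returns the index of the camera with the given serial number,
// or std::nullopt if it is not in the list.
std::optional<std::size_t> findCameraBySerial(const std::vector<CameraInfo>& cams,
                                              const std::string& serial)
{
    for (std::size_t i = 0; i < cams.size(); ++i) {
        if (cams[i].serial == serial) {
            return i;
        }
    }
    return std::nullopt;
}
```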

Done.