[PyTorch] Loss Functions and Backpropagation: Study Notes

Video link

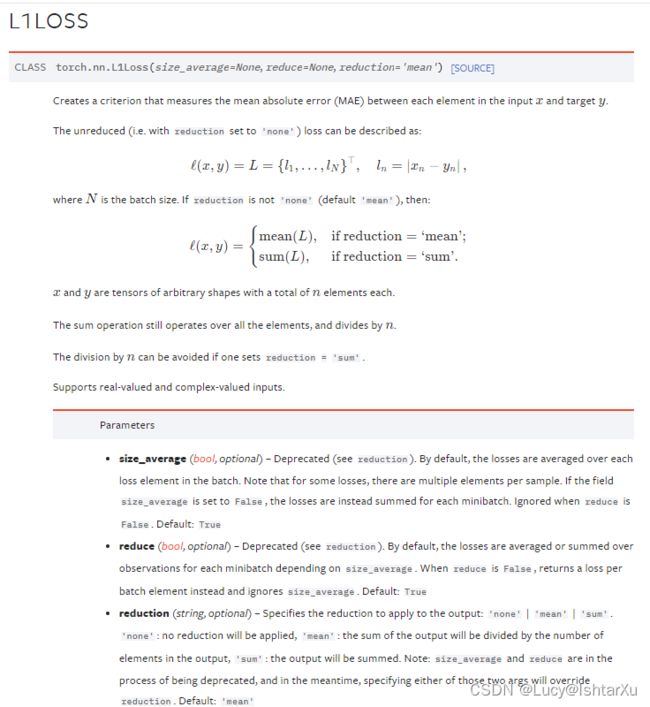

1 - L1Loss

    import torch
    from torch.nn import L1Loss

    inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
    targets = torch.tensor([1, 2, 5], dtype=torch.float32)

    # Reshape to (batch, channel, height, width) = (1, 1, 1, 3)
    inputs = torch.reshape(inputs, (1, 1, 1, 3))
    targets = torch.reshape(targets, (1, 1, 1, 3))

    loss = L1Loss()  # default reduction='mean'
    # loss = L1Loss(reduction='sum')  # with this, the output is 2
    result = loss(inputs, targets)
    print(result)

Output:

    D:\Anaconda3\envs\pytorch\python.exe D:/研究生/代码尝试/nn_loss.py
    tensor(0.6667)

    Process finished with exit code 0
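To see where 0.6667 comes from, the same value can be computed by hand. The sketch below assumes the default `reduction='mean'` and uses illustrative variable names (`abs_diff`, `manual_mean`, `manual_sum`) that are not part of the original notes.

```python
import torch
from torch.nn import L1Loss

inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
targets = torch.tensor([1, 2, 5], dtype=torch.float32)

# Element-wise absolute error: |1-1|, |2-2|, |3-5| -> [0., 0., 2.]
abs_diff = (inputs - targets).abs()

manual_mean = abs_diff.mean()  # (0 + 0 + 2) / 3
manual_sum = abs_diff.sum()    # 0 + 0 + 2

# Both reductions should agree with the built-in module
assert torch.isclose(manual_mean, L1Loss()(inputs, targets))
assert torch.isclose(manual_sum, L1Loss(reduction='sum')(inputs, targets))

print(manual_mean, manual_sum)  # tensor(0.6667) tensor(2.)
```

The reshape to `(1, 1, 1, 3)` in the notes does not change the result, because both reductions run over all elements.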

2 - MSELoss (mean squared error)

    import torch
    from torch.nn import MSELoss
    # from torch import nn  # alternative import: no need to remember each class name

    inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
    targets = torch.tensor([1, 2, 5], dtype=torch.float32)

    inputs = torch.reshape(inputs, (1, 1, 1, 3))
    targets = torch.reshape(targets, (1, 1, 1, 3))

    loss_mse = MSELoss()
    # loss_mse = nn.MSELoss()  # use this form together with `from torch import nn`
    result_mse = loss_mse(inputs, targets)
    print(result_mse)

Output:

    D:\Anaconda3\envs\pytorch\python.exe D:/研究生/代码尝试/nn_loss.py
    tensor(1.3333)

    Process finished with exit code 0
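The value 1.3333 can be checked the same way. This is a minimal sketch assuming the default `reduction='mean'`; `squared_diff` and `manual_mse` are illustrative names.

```python
import torch
from torch.nn import MSELoss

inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
targets = torch.tensor([1, 2, 5], dtype=torch.float32)

# Element-wise squared error: 0^2, 0^2, (-2)^2 -> [0., 0., 4.]
squared_diff = (inputs - targets) ** 2

manual_mse = squared_diff.mean()  # (0 + 0 + 4) / 3

# Should agree with the built-in module
assert torch.isclose(manual_mse, MSELoss()(inputs, targets))

print(manual_mse)  # tensor(1.3333)
```

Compared with L1Loss, the single error of 2 is squared before averaging, so large errors weigh more heavily.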

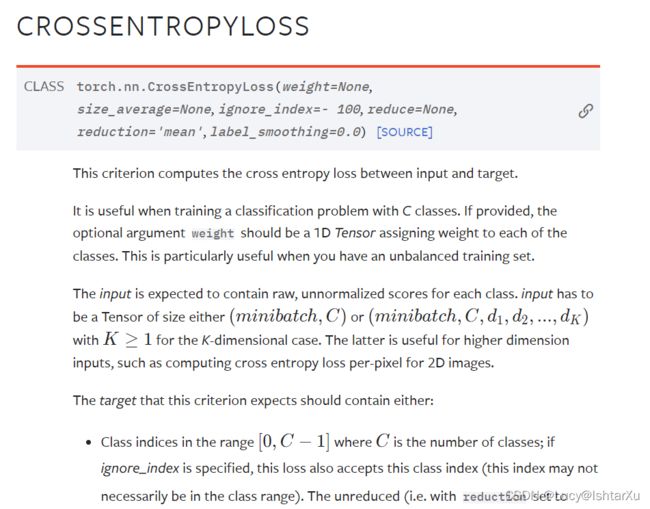

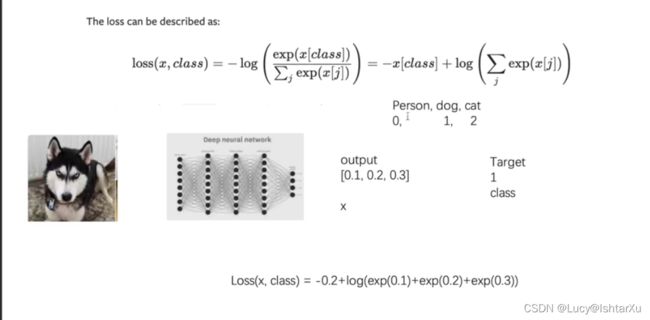

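3 - CrossEntropyLoss (cross-entropy)

The documentation is fairly long, so read it directly for the details.

Is this cross-entropy plus softmax? Yes: nn.CrossEntropyLoss combines log-softmax with the negative log-likelihood loss, so it expects raw, unnormalized scores (logits) as input, not probabilities.

Code: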

    import torch
    from torch import nn

    x = torch.tensor([0.1, 0.2, 0.3])  # raw scores for 3 classes
    y = torch.tensor([1])              # target class index, shape (batch_size,)
    x = torch.reshape(x, (1, 3))       # shape (batch_size, num_classes)

    loss_cross = nn.CrossEntropyLoss()
    result_cross = loss_cross(x, y)
    print(result_cross)
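The notes do not show the output of this snippet. As a rough check of what the loss computes, the sketch below reproduces it by hand as the negative log-softmax score of the target class. The names `log_probs`, `manual` and `builtin` are illustrative.

```python
import torch
import torch.nn.functional as F
from torch import nn

x = torch.tensor([[0.1, 0.2, 0.3]])  # shape (batch_size, num_classes)
y = torch.tensor([1])                # target class index

# Step 1: log-softmax turns raw scores into log-probabilities
log_probs = F.log_softmax(x, dim=1)

# Step 2: the loss is the negative log-probability of the target class,
# i.e. -x[class] + log(sum_j exp(x[j]))
manual = -log_probs[0, y[0]]

builtin = nn.CrossEntropyLoss()(x, y)
# NLLLoss applied to log-probabilities gives the same number
via_nll = nn.NLLLoss()(log_probs, y)

print(manual, builtin, via_nll)
```

All three values should match (about 1.10 for these inputs), which is why no explicit softmax layer is needed in front of `nn.CrossEntropyLoss`.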

4 - Combining the loss function with a neural network

    import torchvision
    from torch import nn
    from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
    from torch.utils.data import DataLoader

    # download=True fetches CIFAR10 if ./dataset does not already contain it
    dataset = torchvision.datasets.CIFAR10("./dataset", train=False,
                                           transform=torchvision.transforms.ToTensor(),
                                           download=True)
    dataloader = DataLoader(dataset, batch_size=1)


    class Model(nn.Module):
        def __init__(self):
            super(Model, self).__init__()
            self.model1 = Sequential(
                Conv2d(3, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 64, 5, padding=2),
                MaxPool2d(2),
                Flatten(),          # 64 channels * 4 * 4 = 1024 features
                Linear(1024, 64),
                Linear(64, 10)      # one score per CIFAR10 class
            )

        def forward(self, x):
            x = self.model1(x)
            return x


    loss = nn.CrossEntropyLoss()
    model = Model()

    for data in dataloader:
        imgs, targets = data
        outputs = model(imgs)                 # shape (1, 10): raw class scores
        result_loss = loss(outputs, targets)  # targets: class index, shape (1,)
        print(result_loss)
        # print(outputs)
        # print(targets)

Output (first few samples):

tensor(2.2874, grad_fn=<NllLossBackward0>)

tensor(2.3025, grad_fn=<NllLossBackward0>)

tensor(2.3110, grad_fn=<NllLossBackward0>)

tensor(2.2716, grad_fn=<NllLossBackward0>)

tensor(2.2530, grad_fn=<NllLossBackward0>)

tensor(2.2430, grad_fn=<NllLossBackward0>)
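The losses above all sit near 2.3. One plausible reading is that an untrained network assigns roughly equal probability to each of the 10 classes, in which case the cross-entropy is about -ln(1/10) = ln(10). The sketch below illustrates this with all-zero scores as an idealized stand-in for an untrained model; `uniform_logits`, `target` and `chance_loss` are illustrative names.

```python
import math

import torch
from torch import nn

num_classes = 10

# All-zero scores give a uniform softmax: probability 1/10 per class
uniform_logits = torch.zeros(1, num_classes)
target = torch.tensor([3])  # arbitrary class index; any class gives the same loss

chance_loss = nn.CrossEntropyLoss()(uniform_logits, target)

# Cross-entropy of a uniform prediction equals ln(num_classes)
print(chance_loss.item(), math.log(num_classes))
```

A loss that stays near this value during training would suggest the model is doing no better than guessing.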

5 - Using backpropagation

    import torchvision
    from torch import nn
    from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
    from torch.utils.data import DataLoader

    # download=True fetches CIFAR10 if ./dataset does not already contain it
    dataset = torchvision.datasets.CIFAR10("./dataset", train=False,
                                           transform=torchvision.transforms.ToTensor(),
                                           download=True)
    dataloader = DataLoader(dataset, batch_size=1)


    class Model(nn.Module):
        def __init__(self):
            super(Model, self).__init__()
            self.model1 = Sequential(
                Conv2d(3, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 64, 5, padding=2),
                MaxPool2d(2),
                Flatten(),
                Linear(1024, 64),
                Linear(64, 10)
            )

        def forward(self, x):
            x = self.model1(x)
            return x


    loss = nn.CrossEntropyLoss()
    model = Model()

    for data in dataloader:
        imgs, targets = data
        outputs = model(imgs)
        result_loss = loss(outputs, targets)
        result_loss.backward()  # compute gradients of the loss w.r.t. every parameter
        print("OK")             # convenient line for a breakpoint

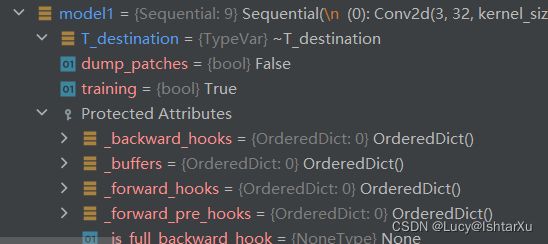

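Set a breakpoint and inspect the gradient data in the debugger.

The gradient can be found under model -> protected -> modules -> conv2d -> weight. At first it holds nothing (grad is None), but after stepping over result_loss.backward() once, it contains data.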
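The same observation can be made without a debugger. The sketch below is not the notes' exact script: it rebuilds the same layer stack as a bare `nn.Sequential` and feeds it one random tensor as a stand-in for a CIFAR10 image, so no dataset is needed. `first_conv`, `grad_before` and `grad_after` are illustrative names.

```python
import torch
from torch import nn

# Same layer stack as in the notes, built directly as a Sequential
model = nn.Sequential(
    nn.Conv2d(3, 32, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Conv2d(32, 32, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Conv2d(32, 64, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Flatten(),
    nn.Linear(1024, 64),
    nn.Linear(64, 10),
)

imgs = torch.randn(1, 3, 32, 32)  # random stand-in for one 32x32 RGB image
targets = torch.tensor([3])       # arbitrary class index

first_conv = model[0]
grad_before = first_conv.weight.grad  # None: backward() has not run yet

loss = nn.CrossEntropyLoss()(model(imgs), targets)
loss.backward()

grad_after = first_conv.weight.grad   # now a tensor shaped like the weight

print(grad_before is None)  # True
print(grad_after.shape)     # torch.Size([32, 3, 5, 5])
```

The gradient has the same shape as the weight it belongs to, one entry per trainable value.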