Pytorch笔记-1

what is Pytorch

- 深度学习框架,提供最大的灵活性和速度

- 支持GPU计算能力

Tensors

Tensors 类似于Numpy中的ndarrays,但Tensors能够在GPU上加速计算。

from __future__ import print_function

import torch

# 声明一个未初始化的矩阵

x = torch.empty(5, 3)

print(x)

# 创建一个5*3的零矩阵,dtype设置为long

x = torch.zeros(5, 3, dtype=torch.long)

print(x)

# 基于数据,创建一个矩阵

x = torch.tensor([5.5, 3])

print(x, x.dtype)

# 基于已存在的tensor,创建新的tensor

## 利用new_* 函数,去创建新的tensor,size可以更改,dtype也可以更改

x = x.new_ones(5, 3)

print(x, x.dtype)

x = x.new_ones(5, 3, dtype=torch.double)

print(x)

## size不变,dtype改变

x = torch.rand_like(x, dtype=torch.float)

print(x)

# 获取tensor的大小

print(x.size())

tensor([[4.8674e-39, 8.9082e-39, 8.9082e-39],

[1.0194e-38, 9.1837e-39, 4.6837e-39],

[9.2755e-39, 1.0837e-38, 8.4490e-39],

[1.0194e-38, 9.0919e-39, 8.4490e-39],

[9.6429e-39, 8.4490e-39, 9.6429e-39]])

tensor([[0, 0, 0],

[0, 0, 0],

[0, 0, 0],

[0, 0, 0],

[0, 0, 0]])

tensor([5.5000, 3.0000]) torch.float32

tensor([[1., 1., 1.],

[1., 1., 1.],

[1., 1., 1.],

[1., 1., 1.],

[1., 1., 1.]]) torch.float32

tensor([[1., 1., 1.],

[1., 1., 1.],

[1., 1., 1.],

[1., 1., 1.],

[1., 1., 1.]], dtype=torch.float64)

tensor([[0.6535, 0.1822, 0.2415],

[0.6635, 0.8093, 0.6927],

[0.5346, 0.7273, 0.1211],

[0.5198, 0.7663, 0.9047],

[0.0295, 0.8088, 0.2461]])

torch.Size([5, 3])

Opertions

y = torch.rand(5, 3)

# 第一种方式

print(x + y)

# 第二种方式

print(torch.add(x, y))

# 第三种方式

result = torch.empty(5, 3)

torch.add(x, y, out=result)

print(result)

# 第四种方式,注:以'_'结尾的函数,一般会替换原有值,例子:x_copy_(y), x.t_()等都将改变x

y.add_(x)

print(y)

# tensor的索引切片

print(x)

print(x[:, 1])

# Resizing: reshape/resize tensor

x = torch.randn(4, 4)

y = x.view(16)

z = x.view(-1, 8)

w = x.view(8, -1)

print(x.size(), y.size(), z.size(), w.size())

# 只有一个元素的tensor,使用.item()获取value,即一个数值类型

x = torch.randn(1)

print(x)

print(x.item())

tensor([[0.6727, 1.1430, 0.6668],

[0.9527, 1.0709, 0.9511],

[0.6208, 1.3169, 0.4683],

[0.8150, 0.9228, 1.8305],

[0.7101, 1.7925, 0.8232]])

tensor([[0.6727, 1.1430, 0.6668],

[0.9527, 1.0709, 0.9511],

[0.6208, 1.3169, 0.4683],

[0.8150, 0.9228, 1.8305],

[0.7101, 1.7925, 0.8232]])

tensor([[0.6727, 1.1430, 0.6668],

[0.9527, 1.0709, 0.9511],

[0.6208, 1.3169, 0.4683],

[0.8150, 0.9228, 1.8305],

[0.7101, 1.7925, 0.8232]])

tensor([[0.6727, 1.1430, 0.6668],

[0.9527, 1.0709, 0.9511],

[0.6208, 1.3169, 0.4683],

[0.8150, 0.9228, 1.8305],

[0.7101, 1.7925, 0.8232]])

tensor([[0.6535, 0.1822, 0.2415],

[0.6635, 0.8093, 0.6927],

[0.5346, 0.7273, 0.1211],

[0.5198, 0.7663, 0.9047],

[0.0295, 0.8088, 0.2461]])

tensor([0.1822, 0.8093, 0.7273, 0.7663, 0.8088])

torch.Size([4, 4]) torch.Size([16]) torch.Size([2, 8]) torch.Size([8, 2])

tensor([0.8052])

0.8052219748497009

Numpy Bridge

Torch Tensor和Numpy之间相互转换。

Torch Tensor和Numpy共享内存(Tensor在CPU下),一个改变,另一个也会发生改变。

# Tensor 转换为 Numpy

a = torch.ones(5)

print(a)

b = a.numpy()

print(b)

## 一个改变,另一个也发生改变

a.add_(1)

print(a, b)

# Numpy 转换为 Tensor

import numpy as np

a = np.ones(5)

b = torch.from_numpy(a)

np.add(a, 1, out=a)

print(a, b)

tensor([1., 1., 1., 1., 1.])

[1. 1. 1. 1. 1.]

tensor([2., 2., 2., 2., 2.]) [2. 2. 2. 2. 2.]

[2. 2. 2. 2. 2.] tensor([2., 2., 2., 2., 2.], dtype=torch.float64)

CUDA Tensors

Tensor可以被转移到任何device上,只需要使用.to方法就可以。

# 利用torch.device对象,将tensors移入到GPU上或者移出GPU

if torch.cuda.is_available():

# cuda device 对象

device = torch.device('cuda')

# 直接在gpu上创建tensor

y = torch.ones_like(x, device=device)

# 将cpu上的tensor移入到gpu

x =x.to(device)

z = x + y

print(z)

# 将GPU上的tensor转移到cpu

print(z.to('cpu', torch.double))

tensor([1.8052], device='cuda:0')

tensor([1.8052], dtype=torch.float64)

AutoGrad: Automatic Differentiation

Pytorch中所有神经网络的核心就是autograd包。对tensor所有运算,autograd能够自动求导。它是一个define-by-run 框架,意味着反向传播由你的代码定义,并且每一次迭代都可以不同。

torch.Tensor的属性.requires_grad如果设置为True,它将会跟踪在其上面的所有运算。当你完成自己运算,你可以利用.backward(),然后所有的梯度将会自动计算,这个Tensor的梯度通过.grad可以获取。

如果在跟踪tensor的历史运算中,停止跟踪某一运算,可以使用.detach()方法。也可以使用代码块with torch.no_grad():,这是非常有用的,当可训练参数requires_grad=True时,而我们不需要某个梯度时,就可以这么做。

每个tensor都有.grad_fn属性,而叶子点(用户自己定义的tensor)的属性grad_fn is None,以及tensor的.requires_grad为False时,其grad_fn is None;否则,.grad_fn属性就是运算的Function。

Tensors的属性

import torch

# 创建tensor,设置requires_grad=True,将会跟踪该tensor的所有的运算

x = torch.ones(2, 2, requires_grad=True)

print(x)

# 做一个tensor运算

y = x + 2

print(y)

# y作为运算后的tensor被创建,其属性grad_fn

print(y.grad_fn)

# x作为叶子点,其属性grad_fn是None4

print(x.grad_fn)

tensor([[1., 1.],

[1., 1.]], requires_grad=True)

tensor([[3., 3.],

[3., 3.]], grad_fn=)

None

a = torch.randn(2, 2)

a = ((a * 3) / (a - 1))

print(a.requires_grad) # False

# 将tensor的requires_grad属性设置为True

a.requires_grad_(True)

print(a.requires_grad)

b = (a * a).sum()

print(b.grad_fn)

False

True

Gradients

x = torch.ones(2, 2, requires_grad=True)

y = x + 2

z = y * y * 3

out = z.mean()

print(z, out)

# 反向传播,out是一个scalar,那么out.backward()等价于out.backward(torch.tensor(1.))

out.backward()

# 求梯度 d(out)/d(x)

print(x.grad)

# y 不是一个scalar,那么反向传播y.backward()中的参数必须设置和y的大小一样

x = torch.randn(3, requires_grad=True)

y = x * 2

while y.data.norm() < 1000:

y = y * 2

print(y)

y.backward(torch.tensor([1, 1, 1], dtype=torch.float))

print(x.grad)

tensor([[27., 27.],

[27., 27.]], grad_fn=) tensor(27., grad_fn=)

tensor([[4.5000, 4.5000],

[4.5000, 4.5000]])

tensor([-297.3523, 1063.2278, 114.6286], grad_fn=)

tensor([1024., 1024., 1024.])

# 对tensor(当.requires_grad=True时)的跟踪历史,停止跟踪某一运算。

print(x.requires_grad) # True

print((x**2).requires_grad) # True

with torch.no_grad():

print((x**2).requires_grad) # False

# 使用.detach(),停止跟踪

print(x.requires_grad) # True

y = (x**2).detach()

print(y.requires_grad) # False

True

True

False

True

False

Neural Networks

利用torch.nn包构建神经网络。nn依赖于autograd包来定义模型,但不同于autograd。

nn.Module包含layers,以及方法forward(input),然后返回output。

例子:数字图片分类

这是一个简单的前馈神经网络,包含若干个layers,前后连接,输入input,最终输出output。

- 定义(可学习参数)神经网络

- 输入数据(一个batch的遍历)

- 数据在神经网络中process

- 计算损失函数

- 反向传播,计算梯度

- 更新可学习参数,即weight。典型的更新规则:

weight = weight - learning_rate * gradient

Define the network

import torch

import torch.nn as nn

import torch.nn.functional as F

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

# 1 input image channel, 6 output channels, 3x3 square convolution kernel

self.conv1 = nn.Conv2d(1, 6, 3)

self.conv2 = nn.Conv2d(6, 16, 3)

self.fc1 = nn.Linear(16*6*6, 120)

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, 10)

def forward(self, x):

x = F.relu(self.conv1(x))

print('conv1:', x.size())

# Max pooling over a (2, 2) window

x = F.max_pool2d(x, (2, 2))

print('pool1', x.size())

x = F.relu(self.conv2(x))

print('conv2:', x.size())

# If the size is a square you can only specify a single number

x = F.max_pool2d(x, 2)

print('pool2:', x.size())

x = x.view(-1, self.num_flat_features(x))

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

x = self.fc3(x)

return x

def num_flat_features(self, x):

# all dimensions except the batch dimension

size = x.size()[1: ]

num_features = 1

for s in size:

num_features *= s

return num_features

net = Net()

print(net)

Net(

(conv1): Conv2d(1, 6, kernel_size=(3, 3), stride=(1, 1))

(conv2): Conv2d(6, 16, kernel_size=(3, 3), stride=(1, 1))

(fc1): Linear(in_features=576, out_features=120, bias=True)

(fc2): Linear(in_features=120, out_features=84, bias=True)

(fc3): Linear(in_features=84, out_features=10, bias=True)

)

params = list(net.parameters())

print(len(params))

# conv1's weight

print(params[0].size())

10

torch.Size([6, 1, 3, 3])

# input 随机生成1*32*32的图片

input = torch.randn(1, 1, 32, 32)

# 前向传播

out = net(input)

print(out)

conv1: torch.Size([1, 6, 30, 30])

pool1 torch.Size([1, 6, 15, 15])

conv2: torch.Size([1, 16, 13, 13])

pool2: torch.Size([1, 16, 6, 6])

tensor([[ 0.0353, 0.0761, -0.0565, -0.0780, 0.0075, -0.0527, 0.0095, -0.0354,

0.0399, -0.0458]], grad_fn=)

# 所有参数的梯度归零,不然反向传播后,梯度会基于the existing gradients进行累加。

net.zero_grad()

# 反向传播随机梯度系数

out.backward(torch.randn(1, 10))

注:

torch.nn只支持mini-batches of sample,不支持single sample。nn.Conv2d需要4D Tensor,大小是nSamples × nChannels × Height × width。- 如果你有single sample,仅需要

input.unsqueeze(0)操作,添加一个fake batch dimension。

Loss Function

损失函数需要(output, target),计算一个值,用来评估output和target的距离。在nn包下有需要不同的loss function,比如:nn.MSELoss

input = torch.randn(1, 1, 32, 32)

output = net(input)

# fake example,自己设置目标值,正常有真实数据情况下,这些标签都是有的。

target = torch.randn(10)

# target的shape保持和output的shape一样

target = target.view(1, -1)

print(target)

# 建立MSELoss损失函数对象

criterion = nn.MSELoss()

# 返回MSELoss损失函数根据output和target,获取的值

loss = criterion(output, target)

print(loss)

conv1: torch.Size([1, 6, 30, 30])

pool1 torch.Size([1, 6, 15, 15])

conv2: torch.Size([1, 16, 13, 13])

pool2: torch.Size([1, 16, 6, 6])

tensor([[ 1.0337e+00, -5.6170e-01, 3.7140e-01, -6.9811e-01, 7.8860e-01,

-1.5017e+00, 2.2199e+00, 1.5598e-01, 4.4258e-01, -2.2088e-03]])

tensor(0.9772, grad_fn=)

# 查看一些属性

print(loss.grad_fn)

print(loss.grad_fn.next_functions[0][0]) # Linear

print(loss.grad_fn.next_functions[0][0].next_functions[0][0]) # Relu

BackProp

使用loss.backward()进行反向传播。必须先clear the existing gradients,否则the existing gradients将会逐渐累加

# 所有参数的梯度归零

net.zero_grad()

print('conv1.bias.grad before backward')

print(net.conv1.bias.grad)

loss.backward()

print('conv1.bias.grad after backward')

print(net.conv1.bias.grad)

conv1.bias.grad before backward

tensor([0., 0., 0., 0., 0., 0.])

conv1.bias.grad after backward

tensor([-0.0320, -0.0230, -0.0095, 0.0117, -0.0098, 0.0429])

Update the weights

最简单的更新方法,使用随机梯度下降法(SGD):weight = weight - learning_rate * gradient。

# 第一种方法,我们用Python code去实现

learning_rate = 0.01

for f in net.parameters():

f.data.sub_(f.grad.data * learning_rate)

然而,除了SGD,还有Nesterov-SGD,Adam,RMSProp等。因此pytorch中,构建了torch.optim模块,来实现这些方法。

# 第二种方法,利用optim模块

import torch.optim as optim

# 创建optimizer

optimizer = optim.SGD(net.parameters(), lr=0.01)

# 所有参数的梯度归零, 同net.zero_grad()一样的功能

optimizer.zero_grad()

output = net(input)

loss = criterion(output, target)

loss.backward()

# update weights

optimizer.step()

conv1: torch.Size([1, 6, 30, 30])

pool1 torch.Size([1, 6, 15, 15])

conv2: torch.Size([1, 16, 13, 13])

pool2: torch.Size([1, 16, 6, 6])

Training a classifier

一般来说 ,当你处理图片,文本或者视频数据,你可能需要python标准库去加载数据,转换为numpy array数据类型。你可以转换array为torch.*Tensor.

- 图片,Pillow、OpenCV

- 视频,scipy、librosa

- 文本,NLTK、SpaCy

对于pytorch,我们经常用torchvision,进行一些数据加载和数据转换的操作。

本小节,我们将会用CIFAR10数据集。主要类标签: ‘airplane’, ‘automobile’, ‘bird’, ‘cat’, ‘deer’, ‘dog’, ‘frog’, ‘horse’, ‘ship’, ‘truck’。CIFAR10中图片大小33232。

训练图片分类器,将按照以下步骤:

- 使用

torchvision加载和标准化数据 - 定义卷积神经网络

- 定义损失函数

- 在训练集上训练

- 在测试集上测试

Loading and normalizing CIFAR10

import torch

import torchvision

import torchvision.transforms as transforms

# 构建数据转换器

transform = transforms.Compose([transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))

])

# 在线下载图片数据,并利用数据转换器进行处理

trainset = torchvision.datasets.CIFAR10(root='./data',

train=True,

download=True,

transform=transform)

# 将数据batch处理

trainloader = torch.utils.data.DataLoader(trainset,

batch_size=4,

shuffle=True,

num_workers=2)

testset = torchvision.datasets.CIFAR10(root='./data', train=False,

download=True, transform=transform)

testloader = torch.utils.data.DataLoader(testset, batch_size=4,

shuffle=False, num_workers=2)

classes = ('plane', 'car', 'bird', 'cat',

'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

Files already downloaded and verified

Files already downloaded and verified

# 查看下训练集的图片

import matplotlib.pyplot as plt

import numpy as np

def imshow(img):

img = img/2 + 0.5 # 非标准化

nping = img.numpy() # 转换为numpy array

plt.imshow(np.transpose(nping, (1, 2, 0)))

plt.show()

# 获取训练集图片

dataiter = iter(trainloader)

images, labels = dataiter.next()

# 展示图片,make_grid的作用是将若干幅图像拼成一幅图像

imshow(torchvision.utils.make_grid(images))

# 打印标签

print(' \t'.join('%5s' % classes[labels[j]] for j in range(4)))

deer ship horse deer

Define a Convolutional Neural Network

import torch.nn as nn

import torch.nn.functional as F

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = nn.Conv2d(3, 6, 5)

self.pool = nn.MaxPool2d(2, 2)

self.conv2 = nn.Conv2d(6, 16, 5)

self.fc1 = nn.Linear(16*5*5, 120)

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, 10)

def forward(self, x):

x = self.pool(F.relu(self.conv1(x)))

x = self.pool(F.relu(self.conv2(x)))

x = x.view(-1, 16*5*5)

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

x = self.fc3(x)

return x

net = Net()

Define a Loss function and optimizer

import torch.optim as optim

criterion = nn.CrossEntropyLoss()

optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

Train the network

for epoch in range(2): # loop over the dataset multiple times

running_loss = 0.0

for i, data in enumerate(trainloader, 0):

inputs, labels = data

# zero the parameter gradients

optimizer.zero_grad()

# forward

outputs = net(inputs)

loss = criterion(outputs, labels)

# backward

loss.backward()

# optimize

optimizer.step()

# 每批batch的损失函数累加

running_loss += loss.item()

if i % 2000 == 1999:

print('[%d, %5d] loss: %.3f' % (epoch+1, i+1, running_loss/2000))

running_loss = 0.0

print('Finished Training')

[1, 2000] loss: 2.171

[1, 4000] loss: 1.799

[1, 6000] loss: 1.655

[1, 8000] loss: 1.556

[1, 10000] loss: 1.501

[1, 12000] loss: 1.455

[2, 2000] loss: 1.377

[2, 4000] loss: 1.382

[2, 6000] loss: 1.338

[2, 8000] loss: 1.321

[2, 10000] loss: 1.301

[2, 12000] loss: 1.278

Finished Training

# 保存训练模型

PATH = './cifar_net.pth'

torch.save(net.state_dict(), PATH)

Test the network on the test data

dataiter = iter(testloader)

images, labels = dataiter.next()

# print images

imshow(torchvision.utils.make_grid(images))

print('GroundTruth: ', ' '.join('%5s' % classes[labels[j]] for j in range(4)))

GroundTruth: cat ship ship plane

# 对测试集预测

outputs = net(images)

_, predicted = torch.max(outputs, 1)

print('Predicted: ', ' '.join('%5s'%classes[predicted[j]] for j in range(4)))

Predicted: cat ship ship plane

# 重新加载模型文件,对测试集进行预测

net = Net()

net.load_state_dict(torch.load(PATH))

outputs = net(images)

_, predicted = torch.max(outputs, 1)

print('Predicted: ', ' '.join('%5s'%classes[predicted[j]] for j in range(4)))

Predicted: cat ship ship plane

# 针对所有的数据集进行预测和评估

correct = 0

total = 0

with torch.no_grad():

for data in testloader:

images, labels = data

outputs = net(images)

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

print('Accuracy of the network on the 10000 test images: %d %%' % (100*correct/total))

Accuracy of the network on the 10000 test images: 55 %

# 针对具体的类,其预测效果如何

class_correct = list(0. for i in range(10))

class_total = list(0. for i in range(10))

with torch.no_grad():

for data in testloader:

images, labels = data

outputs = net(images)

_, predicted = torch.max(outputs, 1)

c = (predicted == labels).squeeze()

for i in range(4):

label = labels[i]

class_correct[label] += c[i].item()

class_total[label] += 1

for i in range(10):

print('Accuracy of %5s : %2d %%' % (classes[i], 100*class_correct[i]/class_total[i]))

Accuracy of plane : 69 %

Accuracy of car : 79 %

Accuracy of bird : 21 %

Accuracy of cat : 22 %

Accuracy of deer : 43 %

Accuracy of dog : 57 %

Accuracy of frog : 77 %

Accuracy of horse : 67 %

Accuracy of ship : 54 %

Accuracy of truck : 63 %

Training on GPU

# 查看下自己的设备是否支持GPU

device = torch.device("cuda:0" if torch.cuda.is_available() else 'cpu')

print(device)

cuda:0

# 将神经网络模型转移到GPU上

net.to(device)

# 将数据转移到GPU上

inputs, labels = data[0].to(device), data[1].to(device)

Learning Pytorch with examples

基于一些简单的例子,对numpy和tensors分别构建神经网络进行对比。

warm-up: numpy

# -*- coding: utf-8 -*-

import numpy as np

# N: batch size, D_in: input dimenesion

# H: hidden dimension,D_out: out dimension

N, D_in, H, D_out = 64, 1000, 100, 10

# create random input and outtput data

x = np.random.randn(N, D_in)

y = np.random.randn(N, D_out)

# randomly initialize weights

w1 = np.random.randn(D_in, H)

w2 = np.random.randn(H, D_out)

learning_rate = 1e-6

for t in range(500):

# foward

h = x.dot(w1)

h_relu = np.maximum(h, 0)

y_pred = h_relu.dot(w2)

# loss

loss = np.square(y_pred - y).sum()

# print(t, loss)

# backprop

grad_y_pred = 2.0 * (y_pred - y)

grad_w2 = h_relu.T.dot(grad_y_pred)

grad_h_relu = grad_y_pred.dot(w2.T)

grad_h = grad_h_relu.copy()

grad_h[h < 0] = 0

grad_w1 = x.T.dot(grad_h)

# update weights

w1 -= learning_rate * grad_w1

w2 -= learning_rate * grad_w2

Pytorch: tensors

# -*- coding: utf-8 -*-

import torch

dtype = torch.float

device = torch.device("cpu")

# device = torch.device("cuda:0") # Uncomment this to run on GPU

# N is batch size; D_in is input dimension;

# H is hidden dimension; D_out is output dimension.

N, D_in, H, D_out = 64, 1000, 100, 10

# Create random input and output data

x = torch.randn(N, D_in, device=device, dtype=dtype)

y = torch.randn(N, D_out, device=device, dtype=dtype)

# Randomly initialize weights

w1 = torch.randn(D_in, H, device=device, dtype=dtype)

w2 = torch.randn(H, D_out, device=device, dtype=dtype)

learning_rate = 1e-6

for t in range(500):

# Forward

h = x.mm(w1)

# tensor.clamp(min=p, max=q),表示tensor每在小于p的值,给它替换为p;大于q的值,替换为q;其他不变。

h_relu = h.clamp(min=0)

y_pred = h_relu.mm(w2)

# loss

loss = (y_pred - y).pow(2).sum().item()

if t % 100 == 99:

print(t, loss)

# backprop

grad_y_pred = 2.0 * (y_pred - y)

grad_w2 = h_relu.t().mm(grad_y_pred)

grad_h_relu = grad_y_pred.mm(w2.t())

# clone函数相当于深拷贝,等同于copy()

grad_h = grad_h_relu.clone()

grad_h[h < 0] = 0

grad_w1 = x.t().mm(grad_h)

# 更新weights

w1 -= learning_rate*grad_w1

w2 -= learning_rate*grad_w2

99 725.5035400390625

199 6.171583652496338

299 0.0905323326587677

399 0.0018679401837289333

499 0.00015406067541334778

Autograd

# -*- coding: utf-8 -*-

import torch

dtype = torch.float

device = torch.device('cpu')

# N: batch size, D_in: input dimenesion

# H: hidden dimension,D_out: out dimension

N, D_in, H, D_out = 64, 1000, 100, 10

x = torch.randn(N, D_in, device=device, dtype=dtype)

y = torch.randn(N, D_out, device=device, dtype=dtype)

# 注意:与上面代码不同之处 requires_grad=True,主要是使用autograd,构建计算图后自动求导,进行tracking history

w1 = torch.randn(D_in, H, device=device, dtype=dtype, requires_grad=True)

w2 = torch.randn(H, D_out, device=device, dtype=dtype, requires_grad=True)

learning_rate = 1e-6

for t in range(500):

# forward

y_pred = x.mm(w1).clamp(min=0).mm(w2)

# loss

loss = (y_pred - y).pow(2).sum()

if t%100 == 99:

print(t, loss.item())

# backward

loss.backward()

# wrap in torch.no_grad(), because Weights have requires_grad=True, but we don't need to track this

# in autograd.

with torch.no_grad():

w1 -= learning_rate * w1.grad

w2 -= learning_rate * w2.grad

# zero the gradients after updating weights

w1.grad.zero_()

w2.grad.zero_()

99 701.320068359375

199 4.477272987365723

299 0.044173479080200195

399 0.0007534730830229819

499 7.956846820889041e-05

Pytorch: Defining new autograd functions

定义torch.autograd.Function的子类,作为function对象,能够在前向传播和后向传播中运算。

# -*- coding: utf-8 -*-

import torch

class MyReLU(torch.autograd.Function):

"""

ctx is a context object that can be used

to stash(隐藏) information for backward computation。

You can cache arbitrary objects for use in the backward pass

using the ctx.save_for_backward method(利用ctx.save_for_backward可以缓存任何对象,从而用在backward computations)

"""

@staticmethod

def forward(ctx, input):

# input 缓存起来

ctx.save_for_backward(input)

return input.clamp(min=0)

@staticmethod

def backward(ctx, grad_output):

# backward时,input 再拿出来

input, = ctx.saved_tensors

grad_input = grad_output.clone()

grad_input[input<0] = 0

return grad_input

dtype = torch.float

device = torch.device('cpu')

N, D_in, H, D_out = 64, 1000, 100, 10

x = torch.randn(N, D_in, device=device, dtype=dtype)

y = torch.randn(N, D_out, device=device, dtype=dtype)

w1 = torch.randn(D_in, H, device=device, dtype=dtype, requires_grad=True)

w2 = torch.randn(H, D_out, device=device, dtype=dtype, requires_grad=True)

learning_rate = 1e-6

for t in range(500):

# to apply our Function, we use Function.apply method. we alias this as 'relu'

relu = MyReLU.apply

# Forward

y_pred = relu(x.mm(w1)).mm(w2)

# loss

loss = (y_pred - y).pow(2).sum()

if t % 100 == 99:

print(t, loss.item())

# backward

loss.backward()

# update weights

with torch.no_grad():

w1 -= learning_rate * w1.grad

w2 -= learning_rate * w2.grad

# Manually zero the gradients after updating weights

w1.grad.zero_()

w2.grad.zero_()

99 392.440673828125

199 1.3885456323623657

299 0.007697926368564367

399 0.00017928658053278923

499 3.0681290809297934e-05

Pytorch: nn

nn包定义了一系列Modules,相同于神经网络结构的layers。当然,nn包也定义了一系列损失函数。

# -*- coding: utf-8 -*-

import torch

N, D_in, H, D_out = 64, 1000, 100, 10

x = torch.randn(N, D_in)

y = torch.randn(N, D_out)

# 利用nn包定义我们自己的model,作为 a sequence of layers。

# nn.Sequential是一个Module,其可以包含other Modules

model = torch.nn.Sequential(

torch.nn.Linear(D_in, H),

torch.nn.ReLU(),

torch.nn.Linear(H, D_out),

)

# MSE loss

loss_fn = torch.nn.MSELoss(reduction='sum')

learning_rate = 1e-4

for t in range(500):

y_pred = model(x)

loss = loss_fn(y_pred, y)

if t % 100 == 99:

print(t, loss.item())

# 比起上面的例子,这个是新增的,因为模型构建后grad会被随机初始化,需要归置为0

model.zero_grad()

loss.backward()

# update the weights

with torch.no_grad():

for param in model.parameters():

param -= learning_rate * param.grad

99 1.8741734027862549

199 0.025903530418872833

299 0.0006964506465010345

399 2.3446677005267702e-05

499 8.755716862651752e-07

Pytorch: optim

optimizers常用的有AdaGrad, RMSProp, Adam等。

# -*- coding: utf-8 -*-

import torch

N, D_in, H, D_out = 64, 1000, 100, 10

x = torch.randn(N, D_in)

y = torch.randn(N, D_out)

model = torch.nn.Sequential(

torch.nn.Linear(D_in, H),

torch.nn.ReLU(),

torch.nn.Linear(H, D_out),

)

loss_fn = torch.nn.MSELoss(reduction='sum')

learning_rate = 1e-4

# 构建optimizer

optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

for t in range(500):

y_pred = model(x)

loss = loss_fn(y_pred, y)

if t % 100 == 99:

print(t, loss.item())

# 和model.zero_grad()同样的作用

optimizer.zero_grad()

loss.backward()

# 替代了上面例子的一个个参数更新,相当于封装了一个个参数更新

optimizer.step()

99 36.15200424194336

199 0.32718443870544434

299 0.008027580566704273

399 0.0008134263334795833

499 7.64066498959437e-05

Pytorch: Custom nn Modules

定义nn.Module的子类,自定义Model

# -*- coding: utf-8 -*-

import torch

class TwoLayerNet(torch.nn.Module):

def __init__(self, D_in, H, D_out):

super(TwoLayerNet, self).__init__()

self.linear1 = torch.nn.Linear(D_in, H)

self.linear2 = torch.nn.Linear(H, D_out)

def forward(self, x):

h_relu = self.linear1(x).clamp(min=0)

y_pred = self.linear2(h_relu)

return y_pred

# N is batch size; D_in is input dimension;

# H is hidden dimension; D_out is output dimension.

N, D_in, H, D_out = 64, 1000, 100, 10

x = torch.randn(N, D_in)

y = torch.randn(N, D_out)

model = TwoLayerNet(D_in, H, D_out)

criterion = torch.nn.MSELoss(reduction='sum')

optimizer = torch.optim.SGD(model.parameters(), lr=1e-4)

for t in range(500):

y_pred = model(x)

# loss

loss = criterion(y_pred, y)

if t % 100 == 99:

print(t, loss.item())

# Zero gradients and backward , and update the weights

optimizer.zero_grad()

loss.backward()

optimizer.step()

99 3.0683937072753906

199 0.03691878542304039

299 0.0009349210886284709

399 4.506673212745227e-05

499 3.3089236239902675e-06

Pytorch: Control Flow + Weight Sharing

动态图,控制模型最终的定义。

# -*-coding: utf-8 -*-

import random

import torch

class DynamicNet(torch.nn.Module):

def __init__(self, D_in, H, D_out):

super(DynamicNet, self).__init__()

self.input_linear = torch.nn.Linear(D_in, H)

self.middle_linear = torch.nn.Linear(H, H)

self.out_linear = torch.nn.Linear(H, D_out)

def forward(self, x):

# 对于前向传播,我们随机选择0,1,2,3,随机产生隐藏层的层数

h_relu = self.input_linear(x).clamp(min=0)

for _ in range(random.randint(0, 3)):

h_relu = self.middle_linear(h_relu).clamp(min=0)

y_pred = self.out_linear(h_relu)

return y_pred

N, D_in, H, D_out = 64, 1000, 100, 10

# Create random Tensors to hold inputs and outputs

x = torch.randn(N, D_in)

y = torch.randn(N, D_out)

# Construct our model by instantiating the class defined above

model = DynamicNet(D_in, H, D_out)

criterion = torch.nn.MSELoss(reduction='sum')

optimizer = torch.optim.SGD(model.parameters(), lr=1e-4, momentum=0.9)

for t in range(500):

# Forward pass: Compute predicted y by passing x to the model

y_pred = model(x)

# Compute and print loss

loss = criterion(y_pred, y)

if t % 100 == 99:

print(t, loss.item())

# Zero gradients, perform a backward pass, and update the weights.

optimizer.zero_grad()

loss.backward()

optimizer.step()

99 12.072546005249023

199 21.22652816772461

299 0.1546557992696762

399 0.33444735407829285

499 0.6670736074447632

Tensorflow: Static Graphs

TensorFlow 和 Pytorch其中的不同之处,主要是TensorFlow使用static computational graphs,而Pytorch使用dynamic computational graphs。

注:下面的例子是基于TensorFlow 1版本的,而目前我安装的是TensorFlow 2版本的,不兼容,所以下面代码运行会报错,展示出来,只是为了显示TensorFlow的静态图。

# import tensorflow as tf

# import numpy as np

# # First we set up the computational graph:

# # N is batch size; D_in is input dimension;

# # H is hidden dimension; D_out is output dimension.

# N, D_in, H, D_out = 64, 1000, 100, 10

# # Create placeholders for the input and target data; these will be filled

# # with real data when we execute the graph.

# x = tf.placeholder(tf.float32, shape=(None, D_in))

# y = tf.placeholder(tf.float32, shape=(None, D_out))

# # Create Variables for the weights and initialize them with random data.

# # A TensorFlow Variable persists its value across executions of the graph.

# w1 = tf.Variable(tf.random_normal((D_in, H)))

# w2 = tf.Variable(tf.random_normal((H, D_out)))

# # Forward pass: Compute the predicted y using operations on TensorFlow Tensors.

# # Note that this code does not actually perform any numeric operations; it

# # merely sets up the computational graph that we will later execute.

# h = tf.matmul(x, w1)

# h_relu = tf.maximum(h, tf.zeros(1))

# y_pred = tf.matmul(h_relu, w2)

# # Compute loss using operations on TensorFlow Tensors

# loss = tf.reduce_sum((y - y_pred) ** 2.0)

# # Compute gradient of the loss with respect to w1 and w2.

# grad_w1, grad_w2 = tf.gradients(loss, [w1, w2])

# # Update the weights using gradient descent. To actually update the weights

# # we need to evaluate new_w1 and new_w2 when executing the graph. Note that

# # in TensorFlow the the act of updating the value of the weights is part of

# # the computational graph; in PyTorch this happens outside the computational

# # graph.

# learning_rate = 1e-6

# new_w1 = w1.assign(w1 - learning_rate * grad_w1)

# new_w2 = w2.assign(w2 - learning_rate * grad_w2)

# # Now we have built our computational graph, so we enter a TensorFlow session to

# # actually execute the graph.

# with tf.Session() as sess:

# # Run the graph once to initialize the Variables w1 and w2.

# sess.run(tf.global_variables_initializer())

# # Create numpy arrays holding the actual data for the inputs x and targets

# # y

# x_value = np.random.randn(N, D_in)

# y_value = np.random.randn(N, D_out)

# for t in range(500):

# # Execute the graph many times. Each time it executes we want to bind

# # x_value to x and y_value to y, specified with the feed_dict argument.

# # Each time we execute the graph we want to compute the values for loss,

# # new_w1, and new_w2; the values of these Tensors are returned as numpy

# # arrays.

# loss_value, _, _ = sess.run([loss, new_w1, new_w2],

# feed_dict={x: x_value, y: y_value})

# if t % 100 == 99:

# print(t, loss_value)

What IS torch.nn really?

MNIST data setup

我们使用手写数字图片(0-9)MNIST数据集。利用Python3自带的pathlib模块处理路径,利用requests下载图片。

from pathlib import Path

import requests

# 返回目录data下的object,数据类型是 WindowsPath,返回值WindowsPath('data')

DATA_PATH = Path("data")

# WindowsPath和字符串拼接,生成 WindowsPath('data/mnist')

PATH = DATA_PATH / 'mnist'

# mkdir()的两个参数,

# parents:True,表示任何目录不存在,就会被创建。False,表示若不存在,报错FileNotFoundError。

# exist_ok: False,表示目录如果存在,报错 FileExistsError。True,表示目录如果存在,忽略报错 FileExistsError。

PATH.mkdir(parents=True, exist_ok=True)

URL = "http://deeplearning.net/data/mnist/"

FILENAME = 'mnist.pkl.gz'

if not (PATH / FILENAME).exists():

content = requests.get(URL + FILENAME).content

# 将content写入文件FILENAME中

(PATH / FILENAME).open('wb').write(content)

# 该数据是numpy array 格式,经过上面操作已经以pickle形式被存储

import pickle

import gzip

# as_posix()函数,主要使window系统的反斜杠'\',变成正斜杠'/'

with gzip.open((PATH/FILENAME).as_posix(), 'rb') as f:

((x_train, y_train), (x_valid, y_valid), _) = pickle.load(f, encoding='latin-1')

# 每张图片都是28*28,被存储以a flattened row,长度784(28*28),我们需要reshape to 2d.

from matplotlib import pyplot

import numpy as np

pyplot.imshow(x_train[0].reshape((28, 28)), cmap="gray")

print(x_train.shape)

(50000, 784)

import torch

# 由于数据集是numpy arrays,转换为 torch.tensor

x_train, y_train, x_valid, y_valid = map(torch.tensor, (x_train, y_train, x_valid, y_valid))

n, c = x_train.shape

# 查看数据情况

print(x_train, y_train)

print(x_train.shape)

print(y_train.min(), y_train.max())

tensor([[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

...,

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.]]) tensor([5, 0, 4, ..., 8, 4, 8])

torch.Size([50000, 784])

tensor(0) tensor(9)

Neural net from scratch (no torch.nn)

我们用pytorch的tensor的基本操作来创建模型,不涉及torch.nn等包。

import math

# 利用Xavier initialisation初始化权重,即:乘以系数 1/sqrt(n)

weights = torch.randn(784, 10)/math.sqrt(784)

weights.requires_grad_(True)

bias = torch.zeros(10, requires_grad=True)

# 激活函数, x - log(sum(exp(x)))

def log_softmax(x):

return x - x.exp().sum(-1).log().unsqueeze(-1)

# 自定义模型

def model(xb):

return log_softmax(xb @ weights + bias)

# batch size

bs = 64

# a mini-batch from x

xb = x_train[0: bs]

# 简单的前向传播,此时的权重只是刚开始初始化的权重

preds = model(xb)

# 我们能够看到preds不仅是个tensor values,还是个 gradient function,后面将用来做反向传播。

print(preds[0], preds.shape)

print('*'*50)

# 定义损失函数

def nll(input, target):

return -input[range(target.shape[0]), target].mean()

loss_func = nll

yb = y_train[0: bs]

print(loss_func(preds, yb))

print('*'*50)

# 计算模型的准确率

def accuracy(out, yb):

preds = torch.argmax(out, dim=1)

return (preds == yb).float().mean()

print(accuracy(preds, yb))

tensor([-2.1470, -2.6022, -2.4621, -2.5204, -2.3438, -2.8420, -2.0887, -2.7984,

-1.8764, -1.8892], grad_fn=) torch.Size([64, 10])

**************************************************

tensor(2.3567, grad_fn=)

**************************************************

tensor(0.1719)

run a training loop:

- 选择 a mini-batch of data

- 模型预测

- 计算损失值

- 反向传播,更新模型参数

tip:可以使用Python自带的debugger调式pytorch代码,每一步都能够看到每个变量的值

from IPython.core.debugger import set_trace

lr = 0.5

epochs = 2

for epoch in range(epochs):

for i in range((n-1)//bs + 1):

# set_trace()

start_i = i * bs

end_i = start_i + bs

xb = x_train[start_i: end_i]

yb = y_train[start_i: end_i]

# forward

pred = model(xb)

# loss

loss = loss_func(pred, yb)

# backward

loss.backward()

# update weights

with torch.no_grad():

weights -= weights.grad * lr

bias -= bias.grad * lr

weights.grad.zero_()

bias.grad.zero_()

print(loss_func(model(xb), yb), accuracy(model(xb), yb))

tensor(0.0827, grad_fn=) tensor(1.)

Using torch.nn.functional

与上面例子相比,为了达到同样的目的,充分展现pytorch的优势,其实nn包使代码更简单和灵活。利用torch.nn.functional能够替代我们自己手写的激活函数和损失函数。还能创建神经网络结构,例如pooling、convolutions、linear layers等。

import torch.nn.functional as F

# 损失函数F.cross_entropy 替代自定义的nll

loss_func = F.cross_entropy

# 激活函数F.log_softmax 替代自定义的log_softmax

def model(xb):

return F.log_softmax(xb @ weights + bias, dim=1)

# 最终的损失值和准确率都是同上面计算出来的一样

print(loss_func(model(xb), yb), accuracy(model(xb), yb))

tensor(0.0827, grad_fn=) tensor(1.)

Refactor using nn.Model

我们将会用nn.Module和nn.Parameter模块,nn.Module有许多属性和方法,比如.parameters()和.zero_grad()。同时我们可以定义nn.Module的子类,能够 keep track of state。

from torch import nn

class Mnist_Logistic(nn.Module):

def __init__(self):

super(Mnist_Logistic, self).__init__()

self.weights = nn.Parameter(torch.randn(784, 10) / math.sqrt(784))

self.bias = nn.Parameter(torch.zeros(10))

def forward(self, xb):

return xb @ self.weights + self.bias

model = Mnist_Logistic()

print(loss_func(model(xb), yb))

# 不同于自己定义的参数更新(6.2章节代码),这次使用 model.parameters() and model.zero_grad()

# 这样不至于忘记某些参数,特别当模型很复杂的时候

# warp out little training loop in a fit function

def fit():

for epoch in range(epochs):

for i in range((n-1)//bs + 1):

start_i = i * bs

end_i = start_i + bs

xb = x_train[start_i: end_i]

yb = y_train[start_i: end_i]

pred = model(xb)

loss = loss_func(pred, yb)

loss.backward()

with torch.no_grad():

# 不同于自己定义的参数更新(6.2章节代码),这次使用 model.parameters() and model.zero_grad()

# 这样不至于忘记某些参数,特别当模型很复杂的时候

for p in model.parameters():

p -= p.grad * lr

model.zero_grad()

fit()

print(loss_func(model(xb), yb))

tensor(2.2315, grad_fn=)

tensor(0.0842, grad_fn=)

Refactor using nn.Linear

继续更新我们的代码。不同于之前自定义和初始化self.weights和self.bias,以及xb @ self.weights + self.bias。我们将用nn.Linear替换

class Mnist_Logistic(nn.Module):

def __init__(self):

super(Mnist_Logistic, self).__init__()

self.lin = nn.Linear(784, 10)

def forward(self, xb):

return self.lin(xb)

model = Mnist_Logistic()

Refactor using optim

我们使用torch.optim替换 update each parameter

from torch import optim

def get_model():

model = Mnist_Logistic()

return model, optim.SGD(model.parameters(), lr=lr)

model, opt = get_model()

for epoch in range(epochs):

for i in range((n-1)//bs + 1):

start_i = i * bs

end_i = start_i + bs

xb = x_train[start_i: end_i]

yb = y_train[start_i: end_i]

pred = model(xb)

loss = loss_func(pred, yb)

opt.zero_grad()

loss.backward()

# 替换上个例子更新参数方法

opt.step()

print(loss_func(model(xb), yb))

tensor(0.0808, grad_fn=)

Refactor using Dataset

pytorch的TensorDataset是一种可以包裹tensors的数据集合。

from torch.utils.data import TensorDataset

# x_train, y_train 合并在一起

train_ds = TensorDataset(x_train, y_train)

model, opt = get_model()

for epoch in range(epochs):

for i in range((n - 1) // bs + 1):

# 下面一行代码,等价于:

# xb = x_train[start_i:end_i]

# yb = y_train[start_i:end_i]

xb, yb = train_ds[i * bs: i * bs + bs]

pred = model(xb)

loss = loss_func(pred, yb)

loss.backward()

opt.step()

opt.zero_grad()

print(loss_func(model(xb), yb))

tensor(0.0824, grad_fn=)

Refactor using DataLoader

DataLoader主要用来管理batches。对任何Dataset都可以创建DataLoader。DataLoader让batches迭代更加容易,而不需要在使用train_ds[i*bs : i*bs+bs] 。

from torch.utils.data import DataLoader

train_ds = TensorDataset(x_train, y_train)

train_dl = DataLoader(train_ds, batch_size=bs)

model, opt = get_model()

for epoch in range(epochs):

for xb, yb in train_dl:

pred = model(xb)

loss = loss_func(pred, yb)

opt.zero_grad()

loss.backward()

opt.step()

print(loss_func(model(xb), yb))

tensor(0.0820, grad_fn=)

Add validation

以往章节,都忽略了验证集,但事实上,需要验证集,来判断是否过拟合。另外,shuffle 训练集数据也很重要,

# shuffle训练集

train_ds = TensorDataset(x_train, y_train)

train_dl = DataLoader(train_ds, batch_size=bs, shuffle=True)

# 引入验证集,验证集batch大小,我们设置了是训练集大小的两倍

valid_ds = TensorDataset(x_valid, y_valid)

valid_dl = DataLoader(valid_ds, batch_size=bs * 2)

model, opt = get_model()

# 注:在训练之前,我们先`model.train()`,在验证之前,先`model.eval()`。

# 因为像`nn.BatchNorm2d`和`nn.Dropout`这样的layers被使用时候,能够确保不同阶段(训练阶段和验证阶段)不一样的行为。

for epoch in range(epochs):

# 训练之前,使用 model.train()

model.train()

for xb, yb in train_dl:

pred = model(xb)

loss = loss_func(pred, yb)

opt.zero_grad()

loss.backward()

opt.step()

# 验证之前, 使用 model.eval()

model.eval()

with torch.no_grad():

# 测试集的损失值之和

valid_loss = sum(loss_func(model(xb), yb) for xb, yb in valid_dl)

# 打印每个epoch下,验证集平均损失

print(epoch, valid_loss/len(valid_dl))

0 tensor(0.4226)

1 tensor(0.2868)

Create fit() and get_data()

继续优化我们的代码。由于我们在训练集合验证集做了类似的操作,即计算了损失值。因此,我们可以封装在函数loss_batch中。对于训练集,我们需要optimizer。而对于验证集,我们不需要 optimizer。

def loss_batch(model, loss_func, xb, yb, opt=None):

loss = loss_func(model(xb), yb)

if opt is not None:

opt.zero_grad()

loss.backward()

opt.step()

return loss.item(), len(xb)

def get_data(train_ds, valid_ds, bs):

return (

DataLoader(train_ds, batch_size=bs, shuffle=True),

DataLoader(valid_ds, batch_size=bs * 2),

)

import numpy as np

def fit(epochs, model, loss_func, opt, train_dl, valid_dl):

for epoch in range(epochs):

model.train()

for xb, yb in train_dl:

loss_batch(model, loss_func, xb, yb, opt)

model.eval()

with torch.no_grad():

losses, nums = zip(

*[loss_batch(model, loss_func, xb, yb) for xb, yb in valid_dl]

)

val_loss = np.sum(np.multiply(losses, nums)) / np.sum(nums)

print(epoch, val_loss)

train_dl, valid_dl = get_data(train_ds, valid_ds, bs)

model, opt = get_model()

fit(epochs, model, loss_func, opt, train_dl, valid_dl)

0 0.29847807577848434

1 0.32609606840610506

Switch to CNN

由于前面的章节,没有对模型形式做说明,只是简单的线性函数,本章节将会训练一个cnn模型。

class Mnist_CNN(nn.Module):

def __init__(self):

super().__init__()

self.conv1 = nn.Conv2d(1, 16, kernel_size=3, stride=2, padding=1)

self.conv2 = nn.Conv2d(16, 16, kernel_size=3, stride=2, padding=1)

self.conv3 = nn.Conv2d(16, 10, kernel_size=3, stride=2, padding=1)

def forward(self, xb):

xb = xb.view(-1, 1, 28, 28)

xb = F.relu(self.conv1(xb))

xb = F.relu(self.conv2(xb))

xb = F.relu(self.conv3(xb))

xb = F.avg_pool2d(xb, 4)

return xb.view(-1, xb.size(1))

lr = 0.1

model = Mnist_CNN()

opt = optim.SGD(model.parameters(), lr=lr, momentum=0.9)

fit(epochs, model, loss_func, opt, train_dl, valid_dl)

0 0.4276552426338196

1 0.2699263202905655

nn.Sequential

nn.Sequential对象,可以包含多个Module类。

# 自定义Modulel子类,即:创建个 view layer

class Lambda(nn.Module):

def __init__(self, func):

super().__init__()

self.func = func

def forward(self, x):

return self.func(x)

def preprocess(x):

return x.view(-1, 1, 28, 28)

model = nn.Sequential(

Lambda(preprocess),

nn.Conv2d(1, 16, kernel_size=3, stride=2, padding=1),

nn.ReLU(),

nn.Conv2d(16, 16, kernel_size=3, stride=2, padding=1),

nn.ReLU(),

nn.Conv2d(16, 10, kernel_size=3, stride=2, padding=1),

nn.ReLU(),

nn.AvgPool2d(4),

Lambda(lambda x: x.view(x.size(0), -1)),

)

opt = optim.SGD(model.parameters(), lr=lr, momentum=0.9)

fit(epochs, model, loss_func, opt, train_dl, valid_dl)

0 0.3005018771290779

1 0.2376256714463234

Wrapping DataLoader

继续优化我们的代码,我们想移出 Lambda layer,把数据预处理融入进generator中。

def preprocess(x, y):

return x.view(-1, 1, 28, 28), y

# 自定义个 generator,融入 数据预处理功能,即preprocess()的功能

class WrappedDataLoader:

def __init__(self, dl, func):

self.dl = dl

self.func = func

def __len__(self):

return len(self.dl)

def __iter__(self):

batches = iter(self.dl)

for b in batches:

yield (self.func(*b))

train_dl, valid_dl = get_data(train_ds, valid_ds, bs)

train_dl = WrappedDataLoader(train_dl, preprocess)

valid_dl = WrappedDataLoader(valid_dl, preprocess)

model = nn.Sequential(

nn.Conv2d(1, 16, kernel_size=3, stride=2, padding=1),

nn.ReLU(),

nn.Conv2d(16, 16, kernel_size=3, stride=2, padding=1),

nn.ReLU(),

nn.Conv2d(16, 10, kernel_size=3, stride=2, padding=1),

nn.ReLU(),

nn.AdaptiveAvgPool2d(1),

Lambda(lambda x: x.view(x.size(0), -1)),

)

opt = optim.SGD(model.parameters(), lr=lr, momentum=0.9)

fit(epochs, model, loss_func, opt, train_dl, valid_dl)

0 0.3556475947856903

1 0.26916867879629136

Using your GPU

数据集转移至gpu,模型转移至gpu

dev = torch.device(

"cuda") if torch.cuda.is_available() else torch.device("cpu")

def preprocess(x, y):

return x.view(-1, 1, 28, 28).to(dev), y.to(dev)

train_dl, valid_dl = get_data(train_ds, valid_ds, bs)

train_dl = WrappedDataLoader(train_dl, preprocess)

valid_dl = WrappedDataLoader(valid_dl, preprocess)

model = nn.Sequential(

nn.Conv2d(1, 16, kernel_size=3, stride=2, padding=1),

nn.ReLU(),

nn.Conv2d(16, 16, kernel_size=3, stride=2, padding=1),

nn.ReLU(),

nn.Conv2d(16, 10, kernel_size=3, stride=2, padding=1),

nn.ReLU(),

nn.AdaptiveAvgPool2d(1),

Lambda(lambda x: x.view(x.size(0), -1)),

)

model.to(dev)

opt = optim.SGD(model.parameters(), lr=lr, momentum=0.9)

fit(epochs, model, loss_func, opt, train_dl, valid_dl)

0 0.37574934339523314

1 0.33825436668396

Closing thoughts

模块总结:

- torch.nn

- Module

- Parameter

- functional

- torch.optim: update weights

- Dataset: such as TensorDataset, 合并x_train, y_train

- DataLoader: returns batches of data

Visualizing models, data, and training with tensorboard

以上六章内,我们展示了如何加载数据,数据如何喂进模型,在训练集上训练数据,测试集上测试数据。为了了解发生了什么,我们在模型进行训练时打印出一些统计数据,以了解训练是否在进行。

然而,pytorch结合了TensorBoard(可视化神经网络训练结果的工具)。

# imports

import matplotlib.pyplot as plt

import numpy as np

import torch

import torchvision

import torchvision.transforms as transforms

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

# transforms

transform = transforms.Compose([transforms.ToTensor(),

transforms.Normalize((0.5), (0.5))])

# datasets

trainset = torchvision.datasets.FashionMNIST('./data',

download=True,

train=True,

transform=transform)

testset = torchvision.datasets.FashionMNIST('./data',

download=True,

train=False,

transform=transform)

# dataloaders

trainloader = torch.utils.data.DataLoader(trainset,

batch_size=4,

shuffle=True,

num_workers=2)

testloader = torch.utils.data.DataLoader(testset,

batch_size=4,

shuffle=False,

num_workers=2)

# constant for classes

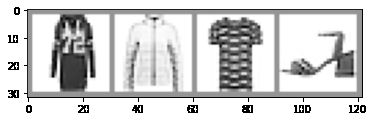

classes = ('T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',

'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle Boot')

# images show

def matplotlib_imshow(img, one_channel=False):

if one_channel:

img = img.mean(dim=0)

img = img/2 + 0.5 # unnormalize

npimg = img.numpy()

if one_channel:

plt.imshow(npimg, cmap='Greys')

else:

print(npimg.shape)

plt.imshow(np.transpose(npimg, (1, 2, 0)))

return npimg

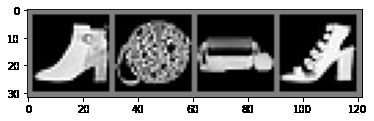

dataiter = iter(trainloader)

images, labels = dataiter.next()

# 展示图片,make_grid的作用是将若干幅图像拼成一幅图像

npimg = matplotlib_imshow(torchvision.utils.make_grid(images), False)

# 打印标签

print(' \t'.join('%5s' % classes[labels[j]] for j in range(4)))

(3, 32, 122)

Ankle Boot Bag Bag Sandal

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = nn.Conv2d(1, 6, 5)

self.pool = nn.MaxPool2d(2, 2)

self.conv2 = nn.Conv2d(6, 16, 5)

self.fc1 = nn.Linear(16 * 4 * 4, 120)

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, 10)

def forward(self, x):

x = self.pool(F.relu(self.conv1(x)))

x = self.pool(F.relu(self.conv2(x)))

x = x.view(-1, 16 * 4 * 4)

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

x = self.fc3(x)

return x

# 网络结构

net = Net()

# 损失函数类

criterion = nn.CrossEntropyLoss()

# optimizer类

optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

TensorBoard setup

from torch.utils.tensorboard import SummaryWriter

# creates a runs/fashion_mnist_experiment_1 folder,responsible for writing information to TensorBoard

writer = SummaryWriter('runs/fashion_mnist_experiment_1')

Writing to TrensorBoard

图片写入TensorBoard

# get some random training images

dataiter = iter(trainloader)

images, labels = dataiter.next()

# create grid of images

img_grid = torchvision.utils.make_grid(images)

# show images

matplotlib_imshow(img_grid, one_channel=True)

# write to tensorboard

writer.add_image('four_fashion_mnist_images', img_grid)

writer.close()

以路径..\runs下,使用命令行运行以下代码:tensorboard --logdir=runs

然后,浏览器输入地址:http://localhost:6006/

图片显示如下:

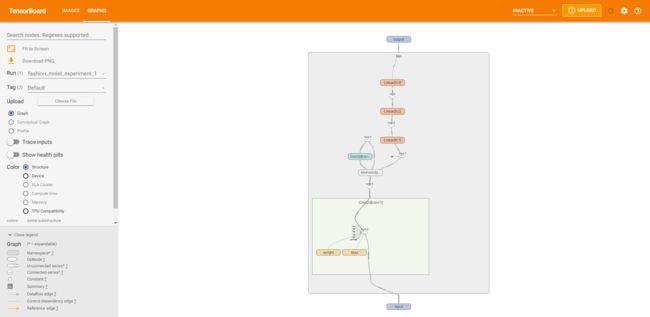

Inspect the model using TensorBoard

TensorBoard强项之一是,它能够可视化复杂的模型结构及交互式可视化的方式。

writer.add_graph(net, images)

writer.close()

Adding a “Projector” to TensorBoard

# visualize the lower dimensional representation of higher dimensional data(用低维数据表示高维数据,并可视化显示)

# 辅助函数

def select_n_random(data, labels, n=100):

"""

select n random datapoints and their corresponding(对应的) labels from a dataset

"""

assert len(data) == len(labels)

# 1-len(data)的数值随机排序

perm = torch.randperm(len(data))

return data[perm][:n], labels[perm][:n]

# select random images and their target indices

images, labels = select_n_random(trainset.data, trainset.targets)

# get class labels for each image

class_labels = [classes[lab] for lab in labels]

# log embeddings

features = images.view(-1, 28*28)

writer.add_embedding(features,

metadata=class_labels,

label_img=images.unsqueeze(1)

)

writer.close()

在TensorBoard的“project”tab中,将会看到100张图片,784维度被投影到3维空间。此外,也可以单击拖拽旋转三维投影。

注:如果根据官网操作会报错,因为TensorFlow的版本问题,在安装pytorch后,还需要安装tensorflow/tensorboard等。

如果报错:‘tensorflow_core._api.v2.io.gfile’ has no attribute ‘get_filesystem’

解决方案:在writer.py文件中加入

import tensorboard as tb tf.io.gfile = tb.compat.tensorflow_stub.io.gfile

Tracking model training with TensorBoard

之前的例子中,我们通过打印训练过程的loss值。本章节,我们将运行loss在TensorBoard,并通过函数plot_classes_preds查看模型的预测。

def images_to_probs(net, images):

output = net(images) # 返回预测概率值

_, preds_tensor = torch.max(output, 1) # 返回预测值

preds = np.squeeze(preds_tensor.numpy())

# 利用softmax函数计算最终预测的概率

return preds, [F.softmax(el, dim=0)[i].item() for i, el in zip(preds, output)]

def plot_classes_preds(net, images, labels):

preds, probs = images_to_probs(net, images)

# plot the images in the batch, along with predicted and true labels

fig = plt.figure(figsize=(12, 48))

for idx in np.arange(4):

ax = fig.add_subplot(1, 4, idx+1, xticks=[], yticks=[])

matplotlib_imshow(images[idx], one_channel=True)

ax.set_title("{0}, {1:.1f}%\n(label: {2})".format(classes[preds[idx]],

probs[idx]*100,

classes[labels[idx]]

),

color=("green" if preds[idx]==labels[idx].item() else "red"))

return fig

running_loss = 0.0

for epoch in range(1): # loop over the dataset multiple times

for i, data in enumerate(trainloader, 0):

# get the inputs; data is a list of [inputs, labels]

inputs, labels = data

# zero the parameter gradients

optimizer.zero_grad()

# forward + backward + optimize

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

running_loss += loss.item()

if i % 1000 == 999: # every 1000 mini-batches...

# ...log the running loss

writer.add_scalar('training loss',

running_loss / 1000,

epoch * len(trainloader) + i)

# ...log a Matplotlib Figure showing the model's predictions on a

# random mini-batch

writer.add_figure('predictions vs. actuals',

plot_classes_preds(net, inputs, labels),

global_step=epoch * len(trainloader) + i)

running_loss = 0.0

print('Finished Training')

Finished Training

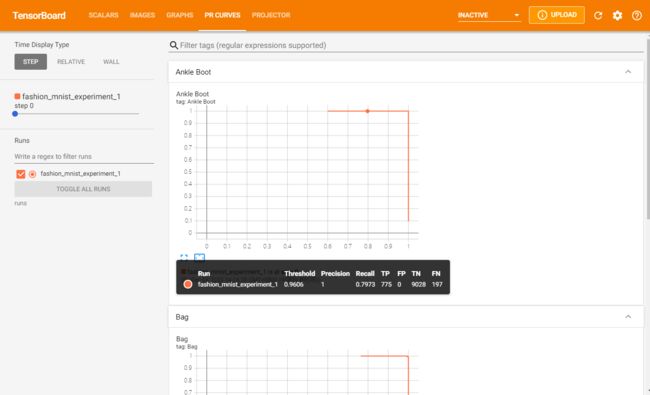

Assessing trained models with TensorBoard

# 1. gets the probability predictions in a test_size x num_classes Tensor

# 2. gets the preds in a test_size Tensor

# takes ~10 seconds to run

class_probs = []

class_preds = []

with torch.no_grad():

for data in testloader:

images, labels = data

output = net(images)

class_probs_batch = [F.softmax(el, dim=0) for el in output]

_, class_preds_batch = torch.max(output, 1)

class_probs.append(class_probs_batch)

class_preds.append(class_preds_batch)

test_probs = torch.cat([torch.stack(batch) for batch in class_probs])

test_preds = torch.cat(class_preds)

# helper function

def add_pr_curve_tensorboard(class_index, test_probs, test_preds, global_step=0):

'''

Takes in a "class_index" from 0 to 9 and plots the corresponding

precision-recall curve

'''

tensorboard_preds = test_preds == class_index

tensorboard_probs = test_probs[:, class_index]

writer.add_pr_curve(classes[class_index],

tensorboard_preds,

tensorboard_probs,

global_step=global_step)

writer.close()

# plot all the pr curves

for i in range(len(classes)):

add_pr_curve_tensorboard(i, test_probs, test_preds)