Introduction to Classic Convolutional Neural Networks (Implemented in TensorFlow)

Reference: https://blog.csdn.net/weixin_39589455/article/details/114950664


Contents

- LeNet

- AlexNet

- VGGNet

- InceptionNet

- ResNet

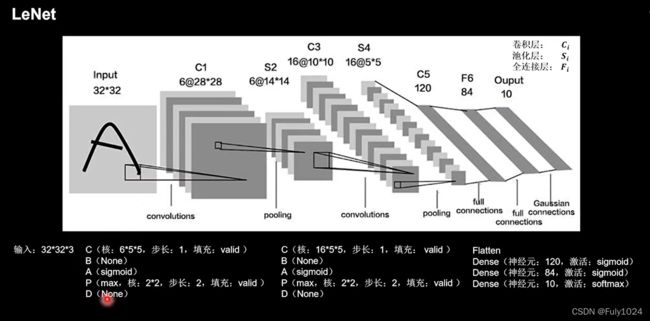

LeNet

References: https://zhuanlan.zhihu.com/p/82495268

https://blog.csdn.net/zrh_CSDN/article/details/81267873

https://blog.csdn.net/weixin_39589455/article/details/114950664

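LeNet is also called LeNet-5 because the original LeNet is a 5-layer convolutional neural network. It consists of two main parts:

- Convolutional layers: 2

- Fully connected layers: 3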

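LeNet layer-by-layer parameters:

- INPUT layer

  The input layer is usually not counted as one of the network's layers.

  Input images are preprocessed to a uniform size of 32×32.

- First convolutional layer, described with CBAPD (Convolution, Batch normalization, Activation, Pooling, Dropout)

  C: 6 kernels of 5×5, stride 1, padding: valid

  B: None (batch normalization did not exist yet when LeNet was proposed)

  A: activation function: sigmoid

  P: 2×2 max pooling, stride 2, padding: valid

  D: None (Dropout did not exist yet when LeNet was proposed)

- Second convolutional layer, described with CBAPD

  C: 16 kernels of 5×5, stride 1, padding: valid

  B: None (no batch normalization in LeNet)

  A: activation function: sigmoid

  P: 2×2 max pooling, stride 2, padding: valid

  D: None (no Dropout in LeNet)

- Flatten layer

- 3 fully connected layers

  Dense(units: 120, activation: sigmoid)

  Dense(units: 84, activation: sigmoid)

  Dense(units: 10, activation: softmax), so that the output is a probability distribution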
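To check the layer sizes above, here is a small sketch that traces the feature-map size through LeNet for a 32×32 input. It assumes the usual size formulas: valid padding gives floor((n - k) / s) + 1 and same padding gives ceil(n / s). The helper names are illustrative, not part of any library.

```python
import math


def conv_output_size(n, k, s=1, padding='valid'):
    """Spatial output size of a convolution or pooling window along one axis."""
    if padding == 'valid':
        return (n - k) // s + 1
    if padding == 'same':
        return math.ceil(n / s)
    raise ValueError("padding must be 'valid' or 'same'")


def lenet_shapes(size=32):
    """Trace (name, height, width, channels) through LeNet's conv/pool layers."""
    shapes = []
    n = conv_output_size(size, 5)      # C1: 6 kernels of 5x5, stride 1, valid
    shapes.append(('C1', n, n, 6))
    n = conv_output_size(n, 2, 2)      # P1: 2x2 max pooling, stride 2
    shapes.append(('P1', n, n, 6))
    n = conv_output_size(n, 5)         # C2: 16 kernels of 5x5, stride 1, valid
    shapes.append(('C2', n, n, 16))
    n = conv_output_size(n, 2, 2)      # P2: 2x2 max pooling, stride 2
    shapes.append(('P2', n, n, 16))
    shapes.append(('Flatten', n * n * 16))
    return shapes


for entry in lenet_shapes():
    print(entry)
```

For a 32×32 input this gives 28×28×6, 14×14×6, 10×10×16 and 5×5×16, so the Flatten layer hands a vector of 400 values to the 120-unit Dense layer.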

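Tip: going from the Input layer to C1 is called convolution. Because each kernel's weights are shared across every position of the image, convolution needs far fewer parameters than a fully connected layer.

Going from C1 to S2 is called pooling, which usually takes either the maximum (max pooling) or the average (mean pooling) of each pooling window.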
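A minimal NumPy sketch of 2×2, stride-2 pooling on a single-channel feature map, showing how max pooling and mean pooling differ on the same input. It assumes the height and width are even; `pool2x2` is an illustrative helper, not a TensorFlow function.

```python
import numpy as np


def pool2x2(feature_map, mode='max'):
    """2x2 pooling with stride 2 on a 2-D array (height and width must be even)."""
    h, w = feature_map.shape
    # Group the map into non-overlapping 2x2 windows: shape (h/2, 2, w/2, 2)
    windows = feature_map.reshape(h // 2, 2, w // 2, 2)
    if mode == 'max':
        return windows.max(axis=(1, 3))   # largest value in each window
    if mode == 'mean':
        return windows.mean(axis=(1, 3))  # average value in each window
    raise ValueError("mode must be 'max' or 'mean'")


x = np.array([[1, 3, 2, 0],
              [4, 2, 1, 5],
              [0, 1, 3, 2],
              [2, 6, 1, 1]], dtype=float)
print(pool2x2(x, 'max'))   # window maxima: 4, 5, 6, 3
print(pool2x2(x, 'mean'))  # window means: 2.5, 2.0, 2.25, 1.75
```

Either way, a 4×4 map shrinks to 2×2, which is what each 2×2, stride-2 pooling step in LeNet does to its input.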

For more on pooling and convolution, see: https://blog.csdn.net/weixin_41417982/article/details/81412076

LeNet on CIFAR-10:

```python
# -*- coding: utf-8 -*-
import os

import numpy as np
import tensorflow as tf
from matplotlib import pyplot as plt
# Private TensorFlow module; its location may differ between TensorFlow versions
from tensorflow.python.keras.datasets.cifar import load_batch


def load_cifar10_data():
    # Calling tf.keras.datasets.cifar10.load_data() directly raised an error here,
    # so the dataset is read from a local copy instead.
    # For downloading CIFAR-10, see https://zhuanlan.zhihu.com/p/129078357
    # path is the directory the archive was extracted to
    path = 'D:\\Repository\\ai_data\\DeepLearing_TensorFlow2.0-book\\cifar10\\cifar-10-batches-py\\'
    num_train_samples = 50000

    x_train = np.empty((num_train_samples, 3, 32, 32), dtype='uint8')
    y_train = np.empty((num_train_samples,), dtype='uint8')

    # The training set is stored as 5 batches of 10000 images each
    for i in range(1, 6):
        fpath = os.path.join(path, 'data_batch_' + str(i))
        (x_train[(i - 1) * 10000:i * 10000, :, :, :],
         y_train[(i - 1) * 10000:i * 10000]) = load_batch(fpath)

    fpath = os.path.join(path, 'test_batch')
    x_test, y_test = load_batch(fpath)

    y_train = np.reshape(y_train, (len(y_train), 1))
    y_test = np.reshape(y_test, (len(y_test), 1))

    # The raw data is (N, 3, 32, 32); convert to (N, 32, 32, 3) for channels_last
    if tf.keras.backend.image_data_format() == 'channels_last':
        x_train = x_train.transpose(0, 2, 3, 1)
        x_test = x_test.transpose(0, 2, 3, 1)

    x_test = x_test.astype(x_train.dtype)
    y_test = y_test.astype(y_train.dtype)

    x_train, x_test = x_train / 255.0, x_test / 255.0  # normalize pixels to [0, 1]
    return (x_train, y_train), (x_test, y_test)


class LeNetModel(tf.keras.Model):
    def __init__(self):
        super(LeNetModel, self).__init__()
        # First layer: convolution + pooling
        self.c1 = tf.keras.layers.Conv2D(filters=6, kernel_size=(5, 5),
                                         activation='sigmoid')
        self.p1 = tf.keras.layers.MaxPool2D(pool_size=(2, 2), strides=2)
        # Second layer: convolution + pooling
        self.c2 = tf.keras.layers.Conv2D(filters=16, kernel_size=(5, 5),
                                         activation='sigmoid')
        self.p2 = tf.keras.layers.MaxPool2D(pool_size=(2, 2), strides=2)
        # Flatten layer
        self.flatten = tf.keras.layers.Flatten()
        # Three fully connected layers
        self.f1 = tf.keras.layers.Dense(120, activation='sigmoid')
        self.f2 = tf.keras.layers.Dense(84, activation='sigmoid')
        self.f3 = tf.keras.layers.Dense(10, activation='softmax')

    def call(self, x):
        x = self.c1(x)
        x = self.p1(x)
        x = self.c2(x)
        x = self.p2(x)
        x = self.flatten(x)
        x = self.f1(x)
        x = self.f2(x)
        y = self.f3(x)
        return y


def load_local_model(model_path):
    # Resume from a previously saved model if one exists, otherwise build a new one
    if os.path.exists(model_path + '/saved_model.pb'):
        tf.print('-------------load the model-----------------')
        local_model = tf.keras.models.load_model(model_path)
    else:
        local_model = LeNetModel()
    local_model.compile(optimizer='adam',
                        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
                        metrics=['sparse_categorical_accuracy'])
    return local_model


if __name__ == '__main__':
    (x_train, y_train), (x_test, y_test) = load_cifar10_data()
    model_path = "./data/model/cifar/letnet"
    model = load_local_model(model_path)
    # My machine is slow, so train for only 3 epochs
    history = model.fit(x_train, y_train, batch_size=32, epochs=3, validation_data=(x_test, y_test),
                        validation_freq=1)
    model.summary()
    # Save the model
    model.save(model_path, save_format="tf")
```

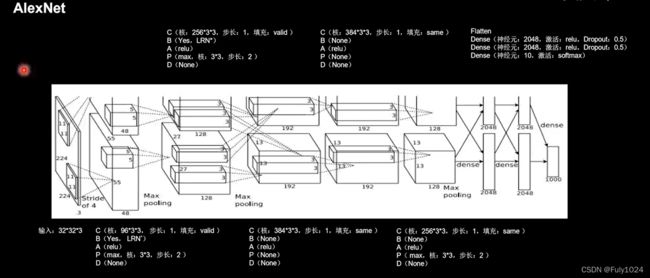

AlexNet

Reference: https://zhuanlan.zhihu.com/p/42914388

Introduced in 2012, AlexNet uses the ReLU activation function, which speeds up training, and uses Dropout to mitigate overfitting.
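A small NumPy sketch of both ideas. ReLU is max(0, x); its gradient is 1 for every positive input, whereas the sigmoid's gradient never exceeds 0.25, which is one reason ReLU networks train faster. Inverted dropout zeroes each activation with probability `rate` during training and scales the survivors by 1/(1 - rate) so the expected activation is unchanged; at inference time it does nothing. Keras's `Dropout` layer follows the same scheme, but the functions here are illustrative, not its implementation.

```python
import numpy as np


def relu(x):
    """ReLU activation: keep positive values, zero out the rest."""
    return np.maximum(0.0, x)


def sigmoid_grad(x):
    """Derivative of the sigmoid; its maximum value is 0.25 (at x = 0)."""
    s = 1.0 / (1.0 + np.exp(-x))
    return s * (1.0 - s)


def dropout(x, rate, training, rng):
    """Inverted dropout: drop with probability `rate`, rescale survivors by 1/(1-rate)."""
    if not training or rate == 0.0:
        return x  # inference: the layer is an identity
    keep_mask = rng.random(x.shape) >= rate
    return np.where(keep_mask, x / (1.0 - rate), 0.0)


rng = np.random.default_rng(0)
x = np.array([-2.0, -0.5, 0.0, 1.5, 3.0])
print(relu(x))
print(sigmoid_grad(0.0))

activations = np.ones(100_000)
dropped = dropout(activations, rate=0.5, training=True, rng=rng)
print(dropped.mean())  # close to 1.0: the expected activation is preserved
```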

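AlexNet has 8 layers: 5 convolutional layers and 3 fully connected layers.

AlexNet layer-by-layer parameters (as adapted here for 32×32 CIFAR-10 images; the original AlexNet used larger kernels, such as 11×11 in the first layer):

- First convolutional layer, described with CBAPD

  C: 96 kernels of 3×3, stride 1, padding: valid

  B: yes, LRN (local response normalization) in the original AlexNet; the code below uses BatchNormalization instead

  A: activation function: relu

  P: 3×3 max pooling, stride 2, padding: valid

  D: None

- Second convolutional layer, described with CBAPD

  C: 256 kernels of 3×3, stride 1, padding: valid

  B: yes, LRN (BatchNormalization in the code below)

  A: activation function: relu

  P: 3×3 max pooling, stride 2, padding: valid

  D: None

- Third convolutional layer, described with CBAPD

  C: 384 kernels of 3×3, stride 1, padding: same

  B: None

  A: activation function: relu

  P: None

  D: None

- Fourth convolutional layer, described with CBAPD

  C: 384 kernels of 3×3, stride 1, padding: same

  B: None

  A: activation function: relu

  P: None

  D: None

- Fifth convolutional layer, described with CBAPD

  C: 256 kernels of 3×3, stride 1, padding: same

  B: None

  A: activation function: relu

  P: 3×3 max pooling, stride 2, padding: valid

  D: None

- Flatten layer

- Fully connected layers

  Dense(units: 2048, activation: relu, Dropout: 0.5)

  Dense(units: 2048, activation: relu, Dropout: 0.5)

  Dense(units: 10, activation: softmax)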
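Running the same size arithmetic over this AlexNet variant (3×3 kernels, 3×3 stride-2 pooling, 32×32 input, as in the code below) shows how small the feature maps get and why the fully connected layers hold most of the parameters. This is a sketch using the standard valid/same size formulas; the helper names are illustrative.

```python
import math


def out_size(n, k, s=1, padding='valid'):
    """Output size along one spatial axis for a conv or pooling window."""
    if padding == 'valid':
        return (n - k) // s + 1
    return math.ceil(n / s)  # 'same' padding


def alexnet_trace(size=32):
    """Spatial size after each conv/pool layer of the CIFAR-10 AlexNet variant."""
    layers = [  # (name, kernel size, stride, padding)
        ('c1', 3, 1, 'valid'), ('p1', 3, 2, 'valid'),
        ('c2', 3, 1, 'valid'), ('p2', 3, 2, 'valid'),
        ('c3', 3, 1, 'same'), ('c4', 3, 1, 'same'),
        ('c5', 3, 1, 'same'), ('p3', 3, 2, 'valid'),
    ]
    trace = []
    n = size
    for name, k, s, padding in layers:
        n = out_size(n, k, s, padding)
        trace.append((name, n))
    return trace


trace = alexnet_trace()
flat = trace[-1][1] ** 2 * 256          # c5 outputs 256 channels
fc1_params = flat * 2048 + 2048         # weights + biases of the first Dense(2048)
c5_params = 3 * 3 * 384 * 256 + 256     # weights + biases of conv layer 5
print(trace)
print(flat, fc1_params, c5_params)
```

The map goes 32 → 30 → 14 → 12 → 5 → 5 → 5 → 5 → 2, so Flatten yields 2×2×256 = 1024 values. The first Dense(2048) alone then has 2,099,200 parameters, more than twice as many as the largest of the later convolutional layers here.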

Training AlexNet needs a lot of compute; don't try it on a CPU-only machine.

AlexNet on CIFAR-10:

```python
# -*- coding: utf-8 -*-
import os

import numpy as np
import tensorflow as tf
from matplotlib import pyplot as plt
# Private TensorFlow module; its location may differ between TensorFlow versions
from tensorflow.python.keras.datasets.cifar import load_batch


def load_cifar10_data():
    # Calling tf.keras.datasets.cifar10.load_data() directly raised an error here,
    # so the dataset is read from a local copy instead.
    # For downloading CIFAR-10, see https://zhuanlan.zhihu.com/p/129078357
    # path is the directory the archive was extracted to
    path = 'D:\\Repository\\ai_data\\DeepLearing_TensorFlow2.0-book\\cifar10\\cifar-10-batches-py\\'
    num_train_samples = 50000

    x_train = np.empty((num_train_samples, 3, 32, 32), dtype='uint8')
    y_train = np.empty((num_train_samples,), dtype='uint8')

    # The training set is stored as 5 batches of 10000 images each
    for i in range(1, 6):
        fpath = os.path.join(path, 'data_batch_' + str(i))
        (x_train[(i - 1) * 10000:i * 10000, :, :, :],
         y_train[(i - 1) * 10000:i * 10000]) = load_batch(fpath)

    fpath = os.path.join(path, 'test_batch')
    x_test, y_test = load_batch(fpath)

    y_train = np.reshape(y_train, (len(y_train), 1))
    y_test = np.reshape(y_test, (len(y_test), 1))

    # The raw data is (N, 3, 32, 32); convert to (N, 32, 32, 3) for channels_last
    if tf.keras.backend.image_data_format() == 'channels_last':
        x_train = x_train.transpose(0, 2, 3, 1)
        x_test = x_test.transpose(0, 2, 3, 1)

    x_test = x_test.astype(x_train.dtype)
    y_test = y_test.astype(y_train.dtype)

    x_train, x_test = x_train / 255.0, x_test / 255.0  # normalize pixels to [0, 1]
    return (x_train, y_train), (x_test, y_test)


class AlexNetModel(tf.keras.Model):
    def __init__(self):
        super(AlexNetModel, self).__init__()
        # Layer 1: CBAPD (the original AlexNet used LRN; BatchNormalization is used instead)
        self.c1 = tf.keras.layers.Conv2D(filters=96, kernel_size=(3, 3))
        self.b1 = tf.keras.layers.BatchNormalization()
        self.a1 = tf.keras.layers.Activation('relu')
        self.p1 = tf.keras.layers.MaxPool2D(pool_size=(3, 3), strides=2)
        # Layer 2: CBAPD
        self.c2 = tf.keras.layers.Conv2D(filters=256, kernel_size=(3, 3))
        self.b2 = tf.keras.layers.BatchNormalization()
        self.a2 = tf.keras.layers.Activation('relu')
        self.p2 = tf.keras.layers.MaxPool2D(pool_size=(3, 3), strides=2)
        # Layers 3-5: convolution + ReLU with 'same' padding; pooling only after layer 5
        self.c3 = tf.keras.layers.Conv2D(filters=384, kernel_size=(3, 3), padding='same',
                                         activation='relu')
        self.c4 = tf.keras.layers.Conv2D(filters=384, kernel_size=(3, 3), padding='same',
                                         activation='relu')
        self.c5 = tf.keras.layers.Conv2D(filters=256, kernel_size=(3, 3), padding='same',
                                         activation='relu')
        self.p3 = tf.keras.layers.MaxPool2D(pool_size=(3, 3), strides=2)
        # Flatten, then three fully connected layers (the first two with Dropout 0.5)
        self.flatten = tf.keras.layers.Flatten()
        self.f1 = tf.keras.layers.Dense(2048, activation='relu')
        self.d1 = tf.keras.layers.Dropout(0.5)
        self.f2 = tf.keras.layers.Dense(2048, activation='relu')
        self.d2 = tf.keras.layers.Dropout(0.5)
        self.f3 = tf.keras.layers.Dense(10, activation='softmax')

    def call(self, x):
        x = self.c1(x)
        x = self.b1(x)
        x = self.a1(x)
        x = self.p1(x)

        x = self.c2(x)
        x = self.b2(x)
        x = self.a2(x)
        x = self.p2(x)

        x = self.c3(x)
        x = self.c4(x)
        x = self.c5(x)
        x = self.p3(x)

        x = self.flatten(x)
        x = self.f1(x)
        x = self.d1(x)
        x = self.f2(x)
        x = self.d2(x)
        y = self.f3(x)
        return y


def load_local_model(model_path):
    # Resume from a previously saved model if one exists, otherwise build a new one
    if os.path.exists(model_path + '/saved_model.pb'):
        tf.print('-------------load the model-----------------')
        local_model = tf.keras.models.load_model(model_path)
    else:
        local_model = AlexNetModel()
    local_model.compile(optimizer='adam',
                        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
                        metrics=['sparse_categorical_accuracy'])
    return local_model


if __name__ == '__main__':
    (x_train, y_train), (x_test, y_test) = load_cifar10_data()
    model_path = "./data/model/cifar/alexnet"
    model = load_local_model(model_path)
    # Training is slow on a weak machine, so only a few epochs are run;
    # on a CPU-only machine, don't run it at all
    history = model.fit(x_train, y_train, batch_size=32, epochs=3, validation_data=(x_test, y_test),
                        validation_freq=1)
    model.summary()
    # Save the model
    model.save(model_path, save_format="tf")
```

VGGNet

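Introduced in 2014, VGGNet uses small (3×3) convolution kernels, which reduces the number of parameters while improving recognition accuracy. Its regular structure makes it well suited to hardware acceleration. It is also resource-hungry, though: a CPU-only machine will struggle to train it.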
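Why small kernels save parameters: two stacked stride-1 3×3 convolutions see the same 5×5 region of the input as one 5×5 convolution (and three see 7×7), but need fewer weights. A quick count, assuming C input and C output channels and ignoring biases; the channel count 256 is just an example.

```python
def conv_weights(k, c_in, c_out):
    """Number of weights in a k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def stacked_receptive_field(num_layers, k=3):
    """Receptive field of `num_layers` stacked stride-1 k x k convolutions."""
    return 1 + num_layers * (k - 1)


c = 256
two_3x3 = 2 * conv_weights(3, c, c)    # 18 * C^2
one_5x5 = conv_weights(5, c, c)        # 25 * C^2
three_3x3 = 3 * conv_weights(3, c, c)  # 27 * C^2
one_7x7 = conv_weights(7, c, c)        # 49 * C^2
print(stacked_receptive_field(2), two_3x3, one_5x5)
print(stacked_receptive_field(3), three_3x3, one_7x7)
```

Stacking also inserts an extra ReLU between the layers, so the smaller kernels add non-linearity as well as saving weights.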

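Taking VGG16 as an example: 13 convolutional layers plus 3 fully connected layers give 16 layers.

The structure is still convolutional layers followed by fully connected layers.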

VGGNet on CIFAR-10:

```python
# -*- coding: utf-8 -*-
import os

import numpy as np
import tensorflow as tf
from matplotlib import pyplot as plt
# Private TensorFlow module; its location may differ between TensorFlow versions
from tensorflow.python.keras.datasets.cifar import load_batch


def load_cifar10_data():
    # Calling tf.keras.datasets.cifar10.load_data() directly raised an error here,
    # so the dataset is read from a local copy instead.
    # For downloading CIFAR-10, see https://zhuanlan.zhihu.com/p/129078357
    # path is the directory the archive was extracted to
    path = 'D:\\Repository\\ai_data\\DeepLearing_TensorFlow2.0-book\\cifar10\\cifar-10-batches-py\\'
    num_train_samples = 50000

    x_train = np.empty((num_train_samples, 3, 32, 32), dtype='uint8')
    y_train = np.empty((num_train_samples,), dtype='uint8')

    # The training set is stored as 5 batches of 10000 images each
    for i in range(1, 6):
        fpath = os.path.join(path, 'data_batch_' + str(i))
        (x_train[(i - 1) * 10000:i * 10000, :, :, :],
         y_train[(i - 1) * 10000:i * 10000]) = load_batch(fpath)

    fpath = os.path.join(path, 'test_batch')
    x_test, y_test = load_batch(fpath)

    y_train = np.reshape(y_train, (len(y_train), 1))
    y_test = np.reshape(y_test, (len(y_test), 1))

    # The raw data is (N, 3, 32, 32); convert to (N, 32, 32, 3) for channels_last
    if tf.keras.backend.image_data_format() == 'channels_last':
        x_train = x_train.transpose(0, 2, 3, 1)
        x_test = x_test.transpose(0, 2, 3, 1)

    x_test = x_test.astype(x_train.dtype)
    y_test = y_test.astype(y_train.dtype)

    x_train, x_test = x_train / 255.0, x_test / 255.0  # normalize pixels to [0, 1]
    return (x_train, y_train), (x_test, y_test)


class VGGNetModel(tf.keras.Model):
    def __init__(self):
        super(VGGNetModel, self).__init__()
        # VGG16 starts with two groups of (CBA, CBAPD)
        # Layer 1: CBA
        self.c1 = tf.keras.layers.Conv2D(filters=64, kernel_size=(3, 3), padding='same')  # conv layer
        self.b1 = tf.keras.layers.BatchNormalization()  # BN layer
        self.a1 = tf.keras.layers.Activation('relu')  # activation layer
        # Layer 2: CBAPD
        self.c2 = tf.keras.layers.Conv2D(filters=64, kernel_size=(3, 3), padding='same')
        self.b2 = tf.keras.layers.BatchNormalization()
        self.a2 = tf.keras.layers.Activation('relu')
        self.p2 = tf.keras.layers.MaxPool2D(pool_size=(2, 2), strides=2, padding='same')
        self.d2 = tf.keras.layers.Dropout(0.2)  # dropout layer
        # Layer 3: CBA
        self.c3 = tf.keras.layers.Conv2D(filters=128, kernel_size=(3, 3), padding='same')
        self.b3 = tf.keras.layers.BatchNormalization()
        self.a3 = tf.keras.layers.Activation('relu')
        # Layer 4: CBAPD
        self.c4 = tf.keras.layers.Conv2D(filters=128, kernel_size=(3, 3), padding='same')
        self.b4 = tf.keras.layers.BatchNormalization()
        self.a4 = tf.keras.layers.Activation('relu')
        self.p4 = tf.keras.layers.MaxPool2D(pool_size=(2, 2), strides=2, padding='same')
        self.d4 = tf.keras.layers.Dropout(0.2)

        # Then three groups of (CBA, CBA, CBAPD)
        # Layer 5: CBA
        self.c5 = tf.keras.layers.Conv2D(filters=256, kernel_size=(3, 3), padding='same')
        self.b5 = tf.keras.layers.BatchNormalization()
        self.a5 = tf.keras.layers.Activation('relu')
        # Layer 6: CBA
        self.c6 = tf.keras.layers.Conv2D(filters=256, kernel_size=(3, 3), padding='same')
        self.b6 = tf.keras.layers.BatchNormalization()
        self.a6 = tf.keras.layers.Activation('relu')
        # Layer 7: CBAPD
        self.c7 = tf.keras.layers.Conv2D(filters=256, kernel_size=(3, 3), padding='same')
        self.b7 = tf.keras.layers.BatchNormalization()
        self.a7 = tf.keras.layers.Activation('relu')
        self.p7 = tf.keras.layers.MaxPool2D(pool_size=(2, 2), strides=2, padding='same')
        self.d7 = tf.keras.layers.Dropout(0.2)
        # Layer 8: CBA
        self.c8 = tf.keras.layers.Conv2D(filters=512, kernel_size=(3, 3), padding='same')
        self.b8 = tf.keras.layers.BatchNormalization()
        self.a8 = tf.keras.layers.Activation('relu')
        # Layer 9: CBA
        self.c9 = tf.keras.layers.Conv2D(filters=512, kernel_size=(3, 3), padding='same')
        self.b9 = tf.keras.layers.BatchNormalization()
        self.a9 = tf.keras.layers.Activation('relu')
        # Layer 10: CBAPD
        self.c10 = tf.keras.layers.Conv2D(filters=512, kernel_size=(3, 3), padding='same')
        self.b10 = tf.keras.layers.BatchNormalization()
        self.a10 = tf.keras.layers.Activation('relu')
        self.p10 = tf.keras.layers.MaxPool2D(pool_size=(2, 2), strides=2, padding='same')
        self.d10 = tf.keras.layers.Dropout(0.2)
        # Layer 11: CBA
        self.c11 = tf.keras.layers.Conv2D(filters=512, kernel_size=(3, 3), padding='same')
        self.b11 = tf.keras.layers.BatchNormalization()
        self.a11 = tf.keras.layers.Activation('relu')
        # Layer 12: CBA
        self.c12 = tf.keras.layers.Conv2D(filters=512, kernel_size=(3, 3), padding='same')
        self.b12 = tf.keras.layers.BatchNormalization()
        self.a12 = tf.keras.layers.Activation('relu')
        # Layer 13: CBAPD
        self.c13 = tf.keras.layers.Conv2D(filters=512, kernel_size=(3, 3), padding='same')
        self.b13 = tf.keras.layers.BatchNormalization()
        self.a13 = tf.keras.layers.Activation('relu')
        self.p13 = tf.keras.layers.MaxPool2D(pool_size=(2, 2), strides=2, padding='same')
        self.d13 = tf.keras.layers.Dropout(0.2)

        # Flatten layer
        self.flatten = tf.keras.layers.Flatten()
        # Layer 14: fully connected
        self.f14 = tf.keras.layers.Dense(512, activation='relu')
        self.d14 = tf.keras.layers.Dropout(0.2)
        # Layer 15: fully connected
        self.f15 = tf.keras.layers.Dense(512, activation='relu')
        self.d15 = tf.keras.layers.Dropout(0.2)
        # Layer 16: fully connected output layer
        self.f16 = tf.keras.layers.Dense(10, activation='softmax')

    def call(self, x):
        x = self.c1(x)
        x = self.b1(x)
        x = self.a1(x)
        x = self.c2(x)
        x = self.b2(x)
        x = self.a2(x)
        x = self.p2(x)
        x = self.d2(x)

        x = self.c3(x)
        x = self.b3(x)
        x = self.a3(x)
        x = self.c4(x)
        x = self.b4(x)
        x = self.a4(x)
        x = self.p4(x)
        x = self.d4(x)

        x = self.c5(x)
        x = self.b5(x)
        x = self.a5(x)
        x = self.c6(x)
        x = self.b6(x)
        x = self.a6(x)
        x = self.c7(x)
        x = self.b7(x)
        x = self.a7(x)
        x = self.p7(x)
        x = self.d7(x)

        x = self.c8(x)
        x = self.b8(x)
        x = self.a8(x)
        x = self.c9(x)
        x = self.b9(x)
        x = self.a9(x)
        x = self.c10(x)
        x = self.b10(x)
        x = self.a10(x)
        x = self.p10(x)
        x = self.d10(x)

        x = self.c11(x)
        x = self.b11(x)
        x = self.a11(x)
        x = self.c12(x)
        x = self.b12(x)
        x = self.a12(x)
        x = self.c13(x)
        x = self.b13(x)
        x = self.a13(x)
        x = self.p13(x)
        x = self.d13(x)

        x = self.flatten(x)
        x = self.f14(x)
        x = self.d14(x)
        x = self.f15(x)
        x = self.d15(x)
        y = self.f16(x)
        return y


def load_local_model(model_path):
    # Resume from a previously saved model if one exists, otherwise build a new one
    if os.path.exists(model_path + '/saved_model.pb'):
        tf.print('-------------load the model-----------------')
        local_model = tf.keras.models.load_model(model_path)
    else:
        local_model = VGGNetModel()
    local_model.compile(optimizer='adam',
                        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
                        metrics=['sparse_categorical_accuracy'])
    return local_model


if __name__ == '__main__':
    (x_train, y_train), (x_test, y_test) = load_cifar10_data()
    model_path = "./data/model/cifar/vgg"
    model = load_local_model(model_path)
    # My machine is slow, so train for only 1 epoch
    history = model.fit(x_train, y_train, batch_size=32, epochs=1, validation_data=(x_test, y_test),
                        validation_freq=1)
    model.summary()
    # Save the model
    model.save(model_path, save_format="tf")
```

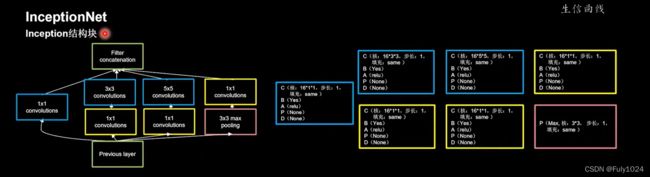

InceptionNet

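InceptionNet was introduced in 2014 and brought in the Inception block.

It uses convolution kernels of different sizes within the same layer, which improves the network's ability to perceive features at different scales (GoogLeNet is Inception-v1). The version used here also applies batch normalization to mitigate vanishing gradients; batch normalization was added in later Inception versions, and the original GoogLeNet did not use it.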

The core of InceptionNet is its basic unit, the Inception block.

Inception block

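Within a single layer, InceptionNet uses kernels of different sizes, so it can extract features at different scales.

A 1×1 convolution acts on every pixel of every input feature map. By using fewer 1×1 kernels than the input depth, it reduces the depth of the output feature map. This acts as dimensionality reduction and cuts both the parameter count and the amount of computation.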
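A minimal NumPy sketch of that idea: a 1×1 convolution is a matrix multiply over the channel axis applied independently at every pixel, so choosing fewer kernels than input channels shrinks the depth while leaving height and width unchanged. The parameter comparison then counts a 5×5 convolution from 192 to 32 channels, with and without a 16-channel 1×1 reduction first; these channel numbers are illustrative assumptions.

```python
import numpy as np


def conv1x1(x, weights, bias):
    """1x1 convolution on an (H, W, C_in) map with (C_in, C_out) weights."""
    # Each output pixel is a linear combination of that pixel's input channels
    return np.einsum('hwc,cd->hwd', x, weights) + bias


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8, 192))   # 192-channel input feature map
w = rng.standard_normal((192, 16))     # 16 kernels of size 1x1x192
b = np.zeros(16)
y = conv1x1(x, w, b)
print(y.shape)                         # depth reduced from 192 to 16

# Parameters (weights + biases) to produce 32 channels with a 5x5 kernel
direct = 5 * 5 * 192 * 32 + 32
reduced = (1 * 1 * 192 * 16 + 16) + (5 * 5 * 16 * 32 + 32)
print(direct, reduced)
```

With these numbers the 1×1 reduction cuts the parameter count from 153,632 to 15,920, roughly a tenfold saving.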

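The Inception block contains 4 branches:

- a 1×1 convolution, output to the filter concatenation

- a 1×1 convolution followed by a 3×3 convolution, output to the filter concatenation

- a 1×1 convolution followed by a 5×5 convolution, output to the filter concatenation

- 3×3 max pooling followed by a 1×1 convolution, output to the filter concatenation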

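The feature maps sent to the filter concatenation all have the same height and width. The filter concatenation stacks the four branch outputs along the depth (channel) axis to form the output of the Inception block.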
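The filter concatenation step is a plain concatenation along the channel axis (axis 3 for NHWC tensors, which is what `tf.concat(..., axis=3)` does in the code below). A NumPy sketch with assumed shapes: every branch must keep the same batch size, height and width, and only the depths add up. In the code below every branch uses the same `filters`, so a block's output depth is 4 × filters.

```python
import numpy as np

batch, height, width = 2, 8, 8
# Assumed output depths of the four branches (1x1, 1x1->3x3, 1x1->5x5, pool->1x1)
branch_depths = [16, 16, 16, 16]
# Fill branch i with the value i so each branch's channels can be told apart
branches = [np.full((batch, height, width, d), float(i)) for i, d in enumerate(branch_depths)]

# Concatenate along the channel axis (NHWC layout)
block_output = np.concatenate(branches, axis=3)
print(block_output.shape)
```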

InceptionNet on CIFAR-10:

```python
# -*- coding: utf-8 -*-
import os

import numpy as np
import tensorflow as tf
from matplotlib import pyplot as plt
# Private TensorFlow module; its location may differ between TensorFlow versions
from tensorflow.python.keras.datasets.cifar import load_batch


def load_cifar10_data():
    # Calling tf.keras.datasets.cifar10.load_data() directly raised an error here,
    # so the dataset is read from a local copy instead.
    # For downloading CIFAR-10, see https://zhuanlan.zhihu.com/p/129078357
    # path is the directory the archive was extracted to
    path = 'D:\\Repository\\ai_data\\DeepLearing_TensorFlow2.0-book\\cifar10\\cifar-10-batches-py\\'
    num_train_samples = 50000

    x_train = np.empty((num_train_samples, 3, 32, 32), dtype='uint8')
    y_train = np.empty((num_train_samples,), dtype='uint8')

    # The training set is stored as 5 batches of 10000 images each
    for i in range(1, 6):
        fpath = os.path.join(path, 'data_batch_' + str(i))
        (x_train[(i - 1) * 10000:i * 10000, :, :, :],
         y_train[(i - 1) * 10000:i * 10000]) = load_batch(fpath)

    fpath = os.path.join(path, 'test_batch')
    x_test, y_test = load_batch(fpath)

    y_train = np.reshape(y_train, (len(y_train), 1))
    y_test = np.reshape(y_test, (len(y_test), 1))

    # The raw data is (N, 3, 32, 32); convert to (N, 32, 32, 3) for channels_last
    if tf.keras.backend.image_data_format() == 'channels_last':
        x_train = x_train.transpose(0, 2, 3, 1)
        x_test = x_test.transpose(0, 2, 3, 1)

    x_test = x_test.astype(x_train.dtype)
    y_test = y_test.astype(y_train.dtype)

    x_train, x_test = x_train / 255.0, x_test / 255.0  # normalize pixels to [0, 1]
    return (x_train, y_train), (x_test, y_test)


class ConvBNRelu(tf.keras.Model):
    '''
    All 4 branches of the Inception block contain the same Conv-BN-ReLU (CBA)
    structure, so it is written as one class to shorten the code.
    '''

    def __init__(self, filters, kernel_size=3, strides=1, padding='same'):
        super(ConvBNRelu, self).__init__()
        self.model = tf.keras.models.Sequential([
            tf.keras.layers.Conv2D(filters=filters, kernel_size=kernel_size, strides=strides, padding=padding),
            tf.keras.layers.BatchNormalization(),
            tf.keras.layers.Activation('relu')
        ])

    def call(self, x, training=None):
        # With training=True, BN normalizes with the current batch's mean/variance and
        # updates its moving averages; with training=False (inference), it uses the
        # moving mean/variance accumulated during training. Passing the flag through
        # lets Keras set it correctly in fit() and predict(); hard-coding
        # training=False would stop the moving statistics from ever being updated.
        x = self.model(x, training=training)
        return x


class InceptionStruct(tf.keras.Model):
    '''
    Inception block.
    filters: number of kernels in each branch
    strides: stride of the first layer of each branch (2 halves height and width)
    '''

    def __init__(self, filters, strides=1):
        super(InceptionStruct, self).__init__()
        self.filters = filters
        self.strides = strides
        # Branch 1: 1x1 convolution
        self.c1 = ConvBNRelu(filters, kernel_size=1, strides=strides)
        # Branch 2: 1x1 convolution followed by 3x3 convolution
        self.c2_1 = ConvBNRelu(filters, kernel_size=1, strides=strides)
        self.c2_2 = ConvBNRelu(filters, kernel_size=3, strides=1)
        # Branch 3: 1x1 convolution followed by 5x5 convolution
        self.c3_1 = ConvBNRelu(filters, kernel_size=1, strides=strides)
        self.c3_2 = ConvBNRelu(filters, kernel_size=5, strides=1)
        # Branch 4: 3x3 max pooling followed by 1x1 convolution
        self.p4_1 = tf.keras.layers.MaxPool2D(3, strides=1, padding='same')
        self.c4_2 = ConvBNRelu(filters, kernel_size=1, strides=strides)

    def call(self, x):
        x1 = self.c1(x)
        x2_1 = self.c2_1(x)
        x2_2 = self.c2_2(x2_1)
        x3_1 = self.c3_1(x)
        x3_2 = self.c3_2(x3_1)
        x4_1 = self.p4_1(x)
        x4_2 = self.c4_2(x4_1)
        # Concatenate the four branch outputs along the channel axis
        x = tf.concat([x1, x2_2, x3_2, x4_2], axis=3)
        return x


class InceptionModel(tf.keras.Model):
    def __init__(self, num_blocks, num_classes, init_ch=16, **kwargs):
        super(InceptionModel, self).__init__(**kwargs)
        self.in_channels = init_ch
        self.out_channels = init_ch
        self.num_blocks = num_blocks
        self.init_ch = init_ch
        self.c1 = ConvBNRelu(init_ch)
        self.blocks = tf.keras.models.Sequential()
        # Each block holds two Inception structures; the first one downsamples with stride 2
        for block_id in range(num_blocks):
            for layer_id in range(2):
                if layer_id == 0:
                    block = InceptionStruct(self.out_channels, strides=2)
                else:
                    block = InceptionStruct(self.out_channels, strides=1)
                self.blocks.add(block)
            # Double the number of output channels after each block
            self.out_channels *= 2
        self.p1 = tf.keras.layers.GlobalAveragePooling2D()
        self.f1 = tf.keras.layers.Dense(num_classes, activation='softmax')

    def call(self, x):
        x = self.c1(x)
        x = self.blocks(x)
        x = self.p1(x)
        y = self.f1(x)
        return y


def load_local_model(model_path):
    # Resume from a previously saved model if one exists, otherwise build a new one
    if os.path.exists(model_path + '/saved_model.pb'):
        tf.print('-------------load the model-----------------')
        local_model = tf.keras.models.load_model(model_path)
    else:
        local_model = InceptionModel(num_blocks=2, num_classes=10)
    local_model.compile(optimizer='adam',
                        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
                        metrics=['sparse_categorical_accuracy'])
    return local_model


if __name__ == '__main__':
    (x_train, y_train), (x_test, y_test) = load_cifar10_data()
    # The full dataset is too slow to train on this machine; use 256 samples as a quick test
    (x_train, y_train), (x_test, y_test) = (x_train[0:256], y_train[0:256]), (x_test[0:256], y_test[0:256])
    model_path = "./data/model/cifar/inception"
    model = load_local_model(model_path)
    history = model.fit(x_train, y_train, batch_size=32, epochs=6, validation_data=(x_test, y_test),
                        validation_freq=1)
    model.summary()
    # Save the model
    model.save(model_path, save_format="tf")
```
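The `ConvBNRelu` class above calls its BatchNormalization layer with an explicit `training` flag. A minimal NumPy sketch of what that flag switches between, assuming Keras-style defaults (momentum 0.99, epsilon 1e-3) and omitting the learnable scale and offset: in training mode the layer normalizes with the current batch's statistics and moves its running averages toward them; in inference mode it normalizes with the running averages only. `SimpleBatchNorm` is a hypothetical illustration, not the Keras implementation.

```python
import numpy as np


class SimpleBatchNorm:
    """Illustrative batch norm over axis 0 (no learnable gamma/beta)."""

    def __init__(self, num_features, momentum=0.99, epsilon=1e-3):
        self.momentum = momentum
        self.epsilon = epsilon
        self.moving_mean = np.zeros(num_features)
        self.moving_var = np.ones(num_features)

    def __call__(self, x, training):
        if training:
            mean, var = x.mean(axis=0), x.var(axis=0)
            # Exponential moving averages, used later at inference time
            self.moving_mean = self.momentum * self.moving_mean + (1 - self.momentum) * mean
            self.moving_var = self.momentum * self.moving_var + (1 - self.momentum) * var
        else:
            mean, var = self.moving_mean, self.moving_var
        return (x - mean) / np.sqrt(var + self.epsilon)


rng = np.random.default_rng(0)
bn = SimpleBatchNorm(num_features=3)
for _ in range(2000):
    batch = rng.normal(loc=5.0, scale=2.0, size=(32, 3))
    out_train = bn(batch, training=True)   # normalized with this batch's statistics

out_infer = bn(batch, training=False)      # normalized with the moving averages
print(bn.moving_mean, bn.moving_var)       # near the data's mean (5) and variance (about 4)
```

If the layer were always called with `training=False`, the loop above would never update `moving_mean` and `moving_var`, which would stay at 0 and 1.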

ResNet

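ResNet was introduced in 2015. It introduced residual skip connections between layers, which carry information from earlier layers forward, mitigating vanishing gradients and making much deeper networks trainable.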

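A ResNet block comes in two forms:

- the dimensions differ before and after the stacked convolutions, so the shortcut passes through a 1×1 convolution W to match them: F(x) + Wx

- the dimensions are the same before and after the stacked convolutions, so the input is added directly: F(x) + x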
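A minimal NumPy sketch of the two block forms, treating F as an arbitrary function for illustration (in the code below it is conv-BN-ReLU-conv-BN). When the shapes match, x is added directly; when F changes the depth, a projection W (a 1×1 convolution in the code, here a plain matrix over channels) brings x to the same depth first. As in `ResStruct`, ReLU is applied after the addition. Spatial downsampling (stride 2) is omitted to keep the sketch short.

```python
import numpy as np


def relu(x):
    return np.maximum(0.0, x)


def residual_block(x, f, projection=None):
    """Return relu(F(x) + x), or relu(F(x) + x @ W) when a projection W is given.

    x has shape (H, W, C_in); `f` maps it to (H, W, C_out).
    """
    fx = f(x)
    shortcut = x if projection is None else x @ projection  # 1x1 conv == per-pixel matmul
    assert fx.shape == shortcut.shape, "F(x) and the shortcut must have the same shape"
    return relu(fx + shortcut)


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 4, 8))

# Same dimensions: F keeps 8 channels, so x is added unchanged
w_same = rng.standard_normal((8, 8)) * 0.1
y_same = residual_block(x, lambda t: relu(t @ w_same))

# Different dimensions: F maps 8 -> 16 channels, so x needs a projection W (8 -> 16)
w_up = rng.standard_normal((8, 16)) * 0.1
proj = rng.standard_normal((8, 16)) * 0.1
y_proj = residual_block(x, lambda t: relu(t @ w_up), projection=proj)
print(y_same.shape, y_proj.shape)

# If F(x) outputs zeros, the block simply passes relu(x) through
y_zero = residual_block(x, lambda t: np.zeros_like(t))
```

The last case illustrates the usual argument for residual blocks: an identity-like mapping only requires F to output zeros, which is easy to learn.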

ResNet on CIFAR-10:

```python
# -*- coding: utf-8 -*-
import os

import numpy as np
import tensorflow as tf
from matplotlib import pyplot as plt
# Private TensorFlow module; its location may differ between TensorFlow versions
from tensorflow.python.keras.datasets.cifar import load_batch


def load_cifar10_data():
    # Calling tf.keras.datasets.cifar10.load_data() directly raised an error here,
    # so the dataset is read from a local copy instead.
    # For downloading CIFAR-10, see https://zhuanlan.zhihu.com/p/129078357
    # path is the directory the archive was extracted to
    path = 'D:\\Repository\\ai_data\\DeepLearing_TensorFlow2.0-book\\cifar10\\cifar-10-batches-py\\'
    num_train_samples = 50000

    x_train = np.empty((num_train_samples, 3, 32, 32), dtype='uint8')
    y_train = np.empty((num_train_samples,), dtype='uint8')

    # The training set is stored as 5 batches of 10000 images each
    for i in range(1, 6):
        fpath = os.path.join(path, 'data_batch_' + str(i))
        (x_train[(i - 1) * 10000:i * 10000, :, :, :],
         y_train[(i - 1) * 10000:i * 10000]) = load_batch(fpath)

    fpath = os.path.join(path, 'test_batch')
    x_test, y_test = load_batch(fpath)

    y_train = np.reshape(y_train, (len(y_train), 1))
    y_test = np.reshape(y_test, (len(y_test), 1))

    # The raw data is (N, 3, 32, 32); convert to (N, 32, 32, 3) for channels_last
    if tf.keras.backend.image_data_format() == 'channels_last':
        x_train = x_train.transpose(0, 2, 3, 1)
        x_test = x_test.transpose(0, 2, 3, 1)

    x_test = x_test.astype(x_train.dtype)
    y_test = y_test.astype(y_train.dtype)

    x_train, x_test = x_train / 255.0, x_test / 255.0  # normalize pixels to [0, 1]
    return (x_train, y_train), (x_test, y_test)


class ResStruct(tf.keras.Model):
    '''
    Residual block.
    filters: number of kernels; strides: stride of the first 3x3 convolution;
    residual_path: whether the shortcut needs a 1x1 convolution to match dimensions
    '''

    def __init__(self, filters, strides=1, residual_path=False):
        super(ResStruct, self).__init__()
        self.filters = filters
        self.strides = strides
        self.residual_path = residual_path

        self.c1 = tf.keras.layers.Conv2D(filters, (3, 3), strides=strides, padding='same', use_bias=False)
        self.b1 = tf.keras.layers.BatchNormalization()
        self.a1 = tf.keras.layers.Activation('relu')

        self.c2 = tf.keras.layers.Conv2D(filters, (3, 3), strides=1, padding='same', use_bias=False)
        self.b2 = tf.keras.layers.BatchNormalization()

        # When residual_path is True, downsample the input with a 1x1 convolution so that
        # x has the same dimensions as F(x) and the two can be added
        if residual_path:
            self.down_c1 = tf.keras.layers.Conv2D(filters, (1, 1), strides=strides, padding='same', use_bias=False)
            self.down_b1 = tf.keras.layers.BatchNormalization()

        self.a2 = tf.keras.layers.Activation('relu')

    def call(self, inputs):
        residual = inputs  # the shortcut is the input itself, i.e. residual = x
        # Pass the input through conv, BN and activation layers to compute F(x)
        x = self.c1(inputs)
        x = self.b1(x)
        x = self.a1(x)

        x = self.c2(x)
        y = self.b2(x)

        if self.residual_path:
            residual = self.down_c1(inputs)
            residual = self.down_b1(residual)

        out = self.a2(y + residual)  # output F(x) + x or F(x) + Wx, then apply ReLU
        return out


class ResNetModel(tf.keras.Model):
    def __init__(self, block_list, initial_filters=64):  # block_list: number of residual units in each block
        super(ResNetModel, self).__init__()
        self.num_blocks = len(block_list)  # number of blocks
        self.block_list = block_list
        self.out_filters = initial_filters
        self.c1 = tf.keras.layers.Conv2D(self.out_filters, (3, 3), strides=1, padding='same', use_bias=False)
        self.b1 = tf.keras.layers.BatchNormalization()
        self.a1 = tf.keras.layers.Activation('relu')
        self.blocks = tf.keras.models.Sequential()
        # Build the ResNet structure
        for block_id in range(len(block_list)):  # index of the ResNet block
            for layer_id in range(block_list[block_id]):  # index of the residual unit within the block
                if block_id != 0 and layer_id == 0:  # downsample the input of every block except the first
                    block = ResStruct(self.out_filters, strides=2, residual_path=True)
                else:
                    block = ResStruct(self.out_filters, residual_path=False)
                self.blocks.add(block)  # add the residual unit to the network
            self.out_filters *= 2  # the next block uses twice as many kernels
        self.p1 = tf.keras.layers.GlobalAveragePooling2D()
        self.f1 = tf.keras.layers.Dense(10, activation='softmax', kernel_regularizer=tf.keras.regularizers.l2())

    def call(self, inputs):
        x = self.c1(inputs)
        x = self.b1(x)
        x = self.a1(x)
        x = self.blocks(x)
        x = self.p1(x)
        y = self.f1(x)
        return y


def load_local_model(model_path):
    # Resume from a previously saved model if one exists, otherwise build a new one
    if os.path.exists(model_path + '/saved_model.pb'):
        tf.print('-------------load the model-----------------')
        local_model = tf.keras.models.load_model(model_path)
    else:
        local_model = ResNetModel([2, 2, 2, 2])
    local_model.compile(optimizer='adam',
                        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
                        metrics=['sparse_categorical_accuracy'])
    return local_model


if __name__ == '__main__':
    (x_train, y_train), (x_test, y_test) = load_cifar10_data()
    # Use only 256 samples as a quick test; the full dataset is too slow on this machine
    (x_train, y_train), (x_test, y_test) = (x_train[0:256], y_train[0:256]), (x_test[0:256], y_test[0:256])
    model_path = "./data/model/cifar/resnet"
    model = load_local_model(model_path)
    # My machine is slow, so train for only 3 epochs
    history = model.fit(x_train, y_train, batch_size=64, epochs=3, validation_data=(x_test, y_test),
                        validation_freq=1)
    model.summary()
    # Save the model
    model.save(model_path, save_format="tf")
```