Computer Vision: Basic Usage of OpenCV


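- I. Installing OpenCV
- II. OpenCV image data preprocessing
  - 2.1 Reading images (cv2.imread)
  - 2.2 Saving images (cv2.imwrite)
  - 2.3 Reading video (cv2.VideoCapture)
  - 2.4 Cropping part of an image (indexing)
  - 2.5 Splitting color channels (cv2.split)
  - 2.6 Merging color channels (cv2.merge)
  - 2.7 Border padding (cv2.copyMakeBorder)
  - 2.8 Arithmetic (cv2.add and the + operator)
  - 2.9 Image blending (+ operation)
  - 2.10 Resizing images (cv2.resize)
  - 2.11 Morphology: erosion (cv2.erode)
  - 2.12 Morphology: dilation (cv2.dilate)
  - 2.13 Opening and closing (cv2.morphologyEx)
    - 2.13.1 Opening: erosion followed by dilation
    - 2.13.2 Closing: dilation followed by erosion
  - 2.14 Edge detection: morphological gradient = dilation - erosion (cv2.morphologyEx)
  - 2.15 Top hat and black hat (cv2.morphologyEx)
  - 2.16 Edge detection: image gradient with the Sobel operator (cv2.Sobel)
  - 2.17 Edge detection: image gradient with the Scharr and Laplacian operators (cv2.Scharr and cv2.Laplacian)
  - 2.18 Image thresholding (cv2.threshold)
  - 2.19 Image smoothing
    - 2.19.1 Mean filtering (cv2.blur)
    - 2.19.2 Box filtering (cv2.boxFilter)
    - 2.19.3 Gaussian filtering (cv2.GaussianBlur)
    - 2.19.4 Median filtering (cv2.medianBlur)
    - 2.19.5 Comparing the filters
  - 2.20 Canny edge detection (cv2.Canny)
  - 2.21 Image pyramids (cv2.pyrUp and cv2.pyrDown)
    - 2.21.1 Gaussian pyramid
    - 2.21.2 Laplacian pyramid
  - 2.22 Image contours
    - 2.22.1 Finding contours (cv2.findContours)
    - 2.22.2 Drawing contours (cv2.drawContours)
    - 2.22.3 Contour features (cv2.contourArea for area, cv2.arcLength for perimeter)
    - 2.22.4 Contour approximation (cv2.approxPolyDP)
    - 2.22.5 Bounding rectangle (cv2.boundingRect and cv2.rectangle)
    - 2.22.6 Minimum enclosing circle (cv2.minEnclosingCircle and cv2.circle)
  - 2.23 Template matching (cv2.matchTemplate)
    - 2.23.1 Matching a single object
    - 2.23.2 Matching multiple objects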

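I. Installing OpenCV

- Older tutorials recommend installing OpenCV 3.4.1 or earlier in a Python environment, because from 3.4.2 onward some patented algorithms (such as SIFT) could no longer be used. If both opencv-python and opencv-contrib-python are installed, their versions must match. Since no 3.4.1 build could be found, this article installs version 4.5.5.64 of opencv-python and opencv-contrib-python (the SIFT patent has since expired, and SIFT is available in the main module from OpenCV 4.4.0 on). Note that the opencv-python README recommends installing only one of the OpenCV packages per environment; opencv-contrib-python alone already contains the main modules.

# Install from the Tsinghua PyPI mirror, pinning the version used in this article
pip install -i https://pypi.tuna.tsinghua.edu.cn/simple opencv-python==4.5.5.64
pip install -i https://pypi.tuna.tsinghua.edu.cn/simple opencv-contrib-python==4.5.5.64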

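II. OpenCV image data preprocessing

2.1 Reading images (cv2.imread)

cv2.IMREAD_COLOR: read as a 3-channel color image in BGR order (the default)

cv2.IMREAD_GRAYSCALE: read as a single-channel grayscale image

import cv2  # OpenCV stores color images in BGR channel order
import matplotlib.pyplot as plt
import numpy as np
# Jupyter magic: show figures inline automatically, without calling plt.show()
%matplotlib inline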

img=cv2.imread(r'./3-5days_Opencv/Images_operation/cat.jpg')

img

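- Pixels are stored as uint8, whose values range from 0 to 255.

img.shape

# Result

(414, 500, 3)

# Display the image; several windows can be open at the same time
cv2.imshow('image',img)  # first argument: window name; second argument: the image to show
# Wait time in milliseconds: 0 waits until any key is pressed; 1000 would return automatically after 1000 ms
cv2.waitKey(0)
# Once waitKey returns, close all windows
cv2.destroyAllWindows()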


# Read the image as grayscale
img2=cv2.imread(r'./3-5days_Opencv/Images_operation/cat.jpg', cv2.IMREAD_GRAYSCALE)

img2

# Result

"""

array([[153, 157, 162, ..., 174, 173, 172],

[119, 124, 129, ..., 173, 172, 171],

[120, 124, 130, ..., 172, 171, 170],

...,

[187, 182, 167, ..., 202, 191, 170],

[165, 172, 164, ..., 185, 141, 122],

[179, 179, 146, ..., 197, 142, 141]], dtype=uint8)

"""

img2.shape

# Result

(414, 500)

# Define a helper that shows an image and waits for a key press
def cv_show(name,img):
    cv2.imshow(name, img)
    cv2.waitKey(0)
    cv2.destroyAllWindows()

cv_show('image2',img2)

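2.2 Saving images (cv2.imwrite)

# Save the image
cv2.imwrite(r'./3-5days_Opencv/Images_operation/gray_cat.jpg',img2)

# Result

True

# Type of the object cv2 returns for an image
type(img2)

# Result

numpy.ndarray

# Number of elements in the array; for this grayscale image, the number of pixels (414 × 500)
img2.size

# Result

207000

# Data type of the pixel values
img2.dtype

# Result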

dtype('uint8')

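2.3 Reading video (cv2.VideoCapture)

cv2.VideoCapture can capture from a camera; devices are selected by number, e.g. 0 or 1.

For a video file, simply pass its path.

vc=cv2.VideoCapture(r'./3-5days_Opencv/Images_operation/test.mp4')

# Check whether the video can be opened
if vc.isOpened():  # True if the video file was opened successfully, otherwise False
    opened, frame=vc.read()  # opened: whether a frame was read successfully; frame: the frame image
else:
    opened=False

# Play the video in grayscale
while opened:
    ret,frame=vc.read()
    if frame is None:  # after the last frame has been read, frame becomes None
        break  # leave the loop
    if ret==True:
        gray=cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)  # convert each frame to grayscale
        cv2.imshow('result',gray)
        # cv2.waitKey(100): how long each frame stays on screen, in milliseconds; larger values play the video more slowly
        # 27 is the ASCII code of Esc, so pressing Esc stops playback
        if cv2.waitKey(100) & 0xFF==27:
            break

# vc.release()  # after release(), the video must be reopened with cv2.VideoCapture before it can play again; without it, re-running the loop continues from the current frame
cv2.destroyAllWindows()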

2.4 Cropping part of an image (indexing)

img=cv2.imread(r'./3-5days_Opencv/Images_operation/cat.jpg')
cat=img[:200,:200]  # keep the first 200 rows (height) and the first 200 columns (width)
cv_show('cat',cat)
print(cat.shape)

# Result

(200, 200, 3)

2.5 Splitting color channels (cv2.split)

b,g,r=cv2.split(img)
print(b.shape)
b

# Result

"""

(414, 500)

array([[142, 146, 151, ..., 156, 155, 154],

[108, 112, 118, ..., 155, 154, 153],

[108, 110, 118, ..., 156, 155, 154],

...,

[162, 157, 142, ..., 181, 170, 149],

[140, 147, 139, ..., 169, 125, 106],

[154, 154, 121, ..., 183, 128, 127]], dtype=uint8)

"""

- Keeping only one channel (the other two channels are set to 0):

# Keep only the B channel
cur_img=img.copy()
cur_img[:,:,1]=0
cur_img[:,:,2]=0
cv_show('B',cur_img)

# Keep only the G channel
cur_img=img.copy()
cur_img[:,:,0]=0
cur_img[:,:,2]=0
cv_show('G',cur_img)

# Keep only the R channel
cur_img=img.copy()
cur_img[:,:,0]=0
cur_img[:,:,1]=0
cv_show('R',cur_img)

2.6 Merging color channels (cv2.merge)

img=cv2.merge((b,g,r))
img.shape

# Result

(414, 500, 3)

cv_show('img',img)

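2.7 Border padding (cv2.copyMakeBorder)

- BORDER_REPLICATE: replication; the outermost pixel is repeated, e.g. aaaaaa|abcdefgh|hhhhhhh

- BORDER_REFLECT: reflection; pixels are mirrored across the border, including the edge pixel, e.g. fedcba|abcdefgh|hgfedcb

- BORDER_REFLECT_101: reflection about the edge pixel as the axis (the edge pixel itself is not repeated), e.g. gfedcb|abcdefgh|gfedcba

- BORDER_WRAP: wrap-around; the image is treated as periodic, e.g. cdefgh|abcdefgh|abcdefg

- BORDER_CONSTANT: the border is filled with a constant value.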
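To make the patterns above concrete, here is a small pure-Python sketch that pads a single row of "pixels" according to each border type. The helper `pad_1d` is hypothetical and only reproduces the documented patterns on one row; for real images, `cv2.copyMakeBorder` does the work.

```python
def pad_1d(row, left, right, mode, value=0):
    """Pad a 1-D sequence with `left`/`right` extra elements, mimicking cv2 border types."""
    n = len(row)

    def source_index(i):
        # Map a (possibly out-of-range) index i to an index inside row
        if 0 <= i < n:
            return i
        if mode == "REPLICATE":        # aaaaaa|abcdefgh|hhhhhhh
            return min(max(i, 0), n - 1)
        if mode == "REFLECT":          # fedcba|abcdefgh|hgfedcb (edge element repeated)
            i %= 2 * n
            return i if i < n else 2 * n - 1 - i
        if mode == "REFLECT_101":      # gfedcb|abcdefgh|gfedcba (edge element not repeated)
            if n == 1:
                return 0
            i %= 2 * n - 2
            return i if i < n else 2 * n - 2 - i
        if mode == "WRAP":             # cdefgh|abcdefgh|abcdefg
            return i % n
        raise ValueError(f"unknown mode: {mode}")

    out = []
    for i in range(-left, n + right):
        if mode == "CONSTANT" and not 0 <= i < n:
            out.append(value)          # constant fill outside the original row
        else:
            out.append(row[source_index(i)])
    return out


row = "abcdefgh"
for mode in ("REPLICATE", "REFLECT", "REFLECT_101", "WRAP"):
    padded = pad_1d(row, 6, 7, mode)
    print(f"{mode:12s}", "".join(padded[:6]) + "|" + row + "|" + "".join(padded[-7:]))
```

The same index mapping is applied along both axes when a whole image is padded.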

top_size,bottom_size,left_size,right_size=(50,50,50,50)

# Replicate
replicate=cv2.copyMakeBorder(img,top_size,bottom_size,left_size,right_size,borderType=cv2.BORDER_REPLICATE)
# Reflect
reflect=cv2.copyMakeBorder(img,top_size,bottom_size,left_size,right_size,borderType=cv2.BORDER_REFLECT)
# Reflect 101
reflect101=cv2.copyMakeBorder(img,top_size,bottom_size,left_size,right_size,borderType=cv2.BORDER_REFLECT_101)
# Wrap
wrap=cv2.copyMakeBorder(img,top_size,bottom_size,left_size,right_size,borderType=cv2.BORDER_WRAP)
# Constant (value=0 gives a black border)
constant=cv2.copyMakeBorder(img,top_size,bottom_size,left_size,right_size,borderType=cv2.BORDER_CONSTANT,value=0)

import matplotlib.pyplot as plt

plt.subplot(231), plt.imshow(img), plt.title('ORIGINAL')

plt.subplot(232), plt.imshow(replicate, 'gray'), plt.title('REPLICATE')

plt.subplot(233), plt.imshow(reflect, 'gray'), plt.title('REFLECT')

plt.subplot(234), plt.imshow(reflect101, 'gray'), plt.title('REFLECT_101')

plt.subplot(235), plt.imshow(wrap, 'gray'), plt.title('WRAP')

plt.subplot(236), plt.imshow(constant, 'gray'), plt.title('CONSTANT')

plt.show()

2.8 Arithmetic (cv2.add and the + operator)

img_cat=cv2.imread(r'./3-5days_Opencv/Images_operation/cat.jpg')
img_dog=cv2.imread(r'./3-5days_Opencv/Images_operation/dog.jpg')
img_cat[:5,:,0]

# Result

"""

array([[142, 146, 151, ..., 156, 155, 154],

[108, 112, 118, ..., 155, 154, 153],

[108, 110, 118, ..., 156, 155, 154],

[139, 141, 148, ..., 156, 155, 154],

[153, 156, 163, ..., 160, 159, 158]], dtype=uint8)

"""

img_cat2=img_cat+10

img_cat2[:5,:,0]

# Result

"""

array([[152, 156, 161, ..., 166, 165, 164],

[118, 122, 128, ..., 165, 164, 163],

[118, 120, 128, ..., 166, 165, 164],

[149, 151, 158, ..., 166, 165, 164],

[163, 166, 173, ..., 170, 169, 168]], dtype=uint8)

"""

# With +, uint8 addition wraps around: a sum above 255 is taken modulo 256
(img_cat+img_cat2)[:5,:,0]

# Result

"""

array([[ 38, 46, 56, ..., 66, 64, 62],

[226, 234, 246, ..., 64, 62, 60],

[226, 230, 246, ..., 66, 64, 62],

[ 32, 36, 50, ..., 66, 64, 62],

[ 60, 66, 80, ..., 74, 72, 70]], dtype=uint8)

"""

# cv2.add saturates: a sum above 255 is clipped to 255
cv2.add(img_cat,img_cat2)[:5,:,0]

# Result

"""

array([[255, 255, 255, ..., 255, 255, 255],

[226, 234, 246, ..., 255, 255, 255],

[226, 230, 246, ..., 255, 255, 255],

[255, 255, 255, ..., 255, 255, 255],

[255, 255, 255, ..., 255, 255, 255]], dtype=uint8)

"""

2.9 Image blending (+ operation)

- Images must have the same size to be blended; otherwise + raises an error:

img_cat+img_dog

# Result

"""

ValueError Traceback (most recent call last)

in

----> 1 img_cat+img_dog

ValueError: operands could not be broadcast together with shapes (414,500,3) (429,499,3)

"""

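img_cat.shape

# Result

(414, 500, 3)

img_dog.shape

# Result

(429, 499, 3)

img_dog2=cv2.resize(img_dog,(500,414))  # note the argument order: dsize is (width, height) = (500, 414)
img_dog2.shape

# Result

(414, 500, 3)

# Weighted sum: dst = img_cat*0.4 + img_dog2*0.6 + 0, i.e. a*x1 + b*x2 + c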

res=cv2.addWeighted(img_cat,0.4,img_dog2,0.6,0)

plt.imshow(res)

2.10 Resizing images (cv2.resize)

# Scale x by 4 and y by 4 (dsize=(0,0) means the output size is computed from fx and fy)
res=cv2.resize(img_cat,(0,0),fx=4,fy=4)
plt.imshow(res)

# Keep x unchanged and scale y by 3

res=cv2.resize(img_cat,(0,0),fx=1,fy=3)

plt.imshow(res)

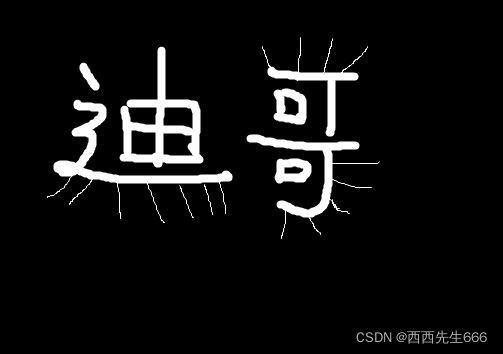

2.11 Morphology: erosion (cv2.erode)

img=cv2.imread('./dige.png')

cv2.imshow('img',img)

cv2.waitKey(0)

cv2.destroyAllWindows()

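- The lines in this image are thick and have small burrs (spurs); erosion removes the burrs and makes the lines slightly thinner.

- How erosion works: a kernel, e.g. 3×3, slides over the image, and each output pixel becomes the minimum value under the kernel. For a binary image this means a pixel stays white only if every pixel under the kernel is white; otherwise it turns black. White regions therefore shrink, and thin protrusions disappear.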
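A minimal pure-Python sketch of grayscale erosion as a minimum filter, on a tiny list-of-lists image instead of a real picture. `erode` is a hypothetical helper; neighbors outside the image are simply ignored, which is an assumption about border handling rather than a copy of OpenCV's implementation.

```python
def erode(img, k=3):
    """Erosion with a k x k square kernel: each output pixel is the minimum of the
    pixels under the kernel (neighbors outside the image are ignored)."""
    h, w, r = len(img), len(img[0]), k // 2
    return [[min(img[yy][xx]
                 for yy in range(max(0, y - r), min(h, y + r + 1))
                 for xx in range(max(0, x - r), min(w, x + r + 1)))
             for x in range(w)]
            for y in range(h)]


# A 3x3 white block with a one-pixel "burr" sticking out on the right
img = [
    [0, 0, 0, 0, 0],
    [0, 255, 255, 255, 0],
    [0, 255, 255, 255, 255],
    [0, 255, 255, 255, 0],
    [0, 0, 0, 0, 0],
]
for row in erode(img):
    print(row)
# Only the center pixel survives: the block shrinks and the burr is gone
```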

kernal=np.ones((5,5),np.uint8)  # kernel size: a larger kernel erodes more strongly, a smaller one more lightly

erosion=cv2.erode(img,kernal,iterations=1)  # iterations: how many times erosion is applied; more iterations erode more

cv2.imshow('erosion',erosion)

cv2.waitKey(0)

cv2.destroyAllWindows()

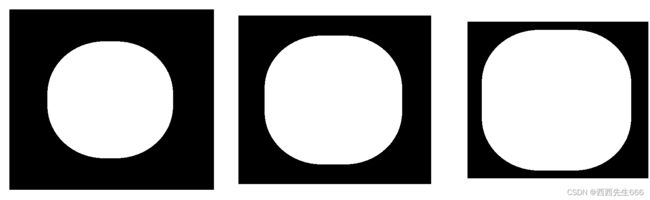

# Comparing different numbers of erosion iterations

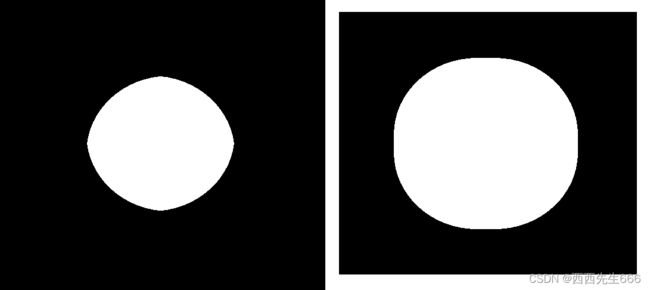

img1=cv2.imread('./pie.png')

kernal=np.ones((30,30),np.uint8)

erosion_1=cv2.erode(img1,kernal,iterations=1)

erosion_2=cv2.erode(img1,kernal,iterations=2)

erosion_3=cv2.erode(img1,kernal,iterations=3)

# Stack several images side by side

res=np.hstack((erosion_1,erosion_2,erosion_3))

cv2.imshow('res',res)

cv2.waitKey(0)

cv2.destroyAllWindows()

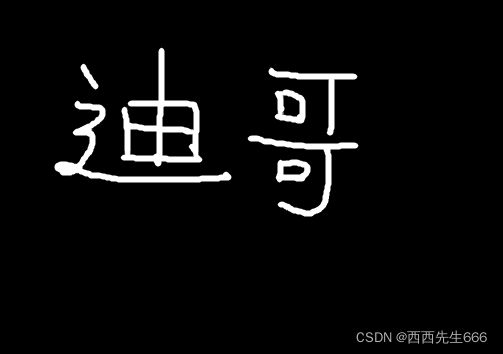

2.12 Morphology: dilation (cv2.dilate)

# Erode first

img=cv2.imread('./dige.png')

kernal=np.ones((3,3),np.uint8)

dige_erosion=cv2.erode(img,kernal,iterations=1)

cv2.imshow('dige_erosion',dige_erosion)

cv2.waitKey(0)

cv2.destroyAllWindows()

# Then dilate

dige_dilate=cv2.dilate(dige_erosion,kernal,iterations=1)

cv2.imshow('dige_dilate',dige_dilate)

cv2.waitKey(0)

cv2.destroyAllWindows()

# Compare several dilation iterations

img=cv2.imread('./pie.png')

kernal=np.ones((30,30),np.uint8)

dilate_pie1=cv2.dilate(img,kernal,iterations=1)

dilate_pie2=cv2.dilate(img,kernal,iterations=2)

dilate_pie3=cv2.dilate(img,kernal,iterations=3)

res=np.hstack((dilate_pie1,dilate_pie2,dilate_pie3))

cv2.imshow('res',res)

cv2.waitKey(0)

cv2.destroyAllWindows()

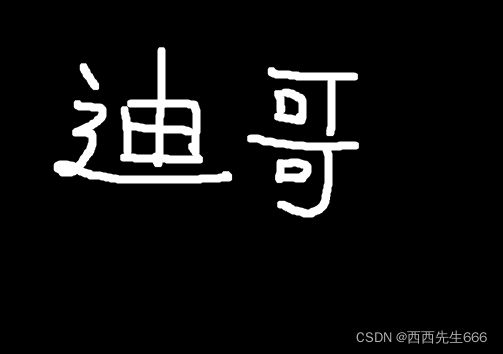

2.13 Opening and closing (cv2.morphologyEx)

2.13.1 Opening: erosion followed by dilation

# Opening: erode first, then dilate

img=cv2.imread('./dige.png')

kernal=np.ones((5,5),np.uint8)

opening=cv2.morphologyEx(img,cv2.MORPH_OPEN,kernal)  # cv2.MORPH_OPEN: opening

cv2.imshow('opening',opening)

cv2.waitKey(0)

cv2.destroyAllWindows()

2.13.2 Closing: dilation followed by erosion

# Closing: dilate first, then erode

img=cv2.imread('./dige.png')

kernal=np.ones((5,5),np.uint8)

closing=cv2.morphologyEx(img,cv2.MORPH_CLOSE,kernal)  # cv2.MORPH_CLOSE: closing

cv2.imshow('closing',closing)

cv2.waitKey(0)

cv2.destroyAllWindows()
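Both operations can be checked on tiny made-up images with a pure-Python sketch in which erosion is a minimum filter and dilation a maximum filter over a 3×3 neighborhood. The helpers are hypothetical, and ignoring out-of-image neighbors is an assumption about border handling. Opening should remove an isolated white noise pixel while restoring the block it erodes; closing should fill a one-pixel black hole.

```python
def _morph(img, k, reduce_fn):
    """Apply reduce_fn (min or max) over each k x k neighborhood; out-of-image neighbors are ignored."""
    h, w, r = len(img), len(img[0]), k // 2
    return [[reduce_fn(img[yy][xx]
                       for yy in range(max(0, y - r), min(h, y + r + 1))
                       for xx in range(max(0, x - r), min(w, x + r + 1)))
             for x in range(w)]
            for y in range(h)]


def erode(img, k=3):
    return _morph(img, k, min)


def dilate(img, k=3):
    return _morph(img, k, max)


def opening(img, k=3):
    return dilate(erode(img, k), k)   # erosion first, then dilation


def closing(img, k=3):
    return erode(dilate(img, k), k)   # dilation first, then erosion


# Opening: a 3x3 white block plus an isolated white noise pixel at (5, 5)
block = [[255 if 1 <= y <= 3 and 1 <= x <= 3 else 0 for x in range(7)] for y in range(7)]
noisy = [row[:] for row in block]
noisy[5][5] = 255
print(opening(noisy) == block)        # True: the noise pixel is removed, the block is restored

# Closing: a white square with a one-pixel black hole in the middle
holed = [[255] * 5 for _ in range(5)]
holed[2][2] = 0
print(closing(holed) == [[255] * 5 for _ in range(5)])  # True: the hole is filled
```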

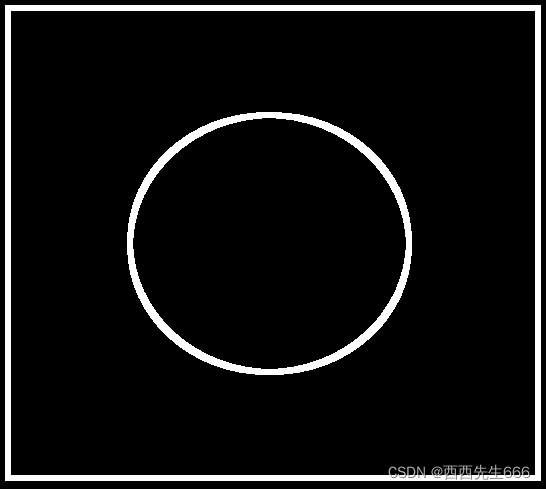

2.14 Edge detection: morphological gradient = dilation - erosion (cv2.morphologyEx)

img1=cv2.imread('./pie.png')

kernal=np.ones((7,7),np.uint8)

erosion_1=cv2.erode(img1,kernal,iterations=5)

dilatel_1=cv2.dilate(img1,kernal,iterations=5)

# Stack the two images side by side

res=np.hstack((erosion_1,dilatel_1))

cv2.imshow('res',res)

cv2.waitKey(0)

cv2.destroyAllWindows()

gradient=cv2.morphologyEx(img1,cv2.MORPH_GRADIENT,kernal)  # MORPH_GRADIENT: morphological gradient (dilation minus erosion)

cv2.imshow('gradient',gradient)

cv2.waitKey(0)

cv2.destroyAllWindows()

2.15 Top hat and black hat (cv2.morphologyEx)

- Top hat = original input - result of opening

- Black hat = result of closing - original input
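These formulas, together with the morphological gradient from 2.14, are easy to check on a 1-D signal. This pure-Python sketch uses a window of 3 samples as the "kernel"; the signal is made up, with a narrow bright peak at index 2 and a narrow dark dip at index 9, so the top hat should isolate the peak and the black hat the dip.

```python
def _morph1d(sig, k, fn):
    """Apply fn (min or max) over a sliding window of k samples (clipped at the ends)."""
    r, n = k // 2, len(sig)
    return [fn(sig[max(0, i - r):min(n, i + r + 1)]) for i in range(n)]


def gradient(sig, k=3):            # dilation - erosion
    return [d - e for d, e in zip(_morph1d(sig, k, max), _morph1d(sig, k, min))]


def tophat(sig, k=3):              # original - opening
    opened = _morph1d(_morph1d(sig, k, min), k, max)
    return [s - o for s, o in zip(sig, opened)]


def blackhat(sig, k=3):            # closing - original
    closed = _morph1d(_morph1d(sig, k, max), k, min)
    return [c - s for c, s in zip(closed, sig)]


sig = [10, 10, 200, 10, 10, 10, 50, 50, 50, 0, 50, 50]
print(tophat(sig))    # [0, 0, 190, 0, 0, 0, 0, 0, 0, 0, 0, 0]: only the narrow peak remains
print(blackhat(sig))  # [0, 0, 0, 0, 0, 0, 0, 0, 0, 50, 0, 0]: only the narrow dip remains
print(gradient(sig))  # [0, 190, 190, 190, 0, 40, 40, 0, 50, 50, 50, 0]: nonzero around the edges
```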

img=cv2.imread('./dige.png')

kernal=np.ones((7,7),np.uint8)

tophat=cv2.morphologyEx(img,cv2.MORPH_TOPHAT,kernal)  # cv2.MORPH_TOPHAT: top hat

cv2.imshow('tophat',tophat)

cv2.waitKey(0)

cv2.destroyAllWindows()

img=cv2.imread('./dige.png')

kernal=np.ones((7,7),np.uint8)

blackhat=cv2.morphologyEx(img,cv2.MORPH_BLACKHAT,kernal)

cv2.imshow('blackhat',blackhat)

cv2.waitKey(0)

cv2.destroyAllWindows()

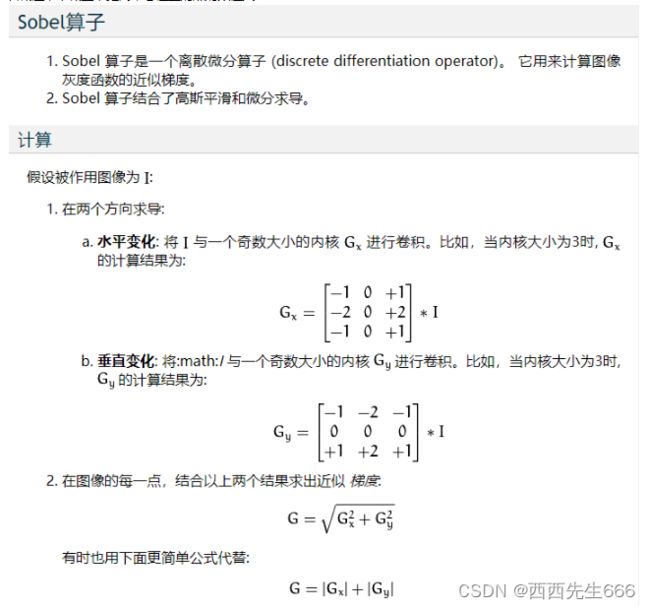

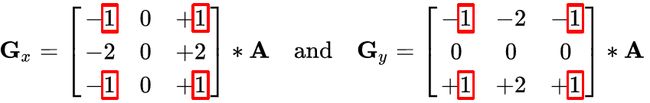

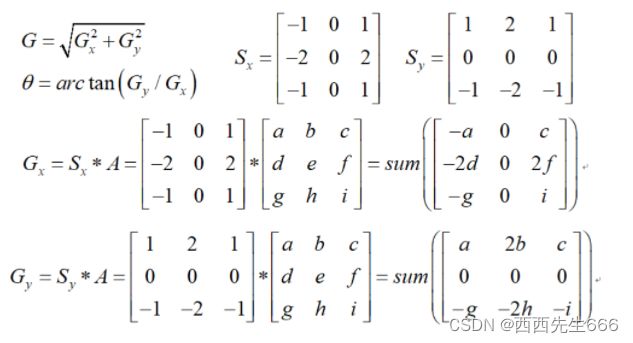

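2.16 Edge detection: image gradient with the Sobel operator (cv2.Sobel)

cv2.Sobel(src,ddepth,dx,dy,ksize)

ddepth: depth (data type) of the output image, i.e. the range of its pixel values

dx, dy: order of the derivative in the horizontal and vertical direction

ksize: size of the Sobel kernel, usually 3 (3×3) or 5 (5×5)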

img=cv2.imread('./pie.png')

cv2.imshow('img',img)

cv2.waitKey(0)

cv2.destroyAllWindows()

sobelsx=cv2.Sobel(img,cv2.CV_64F,1,0,ksize=3)

cv2.imshow('sobelsx',sobelsx)

cv2.waitKey(0)

cv2.destroyAllWindows()

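- Only the left half of the circle's outline appears. Inside the circle is white (255) and outside is black (0). In the x direction, Sobel computes roughly "right minus left": on the left side of the circle the transition (moving left to right) is black to white, so the result is positive; on the right side it is white to black, so the result is negative. Negative values are shown as black (they are clipped to 0), so the right half of the outline disappears.

- In short: moving left to right, black to white gives positive values and white to black gives negative values. Because negative values are clipped to 0, take the absolute value (cv2.convertScaleAbs) to show the complete outline.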
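The sign argument can be checked on a tiny example in pure Python: a 3-row image with a white band in the middle, filtered with the 3×3 Sobel x kernel (right column minus left column, rows weighted 1, 2, 1). `sobel_x_row` and `clip_u8` are illustrative helpers; the clipping mimics what happens to negative values when they end up in an 8-bit image, and taking the absolute value first corresponds to what `cv2.convertScaleAbs` does.

```python
def sobel_x_row(img, y):
    """3x3 Sobel x-derivative (right minus left, rows weighted 1, 2, 1) at the
    interior pixels of row y; there is no border padding, so 2 values are dropped."""
    weights = {-1: 1, 0: 2, 1: 1}
    return [sum(w * (img[y + dy][x + 1] - img[y + dy][x - 1]) for dy, w in weights.items())
            for x in range(1, len(img[0]) - 1)]


def clip_u8(values):
    """Saturate values to 0..255, as when storing them in an 8-bit image."""
    return [min(max(v, 0), 255) for v in values]


row = [0, 0, 255, 255, 255, 0, 0]     # black | white band | black
gx = sobel_x_row([row, row, row], 1)
print(gx)                              # [1020, 1020, 0, -1020, -1020]
print(clip_u8(gx))                     # [255, 255, 0, 0, 0]: the white-to-black edge disappears
print(clip_u8([abs(v) for v in gx]))   # [255, 255, 0, 255, 255]: both edges are visible
```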

# x direction
sobelx=cv2.Sobel(img,cv2.CV_64F,1,0,ksize=3)
sobelx=cv2.convertScaleAbs(sobelx)  # absolute value, then conversion to uint8
cv2.imshow('sobelx',sobelx)
cv2.waitKey(0)
cv2.destroyAllWindows()

# y direction
sobely=cv2.Sobel(img,cv2.CV_64F,0,1,ksize=3)
sobely=cv2.convertScaleAbs(sobely)
cv2.imshow('sobely',sobely)
cv2.waitKey(0)
cv2.destroyAllWindows()

- Compute x and y separately, then combine them with a weighted sum

sobelxy=cv2.addWeighted(sobelx,0.5,sobely,0.5,0.5)

cv2.imshow('sobelxy',sobelxy)

cv2.waitKey(0)

cv2.destroyAllWindows()

- Computing both directions in a single call (dx=1, dy=1) is not recommended

sobelxy=cv2.Sobel(img,cv2.CV_64F,1,1,ksize=3)
sobelxy=cv2.convertScaleAbs(sobelxy)

cv2.imshow('sobelxy',sobelxy)

cv2.waitKey(0)

cv2.destroyAllWindows()

- Edge detection on a real image

img=cv2.imread('./lena.jpg',cv2.IMREAD_GRAYSCALE)
cv2.imshow('img',img)
cv2.waitKey(0)
cv2.destroyAllWindows()

# x direction
sobelx=cv2.Sobel(img,cv2.CV_64F,1,0,ksize=3)
sobelx=cv2.convertScaleAbs(sobelx)
# y direction
sobely=cv2.Sobel(img,cv2.CV_64F,0,1,ksize=3)
sobely=cv2.convertScaleAbs(sobely)
# Combine x and y

sobelxy=cv2.addWeighted(sobelx,0.5,sobely,0.5,0)

cv2.imshow('sobelxy',sobelxy)

cv2.waitKey(0)

cv2.destroyAllWindows()

# Computing x and y in a single call gives a worse result
sobelxy=cv2.Sobel(img,cv2.CV_64F,1,1,ksize=3)
sobelxy=cv2.convertScaleAbs(sobelxy)
cv2.imshow('sobelxy',sobelxy)

cv2.waitKey(0)

cv2.destroyAllWindows()

2.17 Edge detection: image gradient with the Scharr and Laplacian operators (cv2.Scharr and cv2.Laplacian)

# Compare operators: different operators give different edge-detection results
img=cv2.imread('lena.jpg',cv2.IMREAD_GRAYSCALE)
sobelx=cv2.Sobel(img,cv2.CV_64F,1,0,ksize=3)
sobelx=cv2.convertScaleAbs(sobelx)
sobely=cv2.Sobel(img,cv2.CV_64F,0,1,ksize=3)  # y direction: dx=0, dy=1
sobely=cv2.convertScaleAbs(sobely)
sobelxy=cv2.addWeighted(sobelx,0.5,sobely,0.5,0)
scharrx=cv2.Scharr(img,cv2.CV_64F,1,0)  # Scharr has no ksize parameter
scharrx=cv2.convertScaleAbs(scharrx)
scharry=cv2.Scharr(img,cv2.CV_64F,0,1)  # y direction: dx=0, dy=1
scharry=cv2.convertScaleAbs(scharry)

scharrxy=cv2.addWeighted(scharrx,0.5,scharry,0.5,0)

laplacian=cv2.Laplacian(img,cv2.CV_64F)  # only the image and the output depth are passed; the other parameters keep their defaults

laplacian=cv2.convertScaleAbs(laplacian)

res=np.hstack((sobelxy,scharrxy,laplacian))

cv2.imshow('res',res)

cv2.waitKey(0)

cv2.destroyAllWindows()

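2.18 Image thresholding (cv2.threshold)

ret, dst = cv2.threshold(src, thresh, maxval, type)

- src: input image, usually a single-channel (grayscale) image

- dst: output image

- ret: the threshold value that was used

- thresh: threshold value

- maxval: value assigned to pixels above the threshold (or below it, depending on type)

- type: the thresholding type, one of these 5: cv2.THRESH_BINARY; cv2.THRESH_BINARY_INV; cv2.THRESH_TRUNC; cv2.THRESH_TOZERO; cv2.THRESH_TOZERO_INV

- cv2.THRESH_BINARY: pixels above the threshold become maxval, all others 0

- cv2.THRESH_BINARY_INV: the inverse of THRESH_BINARY

- cv2.THRESH_TRUNC: pixels above the threshold are set to the threshold, others are unchanged

- cv2.THRESH_TOZERO: pixels above the threshold are unchanged, others are set to 0

- cv2.THRESH_TOZERO_INV: the inverse of THRESH_TOZERO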
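The five rules can be written out for a single pixel value. This pure-Python sketch uses a hypothetical `apply_threshold` helper with the same comparison (pixel > thresh) described above, applied to a few sample values with thresh=127 and maxval=255.

```python
def apply_threshold(v, thresh, maxval, kind):
    """Apply one cv2.threshold type to a single pixel value v."""
    above = v > thresh
    if kind == "BINARY":
        return maxval if above else 0
    if kind == "BINARY_INV":
        return 0 if above else maxval
    if kind == "TRUNC":
        return thresh if above else v
    if kind == "TOZERO":
        return v if above else 0
    if kind == "TOZERO_INV":
        return 0 if above else v
    raise ValueError(f"unknown type: {kind}")


pixels = [50, 127, 128, 200]
for kind in ("BINARY", "BINARY_INV", "TRUNC", "TOZERO", "TOZERO_INV"):
    print(f"{kind:10s}", [apply_threshold(p, 127, 255, kind) for p in pixels])
```

Note that a pixel equal to the threshold (127) counts as "not above" it.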

import cv2

img_cat=cv2.imread(r'./cat.jpg')

cv2.imshow('img_cat',img_cat)

cv2.waitKey(0)

cv2.destroyAllWindows()

img_cat_gray=cv2.cvtColor(img_cat,cv2.COLOR_BGR2GRAY)

ret,dst1=cv2.threshold(img_cat_gray,127,255,cv2.THRESH_BINARY)

ret,dst2=cv2.threshold(img_cat_gray,127,255,cv2.THRESH_BINARY_INV)

ret,dst3=cv2.threshold(img_cat_gray,127,255,cv2.THRESH_TRUNC)

ret,dst4=cv2.threshold(img_cat_gray,127,255,cv2.THRESH_TOZERO)

ret,dst5=cv2.threshold(img_cat_gray,127,255,cv2.THRESH_TOZERO_INV)

titles=['Original Image', 'BINARY', 'BINARY_INV', 'TRUNC', 'TOZERO', 'TOZERO_INV']

images=[img_cat,dst1,dst2,dst3,dst4,dst5]

for i in range(6):
    plt.subplot(2, 3, i + 1), plt.imshow(images[i], 'gray')
    plt.title(titles[i])
    plt.xticks([]), plt.yticks([])
plt.show()

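2.19 Image smoothing

2.19.1 Mean filtering (cv2.blur)

- Mean filtering replaces each pixel with the average of its neighborhood. For example, in a 3×3 region centered on a pixel with value 204, the smoothed value at that position is (121+75+78+24+204+113+154+104+235)/9 = 1108/9 ≈ 123.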
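The arithmetic can be checked in a few lines of plain Python. The nine values are the ones from the example above (their order does not matter for a mean); the last line shows, for comparison, what an unnormalized box filter (cv2.boxFilter with normalize=False, covered in 2.19.2) would give for the same neighborhood in an 8-bit image.

```python
# The nine pixel values of the 3x3 neighborhood around the pixel with value 204
window = [121, 75, 78, 24, 204, 113, 154, 104, 235]

total = sum(window)
mean = total / len(window)      # the mean (normalized box) filter output
print(total, round(mean))       # 1108 123

# Without normalization the raw sum is kept, which saturates to 255 in a uint8 image
print(min(total, 255))          # 255
```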

# Define an image display helper
def show_img(name,img):
    cv2.imshow(name,img)
    cv2.waitKey(0)
    cv2.destroyAllWindows()

# Noisy image: it contains many white dots

lena_noise=cv2.imread(r'./3-5days_Opencv/Images_operation/lenaNoise.png')

cv2.imshow('lena_noise',lena_noise)

cv2.waitKey(0)

cv2.destroyAllWindows()

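- A simple averaging convolution: the kernel is 3×3, 5×5, or 7×7 with every entry equal to 1, and the result is divided by the number of kernel entries.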

blur=cv2.blur(lena_noise,ksize=(3,3))

show_img('blur',blur)

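2.19.2 Box filtering (cv2.boxFilter)

- Essentially the same as mean filtering, except that normalization is optional; the result depends on that choice.

# With normalization (this gives the same result as cv2.blur)
# ddepth=-1 means the output image has the same depth (data type) as the input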

box=cv2.boxFilter(lena_noise,-1,(3,3),normalize=True)

show_img('box',box)

# Without normalization the sums easily overflow: anything above 255 is clipped to 255 (pure white)

box=cv2.boxFilter(lena_noise,-1,(3,3),normalize=False)

show_img('box',box)

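2.19.3 Gaussian filtering (cv2.GaussianBlur)

# The weights of a Gaussian kernel follow a Gaussian distribution, so pixels near the center count more
aussian=cv2.GaussianBlur(lena_noise,(5,5),1)  # (5,5) is the kernel size; 1 is the Gaussian standard deviation in X (sigmaY defaults to the same value)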

show_img('aussian',aussian)
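To see why the Gaussian kernel gives more weight to the center, the 1-D weights can be computed directly from the Gaussian formula and normalized to sum to 1 (the formula documented for cv2.getGaussianKernel). This is a pure-Python sketch; a 5×5 kernel with sigma 1 in both directions is the outer product of the 1-D kernel with itself.

```python
import math


def gaussian_kernel_1d(ksize, sigma):
    """1-D Gaussian weights for an odd ksize, normalized so they sum to 1."""
    center = (ksize - 1) / 2
    raw = [math.exp(-((i - center) ** 2) / (2 * sigma ** 2)) for i in range(ksize)]
    total = sum(raw)
    return [v / total for v in raw]


k1 = gaussian_kernel_1d(5, 1)
k2 = [[a * b for b in k1] for a in k1]      # separable: 2-D kernel = outer product
print([round(v, 3) for v in k1])            # the middle weight is the largest
print(round(sum(map(sum, k2)), 6))          # 1.0: the 2-D weights also sum to 1
```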

2.19.4 Median filtering (cv2.medianBlur)

- Sort the pixel values in the neighborhood from smallest to largest and use the middle value as the output pixel.

median=cv2.medianBlur(lena_noise,5)

show_img('median',median)
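The difference between mean and median filtering on "salt" noise shows up clearly on a 1-D signal. In this pure-Python sketch both helpers pad the ends by repeating the edge value (an assumption chosen for simplicity) and use a window of 3; the single 255 plays the role of one white noise dot.

```python
def _windows(sig, k):
    """Yield the k-sample window around each position, repeating the edge values at the ends."""
    r = k // 2
    padded = [sig[0]] * r + list(sig) + [sig[-1]] * r
    for i in range(len(sig)):
        yield padded[i:i + k]


def median_filter_1d(sig, k=3):
    return [sorted(w)[k // 2] for w in _windows(sig, k)]   # middle value after sorting


def mean_filter_1d(sig, k=3):
    return [round(sum(w) / k) for w in _windows(sig, k)]


noisy = [100, 102, 255, 101, 99, 100]
print(median_filter_1d(noisy))  # [100, 102, 102, 101, 100, 100]: the 255 is gone
print(mean_filter_1d(noisy))    # [101, 152, 153, 152, 100, 100]: the 255 is smeared out
```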

2.19.5 Comparing the filters

res=np.hstack((blur,aussian,median))

show_img('res',res)

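- In this example the median filter works best, since it removes the white noise dots instead of blurring them into their surroundings; which filter is best depends on the scenario.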

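2.20 Canny edge detection (cv2.Canny)

- Apply a Gaussian filter to smooth the image and remove noise;

- Compute the gradient magnitude and direction at every pixel;

- Apply non-maximum suppression to remove spurious responses from edge detection;

- Apply double-threshold detection to separate true and potential edges: pixels whose gradient is above the high threshold are sure edges, pixels below the low threshold are discarded, and pixels in between are kept only if they are connected to a sure edge;

- For example, point A's gradient is above the high threshold, so A is kept as an edge point; point C lies between the two thresholds but is connected to edge point A, so C is kept; point B is not connected to A, so B is discarded.

- Finally, edge detection is completed by suppressing isolated weak edges.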
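The double-threshold (hysteresis) step can be sketched in pure Python on a made-up grid of gradient magnitudes that reproduces the A, B, C example. The helper is hypothetical, it assumes 8-connectivity, and the exact tie-breaking at the thresholds (> versus >=) is an assumption rather than a copy of OpenCV's code.

```python
def hysteresis(mag, low, high):
    """Double-threshold step: keep pixels above `high` (strong edges) and pixels
    above `low` that are 8-connected, directly or via other kept pixels, to a strong one."""
    h, w = len(mag), len(mag[0])
    keep = [[False] * w for _ in range(h)]
    stack = [(y, x) for y in range(h) for x in range(w) if mag[y][x] > high]
    for y, x in stack:
        keep[y][x] = True
    while stack:                               # grow edges outward from the strong pixels
        y, x = stack.pop()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and not keep[ny][nx] and mag[ny][nx] > low:
                    keep[ny][nx] = True
                    stack.append((ny, nx))
    return keep


mag = [
    [0, 0,   0,  0, 0],
    [0, 200, 90, 0, 0],   # A = 200 (strong), C = 90 (weak, next to A)
    [0, 0,   0,  0, 90],  # B = 90 (weak, not connected to A)
]
keep = hysteresis(mag, low=80, high=150)
print(keep[1][1], keep[1][2], keep[2][4])  # True True False
```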

img=cv2.imread('./3-5days_Opencv/Images_operation/lena.jpg',cv2.IMREAD_GRAYSCALE)

v1=cv2.Canny(img,80,150)  # 80 is the low threshold (minVal), 150 the high threshold (maxVal)

v2=cv2.Canny(img,50,100)

res=np.hstack((v1,v2))

show_img('res',res)

- Lower thresholds detect more edges.

img=cv2.imread('./3-5days_Opencv/Images_operation/car.png',cv2.IMREAD_GRAYSCALE)

v1=cv2.Canny(img,120,250)  # 120 is the low threshold (minVal), 250 the high threshold (maxVal)

v2=cv2.Canny(img,50,100)

res=np.hstack((v1,v2))

show_img('res',res)

2.21 Image pyramids (cv2.pyrUp and cv2.pyrDown)


2.21.1 Gaussian pyramid

img=cv2.imread(r'./3-5days_Opencv/Images_operation/AM.png')

show_img('img',img)

print(img.shape)

# Result

"""(442, 340, 3)"""

# Upsample

up=cv2.pyrUp(img)

show_img('up',up)

print(up.shape)

# Result

"""(884, 680, 3)"""

# Downsample

down=cv2.pyrDown(img)

show_img('down',down)

print(down.shape)

# Result

"""(221, 170, 3)"""

# Upsample twice

up_2=cv2.pyrUp(up)

show_img('up_2',up_2)

print(up_2.shape)

# Result

"""(1768, 1360, 3)"""

# Upsample and then downsample, and compare the result with the original image

up_1=cv2.pyrUp(img)

up_down=cv2.pyrDown(up_1)

show_img('up_down',up_down)

print(up_down.shape)

# Result: (442, 340, 3)

show_img('up_down vs original',np.hstack((up_down,img)))

2.21.2 Laplacian pyramid

down=cv2.pyrDown(img)

up_down=cv2.pyrUp(down)

l_1=img-up_down

show_img('l_1',l_1)
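Two practical details about the Laplacian level `img - up_down`: the two images must have the same size, and the subtraction happens in uint8. By default cv2.pyrDown produces (cols+1)//2 × (rows+1)//2 and cv2.pyrUp doubles each dimension, so the round trip restores the original size only when both dimensions are even. The pure-Python sketch below computes just these shapes (the helper names are illustrative). For the subtraction itself, NumPy's uint8 `-` wraps negative differences around, whereas cv2.subtract clips them to 0.

```python
def pyr_down_shape(rows, cols):
    """Default output shape (rows, cols) of cv2.pyrDown."""
    return (rows + 1) // 2, (cols + 1) // 2


def pyr_up_shape(rows, cols):
    """Default output shape (rows, cols) of cv2.pyrUp."""
    return rows * 2, cols * 2


print(pyr_down_shape(442, 340))                 # (221, 170), as in the output above
print(pyr_up_shape(*pyr_down_shape(442, 340)))  # (442, 340): matches, so img - up_down works
print(pyr_up_shape(*pyr_down_shape(221, 170)))  # (222, 170): one row too many for a 221-row image
```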

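2.22 Image contours

2.22.1 Finding contours (cv2.findContours)

- cv2.findContours(img,mode,method)

mode: contour retrieval mode

- RETR_EXTERNAL: retrieves only the outermost contours;

- RETR_LIST: retrieves all contours and stores them in a flat list, without any hierarchy;

- RETR_CCOMP: retrieves all contours and organizes them into two levels: the top level holds the outer boundaries of the components, the second level the boundaries of the holes;

- RETR_TREE: retrieves all contours and reconstructs the full hierarchy of nested contours; this is the mode used most often.

method: contour approximation method

- CHAIN_APPROX_NONE: stores every point on the contour.

- CHAIN_APPROX_SIMPLE: compresses horizontal, vertical, and diagonal segments and keeps only their end points.

# For better accuracy, use a binary image
img=cv2.imread(r'./3-5days_Opencv/Images_operation/contours.png')  # read in color; it is converted to grayscale on the next line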

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

ret, thresh = cv2.threshold(gray, 127, 255, cv2.THRESH_BINARY)

show_img('thresh',thresh)

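# In OpenCV 4.x this function returns two values (OpenCV 3.x returned three: image, contours, hierarchy)
contours, hierarchy = cv2.findContours(thresh, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)
# contours: the list of contours; hierarchy: the hierarchy information for each contour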

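2.22.2 Drawing contours (cv2.drawContours)

# Draw all contours, including the inner and outer contour of each shape
draw_img=img.copy()
# Arguments: image to draw on (modified in place, hence the copy), the contours, -1 to draw all contours, (0,0,255) is the BGR color (red), 1 is the line thickness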

res=cv2.drawContours(draw_img,contours,-1,(0,0,255),1)

show_img('res',res)

# Draw a single contour by index (in this image, even indices are outer contours and odd indices are inner contours)

draw_img=img.copy()

res=cv2.drawContours(draw_img,contours,0,(0,0,255),2)

show_img('res',res)

# Draw a single contour by index (in this image, even indices are outer contours and odd indices are inner contours)

draw_img=img.copy()

res=cv2.drawContours(draw_img,contours,1,(0,0,255),2)

show_img('res',res)

# Draw a single contour by index (in this image, even indices are outer contours and odd indices are inner contours)

draw_img=img.copy()

res=cv2.drawContours(draw_img,contours,2,(0,0,255),2)

show_img('res',res)

2.22.3 Contour features (cv2.contourArea for area, cv2.arcLength for perimeter)

cnt=contours[0]
# Area
print(cv2.contourArea(cnt))
# Perimeter
print(cv2.arcLength(cnt,True))  # True means the contour is closed

# Result

"""

8500.5

437.9482651948929

"""

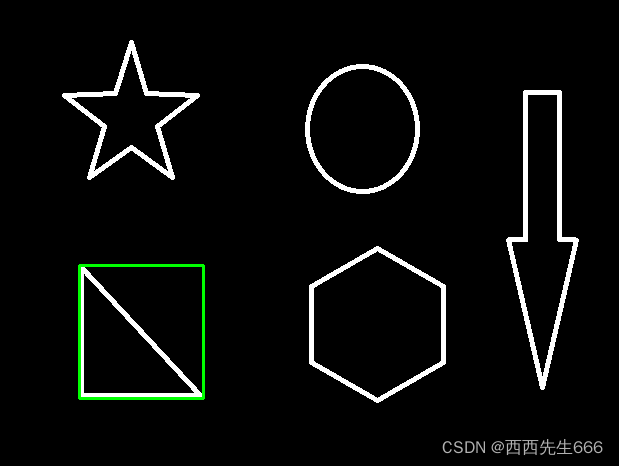

2.22.4 Contour approximation (cv2.approxPolyDP)

img=cv2.imread('./3-5days_Opencv/Images_operation/contours2.png')

gray=cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

ret,thresh=cv2.threshold(gray,127,255,cv2.THRESH_BINARY)

contours,hierarchy=cv2.findContours(thresh,cv2.RETR_TREE,cv2.CHAIN_APPROX_NONE)

cnt=contours[0]

draw_img=img.copy()

res=cv2.drawContours(draw_img,contours,0,(0,0,255),2)  # index 0: the outer contour of the shape

show_img('res',res)

draw_img=img.copy()

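epsilon=0.01*cv2.arcLength(cnt,True)  # approximation threshold: 1% of the perimeter

# approxPolyDP arguments: cnt is the contour; epsilon is the approximation accuracy (the maximum distance between the contour and its approximation, so smaller values follow the original contour more closely); True means the contour is closed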

approx=cv2.approxPolyDP(cnt,epsilon,True)

res=cv2.drawContours(draw_img,[approx],-1,(0,255,0),2)

show_img('res',res)

draw_img=img.copy()

epsilon=0.15*cv2.arcLength(cnt,True)

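# approxPolyDP arguments: cnt is the contour; epsilon is the approximation accuracy (the maximum distance between the contour and its approximation, so smaller values follow the original contour more closely); True means the contour is closed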

approx=cv2.approxPolyDP(cnt,epsilon,True)

res=cv2.drawContours(draw_img,[approx],-1,(0,255,0),2)

show_img('res',res)
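OpenCV documents cv2.approxPolyDP as an implementation of the Douglas-Peucker algorithm. The pure-Python sketch below shows the idea on an open polyline: if every intermediate point lies within epsilon of the segment joining the end points, keep only the end points; otherwise split at the farthest point and recurse. It is an illustration only: how OpenCV handles closed contours is not reproduced, and the points are made up.

```python
import math


def point_line_distance(p, a, b):
    """Perpendicular distance from p to the line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0:
        return math.hypot(px - ax, py - ay)
    return abs(dy * (px - ax) - dx * (py - ay)) / length


def douglas_peucker(points, epsilon):
    """Simplify an open polyline so no removed point is farther than epsilon from the result."""
    if len(points) < 3:
        return list(points)
    a, b = points[0], points[-1]
    dists = [point_line_distance(p, a, b) for p in points[1:-1]]
    far = max(range(len(dists)), key=dists.__getitem__)
    if dists[far] <= epsilon:
        return [a, b]                                  # everything in between is close enough
    split = far + 1                                    # index of the farthest point in `points`
    left = douglas_peucker(points[:split + 1], epsilon)
    right = douglas_peucker(points[split:], epsilon)
    return left[:-1] + right                           # do not repeat the split point


# A slightly noisy "L" shape
pts = [(0, 0), (1, 0.2), (2, 0), (3, 0.1), (4, 0), (4, 1), (4.1, 2), (4, 3)]
print(douglas_peucker(pts, 0.5))  # [(0, 0), (4, 0), (4, 3)]: the corner survives
print(douglas_peucker(pts, 3))    # [(0, 0), (4, 3)]: a large epsilon keeps only the end points
```

This mirrors the two cases above: epsilon at 1% of the perimeter keeps the shape's corners, while 15% collapses it to very few vertices.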

2.22.5 Bounding rectangle (cv2.boundingRect and cv2.rectangle)

img = cv2.imread('./3-5days_Opencv/Images_operation/contours.png')

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

ret, thresh = cv2.threshold(gray, 127, 255, cv2.THRESH_BINARY)

contours, hierarchy = cv2.findContours(thresh, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)

cnt = contours[0]

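x,y,w,h = cv2.boundingRect(cnt)  # compute the bounding rectangle of the contour
img = cv2.rectangle(img,(x,y),(x+w,y+h),(0,255,0),2)  # draw the bounding rectangle
show_img('img',img)

area = cv2.contourArea(cnt)  # contour area
x, y, w, h = cv2.boundingRect(cnt)  # bounding rectangle
rect_area = w * h  # bounding rectangle area
extent = float(area) / rect_area
print('Ratio of contour area to bounding rectangle area', extent)

# Result

"""Ratio of contour area to bounding rectangle area 0.5154317244724715"""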

2.22.6 Minimum enclosing circle (cv2.minEnclosingCircle and cv2.circle)

(x,y),radius = cv2.minEnclosingCircle(cnt)  # minimum enclosing circle: returns its center and radius
center = (int(x),int(y))  # center coordinates
radius = int(radius)  # radius

img = cv2.circle(img,center,radius,(0,255,0),2)

show_img('img',img)

2.23 Template matching (cv2.matchTemplate)

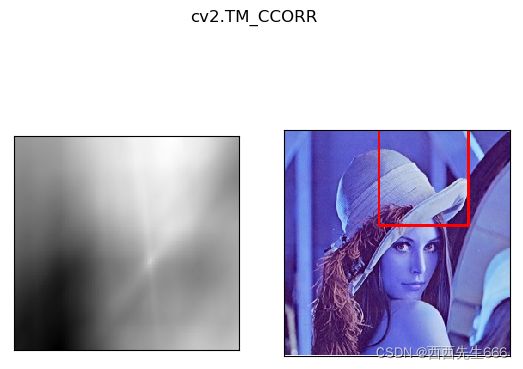

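- Template matching works much like a convolution: the template slides over the source image and, at each position, OpenCV measures how different the template is from the image region it covers. OpenCV offers 6 comparison methods. The scores are stored in a result matrix: if the source image is A×B and the template is a×b, the result is (A-a+1)×(B-b+1).

- Comparison methods:

TM_SQDIFF: squared difference; the smaller the value, the better the match

TM_CCORR: cross-correlation; the larger the value, the better the match

TM_CCOEFF: correlation coefficient; the larger the value, the better the match

TM_SQDIFF_NORMED: normalized squared difference; the closer to 0, the better the match

TM_CCORR_NORMED: normalized cross-correlation; the closer to 1, the better the match

TM_CCOEFF_NORMED: normalized correlation coefficient; the closer to 1, the better the match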
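The sliding-window idea and the (A-a+1)×(B-b+1) result size can be illustrated with TM_SQDIFF in pure Python. This is a sketch on a made-up 4×5 image that contains an exact copy of a 2×2 template; `match_sqdiff` and `best_min_loc` are hypothetical helpers, and, like cv2.minMaxLoc, the location is reported as (x, y), i.e. (column, row).

```python
def match_sqdiff(image, tmpl):
    """TM_SQDIFF score for every placement of tmpl on image (smaller = better)."""
    A, B = len(image), len(image[0])     # image rows, columns
    a, b = len(tmpl), len(tmpl[0])       # template rows, columns
    return [[sum((image[y + i][x + j] - tmpl[i][j]) ** 2
                 for i in range(a) for j in range(b))
             for x in range(B - b + 1)]
            for y in range(A - a + 1)]


def best_min_loc(res):
    """(x, y) of the smallest score, i.e. the top-left corner of the best match."""
    _, loc = min((v, (x, y)) for y, row in enumerate(res) for x, v in enumerate(row))
    return loc


image = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 8, 0],
    [0, 0, 7, 6, 0],
    [0, 0, 0, 0, 0],
]
tmpl = [[9, 8], [7, 6]]
res = match_sqdiff(image, tmpl)
print(len(res), len(res[0]))  # 3 4, i.e. (4-2+1) x (5-2+1)
print(best_min_loc(res))      # (2, 1)
```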

2.23.1 Matching a single object

img=cv2.imread(r'./3-5days_Opencv/Images_operation/lena.jpg')

tmp=cv2.imread('./3-5days_Opencv/Images_operation/face.jpg')

print(img.shape)

print(tmp.shape)

h,w=tmp.shape[:2]

# Result

"""

(263, 263, 3)

(99, 100, 3)

"""

show_img('img',img)

show_img('tmp',tmp)

method=['cv2.TM_SQDIFF','cv2.TM_CCORR','cv2.TM_CCOEFF',

'cv2.TM_SQDIFF_NORMED','cv2.TM_CCORR_NORMED','cv2.TM_CCOEFF_NORMED']

res=cv2.matchTemplate(img,tmp,cv2.TM_SQDIFF)

res.shape

# Result: (152, 160)

min_val,max_val,min_loc,max_loc=cv2.minMaxLoc(res)

min_val

# Result: 1489058.0

max_val

# Result: 258484320.0

min_loc  # (x, y) of the top-left corner of the best TM_SQDIFF match; adding w and h gives the matched region

# Result: (96, 89)

max_loc

# Result: (150, 38)

for i in method:
    img2=img.copy()
    meth=getattr(cv2, i.replace('cv2.', ''))  # look up the constant by name instead of using eval
    print(meth)
    res=cv2.matchTemplate(img2,tmp,meth)
    min_val,max_val,min_loc,max_loc=cv2.minMaxLoc(res)
    # For TM_SQDIFF and TM_SQDIFF_NORMED the best match is the minimum; for the other methods it is the maximum
    if meth in [cv2.TM_SQDIFF,cv2.TM_SQDIFF_NORMED]:
        top_left=min_loc
    else:
        top_left=max_loc
    bottom_right=(top_left[0]+w,top_left[1]+h)
    # Draw the rectangle
    cv2.rectangle(img2,top_left,bottom_right,255,2)
    plt.subplot(121)
    plt.imshow(res,cmap='gray')
    plt.xticks([]);plt.yticks([])  # hide the axes
    plt.subplot(122)
    plt.imshow(cv2.cvtColor(img2,cv2.COLOR_BGR2RGB))  # matplotlib expects RGB
    plt.xticks([]);plt.yticks([])
    plt.suptitle(i)
    plt.show()

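- In each row of the output, the left plot is the score map: the bright spot (for the TM_SQDIFF methods, the dark spot) marks where the face was found;

- In this example, the normalized methods give better results than the non-normalized ones.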

2.23.2 Matching multiple objects

img=cv2.imread(r'./3-5days_Opencv/Images_operation/shizi.png')

print(img.shape)

tmp=cv2.imread(r'./3-5days_Opencv/Images_operation/shizi_part.jpg')

h,w=tmp.shape[:2]

print('h=',h,'w=',w)

res=cv2.matchTemplate(img,tmp,cv2.TM_CCOEFF_NORMED)

threshold=0.8

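loc=np.where(res>=threshold)  # positions whose match score is at least 0.8
# np.where returns (row indices, column indices), i.e. (y, x); [::-1] reverses this to (x, y), the point order cv2.rectangle expects
for pt in zip(*loc[::-1]):
    bottom_right=(pt[0]+w,pt[1]+h)
    cv2.rectangle(img,pt,bottom_right,(0,0,255),2)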

cv2.imshow('img',img)

cv2.waitKey(0)

cv2.destroyAllWindows()

# Result

"""

(135, 849, 3)

h= 40 w= 24

"""

res.shape

# Result

"""

(101, 830)

"""

loc[::-1]

# Result

"""

(array([133, 252, 598, 741, 133, 251, 252, 598, 741, 133, 598, 741, 133,

251, 252, 598, 741, 133, 251, 252, 598, 741], dtype=int64),

array([ 5, 5, 5, 5, 6, 6, 6, 6, 6, 7, 7, 7, 93, 93, 93, 93, 93,

94, 94, 94, 94, 94], dtype=int64))

"""

loc

# Result

"""

(array([ 5, 5, 5, 5, 6, 6, 6, 6, 6, 7, 7, 7, 93, 93, 93, 93, 93,

94, 94, 94, 94, 94], dtype=int64),

array([133, 252, 598, 741, 133, 251, 252, 598, 741, 133, 598, 741, 133,

251, 252, 598, 741, 133, 251, 252, 598, 741], dtype=int64))

"""