Classifying the Fashion-MNIST Dataset with a Convolutional Neural Network (CNN) [Everyday Items, 10 Classes]

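This is a small introductory project on convolutional neural networks and deep learning.

It uses a convolutional neural network to classify the items in Fashion-MNIST, which include sandals, boots, T-shirts, and so on.

It is implemented with the PyTorch framework on Python 3.6.

It is a good way to get familiar with how torch reads data in batches,

how torch defines a network,

and how to visualize the outputs afterwards.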
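
As a quick check on the batched loading, the number of batches and the train/validation split used later in the code can be worked out by hand. The sketch below assumes the standard Fashion-MNIST training set of 60,000 images, together with the batch size of 64 and the 0.8 split ratio from the code; the helper name `split_batches` is made up for illustration.

```python
import math

def split_batches(num_samples, batch_size, train_rate):
    """Return (total, train, val) batch counts for a batch-index-based split.

    Hypothetical helper: mirrors the arithmetic in train_model(), where the
    first round(batch_num * train_rate) batches are used for training.
    """
    # DataLoader keeps the last partial batch by default (drop_last=False).
    batch_num = math.ceil(num_samples / batch_size)
    train_batch_num = round(batch_num * train_rate)
    return batch_num, train_batch_num, batch_num - train_batch_num

total, train_batches, val_batches = split_batches(60000, 64, 0.8)
print(total, train_batches, val_batches)  # 938 750 188
```

So each epoch trains on the first 750 batches and validates on the remaining 188.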

Reference book: PyTorch 深度学习入门与实战, by 孙玉林

Full code

import numpy as np
import pandas as pd
from sklearn.metrics import accuracy_score,confusion_matrix,classification_report
import matplotlib.pyplot as plt
import seaborn as sns
import copy
import time
import torch
import torch.nn as nn
from torch.optim import Adam
import torch.utils.data as Data
from torchvision import transforms
from torchvision.datasets import FashionMNIST

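# Load the training set; ToTensor() scales pixel values to [0, 1].
# download = False assumes the files already exist under root;
# set download = True on the first run.
train_data = FashionMNIST(
    root = "./data/FashionMNIST",
    train = True,
    transform = transforms.ToTensor(),
    download = False
)
# shuffle = False keeps the batch order fixed, so the batch-index-based
# train/validation split in train_model() is the same in every epoch.
train_loader = Data.DataLoader(
    dataset = train_data,
    batch_size = 64,
    shuffle = False,
    #num_workers = 2,
)
print("Number of batches in train_loader:",len(train_loader))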

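# Grab one batch for visualization. The loop exits at step 1,
# so b_x and b_y hold the second batch.
for step,(b_x,b_y) in enumerate(train_loader):
    if step > 0:
        break
batch_x = b_x.squeeze().numpy()   # (64, 1, 28, 28) -> (64, 28, 28)
batch_y = b_y.numpy()
class_label = train_data.classes
class_label[0] = "T-shirt"        # shorten "T-shirt/top" for the plot titles
# Show the 64 images of the batch in a 4 x 16 grid.
plt.figure(figsize = (12,5))
for ii in np.arange(len(batch_y)):
    plt.subplot(4,16,ii+1)
    plt.imshow(batch_x[ii,:,:],cmap = plt.cm.gray)
    plt.title(class_label[batch_y[ii]],size = 9)
    plt.axis("off")
plt.subplots_adjust(wspace = 0.05)
#plt.show()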

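# Load the test set; no transform is passed because the raw tensors
# are preprocessed by hand below.
test_data = FashionMNIST(
    root="./data/FashionMNIST",
    train = False,
    download = False
)
# Scale pixels to [0, 1] and add a channel dimension: (N, 28, 28) -> (N, 1, 28, 28).
test_data_x = test_data.data.type(torch.FloatTensor) / 255.0
test_data_x = torch.unsqueeze(test_data_x,dim = 1)
test_data_y = test_data.targets
print("test_data_x.shape:",test_data_x.shape)
print("test_data_y.shape:",test_data_y.shape)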

class MyConvNet(nn.Module):
    def __init__(self):
        super(MyConvNet,self).__init__()
        # Conv block 1: (1, 28, 28) -> (16, 28, 28) -> (16, 14, 14)
        self.conv1 = nn.Sequential(
            nn.Conv2d(
                in_channels = 1,
                out_channels = 16,
                kernel_size = 3,
                stride = 1,
                padding = 1,
            ),
            nn.ReLU(),
            nn.AvgPool2d(
                kernel_size = 2,
                stride = 2)
        )
        # Conv block 2: (16, 14, 14) -> (32, 12, 12) -> (32, 6, 6)
        self.conv2 = nn.Sequential(
            nn.Conv2d(16,32,3,1,0),
            nn.ReLU(),
            nn.MaxPool2d(2,2),
        )
        # Fully connected classifier on the flattened 32*6*6 features
        self.classifier = nn.Sequential(
            nn.Linear(32*6*6,256),
            nn.ReLU(),
            nn.Linear(256,128),
            nn.ReLU(),
            nn.Linear(128,10)
        )
    def forward(self,x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = x.view(x.size(0),-1)   # flatten to (batch, 32*6*6)
        output = self.classifier(x)
        return output
myconvnet = MyConvNet()
print(myconvnet)

def train_model(model,traindataloader,train_rate,criterion,optimizer,num_epochs = 25):
    # The first train_rate share of the batches is used for training,
    # the remaining batches for validation.
    batch_num = len(traindataloader)
    train_batch_num = round(batch_num * train_rate)
    best_model_wts = copy.deepcopy(model.state_dict())
    best_acc = 0.0
    train_loss_all = []
    train_acc_all = []
    val_loss_all = []
    val_acc_all = []
    since = time.time()
    for epoch in range(num_epochs):
        print('Epoch {}/{}'.format(epoch,num_epochs-1))
        print('-'*10)
        train_loss = 0.0
        train_corrects = 0
        train_num = 0
        val_loss = 0.0
        val_correct = 0
        val_num = 0
        for step,(b_x,b_y) in enumerate(traindataloader):
            if step < train_batch_num:
                model.train()
                output = model(b_x)
                pre_lab = torch.argmax(output,1)
                loss = criterion(output, b_y)
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()
                # criterion returns the batch mean, so weight it by the batch
                # size before dividing by the sample count below.
                train_loss += loss.item() * b_x.size(0)
                train_corrects += torch.sum(pre_lab == b_y.data)
                train_num += b_x.size(0)
            else:
                model.eval()
                with torch.no_grad():   # no gradients needed for validation
                    output = model(b_x)
                    pre_lab = torch.argmax(output,1)
                    loss = criterion(output,b_y)
                val_loss += loss.item() * b_x.size(0)
                val_correct += torch.sum(pre_lab == b_y.data)
                val_num += b_y.size(0)
        # Per-sample average loss and accuracy for this epoch
        train_loss_all.append(train_loss / train_num)
        train_acc_all.append(train_corrects.double().item()/train_num)
        val_loss_all.append(val_loss / val_num)
        val_acc_all.append(val_correct.double().item() / val_num)
        print("{} Train Loss:{:.4f} Train Acc: {:.4f}" .format(epoch,train_loss_all[-1],train_acc_all[-1]))
        print('{} Val Loss: {:.4f} val Acc: {:.4f}' .format(epoch,val_loss_all[-1],val_acc_all[-1]))
        # Keep a copy of the weights with the best validation accuracy so far.
        if val_acc_all[-1] > best_acc:
            best_acc = val_acc_all[-1]
            best_model_wts = copy.deepcopy(model.state_dict())
        time_use = time.time() - since
        print("Train and val complete in {:.0f}m {:.0f}s".format(time_use // 60,time_use % 60))
    # Restore the best weights and collect the training history.
    model.load_state_dict(best_model_wts)
    train_process = pd.DataFrame(
        data = {"epoch":range(num_epochs),
                "train_loss_all": train_loss_all,
                "val_loss_all":val_loss_all,
                "train_acc_all":train_acc_all,
                "val_acc_all":val_acc_all}
    )
    return model,train_process

optimizer = torch.optim.Adam(myconvnet.parameters(),lr = 0.0003)
criterion = nn.CrossEntropyLoss()
# Train for 25 epochs: 80% of the batches for training, 20% for validation.
myconvnet,train_process = train_model(
    myconvnet,train_loader,0.8,criterion,optimizer,num_epochs=25
)

# Plot the loss curves (left) and accuracy curves (right) per epoch.
plt.figure(figsize=(12,4))
plt.subplot(1,2,1)
plt.plot(train_process.epoch,train_process.train_loss_all,"ro-",label = "Train loss")
plt.plot(train_process.epoch,train_process.val_loss_all,"bs-",label = "Val loss")
plt.legend()
plt.xlabel("epoch")
plt.ylabel("Loss")
plt.subplot(1,2,2)
plt.plot(train_process.epoch,train_process.train_acc_all,"ro-",label = "Train acc")
plt.plot(train_process.epoch,train_process.val_acc_all,"bs-",label = "Val acc")
plt.xlabel("epoch")
plt.ylabel("acc")
plt.legend()

# Evaluate the trained model on the test set.
myconvnet.eval()
with torch.no_grad():
    output = myconvnet(test_data_x)
pre_lab = torch.argmax(output,1)
test_data_ya = test_data_y.detach().numpy()
pre_laba = pre_lab.detach().numpy()
acc = accuracy_score(test_data_ya,pre_laba)
print("ACC Test:",acc)
# Draw the confusion matrix as a heat map (rows: true labels, columns: predictions).
plt.figure(figsize=(12,12))
conf_mat = confusion_matrix(test_data_ya,pre_laba)
df_cm = pd.DataFrame(conf_mat, index = class_label,columns = class_label)
heatmap = sns.heatmap(df_cm,annot = True,fmt = "d", cmap = "YlGnBu")
heatmap.yaxis.set_ticklabels(heatmap.yaxis.get_ticklabels(),rotation = 0, ha = "right")
plt.ylabel('True label')
plt.xlabel("Predicted label")
plt.show()

Output

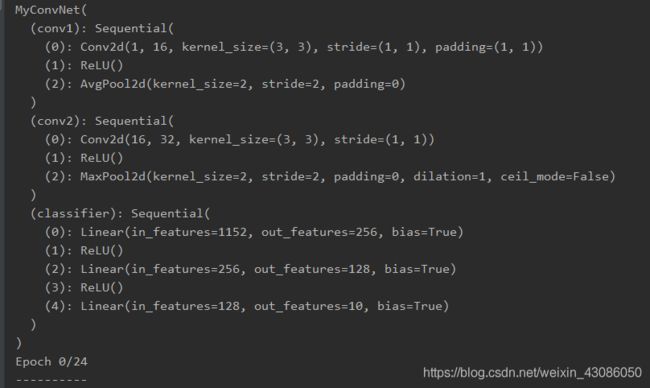

Overall network structure

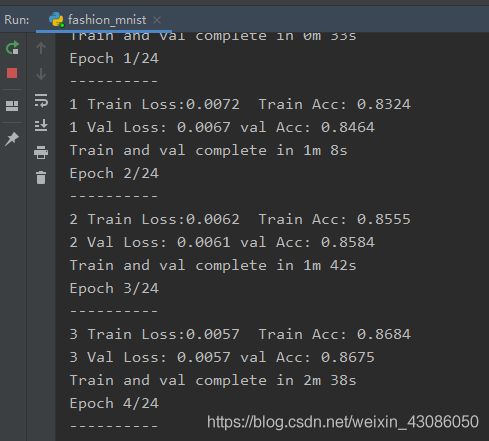
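
The `32*6*6` input size of the first linear layer follows from the layer settings in `MyConvNet`. Below is a small sketch of that arithmetic, using the usual output-size formula for convolution and pooling layers, floor((n + 2p - k) / s) + 1. The function name `out_size` is only for illustration.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Spatial output size of a conv/pool layer: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1

n = 28                                          # Fashion-MNIST images are 28x28
n = out_size(n, kernel=3, stride=1, padding=1)  # conv1: Conv2d(1, 16, 3, 1, 1)  -> 28
n = out_size(n, kernel=2, stride=2)             # conv1: AvgPool2d(2, 2)         -> 14
n = out_size(n, kernel=3, stride=1, padding=0)  # conv2: Conv2d(16, 32, 3, 1, 0) -> 12
n = out_size(n, kernel=2, stride=2)             # conv2: MaxPool2d(2, 2)         -> 6
flat_features = 32 * n * n                      # 32 output channels
print(n, flat_features)                         # 6 1152
```

If a kernel size or padding in the network is changed, rerunning this arithmetic gives the new value for the first `nn.Linear` layer.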

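Training screenshot (partial)

Training took about 15 minutes. Hardware: CPU 3500X, GPU 1080 Ti, 16 GB RAM at 3200 MHz.

Sample images from the dataset:

Loss trend during training:

Accuracy trend: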

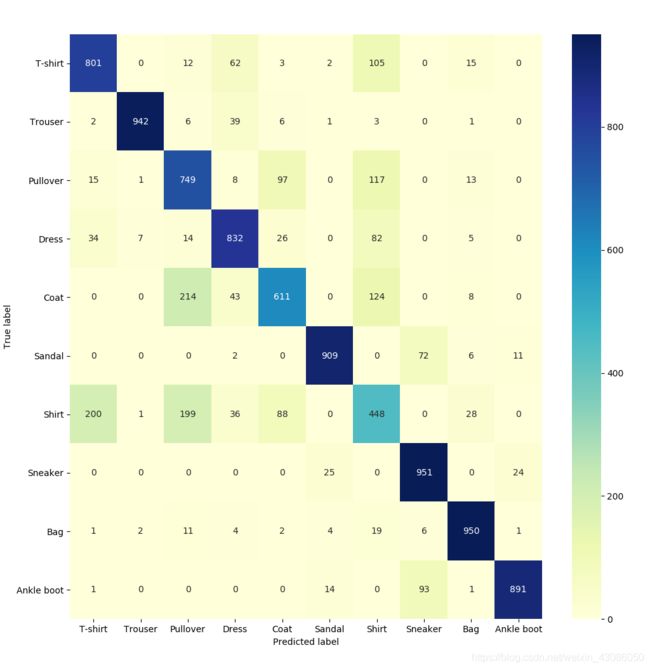

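Confusion-matrix heat map: (it shows that ankle boots and sneakers, as well as T-shirts and shirts, are the class pairs most often mistaken for each other)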
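
The most frequently confused class pairs can also be read off programmatically rather than by eye. The sketch below is one way to do it, assuming a square confusion matrix with true labels as rows and predictions as columns (the layout returned by `confusion_matrix`). The function name `top_confusions` and the 3-class numbers are invented for illustration and are not results from this run.

```python
def top_confusions(conf_mat, labels, k=3):
    """Return the k largest off-diagonal entries as (count, true, predicted)."""
    pairs = []
    for i, row in enumerate(conf_mat):
        for j, count in enumerate(row):
            if i != j:  # skip correct predictions on the diagonal
                pairs.append((int(count), labels[i], labels[j]))
    pairs.sort(key=lambda p: p[0], reverse=True)
    return pairs[:k]

# Made-up 3-class matrix, only to show the call.
demo_mat = [
    [90,  8,  2],
    [12, 85,  3],
    [ 1,  4, 95],
]
demo_labels = ["T-shirt", "Shirt", "Sneaker"]
for count, true_label, pred_label in top_confusions(demo_mat, demo_labels, k=2):
    print(f"{true_label} -> {pred_label}: {count}")
```

With the variables from the code above, the call would be `top_confusions(conf_mat, class_label)`.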

That's a wrap!