贝叶斯网络实践

主要内容:

朴素贝叶斯的推导和应用

使用马尔可夫模型计算临近点概率

文本数据的处理流程

使用TF-IDF得到文本特征

Word2vec的使用

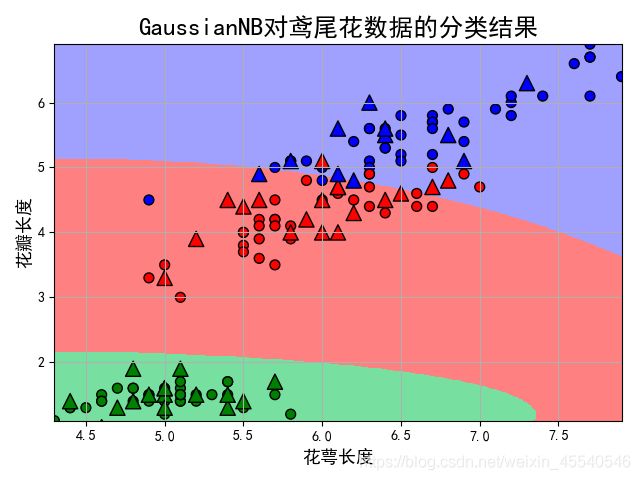

朴素贝叶斯进行鸢尾花分类

#!/usr/bin/python

# -*- coding:utf-8 -*-

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import matplotlib as mpl

from sklearn.preprocessing import StandardScaler, MinMaxScaler, PolynomialFeatures

from sklearn.naive_bayes import GaussianNB, MultinomialNB

from sklearn.pipeline import Pipeline

from sklearn.metrics import accuracy_score

from sklearn.model_selection import train_test_split

from sklearn.neighbors import KNeighborsClassifier

def iris_type(s):

it = {'Iris-setosa': 0, 'Iris-versicolor': 1, 'Iris-virginica': 2}

return it[s]

if __name__ == "__main__":

data = pd.read_csv('D:/ML/iris.data', header=None)

x, y = data[np.arange(4)], data[4]

y = pd.Categorical(values=y).codes

feature_names = '花萼长度', '花萼宽度', '花瓣长度', '花瓣宽度'

features = [0,2]#用前两个特征做分类

x = x[features]

x, x_test, y, y_test = train_test_split(x, y, train_size=0.7, random_state=0)

priors = np.array((1,2,4), dtype=float)

priors /= priors.sum()

gnb = Pipeline([

('sc', StandardScaler()),

('poly', PolynomialFeatures(degree=1)),

('clf', GaussianNB(priors=priors))]) # 由于鸢尾花数据是样本均衡的,其实不需要设置先验值

# gnb = KNeighborsClassifier(n_neighbors=3).fit(x, y.ravel())

gnb.fit(x, y.ravel())

y_hat = gnb.predict(x)

print('训练集准确度: %.2f%%' % (100 * accuracy_score(y, y_hat)))

y_test_hat = gnb.predict(x_test)

print('测试集准确度:%.2f%%' % (100 * accuracy_score(y_test, y_test_hat))) # 画图

N, M = 500, 500 # 横纵各采样多少个值

x1_min, x2_min = x.min()

x1_max, x2_max = x.max()

t1 = np.linspace(x1_min, x1_max, N)

t2 = np.linspace(x2_min, x2_max, M)

x1, x2 = np.meshgrid(t1, t2) # 生成网格采样点

x_grid = np.stack((x1.flat, x2.flat), axis=1) # 测试点

mpl.rcParams['font.sans-serif'] = ['simHei']

mpl.rcParams['axes.unicode_minus'] = False

cm_light = mpl.colors.ListedColormap(['#77E0A0', '#FF8080', '#A0A0FF'])

cm_dark = mpl.colors.ListedColormap(['g', 'r', 'b'])

y_grid_hat = gnb.predict(x_grid) # 预测值

y_grid_hat = y_grid_hat.reshape(x1.shape)

plt.figure(facecolor='w')

plt.pcolormesh(x1, x2, y_grid_hat, cmap=cm_light) # 预测值的显示

plt.scatter(x[features[0]], x[features[1]], c=y, edgecolors='k', s=50, cmap=cm_dark)

plt.scatter(x_test[features[0]], x_test[features[1]], c=y_test, marker='^', edgecolors='k', s=120, cmap=cm_dark)

plt.xlabel(feature_names[features[0]], fontsize=13)

plt.ylabel(feature_names[features[1]], fontsize=13)

plt.xlim(x1_min, x1_max)

plt.ylim(x2_min, x2_max)

plt.title('GaussianNB对鸢尾花数据的分类结果', fontsize=18)

plt.grid(True)

plt.show()

# /usr/bin/python

# -*- coding:utf-8 -*-

import numpy as np

import matplotlib.pyplot as plt

import os

from matplotlib import animation

from PIL import Image

def update(f):

global loc

if f == 0:

loc = loc_prime

next_loc = np.zeros((m, n), dtype=np.float)

for i in np.arange(m):

for j in np.arange(n):

next_loc[i, j] = calc_next_loc(np.array([i, j]), loc, directions)

loc = next_loc / np.max(next_loc)

im.set_array(loc)

# Save

if save_image:

if f % 3 == 0:

image_data = plt.cm.coolwarm(loc) * 255

image_data, _ = np.split(image_data, (-1, ), axis=2)

image_data = image_data.astype(np.uint8).clip(0, 255)

output = '.\\Pic2\\'

if not os.path.exists(output):

os.mkdir(output)

a = Image.fromarray(image_data, mode='RGB')

a.save('%s%d.png' % (output, f))

return [im]

def calc_next_loc(now, loc, directions):

near_index = np.array([(-1, -1), (-1, 0), (-1, 1),

(0, -1), (0, 1),

(1, -1), (1, 0), (1, 1)])

directions_index = np.array([7, 6, 5, 0, 4, 1, 2, 3])

nn = now + near_index

ii, jj = nn[:, 0], nn[:, 1]

ii[ii >= m] = 0

jj[jj >= n] = 0

return np.dot(loc[ii, jj], directions[ii, jj, directions_index])#当前位置跟direction做点乘得到下一个位置

if __name__ == '__main__':

np.set_printoptions(suppress=True, linewidth=300, edgeitems=8)

np.random.seed(0)

save_image = False

style = 'Sin' # Sin/Direct/Random

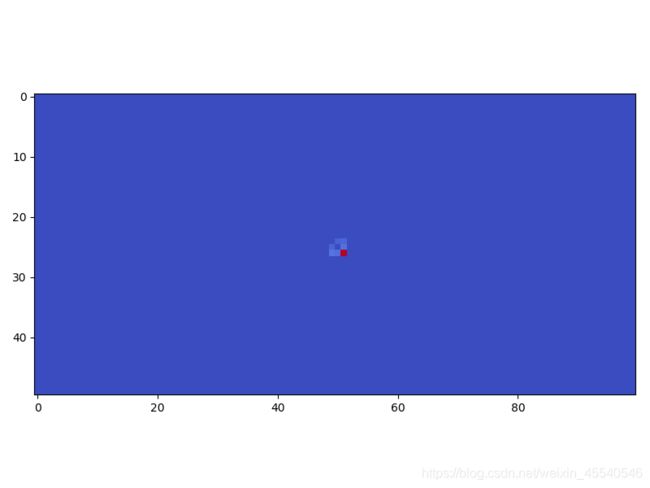

m, n = 50, 100#图象的大小高度、宽度

directions = np.random.rand(m, n, 8)#默认8邻域

if style == 'Direct':

directions[:,:,1] = 10

elif style == 'Sin':

x = np.arange(n)

y_d = np.cos(6*np.pi*x/n)

theta = np.empty_like(x, dtype=np.int)

theta[y_d > 0.5] = 1

theta[~(y_d > 0.5) & (y_d > -0.5)] = 0

theta[~(y_d > -0.5)] = 7

directions[:, x.astype(np.int), theta] = 10

directions[:, :] /= np.sum(directions[:, :])

print(directions)

loc = np.zeros((m, n), dtype=np.float)

loc[int(m/2),int(n/2)] = 1

loc_prime = np.empty_like(loc)

loc_prime = loc

fig = plt.figure(figsize=(8, 6), facecolor='w')

im = plt.imshow(loc/np.max(loc), cmap='coolwarm')

anim = animation.FuncAnimation(fig, update, frames=300, interval=50, blit=True)

plt.tight_layout(1.5)

plt.show()

#!/usr/bin/python

# -*- coding:utf-8 -*-

import numpy as np

from sklearn.naive_bayes import MultinomialNB, BernoulliNB

from sklearn.datasets import fetch_20newsgroups

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.linear_model import RidgeClassifier

from sklearn.neighbors import KNeighborsClassifier

from sklearn.svm import SVC

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import GridSearchCV

from sklearn import metrics

from time import time

from pprint import pprint

import matplotlib.pyplot as plt

import matplotlib as mpl

#测试分类器

def test_clf(clf):

print('分类器:', clf)

alpha_can = np.logspace(-3, 2, 10)

model = GridSearchCV(clf, param_grid={'alpha': alpha_can}, cv=5)

m = alpha_can.size

if hasattr(clf, 'alpha'):#岭回归分类器

model.set_params(param_grid={'alpha': alpha_can})

m = alpha_can.size

if hasattr(clf, 'n_neighbors'):#K近邻分类器

neighbors_can = np.arange(1, 15)

model.set_params(param_grid={'n_neighbors': neighbors_can})

m = neighbors_can.size

if hasattr(clf, 'C'):#SVM

C_can = np.logspace(1, 3, 3)

gamma_can = np.logspace(-3, 0, 3)

model.set_params(param_grid={'C':C_can, 'gamma':gamma_can})

m = C_can.size * gamma_can.size

if hasattr(clf, 'max_depth'):#决策树,随机森林

max_depth_can = np.arange(4, 10)

model.set_params(param_grid={'max_depth': max_depth_can})

m = max_depth_can.size

t_start = time()

model.fit(x_train, y_train)

t_end = time()

t_train = (t_end - t_start) / (5*m)#计算训练时间

print('5折交叉验证的训练时间为:%.3f秒/(5*%d)=%.3f秒' % ((t_end - t_start), m, t_train))

print('最优超参数为:', model.best_params_)

t_start = time()

y_hat = model.predict(x_test)

t_end = time()

t_test = t_end - t_start

print('测试时间:%.3f秒' % t_test)

acc = metrics.accuracy_score(y_test, y_hat)

print('测试集准确率:%.2f%%' % (100 * acc))

name = str(clf).split('(')[0]

index = name.find('Classifier')

if index != -1:

name = name[:index] # 去掉末尾的Classifier

if name == 'SVC':

name = 'SVM'

return t_train, t_test, 1-acc, name

if __name__ == "__main__":

print('开始下载/加载数据...')

t_start = time()

# remove = ('headers', 'footers', 'quotes')

remove = ()

categories = 'alt.atheism', 'talk.religion.misc', 'comp.graphics', 'sci.space'

# categories = None # 若分类所有类别,请注意内存是否够用,下载所有类别

data_train = fetch_20newsgroups(subset='train', categories=categories, shuffle=True, random_state=0, remove=remove)

data_test = fetch_20newsgroups(subset='test', categories=categories, shuffle=True, random_state=0, remove=remove)#shuffle是指是否将下载的数据打乱,remove是否将一部分删除

t_end = time()

print('下载/加载数据完成,耗时%.3f秒' % (t_end - t_start))

print('数据类型:', type(data_train))

print('训练集包含的文本数目:', len(data_train.data))

print('测试集包含的文本数目:', len(data_test.data))

print('训练集和测试集使用的%d个类别的名称:' % len(categories))

categories = data_train.target_names

pprint(categories)

y_train = data_train.target

y_test = data_test.target

print(' -- 前10个文本 -- ')

for i in np.arange(10):

print('文本%d(属于类别 - %s):' % (i+1, categories[y_train[i]]))

print(data_train.data[i])

print('\n\n')

vectorizer = TfidfVectorizer(input='content', stop_words='english', max_df=0.5, sublinear_tf=True)

x_train = vectorizer.fit_transform(data_train.data) # x_train是稀疏的,scipy.sparse.csr.csr_matrix

x_test = vectorizer.transform(data_test.data)

print('训练集样本个数:%d,特征个数:%d' % x_train.shape)

print('停止词:\n', end=' ')

pprint(vectorizer.get_stop_words())

feature_names = np.asarray(vectorizer.get_feature_names())

print('\n\n===================\n分类器的比较:\n')

clfs = (MultinomialNB(), # 0.87(0.017), 0.002, 90.39%

BernoulliNB(), # 1.592(0.032), 0.010, 88.54%

KNeighborsClassifier(), # 19.737(0.282), 0.208, 86.03%

RidgeClassifier(), # 25.6(0.512), 0.003, 89.73%

RandomForestClassifier(n_estimators=200), # 59.319(1.977), 0.248, 77.01%

SVC() # 236.59(5.258), 1.574, 90.10%

)

result = []

for clf in clfs:

a = test_clf(clf)

result.append(a)

print('\n')

result = np.array(result)

time_train, time_test, err, names = result.T

time_train = time_train.astype(np.float)

time_test = time_test.astype(np.float)

err = err.astype(np.float)

x = np.arange(len(time_train))

mpl.rcParams['font.sans-serif'] = ['simHei']

mpl.rcParams['axes.unicode_minus'] = False

plt.figure(figsize=(10, 7), facecolor='w')

ax = plt.axes()

b1 = ax.bar(x, err, width=0.25, color='#77E0A0')

ax_t = ax.twinx()

b2 = ax_t.bar(x+0.25, time_train, width=0.25, color='#FFA0A0')

b3 = ax_t.bar(x+0.5, time_test, width=0.25, color='#FF8080')

plt.xticks(x+0.5, names)

plt.legend([b1[0], b2[0], b3[0]], ('错误率', '训练时间', '测试时间'), loc='upper left', shadow=True)

plt.title('新闻组文本数据不同分类器间的比较', fontsize=18)

plt.xlabel('分类器名称')

plt.grid(True)

plt.tight_layout(2)

plt.show()

示例寻找几个词中的不同词

# etc/bin/python

# -*- encoding: utf-8 -*-

from time import time

from gensim.models import Word2Vec

import sys

import os

import imp

#imp.reload(sys)

#sys.setdefaultencoding('utf-8')

class LoadCorpora(object):

def __init__(self, s):

self.path = s

def __iter__(self):

f = open(self.path,'rb')

for line in f:

yield line.decode().split(' ')

def print_list(a):

for i, s in enumerate(a):

if i != 0:

print('+', end=' ')

print(s, end=' ')

if __name__ == '__main__':

if not os.path.exists('news.model'):

sentences = LoadCorpora('news.dat')

t_start = time()

model = Word2Vec(sentences, size=200, min_count=5, workers=8) # 词向量维度为200,丢弃出现次数少于5次的词

model.save('news.model')

print('OK:', time() - t_start)

model = Word2Vec.load('news.model')

print('词典中词的个数:', len(model.wv.vocab))

for i, word in enumerate(model.wv.vocab):

print(word, end=' ')

if i % 25 == 24:

print()

print()

intrested_words = ('中国', '手机', '学习', '人民', '名义')

print('特征向量:')

for word in intrested_words:

print(word, len(model[word]), model[word])

for word in intrested_words:

result = model.most_similar(word)

print('与', word, '最相近的词:')

for w, s in result:

print('\t', w, s)

words = ('中国', '祖国', '毛泽东', '人民')

for i in range(len(words)):

w1 = words[i]

for j in range(i+1, len(words)):

w2 = words[j]

print('%s 和 %s 的相似度为:%.6f' % (w1, w2, model.similarity(w1, w2)))

print('========================')

opposites = ((['中国', '城市'], ['学生']),

(['男', '工作'], ['女']),

(['俄罗斯', '美国', '英国'], ['日本']))

for positive, negative in opposites:

result = model.most_similar(positive=positive, negative=negative)

print_list(positive)

print('-', end=' ')

print_list(negative)

print(':')

for word, similar in result:

print('\t', word, similar)

print('========================')

words_list = ('苹果 三星 美的 海尔', '中国 日本 韩国 美国 北京',

'医院 手术 护士 医生 感染 福利', '爸爸 妈妈 舅舅 爷爷 叔叔 阿姨 老婆')

for words in words_list:

print(words, '离群词:', model.doesnt_match(words.split(' ')))