Training a digit-string recognition model with YOLOv3 for end-to-end digit-string recognition

YOLOv3 Digit-String Recognition

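Train a string recognition model with YOLOv3 for targets such as license plates, digital-tube (seven-segment) displays, and scale readings. The model recognizes digit strings end to end and quickly, with no separate character-segmentation step.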

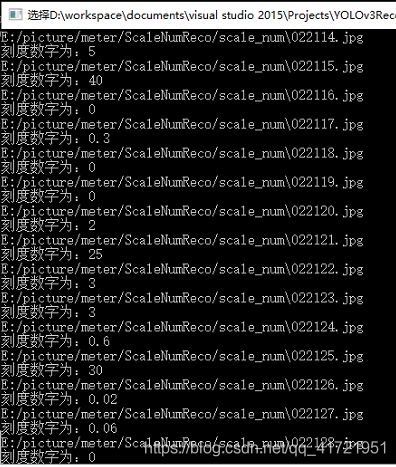
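One plausible way to turn per-character detections into a string is to keep the confident boxes, sort them left to right, and concatenate their class labels. The sketch below illustrates that idea only; the `Detection` struct, its field names, the `detectionsToString` helper, the 0.5 confidence threshold, and the assumption that class IDs 0 to 9 map to the digits '0' to '9' are all hypothetical and are not taken from the original project.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Hypothetical detection record (an assumption, not the project's actual type).
struct Detection {
    int classId;       // assumed: 0..9 correspond to digits '0'..'9'
    float confidence;  // detector score in [0, 1]
    float x;           // left edge of the bounding box, in pixels
    float width;       // width of the bounding box, in pixels
};

// Build a digit string from detections on a single horizontal line of text.
std::string detectionsToString(std::vector<Detection> dets,
                               float minConfidence = 0.5f) {
    // Discard low-confidence boxes and any class that is not a digit.
    dets.erase(std::remove_if(dets.begin(), dets.end(),
                              [minConfidence](const Detection& d) {
                                  return d.confidence < minConfidence ||
                                         d.classId < 0 || d.classId > 9;
                              }),
               dets.end());

    // Order the remaining boxes by horizontal center, left to right.
    std::stable_sort(dets.begin(), dets.end(),
                     [](const Detection& a, const Detection& b) {
                         return a.x + a.width / 2 < b.x + b.width / 2;
                     });

    // Concatenate the digit for each box in reading order.
    std::string result;
    result.reserve(dets.size());
    for (const Detection& d : dets) {
        result.push_back(static_cast<char>('0' + d.classId));
    }
    return result;
}
```

Sorting by box center rather than left edge makes the ordering slightly more tolerant of boxes with uneven widths. A real pipeline would usually also need non-maximum suppression and handling for extra symbols such as a decimal point, both of which this sketch omits.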

Call the model from C++ to output the recognized string value directly:

#include