PyTorch Deep Learning Practice (9): Introduction to Convolutional Neural Networks

文章目录

- 0 写在前面

- 1 卷积层

- 2 下采样

- 3 卷积和下采样

- 4 输出是十分类的问题

- 5 特征提取器

- 6 卷积层

-

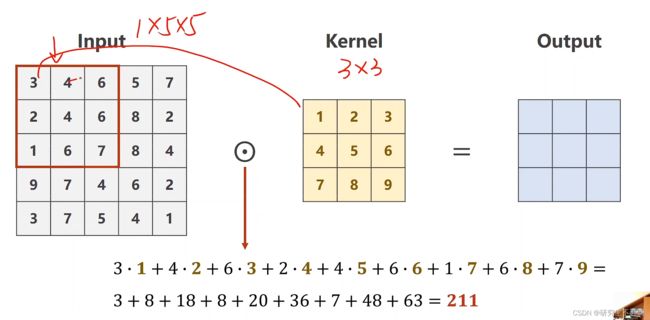

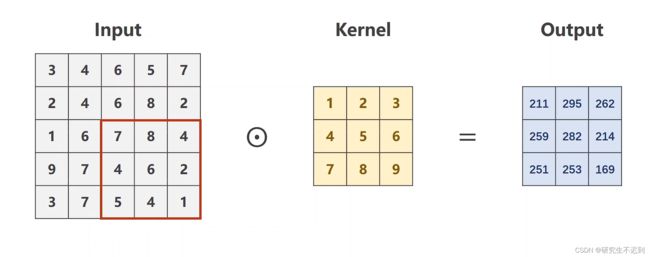

- 6.1 单通道卷积

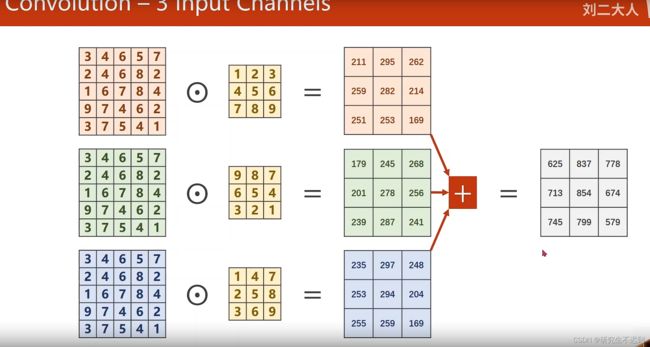

- 6.2 多通道卷积

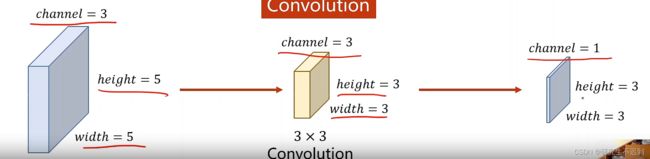

- 6.3 卷积输出

- 7 卷积核的维度确定

- 8 局部感知域(过滤器)

- 9 卷积层代码实现

- 10 填充padding

- 11 定义模型

- 12 完整代码

0 Preface

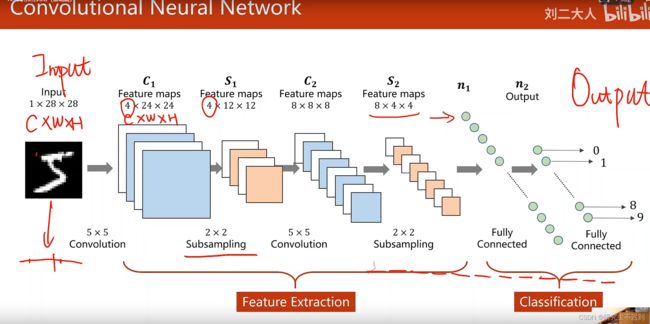

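- In a traditional neural network, every node of the input layer is connected to every node of the hidden layer. A convolutional neural network instead uses "local perception": each input node is no longer connected to every neuron in the hidden layer.

- Fully connected layers: a network built by chaining linear layers together is a fully connected network.

- A convolutional neural network uses convolutional layers in between. A fully connected layer flattens the C×W×H input into one-dimensional data; a convolutional layer keeps the original three dimensions, so the spatial information in the data is not lost.

- Recognition with a convolutional neural network mimics the visual processing mechanism of the human brain and extracts features level by level.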
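The difference between flattening and keeping the three dimensions can be seen directly in tensor shapes. The following is a minimal sketch; the batch of two 3×32×32 images and the layer sizes are assumptions chosen only for illustration.

```python
import torch

# Hypothetical batch: 2 RGB images of 32x32 pixels, laid out as (N, C, H, W).
images = torch.randn(2, 3, 32, 32)

# A fully connected layer needs each sample flattened into a 1-D vector,
# so the pixels are no longer arranged on a 2-D grid.
fc = torch.nn.Linear(3 * 32 * 32, 16)
fc_out = fc(images.view(images.size(0), -1))

# A convolutional layer consumes the (C, H, W) layout directly and its
# output is still a stack of 2-D feature maps.
conv = torch.nn.Conv2d(in_channels=3, out_channels=16, kernel_size=3)
conv_out = conv(images)

print(fc_out.shape)    # torch.Size([2, 16])
print(conv_out.shape)  # torch.Size([2, 16, 30, 30])
```

The fully connected output has no spatial axes left, while the convolutional output still has a height and a width.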

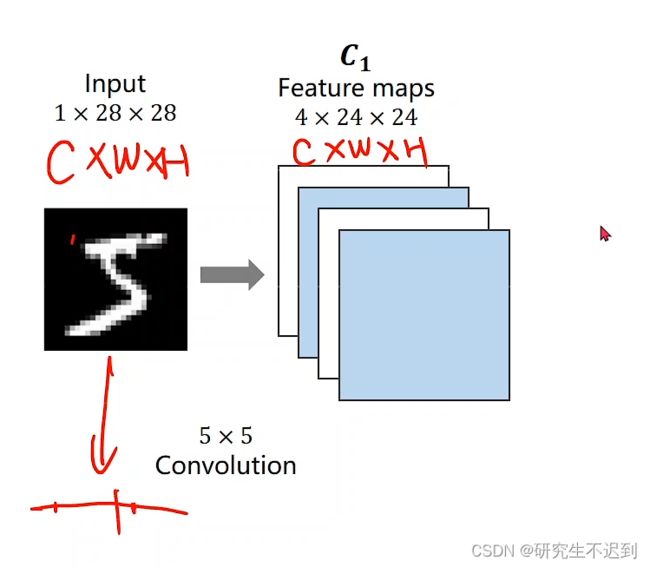

1 Convolutional layer

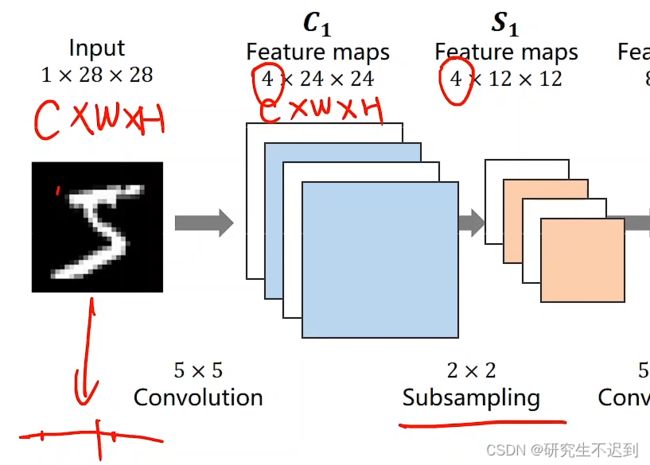

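2 Downsampling

- Purpose: reduce the amount of data in the feature map (feature matrix) and lower the computational load.

- Downsampling leaves the number of channels unchanged; only the width and height shrink.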
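A quick way to check both points is to run a 2×2 max pooling over a feature map and compare shapes. This is a small sketch; the 10×24×24 feature map is an assumed size for illustration.

```python
import torch

# Assumed feature map: 1 sample, 10 channels, 24x24 pixels (N, C, H, W).
feature_map = torch.randn(1, 10, 24, 24)

# 2x2 max pooling; the stride defaults to the kernel size.
pool = torch.nn.MaxPool2d(kernel_size=2)
pooled = pool(feature_map)

print(feature_map.shape)  # torch.Size([1, 10, 24, 24])
print(pooled.shape)       # torch.Size([1, 10, 12, 12])
print(feature_map.numel(), "->", pooled.numel())  # 5760 -> 1440
```

The channel count stays at 10, while the number of values drops to a quarter.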

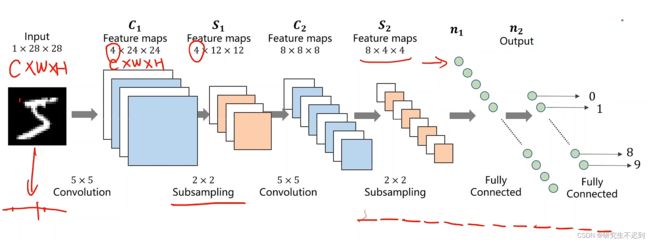

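3 Convolution and downsampling

- The purpose of pooling is to reduce dimensionality; it is in fact downsampling.

- First apply a 5×5 convolution, followed by a 2×2 downsampling;

- then apply another 5×5 convolution, followed by another 2×2 downsampling.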
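The two convolution and downsampling steps above can be traced shape by shape. In this sketch the 1×28×28 input (an MNIST-sized image) and the channel counts 4 and 8 are assumptions, picked so that the final feature map is the 8×4×4 used in section 4.

```python
import torch

x = torch.randn(1, 1, 28, 28)                 # assumed input: one 1x28x28 image
conv1 = torch.nn.Conv2d(1, 4, kernel_size=5)  # 5x5 convolution, 1 -> 4 channels (assumed)
conv2 = torch.nn.Conv2d(4, 8, kernel_size=5)  # 5x5 convolution, 4 -> 8 channels (assumed)
pool = torch.nn.MaxPool2d(2)                  # 2x2 downsampling

shapes = []
for name, layer in [("conv1", conv1), ("pool1", pool),
                    ("conv2", conv2), ("pool2", pool)]:
    x = layer(x)
    shapes.append(tuple(x.shape))
    print(name, tuple(x.shape))
# conv1 (1, 4, 24, 24)
# pool1 (1, 4, 12, 12)
# conv2 (1, 8, 8, 8)
# pool2 (1, 8, 4, 4)
```

Each 5×5 convolution without padding removes 4 pixels from the width and height, and each 2×2 pooling halves them.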

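4 A ten-class classification output

- From $S_2$ to $n_1$, the $8 \times 4 \times 4$ feature maps are flattened into one-dimensional data; a linear (fully connected) layer then maps $n_1$ to a 10-dimensional output.

- Cross-entropy is then used to compute the loss, and the network can be trained as usual.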
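The flatten, linear, and loss steps can be sketched as follows. The batch size, the random stand-in for the $S_2$ feature maps, and the labels are made up for illustration only.

```python
import torch

batch_size = 3
s2 = torch.randn(batch_size, 8, 4, 4)   # random stand-in for the S2 feature maps
labels = torch.tensor([0, 7, 9])        # made-up class indices in [0, 9]

# Flatten 8x4x4 into a vector of 128 values per sample.
n1 = s2.view(batch_size, -1)

# Linear layer mapping the 128 features to 10 class scores.
fc = torch.nn.Linear(8 * 4 * 4, 10)
logits = fc(n1)

# CrossEntropyLoss applies log-softmax internally, so it takes raw scores.
criterion = torch.nn.CrossEntropyLoss()
loss = criterion(logits, labels)

print(n1.shape)      # torch.Size([3, 128])
print(logits.shape)  # torch.Size([3, 10])
print(loss.item())   # a positive scalar; the value depends on the random init
```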

5 Feature extractor

6 Convolutional layer

6.1 Single-channel convolution

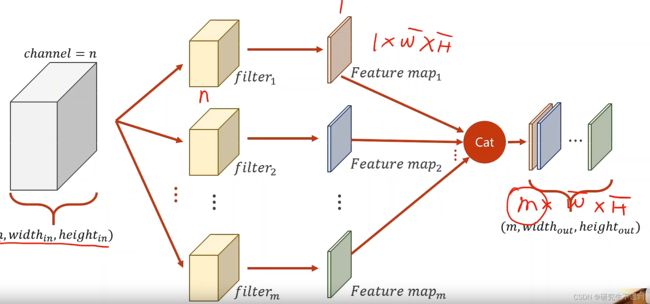

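6.2 Multi-channel convolution

6.3 Convolution output

7 Determining the kernel dimensions

- The number of channels in each kernel must match the number of channels in the input.

- The number of kernels determines (equals) the number of output channels.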
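Both rules can be checked with `torch.nn.functional.conv2d`, which takes the kernel tensor explicitly. This is a sketch with assumed sizes (a 3-channel 5×5 input and two 3×3 kernels); it also rebuilds one output channel by hand as the sum of per-channel convolutions.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.randn(1, 3, 5, 5)        # one input with 3 channels of 5x5 (assumed)
weight = torch.randn(2, 3, 3, 3)   # 2 kernels, each with 3 channels of 3x3

out = F.conv2d(x, weight)
print(out.shape)  # torch.Size([1, 2, 3, 3]): 2 kernels -> 2 output channels

# Rebuild output channel 0 by hand: convolve every input channel with the
# matching channel of kernel 0, then add the three results together.
manual = sum(
    F.conv2d(x[:, c:c + 1], weight[0:1, c:c + 1])
    for c in range(3)
)
matches = torch.allclose(out[:, 0:1], manual, atol=1e-4)
print(matches)  # expected True, up to floating-point tolerance
```

A kernel whose channel count differs from the input (for example a `weight` of shape `(2, 4, 3, 3)` here) would make `F.conv2d` raise an error.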

8 Local receptive field (filter)

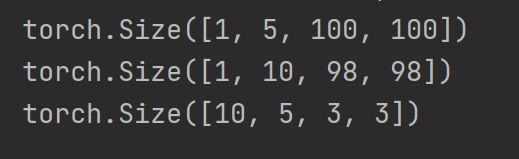

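9 Convolutional layer implementation

- The weight of the convolutional layer has shape (10, 5, 3, 3): 10 is the number of output channels, 5 is the number of input channels, and 3×3 is the kernel size.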

import torch

in_channels, out_channels = 5, 10
width, height = 100, 100  # input width and height
kernel_size = 3           # kernel size
batch_size = 1

# PyTorch expects image batches laid out as (N, C, H, W)
x = torch.randn(batch_size, in_channels, height, width)

conv_layer = torch.nn.Conv2d(in_channels, out_channels, kernel_size=kernel_size)
output = conv_layer(x)

print(x.shape)                  # torch.Size([1, 5, 100, 100])
print(output.shape)             # torch.Size([1, 10, 98, 98])
print(conv_layer.weight.shape)  # torch.Size([10, 5, 3, 3])

- So defining a convolutional layer requires only the number of input channels, the number of output channels, and the kernel size.
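Because the width and height of the input are not part of the layer definition, one layer can process inputs of different spatial sizes. A small sketch, where the second input size (32×48) is an arbitrary choice for illustration:

```python
import torch

conv_layer = torch.nn.Conv2d(in_channels=5, out_channels=10, kernel_size=3)

# The same layer accepts any height and width, as long as there are 5 channels.
out_shapes = []
for height, width in [(100, 100), (32, 48)]:
    x = torch.randn(1, 5, height, width)
    out_shapes.append(tuple(conv_layer(x).shape))
    print(tuple(x.shape), "->", out_shapes[-1])
# (1, 5, 100, 100) -> (1, 10, 98, 98)
# (1, 5, 32, 48) -> (1, 10, 30, 46)

# The parameter count depends only on channels and kernel size:
# 10 * 5 * 3 * 3 weights + 10 biases = 460.
n_params = sum(p.numel() for p in conv_layer.parameters())
print(n_params)  # 460
```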

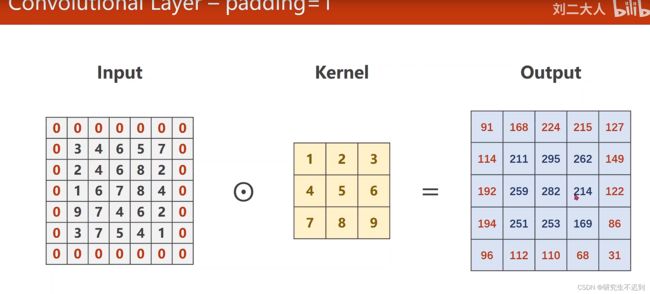

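10 Padding

- Recall that with kernel=3, even when stride=1, the feature map becomes smaller after convolution.

- This is because the filter stops once it reaches the edge, so pixels in the middle take part in more computations than pixels at the edge.

- As a result, the closer a pixel is to the edge, the less it influences the output. To take the edge information into account, we can pad around the border; this is padding, and the padded values are usually 0.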
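The effect of padding on the output size can be sketched as below. The helper `conv_out_size` is a hypothetical name introduced here; it implements the standard output-size formula $\lfloor (n + 2p - k)/s \rfloor + 1$ for input size $n$, kernel size $k$, padding $p$ and stride $s$.

```python
import torch

x = torch.randn(1, 1, 5, 5)  # assumed 5x5 single-channel input

# kernel_size=3 without padding shrinks 5x5 to 3x3.
no_pad = torch.nn.Conv2d(1, 1, kernel_size=3, bias=False)
# padding=1 adds one ring of zeros, so the 5x5 size is kept.
with_pad = torch.nn.Conv2d(1, 1, kernel_size=3, padding=1, bias=False)

def conv_out_size(n, k, p=0, s=1):
    """Hypothetical helper: output size along one spatial axis."""
    return (n + 2 * p - k) // s + 1

print(no_pad(x).shape)    # torch.Size([1, 1, 3, 3])
print(with_pad(x).shape)  # torch.Size([1, 1, 5, 5])
print(conv_out_size(5, 3), conv_out_size(5, 3, p=1))  # 3 5
```

For a 3×3 kernel a padding of 1 keeps the size; for a 5×5 kernel the same formula gives a padding of 2.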

11 Defining the model

import torch
import torch.nn.functional as F


class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = torch.nn.Conv2d(1, 10, (5, 5))
        self.conv2 = torch.nn.Conv2d(10, 20, (5, 5))
        self.maxpooling = torch.nn.MaxPool2d(2)
        self.fc = torch.nn.Linear(320, 10)

    def forward(self, x):
        # x has shape (n, 1, 28, 28); read the mini-batch size n
        batch_size = x.size(0)
        x = F.relu(self.maxpooling(self.conv1(x)))  # (n, 10, 12, 12)
        x = F.relu(self.maxpooling(self.conv2(x)))  # (n, 20, 4, 4)
        # Flatten each sample into one row: (n, 20, 4, 4) -> (n, 320)
        x = x.view(batch_size, -1)
        x = self.fc(x)  # (n, 10) class scores
        return x
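The 320 passed to `torch.nn.Linear` is 20×4×4, the size of the feature maps after the second pooling. One way to avoid computing it by hand is to push a dummy input through the convolution and pooling layers; the sketch below does this outside the class, assuming a 1×28×28 input as in MNIST.

```python
import torch
import torch.nn.functional as F

conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)
conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)
pool = torch.nn.MaxPool2d(2)

# Pass a dummy 1x28x28 image through the feature-extraction layers only.
with torch.no_grad():
    dummy = torch.zeros(1, 1, 28, 28)
    features = F.relu(pool(conv2(F.relu(pool(conv1(dummy))))))

flat_features = features.view(1, -1).size(1)
print(tuple(features.shape))  # (1, 20, 4, 4)
print(flat_features)          # 320

# The classifier can then be sized from the measured value.
fc = torch.nn.Linear(flat_features, 10)
```

If the kernel sizes or the input resolution change, the measured value changes with them and the linear layer stays consistent.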

12 Complete code

- I will write a separate blog post analyzing the LeNet convolutional network model; if you are interested, follow the link below.

https://blog.csdn.net/weixin_42521185/article/details/123789635


import matplotlib.pyplot as plt
import torch
import torch.nn.functional as F
import torch.optim as optim
from torch.utils.data import DataLoader
from torchvision import datasets
from torchvision import transforms

transform = transforms.Compose([
    # Convert the image to a (C, H, W) tensor with values in [0, 1]
    transforms.ToTensor(),
    # Mean and standard deviation of MNIST, computed in advance
    transforms.Normalize((0.1307,), (0.3081,)),
])

batch_size = 64

train_dataset = datasets.MNIST(root='../dataset/mnist',
                               train=True,
                               download=True,
                               transform=transform)
train_loader = DataLoader(train_dataset,
                          shuffle=True,
                          batch_size=batch_size)

test_dataset = datasets.MNIST(root='../dataset/mnist',
                              train=False,
                              download=False,
                              transform=transform)
test_loader = DataLoader(test_dataset,
                         shuffle=False,
                         batch_size=batch_size)


class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = torch.nn.Conv2d(1, 10, (5, 5))
        self.conv2 = torch.nn.Conv2d(10, 20, (5, 5))
        self.maxpooling = torch.nn.MaxPool2d(2)
        self.fc = torch.nn.Linear(320, 10)

    def forward(self, x):
        # x has shape (n, 1, 28, 28); read the mini-batch size n
        batch_size = x.size(0)
        x = F.relu(self.maxpooling(self.conv1(x)))  # (n, 10, 12, 12)
        x = F.relu(self.maxpooling(self.conv2(x)))  # (n, 20, 4, 4)
        # Flatten each sample into one row: (n, 20, 4, 4) -> (n, 320)
        x = x.view(batch_size, -1)
        x = self.fc(x)
        return x


# Use the GPU when one is available
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

model = Net()
model.to(device)

criterion = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)


def train(epoch):
    running_loss = 0.0
    # batch_idx runs from 0 to 937 (938 batches): 60000 samples with batch size 64
    for batch_idx, data in enumerate(train_loader, 0):
        x, y = data
        x, y = x.to(device), y.to(device)  # move the batch to the device
        optimizer.zero_grad()

        y_pred = model(x)
        loss = criterion(y_pred, y)  # cross-entropy loss
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        if batch_idx % 300 == 299:
            # Report the mean loss over the last 300 batches
            print("[%d,%5d] loss:%.3f" % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0


def test():
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_loader:
            x, y = data
            x, y = x.to(device), y.to(device)
            y_pred = model(x)
            # The index of the largest score is the predicted class
            _, predicted = torch.max(y_pred, dim=1)
            total += y.size(0)
            correct += (predicted == y).sum().item()
    accuracy = 100 * correct / total
    print('accuracy on test set:%d%% [%d/%d]' % (accuracy, correct, total))
    return accuracy


if __name__ == '__main__':
    accuracy_list = []
    for epoch in range(10):
        train(epoch)
        accuracy_list.append(test())

    # Plot test accuracy against the epoch number (1 to 10)
    plt.plot(range(1, len(accuracy_list) + 1), accuracy_list)
    plt.xlabel('epoch')
    plt.ylabel('accuracy')
    plt.show()