TensorFlow: A Summary of the VGG-net Training Process

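Preface: I originally leaned toward data-mining methods, but the job I ended up with focuses more on CV and NLP, so I am gradually moving in that direction. If anything here is wrong, corrections are welcome.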

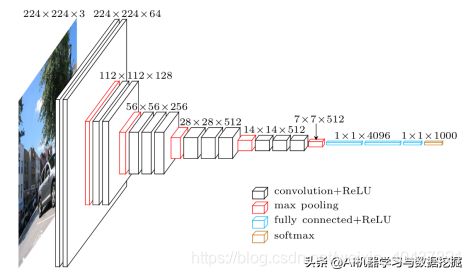

1. Introduction to VGG-net

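VGG-net is a deep convolutional neural network developed by the University of Oxford together with DeepMind. At ILSVRC 2014 it took second place in the classification task, bringing the top-5 error rate down to 7.3%; first place that year went to the well-known GoogLeNet, which I will cover in detail when I have time. VGG-net generalizes very well and performs well on many different datasets. Even now, in 2019, VGG is still widely used as a feature extractor, and it is friendly to newcomers because its structure is simple (no complicated modules).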

2. Questions and Answers

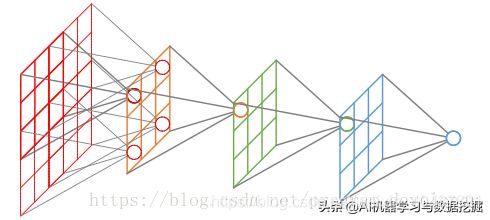

(1) What is a receptive field?

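In a convolutional neural network, the receptive field of a layer is the size of the region of the input image that a single pixel of that layer's output feature map is mapped back to. Put more simply, it is the region of the input image that one point on the feature map "sees", as shown in the figure.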
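The definition can be made concrete with the usual layer-by-layer recurrence: if `j` is the distance (in input pixels) between two neighbouring pixels of the current feature map, a k×k layer with stride s grows the receptive field by `(k - 1) * j` and multiplies `j` by `s`. A minimal pure-Python sketch, assuming square kernels and ignoring padding (padding shifts where the region lies, not its size); the function name `receptive_field` is hypothetical:

```python
def receptive_field(layers):
    """Receptive field (side length, in input pixels) of one output pixel.

    layers: list of (kernel_size, stride) tuples, applied in order.
    Recurrence: r_out = r_in + (k - 1) * j_in, j_out = j_in * s,
    where j is the spacing between adjacent output pixels measured in input pixels.
    """
    r, j = 1, 1  # an input pixel "sees" only itself; input pixels are 1 apart
    for k, s in layers:
        r = r + (k - 1) * j
        j = j * s
    return r


print(receptive_field([(3, 1)]))      # 3
print(receptive_field([(3, 1)] * 2))  # 5: same as one 5x5 conv
print(receptive_field([(3, 1)] * 3))  # 7: same as one 7x7 conv
# After a 2x2 / stride-2 pooling, every further 3x3 conv adds (3 - 1) * 2 = 4 pixels
print(receptive_field([(3, 1), (3, 1), (2, 2), (3, 1)]))  # 10
```

This is why stacking small kernels, plus pooling between blocks, lets deep layers of VGG cover large parts of the input.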

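(2) Why are two consecutive 3×3 convolution kernels said to be equivalent to one 5×5 kernel, and three consecutive 3×3 kernels equivalent to one 7×7 kernel?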

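First, why use smaller kernels at all? A convolution kernel is shared across all positions of an image, so a smaller kernel means fewer parameters. A smaller kernel, however, also has a smaller receptive field. To give 3×3 kernels the same receptive field as a 5×5 kernel, VGG replaces one 5×5 convolution with two consecutive 3×3 convolutions, which cuts the number of weights (per input/output channel pair) from 5×5 = 25 to 2×3×3 = 18. Likewise, three 3×3 layers need 3×3×3 = 27 weights instead of the 49 of a single 7×7 layer. I found some explanations of this question online but did not quite follow them, so here is my own understanding: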
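With C input and C output channels, a k×k convolution has k·k·C·C weights, so the 18/25 (and 27/49) ratio holds for every layer regardless of C. A quick pure-Python check; the channel count C = 64 is only an illustrative assumption and the helper name `conv_params` is hypothetical:

```python
def conv_params(k, c_in, c_out, bias=False):
    """Number of parameters of one k x k convolution layer (optionally with biases)."""
    return k * k * c_in * c_out + (c_out if bias else 0)


C = 64  # illustrative channel count, assumed equal for input and output
one_5x5 = conv_params(5, C, C)
two_3x3 = 2 * conv_params(3, C, C)
one_7x7 = conv_params(7, C, C)
three_3x3 = 3 * conv_params(3, C, C)

print(one_5x5, two_3x3)    # 102400 73728
print(one_7x7, three_3x3)  # 200704 110592
```

Besides the savings, each extra 3×3 layer adds another ReLU, so the stacked version is also more non-linear than a single large kernel.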

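Use VALID padding. Suppose the input image is w×w (without loss of generality, 32×32), the kernel is k×k, the stride is S, the number of padded pixels on each side is P (0 for VALID), and the output is N×N. Then N = (w - k + 2P)/S + 1.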

1) For a 5×5 kernel with stride S = 1 and P = 0, the output side length is (32 - 5 + 2×0)/1 + 1 = 28, i.e. a 28×28 output.

2) For a 3×3 kernel with the same settings, the output is 30×30; applying another 3×3 kernel to that 30×30 output gives 28×28.

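Therefore two consecutive 3×3 convolutions produce an output of the same size as one 5×5 convolution, and each output pixel covers a 5×5 region of the input, but with fewer parameters. By the same reasoning, three 3×3 convolutions match one 7×7 convolution (32 → 30 → 28 → 26, versus 32 - 7 + 1 = 26).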
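The output-size formula N = (w - k + 2P)/S + 1 can be checked directly. A small pure-Python sketch (integer division, which matches VALID padding when the stride does not divide evenly); `conv_output_size` is a hypothetical helper name:

```python
def conv_output_size(w, k, s=1, p=0):
    """Side length N = (w - k + 2P) / S + 1 of a k x k convolution's output."""
    return (w - k + 2 * p) // s + 1


one_5x5 = conv_output_size(32, 5)
two_3x3 = conv_output_size(conv_output_size(32, 3), 3)
one_7x7 = conv_output_size(32, 7)
three_3x3 = conv_output_size(conv_output_size(conv_output_size(32, 3), 3), 3)

print(one_5x5, two_3x3)    # 28 28
print(one_7x7, three_3x3)  # 26 26
```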

3. VGG-net Network Architecture
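As a compact reference, the sketch below writes the VGG-16 layout (configuration D of the VGG paper: 13 3×3 conv layers in five blocks, each block followed by 2×2 max pooling, then 3 fully connected layers) as a Python list. It traces the spatial size for a 224×224 ImageNet input and for a 32×32 CIFAR-10 input. The list encoding and the `trace` helper are my own illustration. It assumes SAME-padded stride-1 convolutions and 2×2 stride-2 pooling, as in the training code below. That code skips the fifth pooling layer, which is why it flattens a 2×2×512 map.

```python
# VGG-16 (configuration D): numbers are 3x3 conv output channels, 'M' is 2x2/2 max pooling
VGG16 = [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M',
         512, 512, 512, 'M', 512, 512, 512, 'M']


def trace(cfg, size, channels=3):
    """Return (height, width, channels) after the convolutional part.

    SAME-padded stride-1 convs keep the size; each 2x2/2 pooling halves it,
    rounding up like TensorFlow's SAME padding."""
    for layer in cfg:
        if layer == 'M':
            size = (size + 1) // 2
        else:
            channels = layer
    return size, size, channels


n_conv = sum(1 for layer in VGG16 if layer != 'M')
print(n_conv, "conv layers + 3 FC layers =", n_conv + 3, "weight layers")
print(trace(VGG16, 224))      # (7, 7, 512): 7*7*512 = 25088 inputs to the first FC layer
print(trace(VGG16, 32))       # (1, 1, 512)
print(trace(VGG16[:-1], 32))  # (2, 2, 512): fifth pooling skipped, as in the code below
```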

4. Training Process

(1) Import the required packages and set the model parameters

```python
# -*- coding:utf-8 -*-
import tensorflow as tf  # TensorFlow 1.x (tf.placeholder, tf.Session, tf.contrib)
import numpy as np
import time
import os
import sys
import random
import pickle
import tarfile

class_num = 10
image_size = 32
img_channels = 3
batch_size = 250
iterations = 200       # training set: 50000 = 250 * 200
total_epoch = 200
weight_decay = 0.0003
dropout_rate = 0.5     # fed as keep_prob during training
momentum_rate = 0.9
log_save_path = './vgg_16_logs'
model_save_path = './model/'
```

(2) Download the dataset

```python
def download_data():
    dirname = 'CAFIR-10_data/cifar-10-python'  # folder after extraction
    origin = 'http://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz'
    fname = './CAFIR-10_data/cifar-10-python.tar.gz'
    # fname = './CAFIR-10_data'
    fpath = './' + dirname
    download = False
    if os.path.exists(fpath) or os.path.isfile(fname):
        download = False
        print("DataSet already exist!")
        # Extract only if the archive itself is present
        if os.path.isfile(fname):
            if fname.endswith("tar.gz"):
                with tarfile.open(fname, "r:gz") as tar:
                    tar.extractall()
                # tarfile.open(fpath, "r:gz").extractall(fname)
            elif fname.endswith("tar"):
                with tarfile.open(fname, "r:") as tar:
                    tar.extractall()
    else:
        download = True

    if download:
        print('Downloading data from', origin)
        import urllib.request

        def reporthook(count, block_size, total_size):
            global start_time
            if count == 0:
                start_time = time.time()
                return
            duration = max(time.time() - start_time, 1e-6)  # avoid division by zero
            progress_size = int(count * block_size)
            speed = int(progress_size / (1024 * duration))
            percent = min(int(count * block_size * 100 / total_size), 100)
            sys.stdout.write("\r...%d%%, %d MB, %d KB/s, %d seconds passed" %
                             (percent, progress_size / (1024 * 1024), speed, duration))
            sys.stdout.flush()

        # urlretrieve does not create missing directories
        os.makedirs(os.path.dirname(fname), exist_ok=True)
        urllib.request.urlretrieve(origin, fname, reporthook)
        print('Download finished. Start extract!', origin)
        if fname.endswith("tar.gz"):
            with tarfile.open(fname, "r:gz") as tar:
                tar.extractall()
        elif fname.endswith("tar"):
            with tarfile.open(fname, "r:") as tar:
                tar.extractall()
```

```python
def unpickle(file):
    with open(file, 'rb') as fo:
        data_dict = pickle.load(fo, encoding='bytes')
    return data_dict


def load_data_one(file):
    batch = unpickle(file)
    data = batch[b'data']
    labels = batch[b'labels']
    print("Loading %s : %d." % (file, len(data)))
    return data, labels


def load_data(files, data_dir, label_count):
    data, labels = load_data_one(data_dir + '/' + files[0])
    for f in files[1:]:
        data_n, labels_n = load_data_one(data_dir + '/' + f)
        data = np.append(data, data_n, axis=0)
        labels = np.append(labels, labels_n, axis=0)
    # One-hot encode the integer labels
    labels = np.array([[float(i == label) for i in range(label_count)] for label in labels])
    # (N, 3072) -> (N, C, H, W) -> (N, H, W, C)
    data = data.reshape([-1, img_channels, image_size, image_size])
    data = data.transpose([0, 2, 3, 1])
    return data, labels
```
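CIFAR-10's Python batches store each image as one row of 3072 bytes: 1024 red values, then 1024 green, then 1024 blue, each plane in row-major order. That is why `load_data` reshapes to (N, 3, 32, 32) and then transposes to the channels-last (N, 32, 32, 3) layout that `tf.nn.conv2d` uses by default. A NumPy sketch on made-up rows (the fake `data` array stands in for `batch[b'data']`) also shows that `np.eye(...)[labels]` builds the same one-hot matrix as the list comprehension:

```python
import numpy as np

image_size, img_channels, label_count = 32, 3, 10

# Two fake images: every red value 200, green 100, blue 50
data = np.zeros((2, 3072), dtype=np.uint8)
data[:, :1024] = 200      # red plane
data[:, 1024:2048] = 100  # green plane
data[:, 2048:] = 50       # blue plane
labels = [3, 7]

# Same layout change as load_data()
images = data.reshape([-1, img_channels, image_size, image_size]).transpose([0, 2, 3, 1])
print(images.shape)     # (2, 32, 32, 3)
print(images[0, 0, 0])  # one pixel now holds its R, G, B values: 200, 100, 50

# One-hot labels: row k of the identity matrix is the encoding of class k
one_hot = np.eye(label_count)[labels]
print(one_hot[1].argmax())  # 7
```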

```python
def prepare_data():
    print("======Loading data======")
    download_data()
    # data_dir = './CAFIR-10_data/cifar-10-python/cifar-10-batches-py'
    data_dir = './cifar-10-batches-py'  # folder created by extracting the archive
    image_dim = image_size * image_size * img_channels
    meta = unpickle(data_dir + '/batches.meta')

    label_names = meta[b'label_names']
    label_count = len(label_names)
    train_files = ['data_batch_%d' % d for d in range(1, 6)]
    train_data, train_labels = load_data(train_files, data_dir, label_count)
    test_data, test_labels = load_data(['test_batch'], data_dir, label_count)

    print("Train data:", np.shape(train_data), np.shape(train_labels))
    print("Test data :", np.shape(test_data), np.shape(test_labels))
    print("======Load finished======")

    print("======Shuffling data======")
    indices = np.random.permutation(len(train_data))
    train_data = train_data[indices]
    train_labels = train_labels[indices]
    print("======Prepare Finished======")

    return train_data, train_labels, test_data, test_labels
```
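Shuffling works because the images and the labels are indexed with the *same* permutation, so each image keeps its label. A tiny NumPy check on made-up data (it uses the `Generator.permutation` API instead of `np.random.permutation`; both return a shuffled `arange`):

```python
import numpy as np

rng = np.random.default_rng(42)
data = np.array([[10, 11], [20, 21], [30, 31], [40, 41]])  # made-up "images", one per class
labels = np.eye(4)                                          # one-hot: row i is class i

indices = rng.permutation(len(data))  # one permutation shared by both arrays
data_shuffled = data[indices]
labels_shuffled = labels[indices]

# Image of class i starts with 10 * (i + 1), so the pairing can be checked directly
for img, lab in zip(data_shuffled, labels_shuffled):
    print(img, lab.argmax())
```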

```python
def bias_variable(shape):
    initial = tf.constant(0.1, shape=shape, dtype=tf.float32)
    return tf.Variable(initial)


def conv2d(x, W):
    # Stride 1 with SAME padding: the spatial size is preserved
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')


def max_pool(input, k_size=1, stride=1, name=None):
    return tf.nn.max_pool(input, ksize=[1, k_size, k_size, 1], strides=[1, stride, stride, 1],
                          padding='SAME', name=name)


def batch_norm(input):
    # train_flag is the bool placeholder defined in the main block;
    # updates_collections=None updates the moving mean/variance in place
    return tf.contrib.layers.batch_norm(input, decay=0.9, center=True, scale=True, epsilon=1e-3,
                                        is_training=train_flag, updates_collections=None)
```
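For a rough mental model of what `batch_norm` computes with these arguments, here is a simplified NumPy sketch. It is not TensorFlow's implementation, and the function names are hypothetical. With `is_training=True`, each channel is normalized with the statistics of the current batch and the moving averages are updated with `decay=0.9`. With `is_training=False`, as in the test pass, the stored moving averages are used instead:

```python
import numpy as np


def batch_norm_train(x, gamma, beta, moving_mean, moving_var, decay=0.9, eps=1e-3):
    """Training mode: normalize per channel (over every axis except the last)
    with the batch statistics, and update the moving averages."""
    axes = tuple(range(x.ndim - 1))
    mean, var = x.mean(axis=axes), x.var(axis=axes)
    y = gamma * (x - mean) / np.sqrt(var + eps) + beta
    moving_mean = decay * moving_mean + (1 - decay) * mean
    moving_var = decay * moving_var + (1 - decay) * var
    return y, moving_mean, moving_var


def batch_norm_infer(x, gamma, beta, moving_mean, moving_var, eps=1e-3):
    """Inference mode: use the stored moving averages instead of batch statistics."""
    return gamma * (x - moving_mean) / np.sqrt(moving_var + eps) + beta


rng = np.random.default_rng(0)
x = 5.0 * rng.standard_normal((8, 4, 4, 3)) + 2.0  # fake NHWC activations, 3 channels
gamma, beta = np.ones(3), np.zeros(3)               # initial scale and offset
y, mm, mv = batch_norm_train(x, gamma, beta, np.zeros(3), np.ones(3))
print(y.mean(axis=(0, 1, 2)))  # close to 0 for every channel
print(y.std(axis=(0, 1, 2)))   # close to 1 for every channel
print(mm)                      # 0.1 * batch mean: moving averages adapt slowly
```

The slow update of the moving averages is one reason the test accuracy can lag behind the training accuracy early in training.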

```python
# Random crop, used by the data augmentation below
def _random_crop(batch, crop_shape, padding=None):
    oshape = np.shape(batch[0])
    if padding:
        oshape = (oshape[0] + 2 * padding, oshape[1] + 2 * padding)
    new_batch = []
    npad = ((padding, padding), (padding, padding), (0, 0))
    for i in range(len(batch)):
        new_batch.append(batch[i])
        if padding:
            new_batch[i] = np.pad(batch[i], pad_width=npad,
                                  mode='constant', constant_values=0)
        nh = random.randint(0, oshape[0] - crop_shape[0])
        nw = random.randint(0, oshape[1] - crop_shape[1])
        new_batch[i] = new_batch[i][nh:nh + crop_shape[0],
                                    nw:nw + crop_shape[1]]
    return new_batch


def _random_flip_leftright(batch):
    # Copy first: batch is a slice (view) of train_x, and flipping it in place
    # would permanently modify the training set
    batch = np.copy(batch)
    for i in range(len(batch)):
        if bool(random.getrandbits(1)):
            batch[i] = np.fliplr(batch[i])
    return batch
```
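Padding by 4 pixels and cropping back to 32×32 amounts to a random shift of up to 4 pixels in each direction (zeros fill the uncovered border), combined with a random mirror. A compact per-image sketch using NumPy's `Generator` API; `augment_one` is a hypothetical name, and unlike the batch functions it always returns a new array:

```python
import numpy as np


def augment_one(img, rng, pad=4):
    """Random horizontal flip, then zero-pad by `pad` pixels and crop back
    to the original size. img: (H, W, C) array; the input is not modified."""
    h, w = img.shape[:2]
    if rng.integers(2):  # flip with probability 1/2
        img = img[:, ::-1]
    padded = np.pad(img, ((pad, pad), (pad, pad), (0, 0)), mode='constant')
    top = rng.integers(0, 2 * pad + 1)   # 0..8 inclusive, like random.randint(0, 40 - 32)
    left = rng.integers(0, 2 * pad + 1)
    return padded[top:top + h, left:left + w].copy()


rng = np.random.default_rng(0)
img = np.arange(32 * 32 * 3, dtype=np.float32).reshape(32, 32, 3)
out = augment_one(img, rng)
print(out.shape)  # (32, 32, 3)
```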

```python
def data_augmentation(batch):
    batch = _random_flip_leftright(batch)
    batch = _random_crop(batch, [32, 32], 4)
    return batch


def data_preprocessing(x_train, x_test):
    x_train = x_train.astype('float32')
    x_test = x_test.astype('float32')
    # Standardize each RGB channel to zero mean and unit variance
    # (each set is normalized with its own statistics, as in the original code)
    for c in range(3):
        x_train[:, :, :, c] = (x_train[:, :, :, c] - np.mean(x_train[:, :, :, c])) / np.std(x_train[:, :, :, c])
        x_test[:, :, :, c] = (x_test[:, :, :, c] - np.mean(x_test[:, :, :, c])) / np.std(x_test[:, :, :, c])
    return x_train, x_test
```
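The per-channel loop can also be written with a single broadcasted expression by reducing over the N, H and W axes. The sketch below (hypothetical function name, made-up data) reproduces `data_preprocessing` on the training set. It also returns the statistics, so that the common variant of normalizing the test set with the *training* mean and std is a one-argument change. That variant is not what the code above does.

```python
import numpy as np


def standardize_per_channel(x, stats=None):
    """Zero mean / unit std per channel (last axis), statistics over N, H, W.
    Pass stats=(mean, std) to reuse statistics computed on another set."""
    x = x.astype('float32')
    if stats is None:
        stats = (x.mean(axis=(0, 1, 2)), x.std(axis=(0, 1, 2)))  # each of shape (C,)
    mean, std = stats
    return (x - mean) / std, stats


rng = np.random.default_rng(0)
x_train = rng.integers(0, 256, size=(100, 32, 32, 3)).astype(np.uint8)  # made-up images
x_test = rng.integers(0, 256, size=(20, 32, 32, 3)).astype(np.uint8)

train_norm, stats = standardize_per_channel(x_train)
test_own, _ = standardize_per_channel(x_test)            # as in data_preprocessing()
test_shared, _ = standardize_per_channel(x_test, stats)  # common variant: training statistics
```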

```python
def learning_rate_schedule(epoch_num):
    if epoch_num < 81:
        return 0.01    # 0.1
    elif epoch_num < 121:
        return 0.001   # 0.01
    else:
        return 0.0001  # 0.001
```

```python
def run_testing(sess, ep):
    # Evaluate the 10000 test images in 10 batches of 1000
    acc = 0.0
    loss = 0.0
    pre_index = 0
    add = 1000
    for it in range(10):
        batch_x = test_x[pre_index:pre_index + add]
        batch_y = test_y[pre_index:pre_index + add]
        pre_index = pre_index + add
        loss_, acc_ = sess.run([cross_entropy, accuracy],
                               feed_dict={x: batch_x, y_: batch_y, keep_prob: 1.0, train_flag: False})
        loss += loss_ / 10.0
        acc += acc_ / 10.0
    summary = tf.Summary(value=[tf.Summary.Value(tag="test_loss", simple_value=loss),
                                tf.Summary.Value(tag="test_accuracy", simple_value=acc)])
    return acc, loss, summary
```
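`run_testing` averages the per-batch loss and accuracy with `/ 10.0`. This equals the metric over the whole test set only because the 10,000 test images split into ten *equal* batches of 1000. With unequal batches, for example a smaller last batch, each batch should be weighted by its size. A pure-Python sketch with made-up accuracies; `weighted_mean` is a hypothetical helper:

```python
import math


def weighted_mean(values, sizes):
    """Mean of per-batch metrics, weighting each batch by its number of samples."""
    return sum(v * n for v, n in zip(values, sizes)) / sum(sizes)


# Made-up accuracies for 10 equal batches of 1000 images, as in run_testing
accs = [0.81, 0.79, 0.80, 0.82, 0.78, 0.80, 0.81, 0.79, 0.80, 0.80]
plain = sum(a / 10.0 for a in accs)  # what run_testing does
weighted = weighted_mean(accs, [1000] * 10)
print(math.isclose(plain, weighted))  # True: equal batch sizes

# Unequal batches (1000 + 500 images): the plain mean (about 0.70) is biased
print(sum([0.8, 0.6]) / 2)
print(weighted_mean([0.8, 0.6], [1000, 500]))  # (800 + 300) / 1500, about 0.733
```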

```python
if __name__ == '__main__':
    train_x, train_y, test_x, test_y = prepare_data()
    train_x, test_x = data_preprocessing(train_x, test_x)

    # define placeholder x, y_ , keep_prob, learning_rate
    x = tf.placeholder(tf.float32, [None, image_size, image_size, 3])
    y_ = tf.placeholder(tf.float32, [None, class_num])
    keep_prob = tf.placeholder(tf.float32)
    learning_rate = tf.placeholder(tf.float32)
    train_flag = tf.placeholder(tf.bool)

    # build_network
    W_conv1_1 = tf.get_variable('conv1_1', shape=[3, 3, 3, 64], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv1_1 = bias_variable([64])
    output = tf.nn.relu(batch_norm(conv2d(x, W_conv1_1) + b_conv1_1))

    W_conv1_2 = tf.get_variable('conv1_2', shape=[3, 3, 64, 64], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv1_2 = bias_variable([64])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv1_2) + b_conv1_2))
    output = max_pool(output, 2, 2, "pool1")

    W_conv2_1 = tf.get_variable('conv2_1', shape=[3, 3, 64, 128], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv2_1 = bias_variable([128])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv2_1) + b_conv2_1))

    W_conv2_2 = tf.get_variable('conv2_2', shape=[3, 3, 128, 128], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv2_2 = bias_variable([128])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv2_2) + b_conv2_2))
    output = max_pool(output, 2, 2, "pool2")

    W_conv3_1 = tf.get_variable('conv3_1', shape=[3, 3, 128, 256], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv3_1 = bias_variable([256])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv3_1) + b_conv3_1))

    W_conv3_2 = tf.get_variable('conv3_2', shape=[3, 3, 256, 256], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv3_2 = bias_variable([256])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv3_2) + b_conv3_2))

    W_conv3_3 = tf.get_variable('conv3_3', shape=[3, 3, 256, 256], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv3_3 = bias_variable([256])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv3_3) + b_conv3_3))
    output = max_pool(output, 2, 2, "pool3")

    W_conv4_1 = tf.get_variable('conv4_1', shape=[3, 3, 256, 512], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv4_1 = bias_variable([512])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv4_1) + b_conv4_1))

    W_conv4_2 = tf.get_variable('conv4_2', shape=[3, 3, 512, 512], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv4_2 = bias_variable([512])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv4_2) + b_conv4_2))

    W_conv4_3 = tf.get_variable('conv4_3', shape=[3, 3, 512, 512], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv4_3 = bias_variable([512])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv4_3) + b_conv4_3))
    output = max_pool(output, 2, 2)

    W_conv5_1 = tf.get_variable('conv5_1', shape=[3, 3, 512, 512], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv5_1 = bias_variable([512])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv5_1) + b_conv5_1))

    W_conv5_2 = tf.get_variable('conv5_2', shape=[3, 3, 512, 512], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv5_2 = bias_variable([512])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv5_2) + b_conv5_2))

    W_conv5_3 = tf.get_variable('conv5_3', shape=[3, 3, 512, 512], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv5_3 = bias_variable([512])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv5_3) + b_conv5_3))

    # output = max_pool(output, 2, 2)  # fifth pooling skipped: the map stays 2x2x512
    # output = tf.contrib.layers.flatten(output)
    output = tf.reshape(output, [-1, 2 * 2 * 512])

    W_fc1 = tf.get_variable('fc1', shape=[2048, 4096], initializer=tf.contrib.keras.initializers.he_normal())
    b_fc1 = bias_variable([4096])
    output = tf.nn.relu(batch_norm(tf.matmul(output, W_fc1) + b_fc1))
    output = tf.nn.dropout(output, keep_prob)

    W_fc2 = tf.get_variable('fc7', shape=[4096, 4096], initializer=tf.contrib.keras.initializers.he_normal())
    b_fc2 = bias_variable([4096])
    output = tf.nn.relu(batch_norm(tf.matmul(output, W_fc2) + b_fc2))
    output = tf.nn.dropout(output, keep_prob)

    W_fc3 = tf.get_variable('fc3', shape=[4096, 10], initializer=tf.contrib.keras.initializers.he_normal())
    b_fc3 = bias_variable([10])
    # Logits: no ReLU here, since softmax cross-entropy expects unbounded logits
    output = batch_norm(tf.matmul(output, W_fc3) + b_fc3)
    # output = tf.reshape(output, [-1, 10])

    # loss function: cross_entropy
    # train_step: training operation
    cross_entropy = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y_, logits=output))
    l2 = tf.add_n([tf.nn.l2_loss(var) for var in tf.trainable_variables()])
    train_step = tf.train.MomentumOptimizer(learning_rate, momentum_rate, use_nesterov=True).\
        minimize(cross_entropy + l2 * weight_decay)

    correct_prediction = tf.equal(tf.argmax(output, 1), tf.argmax(y_, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
```
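The training objective is the mean softmax cross-entropy plus `weight_decay` times the sum of `tf.nn.l2_loss` over all trainable variables. `tf.nn.l2_loss(t)` is `sum(t ** 2) / 2`, with no square root. A NumPy sketch of the same quantity on made-up logits and weights (function names hypothetical):

```python
import numpy as np


def softmax_cross_entropy(labels, logits):
    """Batch mean of -sum(labels * log(softmax(logits))), computed stably."""
    z = logits - logits.max(axis=1, keepdims=True)  # subtract the max for numerical stability
    log_softmax = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return float(np.mean(-(labels * log_softmax).sum(axis=1)))


def l2_loss(t):
    """Same definition as tf.nn.l2_loss: sum(t ** 2) / 2."""
    return float(np.sum(t ** 2) / 2)


labels = np.array([[0., 1., 0.], [1., 0., 0.]])           # one-hot targets
logits = np.array([[1.0, 3.0, 0.5], [2.0, 0.0, -1.0]])   # raw, unbounded scores
weights = [np.ones((2, 2)), np.full(3, 0.5)]             # stand-ins for tf.trainable_variables()
weight_decay = 0.0003

ce = softmax_cross_entropy(labels, logits)
l2 = sum(l2_loss(w) for w in weights)  # 4 / 2 + 0.75 / 2 = 2.375
total = ce + l2 * weight_decay         # the quantity passed to minimize()
print(ce, l2, total)
```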

```python
    # (still inside the if __name__ == '__main__': block)
    # initialize a saver to save the model
    saver = tf.train.Saver()

    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        summary_writer = tf.summary.FileWriter(log_save_path, sess.graph)

        # epoch = 164
        # make sure [batch_size * iteration = data_set_number]
        for ep in range(1, total_epoch + 1):
            lr = learning_rate_schedule(ep)
            pre_index = 0
            train_acc = 0.0
            train_loss = 0.0
            start_time = time.time()

            print("\n epoch %d/%d:" % (ep, total_epoch))

            for it in range(1, iterations + 1):
                batch_x = train_x[pre_index:pre_index + batch_size]
                batch_y = train_y[pre_index:pre_index + batch_size]

                batch_x = data_augmentation(batch_x)

                _, batch_loss = sess.run([train_step, cross_entropy],
                                         feed_dict={x: batch_x, y_: batch_y, keep_prob: dropout_rate,
                                                    learning_rate: lr, train_flag: True})
                batch_acc = accuracy.eval(feed_dict={x: batch_x, y_: batch_y, keep_prob: 1.0, train_flag: True})

                train_loss += batch_loss
                train_acc += batch_acc
                pre_index += batch_size

                if it == iterations:
                    train_loss /= iterations
                    train_acc /= iterations

                    loss_, acc_ = sess.run([cross_entropy, accuracy],
                                           feed_dict={x: batch_x, y_: batch_y, keep_prob: 1.0, train_flag: True})
                    train_summary = tf.Summary(value=[tf.Summary.Value(tag="train_loss", simple_value=train_loss),
                                                      tf.Summary.Value(tag="train_accuracy", simple_value=train_acc)])

                    val_acc, val_loss, test_summary = run_testing(sess, ep)

                    summary_writer.add_summary(train_summary, ep)
                    summary_writer.add_summary(test_summary, ep)
                    summary_writer.flush()

                    print("iteration: %d/%d, cost_time: %ds, train_loss: %.4f, "
                          "train_acc: %.4f, test_loss: %.4f, test_acc: %.4f"
                          % (it, iterations, int(time.time() - start_time), train_loss, train_acc, val_loss, val_acc))
                else:
                    print("iteration: %d/%d, train_loss: %.4f, train_acc: %.4f"
                          % (it, iterations, train_loss / it, train_acc / it))

        # Saver.save() fails if the parent directory does not exist
        os.makedirs(model_save_path, exist_ok=True)
        save_path = saver.save(sess, model_save_path)
        print("Model saved in file: %s" % save_path)
```
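The optimizer is `tf.train.MomentumOptimizer(..., use_nesterov=True)`. As I read TensorFlow's documented update for this case, each step computes `accum = momentum * accum + grad` and then `var -= lr * grad + lr * momentum * accum`. A scalar pure-Python sketch of that rule on a toy quadratic f(w) = 0.5·a·w², using this post's `momentum_rate = 0.9` and a learning rate of 0.1. The function name and the toy problem are illustrative assumptions:

```python
def nesterov_momentum_step(var, accum, grad, lr, momentum):
    """One Nesterov-momentum update in the form documented for TF's MomentumOptimizer."""
    accum = momentum * accum + grad
    var = var - (lr * grad + lr * momentum * accum)
    return var, accum


# Minimize f(w) = 0.5 * a * w**2, whose gradient is a * w, starting from w = 5
a, w, accum = 1.0, 5.0, 0.0
for _ in range(200):
    w, accum = nesterov_momentum_step(w, accum, a * w, lr=0.1, momentum=0.9)
print(abs(w) < 1e-6)  # True: the iterate has converged to the minimum at 0
```

The accumulator carries a running sum of past gradients, and the extra `momentum * accum` term "looks ahead" along that direction.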

Full code:

```python
# -*- coding:utf-8 -*-
import tensorflow as tf  # TensorFlow 1.x (tf.placeholder, tf.Session, tf.contrib)
import numpy as np
import time
import os
import sys
import random
import pickle
import tarfile

class_num = 10
image_size = 32
img_channels = 3
batch_size = 250
iterations = 200       # training set: 50000 = 250 * 200
total_epoch = 200
weight_decay = 0.0003
dropout_rate = 0.5     # fed as keep_prob during training
momentum_rate = 0.9
log_save_path = './vgg_16_logs'
model_save_path = './model/'


def download_data():
    dirname = 'CAFIR-10_data/cifar-10-python'  # folder after extraction
    origin = 'http://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz'
    fname = './CAFIR-10_data/cifar-10-python.tar.gz'
    # fname = './CAFIR-10_data'
    fpath = './' + dirname
    download = False
    if os.path.exists(fpath) or os.path.isfile(fname):
        download = False
        print("DataSet already exist!")
        # Extract only if the archive itself is present
        if os.path.isfile(fname):
            if fname.endswith("tar.gz"):
                with tarfile.open(fname, "r:gz") as tar:
                    tar.extractall()
                # tarfile.open(fpath, "r:gz").extractall(fname)
            elif fname.endswith("tar"):
                with tarfile.open(fname, "r:") as tar:
                    tar.extractall()
    else:
        download = True

    if download:
        print('Downloading data from', origin)
        import urllib.request

        def reporthook(count, block_size, total_size):
            global start_time
            if count == 0:
                start_time = time.time()
                return
            duration = max(time.time() - start_time, 1e-6)  # avoid division by zero
            progress_size = int(count * block_size)
            speed = int(progress_size / (1024 * duration))
            percent = min(int(count * block_size * 100 / total_size), 100)
            sys.stdout.write("\r...%d%%, %d MB, %d KB/s, %d seconds passed" %
                             (percent, progress_size / (1024 * 1024), speed, duration))
            sys.stdout.flush()

        # urlretrieve does not create missing directories
        os.makedirs(os.path.dirname(fname), exist_ok=True)
        urllib.request.urlretrieve(origin, fname, reporthook)
        print('Download finished. Start extract!', origin)
        if fname.endswith("tar.gz"):
            with tarfile.open(fname, "r:gz") as tar:
                tar.extractall()
        elif fname.endswith("tar"):
            with tarfile.open(fname, "r:") as tar:
                tar.extractall()


def unpickle(file):
    with open(file, 'rb') as fo:
        data_dict = pickle.load(fo, encoding='bytes')
    return data_dict


def load_data_one(file):
    batch = unpickle(file)
    data = batch[b'data']
    labels = batch[b'labels']
    print("Loading %s : %d." % (file, len(data)))
    return data, labels


def load_data(files, data_dir, label_count):
    data, labels = load_data_one(data_dir + '/' + files[0])
    for f in files[1:]:
        data_n, labels_n = load_data_one(data_dir + '/' + f)
        data = np.append(data, data_n, axis=0)
        labels = np.append(labels, labels_n, axis=0)
    labels = np.array([[float(i == label) for i in range(label_count)] for label in labels])
    data = data.reshape([-1, img_channels, image_size, image_size])
    data = data.transpose([0, 2, 3, 1])
    return data, labels


def prepare_data():
    print("======Loading data======")
    download_data()
    # data_dir = './CAFIR-10_data/cifar-10-python/cifar-10-batches-py'
    data_dir = './cifar-10-batches-py'  # folder created by extracting the archive
    image_dim = image_size * image_size * img_channels
    meta = unpickle(data_dir + '/batches.meta')

    label_names = meta[b'label_names']
    label_count = len(label_names)
    train_files = ['data_batch_%d' % d for d in range(1, 6)]
    train_data, train_labels = load_data(train_files, data_dir, label_count)
    test_data, test_labels = load_data(['test_batch'], data_dir, label_count)

    print("Train data:", np.shape(train_data), np.shape(train_labels))
    print("Test data :", np.shape(test_data), np.shape(test_labels))
    print("======Load finished======")

    print("======Shuffling data======")
    indices = np.random.permutation(len(train_data))
    train_data = train_data[indices]
    train_labels = train_labels[indices]
    print("======Prepare Finished======")

    return train_data, train_labels, test_data, test_labels


def bias_variable(shape):
    initial = tf.constant(0.1, shape=shape, dtype=tf.float32)
    return tf.Variable(initial)


def conv2d(x, W):
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')


def max_pool(input, k_size=1, stride=1, name=None):
    return tf.nn.max_pool(input, ksize=[1, k_size, k_size, 1], strides=[1, stride, stride, 1],
                          padding='SAME', name=name)


def batch_norm(input):
    return tf.contrib.layers.batch_norm(input, decay=0.9, center=True, scale=True, epsilon=1e-3,
                                        is_training=train_flag, updates_collections=None)


# Random crop, used by the data augmentation below
def _random_crop(batch, crop_shape, padding=None):
    oshape = np.shape(batch[0])
    if padding:
        oshape = (oshape[0] + 2 * padding, oshape[1] + 2 * padding)
    new_batch = []
    npad = ((padding, padding), (padding, padding), (0, 0))
    for i in range(len(batch)):
        new_batch.append(batch[i])
        if padding:
            new_batch[i] = np.pad(batch[i], pad_width=npad,
                                  mode='constant', constant_values=0)
        nh = random.randint(0, oshape[0] - crop_shape[0])
        nw = random.randint(0, oshape[1] - crop_shape[1])
        new_batch[i] = new_batch[i][nh:nh + crop_shape[0],
                                    nw:nw + crop_shape[1]]
    return new_batch


def _random_flip_leftright(batch):
    # Copy first so that flipping does not modify train_x in place
    batch = np.copy(batch)
    for i in range(len(batch)):
        if bool(random.getrandbits(1)):
            batch[i] = np.fliplr(batch[i])
    return batch


def data_augmentation(batch):
    batch = _random_flip_leftright(batch)
    batch = _random_crop(batch, [32, 32], 4)
    return batch


def data_preprocessing(x_train, x_test):
    x_train = x_train.astype('float32')
    x_test = x_test.astype('float32')
    for c in range(3):
        x_train[:, :, :, c] = (x_train[:, :, :, c] - np.mean(x_train[:, :, :, c])) / np.std(x_train[:, :, :, c])
        x_test[:, :, :, c] = (x_test[:, :, :, c] - np.mean(x_test[:, :, :, c])) / np.std(x_test[:, :, :, c])
    return x_train, x_test


def learning_rate_schedule(epoch_num):
    if epoch_num < 81:
        return 0.1    # 0.01
    elif epoch_num < 121:
        return 0.01   # 0.001
    else:
        return 0.001  # 0.0001


def run_testing(sess, ep):
    acc = 0.0
    loss = 0.0
    pre_index = 0
    add = 1000
    for it in range(10):
        batch_x = test_x[pre_index:pre_index + add]
        batch_y = test_y[pre_index:pre_index + add]
        pre_index = pre_index + add
        loss_, acc_ = sess.run([cross_entropy, accuracy],
                               feed_dict={x: batch_x, y_: batch_y, keep_prob: 1.0, train_flag: False})
        loss += loss_ / 10.0
        acc += acc_ / 10.0
    summary = tf.Summary(value=[tf.Summary.Value(tag="test_loss", simple_value=loss),
                                tf.Summary.Value(tag="test_accuracy", simple_value=acc)])
    return acc, loss, summary


if __name__ == '__main__':
    train_x, train_y, test_x, test_y = prepare_data()
    train_x, test_x = data_preprocessing(train_x, test_x)

    # define placeholder x, y_ , keep_prob, learning_rate
    x = tf.placeholder(tf.float32, [None, image_size, image_size, 3])
    y_ = tf.placeholder(tf.float32, [None, class_num])
    keep_prob = tf.placeholder(tf.float32)
    learning_rate = tf.placeholder(tf.float32)
    train_flag = tf.placeholder(tf.bool)

    # build_network
    # 32x32x3
    W_conv1_1 = tf.get_variable('conv1_1', shape=[3, 3, 3, 64], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv1_1 = bias_variable([64])
    output = tf.nn.relu(batch_norm(conv2d(x, W_conv1_1) + b_conv1_1))

    W_conv1_2 = tf.get_variable('conv1_2', shape=[3, 3, 64, 64], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv1_2 = bias_variable([64])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv1_2) + b_conv1_2))
    output = max_pool(output, 2, 2, "pool1")  # 16x16x64

    W_conv2_1 = tf.get_variable('conv2_1', shape=[3, 3, 64, 128], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv2_1 = bias_variable([128])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv2_1) + b_conv2_1))

    W_conv2_2 = tf.get_variable('conv2_2', shape=[3, 3, 128, 128], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv2_2 = bias_variable([128])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv2_2) + b_conv2_2))
    output = max_pool(output, 2, 2, "pool2")  # 8x8x128

    W_conv3_1 = tf.get_variable('conv3_1', shape=[3, 3, 128, 256], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv3_1 = bias_variable([256])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv3_1) + b_conv3_1))

    W_conv3_2 = tf.get_variable('conv3_2', shape=[3, 3, 256, 256], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv3_2 = bias_variable([256])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv3_2) + b_conv3_2))

    W_conv3_3 = tf.get_variable('conv3_3', shape=[3, 3, 256, 256], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv3_3 = bias_variable([256])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv3_3) + b_conv3_3))
    output = max_pool(output, 2, 2, "pool3")  # 4x4x256

    W_conv4_1 = tf.get_variable('conv4_1', shape=[3, 3, 256, 512], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv4_1 = bias_variable([512])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv4_1) + b_conv4_1))

    W_conv4_2 = tf.get_variable('conv4_2', shape=[3, 3, 512, 512], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv4_2 = bias_variable([512])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv4_2) + b_conv4_2))

    W_conv4_3 = tf.get_variable('conv4_3', shape=[3, 3, 512, 512], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv4_3 = bias_variable([512])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv4_3) + b_conv4_3))
    output = max_pool(output, 2, 2)  # 2x2x512

    W_conv5_1 = tf.get_variable('conv5_1', shape=[3, 3, 512, 512], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv5_1 = bias_variable([512])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv5_1) + b_conv5_1))

    W_conv5_2 = tf.get_variable('conv5_2', shape=[3, 3, 512, 512], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv5_2 = bias_variable([512])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv5_2) + b_conv5_2))

    W_conv5_3 = tf.get_variable('conv5_3', shape=[3, 3, 512, 512], initializer=tf.contrib.keras.initializers.he_normal())
    b_conv5_3 = bias_variable([512])
    output = tf.nn.relu(batch_norm(conv2d(output, W_conv5_3) + b_conv5_3))

    # output = max_pool(output, 2, 2)  # a fifth pooling arguably belongs here
    # output = tf.contrib.layers.flatten(output)
    output = tf.reshape(output, [-1, 2 * 2 * 512])
    # output = tf.reshape(output, [-1, 1 * 1 * 512])
    # W_fc1 = tf.get_variable('fc1', shape=[1 * 1 * 512, 4096], initializer=tf.contrib.keras.initializers.he_normal())

    W_fc1 = tf.get_variable('fc1', shape=[2 * 2 * 512, 4096], initializer=tf.contrib.keras.initializers.he_normal())
    b_fc1 = bias_variable([4096])
    output = tf.nn.relu(batch_norm(tf.matmul(output, W_fc1) + b_fc1))
    output = tf.nn.dropout(output, keep_prob)

    W_fc2 = tf.get_variable('fc7', shape=[4096, 4096], initializer=tf.contrib.keras.initializers.he_normal())
    b_fc2 = bias_variable([4096])
    output = tf.nn.relu(batch_norm(tf.matmul(output, W_fc2) + b_fc2))
    output = tf.nn.dropout(output, keep_prob)

    W_fc3 = tf.get_variable('fc3', shape=[4096, 10], initializer=tf.contrib.keras.initializers.he_normal())
    b_fc3 = bias_variable([10])
    # Logits: no ReLU here, since softmax cross-entropy expects unbounded logits
    output = batch_norm(tf.matmul(output, W_fc3) + b_fc3)
    # output = tf.reshape(output, [-1, 10])

    # loss function: cross_entropy
    # train_step: training operation
    cross_entropy = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y_, logits=output))
    l2 = tf.add_n([tf.nn.l2_loss(var) for var in tf.trainable_variables()])
    train_step = tf.train.MomentumOptimizer(learning_rate, momentum_rate, use_nesterov=True).\
        minimize(cross_entropy + l2 * weight_decay)

    correct_prediction = tf.equal(tf.argmax(output, 1), tf.argmax(y_, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

    # initialize a saver to save the model
    saver = tf.train.Saver()

    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        summary_writer = tf.summary.FileWriter(log_save_path, sess.graph)

        # epoch = 164
        # make sure [batch_size * iteration = data_set_number]
        for ep in range(1, total_epoch + 1):
            lr = learning_rate_schedule(ep)
            pre_index = 0
            train_acc = 0.0
            train_loss = 0.0
            start_time = time.time()

            print("\n epoch %d/%d:" % (ep, total_epoch))

            for it in range(1, iterations + 1):
                batch_x = train_x[pre_index:pre_index + batch_size]
                batch_y = train_y[pre_index:pre_index + batch_size]

                batch_x = data_augmentation(batch_x)

                _, batch_loss = sess.run([train_step, cross_entropy],
                                         feed_dict={x: batch_x, y_: batch_y, keep_prob: dropout_rate,
                                                    learning_rate: lr, train_flag: True})
                batch_acc = accuracy.eval(feed_dict={x: batch_x, y_: batch_y, keep_prob: 1.0, train_flag: True})

                train_loss += batch_loss
                train_acc += batch_acc
                pre_index += batch_size

                if it == iterations:
                    train_loss /= iterations
                    train_acc /= iterations

                    loss_, acc_ = sess.run([cross_entropy, accuracy],
                                           feed_dict={x: batch_x, y_: batch_y, keep_prob: 1.0, train_flag: True})
                    train_summary = tf.Summary(value=[tf.Summary.Value(tag="train_loss", simple_value=train_loss),
                                                      tf.Summary.Value(tag="train_accuracy", simple_value=train_acc)])

                    val_acc, val_loss, test_summary = run_testing(sess, ep)

                    summary_writer.add_summary(train_summary, ep)
                    summary_writer.add_summary(test_summary, ep)
                    summary_writer.flush()

                    print("iteration: %d/%d, cost_time: %ds, train_loss: %.4f, "
                          "train_acc: %.4f, test_loss: %.4f, test_acc: %.4f"
                          % (it, iterations, int(time.time() - start_time), train_loss, train_acc, val_loss, val_acc))
                else:
                    print("iteration: %d/%d, train_loss: %.4f, train_acc: %.4f"
                          % (it, iterations, train_loss / it, train_acc / it))

        # Saver.save() fails if the parent directory does not exist
        os.makedirs(model_save_path, exist_ok=True)
        save_path = saver.save(sess, model_save_path)
        print("Model saved in file: %s" % save_path)
```

I am currently porting this code to TensorFlow 2.0; when I have time I will write another post with the 2.0 training code.

Reference blog post: https://blog.csdn/GOGO_YAO/article/details/80348200