使用google的bert结合哈工大预训练模型进行中文/英文文本二分类,基于pytorch和transformer

使用bert的哈工大预训练模型进行中文/英文文本二分类,基于pytorch和transformer

-

- 前提

- 简要介绍

- 开始

- 导入必要的包和环境

- 准备并读取数据

- 导入模型的tokenizer

- 对数据进行tokenizer,也就是分片,并加入`[CLS]`、`[SEP]`等bert的默认标签

-

- 对句子进行attention_mask:

- 分割训练数据集和验证数据集,在这将90%的进行训练,10%进行验证

- 转换为torch tensor:

- 使用pytorch的dataloader帮助我们进行batch_size的划分和自动化输入

- 模型导入

-

- 查看模型的参数:

- 准备模型优化器和计时函数:

- 激动人心的模型训练!

- 如果你能顺利运行到这一步,恭喜你已经训练好了属于你的模型!

- test数据集进行模型评估!

- test数据预处理完成后,开始测试验证

- 总结

最近在弄与中文自然语言处理相关的内容,陆陆续续看了好多的教程,知道bert的效果相对比较好,后来找到了哈工大的中文预训练模型。但是作者就是不想说这个模型怎么用,说跟谷歌的预训练模型一样(但是谷歌的我也不会用),后来辗转找到了一篇非常不错的英文教程,想看英文文本分类的跟着这个教程就行。跟着它也终于把中文的文本分类基本搞定了,用的就是哈工大的模型。在这里就将其分享一下,之前也一直想着写博客,那就让这成为我的第一篇博客吧

前提

看这篇教程的前提:

- 你需要自己准备一个中文文本分类的数据集(因为我的项目暂时不能公开数据集),格式的话能用pandas读进去就行,excel、csv、tsv、等等的表格数据都行,格式要求自然是要有待分类的数据和分类标签了,下文会具体举例。至于怎么读进去,下文也会介绍。记住如果你准备好了,提前将其分为

train.xxx和test.xxx,方便下文使用。 - (可选)最好能安装jupyter lab,如果你有谷歌的colab账号那就更加完美不过了,这样就能用到谷歌的免费GPU,而且本次教程也是基于谷歌的colab运行环境进行,至于怎么注册,就自己百度吧。

- 如果在本地运行,你需要先安装好pytorch和transformer,在此都是基于最新版本,没有指定特殊的版本。

- 这里只介绍2分类,但是扩展成多分类应该很简单,修改下模型参数就行,下文会介绍

简要介绍

bert在2018年被提出之后,刷榜了很多NLP任务,基本上是现阶段做NLP无法绕过的技术。在中文文本领域,目前做的比较好的是哈工大和百度的ERNIE,当然ERNIE也是基于bert来进行中文优化以及性能提升的,至于怎么用ERNIE,如果我搞清楚了还会继续写教程。

更多原理性的东西我不再赘述,写的比我好的一大堆,在这里我只探讨怎么使用bert的中文预训练模型来进行文本分类

开始

首先安装transformer并导入pythrch,并检测当前环境是否有GPU,剩余的实验均使用GPU进行加速,如果你没有GPU,那么把下文的.cuda 和 .device 去掉就行

导入必要的包和环境

! pip3 install transformers

! pip3 install keras

! pip3 install tensorflow

import torch

# If there's a GPU available...

if torch.cuda.is_available():

# Tell PyTorch to use the GPU.

device = torch.device("cuda")

print('There are %d GPU(s) available.' % torch.cuda.device_count())

print('We will use the GPU:', torch.cuda.get_device_name(0))

# If not...

else:

print('No GPU available, using the CPU instead.')

device = torch.device("cpu")

如果你使用Google colab,下面的代码可以帮助你把文件从本地上传到colab,直接运行它就会叫你选择本地文件进行上传

from google.colab import files

uploaded = files.upload()

for fn in uploaded.keys():

print('User file "{name}"'.format(

name=fn, length= len(uploaded[fn])

))

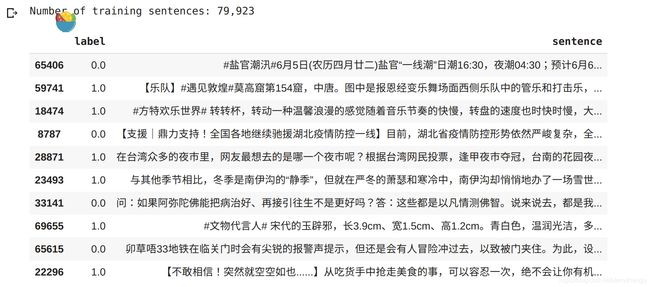

准备并读取数据

import pandas as pd

# Load the dataset into a pandas dataframe.

df = pd.read_csv("all_comment_data.csv",

header=None,

names=['label', 'sentence']

)

# Report the number of sentences.

df = df.drop([0])

df = df.dropna(axis=0, how='any')

df = df[df['label'].isin(["1","0"])]

print(df)

print('Number of training sentences: {:,}\n'.format(df.shape[0]))

# Display 10 random rows from the data.

df.sample(10)

在这里我使用的是自己爬并自己标注的旅游数据,一共79000条训练数据和20000条测试数据

注意:

- 你的数据有可能跟我上面的不一样,可能文件名、列名等不同,只要修改下上面读取函数的参数就能正确读入成dataframe的文件,这样后面就能统一处理,如果实在不知道参数代表什么,查查看

pd.read_csv函数的用法 - 这个读取数据的步骤很重要,理论上只要你准备好相应的文本和它的类别,基本上就能顺利做下去,如果你的文本标签不是01这些,那么你需要参考源博客来进行修改,或者研究下下面的代码注释,理论上应该是有对应的label属性可供选择,不过你还可以直接建立字典将你的自定义标签映射到012上,这样本质上没有区别。

pandas默认读入是string类型,需要自己将标签处理成整数0或1(这样对下文处理更加方便)

这里利用numpy进行转换,如果你没有numpy,自己百度安装

# Get the lists of sentences and their labels.

import numpy as np

sentences = df.sentence.values

labels = np.array(df.label.values,dtype=np.int32)

导入模型的tokenizer

from transformers import BertTokenizer

from transformers import AutoTokenizer, AutoModelForMaskedLM

# Load the BERT tokenizer.

print('Loading BERT tokenizer...')

tokenizer = BertTokenizer.from_pretrained("hfl/chinese-bert-wwm-ext")

这里导入的模型是hfl/chinese-bert-wwm-ext不同模型差异看哈工大的中文预训练模型

对数据进行tokenizer,也就是分片,并加入[CLS]、[SEP]等bert的默认标签

# Tokenize all of the sentences and map the tokens to thier word IDs.

input_ids = []

# For every sentence...

for sent in sentences:

# `encode` will:

# (1) Tokenize the sentence.

# (2) Prepend the `[CLS]` token to the start.

# (3) Append the `[SEP]` token to the end.

# (4) Map tokens to their IDs.

encoded_sent = tokenizer.encode(

sent, # Sentence to encode.

add_special_tokens = True, # Add '[CLS]' and '[SEP]'

# This function also supports truncation and conversion

# to pytorch tensors, but we need to do padding, so we

# can't use these features :( .

#max_length = 128, # Truncate all sentences.

#return_tensors = 'pt', # Return pytorch tensors.

)

# Add the encoded sentence to the list.

input_ids.append(encoded_sent)

# Print sentence 0, now as a list of IDs.

print('Original: ', sentences[0])

print('Token IDs:', input_ids[0])

print('Max sentence length: ', max([len(sen) for sen in input_ids]))

输出

Original: 1

Token IDs: [101, 122, 102]

Max sentence length: 24306

这里最长的句子有20000行,因为要统一句子长度,所以其实最好弄个循环看看适合自己的句子长度是多少:

len(input_ids)

s = 0

for i in input_ids:

if len(i) > 160:

s +=1

print(s)

# 打印出大于160的,发现没多少,所以下面的统一长度使用160

注意:句子长度与训练性能有很大关系,建议酌情选择

# We'll borrow the `pad_sequences` utility function to do this.

from keras.preprocessing.sequence import pad_sequences

# Set the maximum sequence length.

# I've chosen 160 somewhat arbitrarily.

MAX_LEN = 160

print('\nPadding/truncating all sentences to %d values...' % MAX_LEN)

print('\nPadding token: "{:}", ID: {:}'.format(tokenizer.pad_token, tokenizer.pad_token_id))

# Pad our input tokens with value 0.

# "post" indicates that we want to pad and truncate at the end of the sequence,

# as opposed to the beginning.

input_ids = pad_sequences(input_ids, maxlen=MAX_LEN, dtype="long",

value=0, truncating="post", padding="post")

print('\Done.')

对句子进行attention_mask:

attention_mask是什么我就不多解释了,感兴趣可以自己看引用的源博客

# Create attention masks

attention_masks = []

# For each sentence...

for sent in input_ids:

# Create the attention mask.

# - If a token ID is 0, then it's padding, set the mask to 0.

# - If a token ID is > 0, then it's a real token, set the mask to 1.

att_mask = [int(token_id > 0) for token_id in sent]

# Store the attention mask for this sentence.

attention_masks.append(att_mask)

分割训练数据集和验证数据集,在这将90%的进行训练,10%进行验证

# Use train_test_split to split our data into train and validation sets for

# training

from sklearn.model_selection import train_test_split

# Use 90% for training and 10% for validation.

train_inputs, validation_inputs, train_labels, validation_labels = train_test_split(input_ids, labels,

random_state=2018, test_size=0.1)

# Do the same for the masks.

train_masks, validation_masks, _, _ = train_test_split(attention_masks, labels,

random_state=2018, test_size=0.1)

转换为torch tensor:

# Convert all inputs and labels into torch tensors, the required datatype

# for our model.

train_inputs = torch.tensor(train_inputs)

validation_inputs = torch.tensor(validation_inputs)

train_labels = torch.tensor(train_labels)

validation_labels = torch.tensor(validation_labels)

train_masks = torch.tensor(train_masks)

validation_masks = torch.tensor(validation_masks)

使用pytorch的dataloader帮助我们进行batch_size的划分和自动化输入

from torch.utils.data import TensorDataset, DataLoader, RandomSampler, SequentialSampler

# The DataLoader needs to know our batch size for training, so we specify it

# here.

# For fine-tuning BERT on a specific task, the authors recommend a batch size of

# 16 or 32.

batch_size = 16

# Create the DataLoader for our training set.

print(train_inputs.size(),train_masks.size(),train_labels.size())

train_data = TensorDataset(train_inputs, train_masks, train_labels)

train_sampler = RandomSampler(train_data)

train_dataloader = DataLoader(train_data, sampler=train_sampler, batch_size=batch_size)

# Create the DataLoader for our validation set.

validation_data = TensorDataset(validation_inputs, validation_masks, validation_labels)

validation_sampler = SequentialSampler(validation_data)

validation_dataloader = DataLoader(validation_data, sampler=validation_sampler, batch_size=batch_size)

上面的batch_size设置成16,自己可以根据自己的机子性能进行调整,size越大,用的内存显存就越多,自己酌情设置。

至此,数据基本准备完成,下面开始模型的导入和设置

模型导入

from transformers import BertForSequenceClassification, AdamW, BertConfig

# Load BertForSequenceClassification, the pretrained BERT model with a single

# linear classification layer on top.

model = BertForSequenceClassification.from_pretrained(

"hfl/chinese-bert-wwm-ext",

num_labels = 2, # The number of output labels--2 for binary classification.

# You can increase this for multi-class tasks.

output_attentions = False, # Whether the model returns attentions weights.

output_hidden_states = False, # Whether the model returns all hidden-states.

)

# "bert-base-uncased", # Use the 12-layer BERT model, with an uncased vocab.

# num_labels = 2, # The number of output labels--2 for binary classification.

# # You can increase this for multi-class tasks.

# output_attentions = False, # Whether the model returns attentions weights.

# output_hidden_states = False, # Whether the model returns all hidden-states.

# )

# Tell pytorch to run this model on the GPU.

model.cuda()

在这里用的是hfl/chinese-bert-wwm-ext模型,自己可以参考哈工大的模型介绍来更换,但是必须要跟上面的tokenizer导入的模型一样。

最后一句:model.cuda()是将模型放进GPU里面,如果你没有GPU,那么把它注释掉

查看模型的参数:

# Get all of the model's parameters as a list of tuples.

params = list(model.named_parameters())

print('The BERT model has {:} different named parameters.\n'.format(len(params)))

print('==== Embedding Layer ====\n')

for p in params[0:5]:

print("{:<55} {:>12}".format(p[0], str(tuple(p[1].size()))))

print('\n==== First Transformer ====\n')

for p in params[5:21]:

print("{:<55} {:>12}".format(p[0], str(tuple(p[1].size()))))

print('\n==== Output Layer ====\n')

for p in params[-4:]:

print("{:<55} {:>12}".format(p[0], str(tuple(p[1].size()))))

准备模型优化器和计时函数:

# Note: AdamW is a class from the huggingface library (as opposed to pytorch)

# I believe the 'W' stands for 'Weight Decay fix"

optimizer = AdamW(model.parameters(),

lr = 2e-5, # args.learning_rate - default is 5e-5, our notebook had 2e-5

eps = 1e-8 # args.adam_epsilon - default is 1e-8.

)

from transformers import get_linear_schedule_with_warmup

# Number of training epochs (authors recommend between 2 and 4)

epochs = 4

# Total number of training steps is number of batches * number of epochs.

total_steps = len(train_dataloader) * epochs

print(total_steps)

# Create the learning rate scheduler.

scheduler = get_linear_schedule_with_warmup(optimizer,

num_warmup_steps = 0, # Default value in run_glue.py

num_training_steps = total_steps)

这里使用AdamW模型优化函数,可以看成是一种自适应的梯度下降算法,帮助模型更快收敛,一般来说如果你不知道用什么优化函数,选他就是了,上面还设置了一些学习率等

注意,有个很重要的参数:epochs,代表你要运行多少次,一个epochs代表将你所有的训练数据跑一遍,在这里就是跑70000条数据,4个epochs就是跑4遍,至于为什么跑四次就能收敛,这就是bert预训练模型的强大之处,它已经帮助我们调整好了很多参数,我们只要进行fine-tuning就能得到很好的结果,当然如果你的数据集比较小,可以跑多几次看看结果会怎样,因为本次的训练数据相对较多,跑一个epochs要花40分钟(如果用CPU,内存就爆炸了,动不了),所以就不多尝试了

下面是一些计时函数和评价函数,在这里提早进行定义。

import numpy as np

# Function to calculate the accuracy of our predictions vs labels

def flat_accuracy(preds, labels):

pred_flat = np.argmax(preds, axis=1).flatten()

labels_flat = labels.flatten()

return np.sum(pred_flat == labels_flat) / len(labels_flat)

import time

import datetime

def format_time(elapsed):

'''

Takes a time in seconds and returns a string hh:mm:ss

'''

# Round to the nearest second.

elapsed_rounded = int(round((elapsed)))

# Format as hh:mm:ss

return str(datetime.timedelta(seconds=elapsed_rounded))

激动人心的模型训练!

弄了这么久,终于开始了模型训练!下面定义了一大堆的参数,不过总体来说就是首先在epoch里面设置各种参数,然后进行计时训练,然后每次epoch结束后会进行验证,代码注释都非常详细了,可以自己研究下,在这里就不多展开。

import random

# Set the seed value all over the place to make this reproducible.

seed_val = 42

random.seed(seed_val)

np.random.seed(seed_val)

torch.manual_seed(seed_val)

torch.cuda.manual_seed_all(seed_val)

# Store the average loss after each epoch so we can plot them.

loss_values = []

# For each epoch...

for epoch_i in range(0, epochs):

# ========================================

# Training

# ========================================

# Perform one full pass over the training set.

print("")

print('======== Epoch {:} / {:} ========'.format(epoch_i + 1, epochs))

print('Training...')

# Measure how long the training epoch takes.

t0 = time.time()

# Reset the total loss for this epoch.

total_loss = 0

# Put the model into training mode. Don't be mislead--the call to

# `train` just changes the *mode*, it doesn't *perform* the training.

# `dropout` and `batchnorm` layers behave differently during training

# vs. test (source: https://stackoverflow.com/questions/51433378/what-does-model-train-do-in-pytorch)

model.train()

# For each batch of training data...

for step, batch in enumerate(train_dataloader):

# Progress update every 40 batches.

if step % 40 == 0 and not step == 0:

# Calculate elapsed time in minutes.

elapsed = format_time(time.time() - t0)

# Report progress.

print(' Batch {:>5,} of {:>5,}. Elapsed: {:}.'.format(step, len(train_dataloader), elapsed))

# Unpack this training batch from our dataloader.

#

# As we unpack the batch, we'll also copy each tensor to the GPU using the

# `to` method.

#

# `batch` contains three pytorch tensors:

# [0]: input ids

# [1]: attention masks

# [2]: labels

b_input_ids = batch[0].long().to(device)

b_input_mask = batch[1].to(device)

b_labels = batch[2].long().to(device)

# Always clear any previously calculated gradients before performing a

# backward pass. PyTorch doesn't do this automatically because

# accumulating the gradients is "convenient while training RNNs".

# (source: https://stackoverflow.com/questions/48001598/why-do-we-need-to-call-zero-grad-in-pytorch)

model.zero_grad()

# Perform a forward pass (evaluate the model on this training batch).

# This will return the loss (rather than the model output) because we

# have provided the `labels`.

# The documentation for this `model` function is here:

# https://huggingface.co/transformers/v2.2.0/model_doc/bert.html#transformers.BertForSequenceClassification

outputs = model(b_input_ids,

token_type_ids=None,

attention_mask=b_input_mask,

labels=b_labels)

# The call to `model` always returns a tuple, so we need to pull the

# loss value out of the tuple.

loss = outputs[0]

# Accumulate the training loss over all of the batches so that we can

# calculate the average loss at the end. `loss` is a Tensor containing a

# single value; the `.item()` function just returns the Python value

# from the tensor.

total_loss += loss.item()

# Perform a backward pass to calculate the gradients.

loss.backward()

# Clip the norm of the gradients to 1.0.

# This is to help prevent the "exploding gradients" problem.

torch.nn.utils.clip_grad_norm_(model.parameters(), 1.0)

# Update parameters and take a step using the computed gradient.

# The optimizer dictates the "update rule"--how the parameters are

# modified based on their gradients, the learning rate, etc.

optimizer.step()

# Update the learning rate.

scheduler.step()

# Calculate the average loss over the training data.

avg_train_loss = total_loss / len(train_dataloader)

# Store the loss value for plotting the learning curve.

loss_values.append(avg_train_loss)

print("")

print(" Average training loss: {0:.2f}".format(avg_train_loss))

print(" Training epcoh took: {:}".format(format_time(time.time() - t0)))

# ========================================

# Validation

# ========================================

# After the completion of each training epoch, measure our performance on

# our validation set.

print("")

print("Running Validation...")

t0 = time.time()

# Put the model in evaluation mode--the dropout layers behave differently

# during evaluation.

model.eval()

# Tracking variables

eval_loss, eval_accuracy = 0, 0

nb_eval_steps, nb_eval_examples = 0, 0

# Evaluate data for one epoch

for batch in validation_dataloader:

# Add batch to GPU

batch = tuple(t.to(device) for t in batch)

# Unpack the inputs from our dataloader

b_input_ids, b_input_mask, b_labels = batch

# Telling the model not to compute or store gradients, saving memory and

# speeding up validation

with torch.no_grad():

# Forward pass, calculate logit predictions.

# This will return the logits rather than the loss because we have

# not provided labels.

# token_type_ids is the same as the "segment ids", which

# differentiates sentence 1 and 2 in 2-sentence tasks.

# The documentation for this `model` function is here:

# https://huggingface.co/transformers/v2.2.0/model_doc/bert.html#transformers.BertForSequenceClassification

outputs = model(b_input_ids,

token_type_ids=None,

attention_mask=b_input_mask)

# Get the "logits" output by the model. The "logits" are the output

# values prior to applying an activation function like the softmax.

logits = outputs[0]

# Move logits and labels to CPU

logits = logits.detach().cpu().numpy()

label_ids = b_labels.to('cpu').numpy()

# Calculate the accuracy for this batch of test sentences.

tmp_eval_accuracy = flat_accuracy(logits, label_ids)

# Accumulate the total accuracy.

eval_accuracy += tmp_eval_accuracy

# Track the number of batches

nb_eval_steps += 1

# Report the final accuracy for this validation run.

print(" Accuracy: {0:.2f}".format(eval_accuracy/nb_eval_steps))

print(" Validation took: {:}".format(format_time(time.time() - t0)))

print("")

print("Training complete!")

在这里可以看到如果用我的数据集,用16G的Tesla T4半小时还没跑完一个epoch…

如果你能顺利运行到这一步,恭喜你已经训练好了属于你的模型!

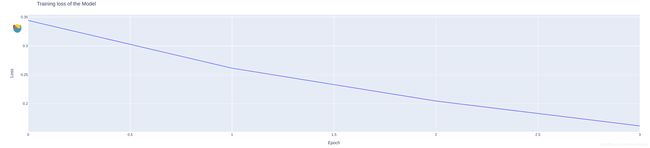

下面将模型的loss输出下:

import plotly.express as px

f = pd.DataFrame(loss_values)

f.columns=['Loss']

fig = px.line(f, x=f.index, y=f.Loss)

fig.update_layout(title='Training loss of the Model',

xaxis_title='Epoch',

yaxis_title='Loss')

fig.show()

test数据集进行模型评估!

首先如果你用的是Google colab,那肯定要先上传一个test.tsv文件,然后按照下面的代码进行读取,其实数据处理的过程跟上面的一样,代码都差不多,在这里就不多赘述了

import pandas as pd

# Load the dataset into a pandas dataframe.

df = pd.read_csv("comment_test.csv", header=None, names=['label', 'sentence'])

# Report the number of sentences.

df = df.drop([0])

df = df.dropna(axis=0, how='any')

df = df[df['label'].isin(["1","0"])]

print(df)

print('Number of training sentences: {:,}\n'.format(df.shape[0]))

print('Number of test sentences: {:,}\n'.format(df.shape[0]))

# Create sentence and label lists

sentences = df.sentence.values

labels = np.array(df.label.values,dtype=np.int32)

# Tokenize all of the sentences and map the tokens to thier word IDs.

input_ids = []

# For every sentence...

for sent in sentences:

# `encode` will:

# (1) Tokenize the sentence.

# (2) Prepend the `[CLS]` token to the start.

# (3) Append the `[SEP]` token to the end.

# (4) Map tokens to their IDs.

encoded_sent = tokenizer.encode(

sent, # Sentence to encode.

add_special_tokens = True, # Add '[CLS]' and '[SEP]'

)

input_ids.append(encoded_sent)

# Pad our input tokens

input_ids = pad_sequences(input_ids, maxlen=MAX_LEN,

dtype="long", truncating="post", padding="post")

# Create attention masks

attention_masks = []

# Create a mask of 1s for each token followed by 0s for padding

for seq in input_ids:

seq_mask = [float(i>0) for i in seq]

attention_masks.append(seq_mask)

# Convert to tensors.

prediction_inputs = torch.tensor(input_ids)

prediction_masks = torch.tensor(attention_masks)

prediction_labels = torch.tensor(labels)

# Set the batch size.

batch_size = 32

# Create the DataLoader.

prediction_data = TensorDataset(prediction_inputs, prediction_masks, prediction_labels)

prediction_sampler = SequentialSampler(prediction_data)

prediction_dataloader = DataLoader(prediction_data, sampler=prediction_sampler, batch_size=batch_size)

test数据预处理完成后,开始测试验证

# Prediction on test set

print('Predicting labels for {:,} test sentences...'.format(len(prediction_inputs)))

# Put model in evaluation mode

model.eval()

# Tracking variables

predictions , true_labels = [], []

# Predict

for batch in prediction_dataloader:

# Add batch to GPU

batch = tuple(t.to(device) for t in batch)

# Unpack the inputs from our dataloader

b_input_ids, b_input_mask, b_labels = batch

# Telling the model not to compute or store gradients, saving memory and

# speeding up prediction

with torch.no_grad():

# Forward pass, calculate logit predictions

outputs = model(b_input_ids, token_type_ids=None,

attention_mask=b_input_mask)

logits = outputs[0]

# Move logits and labels to CPU

logits = logits.detach().cpu().numpy()

label_ids = b_labels.to('cpu').numpy()

# Store predictions and true labels

predictions.append(logits)

true_labels.append(label_ids)

print('DONE.')

print('Positive samples: %d of %d (%.2f%%)' % (df.label.sum(), len(df.label), (df.label.sum() / len(df.label) * 100.0)))

在它的代码里面,我们验证的数据放在了两个列表里面,其实原作者使用了一个更高级的验证函数:mcc验证,原理我就不多讲了,简单概括就是:越接近1,分类器越牛逼,越接近0,分类器越垃圾…

from sklearn.metrics import matthews_corrcoef

matthews_set = []

# Evaluate each test batch using Matthew's correlation coefficient

print('Calculating Matthews Corr. Coef. for each batch...')

# For each input batch...

for i in range(len(true_labels)):

# The predictions for this batch are a 2-column ndarray (one column for "0"

# and one column for "1"). Pick the label with the highest value and turn this

# in to a list of 0s and 1s.

pred_labels_i = np.argmax(predictions[i], axis=1).flatten()

# Calculate and store the coef for this batch.

matthews = matthews_corrcoef(true_labels[i], pred_labels_i)

matthews_set.append(matthews)

# Combine the predictions for each batch into a single list of 0s and 1s.

flat_predictions = [item for sublist in predictions for item in sublist]

flat_predictions = np.argmax(flat_predictions, axis=1).flatten()

# Combine the correct labels for each batch into a single list.

flat_true_labels = [item for sublist in true_labels for item in sublist]

# Calculate the MCC

mcc = matthews_corrcoef(flat_true_labels, flat_predictions)

print('MCC: %.3f' % mcc)

其实对于我来说,只关心它准确率多少,也就是对了多少个,让我们来输出下:

count = 0

for i in range(len(flat_true_labels)):

if int(flat_predictions[i]) == int(flat_true_labels[i]):

count +=1

print(count, "正确率: " count/len(flat_true_labels))

在本次案例中,正确率为74%,MCC为0.53。数字看起来并不惊人。但是要知道这个数据集是自己准备的,而且没有经过任何的调参和优化,test数据集也有些偏差,它能做到这一步我已经非常吃惊,下次可以看看XLnet之类的,看看有没有更好的效果。

总结

入坑NLP也有几个月了,在几个月的断断续续的学习摸索中,从SVM到RNN,再到LSTM再到BERT,一步步基本也是踩着NLP的步伐过来的。网上说原理的很多,懂也能看懂,但对于一个入门的人来说,它最先关心的可能不是原理,不是背后用了多少高深的技术,而是到底先怎么用。只有弄清楚了怎么用,再回头看原理这样反而更加有助于学习实践,要不然所有原理都懂了,成了懂王,一敲代码啥都不会,不仅信心全无反而会导致厌恶。

记得有个博客讲的很好,很多高校都是在你什么都不懂得时候,拼命给你灌原理,仿佛所有的公式理论都懂了你就能徒手写bert。这种自底向上的学习对初学者来说反而是最晦涩而且致命的。而编程和技术恰好相反,你可以啥都不知道就开始上手干活,等学会用了,再回头看原理和实现,就会发现很多东西都触类旁通,这也是一种自顶向下的学习方法,就我个人看来,编码这类技术活,就应该这样。

源博客:

使用bert进行英文文本分类