PyG Colab Notebooks 1. Introduction: Hands-on Graph Neural Networks

Original notebook (a VPN may be required to access it):

https://colab.research.google.com/drive/1h3-vJGRVloF5zStxL5I0rSy4ZUPNsjy8?usp=sharing&pli=1#scrollTo=AkQAVluLuxT_

Visualization code

# Install required packages.
import os
import torch

os.environ['TORCH'] = torch.__version__
print(torch.__version__)

!pip install -q torch-scatter -f https://data.pyg.org/whl/torch-${TORCH}.html
!pip install -q torch-sparse -f https://data.pyg.org/whl/torch-${TORCH}.html
!pip install -q git+https://github.com/pyg-team/pytorch_geometric.git

# Helper function for visualization.
%matplotlib inline
import networkx as nx
import matplotlib.pyplot as plt


def visualize_graph(G, color):
    plt.figure(figsize=(7, 7))  # Set the figure size to 7x7 inches.
    plt.xticks([])  # Hide the axis ticks and tick labels.
    plt.yticks([])
    nx.draw_networkx(G, pos=nx.spring_layout(G, seed=42), with_labels=False,
                     node_color=color, cmap="Set2")
    plt.show()


def visualize_embedding(h, color, epoch=None, loss=None):
    plt.figure(figsize=(7, 7))
    plt.xticks([])
    plt.yticks([])
    h = h.detach().cpu().numpy()  # Detach from autograd and convert to NumPy.
    plt.scatter(h[:, 0], h[:, 1], s=140, c=color, cmap="Set2")
    if epoch is not None and loss is not None:
        plt.xlabel(f'Epoch: {epoch}, Loss: {loss.item():.4f}', fontsize=16)
    plt.show()

Using networkx.draw_networkx:

draw_networkx(G, pos=None, arrows=None, with_labels=True, **kwds)

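Parameters:

pos: optional dictionary with nodes as keys and positions as values. It determines where each node is drawn; if it is omitted, a spring layout is computed.

Layout functions: networkx offers several layouts (random, bipartite, and others). spring_layout positions the nodes automatically with the Fruchterman-Reingold (FR) force-directed algorithm.

seed=42: the value itself is arbitrary. Fixing the seed makes the layout reproducible across runs.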
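Force-directed layouts such as spring_layout start from random initial positions when none are supplied, which is why the seed matters. The sketch below illustrates only that idea using the standard library. `random_initial_positions` is a hypothetical helper written for this note, not part of networkx.

```python
import random


def random_initial_positions(nodes, seed=None):
    """Assign each node a random (x, y) start position in the unit square."""
    rng = random.Random(seed)  # Private generator, so global state is untouched.
    return {node: (rng.random(), rng.random()) for node in nodes}


nodes = range(5)
first = random_initial_positions(nodes, seed=42)
second = random_initial_positions(nodes, seed=42)
other = random_initial_positions(nodes, seed=7)

print(first == second)  # Same seed: identical starting positions.
print(first == other)   # Different seed: different starting positions.
```

With the same seed the starting point is identical, so a deterministic layout procedure run from it yields the same picture every time.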

Loading the dataset

from torch_geometric.datasets import KarateClub

dataset = KarateClub()
print(f'Dataset: {dataset}:')
print('======================')
print(f'Number of graphs: {len(dataset)}')
print(f'Number of features: {dataset.num_features}')
print(f'Number of classes: {dataset.num_classes}')

KarateClub is the well-known Zachary's karate club dataset. It contains a single graph, so len(dataset) = 1. Each node has a 34-dimensional feature vector, and the nodes are divided into four classes according to the community they belong to within the club.

Inspecting the data

data = dataset[0]  # Get the first graph object.

print(data)
print('==============================================================')

# Gather some statistics about the graph.
print(f'Number of nodes: {data.num_nodes}')
print(f'Number of edges: {data.num_edges}')
print(f'Average node degree: {data.num_edges / data.num_nodes:.2f}')
print(f'Number of training nodes: {data.train_mask.sum()}')
print(f'Training node label rate: {int(data.train_mask.sum()) / data.num_nodes:.2f}')
print(f'Has isolated nodes: {data.has_isolated_nodes()}')
print(f'Has self-loops: {data.has_self_loops()}')
print(f'Is undirected: {data.is_undirected()}')

The output of print(data) is: Data(x=[34, 34], edge_index=[2, 156], y=[34], train_mask=[34])

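x holds the 34 nodes, each with a 34-dimensional feature vector. edge_index describes the graph connectivity, i.e. the adjacency matrix in sparse form, stored in COO (coordinate) format: a [2, num_edges] tensor whose columns are (source, target) pairs. y holds the node labels.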
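To make the COO layout concrete, here is a small standard-library sketch. It assumes, as is the case for an undirected PyG graph, that every undirected edge is stored twice, once per direction; that is why the karate club's 78 friendships show up as 156 entries. `to_coo` is a hypothetical helper for illustration, not a PyG function.

```python
def to_coo(undirected_edges):
    """Build a COO edge index (two parallel rows) from undirected edges.

    Each undirected edge {u, v} is stored twice, as (u, v) and (v, u).
    """
    sources, targets = [], []
    for u, v in undirected_edges:
        sources += [u, v]
        targets += [v, u]
    return [sources, targets]


# A triangle plus one pendant node: 4 undirected edges.
edges = [(0, 1), (1, 2), (2, 0), (2, 3)]
edge_index = to_coo(edges)
num_nodes = 4

print(edge_index[0])  # Source node of every directed entry.
print(edge_index[1])  # Target node of every directed entry.
print(len(edge_index[0]) / num_nodes)  # Average degree, as in the statistics above.
```

The same ratio applied to the real data, 156 / 34, gives the average node degree of about 4.59 printed by the statistics code.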

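train_mask marks the nodes used for training. It is the only mask in the output above, so KarateClub provides no validation or test split; its training set is one labeled node per class, which the "Number of training nodes" line prints. Larger datasets such as Cora additionally provide val_mask (nodes used for validation, e.g. for early stopping) and test_mask (nodes used for testing). The figures of 140 training, 500 validation and 1000 test nodes belong to Cora's standard split, not to this 34-node graph.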

Visualizing the graph

from torch_geometric.utils import to_networkx

G = to_networkx(data, to_undirected=True)
visualize_graph(G, color=data.y)

Using torch_geometric.utils.to_networkx:

to_networkx(data, node_attrs=None, edge_attrs=None, graph_attrs=None, to_undirected: Union[bool, str] = False, remove_self_loops: bool = False)

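Purpose: converts a torch_geometric.data.Data object into a networkx graph. With to_undirected=True the result is an undirected networkx.Graph; otherwise it is a directed networkx.DiGraph.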

Building the GCN model

import torch

from torch.nn import Linear

from torch_geometric.nn import GCNConv

class GCN(torch.nn.Module):

def __init__(self):

super().__init__()

torch.manual_seed(1234)

# torch.manual_seed:设置CPU生成随机数的种子,方便下次复现实验结果

self.conv1 = GCNConv(dataset.num_features, 4)

self.conv2 = GCNConv(4, 4)

self.conv3 = GCNConv(4, 2)

self.classifier = Linear(2, dataset.num_classes)

def forward(self, x, edge_index):

h = self.conv1(x, edge_index)

h = h.tanh()

h = self.conv2(h, edge_index)

h = h.tanh()

h = self.conv3(h, edge_index)

h = h.tanh() # Final GNN embedding space.

# Apply a final (linear) classifier.

out = self.classifier(h)

return out, h

model = GCN()

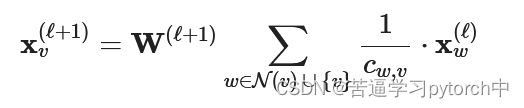

print(model)GCN层公式原理:
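For reference, the GCN layer of Kipf and Welling is commonly written as below. The notation is the standard one and is an assumption here; it may differ from the symbols used in the figure.

```latex
\mathbf{x}_v^{(\ell+1)} = \mathbf{W}^{(\ell+1)} \sum_{w \in \mathcal{N}(v) \,\cup\, \{v\}} \frac{1}{c_{w,v}} \, \mathbf{x}_w^{(\ell)}
```

Here $\mathbf{x}_v^{(\ell)}$ is the feature vector of node $v$ at layer $\ell$, $\mathcal{N}(v)$ is the set of neighbors of $v$, $\mathbf{W}^{(\ell+1)}$ is a trainable weight matrix, and $c_{w,v}$ is a fixed normalization coefficient for each edge, typically $\sqrt{\deg(w)}\sqrt{\deg(v)}$ with self-loops counted.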

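The model stacks three GCN layers, so each node aggregates information from its 3-hop neighborhood. The GCN layers also reduce the node feature dimension to 2 (34 --> 4 --> 4 --> 2). Finally, a linear layer acts as the classifier and assigns every node to one of the four classes.

The model returns the classifier output, a matrix of shape [34, 4], together with the matrix of node embeddings, which has shape [34, 2].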
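The aggregation a single GCN layer performs can be sketched in plain Python. This is a minimal illustration, not PyG's implementation: it assumes the usual GCN setup of added self-loops and symmetric degree normalization, and it leaves out the bias term and the tanh activation. `gcn_layer` and the toy graph are hypothetical.

```python
import math


def gcn_layer(features, undirected_edges, weight):
    """One GCN step with self-loops and symmetric degree normalization.

    features: per-node feature lists, shape [num_nodes, in_dim]
    weight:   list of lists, shape [in_dim, out_dim] (no bias, no activation)
    """
    num_nodes = len(features)
    in_dim, out_dim = len(weight), len(weight[0])

    # Neighborhood of each node, including the node itself (self-loop).
    neighbors = {v: {v} for v in range(num_nodes)}
    for u, v in undirected_edges:
        neighbors[u].add(v)
        neighbors[v].add(u)
    degree = {v: len(neighbors[v]) for v in range(num_nodes)}

    output = []
    for v in range(num_nodes):
        # Normalized sum of the neighbors' features.
        aggregated = [0.0] * in_dim
        for w in neighbors[v]:
            coeff = 1.0 / math.sqrt(degree[v] * degree[w])
            for k in range(in_dim):
                aggregated[k] += coeff * features[w][k]
        # Linear transform of the aggregated vector.
        output.append([
            sum(aggregated[k] * weight[k][j] for k in range(in_dim))
            for j in range(out_dim)
        ])
    return output


# Path graph 0 - 1 - 2 with one-hot features and a 3 -> 2 weight matrix.
features = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
hidden = gcn_layer(features, [(0, 1), (1, 2)], weight)

for row in hidden:
    print([round(value, 3) for value in row])
```

Each call mixes in information from direct neighbors only, so stacking three such layers, as the model does, lets information travel up to three hops.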

Visualizing the reduced-dimension embeddings

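model = GCN()

# `_` is a conventional throwaway name: the classifier output is not needed here.
_, h = model(data.x, data.edge_index)
print(f'Embedding shape: {list(h.shape)}')

visualize_embedding(h, color=data.y)

Even though the model has not been trained yet, the 2-dimensional embeddings already cluster by community. This shows that GNNs introduce a strong inductive bias, leading to similar embeddings for nodes that are close to each other in the input graph.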

Training on the Karate Club network

import time
from IPython.display import Javascript, display

# Restrict height of output cell.
display(Javascript('''google.colab.output.setIframeHeight(0, true, {maxHeight: 430})'''))

model = GCN()
criterion = torch.nn.CrossEntropyLoss()  # Define loss criterion.
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)  # Define optimizer.


def train(data):
    optimizer.zero_grad()  # Clear gradients.
    out, h = model(data.x, data.edge_index)  # Perform a single forward pass.
    # Compute the loss solely based on the training nodes.
    loss = criterion(out[data.train_mask], data.y[data.train_mask])
    loss.backward()  # Derive gradients.
    optimizer.step()  # Update parameters based on gradients.
    return loss, h


for epoch in range(401):
    loss, h = train(data)
    if epoch % 10 == 0:
        visualize_embedding(h, color=data.y, epoch=epoch, loss=loss)
        time.sleep(0.3)

Here the loss function is CrossEntropyLoss and the optimizer is Adam.

Each training step follows the usual sequence: zero the parameter gradients, run the forward pass, run the backward pass, then take an optimizer step.
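The loss in train() is computed only on the nodes selected by train_mask. The standard-library sketch below shows what that amounts to: softmax cross-entropy on raw logits, averaged over the masked rows, which matches the default mean reduction of CrossEntropyLoss. `masked_cross_entropy` and the toy numbers are hypothetical and only illustrate the idea.

```python
import math


def masked_cross_entropy(logits, labels, mask):
    """Mean softmax cross-entropy over the rows where mask is True."""
    losses = []
    for row, label, keep in zip(logits, labels, mask):
        if not keep:
            continue  # Unlabeled nodes contribute nothing to the loss.
        # Log-sum-exp with the max subtracted for numerical stability.
        top = max(row)
        log_norm = top + math.log(sum(math.exp(z - top) for z in row))
        losses.append(log_norm - row[label])
    return sum(losses) / len(losses)


# Three nodes, two classes; only nodes 0 and 2 are labeled for training.
logits = [[2.0, 0.0], [0.0, 5.0], [0.0, 0.0]]
labels = [0, 0, 1]
mask = [True, False, True]

loss = masked_cross_entropy(logits, labels, mask)
print(round(loss, 4))
```

Node 1 is confidently wrong in this toy example, yet it does not affect the loss because it is masked out. The unlabeled nodes still influence training indirectly, since their features flow into the labeled nodes through message passing.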