torch.optim.lr_scheduler.CosineAnnealingWarmRestarts and OneCycleLR: definition and usage

PyTorch provides several cosine-annealing learning-rate schedulers, including OneCycleLR, CosineAnnealingLR, and CosineAnnealingWarmRestarts.

Definition of CosineAnnealingWarmRestarts (cosine annealing with warm restarts)

torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(optimizer, T_0, T_mult=1, eta_min=0, last_epoch=-1, verbose=False)

CosineAnnealingWarmRestarts parameters:

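- optimizer (Optimizer) – Wrapped optimizer.

- T_0 (int) – Number of iterations for the first restart, i.e. the point at which the learning rate first jumps back to its initial value.

- T_mult (int, optional) – A factor that increases T_{i} after a restart. "mult" presumably stands for "multiply": with T_mult=2 the period doubles after every restart, so if the first period is 1, the second is 2 and the third is 4. Default: 1.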

- eta_min (float, optional) – Minimum learning rate. Default: 0.

- last_epoch (int, optional) – The index of last epoch. Default: -1.

- verbose (bool) – If True, prints a message to stdout for each update. Default: False.
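The parameters above combine into a simple closed form. The following is a minimal sketch in plain Python (no PyTorch required), assuming the scheduler is stepped once per unit of time and starts at the optimizer's base learning rate. `warm_restart_lr` is a hypothetical helper written for illustration, not a library function.

```python
import math

def warm_restart_lr(step, base_lr=0.1, T_0=5, T_mult=2, eta_min=0.0):
    """Learning rate after `step` scheduler steps (illustrative closed form)."""
    T_i, T_cur = T_0, step
    # Skip over completed cycles; each new cycle is T_mult times longer.
    while T_cur >= T_i:
        T_cur -= T_i
        T_i *= T_mult
    # Cosine decay from base_lr (T_cur = 0) towards eta_min (T_cur -> T_i).
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(math.pi * T_cur / T_i))

# First 16 values for T_0=5, T_mult=2: the rate resets at steps 5 and 15.
lrs = [warm_restart_lr(s) for s in range(16)]
for s, lr in enumerate(lrs):
    print(s, round(lr, 5))
```

With these settings the first cycle lasts 5 steps and the second lasts 10, so the learning rate is back at `base_lr` at steps 0, 5, and 15.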

Using CosineAnnealingWarmRestarts

After the scheduler is initialized, call scheduler.step() inside the batch loop.
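Because scheduler.step() is called once per batch, T_0 is counted in batches, not epochs. The sketch below, in plain Python, compares where the restarts fall for T_0=5 batches against T_0 equal to 5 epochs' worth of batches. It uses the loop sizes from the example that follows. `restart_steps` is a hypothetical helper for illustration only.

```python
def restart_steps(T_0, T_mult, total_steps):
    """Step indices at which the learning rate jumps back to its initial value."""
    restarts, boundary, cycle_len = [], T_0, T_0
    while boundary < total_steps:
        restarts.append(boundary)
        cycle_len *= T_mult      # the next cycle is T_mult times longer
        boundary += cycle_len    # end of the next cycle
    return restarts

max_epoch, iters = 50, 200
total_steps = max_epoch * iters

# T_0 = 5 with one step() per batch: the first restart comes after 5 batches.
per_batch = restart_steps(T_0=5, T_mult=2, total_steps=total_steps)
# T_0 = 5 epochs expressed in batches: the first restart comes after 1000 batches.
per_epoch = restart_steps(T_0=5 * iters, T_mult=2, total_steps=total_steps)

print(per_batch)
print(per_epoch)
```

If the intent is for each cycle to span whole epochs, T_0 needs to be scaled by the number of batches per epoch, or the scheduler stepped once per epoch instead.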

import torch

from torch.optim.lr_scheduler import CosineAnnealingLR, CosineAnnealingWarmRestarts,StepLR, OneCycleLR

import torch.nn as nn

from torchvision.models import resnet18

import matplotlib.pyplot as plt

model = resnet18(pretrained=False)

optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

mode = 'cosineAnnWarm'

#mode = 'cosineAnn'

if mode == 'cosineAnn':

scheduler = CosineAnnealingLR(optimizer, T_max=5, eta_min=0.001)

elif mode == 'cosineAnnWarm':

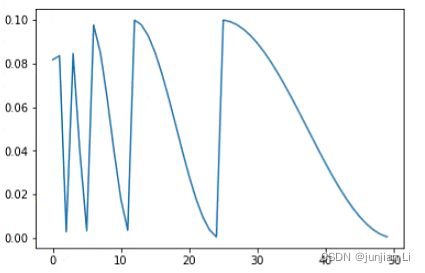

scheduler = CosineAnnealingWarmRestarts(optimizer, T_0=5, T_mult=2)

plt.figure()

max_epoch = 50

iters = 200

cur_lr_list = []

for epoch in range(max_epoch):

for batch in range(iters):

optimizer.step()

scheduler.step()

cur_lr = optimizer.param_groups[-1]['lr']

cur_lr_list.append(cur_lr)

print('Cur lr:', cur_lr)

x_list = list(range(len(cur_lr_list)))

plt.plot(x_list, cur_lr_list)

plt.show()OneCycleLR使用

Requires PyTorch >= 1.7.

mode = 'OneCycleLR'

#mode = 'cosineAnn'

max_epoch = 500

iters = 200

if mode == 'cosineAnn':

scheduler = CosineAnnealingLR(optimizer, T_max=5, eta_min=0.001)

elif mode == 'cosineAnnWarm':

scheduler = CosineAnnealingWarmRestarts(optimizer, T_0=5, T_mult=2)

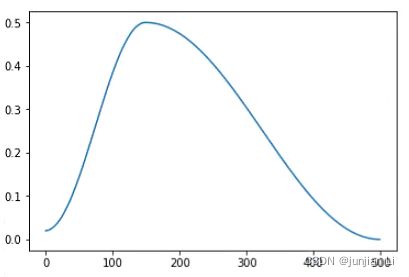

elif mode == 'OneCycleLR':

scheduler = OneCycleLR(optimizer, max_lr=0.5, steps_per_epoch=iters, epochs=max_epoch, pct_start=o.3)

plt.figure()

cur_lr_list = []

for epoch in range(max_epoch):

for batch in range(iters):

optimizer.step()

scheduler.step()

cur_lr = optimizer.param_groups[-1]['lr']

cur_lr_list.append(cur_lr)

#print('Cur lr:', cur_lr)

x_list = list(range(len(cur_lr_list)))

plt.plot(x_list, cur_lr_list)

plt.show()在目前的pytorch1.9版本中新添加了一个three_phase参数,当这个three_phase=True

scheduler=torch.optim.lr_scheduler.OneCycleLR(optimizer,max_lr=0.9,steps_per_epoch=iters, epochs=max_epoch, pct_start=o.3,three_phase=True)得到的学习率变成下图:对称+陡降
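To make the difference between the two-phase and three-phase shapes concrete, here is a rough plain-Python sketch of the schedule as I understand it, using cosine annealing and the documented defaults div_factor=25 and final_div_factor=1e4. `one_cycle_lr` and `_cos_anneal` are hypothetical helpers for illustration; the phase boundaries are an assumption modelled on the library's behaviour and are not guaranteed to match it step for step.

```python
import math

def _cos_anneal(start, end, pct):
    """Cosine interpolation from start (pct=0) to end (pct=1)."""
    return end + (start - end) / 2.0 * (math.cos(math.pi * pct) + 1)

def one_cycle_lr(step, total_steps, max_lr, pct_start=0.3, three_phase=False,
                 div_factor=25.0, final_div_factor=1e4):
    """Approximate OneCycleLR learning rate at a given step (illustration only)."""
    initial_lr = max_lr / div_factor
    min_lr = initial_lr / final_div_factor
    up_end = pct_start * total_steps - 1
    if three_phase:
        # Rise to max_lr, fall back symmetrically to initial_lr, then drop to min_lr.
        phases = [(up_end, initial_lr, max_lr),
                  (2 * pct_start * total_steps - 2, max_lr, initial_lr),
                  (total_steps - 1, initial_lr, min_lr)]
    else:
        # Rise to max_lr, then anneal all the way down to min_lr.
        phases = [(up_end, initial_lr, max_lr),
                  (total_steps - 1, max_lr, min_lr)]
    start_step = 0.0
    for i, (end_step, start_lr, end_lr) in enumerate(phases):
        if step <= end_step or i == len(phases) - 1:
            pct = (step - start_step) / (end_step - start_step)
            return _cos_anneal(start_lr, end_lr, pct)
        start_step = end_step

total = 100
two = [one_cycle_lr(s, total, max_lr=0.9) for s in range(total)]
three = [one_cycle_lr(s, total, max_lr=0.9, three_phase=True) for s in range(total)]
print(max(two), max(three))   # both peak at max_lr
print(two[58], three[58])     # three-phase is already back at initial_lr here
```

In this sketch the three-phase curve is mirror-symmetric around its peak and spends the remaining steps decaying from the initial rate to the minimum, which matches the "symmetric plus steep drop" description.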

Adapted from: https://blog.csdn.net/qq_30129009/article/details/121732567