Deploying YOLOv5_v5.0 + TensorRT 8 on Jetson AGX Orin

I. First, sorting out the approach

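① After flashing, the Orin comes with CUDA 11.4 + cuDNN 8.3.2, so the deployment environment that follows can only be TensorRT 8.x (this is dictated by the CUDA and cuDNN versions; for installation see: III. TensorRT acceleration and optimization (1))

② The TensorRT deployment needs matching versions. As far as I could see, the newest YOLOv5 model supported by tensorrtx is v5.0, so the newest choice for step ③ is YOLOv5_v5.0. Download: (GitHub - wang-xinyu/tensorrtx at yolov5-v5.0)

③ Download YOLOv5_v5.0: (https://github.com/ultralytics/yolov5/tree/v5.0)

④ Download a .pt weights file. There are yolov5s.pt / yolov5l.pt / yolov5m.pt and others; here I download yolov5s.pt (matching the YOLOv5_v5.0 above). Download: https://github.com/ultralytics/yolov5/releases/download/v5.0/yolov5s.pt

⑤ OpenCV is required. I installed opencv-4.6.0: A concise guide to installing OpenCV 4.6.0 on Jetson AGX Orin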
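Since everything else hinges on the versions listed above, it can be worth confirming them before going further. The snippet below is only a minimal sketch: `parse_nvcc_release` and `major` are helper names I made up for illustration, and the sample line merely imitates the format printed by `nvcc --version` rather than being captured from my board. The one library attribute it relies on is `tensorrt.__version__`.

```python
import re


def parse_nvcc_release(nvcc_output):
    """Return the CUDA release string (e.g. '11.4') found in `nvcc --version` text."""
    match = re.search(r"release\s+(\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def major(version):
    """Return the major component of a dotted version string as an int."""
    return int(version.split(".")[0])


# Illustrative line in the format printed by `nvcc --version` (assumed values).
sample_nvcc_line = "Cuda compilation tools, release 11.4, V11.4.315"
cuda_release = parse_nvcc_release(sample_nvcc_line)
print("CUDA release:", cuda_release)

# The TensorRT Python bindings expose their version as tensorrt.__version__.
try:
    import tensorrt
    trt_version = tensorrt.__version__
except ImportError:
    trt_version = None

if trt_version is None:
    print("tensorrt Python package is not importable in this environment")
elif major(trt_version) != 8:
    print("Unexpected TensorRT major version:", trt_version)
else:
    print("TensorRT", trt_version, "matches the 8.x requirement")
```

On the Orin itself, the text passed to `parse_nvcc_release` would come from actually running `nvcc --version`.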

II. Reference steps from GitHub

1. generate .wts from pytorch with .pt, or download .wts from model zoo

git clone -b v5.0 https://github.com/ultralytics/yolov5.git

git clone -b yolov5-v5.0 https://github.com/wang-xinyu/tensorrtx.git

// download https://github.com/ultralytics/yolov5/releases/download/v5.0/yolov5s.pt

cp {tensorrtx}/yolov5/gen_wts.py {ultralytics}/yolov5

cd {ultralytics}/yolov5

python gen_wts.py -w yolov5s.pt -o yolov5s.wts

// a file 'yolov5s.wts' will be generated.

2. build tensorrtx/yolov5 and run

cd {tensorrtx}/yolov5/

// update CLASS_NUM in yololayer.h if your model is trained on custom dataset

mkdir build

cd build

cp {ultralytics}/yolov5/yolov5s.wts {tensorrtx}/yolov5/build

cmake ..

make

sudo ./yolov5 -s [.wts] [.engine] [s/m/l/x/s6/m6/l6/x6 or c/c6 gd gw] // serialize model to plan file

sudo ./yolov5 -d [.engine] [image folder] // deserialize and run inference, the images in [image folder] will be processed.

// For example yolov5s

sudo ./yolov5 -s yolov5s.wts yolov5s.engine s

sudo ./yolov5 -d yolov5s.engine ../samples

// For example Custom model with depth_multiple=0.17, width_multiple=0.25 in yolov5.yaml

sudo ./yolov5 -s yolov5_custom.wts yolov5.engine c 0.17 0.25

sudo ./yolov5 -d yolov5.engine ../samples

3. check the images generated, as follows: _zidane.jpg and _bus.jpg
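The last argument of the `-s` commands in step 2 is easy to get wrong: the stock models take only a letter, while `c`/`c6` must be followed by the depth multiple (gd) and width multiple (gw). Here is a rough sketch of that rule. `serialize_args` is a hypothetical helper of mine, not part of tensorrtx, and the multiples table is what I understand the stock yolov5s/m/l/x .yaml files to contain.

```python
# depth_multiple / width_multiple of the stock models (assumed from the YOLOv5 .yaml files).
STOCK_MULTIPLES = {
    "s": (0.33, 0.50),
    "m": (0.67, 0.75),
    "l": (1.00, 1.00),
    "x": (1.33, 1.25),
}

STOCK_TYPES = {"s", "m", "l", "x", "s6", "m6", "l6", "x6"}
CUSTOM_TYPES = {"c", "c6"}


def serialize_args(wts, engine, model, gd=None, gw=None):
    """Build the argument list for `./yolov5 -s ...` (hypothetical helper)."""
    if model in STOCK_TYPES:
        # Stock models: the binary knows gd/gw from the letter alone.
        return ["./yolov5", "-s", wts, engine, model]
    if model in CUSTOM_TYPES:
        if gd is None or gw is None:
            raise ValueError("custom models need both gd and gw")
        return ["./yolov5", "-s", wts, engine, model, str(gd), str(gw)]
    raise ValueError(f"unknown model type: {model}")


# The two examples from the steps above.
print(" ".join(serialize_args("yolov5s.wts", "yolov5s.engine", "s")))
print(" ".join(serialize_args("yolov5_custom.wts", "yolov5.engine", "c", 0.17, 0.25)))
```

The returned list could be handed to `subprocess.run` from inside the build directory; I left `sudo` out so the sketch has no side effects.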

4. optional, load and run the tensorrt model in python

// install python-tensorrt, pycuda, etc.

// ensure the yolov5s.engine and libmyplugins.so have been built

python yolov5_trt.py

III. The actual deployment process

1. Following part I above, prepare the three required items:

① tensorrtx/yolov5 (the yolov5 folder inside the tensorrtx directory)

② yolov5s.pt

③ yolov5-5.0.zip, extracted to yolov5-5.0

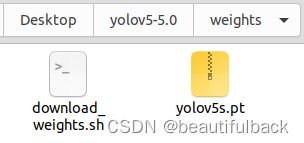

2. Put yolov5s.pt into the yolov5-5.0/weights folder, for example:

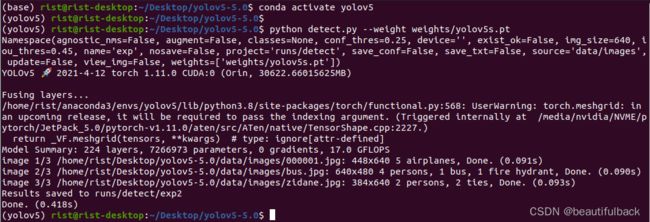

3. Activate the virtual environment previously used to run YOLOv5 detection (see (2)/(3)/(4) of the first major section)

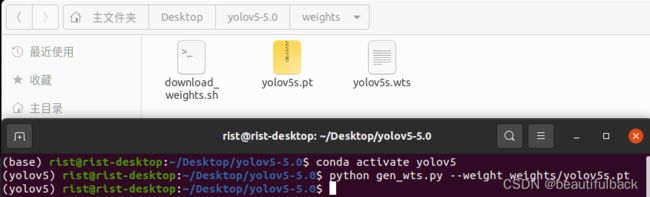

4. Copy tensorrtx/yolov5/gen_wts.py into the yolov5-5.0 folder and run the command below to generate yolov5s.wts

python gen_wts.py --weight weights/yolov5s.pt

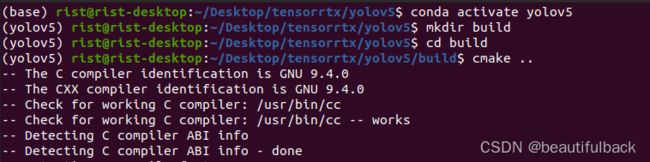
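Before moving on, the generated .wts file can be sanity-checked. The sketch below assumes the text layout that, as far as I can tell, gen_wts.py writes: a first line with the number of tensors, then one line per tensor of the form `name count hex hex ...`, where each value is a big-endian float32 in hex. `summarize_wts` is a name I made up, and the example data is hand-made rather than real weights.

```python
import struct


def summarize_wts(lines):
    """Validate .wts text lines and return {tensor_name: value_count}."""
    rows = iter(lines)
    declared = int(next(rows).strip())  # first line: number of tensors
    summary = {}
    for row in rows:
        parts = row.split()
        if not parts:
            continue  # skip blank lines
        name, count, hex_values = parts[0], int(parts[1]), parts[2:]
        if len(hex_values) != count:
            raise ValueError(f"{name}: header says {count} values, found {len(hex_values)}")
        for h in hex_values:
            # Each value must decode as 4 bytes of big-endian float32.
            struct.unpack(">f", bytes.fromhex(h))
        summary[name] = count
    if len(summary) != declared:
        raise ValueError(f"file declares {declared} tensors, found {len(summary)}")
    return summary


# Tiny hand-made example with two fake tensors (not real YOLOv5 weights).
example = [
    "2\n",
    "conv.weight 2 3f800000 40000000\n",
    "conv.bias 1 00000000\n",
]
print(summarize_wts(example))
```

For the real file, the same function can be fed an open file handle, for example `with open("yolov5s.wts") as f: summarize_wts(f)`. Expect that to take a while, since yolov5s has several million values.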

5. Then go into tensorrtx/yolov5 and do the following:

mkdir build

cd build

cmake ..

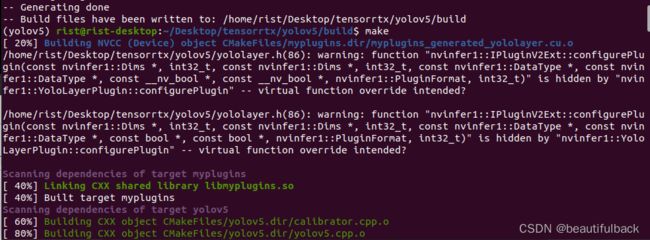

make

Once this step finishes, a file named yolov5 (the executable) is generated

6. Copy the yolov5s.wts file generated in step 4 into tensorrtx/yolov5/build


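7. Run the command below to generate the engine file

sudo ./yolov5 -s yolov5s.wts yolov5s.engine s

8. Test after deployment

sudo ./yolov5 -d yolov5s.engine ../samples

9. Finally, compare to see whether inference actually got faster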

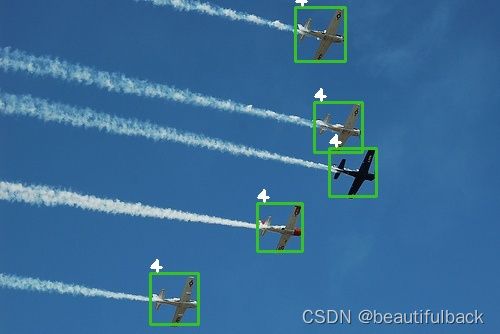

Pick 3 images to run detection on and put them under tensorrtx/yolov5/samples/

The 3 images used for detection


① First, detection run directly with the YOLOv5 model, without any training (before TensorRT deployment)

000001.jpg :91ms

bus.jpg :90ms

zidane.jpg :93ms

② Results after TensorRT deployment

000001.jpg :10ms

bus.jpg :15ms

zidane.jpg :12ms

So TensorRT deployment clearly cuts detection time: from roughly 90 ms per image down to 10 to 15 ms.
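To put a number on the improvement, the arithmetic on the timings listed above can be written out. This is just a quick sketch on my three measurements; with so few images it should be read as a rough indication, not a benchmark.

```python
from statistics import mean

# Per-image detection times in milliseconds, copied from the lists above.
pytorch_ms = {"000001.jpg": 91, "bus.jpg": 90, "zidane.jpg": 93}
tensorrt_ms = {"000001.jpg": 10, "bus.jpg": 15, "zidane.jpg": 12}

# Speedup per image: time before divided by time after.
for name in pytorch_ms:
    ratio = pytorch_ms[name] / tensorrt_ms[name]
    print(f"{name}: {ratio:.1f}x faster")

# Overall speedup based on the mean time of each run.
avg_speedup = mean(pytorch_ms.values()) / mean(tensorrt_ms.values())
print(f"average: {avg_speedup:.1f}x faster")  # average: 7.4x faster
```

On these numbers the TensorRT engine is about 7.4 times faster on average.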

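10. Training

11. Training produces a best.pt model. What happens when this model is deployed with TensorRT in turn?

The process is the same as above, and the results are as follows: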

000001.jpg :10ms

bus.jpg :10ms

zidane.jpg :8ms
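One thing to watch when redeploying with best.pt: as the reference steps in part II note, CLASS_NUM in yololayer.h has to be updated if the model was trained on a custom dataset, and this must happen before running cmake and make. A minimal sketch of that edit follows. It assumes the header contains a line of the form `static constexpr int CLASS_NUM = 80;`, and `set_class_num` is a hypothetical helper, not something shipped with tensorrtx.

```python
import re


def set_class_num(header_text, num_classes):
    """Return header_text with the CLASS_NUM value replaced by num_classes."""
    new_text, replaced = re.subn(
        r"(\bCLASS_NUM\s*=\s*)\d+",   # keep everything up to the number
        rf"\g<1>{num_classes}",       # then substitute the new class count
        header_text,
    )
    if replaced != 1:
        raise ValueError(f"expected exactly one CLASS_NUM definition, found {replaced}")
    return new_text


# Assumed form of the relevant lines in yololayer.h (illustrative excerpt).
sample_header = (
    "static constexpr int MAX_OUTPUT_BBOX_COUNT = 1000;\n"
    "static constexpr int CLASS_NUM = 80;\n"
    "static constexpr int INPUT_H = 640;\n"
)
print(set_class_num(sample_header, 3))
```

In practice the text would be read from and written back to tensorrtx/yolov5/yololayer.h, and the project rebuilt afterwards so the new engine uses the right number of classes.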

12. Finally, about yolov5_trt.py (optional: load and run the TensorRT model in Python)

Make sure the earlier steps have been run, so that tensorrtx/yolov5/build/ contains yolov5s.engine and libmyplugins.so
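A small pre-flight check can save a confusing error here. The sketch below only verifies that the two files named above exist in a build directory. `missing_artifacts` is a name I invented, and the relative path `build` assumes the script is run from tensorrtx/yolov5.

```python
from pathlib import Path

# The two build outputs that the step above says must exist.
REQUIRED = ("yolov5s.engine", "libmyplugins.so")


def missing_artifacts(build_dir):
    """Return the required file names that are not present in build_dir."""
    build = Path(build_dir)
    return [name for name in REQUIRED if not (build / name).is_file()]


# Assumes the current directory is tensorrtx/yolov5.
missing = missing_artifacts("build")
if missing:
    print("Missing in build/:", ", ".join(missing))
else:
    print("Both the engine and the plugin library are present")
```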

Install pycuda (takes a bit over ten minutes)

pip install pycuda

cd ../tensorrtx/yolov5

python yolov5_trt.py

input->['samples/zidane.jpg'], time->12.71ms, saving into output/

input->['samples/bus.jpg'], time->8.90ms, saving into output/

bus.jpg(output/)-->8.9ms

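If more images are run through yolov5_trt.py, reading the times off the console by hand gets tedious. Below is a rough sketch that pulls them out of log lines in the format shown above. The regular expression is my own guess at a pattern that fits those two lines, and `parse_timings` is a hypothetical helper.

```python
import re

# Matches lines such as: input->['samples/bus.jpg'], time->8.90ms, saving into output/
LINE_RE = re.compile(r"input->\['(?P<image>[^']+)'\], time->(?P<ms>[\d.]+)ms")


def parse_timings(log_text):
    """Return {image_path: milliseconds} for every matching line in log_text."""
    return {m["image"]: float(m["ms"]) for m in LINE_RE.finditer(log_text)}


# The two output lines from the run above.
log = """input->['samples/zidane.jpg'], time->12.71ms, saving into output/
input->['samples/bus.jpg'], time->8.90ms, saving into output/"""

timings = parse_timings(log)
for image, ms in timings.items():
    print(f"{image}: {ms} ms")
```

The resulting dictionary can then be compared against the timings of the C++ binary from step 9.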