PaddleDetection简单教程

文章目录

-

- 一、 PaddleDetection简介

- 二、入门教程

-

- 2.1 快速使用

-

- 2.1.1 训练/评估/预测

- 2.1.2 混合精度训练

- 2.1.3 预训练、迁移学习

- 2.2 训练自定义数据集

-

- 2.2.1 准备数据

-

- 2.2.1.1 将数据集转换为VOC格式

- 2.2.1.2 将数据集转换为COCO格式

- 2.2.2 选择模型

- 2.2.3 生成Anchor

- 2.2.4 修改参数配置

- 2.2.5 开始训练与部署

- 2.2.6 自定义数据集训练demo

- 三、进阶使用教程

-

- 3.1 配置模块设计与介绍

- 四、飞桨学习赛:钢铁缺陷检测

-

- 4.1 赛事简介

- 4.2 安装PaddleDetection

- 4.3 数据预处理

-

- 4.3.1 解压数据集

- 4.3.2 自定义数据集(感觉很麻烦,暂时放弃)

- 4.3.3 准备VOC数据集(直接用这个)

- 4.4 修改配置文件,准备训练

-

- 4.4.1 使用pp-yoloe+

- 4.4.2 推理测试集

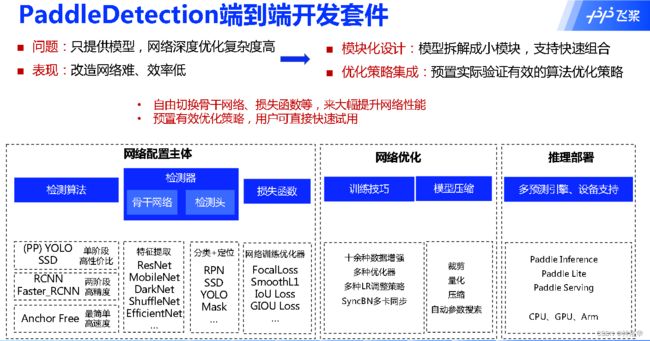

一、 PaddleDetection简介

github地址《PaddleDetection》、官方文档

- 模块化设计

Backbones:骨干网络,大多时候指的是提取特征的网络,其作用就是提取图片中的信息,供后面的网络使用。这些网络经常使用的是resnet、VGG等。在用这些网络作为backbone的时候,都是直接加载官方已经训练好的模型参数来训练,然后进行微调。

AnchorHead:主要是Anchor两阶段算法中RPN模块

RoIExtractor:Anchor两阶段算法提取RoI候选区特征

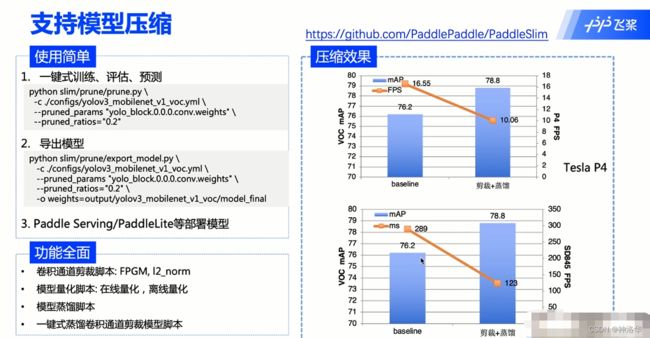

Slim:模型压缩

实际使用中不需要对模型改动太大时,维护好YAML Config文件就行。 - 丰富的模型库

CBResNet为Cascade-Faster-RCNN-CBResNet200vd-FPN模型,COCO数据集mAP高达53.3%。精度最高,可以打比赛Cascade-Faster-RCNN为Cascade-Faster-RCNN-ResNet50vd-DCN,PaddleDetection将其优化到COCO数据mAP为47.8%时推理速度为20FPSPP-YOLO:在YOLOv3上改进的模型,精度速度均优于YOLOv4,性价比高。在COCO数据集精度45.9%,Tesla V100预测速度72.9FPS。PP-YOLO v2是对PP-YOLO模型的进一步优化,在COCO数据集精度49.5%,Tesla V100预测速度68.9FPSPP-YOLOE是对PP-YOLO v2模型的进一步优化,在COCO数据集精度51.6%,Tesla V100预测速度78.1FPS- YOLOX和YOLOv5均为基于PaddleDetection复现算法

- TTFNet:AnchorFree模型,速度很快

Cascade-Faster-RCNN:在两阶段RCNN基础上改进,比一些量阶段模型速度精度更好- 图中模型均可在模型库中获取

- 端到端

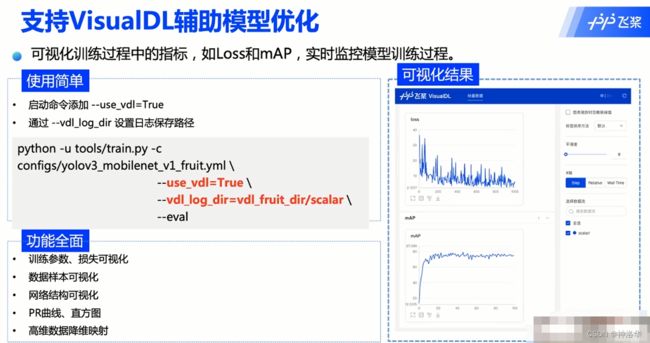

添加两个参数就可以进行可视化辅助:

下图展示的是对yolov3_moblienet_v1模型上进行模型压缩(裁剪和蒸馏)后,在Tesla P4 GPU和高通845芯片上运行的效果对比图。可以看到精度和速度都有提升(预测速度289ms降到123ms)

- 模型部署

使用paddle-serving-vlient可以进行在线服务部署

二、入门教程

参考PaddleDetection《入门使用教程》、github地址《PaddleDetection》

PaddleDetection安装:

- 安装参考github上的安装文档方式,PaddleDetection《入门使用教程》里面的安装方式是以前的版本,安装最新版会有问题。

- 环境要求PaddlePaddle 2.2以上

# 克隆PaddleDetection仓库

cd <path>#切换到自己要安装PaddleDetection的安装目录

git clone https://github.com/PaddlePaddle/PaddleDetection.git

#如果github下载代码较慢,可尝试使用gitee

#git clone https://gitee.com/paddlepaddle/PaddleDetection

# 安装其他依赖

cd PaddleDetection

pip install -r requirements.txt

# 编译安装paddledet

python setup.py install

安装后确认测试通过:

python ppdet/modeling/tests/test_architectures.py

测试通过后会提示如下信息:

.......

----------------------------------------------------------------------

Ran 7 tests in 12.816s

OK

常见报错:

- 无法

import pycocotools或者无法import ppdet可能是没有编译安装paddledet,运行python setup.py install。 - AI studio下安装后切换CPU/GPU环境时报错,需要重新运行

python setup.py install。

2.1 快速使用

2.1.1 训练/评估/预测

PaddleDetection提供了训练/评估/预测,支持通过不同可选参数实现特定功能

数据集

数据集为Kaggle数据集 ,包含877张图像,数据类别4类:crosswalk,speedlimit,stop,trafficlight。将数据划分为训练集701张图和测试集176张图,点此下载。

# 设置PYTHONPATH路径

export PYTHONPATH=$PYTHONPATH:.

# GPU训练 支持单卡,多卡训练,通过CUDA_VISIBLE_DEVICES指定卡号

export CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7

训练:

python tools/train.py -c configs/yolov3_mobilenet_v1_roadsign.yml --eval -o use_gpu=true

如果想通过VisualDL实时观察loss变化曲线,在训练命令中添加–use_vdl=true,以及通过–vdl_log_dir设置日志保存路径。首先安装VisualDL:

python -m pip install visualdl -i https://mirror.baidu.com/pypi/simple

python -u tools/train.py -c configs/yolov3_mobilenet_v1_roadsign.yml \

--use_vdl=true \

--vdl_log_dir=vdl_dir/scalar \

--eval

#通过visualdl命令实时查看变化曲线:

visualdl --logdir vdl_dir/scalar/ --host <host_IP> --port <port_num>

评估:

# GPU评估,默认使用训练过程中保存的best_model

export CUDA_VISIBLE_DEVICES=0

python tools/eval.py -c configs/yolov3_mobilenet_v1_roadsign.yml -o use_gpu=true

除此之外,也可以使用指定的模型来评估或者预测。

- 评估时指定模型权重,例如:

python -u tools/eval.py -c configs/faster_rcnn_r50_1x.yml \

-o weights=https://paddlemodels.bj.bcebos.com/object_detection/faster_rcnn_r50_1x.tar \

- 指定本地模型评估,例如

-o weights=output/faster_rcnn_r50_1x/best_model。 - 通过json文件评估。json文件必须命名为bbox.json或者mask.json,放在

evaluation/目录下

python -u tools/eval.py -c configs/faster_rcnn_r50_1x.yml \

--json_eval \

--output_eval evaluation/

预测:

# 预测结束后会在output文件夹中生成一张画有预测结果的同名图像

python tools/infer.py -c configs/yolov3_mobilenet_v1_roadsign.yml -o use_gpu=true --infer_img=demo/road554.png

CUDA_VISIBLE_DEVICES可指定计算的GPU,计算规则参考FAQ-c参数表示指定使用哪个配置文件-o参数表示指定配置文件中的全局变量(覆盖配置文件中的设置),这里设置使用gpu--eval参数表示边训练边评估,会自动将一个最佳mAP模型(名为best_model.pdmodel)保存到best_model文件夹下。- 模型checkpoints默认保存在output中,可通过修改配置文件中save_dir进行配置

- 若本地未找到数据集,将自动下载数据集并保存在~/.cache/paddle/dataset中

可选参数列表(可以通过--help查看):

预测结果如下图:

也可以设置其它参数,比如输出路径 && 设置预测阈值:

python -u tools/infer.py -c configs/faster_rcnn_r50_1x.yml \

--infer_img=demo/000000570688.jpg \

--output_dir=infer_output/ \

--draw_threshold=0.5 \

-o weights=output/faster_rcnn_r50_1x/model_final \

--draw_threshold可视化时分数的阈值,默认大于0.5的box会显示出来。- 此预测过程依赖PaddleDetection源码,如果您想使用C++进行服务器端预测、或在移动端预测、或使用PaddleServing部署、或独立于PaddleDetection源码使用Python预测可以参考模型导出教程和推理部署。

2.1.2 混合精度训练

通过设置 --fp16 命令行选项可以启用混合精度训练。目前混合精度训练已经在Faster-FPN, Mask-FPN 及 Yolov3 上进行验证,几乎没有精度损失(小于0.2 mAP)。建议使用多进程方式来进一步加速混合精度训练。示例如下:

python -m paddle.distributed.launch --selected_gpus 0,1,2,3,4,5,6,7 tools/train.py --fp16 -c configs/faster_rcnn_r50_fpn_1x.yml

细节请参看混合精度训练相关的Nvidia文档。

2.1.3 预训练、迁移学习

参考《迁移学习教程》

迁移学习需要使用自己的数据集,准备完成后,在配置文件中配置数据路径,对应修改reader中的路径参数即可:

- COCO数据集需要修改COCODataSet中的参数,以yolov3_darknet.yml为例,修改

yolov3_reader中的配置:

dataset:

!COCODataSet

dataset_dir: custom_data/coco # 自定义数据目录

image_dir: train2017 # 自定义训练集目录,该目录在dataset_dir中

anno_path: annotations/instances_train2017.json # 自定义数据标注路径,该目录在dataset_dir中

with_background: false

- VOC数据集需要修改VOCDataSet中的参数,以yolov3_darknet_voc.yml为例:

dataset:

!VOCDataSet

dataset_dir: custom_data/voc # 自定义数据集目录

anno_path: trainval.txt # 自定义数据标注路径,该目录在dataset_dir中

use_default_label: true

with_background: false

加载预训练模型

在迁移学习中,对预训练模型进行选择性加载(比如与类别数相关的权重),支持如下两种迁移学习方式:

- . 直接加载预训练权重(推荐方式)

模型中和预训练模型中对应参数形状不同的参数将自动被忽略,例如:

export CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7

python -u tools/train.py -c configs/faster_rcnn_r50_1x.yml \

-o pretrain_weights=https://paddlemodels.bj.bcebos.com/object_detection/faster_rcnn_r50_1x.tar

- 使用

finetune_exclude_pretrained_params参数控制忽略参数名- 在 YMAL 配置文件中通过设置

finetune_exclude_pretrained_params字段。可参考配置文件 - 在 train.py的启动参数中设置

finetune_exclude_pretrained_params。例如:

- 在 YMAL 配置文件中通过设置

export CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7

python -u tools/train.py -c configs/faster_rcnn_r50_1x.yml \

-o pretrain_weights=https://paddlemodels.bj.bcebos.com/object_detection/faster_rcnn_r50_1x.tar \

finetune_exclude_pretrained_params=['cls_score','bbox_pred'] \

pretrain_weights的路径为COCO数据集上开源的faster RCNN模型链接,完整模型链接可参考模型库和基线- 预训练模型自动下载并保存在〜/.cache/paddle/weights中

2.2 训练自定义数据集

2.2.1 准备数据

如果数据符合COCO或VOC数据集格式,可以直接进入下一步,否则需要将数据集转换至COCO格式或VOC格式。

2.2.1.1 将数据集转换为VOC格式

- VOC数据格式的目标检测数据,是指每个图像文件对应一个同名的xml文件,xml文件中标记物体框的坐标和类别等信息。

- Pascal VOC比赛对目标检测任务,对目标物体是否遮挡、是否被截断、是否是难检测物体进行了标注。对于用户自定义数据可根据实际情况对这些字段进行标注。

- VOC数据集所必须的文件内容如下所示,数据集根目录需有VOCdevkit/VOC2007或VOCdevkit/VOC2012文件夹,该文件夹中需有Annotations,JPEGImages和ImageSets/Main三个子目录,Annotations存放图片标注的xml文件,JPEGImages存放数据集图片,ImageSets/Main存放训练trainval.txt和测试test.txt列表。

VOCdevkit

├──VOC2007(或VOC2012)

│ ├── Annotations

│ ├── xxx.xml

│ ├── JPEGImages

│ ├── xxx.jpg

│ ├── ImageSets

│ ├── Main

│ ├── trainval.txt

│ ├── test.txt

最终VOC数据集文件组织格式为:

VOCdevkit/VOC2007

├── annotations

│ ├── road0.xml

│ ├── road1.xml

│ ├── road10.xml

│ | ...

├── images

│ ├── road0.jpg

│ ├── road1.jpg

│ ├── road2.jpg

│ | ...

├── label_list.txt

├── trainval.txt

└── test.txt

- 由于该数据集中缺少已标注图片名列表文件trainval.txt和test.txt,所以需要进行生成,利用如下python脚本,在数据集根目录下执行,便可生成trainval.txt和test.txt文件:(里面就是jpg和xml文件的id,所以最终结果是一串数字)

import os

file_train = open('trainval.txt', 'w')

file_test = open('test.txt', 'w')

for xml_name in os.listdir('train/annotations/xmls'):

file_train.write(xml_name[:-4] + '\n')

for xml_name in os.listdir('val/annotations/xmls'):

file_test.write(xml_name[:-4] + '\n')

file_train.close()

file_test.close()

生成后将其放在ImageSets/Main文件夹

- 执行以下脚本,将根据ImageSets/Main目录下的trainval.txt和test.txt文件在数据集根目录生成最终的

trainval.txt和test.txt列表文件:(除了这种写法,也可以用pandas处理得到trainval.txt和test.txt列表文件,在本文第四章会介绍)

python dataset/voc/create_list.py -d path/to/dataset

#此代码有问题,需要自己重写

- -d或–dataset_dir:VOC格式数据集所在文件夹路径

重写代码如下:

import logging

import sys

import os

import re

import os.path as osp

import random

def create_list(devkit_dir, year, output_dir):

"""

create following list:

1. trainval.txt

2. test.txt

"""

trainval_list = []

test_list = []

trainval, test = _walk_voc_dir(devkit_dir, year, output_dir)

trainval_list.extend(trainval)

test_list.extend(test)

random.shuffle(trainval_list)

with open(osp.join(output_dir, 'trainval.txt'), 'w') as ftrainval:

for item in trainval_list:

ftrainval.write(item[0] + ' ' + item[1] + '\n')

with open(osp.join(output_dir, 'test.txt'), 'w') as fval:

ct = 0

for item in test_list:

ct += 1

fval.write(item[0] + ' ' + item[1] + '\n')

def _get_voc_dir(devkit_dir, year, type):

return osp.join(devkit_dir, 'VOC' + year, type)

def _walk_voc_dir(devkit_dir, year, output_dir):

filelist_dir = _get_voc_dir(devkit_dir, year, 'ImageSets/Main')

annotation_dir = _get_voc_dir(devkit_dir, year, 'Annotations')

img_dir = _get_voc_dir(devkit_dir, year, 'JPEGImages')

print( filelist_dir,annotation_dir,img_dir)

trainval_list = []

test_list = []

added = set()

fnames=['trainval.txt','test.txt']

for fname in fnames:

img_ann_list=[]

fpath = osp.join(filelist_dir, fname)

for line in open(fpath):

name_prefix = line.strip().split()[0]

if name_prefix in added:

continue

added.add(name_prefix)

ann_path = osp.join(

osp.relpath(annotation_dir, output_dir),

name_prefix + '.xml')

img_path = osp.join(

osp.relpath(img_dir, output_dir), name_prefix + '.jpg')

img_ann_list.append((img_path, ann_path))

if fname=='trainval.txt':

trainval_list = img_ann_list

elif fname=='test.txt':

test_list=img_ann_list

return trainval_list, test_list

作者的VOCdevkit数据集在/home/aistudio/work文件夹下。切换路径为/home/aistudio/work,运行代码:

devkit_dir='./VOCdevkit'

output_dir='./'

year='2007'

create_list(devkit_dir, year, output_dir)

下面以路标数据集为例(参考《目标检测7日打卡营作业一:PaddleDetection快速上手》),划分成训练集和测试集,总共877张图,其中训练集701张图、测试集176张图。

- 本项目以路标数据集roadsign为例,详细说明了如何使用PaddleDetection训练一个目标检测模型,并对模型进行评估和预测。路标数据集roadsign提供了Pascal VOC格式和COCO格式两种格式。

- 划分好的数据下载地址为 roadsign_voc.tar, AiStudio上数据地址为roadsign_voc。

- 划分好的数据下载地址为roadsign_coco.tar,AiStudio上数据地址为roadsign_coco

一共4个类别:

speedlimit 限速牌

crosswalk 人行道

trafficlight 交通灯

stop 指示牌

- 所以label_list.txt内容为

speedlimit

crosswalk

trafficlight

stop

- 最终生成的valid.txt和train.txt格式为:(只是举例,每一行都是图片名+xml文件名,中间是空格隔开)

cat trainval.txt

VOCdevkit/VOC2007/JPEGImages/007276.jpg VOCdevkit/VOC2007/Annotations/007276.xml

VOCdevkit/VOC2012/JPEGImages/2011_002612.jpg VOCdevkit/VOC2012/Annotations/2011_002612.xml

-

xml文件中包含以下字段:

-

filename,表示图像名称。

road650.png -

object字段,表示每个物体。包括

name: 目标物体类别名称

pose: 关于目标物体姿态描述(非必须字段)

truncated: 目标物体目标因为各种原因被截断(非必须字段)

occluded: 目标物体是否被遮挡(非必须字段)

difficult: 目标物体是否是很难识别(非必须字段)

bndbox: 物体位置坐标,用左上角坐标和右下角坐标表示:xmin、ymin、xmax、ymax -

size,表示图像尺寸。包括:图像宽度、图像高度、图像深度

-

# 查看一条数据

! cat ./annotations/road650.xml

<annotation>

<folder>images</folder>

<filename>road650.png</filename>

<size>

<width>300</width>

<height>400</height>

<depth>3</depth>

</size>

<segmented>0</segmented>

<object>

<name>speedlimit</name>

<pose>Unspecified</pose>

<truncated>0</truncated>

<occluded>0</occluded>

<difficult>0</difficult>

<bndbox>

<xmin>126</xmin>

<ymin>110</ymin>

<xmax>162</xmax>

<ymax>147</ymax>

</bndbox>

</object>

</annotation>

2.2.1.2 将数据集转换为COCO格式

COCO数据格式,是指将所有训练图像的标注都存放到一个json文件中。数据以字典嵌套的形式存放,其格式如下:

annotations/

├── train.json

└── valid.json

images/

├── road0.png

├── road100.png

json文件中存放了 info licenses images annotations categories的信息:

-

info: 存放标注文件标注时间、版本等信息。 -

licenses: 存放数据许可信息。 -

images: 存放一个list,存放所有图像的图像名,下载地址,图像宽度,图像高度,图像在数据集中的id等信息。 -

annotations: 存放一个list,存放所有图像的所有物体区域的标注信息,每个目标物体标注以下信息:{ 'area': 899, 'iscrowd': 0, 'image_id': 839, 'bbox': [114, 126, 31, 29], 'category_id': 0, 'id': 1, 'ignore': 0, 'segmentation': [] }

# 查看一条数据

import json

coco_anno = json.load(open('./annotations/train.json'))

# coco_anno.keys

print('\nkeys:', coco_anno.keys())

# 查看类别信息

print('\n物体类别:', coco_anno['categories'])

# 查看一共多少张图

print('\n图像数量:', len(coco_anno['images']))

# 查看一共多少个目标物体

print('\n标注物体数量:', len(coco_anno['annotations']))

# 查看一条目标物体标注信息

print('\n查看一条目标物体标注信息:', coco_anno['annotations'][0])

keys: dict_keys(['images', 'type', 'annotations', 'categories'])

物体类别: [{'supercategory': 'none', 'id': 1, 'name': 'speedlimit'}, {'supercategory': 'none', 'id': 2, 'name': 'crosswalk'}, {'supercategory': 'none', 'id': 3, 'name': 'trafficlight'}, {'supercategory': 'none', 'id': 4, 'name': 'stop'}]

图像数量: 701

标注物体数量: 991

查看一条目标物体标注信息: {'area': 899, 'iscrowd': 0, 'bbox': [114, 126, 31, 29], 'category_id': 1, 'ignore': 0, 'segmentation': [], 'image_id': 0, 'id': 1}

在./tools/中提供了x2coco.py用于将voc格式数据集、labelme标注的数据集或cityscape数据集转换为COCO数据集,例如:

(1)labelmes数据转换为COCO格式:

python tools/x2coco.py \

--dataset_type labelme \

--json_input_dir ./labelme_annos/ \

--image_input_dir ./labelme_imgs/ \

--output_dir ./cocome/ \

--train_proportion 0.8 \

--val_proportion 0.2 \

--test_proportion 0.0

--dataset_type:需要转换的数据格式,目前支持:’voc‘、’labelme‘和’cityscape‘--json_input_dir:使用labelme标注的json文件所在文件夹--image_input_dir:图像文件所在文件夹--output_dir:转换后的COCO格式数据集存放位置--train_proportion:标注数据中用于train的比例--val_proportion:标注数据中用于validation的比例--test_proportion:标注数据中用于infer的比例

(2)voc数据转换为COCO格式:

python tools/x2coco.py \

--dataset_type voc \

--voc_anno_dir path/to/VOCdevkit/VOC2007/Annotations/ \

--voc_anno_list path/to/VOCdevkit/VOC2007/ImageSets/Main/trainval.txt \

--voc_label_list dataset/voc/label_list.txt \

--voc_out_name voc_train.json

参数说明:

--dataset_type:需要转换的数据格式,当前数据集是voc格式时,指定’voc‘即可。--voc_anno_dir:VOC数据转换为COCO数据集时的voc数据集标注文件路径。 例如:

├──Annotations/

├── 009881.xml

├── 009882.xml

├── 009886.xml

...

--voc_anno_list:VOC数据转换为COCO数据集时的标注列表文件,文件中是文件名前缀列表,一般是ImageSets/Main下trainval.txt和test.txt文件。 例如:trainval.txt里的内容如下:

009881

009882

009886

...

- -

-voc_label_list:VOC数据转换为COCO数据集时的类别列表文件,文件中每一行表示一种物体类别。 例如:label_list.txt里的内容如下:

background

aeroplane

bicycle

...

--voc_out_name:VOC数据转换为COCO数据集时的输出的COCO数据集格式json文件名。

2.2.2 选择模型

PaddleDetection中提供了丰富的模型库,具体可在模型库和基线中查看各个模型的指标,您可依据实际部署算力的情况,选择合适的模型:

- 算力资源小时,推荐您使用移动端模型,PaddleDetection中的移动端模型经过迭代优化,具有较高性价比。

- 算力资源强大时,推荐您使用服务器端模型,该模型是PaddleDetection提出的面向服务器端实用的目标检测方案。

同时也可以根据使用场景不同选择合适的模型: - 当小物体检测时,推荐您使用两阶段检测模型,比如Faster RCNN系列模型,具体可在模型库和基线中找到。

- 当在交通领域使用,如行人,车辆检测时,推荐您使用特色垂类检测模型。

- 当在竞赛中使用,推荐您使用竞赛冠军模型CACascadeRCNN与OIDV5_BASELINE_MODEL。

- 当在人脸检测中使用,推荐您使用人脸检测模型。

同时也可以尝试PaddleDetection中开发的YOLOv3增强模型、YOLOv4模型与Anchor Free模型等。

2.2.3 生成Anchor

在yolo系列模型中,可以运行tools/anchor_cluster.py来得到适用于你的数据集Anchor,使用方法如下:

python tools/anchor_cluster.py -c configs/ppyolo/ppyolo.yml -n 9 -s 608 -m v2 -i 1000

目前tools/anchor_cluster.py支持的主要参数配置如下表所示:

2.2.4 修改参数配置

选择好模型后,需要在configs目录中找到对应的配置文件进行修改:

- 数据路径配置: 在yaml配置文件中,依据1.数据准备中准备好的路径,配置TrainReader、EvalReader和TestReader的路径。

- COCO数据集:

dataset:

!COCODataSet

image_dir: val2017 # 图像数据基于数据集根目录的相对路径

anno_path: annotations/instances_val2017.json # 标注文件基于数据集根目录的相对路径

dataset_dir: dataset/coco # 数据集根目录

with_background: true # 背景是否作为一类标签,默认为true。

- VOC数据集:

dataset:

!VOCDataSet

anno_path: trainval.txt # 训练集列表文件基于数据集根目录的相对路径

dataset_dir: dataset/voc # 数据集根目录

use_default_label: true # 是否使用默认标签,默认为true。

with_background: true # 背景是否作为一类标签,默认为true。

说明: 如果您使用自己的数据集进行训练,需要将use_default_label设为false,并在数据集根目录中修改label_list.txt文件,添加自己的类别名,其中行号对应类别号。

- 类别数修改: 如果您自己的数据集类别数和COCO/VOC的类别数不同, 需修改yaml配置文件中类别数,

num_classes: XX。 注意:如果dataset中设置with_background: true,那么num_classes数必须是真实类别数+1(背景也算作1类) - 根据需要修改LearningRate相关参数:

- 如果GPU卡数变化,依据lr,batch-size关系调整lr: 学习率调整策略

- 自己数据总数样本数和COCO不同,依据batch_size, 总共的样本数,换算总迭代次数

max_iters,以及LearningRate中的milestones(学习率变化界限)。

- 预训练模型配置:通过在yaml配置文件中的

pretrain_weights: path/to/weights参数可以配置路径,可以是链接或权重文件路径。可直接沿用配置文件中给出的在ImageNet数据集上的预训练模型。同时我们支持训练在COCO或Obj365数据集上的模型权重作为预训练模型,做迁移学习。

2.2.5 开始训练与部署

- 参数配置完成后,就可以开始训练模型了,具体可参考本文1.1.1 训练/评估/预测章节。

- 训练测试完成后,根据需要可以进行模型部署:首先需要导出可预测的模型,可参考导出模型教程;导出模型后就可以进行C++预测部署或者python端预测部署。

2.2.6 自定义数据集训练demo

以AI识虫数据集(下载链接)为例,对自定义数据集上训练过程进行演示,该数据集提供了2183张图片,其中训练集1693张,验证集与测试集分别有245张,共包含7种昆虫。训练具体过程/代码参考《一个自定义数据集demo》

其中python dataset/voc/create_list.py -d path/to/dataset这一步有问题,可参考本章节《2.2.1.2 将数据集转换为VOC格式》。

三、进阶使用教程

3.1 配置模块设计与介绍

四、飞桨学习赛:钢铁缺陷检测

4.1 赛事简介

- 比赛地址:https://aistudio.baidu.com/aistudio/competition/detail/114/0/introduction

- 赛题介绍:本次比赛为图像目标识别比赛,要求参赛选手识别出钢铁表面出现缺陷的位置,并给出锚点框的坐标,同时对不同的缺陷进行分类。

- 数据简介:本数据集来自NEU表面缺陷检测数据集,收集了6种典型的热轧带钢表面缺陷,即氧化铁皮压入(RS)、斑块(Pa)、开裂(Cr)、点蚀(PS)、夹杂(In)和划痕(Sc)。下图为六种典型表面缺陷的示例,每幅图像的分辨率为200 * 200像素。

- 训练集图片1400张,测试集图片400张

提交内容及格式:

- 结果文件命名:submission.csv(否则无法成功提交)

- 结果文件格式:.csv(否则无法成功提交)

- 结果文件内容:submission.csv结果文件需包含多行记录,每行包括4个字段,内容示例如下:

image_id bbox category_id confidence

1400 [0, 0, 0, 0] 0 1

各字段含义如下:

- image_id(int): 图片id

- bbox(list[float]): 检测框坐标(XMin, YMin, XMax, YMax)

- category_id: 缺陷所属类别(int),类别对应字典为:{‘ crazing’:0,’inclusion’:1, ’pitted_surface’:2, ,’scratches’:3,’patches’:4,’rolled-in_scale’:5}

- confidence(float): 置信度

备注: 每一行记录1个检测框,并给出对应的category_id;同张图片中检测到的多个检测框,需分别记录在不同的行内。

4.2 安装PaddleDetection

- 克隆PaddleDetection

- 安装PaddleDetection文件夹的requirements.txt(python依赖)

- 编译安装paddledet

- 安装后确认测试通过

%cd ~/work

#!git clone https://github.com/PaddlePaddle/PaddleDetection.git

#如果github下载代码较慢,可尝试使用gitee

#git clone https://gitee.com/paddlepaddle/PaddleDetection

# 安装其他依赖

%cd PaddleDetection

!pip install -r requirements.txt

# 编译安装paddledet

!python setup.py install

#安装后确认测试通过:

!python ppdet/modeling/tests/test_architectures.py

!python ppdet/modeling/tests/test_architectures.py

W1001 15:08:57.768669 1185 gpu_resources.cc:61] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.2, Runtime API Version: 11.2

W1001 15:08:57.773610 1185 gpu_resources.cc:91] device: 0, cuDNN Version: 8.2.

.......

----------------------------------------------------------------------

Ran 7 tests in 2.142s

OK

4.3 数据预处理

4.3.1 解压数据集

# 解压到work下的dataset文件夹

!mkdir dataset

!unzip ../data/test.zip -d dataset

!unzip ../data/train.zip -d dataset

# 重命名为annotations和images

!mv dataset/train/IMAGES dataset/train/images

!mv dataset/train/ANNOTATIONS dataset/train/annotations

4.3.2 自定义数据集(感觉很麻烦,暂时放弃)

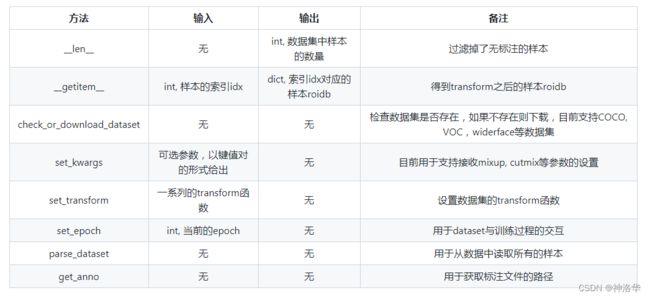

PaddleDetection的数据处理模块的所有代码逻辑在ppdet/data/中,数据处理模块用于加载数据并将其转换成适用于物体检测模型的训练、评估、推理所需要的格式。- 数据集定义在

source目录下,其中dataset.py中定义了数据集的基类DetDataSet, 所有的数据集均继承于基类,DetDataset基类里定义了如下等方法:

- 当一个数据集类继承自DetDataSet,那么它只需要实现parse_dataset函数即可

parse_dataset根据数据集设置:

- 数据集根路径

dataset_dir - 图片文件夹

image_dir - 标注文件路径

anno_path

取出所有的样本,并将其保存在一个列表roidbs中,每一个列表中的元素为一个样本xxx_rec(比如coco_rec或者voc_rec),用dict表示,dict中包含样本的image, gt_bbox, gt_class等字段。COCO和Pascal-VOC数据集中的xxx_rec的数据结构定义如下:

xxx_rec = {

'im_file': im_fname, # 一张图像的完整路径

'im_id': np.array([img_id]), # 一张图像的ID序号

'h': im_h, # 图像高度

'w': im_w, # 图像宽度

'is_crowd': is_crowd, # 是否是群落对象, 默认为0 (VOC中无此字段)

'gt_class': gt_class, # 标注框标签名称的ID序号

'gt_bbox': gt_bbox, # 标注框坐标(xmin, ymin, xmax, ymax)

'gt_poly': gt_poly, # 分割掩码,此字段只在coco_rec中出现,默认为None

'difficult': difficult # 是否是困难样本,此字段只在voc_rec中出现,默认为0

}

-

xxx_rec中的内容也可以通过DetDataSet的data_fields参数来控制,即可以过滤掉一些不需要的字段,但大多数情况下不需要修改,按照configs/dataset中的默认配置即可。

-

此外,在parse_dataset函数中,保存了类别名到id的映射的一个字典cname2cid。在coco数据集中,会利用COCO API从标注文件中加载数据集的类别名,并设置此字典。在voc数据集中,如果设置use_default_label=False,将从label_list.txt中读取类别列表,反之将使用voc默认的类别列表。

4.3.3 准备VOC数据集(直接用这个)

参考:《如何准备训练数据》

-

尝试coco数据集

- COCO数据标注是将所有训练图像的标注都存放到一个json文件中。数据以字典嵌套的形式存放

- 用户数据集转成COCO数据后目录结构如下:

dataset/xxx/ ├── annotations │ ├── train.json # coco数据的标注文件 │ ├── valid.json # coco数据的标注文件 ├── images │ ├── xxx1.jpg │ ├── xxx2.jpg │ ├── xxx3.jpg │ | ... ...- json格式标注文件,得自己生成,目前我知道的就是用

paddledetection./tools/中提供的x2coco.py,将VOC数据集、labelme标注的数据集或cityscape数据集转换为COCO数据(生成json标准为文件)。这样太麻烦,还不如直接用VOC格式训练。

-

尝试自定义数据集(参考《数据处理模块》,重写

parse_dataset感觉也很麻烦) -

准备voc数据集(最简单,麻烦一点的就是生成txt文件)

-

模仿VOC数据集目录结构,新建

VOCdevkit文件夹并进入其中,然后继续新建VOC2007文件夹并进入其中,之后新建Annotations、JPEGImages和ImageSets文件夹,最后进入ImageSets文件夹中新建Main文件夹,至此完成VOC数据集目录结构的建立。 -

将该数据集中的

train/annotations/xmls与val/annotations/xmls(如果有val验证集的话)下的所有xml标注文件拷贝到VOCdevkit/VOC2007/Annotations中, -

将该数据集中的

train/images/与val/images/下的所有图片拷贝到VOCdevkit/VOC2007/JPEGImages中 -

最后在数据集根目录下输出最终的trainval.txt和test.txt文件(可用pandas完成,一会说):

-

-

生成VOC格式目录。

如果觉得后面移动文件很麻烦,可以先生成VOC目录,再将数据集解压到VOC2007中,将其图片和标注文件夹分别重命名为Annotations和JPEGImages。

%cd work

!mkdir VOCdevkit

%cd VOCdevkit

!mkdir VOC2007

%cd VOC2007

!mkdir Annotations JPEGImages ImageSets

%cd ImageSets

!mkdir Main

%cd ../../

- 生成

trainval.txt和val.txt

由于该数据集中缺少已标注图片名列表文件trainval.txt和val.txt,所以需要进行生成,用pandas处理更直观

# 遍历图片和标注文件夹,将所有文件后缀正确的文件添加到列表中

import os

import pandas as pd

ls_xml,ls_image=[],[]

for xml in os.listdir('dataset/train/annotations'):

if xml.split('.')[1]=='xml':

ls_xml.append(xml)

for image in os.listdir('dataset/train/images'):

if image.split('.')[1]=='jpg':

ls_image.append(image)

读取xml文件列表和image文件名列表之后,要先进行排序。

- 直接df.sort_values([‘image’,‘xml’],inplace=True)是先对image排序,再对xml排序,是整个表排序而不是单列分别排序,这样的结果是不对的

- 直接分别排序再合并,结果也不对。因为添加xml列的时候,默认是按key=index来合并的,而两个列表的文件都是乱序的,这样排序后index也不相同,所以需要分别排序后重设索引,且丢弃原先索引,最后再合并。

df=pd.DataFrame(ls_image,columns=['image'])

df.sort_values('image',inplace=True)

df=df.reset_index(drop=True)

s=pd.Series(ls_xml).sort_values().reset_index(drop=True)

df['xml']=s

df.head(3)

image xml

0 0.jpg 0.xml

1 1.jpg 1.xml

2 10.jpg 10.xml

训练时,文件都是相对路径,所以要加前缀VOC2007/JPEGImages/和VOC2007/Annotations/

%cd VOCdevkit

voc=df.sample(frac=1)

voc.image=voc.image.apply(lambda x : 'VOC2007/JPEGImages/'+str(x))

voc.xml=voc.xml.apply(lambda x : 'VOC2007/Annotations/'+str(x))

voc.to_csv('trainval.txt',sep=' ',index=0,header=0)

# 划分出训练集和验证集,保存为txt格式,中间用空格隔开

train_df=trainval[:1200]

val_df=trainval[1200:]

train_df.to_csv('train.txt',sep=' ',index=0,header=0)

val_df.to_csv('val.txt',sep=' ',index=0,header=0)

!cp -r train/annotations/* ../VOCdevkit/VOC2007/Annotations

!cp -r train/images/* ../VOCdevkit/VOC2007/JPEGImages

查看一张图片信息:

from PIL import Image

image = Image.open('dataset/train/images/0.jpg')

print('width: ', image.width)

print('height: ', image.height)

print('size: ', image.size)

print('mode: ', image.mode)

print('format: ', image.format)

print('category: ', image.category)

print('readonly: ', image.readonly)

print('info: ', image.info)

image.show()

4.4 修改配置文件,准备训练

4.4.1 使用pp-yoloe+

- 模型效果请参考ppyolo文档

- 配置参数参考yolo配置参数说明、《如何更好的理解reader和自定义修改reader文件》

- PaddleYOLO库集成了yolo系列模型,包括yolov5/6/7/X,后续可以试试。

- 修改配置文件

- 手动复制一个

configs/ppyoloe/ppyoloe_plus_crn_m_80e_coco.yml文件的副本,其它类似,防止改错了无法还原 configs/datasets/voc.yml不用复制,免得下次重新写,修改后如下:(TestDataset最好不要写dataset_dir字段,否则后面infer.py推断的时候,选择参数save_results=True会报错label_list label_list.txt not a file)metric: VOC map_type: 11point num_classes: 6 TrainDataset: !VOCDataSet dataset_dir: ../VOCdevkit anno_path: train.txt label_list: label_list.txt data_fields: ['image', 'gt_bbox', 'gt_class', 'difficult'] EvalDataset: !VOCDataSet dataset_dir: ../VOCdevkit anno_path: val.txt label_list: label_list.txt data_fields: ['image', 'gt_bbox', 'gt_class', 'difficult'] TestDataset: !ImageFolder anno_path: ../VOCdevkit/label_list.txt- 学习率改为0.00025,epoch=60,max_epochs=72,!LinearWarmup下的epoch改为4。

- reader部分训练验证集的batch_size从8改为16,推理时bs也改为16,大大加快推理速度(之前默认为1,特别慢)

- PP-YOLOE+支持混合精度训练,请添加

--amp. - 使用YOLOv3模型如何通过yml文件修改输入图片尺寸

Multi-Scale Training:多尺度训练 。yolov3中作者认为网络输入尺寸固定的话,模型鲁棒性受限,所以考虑多尺度训练。具体的,在训练过程中每隔10个batches,重新随机选择输入图片的尺寸[320,352,416…608](Darknet-19最终将图片缩放32倍,所以一般选择32的倍数)。- 模型预测部署需要用到指定的尺寸时,首先在训练前需要修改

configs/_base_/yolov3_reader.yml中的TrainReader的BatchRandomResize中target_size包含指定的尺寸,训练完成后,在评估或者预测时,需要将EvalReader和TestReader中的Resize的target_size修改成对应的尺寸,如果是需要模型导出(export_model),则需要将TestReader中的image_shape修改为对应的图片输入尺寸 。 - 如果只改了训练集入网尺寸,验证测试集尺寸不变,会报错

- Paddle中支持的优化器Optimizer在PaddleDetection中均支持,需要手动修改下配置文件即可

- 手动复制一个

ppyoloe_plus_reader.yml修改如下:(图片都很小,把默认的入网尺寸改了)

worker_num: 4

eval_height: &eval_height 224

eval_width: &eval_width 224

eval_size: &eval_size [*eval_height, *eval_width]

TrainReader:

sample_transforms:

- Decode: {}

- RandomDistort: {}

- RandomExpand: {fill_value: [123.675, 116.28, 103.53]}

- RandomCrop: {}

- RandomFlip: {}

batch_transforms:

- BatchRandomResize: {target_size: [96, 128, 160, 192, 224, 256, 288,320,352], random_size: True, random_interp: True, keep_ratio: False}

- NormalizeImage: {mean: [0., 0., 0.], std: [1., 1., 1.], norm_type: none}

- Permute: {}

- PadGT: {}

batch_size: 16

shuffle: true

drop_last: true

use_shared_memory: true

collate_batch: true

EvalReader:

sample_transforms:

- Decode: {}

- Resize: {target_size: *eval_size, keep_ratio: False, interp: 2}

- NormalizeImage: {mean: [0., 0., 0.], std: [1., 1., 1.], norm_type: none}

- Permute: {}

batch_size: 16

TestReader:

inputs_def:

image_shape: [3, *eval_height, *eval_width]

sample_transforms:

- Decode: {}

- Resize: {target_size: *eval_size, keep_ratio: False, interp: 2}

- NormalizeImage: {mean: [0., 0., 0.], std: [1., 1., 1.], norm_type: none}

- Permute: {}

batch_size: 8

训练参数列表:(可通过–help查看)

| FLAG | 支持脚本 | 用途 | 默认值 | 备注 |

|---|---|---|---|---|

| -c | ALL | 指定配置文件 | None | 必选,例如-c configs/faster_rcnn/faster_rcnn_r50_fpn_1x_coco.yml |

| -o | ALL | 设置或更改配置文件里的参数内容 | None | 相较于-c设置的配置文件有更高优先级,例如:-o use_gpu=False |

| –eval | train | 是否边训练边测试 | False | 如需指定,直接--eval即可 |

| -r/–resume_checkpoint | train | 恢复训练加载的权重路径 | None | 例如:-r output/faster_rcnn_r50_1x_coco/10000 |

| –slim_config | ALL | 模型压缩策略配置文件 | None | 例如--slim_config configs/slim/prune/yolov3_prune_l1_norm.yml |

| –use_vdl | train/infer | 是否使用VisualDL记录数据,进而在VisualDL面板中显示 | False | VisualDL需Python>=3.5 |

| –vdl_log_dir | train/infer | 指定 VisualDL 记录数据的存储路径 | train:vdl_log_dir/scalar infer: vdl_log_dir/image |

VisualDL需Python>=3.5 |

| –output_eval | eval | 评估阶段保存json路径 | None | 例如 --output_eval=eval_output, 默认为当前路径 |

| –json_eval | eval | 是否通过已存在的bbox.json或者mask.json进行评估 | False | 如需指定,直接--json_eval即可, json文件路径在--output_eval中设置 |

| –classwise | eval | 是否评估单类AP和绘制单类PR曲线 | False | 如需指定,直接--classwise即可 |

| –output_dir | infer/export_model | 预测后结果或导出模型保存路径 | ./output |

例如--output_dir=output |

| –draw_threshold | infer | 可视化时分数阈值 | 0.5 | 例如--draw_threshold=0.7 |

| –infer_dir | infer | 用于预测的图片文件夹路径 | None | --infer_img和--infer_dir必须至少设置一个 |

| –infer_img | infer | 用于预测的图片路径 | None | --infer_img和--infer_dir必须至少设置一个,infer_img具有更高优先级 |

| –save_results | infer | 是否在文件夹下将图片的预测结果保存到文件中 | False | 可选 |

- 之前有ppyoloe+s,lr=0.0002,epoch=40,时间花了2572s,map=77.6%.

- bs=16,lr=0.00025,epoch=60,mAP(0.50, 11point) = 84.71%

# bs=16,60epoch花了3571s

%cd ~/work/PaddleDetection/

!python -u tools/train.py -c configs/ppyoloe/ppyoloe_plus_crn_l_80e_coco-Copy1.yml \

--use_vdl=true \

--vdl_log_dir=vdl_dir/scalar \

--eval \

--amp

《VisualDL可视化的使用方法》

!visualdl --logdir PaddleDetection/vdl_dir/scalar这种是打不开的,因为用的是别人的服务器- 要使用直接用AI Stadio 上左侧的可视化窗口就行

- 很多参数用–xx来写是不行的,–help里面列出的都是train.py文件本身加的一些参数,可以用–来更改。train.py之外的参数,需要前面加-o来改,比如-o weight=‘path’,output_dir=‘path’,log_iter/snapshot_epoch/lr等等。这样直接在跑的时候改参数就行,不用每次都改yaml文件。

#

# bs=16,60epoch花了3571s

%cd ~/work/PaddleDetection/

!python -u tools/train.py -c configs/ppyoloe/ppyoloe_plus_crn_l_80e_coco-Copy1.yml \

--use_vdl=true \

--vdl_log_dir=vdl_dir/scalar \

--eval \

-o output_dir=output/ppyoloe_l_plus_10e\

snapshot_epoch=2\

lr=0.0005 \

--amp

/home/aistudio/work/PaddleDetection

W1002 02:11:54.517324 1348 gpu_resources.cc:61] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.2, Runtime API Version: 11.2

W1002 02:11:54.523236 1348 gpu_resources.cc:91] device: 0, cuDNN Version: 8.2.

[10/02 02:11:56] ppdet.utils.download INFO: Downloading ppyoloe_crn_l_obj365_pretrained.pdparams from https://bj.bcebos.com/v1/paddledet/models/pretrained/ppyoloe_crn_l_obj365_pretrained.pdparams

100%|████████████████████████████████| 207813/207813 [00:04<00:00, 44873.76KB/s]

[10/02 02:12:02] ppdet.utils.checkpoint INFO: Finish loading model weights: /home/aistudio/.cache/paddle/weights/ppyoloe_crn_l_obj365_pretrained.pdparams

[10/02 02:12:04] ppdet.engine INFO: Epoch: [0] [ 0/75] learning_rate: 0.000000 loss: 4.481521 loss_cls: 2.520099 loss_iou: 0.469868 loss_dfl: 1.573502 loss_l1: 1.543327 eta: 2:59:06 batch_cost: 2.3881 data_cost: 0.0005 ips: 6.6999 images/s

Found inf or nan, current scale is: 1024.0, decrease to: 1024.0*0.5

Found inf or nan, current scale is: 512.0, decrease to: 512.0*0.5

[10/02 02:12:52] ppdet.engine INFO: Epoch: [1] [ 0/75] learning_rate: 0.000063 loss: 4.358507 loss_cls: 2.552031 loss_iou: 0.381841 loss_dfl: 1.487746 loss_l1: 1.117891 eta: 0:42:08 batch_cost: 0.5713 data_cost: 0.0048 ips: 28.0056 images/s

[10/02 02:13:40] ppdet.engine INFO: Epoch: [2] [ 0/75] learning_rate: 0.000125 loss: 3.417750 loss_cls: 1.887220 loss_iou: 0.335269 loss_dfl: 1.236278 loss_l1: 0.810590 eta: 0:39:58 batch_cost: 0.5514 data_cost: 0.0061 ips: 29.0193 images/s

[10/02 02:14:29] ppdet.engine INFO: Epoch: [3] [ 0/75] learning_rate: 0.000188 loss: 2.748218 loss_cls: 1.460885 loss_iou: 0.297337 loss_dfl: 1.116637 loss_l1: 0.591470 eta: 0:39:16 batch_cost: 0.5468 data_cost: 0.0072 ips: 29.2587 images/s

[10/02 02:15:18] ppdet.engine INFO: Epoch: [4] [ 0/75] learning_rate: 0.000250 loss: 2.616204 loss_cls: 1.336550 loss_iou: 0.280882 loss_dfl: 1.083125 loss_l1: 0.534236 eta: 0:38:38 batch_cost: 0.5527 data_cost: 0.0077 ips: 28.9473 images/s

[10/02 02:16:08] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:16:10] ppdet.engine INFO: Eval iter: 0

[10/02 02:16:16] ppdet.metrics.metrics INFO: Accumulating evaluatation results...

[10/02 02:16:16] ppdet.metrics.metrics INFO: mAP(0.50, 11point) = 56.13%

[10/02 02:16:16] ppdet.engine INFO: Total sample number: 200, averge FPS: 26.627658767732193

[10/02 02:16:17] ppdet.engine INFO: Best test bbox ap is 0.561.

[10/02 02:16:23] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:16:24] ppdet.engine INFO: Epoch: [5] [ 0/75] learning_rate: 0.000250 loss: 2.568801 loss_cls: 1.325704 loss_iou: 0.275041 loss_dfl: 1.060024 loss_l1: 0.521037 eta: 0:37:44 batch_cost: 0.5458 data_cost: 0.0046 ips: 29.3125 images/s

[10/02 02:17:12] ppdet.engine INFO: Epoch: [6] [ 0/75] learning_rate: 0.000249 loss: 2.456914 loss_cls: 1.281628 loss_iou: 0.267069 loss_dfl: 1.025206 loss_l1: 0.489662 eta: 0:37:04 batch_cost: 0.5473 data_cost: 0.0045 ips: 29.2325 images/s

[10/02 02:18:01] ppdet.engine INFO: Epoch: [7] [ 0/75] learning_rate: 0.000249 loss: 2.370815 loss_cls: 1.203813 loss_iou: 0.258562 loss_dfl: 0.995687 loss_l1: 0.452931 eta: 0:36:19 batch_cost: 0.5485 data_cost: 0.0069 ips: 29.1685 images/s

[10/02 02:18:49] ppdet.engine INFO: Epoch: [8] [ 0/75] learning_rate: 0.000248 loss: 2.295363 loss_cls: 1.152321 loss_iou: 0.252165 loss_dfl: 0.973720 loss_l1: 0.436992 eta: 0:35:35 batch_cost: 0.5499 data_cost: 0.0089 ips: 29.0954 images/s

[10/02 02:19:38] ppdet.engine INFO: Epoch: [9] [ 0/75] learning_rate: 0.000247 loss: 2.245720 loss_cls: 1.134426 loss_iou: 0.247205 loss_dfl: 0.970247 loss_l1: 0.430111 eta: 0:34:53 batch_cost: 0.5468 data_cost: 0.0095 ips: 29.2599 images/s

[10/02 02:20:29] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:20:29] ppdet.engine INFO: Eval iter: 0

[10/02 02:20:36] ppdet.metrics.metrics INFO: Accumulating evaluatation results...

[10/02 02:20:36] ppdet.metrics.metrics INFO: mAP(0.50, 11point) = 67.52%

[10/02 02:20:36] ppdet.engine INFO: Total sample number: 200, averge FPS: 29.694107725586296

[10/02 02:20:36] ppdet.engine INFO: Best test bbox ap is 0.675.

[10/02 02:20:42] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:20:43] ppdet.engine INFO: Epoch: [10] [ 0/75] learning_rate: 0.000245 loss: 2.214799 loss_cls: 1.128656 loss_iou: 0.240882 loss_dfl: 0.962219 loss_l1: 0.404927 eta: 0:34:14 batch_cost: 0.5493 data_cost: 0.0046 ips: 29.1285 images/s

[10/02 02:21:34] ppdet.engine INFO: Epoch: [11] [ 0/75] learning_rate: 0.000244 loss: 2.188340 loss_cls: 1.103160 loss_iou: 0.236131 loss_dfl: 0.962468 loss_l1: 0.406858 eta: 0:33:42 batch_cost: 0.5609 data_cost: 0.0043 ips: 28.5266 images/s

[10/02 02:22:24] ppdet.engine INFO: Epoch: [12] [ 0/75] learning_rate: 0.000242 loss: 2.143307 loss_cls: 1.091550 loss_iou: 0.233911 loss_dfl: 0.964779 loss_l1: 0.407539 eta: 0:33:03 batch_cost: 0.5672 data_cost: 0.0052 ips: 28.2102 images/s

[10/02 02:23:12] ppdet.engine INFO: Epoch: [13] [ 0/75] learning_rate: 0.000239 loss: 2.135755 loss_cls: 1.079319 loss_iou: 0.233911 loss_dfl: 0.954541 loss_l1: 0.403308 eta: 0:32:18 batch_cost: 0.5558 data_cost: 0.0076 ips: 28.7890 images/s

[10/02 02:24:00] ppdet.engine INFO: Epoch: [14] [ 0/75] learning_rate: 0.000237 loss: 2.139629 loss_cls: 1.070959 loss_iou: 0.236416 loss_dfl: 0.943961 loss_l1: 0.396193 eta: 0:31:36 batch_cost: 0.5484 data_cost: 0.0088 ips: 29.1783 images/s

[10/02 02:24:52] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:24:52] ppdet.engine INFO: Eval iter: 0

[10/02 02:24:58] ppdet.metrics.metrics INFO: Accumulating evaluatation results...

[10/02 02:24:58] ppdet.metrics.metrics INFO: mAP(0.50, 11point) = 71.62%

[10/02 02:24:58] ppdet.engine INFO: Total sample number: 200, averge FPS: 33.06715962648959

[10/02 02:24:58] ppdet.engine INFO: Best test bbox ap is 0.716.

[10/02 02:25:04] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:25:05] ppdet.engine INFO: Epoch: [15] [ 0/75] learning_rate: 0.000234 loss: 2.110590 loss_cls: 1.059678 loss_iou: 0.234108 loss_dfl: 0.935301 loss_l1: 0.393397 eta: 0:30:55 batch_cost: 0.5516 data_cost: 0.0055 ips: 29.0044 images/s

[10/02 02:25:56] ppdet.engine INFO: Epoch: [16] [ 0/75] learning_rate: 0.000231 loss: 2.087882 loss_cls: 1.058837 loss_iou: 0.225832 loss_dfl: 0.919775 loss_l1: 0.382988 eta: 0:30:18 batch_cost: 0.5601 data_cost: 0.0059 ips: 28.5642 images/s

[10/02 02:26:45] ppdet.engine INFO: Epoch: [17] [ 0/75] learning_rate: 0.000228 loss: 2.082806 loss_cls: 1.051872 loss_iou: 0.222922 loss_dfl: 0.919006 loss_l1: 0.375779 eta: 0:29:37 batch_cost: 0.5566 data_cost: 0.0057 ips: 28.7453 images/s

[10/02 02:27:33] ppdet.engine INFO: Epoch: [18] [ 0/75] learning_rate: 0.000225 loss: 2.075028 loss_cls: 1.031468 loss_iou: 0.229123 loss_dfl: 0.929744 loss_l1: 0.376942 eta: 0:28:54 batch_cost: 0.5498 data_cost: 0.0083 ips: 29.0990 images/s

[10/02 02:28:23] ppdet.engine INFO: Epoch: [19] [ 0/75] learning_rate: 0.000221 loss: 2.069324 loss_cls: 1.018056 loss_iou: 0.229435 loss_dfl: 0.934903 loss_l1: 0.376221 eta: 0:28:15 batch_cost: 0.5594 data_cost: 0.0093 ips: 28.6033 images/s

[10/02 02:29:14] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:29:15] ppdet.engine INFO: Eval iter: 0

[10/02 02:29:20] ppdet.metrics.metrics INFO: Accumulating evaluatation results...

[10/02 02:29:21] ppdet.metrics.metrics INFO: mAP(0.50, 11point) = 73.32%

[10/02 02:29:21] ppdet.engine INFO: Total sample number: 200, averge FPS: 33.009636989961244

[10/02 02:29:21] ppdet.engine INFO: Best test bbox ap is 0.733.

[10/02 02:29:27] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:29:28] ppdet.engine INFO: Epoch: [20] [ 0/75] learning_rate: 0.000217 loss: 2.052241 loss_cls: 1.013339 loss_iou: 0.226882 loss_dfl: 0.927668 loss_l1: 0.371821 eta: 0:27:33 batch_cost: 0.5552 data_cost: 0.0067 ips: 28.8174 images/s

[10/02 02:30:17] ppdet.engine INFO: Epoch: [21] [ 0/75] learning_rate: 0.000213 loss: 2.019797 loss_cls: 1.012322 loss_iou: 0.219845 loss_dfl: 0.907858 loss_l1: 0.363873 eta: 0:26:52 batch_cost: 0.5542 data_cost: 0.0063 ips: 28.8687 images/s

[10/02 02:31:06] ppdet.engine INFO: Epoch: [22] [ 0/75] learning_rate: 0.000209 loss: 2.003052 loss_cls: 1.007675 loss_iou: 0.218846 loss_dfl: 0.903147 loss_l1: 0.354434 eta: 0:26:11 batch_cost: 0.5479 data_cost: 0.0047 ips: 29.2009 images/s

[10/02 02:31:54] ppdet.engine INFO: Epoch: [23] [ 0/75] learning_rate: 0.000205 loss: 2.005725 loss_cls: 1.007721 loss_iou: 0.221210 loss_dfl: 0.902577 loss_l1: 0.352408 eta: 0:25:28 batch_cost: 0.5450 data_cost: 0.0076 ips: 29.3598 images/s

[10/02 02:32:44] ppdet.engine INFO: Epoch: [24] [ 0/75] learning_rate: 0.000200 loss: 1.998717 loss_cls: 1.003333 loss_iou: 0.218765 loss_dfl: 0.905797 loss_l1: 0.354777 eta: 0:24:48 batch_cost: 0.5597 data_cost: 0.0086 ips: 28.5874 images/s

[10/02 02:33:35] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:33:35] ppdet.engine INFO: Eval iter: 0

[10/02 02:33:41] ppdet.metrics.metrics INFO: Accumulating evaluatation results...

[10/02 02:33:41] ppdet.metrics.metrics INFO: mAP(0.50, 11point) = 74.45%

[10/02 02:33:41] ppdet.engine INFO: Total sample number: 200, averge FPS: 33.77501799351638

[10/02 02:33:41] ppdet.engine INFO: Best test bbox ap is 0.744.

[10/02 02:33:47] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:33:48] ppdet.engine INFO: Epoch: [25] [ 0/75] learning_rate: 0.000196 loss: 1.993038 loss_cls: 0.984209 loss_iou: 0.216039 loss_dfl: 0.906242 loss_l1: 0.360660 eta: 0:24:05 batch_cost: 0.5523 data_cost: 0.0064 ips: 28.9706 images/s

[10/02 02:34:37] ppdet.engine INFO: Epoch: [26] [ 0/75] learning_rate: 0.000191 loss: 1.969199 loss_cls: 0.963072 loss_iou: 0.215468 loss_dfl: 0.897904 loss_l1: 0.352217 eta: 0:23:24 batch_cost: 0.5521 data_cost: 0.0059 ips: 28.9794 images/s

[10/02 02:35:26] ppdet.engine INFO: Epoch: [27] [ 0/75] learning_rate: 0.000186 loss: 1.957535 loss_cls: 0.965957 loss_iou: 0.214567 loss_dfl: 0.888648 loss_l1: 0.345673 eta: 0:22:43 batch_cost: 0.5481 data_cost: 0.0059 ips: 29.1935 images/s

[10/02 02:36:14] ppdet.engine INFO: Epoch: [28] [ 0/75] learning_rate: 0.000181 loss: 1.963546 loss_cls: 0.981689 loss_iou: 0.214567 loss_dfl: 0.889092 loss_l1: 0.351950 eta: 0:22:00 batch_cost: 0.5413 data_cost: 0.0087 ips: 29.5558 images/s

[10/02 02:37:01] ppdet.engine INFO: Epoch: [29] [ 0/75] learning_rate: 0.000175 loss: 1.951882 loss_cls: 0.966119 loss_iou: 0.212937 loss_dfl: 0.885117 loss_l1: 0.343484 eta: 0:21:17 batch_cost: 0.5421 data_cost: 0.0091 ips: 29.5132 images/s

[10/02 02:37:53] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:37:53] ppdet.engine INFO: Eval iter: 0

[10/02 02:37:58] ppdet.metrics.metrics INFO: Accumulating evaluatation results...

[10/02 02:37:58] ppdet.metrics.metrics INFO: mAP(0.50, 11point) = 74.52%

[10/02 02:37:58] ppdet.engine INFO: Total sample number: 200, averge FPS: 34.75292988056431

[10/02 02:37:59] ppdet.engine INFO: Best test bbox ap is 0.745.

[10/02 02:38:04] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:38:05] ppdet.engine INFO: Epoch: [30] [ 0/75] learning_rate: 0.000170 loss: 1.930174 loss_cls: 0.950383 loss_iou: 0.213558 loss_dfl: 0.879567 loss_l1: 0.334482 eta: 0:20:36 batch_cost: 0.5426 data_cost: 0.0059 ips: 29.4896 images/s

[10/02 02:38:56] ppdet.engine INFO: Epoch: [31] [ 0/75] learning_rate: 0.000165 loss: 1.855506 loss_cls: 0.891626 loss_iou: 0.204144 loss_dfl: 0.895400 loss_l1: 0.400485 eta: 0:19:56 batch_cost: 0.5535 data_cost: 0.0066 ips: 28.9063 images/s

[10/02 02:39:45] ppdet.engine INFO: Epoch: [32] [ 0/75] learning_rate: 0.000159 loss: 1.755724 loss_cls: 0.813473 loss_iou: 0.192575 loss_dfl: 0.908447 loss_l1: 0.467725 eta: 0:19:14 batch_cost: 0.5528 data_cost: 0.0060 ips: 28.9461 images/s

[10/02 02:40:33] ppdet.engine INFO: Epoch: [33] [ 0/75] learning_rate: 0.000154 loss: 1.729833 loss_cls: 0.797897 loss_iou: 0.186383 loss_dfl: 0.908977 loss_l1: 0.460911 eta: 0:18:33 batch_cost: 0.5486 data_cost: 0.0100 ips: 29.1663 images/s

[10/02 02:41:21] ppdet.engine INFO: Epoch: [34] [ 0/75] learning_rate: 0.000148 loss: 1.720339 loss_cls: 0.805504 loss_iou: 0.183566 loss_dfl: 0.916586 loss_l1: 0.443390 eta: 0:17:50 batch_cost: 0.5442 data_cost: 0.0087 ips: 29.4007 images/s

[10/02 02:42:15] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:42:16] ppdet.engine INFO: Eval iter: 0

[10/02 02:42:21] ppdet.metrics.metrics INFO: Accumulating evaluatation results...

[10/02 02:42:21] ppdet.metrics.metrics INFO: mAP(0.50, 11point) = 76.15%

[10/02 02:42:21] ppdet.engine INFO: Total sample number: 200, averge FPS: 35.52207353925214

[10/02 02:42:21] ppdet.engine INFO: Best test bbox ap is 0.762.

[10/02 02:42:27] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:42:28] ppdet.engine INFO: Epoch: [35] [ 0/75] learning_rate: 0.000142 loss: 1.723771 loss_cls: 0.809167 loss_iou: 0.182476 loss_dfl: 0.907972 loss_l1: 0.430918 eta: 0:17:09 batch_cost: 0.5449 data_cost: 0.0065 ips: 29.3628 images/s

[10/02 02:43:18] ppdet.engine INFO: Epoch: [36] [ 0/75] learning_rate: 0.000137 loss: 1.718058 loss_cls: 0.800496 loss_iou: 0.179539 loss_dfl: 0.896233 loss_l1: 0.424874 eta: 0:16:29 batch_cost: 0.5516 data_cost: 0.0053 ips: 29.0086 images/s

[10/02 02:44:09] ppdet.engine INFO: Epoch: [37] [ 0/75] learning_rate: 0.000131 loss: 1.708455 loss_cls: 0.805251 loss_iou: 0.179188 loss_dfl: 0.894763 loss_l1: 0.425437 eta: 0:15:48 batch_cost: 0.5597 data_cost: 0.0060 ips: 28.5869 images/s

[10/02 02:44:59] ppdet.engine INFO: Epoch: [38] [ 0/75] learning_rate: 0.000125 loss: 1.708790 loss_cls: 0.809766 loss_iou: 0.177069 loss_dfl: 0.899452 loss_l1: 0.416282 eta: 0:15:07 batch_cost: 0.5635 data_cost: 0.0080 ips: 28.3930 images/s

[10/02 02:45:46] ppdet.engine INFO: Epoch: [39] [ 0/75] learning_rate: 0.000119 loss: 1.702590 loss_cls: 0.798908 loss_iou: 0.178342 loss_dfl: 0.892567 loss_l1: 0.392278 eta: 0:14:25 batch_cost: 0.5496 data_cost: 0.0082 ips: 29.1121 images/s

[10/02 02:46:40] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:46:41] ppdet.engine INFO: Eval iter: 0

[10/02 02:46:46] ppdet.metrics.metrics INFO: Accumulating evaluatation results...

[10/02 02:46:46] ppdet.metrics.metrics INFO: mAP(0.50, 11point) = 77.01%

[10/02 02:46:46] ppdet.engine INFO: Total sample number: 200, averge FPS: 35.87872620592306

[10/02 02:46:46] ppdet.engine INFO: Best test bbox ap is 0.770.

[10/02 02:46:52] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:46:53] ppdet.engine INFO: Epoch: [40] [ 0/75] learning_rate: 0.000113 loss: 1.693899 loss_cls: 0.793664 loss_iou: 0.179780 loss_dfl: 0.896150 loss_l1: 0.399663 eta: 0:13:44 batch_cost: 0.5480 data_cost: 0.0053 ips: 29.1988 images/s

[10/02 02:47:42] ppdet.engine INFO: Epoch: [41] [ 0/75] learning_rate: 0.000108 loss: 1.684944 loss_cls: 0.793714 loss_iou: 0.178211 loss_dfl: 0.881116 loss_l1: 0.391328 eta: 0:13:02 batch_cost: 0.5430 data_cost: 0.0054 ips: 29.4671 images/s

[10/02 02:48:31] ppdet.engine INFO: Epoch: [42] [ 0/75] learning_rate: 0.000102 loss: 1.684944 loss_cls: 0.790953 loss_iou: 0.177349 loss_dfl: 0.882300 loss_l1: 0.389638 eta: 0:12:21 batch_cost: 0.5458 data_cost: 0.0053 ips: 29.3171 images/s

[10/02 02:49:20] ppdet.engine INFO: Epoch: [43] [ 0/75] learning_rate: 0.000096 loss: 1.680817 loss_cls: 0.785845 loss_iou: 0.176771 loss_dfl: 0.884926 loss_l1: 0.387992 eta: 0:11:40 batch_cost: 0.5490 data_cost: 0.0074 ips: 29.1444 images/s

[10/02 02:50:07] ppdet.engine INFO: Epoch: [44] [ 0/75] learning_rate: 0.000091 loss: 1.664115 loss_cls: 0.781636 loss_iou: 0.175688 loss_dfl: 0.878627 loss_l1: 0.385457 eta: 0:10:58 batch_cost: 0.5403 data_cost: 0.0076 ips: 29.6106 images/s

[10/02 02:51:02] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:51:02] ppdet.engine INFO: Eval iter: 0

[10/02 02:51:07] ppdet.metrics.metrics INFO: Accumulating evaluatation results...

[10/02 02:51:08] ppdet.metrics.metrics INFO: mAP(0.50, 11point) = 76.90%

[10/02 02:51:08] ppdet.engine INFO: Total sample number: 200, averge FPS: 35.45811733157019

[10/02 02:51:08] ppdet.engine INFO: Best test bbox ap is 0.770.

[10/02 02:51:09] ppdet.engine INFO: Epoch: [45] [ 0/75] learning_rate: 0.000085 loss: 1.671754 loss_cls: 0.781011 loss_iou: 0.174941 loss_dfl: 0.880325 loss_l1: 0.378740 eta: 0:10:17 batch_cost: 0.5481 data_cost: 0.0049 ips: 29.1918 images/s

[10/02 02:51:58] ppdet.engine INFO: Epoch: [46] [ 0/75] learning_rate: 0.000080 loss: 1.672976 loss_cls: 0.782366 loss_iou: 0.173936 loss_dfl: 0.885129 loss_l1: 0.378303 eta: 0:09:36 batch_cost: 0.5459 data_cost: 0.0049 ips: 29.3068 images/s

[10/02 02:52:48] ppdet.engine INFO: Epoch: [47] [ 0/75] learning_rate: 0.000075 loss: 1.679924 loss_cls: 0.791866 loss_iou: 0.173251 loss_dfl: 0.892923 loss_l1: 0.371434 eta: 0:08:55 batch_cost: 0.5490 data_cost: 0.0049 ips: 29.1445 images/s

[10/02 02:53:37] ppdet.engine INFO: Epoch: [48] [ 0/75] learning_rate: 0.000069 loss: 1.669277 loss_cls: 0.785255 loss_iou: 0.173793 loss_dfl: 0.879943 loss_l1: 0.384001 eta: 0:08:14 batch_cost: 0.5546 data_cost: 0.0072 ips: 28.8511 images/s

[10/02 02:54:25] ppdet.engine INFO: Epoch: [49] [ 0/75] learning_rate: 0.000064 loss: 1.653534 loss_cls: 0.783021 loss_iou: 0.173808 loss_dfl: 0.865887 loss_l1: 0.377161 eta: 0:07:32 batch_cost: 0.5445 data_cost: 0.0080 ips: 29.3847 images/s

[10/02 02:55:18] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:55:19] ppdet.engine INFO: Eval iter: 0

[10/02 02:55:24] ppdet.metrics.metrics INFO: Accumulating evaluatation results...

[10/02 02:55:24] ppdet.metrics.metrics INFO: mAP(0.50, 11point) = 77.23%

[10/02 02:55:24] ppdet.engine INFO: Total sample number: 200, averge FPS: 36.900204541994455

[10/02 02:55:24] ppdet.engine INFO: Best test bbox ap is 0.772.

[10/02 02:55:30] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:55:31] ppdet.engine INFO: Epoch: [50] [ 0/75] learning_rate: 0.000059 loss: 1.639129 loss_cls: 0.777811 loss_iou: 0.172295 loss_dfl: 0.865005 loss_l1: 0.371313 eta: 0:06:51 batch_cost: 0.5446 data_cost: 0.0056 ips: 29.3792 images/s

[10/02 02:56:18] ppdet.engine INFO: Epoch: [51] [ 0/75] learning_rate: 0.000054 loss: 1.643418 loss_cls: 0.775152 loss_iou: 0.170642 loss_dfl: 0.866506 loss_l1: 0.374402 eta: 0:06:10 batch_cost: 0.5376 data_cost: 0.0058 ips: 29.7619 images/s

[10/02 02:57:07] ppdet.engine INFO: Epoch: [52] [ 0/75] learning_rate: 0.000050 loss: 1.652525 loss_cls: 0.774686 loss_iou: 0.170963 loss_dfl: 0.863157 loss_l1: 0.375742 eta: 0:05:28 batch_cost: 0.5396 data_cost: 0.0068 ips: 29.6510 images/s

[10/02 02:57:56] ppdet.engine INFO: Epoch: [53] [ 0/75] learning_rate: 0.000045 loss: 1.627508 loss_cls: 0.768282 loss_iou: 0.168563 loss_dfl: 0.865570 loss_l1: 0.368651 eta: 0:04:47 batch_cost: 0.5505 data_cost: 0.0093 ips: 29.0646 images/s

[10/02 02:58:45] ppdet.engine INFO: Epoch: [54] [ 0/75] learning_rate: 0.000041 loss: 1.630234 loss_cls: 0.768148 loss_iou: 0.168092 loss_dfl: 0.868954 loss_l1: 0.361416 eta: 0:04:06 batch_cost: 0.5521 data_cost: 0.0096 ips: 28.9806 images/s

[10/02 02:59:39] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 02:59:40] ppdet.engine INFO: Eval iter: 0

[10/02 02:59:45] ppdet.metrics.metrics INFO: Accumulating evaluatation results...

[10/02 02:59:45] ppdet.metrics.metrics INFO: mAP(0.50, 11point) = 76.88%

[10/02 02:59:45] ppdet.engine INFO: Total sample number: 200, averge FPS: 34.83328737953738

[10/02 02:59:45] ppdet.engine INFO: Best test bbox ap is 0.772.

[10/02 02:59:47] ppdet.engine INFO: Epoch: [55] [ 0/75] learning_rate: 0.000037 loss: 1.630969 loss_cls: 0.772440 loss_iou: 0.170143 loss_dfl: 0.868614 loss_l1: 0.365805 eta: 0:03:25 batch_cost: 0.5482 data_cost: 0.0057 ips: 29.1862 images/s

[10/02 03:00:35] ppdet.engine INFO: Epoch: [56] [ 0/75] learning_rate: 0.000033 loss: 1.637988 loss_cls: 0.769367 loss_iou: 0.170256 loss_dfl: 0.869540 loss_l1: 0.361416 eta: 0:02:44 batch_cost: 0.5446 data_cost: 0.0055 ips: 29.3816 images/s

[10/02 03:01:24] ppdet.engine INFO: Epoch: [57] [ 0/75] learning_rate: 0.000029 loss: 1.627233 loss_cls: 0.764908 loss_iou: 0.166364 loss_dfl: 0.872990 loss_l1: 0.351342 eta: 0:02:03 batch_cost: 0.5433 data_cost: 0.0054 ips: 29.4497 images/s

[10/02 03:02:12] ppdet.engine INFO: Epoch: [58] [ 0/75] learning_rate: 0.000025 loss: 1.621432 loss_cls: 0.766320 loss_iou: 0.165519 loss_dfl: 0.872478 loss_l1: 0.342992 eta: 0:01:22 batch_cost: 0.5474 data_cost: 0.0084 ips: 29.2273 images/s

[10/02 03:03:01] ppdet.engine INFO: Epoch: [59] [ 0/75] learning_rate: 0.000022 loss: 1.618331 loss_cls: 0.764125 loss_iou: 0.167583 loss_dfl: 0.870914 loss_l1: 0.356742 eta: 0:00:41 batch_cost: 0.5461 data_cost: 0.0093 ips: 29.2967 images/s

[10/02 03:03:50] ppdet.utils.checkpoint INFO: Save checkpoint: output/ppyoloe_plus_crn_l_80e_coco-Copy1

[10/02 03:03:50] ppdet.engine INFO: Eval iter: 0

[10/02 03:03:55] ppdet.metrics.metrics INFO: Accumulating evaluatation results...

[10/02 03:03:55] ppdet.metrics.metrics INFO: mAP(0.50, 11point) = 77.04%

[10/02 03:03:55] ppdet.engine INFO: Total sample number: 200, averge FPS: 36.8503525942714

[10/02 03:03:55] ppdet.engine INFO: Best test bbox ap is 0.772.

4.4.2 推理测试集

--draw_threshold:可视化时分数的阈值,默认大于0.5的box会显示出来keep_top_k表示设置输出目标的最大数量,默认值为100,用户可以根据自己的实际情况进行设定。- 推理默认只输出图片,以前设置

--save_txt=True会输出txt文件存储bbox,新版本--save_txt没了,改成了--save_results=True,存储bbox为json文件。我也是醉了,版本一直改,参数一直动,我的学到啥时候。 - 保存的模型太多了,最佳模型移动到

ppyoloes_plus_80e文件夹,其它都删了

!python tools/infer.py -c configs/ppyoloe/ppyoloe_plus_crn_l_80e_coco-Copy1.yml \

--infer_dir=../VOCdevkit/test/images \

--output_dir=infer_output/ \

-o weights=output/ppyoloe_l_plus_80e/best_model.pdparams \

--draw_threshold=0.5 \

--save_results=True \

from PIL import Image

image_test='infer_output/1406.jpg'

image = Image.open(image_test)

image.show()

![]()

剩下数据处理生成比赛格式的csv文件,见我另一篇帖子《paddle学习赛——钢铁目标检测(yolov5、ppyoloe+,Faster-RCNN)》,实在是懒得再写了。