CNN网络的搭建(Lenet5与ResNet18)

CNN介绍

这里给出维基百科中对于卷积神经网络简介

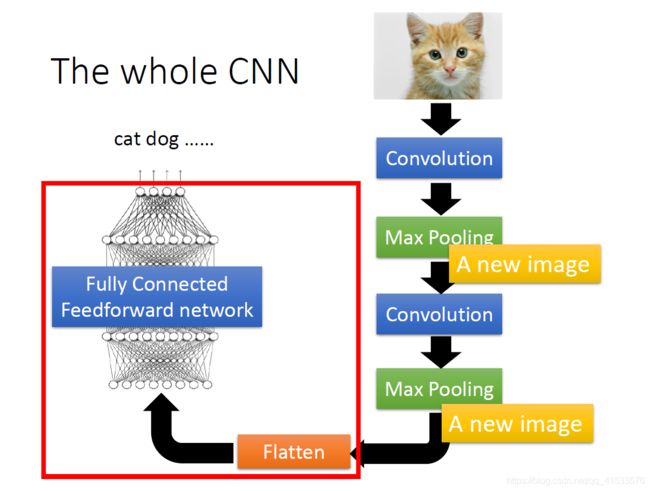

卷积神经网络(Convolutional Neural Network, CNN)是一种前馈神经网络,它的人工神经元可以响应一部分覆盖范围内的周围单元,对于大型图像处理有出色表现。

卷积神经网络由一个或多个卷积层和顶端的全连通层(对应经典的神经网络)组成,同时也包括关联权重和池化层(pooling layer)。这一结构使得卷积神经网络能够利用输入数据的二维结构。与其他深度学习结构相比,卷积神经网络在图像和语音识别方面能够给出更好的结果。这一模型也可以使用反向传播算法进行训练。相比较其他深度、前馈神经网络,卷积神经网络需要考量的参数更少,使之成为一种颇具吸引力的深度学习结构。
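To make the "fewer parameters" point concrete, here is a small arithmetic sketch in plain Python (no PyTorch needed). It compares LeNet-5's first convolution (6 kernels of 5×5 on a 32×32×1 input, producing 6@28×28) with a hypothetical fully connected layer that produces an output of the same size from the same input. The helper names are made up for illustration, and biases are counted as with PyTorch's default `bias=True`.

```python
def conv2d_params(c_in, c_out, k):
    """Weights (c_out * c_in * k * k) plus one bias per output channel."""
    return c_out * c_in * k * k + c_out

def linear_params(n_in, n_out):
    """Weights (n_in * n_out) plus one bias per output unit."""
    return n_in * n_out + n_out

# LeNet-5 C1: 6 shared 5x5 kernels on a single-channel image
conv = conv2d_params(c_in=1, c_out=6, k=5)
# A dense layer needs one unit per output value (6 maps of 28x28)
dense = linear_params(n_in=32 * 32 * 1, n_out=6 * 28 * 28)

print(conv)   # 156
print(dense)  # 4821600
```

The gap comes from weight sharing: each 5×5 kernel is reused at every position of the image instead of learning a separate weight for every input/output pair.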

For a more detailed introduction to CNNs, see the two blog posts below; this article focuses on building CNN networks.

Convolutional Neural Network(part 1)

Convolutional Neural Network(part 2)

Environment

- Python 3.8

- PyTorch 1.8.1, GPU version

- IDE: Jupyter Notebook

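Recognizing MNIST Handwritten Digits with LeNet5

The MNIST dataset

MNIST is an image dataset of handwritten digits containing 70,000 images in total. It is split 60,000/10,000 into training and test sets, and every image is a 28×28×1 grayscale image.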

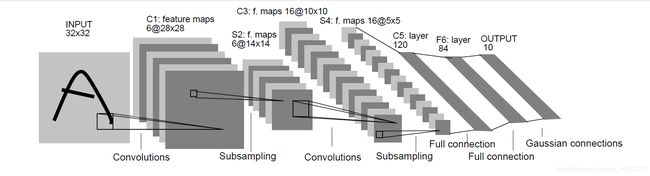

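LeNet structure

Excluding the input layer, LeNet-5 has seven layers, and its structure is clear from the figure:

- C1 (convolutional layer): 6@28×28

  Uses 6 convolution kernels of size 5×5 with stride 1; a 32×32 input image passing through C1 yields 6 feature maps of size 28×28.

- S2 (pooling layer): 6@14×14

  The pooling window is 2×2 with stride 2; after pooling the tensor is 6@14×14.

- C3 (convolutional layer): 16@10×10

  Uses 16 convolution kernels of size 5×5 with stride 1; C3 outputs 16 feature maps of size 10×10.

- S4 (pooling layer): 16@5×5

  The pooling window is 2×2 with stride 2; after pooling the tensor is 16@5×5.

- C5 (convolutional layer): 120@1×1

  The input from S4 consists of 16 maps of size 5×5, the same size as the kernel, so each output map is 1×1 and C5 can be treated as a fully connected layer.

- F6 (fully connected layer): 84

  The 120-dimensional output of C5 is fed into a fully connected layer with output dimension 84.

- Output (output layer): 10

  The output layer is also fully connected, with output dimension 10, one per digit.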

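Appendix: relationship between kernel_size, padding, stride and the output size

Given an input of size $(H, W)$, a kernel of size $(F_h, F_w)$, padding $P$ and stride $S$, the output size $(O_h, O_w)$ is

$$O_h = \left\lfloor \frac{H + 2P - F_h}{S} \right\rfloor + 1, \qquad O_w = \left\lfloor \frac{W + 2P - F_w}{S} \right\rfloor + 1$$

The floor only matters when $S$ does not divide the numerator evenly; PyTorch rounds down by default.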
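The formula can be checked with a few lines of plain Python. The sketch below uses a hypothetical helper `out_size` with integer floor division (matching the default rounding of `nn.Conv2d` and `nn.MaxPool2d`; pooling uses the same formula with the window size as $F$) and traces the LeNet layers built later in this article on a 28×28 MNIST input, plus the 32×32 input of the original C1.

```python
def out_size(size, kernel, padding=0, stride=1):
    """floor((size + 2*padding - kernel) / stride) + 1 for one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1

# (name, kernel F, padding P, stride S) for the LeNet layers used in this article
lenet = [
    ("C1 conv 5x5, P=2", 5, 2, 1),
    ("S2 max pool 2x2", 2, 0, 2),
    ("C3 conv 5x5", 5, 0, 1),
    ("S4 max pool 2x2", 2, 0, 2),
]

size = 28                      # MNIST images are 28x28
sizes = []
for name, k, p, s in lenet:
    size = out_size(size, k, p, s)
    sizes.append(size)
    print(f"{name}: {size}x{size}")

# The original LeNet-5 feeds 32x32 images into C1 without padding
print(out_size(32, 5))  # 28
```

Padding of 2 on a 28×28 input therefore gives the same 28×28 C1 output as the unpadded 32×32 input of the original network.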

Implementation

1. Import the required packages

```python
import torch
import torch.nn as nn
from torchvision import datasets, transforms
from torch.utils.data import DataLoader
from torch import optim
```

2. Load the dataset

```python
# Hyperparameters
batch_size = 128
epoches = 30
learning_rate = 0.01

# Load the datasets
# On the first run, set download=True
train_dataset = datasets.MNIST('../data', train=True, transform=transforms.ToTensor(), download=False)
test_dataset = datasets.MNIST('../data', train=False, transform=transforms.ToTensor(), download=False)

train_dataloader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
# The test set does not need shuffling
test_dataloader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)
```

3. Define the network structure

```python
class LeNet(nn.Module):
    def __init__(self, ch_in, ch_out):
        super(LeNet, self).__init__()
        self.conv_unit = nn.Sequential(
            # MNIST images are 28*28*1, so padding is set to 2 to keep C1's output at 28*28
            # out: 28,28,6
            nn.Conv2d(ch_in, 6, kernel_size=5, stride=1, padding=2),
            nn.ReLU(),
            # out: 14,14,6
            nn.MaxPool2d(kernel_size=2, stride=2, padding=0),
            # out: 10,10,16
            nn.Conv2d(6, 16, kernel_size=5, stride=1, padding=0),
            nn.ReLU(),
            # out: 5,5,16
            nn.MaxPool2d(kernel_size=2, stride=2, padding=0)
        )
        self.fc_unit = nn.Sequential(
            nn.Flatten(),
            # C5: 16*5*5 -> 120 (equivalent to a 5x5 convolution on a 5x5 input)
            nn.Linear(16*5*5, 120),
            nn.ReLU(),
            # F6: 120 -> 84
            nn.Linear(120, 84),
            nn.ReLU(),
            # Output layer: 84 -> number of classes
            nn.Linear(84, ch_out)
        )

    def forward(self, x):
        # x: [b, ch_in, 28, 28]
        out = self.conv_unit(x)
        # out: [b, ch_out] raw logits (CrossEntropyLoss applies softmax internally)
        out = self.fc_unit(out)
        return out
```
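As a sanity check of the layer sizes, the parameter count of `LeNet(1, 10)` can be worked out by hand: a convolution has `c_out * c_in * k * k` weights plus `c_out` biases, and a linear layer has `n_in * n_out` weights plus `n_out` biases (PyTorch's default `bias=True`). The plain-Python sketch below does that arithmetic; on the real model, `sum(p.numel() for p in model.parameters())` should report the same total, since ReLU, max pooling and Flatten have no parameters.

```python
def conv_params(c_in, c_out, k):
    return c_out * c_in * k * k + c_out

def linear_params(n_in, n_out):
    return n_in * n_out + n_out

# Layer-by-layer parameter counts for LeNet(ch_in=1, ch_out=10) as defined above
layers = {
    "conv 1->6, 5x5": conv_params(1, 6, 5),
    "conv 6->16, 5x5": conv_params(6, 16, 5),
    "linear 400->120": linear_params(16 * 5 * 5, 120),
    "linear 120->84": linear_params(120, 84),
    "linear 84->10": linear_params(84, 10),
}
for name, n in layers.items():
    print(f"{name:>16}: {n}")

total = sum(layers.values())
print("total:", total)  # total: 61706
```

Most of the parameters sit in the first fully connected layer (400 -> 120), not in the convolutions.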

4. Training and testing

```python
# Fall back to the CPU if no GPU is available
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
model = LeNet(1, 10)
model.to(device)

# Define the optimizer and loss function
optimizer = optim.SGD(model.parameters(), lr=learning_rate, momentum=0.9)
criterion = nn.CrossEntropyLoss().to(device)
# print(model)

for epoch in range(epoches):
    # Training (switch back from eval mode used in the previous epoch's test)
    model.train()
    for batch_idx, (x, label) in enumerate(train_dataloader):
        x, label = x.to(device), label.to(device)
        # print(x.shape)
        # Clear the gradients left over from the previous iteration
        optimizer.zero_grad()
        logits = model(x)
        loss = criterion(logits, label)
        loss.backward()
        optimizer.step()
    # Loss of the last batch in this epoch
    print("epoch", epoch+1, ", loss:", loss.item())

    # Testing
    model.eval()
    with torch.no_grad():
        total_correct = 0
        total_num = 0
        for x, label in test_dataloader:
            x, label = x.to(device), label.to(device)
            logits = model(x)
            pred = logits.argmax(dim=1)
            correct = torch.eq(pred, label).float().sum().item()
            total_correct += correct
            total_num += x.size(0)
        acc = total_correct / total_num
        print("epoch", epoch+1, ", test acc:", acc*100, "%")
    print('--------------------------------------')
```

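One pitfall I ran into: in every training iteration, optimizer.zero_grad() must be called before loss.backward() to clear the previous gradients. PyTorch accumulates gradients into .grad by default, so without clearing them each update uses the sum of all past gradients and the loss does not decrease properly.

(I had misunderstood this function and put it in the wrong place, so in my first training run the loss stayed at about 2.30 for all 30 epochs. That is roughly ln 10, the cross-entropy of guessing uniformly among 10 classes. Finding the bug took a lot of time...)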
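The effect of never clearing gradients can be reproduced without PyTorch. The toy sketch below is a plain-Python analogy, not PyTorch's implementation: it runs gradient descent on f(w) = (w - 3)^2, and the `clear_grad=False` branch mimics calling `loss.backward()` repeatedly without `optimizer.zero_grad()`, so every past gradient keeps being added into the update. The function and parameter names are hypothetical.

```python
def grad(w):
    """Derivative of f(w) = (w - 3)^2."""
    return 2.0 * (w - 3.0)

def train(steps, lr, clear_grad):
    w, g = 0.0, 0.0
    history = []
    for _ in range(steps):
        if clear_grad:
            g = 0.0          # role of optimizer.zero_grad()
        g += grad(w)         # role of loss.backward(): add into the stored gradient
        w -= lr * g          # role of optimizer.step() for plain SGD
        history.append(w)
    return history

ok = train(100, 0.1, clear_grad=True)
bad = train(100, 0.1, clear_grad=False)
print(round(ok[-1], 6))                         # converges to 3.0
print(max(abs(w - 3.0) for w in bad[-20:]))     # still far from the minimum
```

With the gradient cleared, w converges to the minimum at 3; with accumulation, w keeps oscillating around 3 and never settles, which is the same "training goes nowhere" symptom, although a real network behaves in a more complicated way.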

The training and test results are as follows:

```
epoch 1 , loss: 0.25060728192329407
epoch 1 , test acc: 95.28 %
--------------------------------------
epoch 2 , loss: 0.15242652595043182
epoch 2 , test acc: 97.58 %
--------------------------------------
epoch 3 , loss: 0.08858081698417664
epoch 3 , test acc: 97.56 %
--------------------------------------
epoch 4 , loss: 0.04381973668932915
epoch 4 , test acc: 98.14 %
--------------------------------------
epoch 5 , loss: 0.13074694573879242
epoch 5 , test acc: 98.42999999999999 %
--------------------------------------
epoch 6 , loss: 0.0805463120341301
epoch 6 , test acc: 98.5 %
--------------------------------------
epoch 7 , loss: 0.058462340384721756
epoch 7 , test acc: 98.8 %
--------------------------------------
epoch 8 , loss: 0.06933911144733429
epoch 8 , test acc: 98.82 %
--------------------------------------
epoch 9 , loss: 0.0038293811958283186
epoch 9 , test acc: 98.83 %
--------------------------------------
epoch 10 , loss: 0.02644767425954342
epoch 10 , test acc: 98.9 %
--------------------------------------
epoch 11 , loss: 0.01148241013288498
epoch 11 , test acc: 98.89 %
--------------------------------------
epoch 12 , loss: 0.0471804253757
epoch 12 , test acc: 98.78 %
--------------------------------------
epoch 13 , loss: 0.0202518068253994
epoch 13 , test acc: 98.91 %
--------------------------------------
epoch 14 , loss: 0.01432273630052805
epoch 14 , test acc: 98.91 %
--------------------------------------
epoch 15 , loss: 0.016958141699433327
epoch 15 , test acc: 99.00999999999999 %
--------------------------------------
epoch 16 , loss: 0.02109897881746292
epoch 16 , test acc: 98.78 %
--------------------------------------
epoch 17 , loss: 0.0031826579943299294
epoch 17 , test acc: 98.78 %
--------------------------------------
epoch 18 , loss: 0.009551439434289932
epoch 18 , test acc: 98.85000000000001 %
--------------------------------------
epoch 19 , loss: 0.014796148054301739
epoch 19 , test acc: 98.8 %
--------------------------------------
epoch 20 , loss: 0.025980213657021523
epoch 20 , test acc: 98.96000000000001 %
--------------------------------------
epoch 21 , loss: 0.001810354646295309
epoch 21 , test acc: 98.94 %
--------------------------------------
epoch 22 , loss: 0.00903941411525011
epoch 22 , test acc: 98.88 %
--------------------------------------
epoch 23 , loss: 0.015878723934292793
epoch 23 , test acc: 98.9 %
--------------------------------------
epoch 24 , loss: 0.0033505165483802557
epoch 24 , test acc: 98.97 %
--------------------------------------
epoch 25 , loss: 0.01157588604837656
epoch 25 , test acc: 99.07000000000001 %
--------------------------------------
epoch 26 , loss: 0.025459831580519676
epoch 26 , test acc: 98.95 %
--------------------------------------
epoch 27 , loss: 0.009870924986898899
epoch 27 , test acc: 99.15 %
--------------------------------------
epoch 28 , loss: 0.0002311600255779922
epoch 28 , test acc: 98.92999999999999 %
--------------------------------------
epoch 29 , loss: 0.00029546089353971183
epoch 29 , test acc: 98.98 %
--------------------------------------
epoch 30 , loss: 0.004764525685459375
epoch 30 , test acc: 98.95 %
--------------------------------------
```

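After 30 epochs, LeNet-5 reaches close to 99% accuracy on the MNIST test set, which is a good result. However, MNIST consists of grayscale images and is not a hard task, so LeNet-5 may perform considerably worse on more complex datasets. For example, on CIFAR-10, LeNet-5 reached only about 55% accuracy after 10 epochs and improved slowly, plateauing at about 64% after 30 epochs; the code and results of that experiment are not shown here.

Next, we build the more complex ResNet18 and train and test it on the CIFAR-10 dataset.

Classifying the CIFAR-10 Dataset with ResNet18

The CIFAR-10 dataset

CIFAR-10 is a dataset of 60,000 images. Each image is a 32×32 color image in which every pixel has three RGB values ranging from 0 to 255. The images belong to 10 classes: airplane, automobile, bird, cat, deer, dog, frog, horse, ship and truck. The data is split 50,000/10,000 into training and test sets.

ResNet18 structure

The ResNet18 architecture is much more complex than LeNet-5. It is not described in detail here; instead, it is explained with comments in the code.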

Implementation

1. Import packages

```python
import torch
import torch.nn as nn
from torchvision import datasets, transforms
from torch.utils.data import DataLoader
from torch import optim
from torch.nn import functional as F
```

2. Load the dataset

```python
batch_size = 128
epoches = 100
learning_rate = 0.01

train_dataset = datasets.CIFAR10('../data/CIFAR_10', train=True, transform=transforms.Compose([
    # Pad the image with 0 on all sides, then randomly crop it back to 32*32
    transforms.RandomCrop(32, padding=4),
    # Flip the image horizontally with probability 0.5
    transforms.RandomHorizontalFlip(),
    transforms.ToTensor(),
    # Per-channel (x - mean) / std, using the ImageNet statistics
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
]), download=False)

test_dataset = datasets.CIFAR10('../data/CIFAR_10', train=False, transform=transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
]), download=False)

train_dataloader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
# The test set does not need shuffling
test_dataloader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)
```
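To illustrate what the training-time transforms do, here is a plain-Python sketch on a single 32×32 channel stored as nested lists. It follows the documented behavior of `RandomCrop(32, padding=4)` (pad with zeros to 40×40, then cut a 32×32 window at a random offset), `RandomHorizontalFlip()` (mirror with probability 0.5) and `Normalize` (per-channel `(x - mean) / std` after `ToTensor` has scaled pixels to [0, 1]). The helper names are hypothetical; torchvision's real implementation works on PIL images and tensors.

```python
import random

def pad(img, p, value=0.0):
    """Pad a 2-D image (list of rows) with `value` by p pixels on every side."""
    width = len(img[0]) + 2 * p
    top = [[value] * width for _ in range(p)]
    middle = [[value] * p + row + [value] * p for row in img]
    bottom = [[value] * width for _ in range(p)]
    return top + middle + bottom

def random_crop(img, size, padding, rng):
    """Like RandomCrop(size, padding): pad, then cut a size x size window at random."""
    padded = pad(img, padding)
    max_offset = len(padded) - size          # 40 - 32 = 8 for CIFAR-10
    y = rng.randint(0, max_offset)           # randint includes both endpoints
    x = rng.randint(0, max_offset)
    return [row[x:x + size] for row in padded[y:y + size]]

def random_hflip(img, rng, p=0.5):
    """Like RandomHorizontalFlip(p): mirror every row with probability p."""
    return [row[::-1] for row in img] if rng.random() < p else img

def normalize(x, mean, std):
    """Like Normalize for one channel: (x - mean) / std, with x already in [0, 1]."""
    return (x - mean) / std

rng = random.Random(0)                                              # fixed seed
img = [[float(r * 32 + c) for c in range(32)] for r in range(32)]   # one 32x32 channel
out = random_hflip(random_crop(img, 32, 4, rng), rng)
print(len(out), len(out[0]))                     # 32 32
print(normalize(255 / 255, 0.485, 0.229))        # red channel of a white pixel
```

Each epoch therefore sees slightly shifted and mirrored versions of the same training images, while the test transform only applies `ToTensor` and `Normalize`.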

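3. Define the network structure

The paper uses 3@224×224 input images, whereas CIFAR-10 images are 3@32×32, so the network is modified slightly in this implementation. Note also that each stage below contains a single residual block instead of two, so the network is shallower than the 18-layer network in the paper.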

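```python
# Residual block
class ResBlk(nn.Module):
    def __init__(self, ch_in, ch_out, stride):
        super(ResBlk, self).__init__()
        self.conv1 = nn.Conv2d(ch_in, ch_out, kernel_size=3, stride=1, padding=1)
        self.bn1 = nn.BatchNorm2d(ch_out)
        self.conv2 = nn.Conv2d(ch_out, ch_out, kernel_size=3, stride=stride, padding=1)
        self.bn2 = nn.BatchNorm2d(ch_out)

        # Identity shortcut by default (an empty Sequential returns its input)
        self.extra = nn.Sequential()
        # Project the shortcut whenever the channel count or the spatial size changes
        if stride != 1 or ch_out != ch_in:
            # [b, ch_in, h, w] => [b, ch_out, h', w'], matching the main path
            self.extra = nn.Sequential(
                nn.Conv2d(ch_in, ch_out, kernel_size=1, stride=stride),
                nn.BatchNorm2d(ch_out)
            )

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        # Shortcut: add the (possibly projected) input directly to the block's output
        # extra module: [b, ch_in, h, w] => [b, ch_out, h', w']
        out = self.extra(x) + out
        out = F.relu(out)
        return out


# ResNet18 body
class ResNet18(nn.Module):
    def __init__(self):
        super(ResNet18, self).__init__()
        # conv1: 7×7, 64, stride=2 in the paper; modified here for 32×32 inputs
        # [b, 3, 32, 32] => [b, 64, 10, 10]
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=3, stride=3, padding=0),
            nn.BatchNorm2d(64)
        )
        # [b, 64, 10, 10] => [b, 128, 5, 5], i.e. conv2_x
        self.blk1 = ResBlk(64, 128, stride=2)
        # [b, 128, 5, 5] => [b, 256, 3, 3], i.e. conv3_x
        self.blk2 = ResBlk(128, 256, stride=2)
        # [b, 256, 3, 3] => [b, 512, 2, 2], i.e. conv4_x
        self.blk3 = ResBlk(256, 512, stride=2)
        # [b, 512, 2, 2] => [b, 512, 1, 1], i.e. conv5_x
        self.blk4 = ResBlk(512, 512, stride=2)
        # Output layer
        self.outlayer = nn.Linear(512, 10)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        x = self.blk1(x)
        x = self.blk2(x)
        x = self.blk3(x)
        x = self.blk4(x)
        # Adaptive average pooling: specify the output size directly
        x = F.adaptive_avg_pool2d(x, [1, 1])
        # Flatten the tensor: [b, 512, 1, 1] => [b, 512]
        x = x.view(x.size(0), -1)
        x = self.outlayer(x)
        return x
```

An aside: explaining the code

The residual block contains this piece of code:

```python
self.extra = nn.Sequential()
if stride != 1 or ch_out != ch_in:
    # [b, ch_in, h, w] => [b, ch_out, h', w']
    self.extra = nn.Sequential(
        nn.Conv2d(ch_in, ch_out, kernel_size=1, stride=stride),
        nn.BatchNorm2d(ch_out)
    )
```

In the residual block, the input x goes through two convolutions and two batch normalizations, so the output out has ch_out channels and a spatial size reduced by the stride of conv2. The shortcut adds the original input x to out, but x still has ch_in channels and the original spatial size. When the shapes differ, x must be transformed first; here a 1×1 convolution with the same stride followed by batch normalization (conv + bn) brings x to the shape of out. The stride has to be checked as well: blk4 has ch_in == ch_out == 512 but stride 2, so an identity shortcut would give [b, 512, 2, 2] while out is [b, 512, 1, 1], and PyTorch broadcasting would silently add the two instead of raising an error.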
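The tensor shapes in `ResNet18` can be traced with the output-size formula from the appendix of the LeNet section. The plain-Python sketch below (helper names are hypothetical) follows a 32×32 CIFAR-10 image through `conv1` and the four blocks, and compares each block's main path with a 1×1-conv shortcut and with an identity shortcut.

```python
def out_size(size, kernel, padding=0, stride=1):
    """floor((size + 2*padding - kernel) / stride) + 1 for one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1

size = out_size(32, kernel=3, padding=0, stride=3)   # conv1: 3x3, stride 3 -> 10
sizes = [size]
mismatches = []
blocks = [("blk1", 64, 128), ("blk2", 128, 256), ("blk3", 256, 512), ("blk4", 512, 512)]
for name, c_in, c_out in blocks:
    main = out_size(size, 3, padding=1, stride=1)        # block conv1 keeps the size
    main = out_size(main, 3, padding=1, stride=2)        # block conv2 downsamples
    projected = out_size(size, 1, padding=0, stride=2)   # 1x1 conv shortcut, stride 2
    identity = size                                      # shortcut without projection
    print(f"{name}: in {size}, main {main}, 1x1 shortcut {projected}, identity {identity}")
    # A channel-only check would choose the identity shortcut when c_in == c_out
    if c_in == c_out and identity != main:
        mismatches.append(name)
    size = main
    sizes.append(size)

print(sizes)       # [10, 5, 3, 2, 1]
print(mismatches)  # ['blk4']
```

Only blk4 has `ch_in == ch_out`, and it is also the only block where an identity shortcut (2×2) would not match the main path (1×1), which is why the projection has to depend on the stride as well as on the channel counts.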

4. Training and testing

```python
# Fall back to the CPU if no GPU is available
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
model = ResNet18().to(device)
criterion = nn.CrossEntropyLoss().to(device)
optimizer = optim.Adam(model.parameters(), lr=learning_rate)

# Per epoch, record the loss of the last training batch and the test accuracy
loss_history = []
acc_history = []

for epoch in range(epoches):
    # Switch back to training mode: after model.eval() below, BatchNorm would
    # otherwise keep using its frozen running statistics in every later epoch
    model.train()
    for batch_idx, (x, label) in enumerate(train_dataloader):
        x, label = x.to(device), label.to(device)
        logits = model(x)
        loss = criterion(logits, label)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    loss_history.append(loss.item())

    model.eval()
    with torch.no_grad():
        total_correct = 0
        total_num = 0
        for x, label in test_dataloader:
            x, label = x.to(device), label.to(device)
            logits = model(x)
            pred = logits.argmax(dim=1)
            correct = torch.eq(pred, label).float().sum().item()
            total_correct += correct
            total_num += x.size(0)
        acc = total_correct / total_num
        acc_history.append(acc)
```
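The loop switches the model to `model.eval()` for testing. This matters for ResNet18 because its BatchNorm layers behave differently in the two modes: in training mode they normalize with the current batch's statistics and update running averages, while in eval mode they normalize with the stored running averages and leave them unchanged. The single-feature sketch below is a simplified plain-Python model of that behavior (no learnable scale/shift, hypothetical class name); the defaults `momentum=0.1`, `eps=1e-5` and the initial running mean 0 / running variance 1 mirror those of `nn.BatchNorm2d`.

```python
class ToyBatchNorm:
    """Simplified one-feature batch norm (no learnable scale/shift)."""

    def __init__(self, momentum=0.1, eps=1e-5):
        self.momentum = momentum
        self.eps = eps
        self.running_mean = 0.0   # initial running statistics
        self.running_var = 1.0
        self.training = True      # what model.train() / model.eval() toggle

    def __call__(self, batch):
        if self.training:
            n = len(batch)
            mean = sum(batch) / n
            var = sum((v - mean) ** 2 for v in batch) / n   # biased variance for normalizing
            unbiased = var * n / (n - 1)                     # unbiased variance for the running estimate
            m = self.momentum
            self.running_mean = (1 - m) * self.running_mean + m * mean
            self.running_var = (1 - m) * self.running_var + m * unbiased
        else:
            mean, var = self.running_mean, self.running_var  # stored statistics, not updated
        return [(v - mean) / (var + self.eps) ** 0.5 for v in batch]


bn = ToyBatchNorm()
batch = [10.0, 12.0, 14.0]
train_out = bn(batch)     # training mode: uses the batch's own mean (12)
bn.training = False
eval_out = bn(batch)      # eval mode: uses running_mean, which has only reached 1.2
print([round(v, 3) for v in train_out])
print([round(v, 3) for v in eval_out])
print(bn.running_mean, bn.running_var)
```

After one training batch the running mean has only moved from 0 to 1.2, so the same batch normalized in eval mode comes out far from zero mean. Training should therefore run in `model.train()` mode, with `model.eval()` reserved for evaluation.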

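The recorded training and test results can be plotted as follows:

```python
from matplotlib import pyplot as plt
%matplotlib inline

# One point per epoch: last-batch training loss and test accuracy
plt.plot(range(epoches), loss_history, label='loss')
plt.plot(range(epoches), acc_history, label='acc')
plt.legend()
plt.xlabel('epoch')
```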

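After 10 epochs, the accuracy is already clearly above 70%, and after 30 epochs it reaches about 80%, a considerable improvement over LeNet-5. From epoch 30 to 100 the improvement is small, while the loss often fluctuates strongly; adding learning rate decay could improve training in a later attempt. The final accuracy is just over 80%, which is not very high, possibly because the network was modified in this implementation. I will try changing the parameters and structure further to improve the results.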
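One common form of learning rate decay is a step schedule that multiplies the learning rate by a factor `gamma` every `step_size` epochs. The plain-Python sketch below computes such a schedule; `step_size=30` and `gamma=0.1` are hypothetical values, not settings tested in this article. In PyTorch the same rule is provided by `torch.optim.lr_scheduler.StepLR(optimizer, step_size=30, gamma=0.1)`, whose `scheduler.step()` would be called once per epoch after that epoch's training batches.

```python
def step_decay(base_lr, epoch, step_size=30, gamma=0.1):
    """Learning rate used in a given (0-based) epoch under step decay."""
    return base_lr * gamma ** (epoch // step_size)

# base_lr = 0.01 as in the ResNet18 experiment; step_size and gamma are assumptions
for epoch in (0, 29, 30, 59, 60, 99):
    print(f"epoch {epoch:>2}: lr = {step_decay(0.01, epoch):.0e}")
```

A smaller learning rate in later epochs reduces the step size once accuracy has plateaued, which is the usual motivation for trying a schedule like this against the loss fluctuations described above.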

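Checking GPU usage during training, utilization reached 62%, compared with only about 4% when training LeNet-5, which also reflects how much more computation ResNet18 requires than LeNet-5.

Building these two CNN architectures with PyTorch deepened my overall understanding of CNNs, but my grasp of the details of complex networks is still at an early stage. While working on this, I also drew on ideas from other articles and videos.

References:

1. Deep Learning: CNN (Convolutional Neural Network) LeNet-5 Explained in Detail

2. ResNet18/50 Network Structure and PyTorch Implementation Code

3. PyTorch in Practice 2: CIFAR-10 Image Classification with ResNet-18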