[Deep Learning] MLP, CNN, and RNN: Basic Definitions and Differences

Study notes

Contents

- Definitions
  - MLP
  - CNN
  - RNN
- Model architecture diagrams
  - Differences
- Code implementation
  - MLP
  - CNN
  - RNN
- References

Definitions

MLP

Multilayer perceptron (MLP): a fully connected feedforward network, commonly used for simple logistic and linear regression problems.

CNN

Convolutional neural network (CNN): well suited to processing images and video.

RNN

Recurrent neural network (RNN): commonly used for prediction on sequential data.

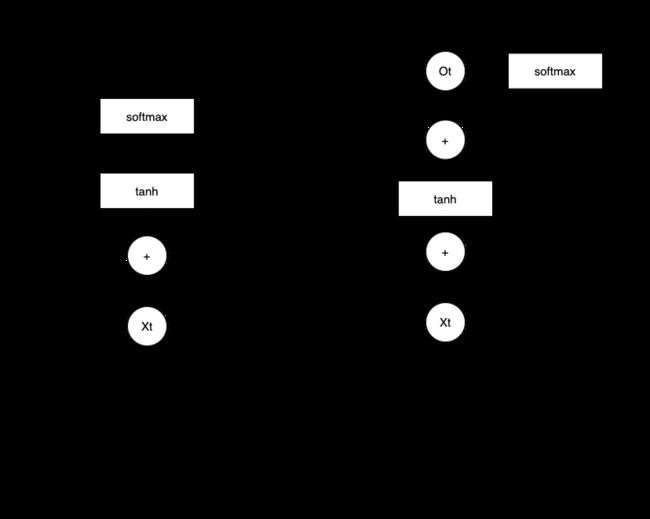

The RNN family includes several cell structures, such as SimpleRNN (Keras also provides LSTM and GRU), as shown in the figure.

Model architecture diagrams

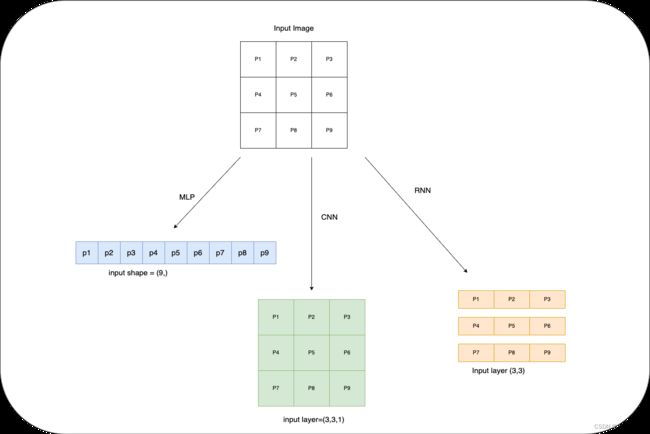

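Using images from the MNIST handwritten digit dataset as the example, the three network structures are shown in turn.

Taking a single MNIST image as input, the three models expect the following input formats: a flattened 784-element vector for the MLP, a 28×28×1 tensor for the CNN, and a 28×28 sequence (28 timesteps of 28 values each) for the RNN.

Detailed model architectures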
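The three input formats can be sketched without any framework. The snippet below is only an illustration: nested Python lists stand in for NumPy arrays, the batch dimension is omitted, and `shape` is a hypothetical helper introduced here. The resulting shapes correspond to the `input_dim` and `input_shape` values used in the code implementation section.

```python
# Minimal sketch: how one 28x28 MNIST image is presented to each model.
# Nested lists stand in for NumPy arrays; `shape` is a hypothetical helper.

def shape(x):
    """Return the dimensions of a regular nested list as a tuple."""
    dims = []
    while isinstance(x, list):
        dims.append(len(x))
        x = x[0]
    return tuple(dims)

image_size = 28
# A dummy grayscale image: 28 rows of 28 pixel values.
image = [[0.0] * image_size for _ in range(image_size)]

# MLP: flatten into a single vector of 28 * 28 = 784 values.
mlp_input = [pixel for row in image for pixel in row]

# CNN: keep the 2D layout and add a channel axis (1 channel for grayscale).
cnn_input = [[[pixel] for pixel in row] for row in image]

# RNN: treat each row as one timestep, giving 28 timesteps of 28-dim vectors.
rnn_input = image

print(shape(mlp_input))  # (784,)
print(shape(cnn_input))  # (28, 28, 1)
print(shape(rnn_input))  # (28, 28)
```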

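Differences

The RNN's input differs substantially from the other two: its input_shape = (timesteps, input_dim), that is, a sequence of `timesteps` vectors, each of dimension `input_dim`. For MNIST, each 28×28 image is read as 28 timesteps of 28-dimensional row vectors.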
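To make the (timesteps, input_dim) layout concrete, here is a minimal pure-Python sketch of the recurrence a simple RNN cell applies, h_t = tanh(x_t W_x + h_{t-1} W_h + b), consuming one input vector per timestep. The function name `simple_rnn_forward` and the toy weights are assumptions made for illustration; this is not Keras's implementation.

```python
import math

def simple_rnn_forward(sequence, w_x, w_h, b):
    """Run a simple RNN over `sequence` and return the final hidden state.

    sequence: list of timesteps, each a list of input_dim floats
    w_x: input weights, shape (input_dim, units)
    w_h: recurrent weights, shape (units, units)
    b:   bias, length units
    """
    units = len(b)
    h = [0.0] * units  # initial hidden state
    for x_t in sequence:  # one step per timestep
        h_new = []
        for j in range(units):
            total = b[j]
            total += sum(x_t[i] * w_x[i][j] for i in range(len(x_t)))
            total += sum(h[k] * w_h[k][j] for k in range(units))
            h_new.append(math.tanh(total))
        h = h_new
    return h

# Toy example (hypothetical values): 3 timesteps, input_dim = 2, units = 2.
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w_x = [[0.5, -0.5], [0.25, 0.75]]
w_h = [[0.1, 0.0], [0.0, 0.1]]
b = [0.0, 0.0]

final_state = simple_rnn_forward(sequence, w_x, w_h, b)
print(len(final_state))  # 2, one value per unit
```

For an MNIST image, `sequence` would hold 28 timesteps of 28 values each, and the final hidden state would have one entry per RNN unit.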

Code implementation

MLP

import numpy as np
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Activation, Dropout
from tensorflow.keras.utils import to_categorical, plot_model
from tensorflow.keras.datasets import mnist

# Load the dataset
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Count the number of classes
num_labels = len(np.unique(y_train))

# Convert labels to one-hot vectors
y_train = to_categorical(y_train)
y_test = to_categorical(y_test)

# Image dimensions (MNIST images are square)
image_size = x_train.shape[1]
input_size = image_size * image_size

# Flatten each image into a vector and scale pixels to [0, 1]
x_train = np.reshape(x_train, [-1, input_size])
x_train = x_train.astype("float32") / 255
x_test = np.reshape(x_test, [-1, input_size])
x_test = x_test.astype("float32") / 255

# Network parameters
batch_size = 128
hidden_units = 256
dropout = 0.45

# The model is a 3-layer MLP with ReLU and dropout after each hidden layer
model = Sequential()

# First hidden layer (receives the flattened input)
model.add(Dense(hidden_units, input_dim=input_size))
model.add(Activation('relu'))
model.add(Dropout(dropout))

# Second hidden layer
model.add(Dense(hidden_units))
model.add(Activation('relu'))
model.add(Dropout(dropout))

# Output layer: softmax over the classes, matching the one-hot labels
model.add(Dense(num_labels))
model.add(Activation("softmax"))

model.summary()
# plot_model requires pydot and graphviz to be installed
plot_model(model, show_shapes=True)

# Compile the model
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

# Train the model
model.fit(x_train, y_train, epochs=20, batch_size=batch_size)

# Evaluate on the test set
_, acc = model.evaluate(x_test, y_test, batch_size=batch_size, verbose=0)
print("\nTest accuracy: %.1f%%" % (100.0 * acc))
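As a sanity check on what `model.summary()` reports, the MLP's parameter count can be worked out by hand: a Dense layer with n inputs and m units has n*m weights plus m biases. The sketch below assumes the layer sizes used above (784 inputs, two hidden layers of 256 units, 10 classes); `dense_params` is a hypothetical helper, not a Keras function.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_units + n_units

input_size = 28 * 28
hidden_units = 256
num_labels = 10

layer_params = [
    dense_params(input_size, hidden_units),    # first hidden layer
    dense_params(hidden_units, hidden_units),  # second hidden layer
    dense_params(hidden_units, num_labels),    # output layer
]
total_params = sum(layer_params)

print(layer_params)  # [200960, 65792, 2570]
print(total_params)  # 269322
```

Activation and Dropout layers add no trainable parameters, so only the three Dense layers contribute.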

CNN

import numpy as np
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Activation, Dense, Dropout
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten
from tensorflow.keras.utils import to_categorical, plot_model
from tensorflow.keras.datasets import mnist

# Load the dataset
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Count the number of classes
num_labels = len(np.unique(y_train))

# Convert labels to one-hot vectors
y_train = to_categorical(y_train)
y_test = to_categorical(y_test)

# Input image dimensions (MNIST images are square)
image_size = x_train.shape[1]

# Reshape to (samples, height, width, channels) and scale pixels to [0, 1]
x_train = np.reshape(x_train, [-1, image_size, image_size, 1])
x_test = np.reshape(x_test, [-1, image_size, image_size, 1])
x_train = x_train.astype('float32') / 255
x_test = x_test.astype('float32') / 255

# Network parameters
input_shape = (image_size, image_size, 1)
batch_size = 128
kernel_size = 3
pool_size = 2
filters = 64
dropout = 0.2

# Build the model: three Conv2D layers, with max pooling after the first two
model = Sequential()
model.add(Conv2D(filters=filters,
                 kernel_size=kernel_size,
                 activation='relu',
                 input_shape=input_shape))
model.add(MaxPooling2D(pool_size))
model.add(Conv2D(filters=filters,
                 kernel_size=kernel_size,
                 activation='relu'))
model.add(MaxPooling2D(pool_size))
model.add(Conv2D(filters=filters,
                 kernel_size=kernel_size,
                 activation='relu'))

# Flatten the feature maps, apply dropout, then classify with softmax
model.add(Flatten())
model.add(Dropout(dropout))
model.add(Dense(num_labels))
model.add(Activation('softmax'))

model.summary()
# plot_model requires pydot and graphviz to be installed
plot_model(model, to_file='cnn-mnist.png', show_shapes=True)

# Compile the model
model.compile(loss='categorical_crossentropy',
              optimizer='adam',
              metrics=['accuracy'])

# Train the model
model.fit(x_train, y_train, epochs=10, batch_size=batch_size)

# Evaluate on the test set
_, acc = model.evaluate(x_test, y_test, batch_size=batch_size, verbose=0)
print("\nTest accuracy: %.1f%%" % (100.0 * acc))
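The feature-map sizes and parameter counts of this CNN can also be traced by hand. The sketch below assumes the Keras defaults that the code above relies on ('valid' padding and stride 1 for Conv2D, and a pooling stride equal to the pool size); `conv_out` and `conv_params` are hypothetical helper names used only for this illustration.

```python
def conv_out(size, kernel):
    """Output width/height of a 'valid' convolution with stride 1."""
    return size - kernel + 1

def conv_params(kernel, in_channels, filters):
    """Weights plus biases of a Conv2D layer with square kernels."""
    return kernel * kernel * in_channels * filters + filters

kernel_size, pool_size, filters, num_labels = 3, 2, 64, 10

size = conv_out(28, kernel_size)                 # 28 -> 26
p1 = conv_params(kernel_size, 1, filters)        # grayscale input, 1 channel
size = size // pool_size                         # 26 -> 13
size = conv_out(size, kernel_size)               # 13 -> 11
p2 = conv_params(kernel_size, filters, filters)
size = size // pool_size                         # 11 -> 5
size = conv_out(size, kernel_size)               # 5 -> 3
p3 = conv_params(kernel_size, filters, filters)

flat = size * size * filters                     # length of the Flatten output
p_dense = flat * num_labels + num_labels         # final Dense layer
total_params = p1 + p2 + p3 + p_dense

print(flat)          # 576
print(total_params)  # 80266
```

Although it has three convolutional layers, this network has fewer parameters than the MLP, because each kernel is shared across all image positions.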

RNN

import numpy as np
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Activation, Dense, SimpleRNN
from tensorflow.keras.utils import to_categorical, plot_model
from tensorflow.keras.datasets import mnist

# Load the dataset
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Count the number of classes
num_labels = len(np.unique(y_train))

# Convert labels to one-hot vectors
y_train = to_categorical(y_train)
y_test = to_categorical(y_test)

# Input image dimensions (MNIST images are square)
image_size = x_train.shape[1]

# Reshape to (samples, timesteps, input_dim) and scale pixels to [0, 1].
# SimpleRNN expects 3D input, so no channel axis is added here.
x_train = np.reshape(x_train, [-1, image_size, image_size])
x_test = np.reshape(x_test, [-1, image_size, image_size])
x_train = x_train.astype('float32') / 255
x_test = x_test.astype('float32') / 255

# Network parameters
input_shape = (image_size, image_size)  # (timesteps, input_dim)
batch_size = 128
units = 256
dropout = 0.2

# Build the model: one SimpleRNN layer followed by a softmax classifier
model = Sequential()
model.add(SimpleRNN(units=units, dropout=dropout, input_shape=input_shape))
model.add(Dense(num_labels))
model.add(Activation("softmax"))

model.summary()
# plot_model requires pydot and graphviz to be installed
plot_model(model, to_file='rnn-mnist.png', show_shapes=True)

# Compile the model
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

# Train the model
model.fit(x_train, y_train, epochs=20, batch_size=batch_size)

# Evaluate on the test set
_, acc = model.evaluate(x_test, y_test, batch_size=batch_size, verbose=0)
print("\nTest accuracy: %.1f%%" % (100.0 * acc))
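The RNN's parameter count follows from the recurrence: a SimpleRNN layer has one weight matrix for the input, one for the previous hidden state, and a bias vector. The sketch below assumes the sizes used above (input_dim 28, 256 units, 10 classes); `simple_rnn_params` is a hypothetical helper, not a Keras function.

```python
def simple_rnn_params(input_dim, units):
    """Input weights + recurrent weights + biases of a SimpleRNN layer."""
    return input_dim * units + units * units + units

input_dim = 28   # values per timestep (one image row)
units = 256
num_labels = 10

rnn_params = simple_rnn_params(input_dim, units)
dense_layer_params = units * num_labels + num_labels
total_params = rnn_params + dense_layer_params

print(rnn_params)    # 72960
print(total_params)  # 75530
```

The count does not depend on the number of timesteps, since the same weights are reused at every step.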

References

Rowel Atienza, "Advanced Deep Learning with TensorFlow 2 and Keras"