[OpenCV] Measuring Object Size with Morphological Processing

Measuring object size with OpenCV

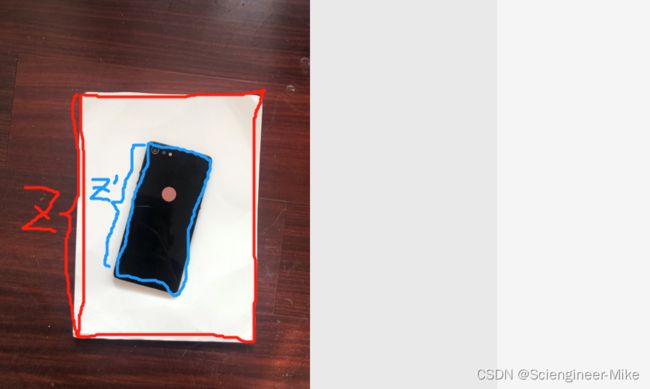

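Here we read an image with OpenCV and measure the size of an object in it. The pipeline runs through a series of processing steps: grayscale conversion, Gaussian blur, edge detection, dilation, erosion, contour detection, area filtering, rectangle fitting, and perspective transform, plus a few drawing techniques, so it is a useful exercise for anyone learning OpenCV.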

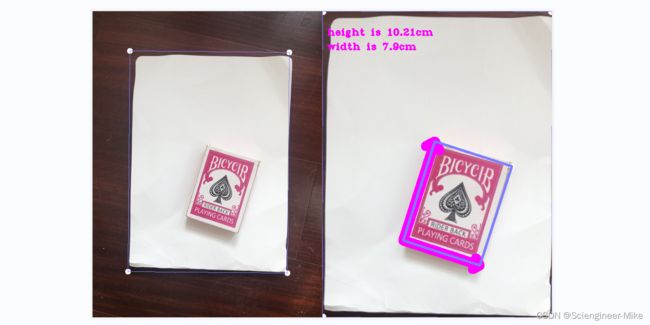

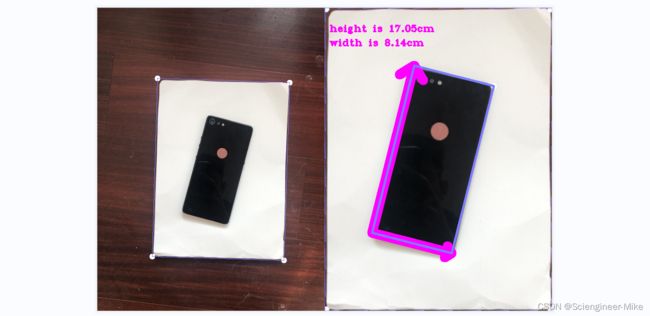

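The method for computing an object's size is simple:

The white background is taken to be 30 mm long (an estimate by eye rather than a measurement, since the focus here is on the method). Suppose it spans Z pixels in the image. OpenCV gives us the pixel length Z' of a side of the phone lying on it, so the real length of that side is y' = (Z'/Z)*30.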
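As a quick illustration of the ratio above, here is a minimal sketch. The function name `pixels_to_length` and its parameter names are hypothetical and chosen only for this example; the 30 mm default is the estimated reference length from the text.

```python
def pixels_to_length(object_px, reference_px, reference_length=30.0):
    """Convert a pixel length to real-world units using a reference of known size.

    object_px        -- pixel length of the object's side (Z' in the text)
    reference_px     -- pixel length of the reference background (Z in the text)
    reference_length -- real length of the reference (30 mm here, an estimate)
    """
    if reference_px <= 0:
        raise ValueError("reference_px must be positive")
    return object_px / reference_px * reference_length


# A side spanning 320 px on a background spanning 640 px
print(pixels_to_length(320, 640))  # 15.0
```

The result is in whatever unit the reference length is given in, so any error in the 30 mm estimate scales the result by the same factor.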

With this processing, the detection result looks as follows:

(1) Measuring a phone

The code is as follows:

1. Import the required packages

import cv2

import numpy as np

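2. Select the white background

# This part picks out the white background in the image. The white paper is taken to be 30 mm long,
# and the image is resized to 480x640 (width x height).
# Settings: these parameters may need to be changed for a different target object.
img_path = "test1.jpg"
resizeH = 640 # image height after resizing; at this size the long side of the white background is taken as 30 mm
resizeW = 480 # image width after resizing
canny_data = [200,250] # lower and upper thresholds for Canny edge detection
minArea1 = 500 # minimum contour area (in pixels) for a background candidate

img = cv2.imread(img_path)
img_resized = cv2.resize(img,(resizeW,resizeH)) # resize; cv2.resize takes (width, height)
img_Gray = cv2.cvtColor(img_resized,cv2.COLOR_BGR2GRAY) # convert to grayscale
# Gaussian blur. The sigmas are passed by keyword because the fourth positional argument of GaussianBlur is dst, not sigmaY.
img_Blur = cv2.GaussianBlur(img_Gray,(3,3),sigmaX=0.1,sigmaY=0.1)
img_Canny = cv2.Canny(img_Blur,canny_data[0],canny_data[1]) # Canny edge detection
img_Dilate = cv2.dilate(img_Canny,kernel=np.ones((5,5),np.uint8),iterations=3) # dilation closes gaps in the edges
img_Erode = cv2.erode(img_Dilate,kernel=np.ones((3,3),np.uint8),iterations=3) # erosion thins the edges back down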

# Next, filter the contours found in the result
contours,hierarchy = cv2.findContours(img_Erode,cv2.RETR_EXTERNAL,cv2.CHAIN_APPROX_SIMPLE)
finalcontours = []
for i in contours:
    if cv2.contourArea(i)>minArea1: # keep only contours whose area exceeds the threshold
        peri = cv2.arcLength(i,True) # contour perimeter
        approx = cv2.approxPolyDP(i,0.02*peri,True) # polygon approximation of the contour
        bbox = cv2.boundingRect(approx) # axis-aligned bounding box (x, y, w, h)
        minrect = cv2.minAreaRect(approx) # minimum-area enclosing rectangle
        points = cv2.boxPoints(minrect) # the four corner coordinates of that rectangle
        finalcontours.append([i,approx,cv2.contourArea(i),points]) # collect the candidates
# print(len(finalcontours))

if len(finalcontours)>0: # draw the rectangles that passed the filter
    for i in finalcontours:
        for p in i[3]: # mark each corner with a filled white dot
            cv2.circle(img_resized,(int(p[0]),int(p[1])),6,(255,255,255),-1)
        i[3] = i[3].reshape((-1,1,2)).astype(np.int32)
        # print(i[3])
        cv2.polylines(img_resized,[i[3]],isClosed=True,color=(255, 125, 125), thickness=1)
else:
    print("No matching contour found; the minArea threshold may need adjusting")
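To make the area threshold more concrete, the sketch below computes the area of a simple polygon with the shoelace formula and applies the same kind of minimum-area test. It is an illustration rather than part of the pipeline: `polygon_area` and the two sample rectangles are made up for this example, and in the real code `cv2.contourArea` does this job.

```python
import numpy as np


def polygon_area(points):
    """Area of a simple polygon given as a sequence of (x, y) vertices (shoelace formula)."""
    pts = np.asarray(points, dtype=np.float64).reshape(-1, 2)
    x, y = pts[:, 0], pts[:, 1]
    # Sum of cross products between consecutive vertices, halved
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))


min_area = 500  # same role as minArea1 above
small = [(0, 0), (20, 0), (20, 20), (0, 20)]   # 20 x 20 px square
large = [(0, 0), (40, 0), (40, 30), (0, 30)]   # 40 x 30 px rectangle

# Only shapes larger than the threshold survive, which removes small noise blobs
kept = [p for p in (small, large) if polygon_area(p) > min_area]
print(polygon_area(small), polygon_area(large), len(kept))
```

Because the threshold is expressed in pixels, it has to be retuned whenever the resize dimensions change.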

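3. Perspective transform

# The steps above give the rectangle of the white background. First number its four corners,
# then rectify the image with warpPerspective.
# Note: i is the last entry of finalcontours left over from the drawing loop above.
myPointsNew = np.zeros_like(i[3])
myPoints = i[3].reshape((4,2))
add = myPoints.sum(1) # x + y for each corner
myPointsNew[0] = myPoints[np.argmin(add)] # smallest x + y: top-left
myPointsNew[3] = myPoints[np.argmax(add)] # largest x + y: bottom-right
diff = np.diff(myPoints,axis=1) # y - x for each corner
myPointsNew[1] = myPoints[np.argmin(diff)] # smallest y - x: top-right
myPointsNew[2] = myPoints[np.argmax(diff)] # largest y - x: bottom-left
# print(myPointsNew)

# Perspective transform: map the four corners onto the full 480x640 output image
pts1 = np.float32(myPointsNew)
pts2 = np.float32([[0,0],[resizeW,0],[0,resizeH],[resizeW,resizeH]])
matrix = cv2.getPerspectiveTransform(pts1,pts2)
img_Warp = cv2.warpPerspective(img_resized,matrix,(resizeW,resizeH))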
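The corner numbering above can be isolated into a small helper, which makes the sum/difference trick easier to check on its own. This is a sketch under the assumption that the four points form a roughly upright quadrilateral; the name `order_points` and the sample coordinates are hypothetical.

```python
import numpy as np


def order_points(pts):
    """Order four (x, y) corners as top-left, top-right, bottom-left, bottom-right."""
    pts = np.asarray(pts, dtype=np.float32).reshape(4, 2)
    s = pts.sum(axis=1)                # x + y: smallest at top-left, largest at bottom-right
    d = np.diff(pts, axis=1).ravel()   # y - x: smallest at top-right, largest at bottom-left
    return np.array(
        [pts[np.argmin(s)], pts[np.argmin(d)], pts[np.argmax(d)], pts[np.argmax(s)]],
        dtype=np.float32,
    )


# Corners of a slightly skewed sheet, given in arbitrary order
corners = [(400, 600), (60, 40), (50, 610), (420, 30)]
ordered = order_points(corners)
print(ordered)
```

The returned order matches the destination points `[[0,0],[W,0],[0,H],[W,H]]` used for the transform. If the sheet is rotated by close to 45 degrees, the sums or differences of two corners can tie and the ordering becomes ambiguous.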

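4. Locate the target object the same way and compute its size

# Locate the object on the white background, using the same method as above.
# Take the object's size in pixels, then compute its real size from its share of the paper's pixel size.
minArea2 = 20000 # lower bound on the object's contour area (pixels)
minArea3 = 80000 # upper bound on the object's contour area (pixels)

def findDis(pts1,pts2):
    # Euclidean distance between two points
    return ((pts2[0]-pts1[0])**2 + (pts2[1]-pts1[1])**2)**0.5

img_Gray = cv2.cvtColor(img_Warp,cv2.COLOR_BGR2GRAY) # convert to grayscale
# Gaussian blur. The sigmas are passed by keyword because the fourth positional argument of GaussianBlur is dst, not sigmaY.
img_Blur = cv2.GaussianBlur(img_Gray,(3,3),sigmaX=0.1,sigmaY=0.1)
img_Canny = cv2.Canny(img_Blur,canny_data[0],canny_data[1]) # Canny edge detection
img_Dilate = cv2.dilate(img_Canny,kernel=np.ones((5,5),np.uint8),iterations=3) # dilation
img_Erode = cv2.erode(img_Dilate,kernel=np.ones((3,3),np.uint8),iterations=3) # erosion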

# Next, filter the contours found in the result
contours,hierarchy = cv2.findContours(img_Erode,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)
finalcontours = []
for i in contours:
    if minArea3 > cv2.contourArea(i) > minArea2: # keep contours whose area lies between the two thresholds
        peri = cv2.arcLength(i,True) # contour perimeter
        approx = cv2.approxPolyDP(i,0.02*peri,True) # polygon approximation of the contour
        bbox = cv2.boundingRect(approx) # axis-aligned bounding box (x, y, w, h)
        minrect = cv2.minAreaRect(approx) # minimum-area enclosing rectangle
        points = cv2.boxPoints(minrect) # its four corner coordinates
        finalcontours.append([i,approx,cv2.contourArea(i),points])
finalcontours = sorted(finalcontours,key=lambda x:x[2],reverse=True) # largest area first

if len(finalcontours)>0:
    i = finalcontours[0] # there is only one target object here, so for simplicity take the contour with the largest area
    for p in i[3]: # mark each corner with a filled white dot
        cv2.circle(img_Warp,(int(p[0]),int(p[1])),6,(255,255,255),-1)
    # Arrows along the two sides that meet at corner 0 (thickness 20, 8-connected line, shift 0, tip length 0.1)
    cv2.arrowedLine(img_Warp, (int(i[3][0][0]), int(i[3][0][1])), (int(i[3][1][0]), int(i[3][1][1])),(255, 0, 255), 20, cv2.LINE_8, 0, 0.1)
    cv2.arrowedLine(img_Warp, (int(i[3][0][0]), int(i[3][0][1])), (int(i[3][3][0]), int(i[3][3][1])),(255, 0, 255), 20, cv2.LINE_8, 0, 0.1)
    # resizeH pixels correspond to the 30 mm reference length. The same scale is used for both sides,
    # which is only approximate unless the paper's aspect ratio is close to 480:640.
    # Which side ends up as "hight" depends on the corner order returned by boxPoints.
    hight = round((findDis(i[3][0],i[3][1])/resizeH*30),2)
    width = round((findDis(i[3][0],i[3][3])/resizeH*30),2)
    print("Length and width:",hight,width)
    i[3] = i[3].reshape((-1,1,2)).astype(np.int32)
    cv2.polylines(img_Warp,[i[3]],isClosed=True,color=(255, 125, 125), thickness=3)
else:
    print("No matching contour found; the minArea threshold may need adjusting")
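Since the corner order returned by `cv2.boxPoints` is not tied to the object's orientation, one option is to measure two adjacent sides and report the longer one first. The sketch below assumes a box given as four corners in order around the rectangle; `box_size` and its parameters are hypothetical names for this example, and `math.dist` needs Python 3.8 or later.

```python
import math


def box_size(box, ref_px, ref_len):
    """Return (long_side, short_side) of a 4-corner box in real-world units.

    box     -- four (x, y) corners in order around the rectangle
    ref_px  -- pixel length of the reference (640 in the warped image above)
    ref_len -- real length of the reference (30 mm in this article, an estimate)
    """
    side_a = math.dist(box[0], box[1])  # two adjacent sides of the rectangle
    side_b = math.dist(box[1], box[2])
    scale = ref_len / ref_px            # real units per pixel
    long_px, short_px = max(side_a, side_b), min(side_a, side_b)
    return round(long_px * scale, 2), round(short_px * scale, 2)


# A 160 x 320 px box measured against a 640 px reference of length 30
box = [(100, 100), (260, 100), (260, 420), (100, 420)]
print(box_size(box, ref_px=640, ref_len=30))  # (15.0, 7.5)
```

Sorting the sides this way keeps the reported length and width stable even if the object is rotated in the image.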

5. Draw the result

# Show the result. The labels use mm to match the 30 mm reference length assumed above.
cv2.putText(img_Warp,"height is {}mm".format(hight),(10, 50), cv2.FONT_HERSHEY_COMPLEX_SMALL, 1,(255, 0, 255), 2)
cv2.putText(img_Warp,"width is {}mm".format(width),(10, 80), cv2.FONT_HERSHEY_COMPLEX_SMALL, 1,(255, 0, 255), 2)
result = np.concatenate((img_resized,img_Warp),axis=1) # original (left) and warped (right) side by side
cv2.imshow("imgwarp",result)
cv2.imwrite('2.png',result)
cv2.waitKey(0)
cv2.destroyAllWindows()

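The white background and the target object are located with the same method, so the shared steps can be defined as a function and called twice. This is not covered further here; readers can refactor the code themselves.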