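MNIST Handwritten Digit Recognition with TensorFlow: A Demo

This post implements MNIST handwritten digit recognition with TensorFlow, following these two articles: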

https://blog.csdn.net/dusin/article/details/108544965

https://blog.csdn.net/starlet_kiss/article/details/115550344

I. MNIST handwritten digit recognition with TensorFlow

1. Load the data

import tensorflow as tf  # import the TensorFlow library

mnist = tf.keras.datasets.mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()  # load the data

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/mnist.npz

11493376/11490434 [==============================] - 1s 0us/step

Inspect the data.

Here x_train holds the training images and y_train the corresponding labels; x_test holds the test images and y_test the corresponding labels.

x_train.shape

# The output shows 60000 samples, each a 28*28 image

(60000, 28, 28)

import matplotlib.pyplot as plt

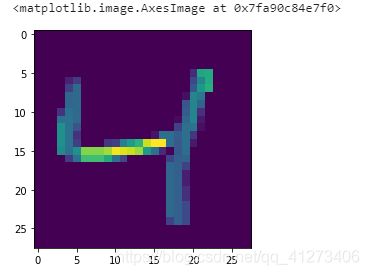

plt.imshow(x_train[2])

y_train[1]

0

2. Preprocess the data

First, look at what the raw data looks like:

x_train[1]

array([[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 51, 159, 253, 159, 50, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 48, 238, 252, 252, 252, 237, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

54, 227, 253, 252, 239, 233, 252, 57, 6, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 10, 60,

224, 252, 253, 252, 202, 84, 252, 253, 122, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 163, 252,

252, 252, 253, 252, 252, 96, 189, 253, 167, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 51, 238, 253,

253, 190, 114, 253, 228, 47, 79, 255, 168, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 48, 238, 252, 252,

179, 12, 75, 121, 21, 0, 0, 253, 243, 50, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 38, 165, 253, 233, 208,

84, 0, 0, 0, 0, 0, 0, 253, 252, 165, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 7, 178, 252, 240, 71, 19,

28, 0, 0, 0, 0, 0, 0, 253, 252, 195, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 57, 252, 252, 63, 0, 0,

0, 0, 0, 0, 0, 0, 0, 253, 252, 195, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 198, 253, 190, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 255, 253, 196, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 76, 246, 252, 112, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 253, 252, 148, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 85, 252, 230, 25, 0, 0, 0,

0, 0, 0, 0, 0, 7, 135, 253, 186, 12, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 85, 252, 223, 0, 0, 0, 0,

0, 0, 0, 0, 7, 131, 252, 225, 71, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 85, 252, 145, 0, 0, 0, 0,

0, 0, 0, 48, 165, 252, 173, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 86, 253, 225, 0, 0, 0, 0,

0, 0, 114, 238, 253, 162, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 85, 252, 249, 146, 48, 29, 85,

178, 225, 253, 223, 167, 56, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 85, 252, 252, 252, 229, 215, 252,

252, 252, 196, 130, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 28, 199, 252, 252, 253, 252, 252,

233, 145, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 25, 128, 252, 253, 252, 141,

37, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0]], dtype=uint8)

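Normalize the data. Note that tf.keras.utils.normalize applies L2 normalization along the given axis (here axis=1) rather than dividing by 255, which is why the values shown after normalization are not simply pixel/255.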

x_train=tf.keras.utils.normalize(x_train,axis=1)

x_test=tf.keras.utils.normalize(x_test,axis=1)
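To make the effect of this call concrete, the sketch below reproduces L2 normalization along axis 1 with plain NumPy on a tiny made-up array. The `l2_normalize` helper and the sample values are illustrative assumptions, not part of the original code. Each column of each image is divided by its own L2 norm, so the same pixel value can map to different results in different columns.

```python
import numpy as np

def l2_normalize(x, axis=1):
    """Divide x by its L2 norm along `axis`; all-zero slices stay zero."""
    x = np.asarray(x, dtype=np.float64)  # cast first so uint8 squares do not overflow
    norm = np.sqrt(np.sum(x ** 2, axis=axis, keepdims=True))
    norm[norm == 0] = 1.0  # avoid division by zero for blank columns
    return x / norm

# A batch holding one tiny 2x2 "image": shape (1, 2, 2)
batch = np.array([[[3, 0],
                   [4, 0]]], dtype=np.uint8)

normalized = l2_normalize(batch, axis=1)
print(normalized)
```

In the first column the norm is sqrt(3^2 + 4^2) = 5, so the values become 0.6 and 0.8; the all-zero second column is left unchanged.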

Inspect the data after normalization:

x_train[1]

array([[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.08216044, 0.2286589 , 0.3728098 , 0.30506548, 0.08583808,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0.08087653,

0.38341541, 0.36240278, 0.37133624, 0.48350001, 0.4068725 ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.08861609, 0.3824786 ,

0.40758025, 0.36240278, 0.35218 , 0.44704564, 0.43262392,

0.06832372, 0.00859123, 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.01621743, 0.095788 , 0.36759266, 0.42460179,

0.40758025, 0.36240278, 0.29765841, 0.16116667, 0.43262392,

0.30326141, 0.17468832, 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.26434406, 0.4023096 , 0.41354174, 0.42460179,

0.40758025, 0.36240278, 0.37133624, 0.18419048, 0.32446794,

0.30326141, 0.23912253, 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.08411834, 0.38597476, 0.40390606, 0.41518278, 0.32013627,

0.18365276, 0.36384089, 0.33597088, 0.09017659, 0.13562417,

0.30565873, 0.2405544 , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0.07427511,

0.39255225, 0.40867916, 0.4023096 , 0.29374592, 0.02021913,

0.12082418, 0.17401086, 0.03094469, 0. , 0. ,

0.30326141, 0.34794476, 0.12263192, 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.04890249, 0.2553207 ,

0.41729294, 0.37786605, 0.33206506, 0.13784725, 0. ,

0. , 0. , 0. , 0. , 0. ,

0.30326141, 0.36083161, 0.40468535, 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.00966301, 0.22906954, 0.38994434,

0.39585101, 0.11514373, 0.03033287, 0.04594908, 0. ,

0. , 0. , 0. , 0. , 0. ,

0.30326141, 0.36083161, 0.47826451, 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.07868449, 0.32430069, 0.38994434,

0.10391089, 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.30326141, 0.36083161, 0.47826451, 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.27332506, 0.3255876 , 0.29400565,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.30565873, 0.36226348, 0.48071715, 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0.33960736, 0.33958568, 0.32430069, 0.17330859,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.30326141, 0.36083161, 0.3629905 , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0.37982402, 0.34786826, 0.29598873, 0.03868495,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.01343056, 0.23176282,

0.30326141, 0.26632809, 0.02943166, 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0.37982402, 0.34786826, 0.28698037, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.0103149 , 0.25134326, 0.43262392,

0.26969888, 0.10166287, 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0.37982402, 0.34786826, 0.18660159, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.0690291 , 0.24313682, 0.48350001, 0.29699976,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0.38429254, 0.34924869, 0.28955419, 0. ,

0. , 0. , 0. , 0. , 0. ,

0.18365276, 0.34226929, 0.3728098 , 0.31082143, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0.37982402, 0.34786826, 0.32043997, 0.22592013,

0.0791702 , 0.04703054, 0.13569967, 0.29210488, 0.37910874,

0.40758025, 0.3206977 , 0.24608394, 0.10744445, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0.37982402, 0.34786826, 0.32430069, 0.38994434,

0.37770784, 0.34867468, 0.4023096 , 0.41354174, 0.42460179,

0.31575387, 0.18695382, 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0.1251185 , 0.27470549, 0.32430069, 0.38994434,

0.41729294, 0.40867916, 0.4023096 , 0.38236201, 0.24431452,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.03451074, 0.16472416, 0.38994434,

0.41729294, 0.40867916, 0.2251018 , 0.06071843, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ]])

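3. Build the neural network

The network has a 784-unit input layer (the flattened 28*28 image), two hidden layers of 128 neurons each, and an output layer of 10 neurons.

# Input layer of 784 units, two hidden layers of 128 neurons each, and a 10-neuron output layer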

model = tf.keras.models.Sequential()

model.add(tf.keras.layers.Flatten())

model.add(tf.keras.layers.Dense(128,activation=tf.nn.relu))

model.add(tf.keras.layers.Dense(128,activation=tf.nn.relu))

model.add(tf.keras.layers.Dense(10,activation=tf.nn.softmax))

model.compile(optimizer='adam',loss='sparse_categorical_crossentropy',metrics=['accuracy'])
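The `sparse_categorical_crossentropy` loss accepts integer class labels (such as the values in `y_train`) and, for each sample, equals the negative log of the probability the model assigns to the true class. Below is a minimal NumPy sketch on made-up probabilities. The helper name and the numbers are illustrative assumptions, and Keras additionally clips probabilities for numerical stability.

```python
import numpy as np

def sparse_categorical_crossentropy(labels, probs):
    """Per-sample loss: -log(probability assigned to the true class)."""
    labels = np.asarray(labels)
    probs = np.asarray(probs, dtype=np.float64)
    picked = probs[np.arange(len(labels)), labels]  # probability of each true class
    return -np.log(picked)

# Two samples, three classes (made-up softmax outputs)
probs = np.array([[0.10, 0.80, 0.10],
                  [0.50, 0.25, 0.25]])
labels = np.array([1, 0])  # integer labels, not one-hot vectors

losses = sparse_categorical_crossentropy(labels, probs)
mean_loss = losses.mean()
print(losses, mean_loss)
```

A confident correct prediction (0.8) gives a small loss, while a less confident one (0.5) gives a larger loss; the value reported during training is the mean over the batch.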

4. Train and test the model

model.fit(x_train, y_train, epochs=5)  # train the model

Epoch 1/5

WARNING:tensorflow:AutoGraph could not transform .train_function at 0x7fab43c33400> and will run it as-is.

Please report this to the TensorFlow team. When filing the bug, set the verbosity to 10 (on Linux, `export AUTOGRAPH_VERBOSITY=10`) and attach the full output.

Cause: 'arguments' object has no attribute 'posonlyargs'

To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert

WARNING: AutoGraph could not transform .train_function at 0x7fab43c33400> and will run it as-is.

Please report this to the TensorFlow team. When filing the bug, set the verbosity to 10 (on Linux, `export AUTOGRAPH_VERBOSITY=10`) and attach the full output.

Cause: 'arguments' object has no attribute 'posonlyargs'

To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert

1875/1875 [==============================] - 11s 6ms/step - loss: 0.4852 - accuracy: 0.8598

Epoch 2/5

1875/1875 [==============================] - 10s 6ms/step - loss: 0.1202 - accuracy: 0.9637

Epoch 3/5

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0727 - accuracy: 0.9770

Epoch 4/5

1875/1875 [==============================] - 11s 6ms/step - loss: 0.0534 - accuracy: 0.9826

Epoch 5/5

1875/1875 [==============================] - 10s 5ms/step - loss: 0.0415 - accuracy: 0.9866

val_loss, val_acc = model.evaluate(x_test, y_test)  # evaluate on the test set to get the loss and accuracy

WARNING:tensorflow:AutoGraph could not transform .test_function at 0x7fab43ae7378> and will run it as-is.

Please report this to the TensorFlow team. When filing the bug, set the verbosity to 10 (on Linux, `export AUTOGRAPH_VERBOSITY=10`) and attach the full output.

Cause: 'arguments' object has no attribute 'posonlyargs'

To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert

WARNING: AutoGraph could not transform .test_function at 0x7fab43ae7378> and will run it as-is.

Please report this to the TensorFlow team. When filing the bug, set the verbosity to 10 (on Linux, `export AUTOGRAPH_VERBOSITY=10`) and attach the full output.

Cause: 'arguments' object has no attribute 'posonlyargs'

To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert

313/313 [==============================] - 1s 2ms/step - loss: 0.0976 - accuracy: 0.9705
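The step counts in these logs (1875 and 313) follow from the batch size. Neither `fit` nor `evaluate` was given a `batch_size`, and Keras defaults to 32 in that case, so each pass needs ceil(samples / 32) batches. A quick check, where the `steps_per_epoch` helper is an illustrative name:

```python
import math

def steps_per_epoch(num_samples, batch_size=32):
    """Number of batches needed to see every sample once."""
    return math.ceil(num_samples / batch_size)

train_steps = steps_per_epoch(60000)  # 60000 training images
test_steps = steps_per_epoch(10000)   # 10000 test images
print(train_steps, test_steps)
```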

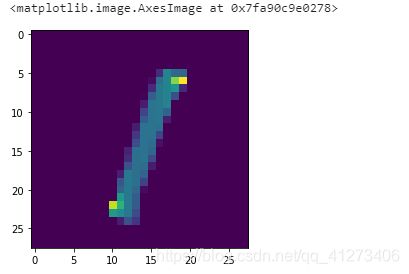

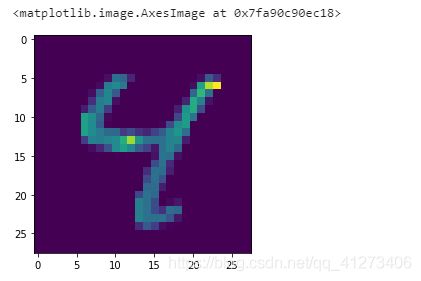

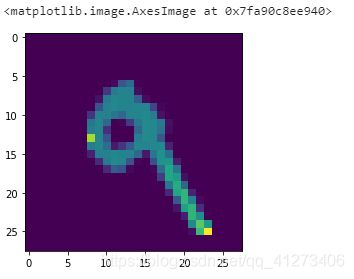

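predictions = model.predict(x_test[5:8])  # classify the 6th through 8th test images

print(predictions)  # each row holds 10 probabilities; the index of the largest one is the predicted digit (compare the exponents first, then the mantissas)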

[[7.6170033e-07 9.9825495e-01 6.6895331e-07 3.5160573e-07 2.4966750e-05

6.1874027e-07 6.6222623e-07 1.6992649e-03 1.4578318e-05 3.2959049e-06]

[1.4816117e-12 9.7975125e-08 1.8362551e-08 5.3589950e-09 9.9984884e-01

1.4378926e-06 3.2498817e-12 4.4731055e-07 1.4663408e-04 2.4944338e-06]

[6.5769234e-07 4.2998445e-06 1.0559639e-07 9.4374955e-06 7.7115343e-05

2.8126553e-04 1.7840444e-08 1.0381923e-06 8.4870453e-06 9.9961758e-01]]

plt.imshow(x_test[5])

plt.imshow(x_test[6])

plt.imshow(x_test[7])
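Rather than comparing exponents by eye, the predicted digit can be read off with `np.argmax`. The sketch below copies the probabilities printed above into a NumPy array so that it runs without the trained model:

```python
import numpy as np

# Probabilities copied from the printed output of model.predict
predictions = np.array([
    [7.6170033e-07, 9.9825495e-01, 6.6895331e-07, 3.5160573e-07, 2.4966750e-05,
     6.1874027e-07, 6.6222623e-07, 1.6992649e-03, 1.4578318e-05, 3.2959049e-06],
    [1.4816117e-12, 9.7975125e-08, 1.8362551e-08, 5.3589950e-09, 9.9984884e-01,
     1.4378926e-06, 3.2498817e-12, 4.4731055e-07, 1.4663408e-04, 2.4944338e-06],
    [6.5769234e-07, 4.2998445e-06, 1.0559639e-07, 9.4374955e-06, 7.7115343e-05,
     2.8126553e-04, 1.7840444e-08, 1.0381923e-06, 8.4870453e-06, 9.9961758e-01],
])

# Index of the largest probability in each row is the predicted digit
predicted_digits = np.argmax(predictions, axis=1)
print(predicted_digits)  # [1 4 9]
```

With the live model, the same call would be `np.argmax(model.predict(x_test[5:8]), axis=1)`.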

II. Handwritten digit recognition with a CNN

1. Import modules and the dataset

import os

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers, optimizers, datasets

os.environ['TF_CPP_MIN_LOG_LEVEL']='2'

(x_train, y_train), (x_test, y_test) = datasets.mnist.load_data()  # download the MNIST dataset directly through Keras

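2. Data preprocessing

# The loaded data has shape (60000, 28, 28). A CNN applies convolution and pooling first,

# so the image dimensions must be kept. After reshaping, the shape becomes (60000, 28, 28, 1): 60000 images, each 28 (height) * 28 (width) * 1 (single grayscale channel)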

x_train4D = x_train.reshape(x_train.shape[0],28,28,1).astype('float32')

x_test4D = x_test.reshape(x_test.shape[0],28,28,1).astype('float32')

# Scale pixel values to the range [0, 1]

x_train,x_test = x_train4D/255.0,x_test4D/255.0
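The reshape and scaling steps can be checked without downloading MNIST. The sketch below uses three random uint8 "images" as a stand-in for `x_train` (the random data and the `fake_*` names are assumptions for illustration) and confirms the resulting shape, dtype, and value range:

```python
import numpy as np

# Stand-in for x_train: 3 random grayscale images with values 0..255
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(3, 28, 28), dtype=np.uint8)

# Same two steps as above: add a channel axis, then scale to [0, 1]
fake_4d = fake_images.reshape(fake_images.shape[0], 28, 28, 1).astype('float32')
fake_scaled = fake_4d / 255.0

print(fake_scaled.shape, fake_scaled.dtype)
print(fake_scaled.min() >= 0.0, fake_scaled.max() <= 1.0)
```

The reshape only adds a trailing axis of length 1, so `fake_images[..., np.newaxis]` yields the same layout.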

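3. Build the model

The CNN is a small network with two convolution-plus-pooling stages followed by fully connected layers. Dropout layers are added to reduce overfitting and improve the model's ability to generalize.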

model = tf.keras.models.Sequential([

tf.keras.layers.Conv2D(filters=16,kernel_size=(5,5),padding='same',input_shape=(28,28,1),activation='relu'),

tf.keras.layers.MaxPool2D(pool_size=(2,2)),

tf.keras.layers.Conv2D(filters=36,kernel_size=(5,5),padding='same',activation='relu'),

tf.keras.layers.MaxPool2D(pool_size=(2,2)),

tf.keras.layers.Dropout(0.25),

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(128,activation='relu'),

tf.keras.layers.Dropout(0.5),

tf.keras.layers.Dense(10,activation='softmax')

])
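To follow how the tensor shape changes through this network, the sketch below traces the output shape and trainable parameter count of each layer in plain Python. The helper functions are illustrative assumptions; they hard-code what the layers above imply: 'same' padding with stride 1 keeps height and width, 2*2 pooling halves them, and Dropout and Flatten add no parameters.

```python
def conv2d_same(shape, filters, kernel):
    """Conv2D with padding='same', stride 1: spatial size is unchanged."""
    h, w, c = shape
    params = kernel * kernel * c * filters + filters  # weights + biases
    return (h, w, filters), params

def max_pool(shape, pool=2):
    """MaxPool2D with a pool x pool window: height and width shrink by `pool`."""
    h, w, c = shape
    return (h // pool, w // pool, c)

def dense(units_in, units_out):
    """Fully connected layer: weights + biases."""
    return units_out, units_in * units_out + units_out

shape, total = (28, 28, 1), 0
shape, p = conv2d_same(shape, 16, 5); total += p  # (28, 28, 16), 416 params
shape = max_pool(shape)                           # (14, 14, 16)
shape, p = conv2d_same(shape, 36, 5); total += p  # (14, 14, 36), 14436 params
shape = max_pool(shape)                           # (7, 7, 36)
flat = shape[0] * shape[1] * shape[2]             # Flatten: 7 * 7 * 36 = 1764
units, p = dense(flat, 128); total += p           # 225920 params
units, p = dense(units, 10); total += p           # 1290 params

print(flat, total)  # 1764 242062
```

Most of the parameters sit in the first Dense layer, which is one reason the heavier Dropout(0.5) is placed right after it.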

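4. Train the model

The loss function is sparse_categorical_crossentropy, the optimizer is adam, and accuracy is the evaluation metric. With validation_split=0.2, 80% of the training data is used for training and the remaining 20% is held out as a validation set. The model is trained for 20 epochs with a batch size of 300.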

model.compile(loss='sparse_categorical_crossentropy',optimizer='adam',metrics=['acc'])

history = model.fit(x=x_train, y=y_train, validation_split=0.2, epochs=20, batch_size=300, verbose=2)  # fit returns a History object

Epoch 1/20

WARNING:tensorflow:AutoGraph could not transform .train_function at 0x7f952a678598> and will run it as-is.

Please report this to the TensorFlow team. When filing the bug, set the verbosity to 10 (on Linux, `export AUTOGRAPH_VERBOSITY=10`) and attach the full output.

Cause: 'arguments' object has no attribute 'posonlyargs'

To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert

WARNING: AutoGraph could not transform .train_function at 0x7f952a678598> and will run it as-is.

Please report this to the TensorFlow team. When filing the bug, set the verbosity to 10 (on Linux, `export AUTOGRAPH_VERBOSITY=10`) and attach the full output.

Cause: 'arguments' object has no attribute 'posonlyargs'

To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert

WARNING:tensorflow:AutoGraph could not transform .test_function at 0x7f9526ebd9d8> and will run it as-is.

Please report this to the TensorFlow team. When filing the bug, set the verbosity to 10 (on Linux, `export AUTOGRAPH_VERBOSITY=10`) and attach the full output.

Cause: 'arguments' object has no attribute 'posonlyargs'

To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert

WARNING: AutoGraph could not transform .test_function at 0x7f9526ebd9d8> and will run it as-is.

Please report this to the TensorFlow team. When filing the bug, set the verbosity to 10 (on Linux, `export AUTOGRAPH_VERBOSITY=10`) and attach the full output.

Cause: 'arguments' object has no attribute 'posonlyargs'

To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert

160/160 - 55s - loss: 0.5106 - acc: 0.8392 - val_loss: 0.1076 - val_acc: 0.9662

Epoch 2/20

160/160 - 53s - loss: 0.1461 - acc: 0.9566 - val_loss: 0.0679 - val_acc: 0.9788

Epoch 3/20

160/160 - 53s - loss: 0.1038 - acc: 0.9687 - val_loss: 0.0523 - val_acc: 0.9843

Epoch 4/20

160/160 - 53s - loss: 0.0847 - acc: 0.9744 - val_loss: 0.0457 - val_acc: 0.9869

Epoch 5/20

160/160 - 54s - loss: 0.0699 - acc: 0.9783 - val_loss: 0.0429 - val_acc: 0.9881

Epoch 6/20

160/160 - 53s - loss: 0.0615 - acc: 0.9811 - val_loss: 0.0366 - val_acc: 0.9893

Epoch 7/20

160/160 - 53s - loss: 0.0534 - acc: 0.9835 - val_loss: 0.0365 - val_acc: 0.9893

Epoch 8/20

160/160 - 53s - loss: 0.0504 - acc: 0.9839 - val_loss: 0.0340 - val_acc: 0.9907

Epoch 9/20

160/160 - 54s - loss: 0.0449 - acc: 0.9861 - val_loss: 0.0337 - val_acc: 0.9904

Epoch 10/20

160/160 - 53s - loss: 0.0430 - acc: 0.9868 - val_loss: 0.0285 - val_acc: 0.9909

Epoch 11/20

160/160 - 54s - loss: 0.0388 - acc: 0.9883 - val_loss: 0.0291 - val_acc: 0.9912

Epoch 12/20

160/160 - 53s - loss: 0.0364 - acc: 0.9882 - val_loss: 0.0329 - val_acc: 0.9909

Epoch 13/20

160/160 - 53s - loss: 0.0344 - acc: 0.9896 - val_loss: 0.0289 - val_acc: 0.9913

Epoch 14/20

160/160 - 54s - loss: 0.0311 - acc: 0.9904 - val_loss: 0.0304 - val_acc: 0.9915

Epoch 15/20

160/160 - 54s - loss: 0.0305 - acc: 0.9906 - val_loss: 0.0275 - val_acc: 0.9917

Epoch 16/20

160/160 - 54s - loss: 0.0278 - acc: 0.9913 - val_loss: 0.0270 - val_acc: 0.9917

Epoch 17/20

160/160 - 52s - loss: 0.0258 - acc: 0.9913 - val_loss: 0.0267 - val_acc: 0.9922

Epoch 18/20

160/160 - 52s - loss: 0.0245 - acc: 0.9918 - val_loss: 0.0291 - val_acc: 0.9918

Epoch 19/20

160/160 - 54s - loss: 0.0245 - acc: 0.9923 - val_loss: 0.0287 - val_acc: 0.9929

Epoch 20/20

160/160 - 54s - loss: 0.0216 - acc: 0.9930 - val_loss: 0.0267 - val_acc: 0.9926
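The `160/160` in each epoch line follows from the split. With `validation_split=0.2`, Keras holds out the last 20% of the samples passed to `fit` (taken before any shuffling) for validation and trains on the rest. A small sketch of that arithmetic, using an index array as a stand-in for the 60000 training images (the variable names are illustrative, and this is not Keras's internal code):

```python
import math
import numpy as np

num_samples, validation_split, batch_size = 60000, 0.2, 300
indices = np.arange(num_samples)  # stand-in for the sample order in x_train

# The validation samples are taken from the end of the array
val_count = round(num_samples * validation_split)
split_at = num_samples - val_count
train_idx, val_idx = indices[:split_at], indices[split_at:]

steps = math.ceil(len(train_idx) / batch_size)
print(len(train_idx), len(val_idx), steps)
```

This gives 48000 training samples, 12000 validation samples, and 160 batches of 300 per epoch.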

Evaluate the trained model on the test set:

model.evaluate(x_test,y_test,batch_size=200,verbose=2)

50/50 - 3s - loss: 0.0229 - acc: 0.9922

[0.022936329245567322, 0.9922000169754028]
