Inception v1 v2 v3

Inception v1

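Paper: Going Deeper with Convolutions

The purpose of Inception

The paper is mainly about how to further improve a network's performance within a limited computational budget.

There are many ways to improve a network's performance, such as better hardware or larger datasets. In general, though, the most direct way is to increase the network's depth and width, where depth means the number of layers and width means the number of channels in each layer. This approach has two drawbacks:

a) It is prone to overfitting. As depth and width keep growing, so does the number of parameters to learn, and a very large number of parameters overfits easily.

b) Uniformly increasing the size of the network increases the amount of computation. For two chained convolutional layers, the paper notes that uniformly increasing their filter counts increases computation quadratically.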
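Drawback (b) can be made concrete with a little arithmetic. The sketch below counts the parameters of a single convolution before and after doubling the width; the helper name `conv_params` and the channel sizes are illustrative assumptions, not values from the paper.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Weights plus biases of a standard 2D convolution."""
    return kernel_size * kernel_size * in_channels * out_channels + out_channels

# Assumed sizes: a 3x3 layer that maps 64 channels to 64 channels.
base = conv_params(64, 64, 3)
# Doubling the width of this layer and of the layer that feeds it.
wide = conv_params(128, 128, 3)

print(base, wide, round(wide / base, 2))  # 36928 147584 4.0
```

Doubling the width roughly quadruples the parameters of the layer, and the multiply-accumulate count grows by the same factor for a fixed feature-map size.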

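The way to address both drawbacks is therefore to introduce sparsity and to replace fully connected layers with sparse connections. The idea comes from two sources: 1) connections in biological neural systems are sparse; 2) prior work cited in the paper shows that if the probability distribution of a dataset can be represented by a large, very sparse deep neural network, then the optimal network topology can be built layer by layer, by analyzing the correlation statistics of the preceding layer's activations and clustering neurons whose outputs are highly correlated. This suggests that a bloated network can be simplified without losing performance.

However, today's computing infrastructure is very inefficient at computing on non-uniform sparse data, mainly because of the overhead of lookups and cache misses. The authors therefore propose an idea that keeps sparsity at the filter level while still exploiting the high computational performance of dense matrices. A large body of literature indicates that clustering sparse matrices into relatively dense submatrices improves computational performance. The Inception structure was proposed on the basis of this idea.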

Inception structure

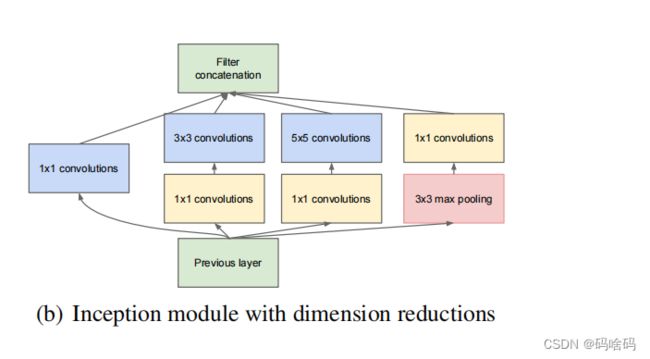

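The main question behind the Inception structure is how to approximate, or replace, the optimal local sparse structure with dense components. The Inception v1 structure is shown in two figures, (a) and (b).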
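The benefit of the dimension-reduced variant (figure b) over the naive one (figure a) can be checked with simple arithmetic. The sketch below counts parameters for the 5x5 branch, using the channel sizes that the GoogLeNet listing later in this post passes to `inception3a` (192 input channels, a 16-channel 1x1 reduction, 32 output channels). `conv_params` is a helper name introduced here for illustration.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Weights plus biases of a standard 2D convolution."""
    return kernel_size ** 2 * in_channels * out_channels + out_channels

in_channels, reduced, out_channels = 192, 16, 32

# Figure (a): a 5x5 convolution applied directly to all 192 input channels.
naive = conv_params(in_channels, out_channels, 5)
# Figure (b): a 1x1 convolution first reduces to 16 channels, then the 5x5 runs.
with_reduction = (conv_params(in_channels, reduced, 1)
                  + conv_params(reduced, out_channels, 5))

print(naive, with_reduction)  # 153632 15920
```

With these sizes the 1x1 reduction cuts the branch to roughly a tenth of the parameters of the naive version.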

Code

Taking figure (b) as the example:

class Inception(nn.Module):
    """Inception module with dimension reduction (figure b): four parallel branches."""

    def __init__(self, in_channels, ch1x1, ch3x3red, ch3x3, ch5x5red, ch5x5, pool_proj):
        super().__init__()
        # Branch 1: 1x1 convolution
        self.branch1 = BasicConv2d(in_channels, ch1x1, kernel_size=1)
        # Branch 2: 1x1 reduction followed by a 3x3 convolution
        self.branch2 = nn.Sequential(
            BasicConv2d(in_channels, ch3x3red, kernel_size=1),
            BasicConv2d(ch3x3red, ch3x3, kernel_size=3, padding=1)
        )
        # Branch 3: 1x1 reduction followed by a 5x5 convolution
        self.branch3 = nn.Sequential(
            BasicConv2d(in_channels, ch5x5red, kernel_size=1),
            BasicConv2d(ch5x5red, ch5x5, kernel_size=5, padding=2)
        )
        # Branch 4: 3x3 max pooling followed by a 1x1 projection
        self.branch4 = nn.Sequential(
            nn.MaxPool2d(kernel_size=3, stride=1, padding=1),
            BasicConv2d(in_channels, pool_proj, kernel_size=1)
        )

    def forward(self, x):
        branch1 = self.branch1(x)
        branch2 = self.branch2(x)
        branch3 = self.branch3(x)
        branch4 = self.branch4(x)
        outputs = [branch1, branch2, branch3, branch4]
        # Concatenate the four branches along the channel dimension
        return torch.cat(outputs, dim=1)
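The concatenation in `forward` only works because every branch keeps the spatial size of its input, which is what the `padding` values in the module are for. The sketch below checks this with the standard output-size formula, using the 28x28 input and the arguments of `Inception(192, 64, 96, 128, 16, 32, 32)` from the GoogLeNet listing later in this post. `conv_out_size` is a helper name assumed here for illustration.

```python
def conv_out_size(size, kernel_size, stride=1, padding=0):
    """Output height/width of a convolution or pooling layer (floor rounding)."""
    return (size + 2 * padding - kernel_size) // stride + 1

size = 28  # spatial size entering inception3a

branch_sizes = {
    "1x1 conv": conv_out_size(size, 1),
    "3x3 conv, padding=1": conv_out_size(size, 3, padding=1),
    "5x5 conv, padding=2": conv_out_size(size, 5, padding=2),
    "3x3 max pool, stride=1, padding=1": conv_out_size(size, 3, stride=1, padding=1),
}

# Output channels are the sum over branches: ch1x1 + ch3x3 + ch5x5 + pool_proj.
out_channels = 64 + 128 + 32 + 32

print(branch_sizes)
print(out_channels)  # 256
```

All four branches stay at 28x28, so the module output is 256 channels at 28x28, matching the shape comment on `inception3a`.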

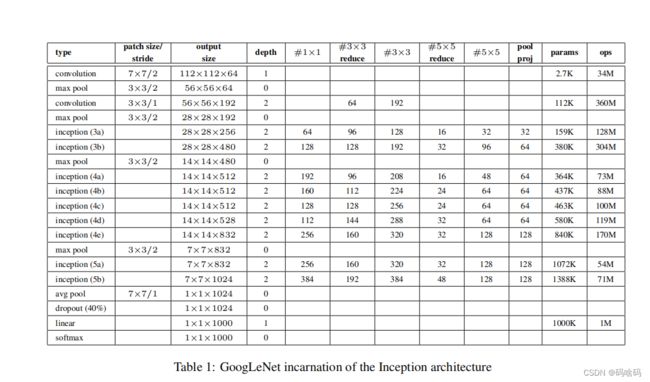

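Example: GoogLeNet

GoogLeNet is built from Inception modules. The full structure is too large to reproduce here, so only a few points are noted:

a) GoogLeNet uses a modular structure, which makes it easy to extend and modify;

b) At the end of the network, average pooling replaces the fully connected layers, an idea taken from NIN (Network in Network); this was shown to improve top-1 accuracy by 0.6%. In practice, however, one fully connected layer is still added at the end, mainly to make later fine-tuning easier;

c) Although the fully connected layers were removed, the network still uses Dropout;

d) To avoid vanishing gradients, the network adds two auxiliary softmax classifiers that feed gradient back to the earlier layers. The paper says the losses of these two auxiliary classifiers should be scaled by a discount weight (0.3 in the paper), but the model in the source code does not apply any discount. In addition, both auxiliary softmax branches are removed at test time.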
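Point d) can be sketched as a small loss-combination helper. The 0.3 weight is the value reported in the paper; the function name `total_loss` and the example loss values are made up for illustration. The function works the same way on Python floats and on PyTorch scalar tensors.

```python
def total_loss(main_loss, aux1_loss, aux2_loss, aux_weight=0.3):
    """Main classifier loss plus the discounted auxiliary classifier losses."""
    return main_loss + aux_weight * (aux1_loss + aux2_loss)

# Made-up loss values, only to show the weighting.
loss = total_loss(1.0, 2.0, 3.0)
print(loss)
```

With the model defined later in this post, `forward` returns `(x, aux2, aux1)` in training mode, so each of the three outputs would be passed through the same criterion before being combined this way. At evaluation time only the main output is returned and no auxiliary term is needed.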

The structure diagram is shown below.

Input and output sizes of each layer, with parameter counts and FLOPs
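Parameter counts also make point b) above concrete. The sketch below compares a classifier that flattens the final 7x7x1024 feature map into a fully connected layer with one that applies global average pooling first, as GoogLeNet does. `linear_params` is a helper name assumed here; the sizes are the ones used by the network in this post.

```python
def linear_params(in_features, out_features):
    """Weights plus biases of a fully connected layer."""
    return in_features * out_features + out_features

channels, height, width, num_classes = 1024, 7, 7, 1000

# Flatten the whole 7x7x1024 map and classify it directly.
flatten_then_fc = linear_params(channels * height * width, num_classes)
# Average each channel down to one value first, then classify.
gap_then_fc = linear_params(channels, num_classes)

print(flatten_then_fc, gap_then_fc)  # 50177000 1025000
```

Global average pooling itself has no parameters, so the classifier shrinks from about 50 million parameters to about 1 million.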

Building the network in code

GoogLeNet

import torch
import torch.nn as nn
# The three imports below are not used by the model definition itself.
import torchvision.models as models
from torchsummary import summary
import torch.optim as optim

class GoogLeNet(nn.Module):

    def __init__(self, num_classes=1000, aux_logits=True, init_weights=False):
        super().__init__()
        self.aux_logits = aux_logits

        # Input shape: (224, 224, 3). Shapes in these comments are (H, W, C).
        self.conv1 = BasicConv2d(3, 64, kernel_size=7, stride=2, padding=3)  # output: (112, 112, 64)
        self.maxpool1 = nn.MaxPool2d(kernel_size=3, stride=2, ceil_mode=True)  # output: (56, 56, 64)

        # depth=2
        self.conv2 = BasicConv2d(64, 64, kernel_size=1)  # output: (56, 56, 64)
        self.conv3 = BasicConv2d(64, 192, kernel_size=3, padding=1)  # output: (56, 56, 192)
        self.maxpool2 = nn.MaxPool2d(kernel_size=3, stride=2, ceil_mode=True)  # output: (28, 28, 192)

        self.inception3a = Inception(192, 64, 96, 128, 16, 32, 32)  # output: (28, 28, 256)
        self.inception3b = Inception(256, 128, 128, 192, 32, 96, 64)  # output: (28, 28, 480)
        self.maxpool3 = nn.MaxPool2d(kernel_size=3, stride=2, ceil_mode=True)  # output: (14, 14, 480)

        self.inception4a = Inception(480, 192, 96, 208, 16, 48, 64)  # output: (14, 14, 512)
        self.inception4b = Inception(512, 160, 112, 224, 24, 64, 64)  # output: (14, 14, 512)
        self.inception4c = Inception(512, 128, 128, 256, 24, 64, 64)  # output: (14, 14, 512)
        self.inception4d = Inception(512, 112, 144, 288, 32, 64, 64)  # output: (14, 14, 528)
        self.inception4e = Inception(528, 256, 160, 320, 32, 128, 128)  # output: (14, 14, 832)
        self.maxpool4 = nn.MaxPool2d(kernel_size=3, stride=2, ceil_mode=True)  # output: (7, 7, 832)

        self.inception5a = Inception(832, 256, 160, 320, 32, 128, 128)  # output: (7, 7, 832)
        self.inception5b = Inception(832, 384, 192, 384, 48, 128, 128)  # output: (7, 7, 1024)

        # Auxiliary classifiers attached to the outputs of inception4a and inception4d
        if self.aux_logits:
            self.aux1 = InceptionAux(512, num_classes)
            self.aux2 = InceptionAux(528, num_classes)

        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.dropout = nn.Dropout(p=0.2)
        self.fc = nn.Linear(1024, num_classes)

        if init_weights:
            self._init_weight()

    def forward(self, x):
        # N x 3 x 224 x 224
        x = self.conv1(x)
        # N x 64 x 112 x 112
        x = self.maxpool1(x)
        # N x 64 x 56 x 56
        x = self.conv2(x)
        # N x 64 x 56 x 56
        x = self.conv3(x)
        # N x 192 x 56 x 56
        x = self.maxpool2(x)

        # N x 192 x 28 x 28
        x = self.inception3a(x)
        # N x 256 x 28 x 28
        x = self.inception3b(x)
        # N x 480 x 28 x 28
        x = self.maxpool3(x)

        # N x 480 x 14 x 14
        x = self.inception4a(x)
        # N x 512 x 14 x 14
        if self.aux_logits and self.training:
            aux1 = self.aux1(x)

        x = self.inception4b(x)
        # N x 512 x 14 x 14
        x = self.inception4c(x)
        # N x 512 x 14 x 14
        x = self.inception4d(x)
        # N x 528 x 14 x 14
        if self.aux_logits and self.training:
            aux2 = self.aux2(x)

        x = self.inception4e(x)
        # N x 832 x 14 x 14
        x = self.maxpool4(x)

        # N x 832 x 7 x 7
        x = self.inception5a(x)
        # N x 832 x 7 x 7
        x = self.inception5b(x)

        # N x 1024 x 7 x 7
        x = self.avgpool(x)
        # N x 1024 x 1 x 1
        x = torch.flatten(x, start_dim=1)
        # N x 1024
        x = self.dropout(x)
        x = self.fc(x)

        # The auxiliary outputs are only returned in training mode
        if self.aux_logits and self.training:
            return x, aux2, aux1
        return x

    def _init_weight(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                if m.bias is not None:
                    nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                nn.init.normal_(m.weight, 0, 0.01)
                nn.init.constant_(m.bias, 0)

class Inception(nn.Module):
    """Inception module with dimension reduction (figure b): four parallel branches."""

    def __init__(self, in_channels, ch1x1, ch3x3red, ch3x3, ch5x5red, ch5x5, pool_proj):
        super().__init__()
        # Branch 1: 1x1 convolution
        self.branch1 = BasicConv2d(in_channels, ch1x1, kernel_size=1)
        # Branch 2: 1x1 reduction followed by a 3x3 convolution
        self.branch2 = nn.Sequential(
            BasicConv2d(in_channels, ch3x3red, kernel_size=1),
            BasicConv2d(ch3x3red, ch3x3, kernel_size=3, padding=1)
        )
        # Branch 3: 1x1 reduction followed by a 5x5 convolution
        self.branch3 = nn.Sequential(
            BasicConv2d(in_channels, ch5x5red, kernel_size=1),
            BasicConv2d(ch5x5red, ch5x5, kernel_size=5, padding=2)
        )
        # Branch 4: 3x3 max pooling followed by a 1x1 projection
        self.branch4 = nn.Sequential(
            nn.MaxPool2d(kernel_size=3, stride=1, padding=1),
            BasicConv2d(in_channels, pool_proj, kernel_size=1)
        )

    def forward(self, x):
        branch1 = self.branch1(x)
        branch2 = self.branch2(x)
        branch3 = self.branch3(x)
        branch4 = self.branch4(x)
        outputs = [branch1, branch2, branch3, branch4]
        # Concatenate the four branches along the channel dimension
        return torch.cat(outputs, dim=1)

class InceptionAux(nn.Module):
    """Auxiliary classifier head, used only during training."""

    def __init__(self, in_channels, num_classes):
        super().__init__()
        # Pool any input feature map down to 4 x 4
        self.averagePool = nn.AdaptiveAvgPool2d((4, 4))
        self.conv = BasicConv2d(in_channels, 128, kernel_size=1)
        self.aux_classifier = nn.Sequential(
            nn.Linear(128 * 4 * 4, 1024),
            nn.Dropout(p=0.5),
            nn.ReLU(inplace=True),
            nn.Linear(1024, num_classes)
        )

    def forward(self, x):
        x = self.averagePool(x)
        # N x in_channels x 4 x 4
        x = self.conv(x)
        # N x 128 x 4 x 4
        x = torch.flatten(x, start_dim=1)
        # N x 2048
        x = self.aux_classifier(x)
        return x

class BasicConv2d(nn.Module):
    """Convolution followed by ReLU; extra keyword arguments go to nn.Conv2d."""

    def __init__(self, in_channels, out_channels, **kwargs):
        super().__init__()
        self.conv = nn.Conv2d(in_channels, out_channels, **kwargs)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x = self.conv(x)
        x = self.relu(x)
        return x
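The `ceil_mode=True` argument on the four stride-2 max-pooling layers is what produces the 56, 28, 14 and 7 sizes in the shape comments. The sketch below reproduces the output-size rule in plain Python for both rounding modes; `pool_out_size` and `pooled_sizes` are helper names assumed here, written to follow the rule described in the PyTorch `nn.MaxPool2d` documentation. The convolutions between the pools keep the spatial size, so chaining the pools is enough.

```python
import math

def pool_out_size(size, kernel_size, stride, padding=0, ceil_mode=False):
    """Output height/width of a pooling layer under floor or ceil rounding."""
    rounding = math.ceil if ceil_mode else math.floor
    out = rounding((size + 2 * padding - kernel_size) / stride) + 1
    # In ceil mode, a last window that would start past the input
    # (plus left padding) is dropped.
    if ceil_mode and (out - 1) * stride >= size + padding:
        out -= 1
    return out

def pooled_sizes(size, ceil_mode, num_pools=4):
    """Sizes after each of the network's 3x3, stride-2 max-pooling layers."""
    sizes = []
    for _ in range(num_pools):
        size = pool_out_size(size, kernel_size=3, stride=2, ceil_mode=ceil_mode)
        sizes.append(size)
    return sizes

print(pooled_sizes(112, ceil_mode=True))   # [56, 28, 14, 7]
print(pooled_sizes(112, ceil_mode=False))  # [55, 27, 13, 6]
```

With the default floor rounding the feature maps would be one pixel smaller at each stage, so the shape comments in the listing would no longer hold.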

FLOPs output from a run

'''

module name input shape output shape params memory(MB) MAdd Flops MemRead(B) MemWrite(B) duration[%] MemR+W(B)

0 conv1.conv 3 224 224 64 112 112 9472.0 3.06 236,027,904.0 118,816,768.0 640000.0 3211264.0 24.30% 3851264.0

1 conv1.relu 64 112 112 64 112 112 0.0 3.06 802,816.0 802,816.0 3211264.0 3211264.0 2.83% 6422528.0

2 maxpool1 64 112 112 64 56 56 0.0 0.77 1,605,632.0 802,816.0 3211264.0 802816.0 5.08% 4014080.0

3 conv2.conv 64 56 56 64 56 56 4160.0 0.77 25,690,112.0 13,045,760.0 819456.0 802816.0 1.70% 1622272.0

4 conv2.relu 64 56 56 64 56 56 0.0 0.77 200,704.0 200,704.0 802816.0 802816.0 0.00% 1605632.0

5 conv3.conv 64 56 56 192 56 56 110784.0 2.30 693,633,024.0 347,418,624.0 1245952.0 2408448.0 3.96% 3654400.0

6 conv3.relu 192 56 56 192 56 56 0.0 2.30 602,112.0 602,112.0 2408448.0 2408448.0 0.56% 4816896.0

7 maxpool2 192 56 56 192 28 28 0.0 0.57 1,204,224.0 602,112.0 2408448.0 602112.0 1.13% 3010560.0

8 inception3a.branch1.conv 192 28 28 64 28 28 12352.0 0.19 19,267,584.0 9,683,968.0 651520.0 200704.0 1.13% 852224.0

9 inception3a.branch1.relu 64 28 28 64 28 28 0.0 0.19 50,176.0 50,176.0 200704.0 200704.0 0.00% 401408.0

10 inception3a.branch2.0.conv 192 28 28 96 28 28 18528.0 0.29 28,901,376.0 14,525,952.0 676224.0 301056.0 1.13% 977280.0

11 inception3a.branch2.0.relu 96 28 28 96 28 28 0.0 0.29 75,264.0 75,264.0 301056.0 301056.0 0.00% 602112.0

12 inception3a.branch2.1.conv 96 28 28 128 28 28 110720.0 0.38 173,408,256.0 86,804,480.0 743936.0 401408.0 1.69% 1145344.0

13 inception3a.branch2.1.relu 128 28 28 128 28 28 0.0 0.38 100,352.0 100,352.0 401408.0 401408.0 0.00% 802816.0

14 inception3a.branch3.0.conv 192 28 28 16 28 28 3088.0 0.05 4,816,896.0 2,420,992.0 614464.0 50176.0 0.56% 664640.0

15 inception3a.branch3.0.relu 16 28 28 16 28 28 0.0 0.05 12,544.0 12,544.0 50176.0 50176.0 0.00% 100352.0

16 inception3a.branch3.1.conv 16 28 28 32 28 28 12832.0 0.10 20,070,400.0 10,060,288.0 101504.0 100352.0 0.56% 201856.0

17 inception3a.branch3.1.relu 32 28 28 32 28 28 0.0 0.10 25,088.0 25,088.0 100352.0 100352.0 0.00% 200704.0

18 inception3a.branch4.0 192 28 28 192 28 28 0.0 0.57 1,204,224.0 150,528.0 602112.0 602112.0 1.70% 1204224.0

19 inception3a.branch4.1.conv 192 28 28 32 28 28 6176.0 0.10 9,633,792.0 4,841,984.0 626816.0 100352.0 0.57% 727168.0

20 inception3a.branch4.1.relu 32 28 28 32 28 28 0.0 0.10 25,088.0 25,088.0 100352.0 100352.0 0.00% 200704.0

21 inception3b.branch1.conv 256 28 28 128 28 28 32896.0 0.38 51,380,224.0 25,790,464.0 934400.0 401408.0 1.13% 1335808.0

22 inception3b.branch1.relu 128 28 28 128 28 28 0.0 0.38 100,352.0 100,352.0 401408.0 401408.0 0.00% 802816.0

23 inception3b.branch2.0.conv 256 28 28 128 28 28 32896.0 0.38 51,380,224.0 25,790,464.0 934400.0 401408.0 0.56% 1335808.0

24 inception3b.branch2.0.relu 128 28 28 128 28 28 0.0 0.38 100,352.0 100,352.0 401408.0 401408.0 0.00% 802816.0

25 inception3b.branch2.1.conv 128 28 28 192 28 28 221376.0 0.57 346,816,512.0 173,558,784.0 1286912.0 602112.0 2.26% 1889024.0

26 inception3b.branch2.1.relu 192 28 28 192 28 28 0.0 0.57 150,528.0 150,528.0 602112.0 602112.0 0.00% 1204224.0

27 inception3b.branch3.0.conv 256 28 28 32 28 28 8224.0 0.10 12,845,056.0 6,447,616.0 835712.0 100352.0 0.56% 936064.0

28 inception3b.branch3.0.relu 32 28 28 32 28 28 0.0 0.10 25,088.0 25,088.0 100352.0 100352.0 0.00% 200704.0

29 inception3b.branch3.1.conv 32 28 28 96 28 28 76896.0 0.29 120,422,400.0 60,286,464.0 407936.0 301056.0 0.56% 708992.0

30 inception3b.branch3.1.relu 96 28 28 96 28 28 0.0 0.29 75,264.0 75,264.0 301056.0 301056.0 0.00% 602112.0

31 inception3b.branch4.0 256 28 28 256 28 28 0.0 0.77 1,605,632.0 200,704.0 802816.0 802816.0 1.69% 1605632.0

32 inception3b.branch4.1.conv 256 28 28 64 28 28 16448.0 0.19 25,690,112.0 12,895,232.0 868608.0 200704.0 0.57% 1069312.0

33 inception3b.branch4.1.relu 64 28 28 64 28 28 0.0 0.19 50,176.0 50,176.0 200704.0 200704.0 0.00% 401408.0

34 maxpool3 480 28 28 480 14 14 0.0 0.36 752,640.0 376,320.0 1505280.0 376320.0 0.57% 1881600.0

35 inception4a.branch1.conv 480 14 14 192 14 14 92352.0 0.14 36,126,720.0 18,100,992.0 745728.0 150528.0 1.13% 896256.0

36 inception4a.branch1.relu 192 14 14 192 14 14 0.0 0.14 37,632.0 37,632.0 150528.0 150528.0 0.00% 301056.0

37 inception4a.branch2.0.conv 480 14 14 96 14 14 46176.0 0.07 18,063,360.0 9,050,496.0 561024.0 75264.0 0.56% 636288.0

38 inception4a.branch2.0.relu 96 14 14 96 14 14 0.0 0.07 18,816.0 18,816.0 75264.0 75264.0 0.00% 150528.0

39 inception4a.branch2.1.conv 96 14 14 208 14 14 179920.0 0.16 70,447,104.0 35,264,320.0 794944.0 163072.0 5.65% 958016.0

40 inception4a.branch2.1.relu 208 14 14 208 14 14 0.0 0.16 40,768.0 40,768.0 163072.0 163072.0 0.00% 326144.0

41 inception4a.branch3.0.conv 480 14 14 16 14 14 7696.0 0.01 3,010,560.0 1,508,416.0 407104.0 12544.0 0.00% 419648.0

42 inception4a.branch3.0.relu 16 14 14 16 14 14 0.0 0.01 3,136.0 3,136.0 12544.0 12544.0 0.00% 25088.0

43 inception4a.branch3.1.conv 16 14 14 48 14 14 19248.0 0.04 7,526,400.0 3,772,608.0 89536.0 37632.0 0.57% 127168.0

44 inception4a.branch3.1.relu 48 14 14 48 14 14 0.0 0.04 9,408.0 9,408.0 37632.0 37632.0 0.00% 75264.0

45 inception4a.branch4.0 480 14 14 480 14 14 0.0 0.36 752,640.0 94,080.0 376320.0 376320.0 1.13% 752640.0

46 inception4a.branch4.1.conv 480 14 14 64 14 14 30784.0 0.05 12,042,240.0 6,033,664.0 499456.0 50176.0 0.57% 549632.0

47 inception4a.branch4.1.relu 64 14 14 64 14 14 0.0 0.05 12,544.0 12,544.0 50176.0 50176.0 0.00% 100352.0

48 inception4b.branch1.conv 512 14 14 160 14 14 82080.0 0.12 32,112,640.0 16,087,680.0 729728.0 125440.0 0.56% 855168.0

49 inception4b.branch1.relu 160 14 14 160 14 14 0.0 0.12 31,360.0 31,360.0 125440.0 125440.0 0.00% 250880.0

50 inception4b.branch2.0.conv 512 14 14 112 14 14 57456.0 0.08 22,478,848.0 11,261,376.0 631232.0 87808.0 0.00% 719040.0

51 inception4b.branch2.0.relu 112 14 14 112 14 14 0.0 0.08 21,952.0 21,952.0 87808.0 87808.0 0.00% 175616.0

52 inception4b.branch2.1.conv 112 14 14 224 14 14 226016.0 0.17 88,510,464.0 44,299,136.0 991872.0 175616.0 0.57% 1167488.0

53 inception4b.branch2.1.relu 224 14 14 224 14 14 0.0 0.17 43,904.0 43,904.0 175616.0 175616.0 0.00% 351232.0

54 inception4b.branch3.0.conv 512 14 14 24 14 14 12312.0 0.02 4,816,896.0 2,413,152.0 450656.0 18816.0 0.00% 469472.0

55 inception4b.branch3.0.relu 24 14 14 24 14 14 0.0 0.02 4,704.0 4,704.0 18816.0 18816.0 0.00% 37632.0

56 inception4b.branch3.1.conv 24 14 14 64 14 14 38464.0 0.05 15,052,800.0 7,538,944.0 172672.0 50176.0 0.57% 222848.0

57 inception4b.branch3.1.relu 64 14 14 64 14 14 0.0 0.05 12,544.0 12,544.0 50176.0 50176.0 0.00% 100352.0

58 inception4b.branch4.0 512 14 14 512 14 14 0.0 0.38 802,816.0 100,352.0 401408.0 401408.0 1.13% 802816.0

59 inception4b.branch4.1.conv 512 14 14 64 14 14 32832.0 0.05 12,845,056.0 6,435,072.0 532736.0 50176.0 0.56% 582912.0

60 inception4b.branch4.1.relu 64 14 14 64 14 14 0.0 0.05 12,544.0 12,544.0 50176.0 50176.0 0.00% 100352.0

61 inception4c.branch1.conv 512 14 14 128 14 14 65664.0 0.10 25,690,112.0 12,870,144.0 664064.0 100352.0 0.56% 764416.0

62 inception4c.branch1.relu 128 14 14 128 14 14 0.0 0.10 25,088.0 25,088.0 100352.0 100352.0 0.00% 200704.0

63 inception4c.branch2.0.conv 512 14 14 128 14 14 65664.0 0.10 25,690,112.0 12,870,144.0 664064.0 100352.0 0.56% 764416.0

64 inception4c.branch2.0.relu 128 14 14 128 14 14 0.0 0.10 25,088.0 25,088.0 100352.0 100352.0 0.00% 200704.0

65 inception4c.branch2.1.conv 128 14 14 256 14 14 295168.0 0.19 115,605,504.0 57,852,928.0 1281024.0 200704.0 1.13% 1481728.0

66 inception4c.branch2.1.relu 256 14 14 256 14 14 0.0 0.19 50,176.0 50,176.0 200704.0 200704.0 0.00% 401408.0

67 inception4c.branch3.0.conv 512 14 14 24 14 14 12312.0 0.02 4,816,896.0 2,413,152.0 450656.0 18816.0 0.57% 469472.0

68 inception4c.branch3.0.relu 24 14 14 24 14 14 0.0 0.02 4,704.0 4,704.0 18816.0 18816.0 0.00% 37632.0

69 inception4c.branch3.1.conv 24 14 14 64 14 14 38464.0 0.05 15,052,800.0 7,538,944.0 172672.0 50176.0 0.00% 222848.0

70 inception4c.branch3.1.relu 64 14 14 64 14 14 0.0 0.05 12,544.0 12,544.0 50176.0 50176.0 0.00% 100352.0

71 inception4c.branch4.0 512 14 14 512 14 14 0.0 0.38 802,816.0 100,352.0 401408.0 401408.0 1.13% 802816.0

72 inception4c.branch4.1.conv 512 14 14 64 14 14 32832.0 0.05 12,845,056.0 6,435,072.0 532736.0 50176.0 0.00% 582912.0

73 inception4c.branch4.1.relu 64 14 14 64 14 14 0.0 0.05 12,544.0 12,544.0 50176.0 50176.0 0.00% 100352.0

74 inception4d.branch1.conv 512 14 14 112 14 14 57456.0 0.08 22,478,848.0 11,261,376.0 631232.0 87808.0 0.00% 719040.0

75 inception4d.branch1.relu 112 14 14 112 14 14 0.0 0.08 21,952.0 21,952.0 87808.0 87808.0 0.00% 175616.0

76 inception4d.branch2.0.conv 512 14 14 144 14 14 73872.0 0.11 28,901,376.0 14,478,912.0 696896.0 112896.0 0.00% 809792.0

77 inception4d.branch2.0.relu 144 14 14 144 14 14 0.0 0.11 28,224.0 28,224.0 112896.0 112896.0 0.00% 225792.0

78 inception4d.branch2.1.conv 144 14 14 288 14 14 373536.0 0.22 146,313,216.0 73,213,056.0 1607040.0 225792.0 1.13% 1832832.0

79 inception4d.branch2.1.relu 288 14 14 288 14 14 0.0 0.22 56,448.0 56,448.0 225792.0 225792.0 0.00% 451584.0

80 inception4d.branch3.0.conv 512 14 14 32 14 14 16416.0 0.02 6,422,528.0 3,217,536.0 467072.0 25088.0 0.57% 492160.0

81 inception4d.branch3.0.relu 32 14 14 32 14 14 0.0 0.02 6,272.0 6,272.0 25088.0 25088.0 0.00% 50176.0

82 inception4d.branch3.1.conv 32 14 14 64 14 14 51264.0 0.05 20,070,400.0 10,047,744.0 230144.0 50176.0 0.56% 280320.0

83 inception4d.branch3.1.relu 64 14 14 64 14 14 0.0 0.05 12,544.0 12,544.0 50176.0 50176.0 0.00% 100352.0

84 inception4d.branch4.0 512 14 14 512 14 14 0.0 0.38 802,816.0 100,352.0 401408.0 401408.0 1.13% 802816.0

85 inception4d.branch4.1.conv 512 14 14 64 14 14 32832.0 0.05 12,845,056.0 6,435,072.0 532736.0 50176.0 0.00% 582912.0

86 inception4d.branch4.1.relu 64 14 14 64 14 14 0.0 0.05 12,544.0 12,544.0 50176.0 50176.0 0.00% 100352.0

87 inception4e.branch1.conv 528 14 14 256 14 14 135424.0 0.19 52,985,856.0 26,543,104.0 955648.0 200704.0 0.56% 1156352.0

88 inception4e.branch1.relu 256 14 14 256 14 14 0.0 0.19 50,176.0 50,176.0 200704.0 200704.0 0.00% 401408.0

89 inception4e.branch2.0.conv 528 14 14 160 14 14 84640.0 0.12 33,116,160.0 16,589,440.0 752512.0 125440.0 0.00% 877952.0

90 inception4e.branch2.0.relu 160 14 14 160 14 14 0.0 0.12 31,360.0 31,360.0 125440.0 125440.0 0.00% 250880.0

91 inception4e.branch2.1.conv 160 14 14 320 14 14 461120.0 0.24 180,633,600.0 90,379,520.0 1969920.0 250880.0 1.13% 2220800.0

92 inception4e.branch2.1.relu 320 14 14 320 14 14 0.0 0.24 62,720.0 62,720.0 250880.0 250880.0 0.00% 501760.0

93 inception4e.branch3.0.conv 528 14 14 32 14 14 16928.0 0.02 6,623,232.0 3,317,888.0 481664.0 25088.0 0.00% 506752.0

94 inception4e.branch3.0.relu 32 14 14 32 14 14 0.0 0.02 6,272.0 6,272.0 25088.0 25088.0 0.00% 50176.0

95 inception4e.branch3.1.conv 32 14 14 128 14 14 102528.0 0.10 40,140,800.0 20,095,488.0 435200.0 100352.0 0.56% 535552.0

96 inception4e.branch3.1.relu 128 14 14 128 14 14 0.0 0.10 25,088.0 25,088.0 100352.0 100352.0 0.00% 200704.0

97 inception4e.branch4.0 528 14 14 528 14 14 0.0 0.39 827,904.0 103,488.0 413952.0 413952.0 0.56% 827904.0

98 inception4e.branch4.1.conv 528 14 14 128 14 14 67712.0 0.10 26,492,928.0 13,271,552.0 684800.0 100352.0 0.57% 785152.0

99 inception4e.branch4.1.relu 128 14 14 128 14 14 0.0 0.10 25,088.0 25,088.0 100352.0 100352.0 0.00% 200704.0

100 maxpool4 832 14 14 832 7 7 0.0 0.16 326,144.0 163,072.0 652288.0 163072.0 0.00% 815360.0

101 inception5a.branch1.conv 832 7 7 256 7 7 213248.0 0.05 20,873,216.0 10,449,152.0 1016064.0 50176.0 2.26% 1066240.0

102 inception5a.branch1.relu 256 7 7 256 7 7 0.0 0.05 12,544.0 12,544.0 50176.0 50176.0 0.00% 100352.0

103 inception5a.branch2.0.conv 832 7 7 160 7 7 133280.0 0.03 13,045,760.0 6,530,720.0 696192.0 31360.0 0.56% 727552.0

104 inception5a.branch2.0.relu 160 7 7 160 7 7 0.0 0.03 7,840.0 7,840.0 31360.0 31360.0 0.56% 62720.0

105 inception5a.branch2.1.conv 160 7 7 320 7 7 461120.0 0.06 45,158,400.0 22,594,880.0 1875840.0 62720.0 1.13% 1938560.0

106 inception5a.branch2.1.relu 320 7 7 320 7 7 0.0 0.06 15,680.0 15,680.0 62720.0 62720.0 0.00% 125440.0

107 inception5a.branch3.0.conv 832 7 7 32 7 7 26656.0 0.01 2,609,152.0 1,306,144.0 269696.0 6272.0 0.00% 275968.0

108 inception5a.branch3.0.relu 32 7 7 32 7 7 0.0 0.01 1,568.0 1,568.0 6272.0 6272.0 0.00% 12544.0

109 inception5a.branch3.1.conv 32 7 7 128 7 7 102528.0 0.02 10,035,200.0 5,023,872.0 416384.0 25088.0 0.56% 441472.0

110 inception5a.branch3.1.relu 128 7 7 128 7 7 0.0 0.02 6,272.0 6,272.0 25088.0 25088.0 0.00% 50176.0

111 inception5a.branch4.0 832 7 7 832 7 7 0.0 0.16 326,144.0 40,768.0 163072.0 163072.0 0.57% 326144.0

112 inception5a.branch4.1.conv 832 7 7 128 7 7 106624.0 0.02 10,436,608.0 5,224,576.0 589568.0 25088.0 0.56% 614656.0

113 inception5a.branch4.1.relu 128 7 7 128 7 7 0.0 0.02 6,272.0 6,272.0 25088.0 25088.0 0.00% 50176.0

114 inception5b.branch1.conv 832 7 7 384 7 7 319872.0 0.07 31,309,824.0 15,673,728.0 1442560.0 75264.0 1.13% 1517824.0

115 inception5b.branch1.relu 384 7 7 384 7 7 0.0 0.07 18,816.0 18,816.0 75264.0 75264.0 0.56% 150528.0

116 inception5b.branch2.0.conv 832 7 7 192 7 7 159936.0 0.04 15,654,912.0 7,836,864.0 802816.0 37632.0 0.56% 840448.0

117 inception5b.branch2.0.relu 192 7 7 192 7 7 0.0 0.04 9,408.0 9,408.0 37632.0 37632.0 0.00% 75264.0

118 inception5b.branch2.1.conv 192 7 7 384 7 7 663936.0 0.07 65,028,096.0 32,532,864.0 2693376.0 75264.0 0.56% 2768640.0

119 inception5b.branch2.1.relu 384 7 7 384 7 7 0.0 0.07 18,816.0 18,816.0 75264.0 75264.0 0.00% 150528.0

120 inception5b.branch3.0.conv 832 7 7 48 7 7 39984.0 0.01 3,913,728.0 1,959,216.0 323008.0 9408.0 0.56% 332416.0

121 inception5b.branch3.0.relu 48 7 7 48 7 7 0.0 0.01 2,352.0 2,352.0 9408.0 9408.0 0.00% 18816.0

122 inception5b.branch3.1.conv 48 7 7 128 7 7 153728.0 0.02 15,052,800.0 7,532,672.0 624320.0 25088.0 1.13% 649408.0

123 inception5b.branch3.1.relu 128 7 7 128 7 7 0.0 0.02 6,272.0 6,272.0 25088.0 25088.0 0.00% 50176.0

124 inception5b.branch4.0 832 7 7 832 7 7 0.0 0.16 326,144.0 40,768.0 163072.0 163072.0 0.56% 326144.0

125 inception5b.branch4.1.conv 832 7 7 128 7 7 106624.0 0.02 10,436,608.0 5,224,576.0 589568.0 25088.0 0.57% 614656.0

126 inception5b.branch4.1.relu 128 7 7 128 7 7 0.0 0.02 6,272.0 6,272.0 25088.0 25088.0 0.00% 50176.0

127 aux1.averagePool 0 0 0 0 0 0 0.0 0.00 0.0 0.0 0.0 0.0 0.00% 0.0

128 aux1.conv.conv 0 0 0 0 0 0 0.0 0.00 0.0 0.0 0.0 0.0 0.00% 0.0

129 aux1.conv.relu 0 0 0 0 0 0 0.0 0.00 0.0 0.0 0.0 0.0 0.00% 0.0

130 aux1.aux_classifier.0 0 0 0 0 0 0 0.0 0.00 0.0 0.0 0.0 0.0 0.00% 0.0

131 aux1.aux_classifier.1 0 0 0 0 0 0 0.0 0.00 0.0 0.0 0.0 0.0 0.00% 0.0

132 aux1.aux_classifier.2 0 0 0 0 0 0 0.0 0.00 0.0 0.0 0.0 0.0 0.00% 0.0

133 aux1.aux_classifier.3 0 0 0 0 0 0 0.0 0.00 0.0 0.0 0.0 0.0 0.00% 0.0

134 aux2.averagePool 0 0 0 0 0 0 0.0 0.00 0.0 0.0 0.0 0.0 0.00% 0.0

135 aux2.conv.conv 0 0 0 0 0 0 0.0 0.00 0.0 0.0 0.0 0.0 0.00% 0.0

136 aux2.conv.relu 0 0 0 0 0 0 0.0 0.00 0.0 0.0 0.0 0.0 0.00% 0.0

137 aux2.aux_classifier.0 0 0 0 0 0 0 0.0 0.00 0.0 0.0 0.0 0.0 0.00% 0.0

138 aux2.aux_classifier.1 0 0 0 0 0 0 0.0 0.00 0.0 0.0 0.0 0.0 0.00% 0.0

139 aux2.aux_classifier.2 0 0 0 0 0 0 0.0 0.00 0.0 0.0 0.0 0.0 0.00% 0.0

140 aux2.aux_classifier.3 0 0 0 0 0 0 0.0 0.00 0.0 0.0 0.0 0.0 0.00% 0.0

141 avgpool 1024 7 7 1024 1 1 0.0 0.00 0.0 0.0 0.0 0.0 5.08% 0.0

142 dropout 1024 1024 0.0 0.00 0.0 0.0 0.0 0.0 0.00% 0.0

143 fc 1024 1000 1025000.0 0.00 2,047,000.0 1,024,000.0 4104096.0 4000.0 4.52% 4108096.0

total 6998552.0 30.03 3,179,908,680.0 1,591,999,904.0 4104096.0 4000.0 100.00% 102538752.0

=======================================================================================================================================================================

Total params: 6,998,552

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------

Total memory: 30.03MB

Total MAdd: 3.18GMAdd

Total Flops: 1.59GFlops

Total MemR+W: 97.79MB

'''
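The convolution rows of the table can be reproduced by hand. The formulas in the sketch below are inferred from the table rows themselves, not taken from the profiling tool's source, so treat the counting convention (one Flop per multiply-accumulate plus one for the bias, and MAdd counting multiplies and adds separately) as an assumption. `conv_stats` is a helper name introduced here.

```python
def conv_stats(in_channels, out_channels, kernel_size, out_h, out_w):
    """Return (params, MAdd, Flops) for a convolution with bias."""
    macs_per_output = kernel_size * kernel_size * in_channels
    outputs = out_channels * out_h * out_w
    params = macs_per_output * out_channels + out_channels
    # Multiplies, plus (macs - 1) additions, plus one bias addition per output.
    madd = outputs * (2 * macs_per_output)
    # One per multiply-accumulate, plus one for the bias.
    flops = outputs * (macs_per_output + 1)
    return params, madd, flops

# Row 0, conv1.conv: 3 -> 64 channels, 7x7 kernel, 112x112 output
print(conv_stats(3, 64, 7, 112, 112))   # (9472, 236027904, 118816768)
# Row 8, inception3a.branch1.conv: 192 -> 64 channels, 1x1 kernel, 28x28 output
print(conv_stats(192, 64, 1, 28, 28))   # (12352, 19267584, 9683968)
```

Both results match the params, MAdd and Flops columns of the corresponding rows, which also explains why the MAdd total is about twice the Flops total.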

Inception v2

Paper: Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift

Inception v3

Paper: Rethinking the Inception Architecture for Computer Vision