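A First Deep Learning Project: Flower Classification with AlexNet

This article has six parts, organized as follows:

1. Data preprocessing: splitting the dataset

2. Loading a custom dataset

3. Building the AlexNet model

4. Training the model

5. Evaluating the model and classifying images with the trained model

6. Project notes and test results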

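The code in this article is simple and fairly thoroughly commented; basic Python knowledge is enough to read it from start to finish. The code can be copied in order from the sections below.

Before running the code, install torch, torchvision, matplotlib, and tqdm. _005_predict.py additionally imports scikit-learn, and image loading uses Pillow, which is installed as a dependency of torchvision. The packages can be installed from the Tsinghua mirror with the following command (package names are separated by spaces, not commas):

pip install torch torchvision matplotlib tqdm scikit-learn -i https://pypi.tuna.tsinghua.edu.cn/simple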
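After installing, a quick check like the one below can confirm that the modules imported by the scripts can be found. This is a minimal sketch: `missing_packages` is a helper name made up for this example, and the list of module names is taken from the import statements in this article.

```python
import importlib.util

def missing_packages(module_names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in module_names if importlib.util.find_spec(name) is None]

# Import names used by the scripts in this article
# (PIL is provided by Pillow, sklearn by scikit-learn)
required = ['torch', 'torchvision', 'matplotlib', 'tqdm', 'PIL', 'sklearn']
missing = missing_packages(required)
print('missing packages:', missing if missing else 'none')
```

If the list is not empty, install the missing packages before continuing.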

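1. Data preprocessing: splitting the dataset

File name: _001_split_data.py

Running this file does the following:

1. Reads the images in each class folder of the dataset and writes their paths to dataset.txt

2. Splits all image paths stored in dataset.txt into training, validation, and test sets at a ratio of 3:1:1, and writes them to train.txt, val.txt, and test.txt respectively

The code is as follows: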

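'''
This program expects a three-level directory layout: dataset_name/class_name/image
The resulting image path is: img_path = dataset_name/class_name/image_name
Calling parts = img_path.split('/') gives:
    parts[0] = dataset name
    parts[1] = class name
    parts[2] = image name
'''
import json
import os
import matplotlib.pyplot as plt
import random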

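# Save the paths of all dataset images to a txt file
def write_dataset2txt(dataset_path, save_path):
    '''
    :param dataset_path: root directory of the dataset
    :param save_path: path of the txt file to write
    :return:
    '''
    # Class folder names, sorted so the order does not depend on the file system
    classes_name = sorted(os.listdir(dataset_path))
    # print(f'classes_name: {classes_name}')
    # Append mode: running this twice duplicates the paths (see the note below the listing)
    with open(save_path, "a+", encoding="utf-8", errors="ignore") as f:
        for classes in classes_name:
            cls_path = f'{dataset_path}/{classes}'
            if not os.path.isdir(cls_path):  # skip stray files in the dataset root
                continue
            for i in sorted(os.listdir(cls_path)):
                img_path = f'{cls_path}/{i}'
                f.write(img_path + '\n')
    print('Finished writing dataset to file!')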

# Read dataset.txt line by line
def read_dataset(read_path):
    '''
    Read the paths of all images in the dataset
    :param read_path: path of dataset.txt
    :return: list of all image paths
    '''
    with open(read_path, "r", encoding="utf-8", errors="ignore") as f:
        # The writer ends every line with a newline, so drop the empty entries
        img_list = [line for line in f.read().split('\n') if line]
    # print(f'Read total of images: {len(img_list)}')
    return img_list

# Read a split txt file (train, val, or test) and return the image paths and their labels
def get_dataset_list(read_path):
    '''
    Read a split txt file and return the image paths and the corresponding labels
    :param read_path: path of the txt file to read
    :return: a list of image paths and a list of the corresponding labels
    '''
    with open(read_path, "r", encoding="utf-8", errors="ignore") as f:
        # Image paths; empty lines are skipped so that split('/')[1] cannot fail
        data_list = [line for line in f.read().split('\n') if line]
    # print(f'Read total of images: {len(data_list)}')
    img_path = []
    labels = []
    for image in data_list:
        img_path.append(image)
        # The class name is the second component of dataset_name/class_name/image_name
        labels.append(image.split('/')[1])
    # print(img_path)
    return img_path, labels

# Split the dataset into training, validation, and test sets with a fixed seed,
# and write the image paths of each set to its own txt file
def write_train_val_test_list(img_list, train_rate, val_rate,
                              train_save_path, val_save_path, test_save_path):
    '''
    Shuffle the image paths, split them into training, validation, and test sets,
    and write each set to a txt file (labels are derived later from the paths).
    Because random.seed(0) is used, the split is the same on every run; to get a
    different split each time, comment out the random.seed(0) statement.
    :param img_list: list of image paths
    :param train_rate: fraction of images used for training
    :param val_rate: fraction of images used for validation; the rest is the test set
    :param train_save_path: path of the training list file
    :param val_save_path: path of the validation list file
    :param test_save_path: path of the test list file
    :return:
    '''
    # Integer boundaries: img_list[:train_index] is the training set,
    # img_list[train_index:val_index] the validation set, the rest the test set.
    # round() guards against float noise such as 0.6 + 0.2 != 0.8
    train_index = round(len(img_list) * train_rate)
    val_index = round(len(img_list) * (train_rate + val_rate))
    # Shuffle with a fixed seed so that the split is reproducible
    random.seed(0)
    random.shuffle(img_list)
    splits = [(train_save_path, img_list[:train_index]),
              (val_save_path, img_list[train_index:val_index]),
              (test_save_path, img_list[val_index:])]
    for save_path, paths in splits:
        # Append mode, one path per line, no newline after the last path
        with open(save_path, "a+", encoding="utf-8", errors="ignore") as f:
            f.write('\n'.join(paths))
    print(f'Train dataset was saved: {train_save_path}')
    print(f'Val dataset was saved: {val_save_path}')
    print(f'Test dataset was saved: {test_save_path}')
    print('Finished splitting the dataset!')

# Read the image paths and labels from train.txt and val.txt, and optionally plot a bar chart
def get_train_and_val(train_txt_path, val_txt_path):
    # Read the image paths and labels from train.txt and val.txt
    train_img_path, train_label = get_dataset_list(train_txt_path)
    val_img_path, val_label = get_dataset_list(val_txt_path)
    # Class names: deduplicate, then sort to fix the order
    classes = sorted(set(train_label + val_label))
    # Count the images of each class
    every_class_num = [train_label.count(cls) + val_label.count(cls) for cls in classes]
    # print(every_class_num)
    # Map each class name to a numeric label
    classes_dict = {name: index for index, name in enumerate(classes)}
    train_labels = [classes_dict[label] for label in train_label]
    val_labels = [classes_dict[label] for label in val_label]
    # Invert the dictionary so that it maps index -> class name
    classes_dict = dict((v, k) for k, v in classes_dict.items())
    # Write the classes to a json file
    classes_json = json.dumps(classes_dict, indent=4)
    json_path = r'classes.json'
    with open(json_path, 'w') as f:
        f.write(classes_json)
    # Whether to plot a bar chart of the number of images per class
    plot_image = False
    if plot_image:
        plt.bar(range(len(classes)), every_class_num, align='center')
        # Replace the x ticks 0,1,2,3,4 with the class names
        plt.xticks(range(len(classes)), classes)
        # Add a value label above each bar
        for i, v in enumerate(every_class_num):
            plt.text(x=i, y=v + 5, s=str(v), ha='center')
        plt.xlabel('image class')  # x-axis label
        plt.ylabel('number of images')  # y-axis label
        plt.title('Classes distribution')  # chart title
        plt.show()
    return train_img_path, train_labels, val_img_path, val_labels, classes

if __name__ == '__main__':
    write_dataset2txt(r'flower_photos', r'dataset.txt')  # create dataset.txt; change flower_photos to your own dataset name
    img_list = read_dataset('dataset.txt')  # read the contents of dataset.txt
    train_rate = 0.6  # 60% training set
    val_rate = 0.2  # 20% validation set; the remaining 20% is the test set
    train_save = r'train.txt'
    val_save = r'val.txt'
    test_save = r'test.txt'
    write_train_val_test_list(img_list, train_rate, val_rate, train_save, val_save, test_save)
    train_data = r'train.txt'
    val_data = r'val.txt'
    train_img_path, train_labels, val_img_path, val_labels, classes = get_train_and_val(train_data, val_data)
    print(f'classes: {classes}')

Note: this file writes its txt files in 'a+' (append) mode, so it is only meant to be run once. If it is run several times, the txt files accumulate duplicate paths and later steps fail. To run the `__main__` block again, delete the four txt files generated by the program (dataset.txt, train.txt, val.txt, test.txt) before each run, or comment out the calls to write_dataset2txt() and write_train_val_test_list(). You can also adapt the code to your own needs.
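As a rough illustration of the 3:1:1 split, the sketch below computes integer slice boundaries and shuffles a copy of the list with a private seeded generator. The function name `split_paths` and the sample paths are made up for this example and are not part of _001_split_data.py. Returning lists like this and writing each one with 'w' mode would be one possible way to make reruns safe.

```python
import random

def split_paths(paths, train_rate=0.6, val_rate=0.2, seed=0):
    """Hypothetical helper: shuffle a copy of paths and cut it into three lists."""
    shuffled = list(paths)                 # leave the caller's list untouched
    random.Random(seed).shuffle(shuffled)  # private generator with a fixed seed
    n = len(shuffled)
    # round() avoids off-by-one errors from float noise such as 0.6 + 0.2 != 0.8
    train_end = round(n * train_rate)
    val_end = round(n * (train_rate + val_rate))
    return shuffled[:train_end], shuffled[train_end:val_end], shuffled[val_end:]

# Made-up paths in the dataset_name/class_name/image_name layout
paths = [f'flower_photos/daisy/{i}.jpg' for i in range(10)]
train, val, test = split_paths(paths)
print(len(train), len(val), len(test))  # 6 2 2
```

Because the seed is fixed, calling `split_paths` twice on the same input returns the same three lists.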

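2. Loading a custom dataset

File name: _002_MyDataset.py

This file does the following:

1. Defines a MyDataSet class

2. For each index of the dataset passed in, returns the RGB image and its label.

The code is as follows: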

from torch.utils.data import Dataset, DataLoader
import PIL.Image as Image

class MyDataSet(Dataset):
    '''
    Custom dataset
    '''
    def __init__(self, images_path: list, images_class: list, transform=None):
        self.images_path = images_path
        self.images_class = images_class
        self.transform = transform

    def __len__(self):
        return len(self.images_path)

    def __getitem__(self, item):
        img = Image.open(self.images_path[item])
        # 'RGB' is a color image, 'L' is a grayscale image
        if img.mode != 'RGB':
            raise ValueError("image: {} isn't RGB mode.".format(self.images_path[item]))
        label = self.images_class[item]
        if self.transform is not None:
            img = self.transform(img)
        return img, label
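To make the role of `__len__` and `__getitem__` concrete, here is a simplified, torch-free sketch of how a loader could walk such a dataset in batches. `ToyDataset` and `simple_batches` are hypothetical names used only for illustration; the real torch.utils.data.DataLoader additionally handles shuffling, worker processes, and collating samples into tensors.

```python
class ToyDataset:
    """Stand-in for MyDataSet: stores parallel lists of items and labels."""
    def __init__(self, items, labels):
        self.items = items
        self.labels = labels

    def __len__(self):
        return len(self.items)

    def __getitem__(self, index):
        return self.items[index], self.labels[index]

def simple_batches(dataset, batch_size):
    """Yield (items, labels) batches in order; the last batch may be smaller."""
    for start in range(0, len(dataset), batch_size):
        stop = min(start + batch_size, len(dataset))
        samples = [dataset[i] for i in range(start, stop)]
        items, labels = zip(*samples)
        yield list(items), list(labels)

dataset = ToyDataset(['a.jpg', 'b.jpg', 'c.jpg'], [0, 1, 0])
for items, labels in simple_batches(dataset, batch_size=2):
    print(items, labels)
```

The loader only needs the dataset length and index access, which is why MyDataSet implements exactly those two methods.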

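3. Building the AlexNet model

File name: _003_model.py

This file does the following:

1. Creates the AlexNet network model

2. The design follows the AlexNet paper. The code is based on a blog post on this site: "AlexNet网络结构详解" (a detailed explanation of the AlexNet architecture)

The code is as follows: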

import torch
import torch.nn as nn

class AlexNet(nn.Module):
    def __init__(self, num_classes=1000, init_weights=False):
        super(AlexNet, self).__init__()
        self.conv1 = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=96, padding=0, stride=4, kernel_size=11),  # conv: (3, 227, 227)-->(96, 55, 55)
            nn.ReLU(inplace=True),  # activation
            nn.MaxPool2d(kernel_size=3, padding=0, stride=2),  # pool: (96, 55, 55)-->(96, 27, 27)
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(in_channels=96, out_channels=256, padding=2, stride=1, kernel_size=5),  # conv: (96, 27, 27)-->(256, 27, 27)
            nn.ReLU(inplace=True),  # activation
            nn.MaxPool2d(kernel_size=3, padding=0, stride=2),  # pool: (256, 27, 27)-->(256, 13, 13)
        )
        self.conv3 = nn.Sequential(
            nn.Conv2d(in_channels=256, out_channels=384, padding=1, stride=1, kernel_size=3),  # conv: (256, 13, 13)-->(384, 13, 13)
            nn.ReLU(inplace=True)  # activation
        )
        self.conv4 = nn.Sequential(
            nn.Conv2d(in_channels=384, out_channels=384, padding=1, stride=1, kernel_size=3),  # conv: (384, 13, 13)-->(384, 13, 13)
            nn.ReLU(inplace=True)  # activation
        )
        self.conv5 = nn.Sequential(
            nn.Conv2d(in_channels=384, out_channels=256, padding=1, stride=1, kernel_size=3),  # conv: (384, 13, 13)-->(256, 13, 13)
            nn.ReLU(inplace=True),  # activation
            nn.MaxPool2d(kernel_size=3, padding=0, stride=2)  # pool: (256, 13, 13)-->(256, 6, 6)
        )
        self.fc1 = nn.Sequential(
            nn.Conv2d(in_channels=256, out_channels=4096, padding=0, stride=1, kernel_size=6),  # fully connected layer written as a 6x6 conv: (256, 6, 6)-->(4096, 1, 1)
            nn.ReLU(inplace=True),  # activation
            nn.Dropout(p=0.5)
        )
        self.fc2 = nn.Sequential(
            nn.Linear(in_features=4096, out_features=4096),  # fully connected: 4096-->4096
            nn.ReLU(inplace=True),  # activation
            nn.Dropout(p=0.5)
        )
        self.fc3 = nn.Sequential(
            nn.Linear(in_features=4096, out_features=num_classes)  # fully connected: 4096-->num_classes
        )
        if init_weights:
            self._initialize_weights()

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        x = self.conv4(x)
        x = self.conv5(x)
        x = self.fc1(x)
        x = torch.flatten(x, start_dim=1)  # flatten from the channel dimension on: (N, 4096, 1, 1)-->(N, 4096)
        x = self.fc2(x)
        x = self.fc3(x)
        return x

    def _initialize_weights(self):  # initialize the weights
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                if m.bias is not None:
                    nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                nn.init.normal_(m.weight, 0, 0.01)
                nn.init.constant_(m.bias, 0)
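The feature-map sizes of this model can be checked with the standard output-size formula for convolution and pooling layers, floor((size + 2*padding - kernel) / stride) + 1. The sketch below is plain arithmetic and does not call PyTorch; `conv_out`, `LAYERS`, and `trace` are names chosen for this example. It also suggests why the scripts resize images to 227 rather than 224: with a 224 input the last pooling layer yields 5x5 maps, which the 6x6 kernel in fc1 no longer fits.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output height/width of a conv or max-pool layer (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1

# (name, kernel, stride, padding) of each spatial layer in _003_model.py, in forward order
LAYERS = [
    ('conv1', 11, 4, 0), ('pool1', 3, 2, 0),
    ('conv2', 5, 1, 2), ('pool2', 3, 2, 0),
    ('conv3', 3, 1, 1), ('conv4', 3, 1, 1),
    ('conv5', 3, 1, 1), ('pool5', 3, 2, 0),
    ('fc1', 6, 1, 0),
]

def trace(size):
    """Return the spatial size after every layer, starting from a square input."""
    sizes = []
    for _name, kernel, stride, padding in LAYERS:
        size = conv_out(size, kernel, stride, padding)
        sizes.append(size)
    return sizes

print(trace(227))  # [55, 27, 27, 13, 13, 13, 13, 6, 1]
print(trace(224))  # [54, 26, 26, 12, 12, 12, 12, 5, 0]
```

A final size of 0 in the second line means the formula has no valid output for fc1, so a 224x224 input would not pass through this particular model.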

4. Training the model

File name: _004_train.py

This program does the following:

1. Trains the AlexNet model

The code is as follows:

import torch
from torch.utils.data import Dataset
from torchvision import transforms
import torch.nn as nn
import torch.optim as optim
from tqdm import tqdm
from _002_MyDataset import MyDataSet
from _001_split_data import get_train_and_val
from _003_model import AlexNet
import time

t1 = time.time()

# 1. Read the image paths and labels from train.txt and val.txt (and optionally plot the bar chart)
train_data = r'train.txt'
val_data = r'val.txt'
train_img_path, train_labels, val_img_path, val_labels, classes = get_train_and_val(train_data, val_data)

# 2. Data preprocessing
data_transform = {  # training uses a random resized crop and a random horizontal flip
    "train": transforms.Compose([transforms.RandomResizedCrop(227),
                                 transforms.RandomHorizontalFlip(),
                                 transforms.ToTensor(),
                                 transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]),
    "val": transforms.Compose([transforms.Resize((227, 227)),  # pass a (227, 227) tuple; a single int only resizes the shorter side
                               transforms.ToTensor(),
                               transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])}

# 3. Instantiate the training and validation datasets
train_dataset = MyDataSet(images_path=train_img_path,
                          images_class=train_labels,
                          transform=data_transform["train"])
val_dataset = MyDataSet(images_path=val_img_path,
                        images_class=val_labels,
                        transform=data_transform["val"])

# 4. Create the training and validation loaders
batch_size = 8  # number of images per batch
num_classes = 5  # number of classes
num_workers = 0  # number of worker subprocesses for data loading; 0 loads in the main process
train_loader = torch.utils.data.DataLoader(train_dataset,
                                           batch_size=batch_size,
                                           shuffle=True,
                                           pin_memory=True,
                                           num_workers=num_workers)
val_loader = torch.utils.data.DataLoader(val_dataset,
                                         batch_size=batch_size,
                                         shuffle=False,  # the order does not affect validation accuracy
                                         pin_memory=True,
                                         num_workers=num_workers)
t2 = time.time()
print(f"using {len(train_dataset)} images for training, {len(val_dataset)} images for validation.")
print(f'Loading images using time is {round((t2 - t1), 4)}s')

# Create the network instance
model = AlexNet(num_classes=num_classes, init_weights=True)  # build the network with initialized weights
# device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")  # use the GPU if one is available
device = 'cpu'
print(f"using {device} device.")
model.to(device=device)  # move the model to the CPU or GPU
t3 = time.time()
print(f'Loading model using time is {round((t3 - t2), 4)}s')

# Loss function
loss_function = nn.CrossEntropyLoss()

# Optimizer
# print(f'model.parameters: {list(model.parameters())}')
optimizer = optim.Adam(model.parameters(), lr=0.0002)

epochs = 20  # total number of training epochs
model_save_path = r'AlexNet.pth'
best_acc = 0.0
train_steps = len(train_loader)
val_num = len(val_labels)
# print(train_steps)

for epoch in range(epochs):
    # Training phase
    model.train()
    running_loss = 0.0
    train_bar = tqdm(train_loader)  # progress bar wrapping the training loader
    for step, data in enumerate(train_bar):  # iterate over the batches
        images, labels = data  # labels is already a tensor built by the DataLoader
        optimizer.zero_grad()
        outputs = model(images.to(device))  # move the batch to the training device and run the forward pass
        loss = loss_function(outputs, labels.to(device))
        loss.backward()
        optimizer.step()
        # Accumulate and display training information
        running_loss += loss.item()
        train_bar.desc = f'train epoch[{epoch + 1}/{epochs}] loss:{loss.item():.3f}'
    # Validation phase
    model.eval()
    acc = 0.0
    with torch.no_grad():  # disable gradient tracking during validation
        val_bar = tqdm(val_loader)
        for data in val_bar:
            # val_targets avoids shadowing the val_labels list defined earlier
            val_images, val_targets = data
            outputs = model(val_images.to(device))
            predict = torch.max(outputs, dim=1)[1]
            # print(predict)
            acc += torch.eq(predict, val_targets.to(device)).sum().item()
    val_acc = round((acc / val_num) * 100, 4)  # percentage
    print(f'[epoch {epoch+1}] train_loss:{round((running_loss/train_steps), 3)} val_accuracy:{val_acc:.2f}%')
    # Keep only the weights with the best validation accuracy so far
    if val_acc > best_acc:
        best_acc = val_acc
        torch.save(model.state_dict(), model_save_path)
print('Finished Training.')
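The validation step reduces each row of model outputs to the index of its largest value and counts how many indices match the labels. The following torch-free sketch mirrors that bookkeeping on made-up numbers; `count_correct` and the sample logits are assumptions for illustration only.

```python
def count_correct(logits, targets):
    """Count rows whose largest logit sits at the target index (argmax per row)."""
    correct = 0
    for row, target in zip(logits, targets):
        predicted = max(range(len(row)), key=row.__getitem__)
        correct += int(predicted == target)
    return correct

# Two made-up validation batches of logits for 3 classes, with their labels
batches = [
    ([[2.0, 0.1, -1.0], [0.3, 0.9, 0.2]], [0, 2]),
    ([[0.0, 0.5, 1.5]], [2]),
]
correct = sum(count_correct(logits, targets) for logits, targets in batches)
total = sum(len(targets) for _, targets in batches)
print(f'val_accuracy: {correct / total * 100:.2f}%')  # val_accuracy: 66.67%
```

Dividing by the total number of validation images, rather than by the number of batches, keeps the result correct when the last batch is smaller than the others.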

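Note: the following hyperparameters can be changed to suit your needs:

batch_size = 8 # number of images per batch; larger batches usually make each epoch faster, but lower this value if an out-of-memory (OOM) error occurs

num_classes = 5 # number of classes; set this to the number of classes in your dataset

device = 'cpu' # if you have a GPU for training, replace this with: device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

epochs = 20 # total number of training epochs; adjust as needed

model_save_path = r'AlexNet.pth' # the model file name can be changed, but keep the .pth extension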
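One fixed setting in the scripts is worth a short illustration: the transforms end with Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)). Per channel this computes (x - mean) / std on values that ToTensor has already scaled to the range 0 to 1, so the inputs end up between -1 and 1. The sketch reproduces the arithmetic for single values; `normalize` is a hypothetical helper, not a torchvision function.

```python
def normalize(value, mean=0.5, std=0.5):
    """Per-channel formula applied by transforms.Normalize: (x - mean) / std."""
    return (value - mean) / std

# ToTensor scales 8-bit pixel values 0..255 to 0.0..1.0 before normalization
for pixel in (0, 128, 255):
    scaled = pixel / 255
    print(pixel, round(normalize(scaled), 3))
```

The same mean and standard deviation must be used for training, validation, and prediction, which is why _005_predict.py repeats the same Normalize call.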

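5. Evaluating the model and classifying images with the trained model

File name: _005_predict.py

This file does the following:

1. Running test() evaluates the model and reports the accuracy, confusion matrix, precision, recall, and F1 score; the confusion matrix and the classification report are saved to test_result.txt

2. Running predict() classifies a given image and displays the image together with the predicted result

The code is as follows: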

import os

import torch

from PIL import Image

from torchvision import transforms

import matplotlib.pyplot as plt

import json

from _001_split_data import get_dataset_list, get_train_and_val

from _003_model import AlexNet

from sklearn.metrics import confusion_matrix

from sklearn.metrics import classification_report

from sklearn.metrics import accuracy_score

def predict():
    device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
    num_classes = 5
    # Preprocessing, matching the validation transform used in training
    data_transform = transforms.Compose(
        [transforms.Resize((227, 227)),
         transforms.ToTensor(),
         transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]
    )
    # Load the image
    image_path = r'daisy.jpg'  # a single image to classify
    print(image_path)
    assert os.path.exists(image_path), f'file: {image_path} does not exist'
    img = Image.open(image_path).convert('RGB')  # open the image and make sure it has 3 channels
    plt.imshow(img)  # show the image
    # Convert the image to a [C, H, W] tensor
    img = data_transform(img)
    # Add the batch dimension: unsqueeze() inserts a dimension of size 1 at dim,
    # e.g. torch.unsqueeze(x, 0) turns an n×m tensor into a 1×n×m tensor
    img = torch.unsqueeze(img, dim=0)
    # Read the class labels
    json_path = 'classes.json'
    assert os.path.exists(json_path), "file: '{}' does not exist.".format(json_path)
    with open(json_path, "r") as f:
        class_json = json.load(f)
    # Build the model
    model = AlexNet(num_classes=num_classes).to(device)
    # Load the trained weights; map_location lets GPU-trained weights load on a CPU-only machine
    weights_path = r'AlexNet.pth'
    assert os.path.exists(weights_path), "file: '{}' does not exist.".format(weights_path)
    model.load_state_dict(torch.load(weights_path, map_location=device))
    # Run the prediction
    model.eval()
    with torch.no_grad():  # disable gradient tracking
        # Predict the class of the image
        output = torch.squeeze(model(img.to(device))).cpu()
        predict = torch.softmax(output, dim=0)
        # Index of the most probable class
        predict_index = torch.argmax(predict).item()
    img_title = f'class: {class_json[str(predict_index)]} prob: {predict[predict_index].item():.3}'
    plt.title(img_title)
    for i in range(len(predict)):  # print the probability of every class
        print(f'class: {class_json[str(i)]} prob: {predict[i].item():.3}')
    # Show the image with the result
    plt.show()

def test():
    device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
    num_classes = 5
    # Preprocessing, matching the validation transform used in training
    data_transform = transforms.Compose(
        [transforms.Resize((227, 227)),
         transforms.ToTensor(),
         transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]
    )
    # Load the test image paths and their labels (class names)
    img_list, labels = get_dataset_list(r'test.txt')
    # Read the class labels once, outside the loop
    json_path = 'classes.json'
    assert os.path.exists(json_path), "file: '{}' does not exist.".format(json_path)
    with open(json_path, "r") as f:
        class_json = json.load(f)
    # Build the model and load the trained weights once, outside the loop
    model = AlexNet(num_classes=num_classes).to(device)
    weights_path = r'AlexNet.pth'
    assert os.path.exists(weights_path), "file: '{}' does not exist.".format(weights_path)
    model.load_state_dict(torch.load(weights_path, map_location=device))
    model.eval()
    predict_labels = []
    for i, image_path in enumerate(img_list):
        assert os.path.exists(image_path), f'file: {image_path} does not exist'
        img = Image.open(image_path).convert('RGB')  # open the image and make sure it has 3 channels
        # Convert the image to a [C, H, W] tensor, then add the batch dimension
        img = data_transform(img)
        img = torch.unsqueeze(img, dim=0)
        with torch.no_grad():  # disable gradient tracking
            output = torch.squeeze(model(img.to(device))).cpu()
            predict = torch.softmax(output, dim=0)
            # Index of the most probable class
            predict_index = torch.argmax(predict).item()
        predict_class = class_json[str(predict_index)]
        predict_labels.append(predict_class)  # collect the predicted class name
        print(f'[Image {i + 1}]\tclass:{predict_class} prob:{predict[predict_index].item():.3}')
    # Sorted list of the class names present in the test set
    classes_name = sorted(set(labels))
    print(f'classes_name: {classes_name}')
    print(f'labels: {labels}')
    print(f'predict_labels: {predict_labels}')
    # Accuracy
    accuracy = accuracy_score(labels, predict_labels)
    print(f'accuracy: {accuracy}')
    # Confusion matrix
    Array = confusion_matrix(labels, predict_labels)
    print(f'confusion_matrix:\n{Array}')
    # Precision, recall, F1-score, support
    result = classification_report(labels, predict_labels, target_names=classes_name)
    print(result)
    # Save the confusion matrix and the classification report
    save_result_path = r'test_result.txt'
    with open(save_result_path, 'w') as f:
        f.write(f'{classes_name}\n')
        f.write(str(Array) + '\n')
        f.write(result)

if __name__ == '__main__':
    test()
    predict()
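Both functions turn the raw model outputs into probabilities with softmax and then pick the largest one. A minimal pure-Python sketch of that step is shown below; the logits are made-up numbers, and the class order is assumed to be the alphabetical order written to classes.json.

```python
import math

def softmax(logits):
    """Numerically stable softmax: subtract the max before exponentiating."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up logits for the five flower classes, in classes.json index order
class_names = ['daisy', 'dandelion', 'roses', 'sunflowers', 'tulips']
logits = [6.2, 1.1, 0.3, 2.0, -0.5]
probs = softmax(logits)
best = max(range(len(probs)), key=probs.__getitem__)
print(f'class: {class_names[best]} prob: {probs[best]:.3}')
```

Because the scripts print probabilities with the `:.3` format (three significant digits), a very confident prediction can be displayed as 1.0 even though the underlying value is slightly below 1.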

6. 项目注意事项与测试结果展示

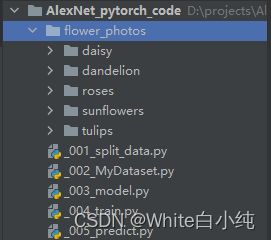

(1) 项目目录结构如下图所示:

注意:可将flower_photos数据集替换为自己的数据集,但目录结构要与之相同,同时改为自己的数据集后,注意_001_split_data.py文件中的该语句:

write_dataset2txt(r'flower_photos', r'dataset.txt') # 创建dataset.txt数据集,将flower_photos修改为自己的数据集名称

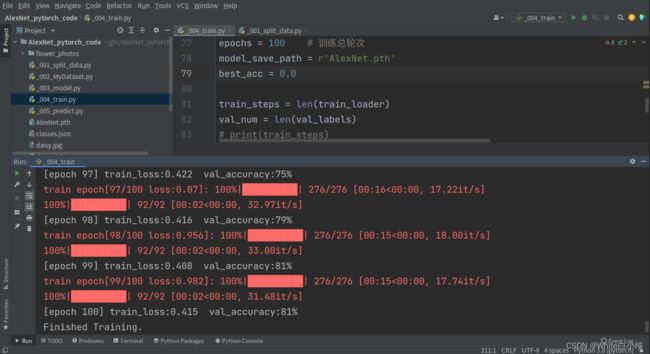

(2)模型训练界面如下:

这里因为我调用了实验室服务器的缘故,所以每轮的训练用时很短,因此把训练轮次设置为100轮,得到的模型准确率在81%左右。

(3) The model evaluation results are shown below:

accuracy: 0.7970027247956403

confusion_matrix:

[[109  17   9   1   3]
 [  3 145   3  14   5]
 [  4   3  88   7  22]
 [  4   5   3 121   4]
 [  6   4  28   4 122]]

              precision    recall  f1-score   support

       daisy       0.87      0.78      0.82       139
   dandelion       0.83      0.85      0.84       170
       roses       0.67      0.71      0.69       124
  sunflowers       0.82      0.88      0.85       137
      tulips       0.78      0.74      0.76       164

    accuracy                           0.80       734
   macro avg       0.80      0.79      0.79       734
weighted avg       0.80      0.80      0.80       734

On the 734 test images, the model reaches an accuracy of 79.7%, or roughly 80%. After 100 epochs of training on the training set, the model therefore classifies flower images with reasonably high accuracy.
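The reported figures can be re-derived from the confusion matrix alone. In scikit-learn's convention, rows are the true classes and columns are the predicted classes, so precision divides the diagonal entry by its column sum and recall divides it by its row sum. The sketch below redoes that arithmetic in plain Python; `per_class_metrics` is a helper written for this example, not a scikit-learn function.

```python
# Confusion matrix from the evaluation: rows are true classes, columns are predictions
MATRIX = [
    [109, 17, 9, 1, 3],
    [3, 145, 3, 14, 5],
    [4, 3, 88, 7, 22],
    [4, 5, 3, 121, 4],
    [6, 4, 28, 4, 122],
]
CLASSES = ['daisy', 'dandelion', 'roses', 'sunflowers', 'tulips']

def per_class_metrics(matrix):
    """Return (precision, recall, f1) per class from a square confusion matrix."""
    metrics = []
    for k in range(len(matrix)):
        true_positive = matrix[k][k]
        predicted_as_k = sum(row[k] for row in matrix)  # column sum
        actually_k = sum(matrix[k])                     # row sum
        precision = true_positive / predicted_as_k
        recall = true_positive / actually_k
        f1 = 2 * precision * recall / (precision + recall)
        metrics.append((precision, recall, f1))
    return metrics

total = sum(sum(row) for row in MATRIX)
accuracy = sum(MATRIX[k][k] for k in range(len(MATRIX))) / total
print(f'accuracy: {accuracy:.4f} over {total} images')
for name, (p, r, f1) in zip(CLASSES, per_class_metrics(MATRIX)):
    print(f'{name:>10} {p:.2f} {r:.2f} {f1:.2f}')
```

The matrix also shows where the errors concentrate: roses and tulips are confused with each other more often than any other pair (22 and 28 images), which matches their lower precision and recall.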

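(4) The prediction result for a single image is as follows:

In the code, the model is asked to classify a picture of a daisy, and it concludes that the picture shows a daisy with a displayed probability of 100%. The probability is a softmax output printed to three significant digits, so 100% here means a value that rounds to 1.0.

Closing remarks

To run the project, copy the code above, in order, into files with the names given, set up the same directory structure, and configure a PyTorch environment. The code is commented fairly thoroughly to help you study and understand it.

The complete project has been uploaded to this site. It includes the full project code, the flower classification dataset, and the trained AlexNet.pth model. It can be downloaded for free (0 points) and deployed on your own computer; once the PyTorch environment is set up, you can directly run the predict() method in _005_predict.py to classify flower images.

Resource download link: https://download.csdn.net/download/qq_35284513/86613225

That concludes the walkthrough of this project. I hope it helps those who are just getting started with computer vision and deep learning image classification. Writing this took effort, so a like would be appreciated.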