PyTorch Deep Learning Practice: Convolutional Neural Networks (Basics)

References

Reference 1: https://blog.csdn.net/bit452/article/details/109690712

Reference 2: http://biranda.top/Pytorch%E5%AD%A6%E4%B9%A0%E7%AC%94%E8%AE%B0011%E2%80%94%E2%80%94Simple_CNN/

Convolutional Neural Networks (Basics)

1. conv

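import torch

in_channels, out_channels = 5, 10  # 5 input channels, 10 output channels
width, height = 100, 100
kernel_size = 3  # an int is expanded to a 3x3 kernel; odd-sized square kernels are the usual choice

# PyTorch processes data in batches, so a C*H*W image is handled in code
# as a four-dimensional B(batch)*C*H*W tensor.
batch_size = 1

# Generate a random four-dimensional input.
# width == height here, so the order of the last two dimensions does not change the shapes.
input = torch.randn(batch_size, in_channels, height, width)

# Conv2d needs the number of input channels, the number of output channels and the kernel size
conv_layer = torch.nn.Conv2d(in_channels, out_channels, kernel_size=kernel_size)
output = conv_layer(input)

print(input.shape)              # shape of the input
print(output.shape)             # shape of the output
print(conv_layer.weight.shape)  # shape of the convolution kernel

# torch.Size([1, 5, 100, 100])
# torch.Size([1, 10, 98, 98])
# torch.Size([10, 5, 3, 3])  # 10 output channels, 5 input channels, 3x3 kernel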
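
The 98 in the output shape follows from the usual output-size rule for a convolution, floor((n + 2p - k) / s) + 1, where n is the input size, k the kernel size, p the padding and s the stride. The sketch below is a minimal pure-Python check of that rule. `conv_output_size` is a hypothetical helper name introduced here for illustration, not a PyTorch function, and dilation is assumed to be 1.

```python
def conv_output_size(n, kernel_size, stride=1, padding=0):
    """Spatial size after a convolution (hypothetical helper, dilation assumed to be 1)."""
    return (n + 2 * padding - kernel_size) // stride + 1

# 100x100 input, 3x3 kernel, default stride and padding
print(conv_output_size(100, 3))  # 98

# 5x5 input, 3x3 kernel, stride=2, padding=1
print(conv_output_size(5, 3, stride=2, padding=1))  # 3
```

With the default stride of 1 and no padding, a k×k kernel simply trims k - 1 pixels from each spatial dimension, which is why 100 becomes 98.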

2. padding and stride

import torch

input = [3, 4, 6, 5, 7,
         2, 4, 6, 8, 2,
         1, 6, 7, 8, 4,
         9, 7, 4, 6, 2,
         3, 7, 5, 4, 1]

# Reshape the input to B*C*H*W
input = torch.Tensor(input).view(1, 1, 5, 5)

# Build the conv layer: 1 input channel, 1 output channel, 3x3 kernel, padding=1, no bias.
# stride=2 moves the kernel two positions per step; if omitted, stride defaults to 1.
conv_layer = torch.nn.Conv2d(1, 1, kernel_size=3, stride=2, padding=1, bias=False)

# Build the kernel and reshape it to out_channels*in_channels*H*W
kernel = torch.Tensor([1, 2, 3, 4, 5, 6, 7, 8, 9]).view(1, 1, 3, 3)

# Assign the hand-made kernel to the layer's weight so it is used in the convolution
conv_layer.weight.data = kernel.data

output = conv_layer(input)
print(output)

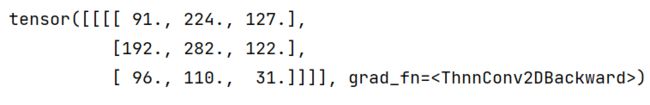
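
To see where the numbers in that output come from, the same computation can be sketched in pure Python. This is only an illustration under stated assumptions: `conv2d_single` is a hypothetical helper that handles one channel and one kernel, zero-pads the input, and slides the kernel without flipping it (cross-correlation, which is what `Conv2d` computes).

```python
def conv2d_single(image, kernel, stride=1, padding=0):
    """Cross-correlation of one 2D channel with one square 2D kernel (hypothetical helper)."""
    h, w = len(image), len(image[0])
    k = len(kernel)
    # Zero-pad the image on all four sides
    padded = [[0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for i in range(h):
        for j in range(w):
            padded[i + padding][j + padding] = image[i][j]
    out_h = (h + 2 * padding - k) // stride + 1
    out_w = (w + 2 * padding - k) // stride + 1
    out = []
    for oi in range(out_h):
        row = []
        for oj in range(out_w):
            top, left = oi * stride, oj * stride
            # Multiply the kernel element-wise with the current window and sum
            row.append(sum(kernel[a][b] * padded[top + a][left + b]
                           for a in range(k) for b in range(k)))
        out.append(row)
    return out

image = [[3, 4, 6, 5, 7],
         [2, 4, 6, 8, 2],
         [1, 6, 7, 8, 4],
         [9, 7, 4, 6, 2],
         [3, 7, 5, 4, 1]]
kernel = [[1, 2, 3],
          [4, 5, 6],
          [7, 8, 9]]

result = conv2d_single(image, kernel, stride=2, padding=1)
print(result)  # [[91, 224, 127], [192, 282, 122], [96, 110, 31]]
```

The top-left value, for example, is 5·3 + 6·4 + 8·2 + 9·4 = 91, because the first row and column of that window fall on the zero padding. PyTorch prints the same values as floats.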

3. max_pooling

import torch

input = [3, 4, 6, 5,
         2, 4, 6, 8,
         1, 6, 7, 8,
         9, 7, 4, 6]

# batch_size, in_channels, height, width
input = torch.Tensor(input).view(1, 1, 4, 4)

# With kernel_size=2, the stride of MaxPool2d also defaults to 2
maxpooling_layer = torch.nn.MaxPool2d(kernel_size=2)

output = maxpooling_layer(input)
print(output)
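
As a rough cross-check of what the pooling layer does to this 4x4 input, the sketch below takes the maximum of each non-overlapping 2x2 block in pure Python. `max_pool2d_single` is a hypothetical helper for a single channel, written under the assumption that the stride equals the kernel size.

```python
def max_pool2d_single(image, kernel_size=2):
    """Non-overlapping max pooling on one 2D channel (hypothetical helper, stride == kernel_size)."""
    h, w = len(image), len(image[0])
    return [[max(image[i + a][j + b]
                 for a in range(kernel_size) for b in range(kernel_size))
             for j in range(0, w - kernel_size + 1, kernel_size)]
            for i in range(0, h - kernel_size + 1, kernel_size)]

image = [[3, 4, 6, 5],
         [2, 4, 6, 8],
         [1, 6, 7, 8],
         [9, 7, 4, 6]]

pooled = max_pool2d_single(image)
print(pooled)  # [[4, 8], [9, 8]]
```

Each spatial dimension is halved (4x4 becomes 2x2) while the number of channels stays the same.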

4. Implementing a simple convolutional neural network

import torch
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F
import torch.optim as optim

batch_size = 64

transform = transforms.Compose([
    transforms.ToTensor(),
    # Mean and standard deviation of MNIST; this standardizes the pixel values
    # to roughly zero mean and unit variance
    transforms.Normalize((0.1307,), (0.3081,))
])
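
For a single-channel image, `Normalize` applies (x - mean) / std to every pixel. The sketch below reproduces that arithmetic in pure Python for the darkest and brightest pixel values that `ToTensor` can produce (0.0 and 1.0). `normalize` is a hypothetical helper introduced only for this illustration.

```python
MEAN, STD = 0.1307, 0.3081  # the values passed to transforms.Normalize

def normalize(pixel, mean=MEAN, std=STD):
    """Per-pixel standardization (hypothetical helper mirroring (x - mean) / std)."""
    return (pixel - mean) / std

black = normalize(0.0)  # darkest pixel after ToTensor
white = normalize(1.0)  # brightest pixel after ToTensor
print(round(black, 4), round(white, 4))
```

A pixel equal to the dataset mean maps to 0, so the normalized values are centered around zero instead of lying in [0, 1].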

# Training set
train_dataset = datasets.MNIST(root='../dataset/mnist',
                               train=True,
                               download=True,
                               transform=transform)
train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)

# Test set
test_dataset = datasets.MNIST(root='../dataset/mnist',
                              train=False,
                              download=True,
                              transform=transform)
test_loader = DataLoader(dataset=test_dataset, batch_size=batch_size, shuffle=False)

# Build the network
class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # Convolution layers: conv1 has 1 input channel, 10 output channels and a 5x5 kernel
        self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)
        # Pooling layer
        self.pooling = torch.nn.MaxPool2d(2)
        # Fully connected layer
        self.fc = torch.nn.Linear(320, 10)

    def forward(self, x):
        # x has shape (n, 1, 28, 28), where n is the number of samples in the batch
        batch_size = x.size(0)
        x = F.relu(self.pooling(self.conv1(x)))
        x = F.relu(self.pooling(self.conv2(x)))
        # Flatten the (n, 20, 4, 4) feature maps to (n, 320)
        x = x.view(batch_size, 320)
        # Note: no activation after the last layer, because CrossEntropyLoss expects raw scores
        x = self.fc(x)
        return x
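
The 320 input features of the fully connected layer come from tracing the spatial size through the network. The sketch below does that arithmetic in pure Python, assuming 28x28 MNIST inputs, stride-1 convolutions without padding, and 2x2 pooling as in `Net`. `conv_out` and `pool_out` are hypothetical helper names.

```python
def conv_out(n, k):
    """Size after a convolution with stride 1 and no padding (hypothetical helper)."""
    return n - k + 1

def pool_out(n, k):
    """Size after non-overlapping max pooling (hypothetical helper)."""
    return n // k

size = 28                               # MNIST images are 28x28
size = pool_out(conv_out(size, 5), 2)   # conv1: 28 -> 24, pooling: 24 -> 12
size = pool_out(conv_out(size, 5), 2)   # conv2: 12 -> 8,  pooling: 8 -> 4
flat_features = 20 * size * size        # conv2 outputs 20 channels
print(size, flat_features)  # 4 320
```

If the kernel sizes or channel counts change, the first argument of `torch.nn.Linear` has to be recomputed the same way.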

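model = Net()

# "cuda:0" selects the first GPU, "cuda:1" the second; fall back to the CPU if CUDA is unavailable
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Move the model to the device
model.to(device)

# Cross-entropy loss
criterion = torch.nn.CrossEntropyLoss()

# Stochastic gradient descent: each iteration updates the weights from one mini-batch
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)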

# Training: one epoch wrapped in a function
def train(epoch):
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        # Send the inputs and targets to the device at every step
        inputs, target = inputs.to(device), target.to(device)
        optimizer.zero_grad()  # reset the gradients held by the optimizer

        # Forward, backward, update
        outputs = model(inputs)
        loss = criterion(outputs, target)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        # Report the average loss every 300 mini-batches
        if batch_idx % 300 == 299:
            print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0

def test():
    correct = 0
    total = 0
    with torch.no_grad():  # no gradients are needed on the test set
        for data in test_loader:
            inputs, target = data
            # Send the inputs and targets to the device at every step
            inputs, target = inputs.to(device), target.to(device)
            outputs = model(inputs)
            # torch.max returns two values: the maximum and its index
            _, predicted = torch.max(outputs.data, dim=1)
            total += target.size(0)
            correct += (predicted == target).sum().item()
    print('Accuracy on test set: %d %%' % (100 * correct / total))

if __name__ == '__main__':
    for epoch in range(10):
        train(epoch)
        test()
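
The comment in `forward` about leaving the last layer without an activation is worth a small illustration. `torch.nn.CrossEntropyLoss` combines log-softmax with the negative log-likelihood, so it expects raw scores (logits). The sketch below computes the same quantity for one sample in pure Python. `cross_entropy` is a hypothetical helper and the scores are made up for the example.

```python
import math

def cross_entropy(logits, target):
    """Cross-entropy for one sample from raw scores: -log(softmax(logits)[target])."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

logits = [2.0, 1.0, 0.1]               # made-up scores for three classes
loss_right = cross_entropy(logits, 0)  # target is the highest-scoring class
loss_wrong = cross_entropy(logits, 2)  # target is the lowest-scoring class
print(loss_right < loss_wrong)  # True
```

Applying a softmax or ReLU to the output of `self.fc` before the loss would distort the scores the loss is designed to receive.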