[PyTorch] 2 Kaggle Dogs vs. Cats Binary Classification in Practice: Building a CNN

Building a CNN with PyTorch

- 1. Background
- 2. Data description
- 3. Training and testing
- 4. Complete CNN code
- Summary

1. Background

Hands-on binary classification with Kaggle's Dogs vs. Cats dataset

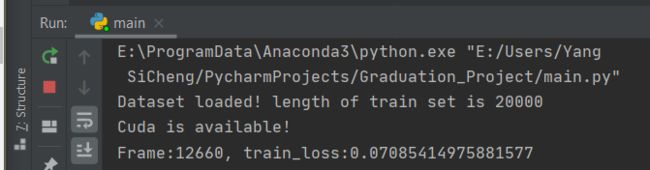

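The images are three-channel RGB. Because the downloaded test set has no labels, we take cat.10000.jpg to cat.12499.jpg and dog.10000.jpg to dog.12499.jpg from the train set and use them as the test set. This leaves 20,000 images for training and 5,000 for testing.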
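A small script can do this split. The sketch below shows one possible way to move the files and is not the author's procedure. The helper name `split_test_set` is hypothetical. It assumes the Kaggle images are in `<root>/train/`. It also assumes the original unlabeled Kaggle test images have already been moved out of `<root>/test/`. That matters because the dataset class later in this article labels every file whose name does not start with `cat` as a dog.

```python
import os
import shutil


def split_test_set(root_dir, first_id=10000, last_id=12499):
    """Hypothetical helper: move cat/dog images with ids in [first_id, last_id]
    from root_dir/train/ to root_dir/test/ and return how many were moved.

    Assumes file names like cat.10000.jpg / dog.10000.jpg (Kaggle layout) and
    that root_dir/test/ does not hold the unlabeled Kaggle test images.
    """
    train_dir = os.path.join(root_dir, 'train')
    test_dir = os.path.join(root_dir, 'test')
    os.makedirs(test_dir, exist_ok=True)
    moved = 0
    for animal in ('cat', 'dog'):
        for i in range(first_id, last_id + 1):
            name = '{}.{}.jpg'.format(animal, i)
            src = os.path.join(train_dir, name)
            if os.path.exists(src):  # silently skip ids that are missing
                shutil.move(src, os.path.join(test_dir, name))
                moved += 1
    return moved
```

With the full Kaggle train folder, `split_test_set(dataset_dir)` should move 2 × 2,500 = 5,000 files.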

PyTorch: creating a trainable dataset with torch.utils.data

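This article is mainly based on that post. I changed how the data is processed and added some steps and comments. It also draws on this post.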

2. Data description

Image 0 as produced by the step `image = np.array(image)[:, :, :3]` (200 × 200 × 3):

```
[[[203 164 87]
  [206 167 90]
  [210 171 94]
  ...
```

Label of image 0:

0
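Here is a minimal sketch of this loading step, assuming Pillow and NumPy are installed. The helper `load_as_array` is hypothetical. Its `convert('RGB')` call is an addition that is not in the original step: without it, a grayscale file gives a 2-D array and `[:, :, :3]` raises `IndexError`. Also note that PIL sizes are `(width, height)`, while the resulting array has shape `(height, width, channels)`.

```python
import numpy as np
from PIL import Image

IMAGE_W, IMAGE_H = 200, 200  # target size used in this article


def load_as_array(image):
    """Hypothetical helper: resize a PIL image and return an (H, W, 3) uint8 array."""
    image = image.convert('RGB')               # assumption: force 3 channels (grayscale, RGBA, palette)
    image = image.resize((IMAGE_W, IMAGE_H))   # PIL takes a (width, height) tuple
    return np.array(image)[:, :, :3]           # same slicing as the article's step


rgba = Image.new('RGBA', (320, 240), (203, 164, 87, 255))
print(load_as_array(rgba).shape)  # (200, 200, 3)
```

After `convert('RGB')`, the `[:, :, :3]` slice does nothing. It is kept only to mirror the line above.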

The tensors returned for image 0 (3 × 200 × 200) and its label:

```
tensor([[[0.7961, 0.8078, 0.8235, ..., 0.9608, 0.9490, 0.9373],
         [0.7961, 0.8078, 0.8235, ..., 0.9608, 0.9529, 0.9412],
         [0.7961, 0.8078, 0.8235, ..., 0.9608, 0.9569, 0.9451],
         ...,

        [[0.6431, 0.6549, 0.6706, ..., 0.8000, 0.7922, 0.7843],
         [0.6431, 0.6549, 0.6706, ..., 0.8039, 0.7961, 0.7882],
         [0.6431, 0.6549, 0.6706, ..., 0.8039, 0.8000, 0.7922],
         ...,

        [[0.3412, 0.3529, 0.3686, ..., 0.4706, 0.4745, 0.4745],
         [0.3412, 0.3529, 0.3686, ..., 0.4784, 0.4784, 0.4784],
         [0.3412, 0.3529, 0.3686, ..., 0.4824, 0.4863, 0.4824],
         ...,

tensor([0])
```

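From this we can see:

ToTensor() converts a PIL image or numpy.ndarray of shape (H, W, C) into a tensor of shape (C, H, W). For 8-bit input, it also scales every value to [0, 1] by dividing by 255. For example, 203 / 255 ≈ 0.7961.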
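To make the conversion concrete, here is a NumPy-only sketch of what `ToTensor()` does to a `uint8` array. `to_tensor_like` is a hypothetical helper for illustration and is not torchvision's implementation. The pixel `[203, 164, 87]` is the first pixel of image 0 shown above. Its scaled values match the first entry of each channel in the printed tensor.

```python
import numpy as np


def to_tensor_like(image_hwc):
    """Hypothetical helper mimicking ToTensor() on a uint8 (H, W, C) array.

    Returns a float32 (C, H, W) array with values scaled to [0, 1].
    """
    chw = np.transpose(image_hwc, (2, 0, 1))  # (H, W, C) -> (C, H, W)
    return chw.astype(np.float32) / 255.0      # 0..255 -> 0.0..1.0


img = np.zeros((200, 200, 3), dtype=np.uint8)
img[0, 0] = [203, 164, 87]             # first pixel of image 0
t = to_tensor_like(img)
print(t.shape)                         # (3, 200, 200)
print(np.round(t[:, 0, 0], 4))         # [0.7961 0.6431 0.3412]
```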

For how nn.Conv2d is used, see this post.
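The `50 * 50 * 16` input size of `fc1` in the network below follows from the output-size formula for `nn.Conv2d` and `max_pool2d` in the PyTorch documentation (with `ceil_mode=False`). The helper `conv_out` is a hypothetical name. This sketch walks one 200-pixel side of the image through the network's two conv/pool stages.

```python
def conv_out(size, kernel, stride=1, padding=0, dilation=1):
    """Hypothetical helper: output length along one spatial dimension for
    nn.Conv2d or max pooling (PyTorch docs formula, ceil_mode=False)."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


h = 200
h = conv_out(h, kernel=3, padding=1)  # conv1 (3x3, padding 1): 200 -> 200
h = conv_out(h, kernel=2, stride=2)   # max_pool2d(x, 2), stride defaults to 2: 200 -> 100
h = conv_out(h, kernel=3, padding=1)  # conv2: 100 -> 100
h = conv_out(h, kernel=2, stride=2)   # max_pool2d(x, 2): 100 -> 50
flat = 16 * h * h                     # conv2 outputs 16 channels
print(h, flat)                        # 50 40000
```

A 3×3 kernel with padding 1 keeps the size unchanged, so only the two poolings shrink it: 200 / 2 / 2 = 50.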

3. Training and testing

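The training output is shown in the screenshot here.

Running the test branch prints:

```
5000 Finished!0.7044
```

So the test accuracy is 0.7044.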
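Since 0.7044 × 5000 = 3522, the model classified 3522 of the 5000 test images correctly. The test loop compares the two outputs of one image at a time. A batched equivalent takes the index of the larger output in each row, as in the NumPy sketch below with a hypothetical `accuracy` helper. The two methods differ only on exact ties: `argmax` picks class 0 (cat), while the original strict comparisons count the sample as wrong.

```python
import numpy as np


def accuracy(outputs, labels):
    """Hypothetical helper: fraction of rows whose larger output matches the label.

    outputs: (N, 2) array of model outputs (probabilities or raw scores);
    labels:  (N,) array with 0 = cat, 1 = dog.
    """
    preds = np.argmax(outputs, axis=1)  # index of the larger of the two outputs
    return float(np.mean(preds == labels))


out = np.array([[0.8892, 0.1108], [0.4544, 0.5456], [0.3, 0.7]])
print(accuracy(out, np.array([0, 1, 0])))  # 2 of 3 rows correct
```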

4. Complete CNN code

```python
import os  # for listing the image folders

import numpy as np  # convert images to NumPy arrays
import torch
import torch.nn as nn
import torch.nn.functional as F
import torchvision.transforms as transforms  # common image transforms
from PIL import Image  # Pillow, used to read the images
from torch.utils.data import DataLoader
# Dataset is an abstract class that custom datasets subclass. Subclasses override
# __len__ (dataset size) and __getitem__ (integer indexing from 0 to len(self) - 1).
from torch.utils.data import Dataset

IMAGE_H = 200  # size of the images fed to the network
IMAGE_W = 200

# Converts an image to a PyTorch tensor and scales its values to [0.0, 1.0]
data_transform = transforms.Compose([
    transforms.ToTensor(),
    # transforms.Normalize(mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5)),  # would map values to [-1, 1]
])


class DogsVSCatsDataset(Dataset):
    def __init__(self, mode, root_dir):
        if mode not in ('train', 'test'):
            raise ValueError('No such mode: {}'.format(mode))
        self.mode = mode
        self.transform = data_transform
        self.image_paths = []
        self.labels = []
        image_dir = os.path.join(root_dir, mode)  # root_dir/train or root_dir/test
        for file in sorted(os.listdir(image_dir)):  # file looks like cat.0.jpg
            self.image_paths.append(os.path.join(image_dir, file))
            # label from the file-name prefix: 0 = cat, 1 = dog
            self.labels.append(0 if file.split('.')[0] == 'cat' else 1)

    def __getitem__(self, item):
        # convert('RGB') guards against grayscale/RGBA files
        image = Image.open(self.image_paths[item]).convert('RGB')
        # resize takes a (width, height) tuple; resize(200, 200) raises
        # "ValueError: Unknown resampling filter (200)"
        image = image.resize((IMAGE_W, IMAGE_H))
        image = np.array(image)[:, :, :3]  # 200 * 200 * 3
        label = self.labels[item]
        # image -> 3 * 200 * 200 float tensor, label -> tensor([label])
        return self.transform(image), torch.LongTensor([label])

    def __len__(self):
        return len(self.image_paths)


# The network takes a 4-D tensor [N, C, H, W]
class Net(nn.Module):  # subclass PyTorch's nn.Module
    def __init__(self):
        super(Net, self).__init__()
        # first conv layer: 3 input channels, 16 output channels, 3x3 kernel, padding 1
        self.conv1 = nn.Conv2d(3, 16, 3, padding=1)
        self.conv2 = nn.Conv2d(16, 16, 3, padding=1)
        # first fully connected layer: 50*50*16 inputs, 128 outputs
        self.fc1 = nn.Linear(50 * 50 * 16, 128)
        self.fc2 = nn.Linear(128, 64)
        self.fc3 = nn.Linear(64, 2)

    def forward(self, x):  # forward pass: computes the network output for input x
        x = self.conv1(x)
        x = F.relu(x)
        x = F.max_pool2d(x, 2)  # n * 16 * 100 * 100
        x = self.conv2(x)
        x = F.relu(x)
        x = F.max_pool2d(x, 2)  # n * 16 * 50 * 50
        # fully connected layers take one flat vector per sample: n * 40000
        x = x.view(x.size(0), -1)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        # softmax maps the 2 outputs into [0.0, 1.0] with sum 1,
        # e.g. tensor([[0.4544, 0.5456]], grad_fn=<SoftmaxBackward>).
        # Note: nn.CrossEntropyLoss applies log-softmax itself and expects raw scores.
        return F.softmax(x, dim=1)


dataset_dir = '... your path/dogs-vs-cats-redux-kernels-edition'
TRAIN = False  # True: train and save model.pth; False: evaluate the saved model

if __name__ == '__main__':
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    model = Net().to(device)

    if TRAIN:
        datafile = DogsVSCatsDataset('train', dataset_dir)
        # batch_size: images per batch; num_workers: number of data-loading subprocesses
        dataloader = DataLoader(datafile, batch_size=10, shuffle=True, num_workers=10)
        print('Dataset loaded! length of train set is {0}'.format(len(datafile)))

        optimizer = torch.optim.Adam(model.parameters(), lr=0.0001)  # learning rate
        criterion = nn.CrossEntropyLoss()

        model.train()
        cnt = 0
        for img, label in dataloader:  # one pass over the training set
            img, label = img.to(device), label.to(device)
            out = model(img)
            # label has shape [10, 1]; squeeze(1) gives shape [10],
            # e.g. tensor([1, 1, 1, 1, 1, 1, 1, 0, 0, 1])
            loss = criterion(out, label.squeeze(1))
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            cnt += 1
            # CrossEntropyLoss already averages over the batch
            print('\rFrame:{0}, train_loss:{1:.4f}'.format(cnt * 10, loss.item()), end='')

        torch.save(model.state_dict(), os.path.join(dataset_dir, 'model.pth'))
    else:
        datafile = DogsVSCatsDataset('test', dataset_dir)
        dataloader = DataLoader(datafile)  # batch_size defaults to 1
        print('Dataset loaded! length of test set is {0}'.format(len(datafile)))

        model.load_state_dict(torch.load(os.path.join(dataset_dir, 'model.pth'), map_location=device))
        model.eval()

        correct_num = 0
        cnt = 0
        with torch.no_grad():  # no gradients needed for evaluation
            for img, label in dataloader:
                img, label = img.to(device), label.to(device)
                out = model(img)  # e.g. tensor([[0.8892, 0.1108]], device='cuda:0')
                # out[0][0] is the cat score, out[0][1] the dog score
                if (out[0][0] > out[0][1] and label[0][0] == 0) or (out[0][0] < out[0][1] and label[0][0] == 1):
                    correct_num += 1
                cnt += 1
                if cnt % 20 == 0:
                    print('\r%d Finished!' % cnt, end='')

        print(correct_num / len(datafile))
```

Summary

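This exercise built a CNN with PyTorch and gave me a working understanding of some convolutional-network concepts and function parameters. It is only a hands-on exercise, so the accuracy is not high. Possible improvements include adding a validation set, training for more epochs (the script above makes a single pass over the training set), changing the loss function, changing the optimizer (the gradient-descent method), and modifying how softmax is used.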
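On the softmax point: `nn.CrossEntropyLoss` expects raw, unnormalized scores (logits) and applies log-softmax internally. `Net.forward`, however, already returns `F.softmax(x, dim=1)`, so softmax is applied twice during training. The NumPy sketch below illustrates the effect. Its helpers `cross_entropy` and `softmax` are hypothetical, written to mirror the loss formula rather than call PyTorch. Because two probabilities differ by at most 1, the two-class loss after the second softmax can never fall below log(1 + e^-1) ≈ 0.313, even for a confident, correct prediction.

```python
import numpy as np


def softmax(x):
    """Hypothetical helper: numerically stable softmax of a 1-D array."""
    e = np.exp(x - np.max(x))
    return e / e.sum()


def cross_entropy(scores, label):
    """Hypothetical helper mirroring nn.CrossEntropyLoss for one sample:
    log-softmax over the scores, then the negative entry of the true class."""
    z = scores - np.max(scores)                # shift for numerical stability
    log_probs = z - np.log(np.sum(np.exp(z)))
    return -log_probs[label]


logits = np.array([4.0, -4.0])                 # confident, correct "cat" prediction (label 0)
print(cross_entropy(logits, 0))                # about 0.0003: loss on raw scores
print(cross_entropy(softmax(logits), 0))       # about 0.313: loss when softmax is applied first
```

A common alternative is to return `x` from `forward`, keep `nn.CrossEntropyLoss`, and apply `F.softmax` only when probabilities are needed at test time. The predicted class (the larger output) stays the same. Whether this improves the 0.7044 accuracy would need to be measured.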

Next up: hands-on RNN and NLP projects.