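```python
import numpy as np
import pandas as pd


def getData2():
    r"""
    Generate random points with numpy, then use pandas to keep only the
    points that satisfy each class condition.

    :return: (G1, G2), two DataFrames with columns 'X' and 'Y';
             G1 holds points with X > 50 and Y > 50,
             G2 holds points with X < 50 and Y < 50.
    """
    df = pd.DataFrame()
    # 100 random integer points; randint's upper bound is exclusive, so values are 1..99
    df['X'] = np.random.randint(1, 100, size=100)
    df['Y'] = np.random.randint(1, 100, size=100)

    # Class 1: upper-right quadrant; class 2: lower-left quadrant.
    # Points in the other two quadrants, or with a coordinate equal to 50, are dropped.
    G1 = df[(df['X'] > 50) & (df['Y'] > 50)]
    G2 = df[(df['X'] < 50) & (df['Y'] < 50)]

    # Renumber the rows 0..n-1
    G1 = G1.reset_index(drop=True)
    G2 = G2.reset_index(drop=True)
    return (G1, G2)
```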
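You can do the same sampling with NumPy boolean masks alone, without building an intermediate DataFrame. This is a minimal sketch under a few assumptions. `make_two_groups` is a hypothetical name. It uses NumPy's `Generator` API (`np.random.default_rng`) instead of the global `np.random.seed`, so it does not reproduce the exact points that `getData2` draws. It also returns plain `(k, 2)` arrays rather than DataFrames. `G1.to_numpy()` converts the original output to the same shape.

```python
import numpy as np


def make_two_groups(n=100, seed=5):
    """Hypothetical helper: sample n integer points with coordinates in 1..99
    and keep the upper-right (G1) and lower-left (G2) quadrants around 50."""
    rng = np.random.default_rng(seed)
    pts = rng.integers(1, 100, size=(n, 2))  # `high` is exclusive -> 1..99
    upper = (pts[:, 0] > 50) & (pts[:, 1] > 50)  # one boolean per row
    lower = (pts[:, 0] < 50) & (pts[:, 1] < 50)
    return pts[upper], pts[lower]


G1, G2 = make_two_groups()
print(G1.shape, G2.shape)
```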

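```python
# Solving the classification problem

import math

import matplotlib.pyplot as plt

# Local module from the same project (LinearRegression.py)
from LinearRegression import *


def calDistance(x, y, w, b):
    r"""
    Compute the distance from the point (x, y) to the line y = w*x + b.

    :param x: x coordinate of the point
    :param y: y coordinate of the point
    :param w: slope of the line
    :param b: intercept of the line
    :return: the perpendicular distance
    """
    x0, y0 = x, y
    if w == 0:
        # Horizontal line: the construction below would divide by zero
        return abs(y0 - b)
    # (x1, y0): where the horizontal through the point meets the line
    # (x0, y1): where the vertical through the point meets the line
    x1 = (y0 - b) / w
    y1 = w * x0 + b
    # Hypotenuse of the right triangle with legs |x0 - x1| and |y0 - y1|
    d0 = math.sqrt((x0 - x1) ** 2 + (y0 - y1) ** 2)
    if d0 == 0:
        # The point lies on the line
        return 0
    # Altitude onto the hypotenuse = product of the legs / hypotenuse
    dis = abs(x0 - x1) * abs(y0 - y1) / d0
    return dis
```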
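The triangle construction in `calDistance` agrees with the textbook point-to-line formula. Write the line as `w*x - y + b = 0`. The distance from `(x0, y0)` is then `|w*x0 - y0 + b| / sqrt(w**2 + 1)`, which also covers `w == 0` without a special case. In the triangle, with `r = |w*x0 - y0 + b|`, the legs are `r/|w|` and `r` and the hypotenuse is `r*sqrt(1 + w**2)/|w|`. Dividing the product of the legs by the hypotenuse therefore gives the same expression. The sketch below checks the two numerically. `point_line_distance` and `triangle_distance` are hypothetical names.

```python
import math


def point_line_distance(x0, y0, w, b):
    """Closed form: distance from (x0, y0) to y = w*x + b."""
    return abs(w * x0 - y0 + b) / math.sqrt(w * w + 1)


def triangle_distance(x0, y0, w, b):
    """The construction used in calDistance (requires w != 0):
    legs |x0 - x1| and |y0 - y1|, distance = product of legs / hypotenuse."""
    x1 = (y0 - b) / w
    y1 = w * x0 + b
    hyp = math.hypot(x0 - x1, y0 - y1)
    return 0.0 if hyp == 0 else abs(x0 - x1) * abs(y0 - y1) / hyp


for x0, y0, w, b in [(1, 0, 1, 0), (60, 70, -6, 99), (10, 20, 0.5, -3)]:
    print(point_line_distance(x0, y0, w, b), triangle_distance(x0, y0, w, b))
```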

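```python
def getSVMLoss(G1, G2, w, b):
    r"""
    Compute the loss of the whole dataset for the line y = w*x + b.

    The loss is hinge-style. Every misclassified point adds its distance to
    the line. The gap between the two classes' smallest distances is also
    added, which pushes the line toward being equally far from the closest
    point of each class.

    :param G1: class-1 samples (pandas DataFrame); column 0 is X, column 1 is Y
    :param G2: class-2 samples (pandas DataFrame); column 0 is X, column 1 is Y
    :param w: slope
    :param b: intercept
    :return: the loss for the current slope and intercept
    """
    total_loss = 0

    # Loss for G1 (these points should lie above the line)
    class1Num = G1.shape[0]
    d1min = 99999
    x_f_1, y_f_1 = 0, 0
    for i in range(class1Num):
        x = G1.iloc[i, 0]
        y = G1.iloc[i, 1]
        d = calDistance(x, y, w, b)
        if (w * x + b) > y:  # point below the line: misclassified, penalize
            total_loss += d
        if d < d1min:  # track the G1 point closest to the line
            x_f_1, y_f_1 = x, y
            d1min = d

    # Loss for G2 (these points should lie below the line)
    class2Num = G2.shape[0]
    d2min = 99999
    x_f_2, y_f_2 = 0, 0
    for i in range(class2Num):
        x = G2.iloc[i, 0]
        y = G2.iloc[i, 1]
        d = calDistance(x, y, w, b)
        if w * x + b < y:  # point above the line: misclassified, penalize
            total_loss += d
        if d < d2min:  # track the G2 point closest to the line
            x_f_2, y_f_2 = x, y
            d2min = d

    # Penalize the difference between the two classes' minimum distances
    total_loss = total_loss + abs(d2min - d1min)
    return total_loss
```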
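You can collapse the two loops in `getSVMLoss` into array operations. This is a minimal sketch with a few assumptions. `svm_loss_vec` is a hypothetical name. `G1` and `G2` are first converted to `(k, 2)` NumPy arrays, for example with `DataFrame.to_numpy()`. Distances use the closed form `|w*x - y + b| / sqrt(w**2 + 1)`, which agrees with `calDistance`. Both groups must be non-empty; the loop version instead falls back to the sentinel 99999 for an empty group.

```python
import numpy as np


def svm_loss_vec(g1, g2, w, b):
    """Hypothetical vectorized version of getSVMLoss.
    g1, g2: (k, 2) arrays of [x, y]; g1 should lie above y = w*x + b, g2 below."""
    def dist(g):
        # Perpendicular distance of every row to the line w*x - y + b = 0
        return np.abs(w * g[:, 0] - g[:, 1] + b) / np.sqrt(w * w + 1)

    d1, d2 = dist(g1), dist(g2)
    wrong1 = w * g1[:, 0] + b > g1[:, 1]  # G1 point below the line
    wrong2 = w * g2[:, 0] + b < g2[:, 1]  # G2 point above the line
    loss = d1[wrong1].sum() + d2[wrong2].sum()
    # Same balance term as the loop version: gap between the closest points
    return float(loss + abs(d2.min() - d1.min()))


g1 = np.array([[60, 60], [70, 80]])
g2 = np.array([[10, 10], [20, 30]])
print(svm_loss_vec(g1, g2, -1, 100))  # both groups correctly separated
```

In this example no point is misclassified. The loss is therefore only the balance term: the closest G1 point is at `20/sqrt(2)` and the closest G2 point at `50/sqrt(2)`, giving `30/sqrt(2)`.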

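```python
def SVMFit(G1, G2):
    r"""
    Fit the line y = w*x + b with a crude finite-difference descent on getSVMLoss.

    :return: the fitted slope w and intercept b
    """
    w_last, b_last = -5, 100  # previous parameters, used for the difference quotient
    w, b = -6, 99             # starting line
    loss_last = 1
    loss = 0
    stop = 10000              # maximum number of iterations
    i = 0
    eta = 1e-4                # learning rate
    count = 0

    while i < stop:
        loss = getSVMLoss(G1, G2, w, b)
        print("{:05d}: w is {:.2f}, b is {:.2f}, loss is {:.2f}".format(i, w, b, loss))
        if loss == 0:
            break
        # Count iterations in which the loss did not rise by 0.1 or more;
        # stop once there have been more than 1000 of them
        if loss - loss_last < 0.1:
            count += 1
            if count > 1000:
                break

        # Estimate each partial derivative as (change in loss) / (change in parameter).
        # Leave a parameter unchanged if it did not move, to avoid dividing by zero.
        dw = w - w_last
        db = b - b_last
        wn = (w - eta * (loss - loss_last) / dw) if dw != 0 else w
        bn = (b - eta * (loss - loss_last) / db) if db != 0 else b

        w_last, w = w, wn
        b_last, b = b, bn
        loss_last = loss
        i += 1
    return w, b
```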
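`SVMFit` estimates each partial derivative from the change between two successive iterates, and `w` and `b` share a single loss difference. A more conventional numerical estimate perturbs one parameter at a time with a central difference. The sketch below swaps in that technique, using the hypothetical names `finite_diff_grad` and `descend`. It runs on a smooth toy quadratic whose minimum is known. `getSVMLoss` is piecewise linear and not smooth everywhere, so on the real data the step `h` and the learning rate `eta` would be assumptions that need tuning.

```python
def finite_diff_grad(loss_fn, w, b, h=1e-3):
    """Central-difference estimate of (dL/dw, dL/db) for a two-parameter loss."""
    dw = (loss_fn(w + h, b) - loss_fn(w - h, b)) / (2 * h)
    db = (loss_fn(w, b + h) - loss_fn(w, b - h)) / (2 * h)
    return dw, db


def descend(loss_fn, w, b, eta=0.1, steps=200, h=1e-3):
    """Plain gradient descent driven by the numerical gradient."""
    for _ in range(steps):
        dw, db = finite_diff_grad(loss_fn, w, b, h)
        w -= eta * dw
        b -= eta * db
    return w, b


def quadratic(w, b):
    """Toy loss with its minimum at w = 2, b = -1."""
    return (w - 2) ** 2 + (b + 1) ** 2


w_opt, b_opt = descend(quadratic, w=-6.0, b=99.0)
print(round(w_opt, 6), round(b_opt, 6))
```

To apply this to the classification problem, you could pass `lambda w, b: getSVMLoss(G1, G2, w, b)` as `loss_fn`.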

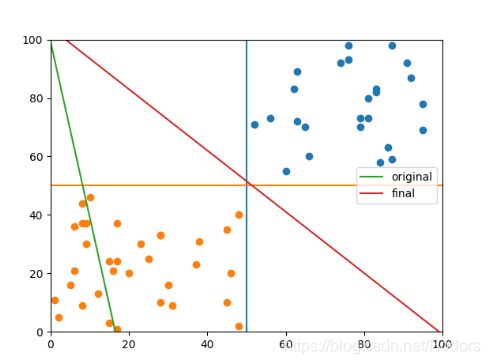

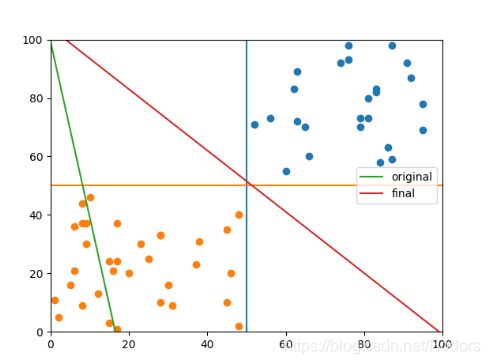

```python
if __name__ == "__main__":
    print("to solve classification problem")
    np.random.seed(5)
    G1, G2 = getData2()

    fig, ax = plt.subplots()
    ax.scatter(G1['X'], G1['Y'], color="C0", label="G1")
    ax.scatter(G2['X'], G2['Y'], color="C1", label="G2")
    # Quadrant boundaries x = 50 and y = 50
    ax.plot(np.array([50, 50]), np.array([0, 100]))
    ax.plot(np.array([0, 100]), np.array([50, 50]))

    # Initial line (same starting values as in SVMFit)
    w, b = -6, 99
    x = np.arange(0, 100, 1)
    y = w * x + b
    ax.plot(x, y, color="C2", label="original")

    # Fitted line
    w_f, b_f = SVMFit(G1, G2)
    y_f = w_f * x + b_f
    ax.plot(x, y_f, color="C3", label="final")

    ax.legend()
    ax.set_xlim(0, 100)
    ax.set_ylim(0, 100)
    plt.show()

    # Sanity check for calDistance(x, y, w, b): the distance from (1, 0) to y = x is about 0.71
    # print("Distance: {:.2f}".format(calDistance(1, 0, 1, 0)))
```
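It helps to check numerically how many points end up on the correct side of the fitted line, rather than judging only from the plot. This is a minimal sketch. `separation_accuracy` is a hypothetical helper that follows the same side convention as `getSVMLoss`: G1 above the line, G2 below. Like `getSVMLoss`, it does not count a point lying exactly on the line as an error.

```python
import numpy as np


def separation_accuracy(g1, g2, w, b):
    """Hypothetical helper: fraction of points on the expected side of y = w*x + b.
    g1, g2: (k, 2) arrays of [x, y]; G1 should satisfy y >= w*x + b, G2 y <= w*x + b."""
    ok1 = g1[:, 1] >= w * g1[:, 0] + b
    ok2 = g2[:, 1] <= w * g2[:, 0] + b
    return (ok1.sum() + ok2.sum()) / (len(g1) + len(g2))


g1 = np.array([[60, 60], [70, 80], [10, 10]])  # last G1 point sits below the line
g2 = np.array([[10, 10], [20, 30]])
print(separation_accuracy(g1, g2, -1, 100))
```

With the DataFrames from `getData2`, you would call it as `separation_accuracy(G1.to_numpy(), G2.to_numpy(), w_f, b_f)`, using the slope and intercept returned by `SVMFit`.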