NNDL Assignment 7: Chapter 5 Exercises (1×1 Convolution Kernels | CNN BP)

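Contents

- Exercises
  - 5-2 Prove that wide convolution is commutative, i.e. $\mathrm{rot180}(W)\,\widetilde{\otimes}\,X=\mathrm{rot180}(X)\,\widetilde{\otimes}\,W$
  - 5-3 Analyze the role of 1×1 convolution kernels in convolutional neural networks
    - 1. Cross-channel feature integration
    - 2. Dimensionality reduction / expansion
    - 3. Adding nonlinearity
    - 4. Cross-channel information interaction (channel transformation)
    - 5. Reducing computation
  - 5-4 For a convolutional layer whose input is a 100×100×256 feature map group, which uses 3×3 kernels and outputs a 100×100×256 feature map group, compute its time and space complexity. If a 1×1 convolution is first used to obtain a 100×100×64 feature map, followed by a 3×3 convolution that produces a 100×100×256 feature map group, compute the time and space complexity.
  - 5-7 Ignoring activation functions, show that the forward computation and the backpropagation of a convolutional layer are related by a transpose
    - 1. Forward propagation of the convolution
    - 2. Backpropagation of the convolution
- Deriving the CNN backpropagation algorithm
- References
- Personal summary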

Exercises

5-2 Prove that wide convolution is commutative, i.e. $\mathrm{rot180}(W)\,\widetilde{\otimes}\,X=\mathrm{rot180}(X)\,\widetilde{\otimes}\,W$.

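If the lengths of the two signals are not restricted, true (flipped) convolution is commutative: $x*y=y*x$. The cross-correlation used as "convolution" in CNNs also has a certain kind of "commutativity".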
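A quick 1-D illustration, assuming NumPy is available (the signal values are arbitrary): `np.convolve` computes true, flipped convolution, while `np.correlate` in `'full'` mode computes cross-correlation, for which swapping the operands only reverses the output (for real inputs).

```python
import numpy as np

# Two arbitrary real 1-D signals of different lengths (illustrative values only).
x = np.array([1.0, 2.0, 3.0])
y = np.array([0.0, 1.0, 0.5, -2.0])

# True (flipped) convolution: the order of the operands does not matter.
conv_xy = np.convolve(x, y)  # default mode is 'full'
conv_yx = np.convolve(y, x)

# Cross-correlation: swapping the operands reverses the output for real inputs.
corr_xy = np.correlate(x, y, mode="full")
corr_yx = np.correlate(y, x, mode="full")

print(np.allclose(conv_xy, conv_yx))        # convolution commutes
print(np.allclose(corr_xy, corr_yx[::-1]))  # correlation commutes only up to a reversal
```

This reversal is exactly why the 2-D identity below needs the 180° rotations.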

We first introduce the definition of wide convolution. Given a two-dimensional image $X\in\mathbb{R}^{M\times N}$ and a two-dimensional kernel $W\in\mathbb{R}^{U\times V}$, zero-pad $X$ with $U-1$ rows of zeros at the top and bottom and $V-1$ columns of zeros at the left and right, obtaining the fully padded image $\widetilde{X}\in\mathbb{R}^{(M+2U-2)\times(N+2V-2)}$. The wide convolution of image $X$ and kernel $W$ is defined as

$$W\,\widetilde{\otimes}\,X\triangleq W\otimes\widetilde{X},$$

where $\widetilde{\otimes}$ denotes wide convolution and $\otimes$ is the (unflipped) cross-correlation used as convolution in CNNs.
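The definition translates almost literally into code. Below is a minimal NumPy sketch, assuming $\otimes$ is the unflipped cross-correlation; `corr2d` and `wide_conv` are hypothetical helper names (not library functions), and the loops favor clarity over speed.

```python
import numpy as np

def corr2d(W, X):
    """Valid 2-D cross-correlation W ⊗ X: no kernel flip, no padding (hypothetical helper)."""
    U, V = W.shape
    M, N = X.shape
    out = np.zeros((M - U + 1, N - V + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(W * X[i:i + U, j:j + V])
    return out

def wide_conv(W, X):
    """Wide convolution: pad X with U-1 zero rows and V-1 zero columns on each side, then cross-correlate."""
    U, V = W.shape
    X_tilde = np.pad(X, ((U - 1, U - 1), (V - 1, V - 1)))  # constant zero padding by default
    return corr2d(W, X_tilde)

X = np.arange(1.0, 10.0).reshape(3, 3)   # M = N = 3
W = np.array([[1.0, 2.0], [3.0, 4.0]])   # U = V = 2
Y = wide_conv(W, X)
print(Y.shape)  # (M + U - 1, N + V - 1)
```

The output is larger than the input: a wide convolution produces $(M+U-1)\times(N+V-1)$ values, one for every partial overlap of kernel and image.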

When the input and the kernel have fixed lengths, their convolution is still commutative:

$$\mathrm{rot180}(W)\,\widetilde{\otimes}\,X=\mathrm{rot180}(X)\,\widetilde{\otimes}\,W\quad\text{or equivalently}\quad\mathrm{rot180}(W)\otimes\widetilde{X}=\mathrm{rot180}(X)\otimes\widetilde{W},$$

where $\mathrm{rot180}(\cdot)$ denotes rotation by 180 degrees and $\widetilde{W}$ is $W$ fully padded with $M-1$ zero rows and $N-1$ zero columns on each side.

The proof is as follows.

First, take a two-dimensional image $X\in\mathbb{R}^{M\times N}$ and a two-dimensional kernel $W\in\mathbb{R}^{U\times V}$.

To keep the proof simple, assume $M=N=3$ and $U=V=2$:

$$W=\begin{pmatrix}a_1&b_1\\c_1&d_1\end{pmatrix},\qquad X=\begin{pmatrix}a_2&b_2&c_2\\d_2&e_2&f_2\\g_2&h_2&i_2\end{pmatrix}$$

After full padding ($W$ with $M-1=2$ zeros and $X$ with $U-1=1$ zero on every side), $W$ and $X$ become:

$$\widetilde{W}=\begin{pmatrix}0&0&0&0&0&0\\0&0&0&0&0&0\\0&0&a_1&b_1&0&0\\0&0&c_1&d_1&0&0\\0&0&0&0&0&0\\0&0&0&0&0&0\end{pmatrix},\qquad \widetilde{X}=\begin{pmatrix}0&0&0&0&0\\0&a_2&b_2&c_2&0\\0&d_2&e_2&f_2&0\\0&g_2&h_2&i_2&0\\0&0&0&0&0\end{pmatrix}$$

Rotating $W$ and $X$ by 180° gives:

$$\mathrm{rot180}(W)=\begin{pmatrix}d_1&c_1\\b_1&a_1\end{pmatrix},\qquad \mathrm{rot180}(X)=\begin{pmatrix}i_2&h_2&g_2\\f_2&e_2&d_2\\c_2&b_2&a_2\end{pmatrix}$$

Now compute both sides.

Left-hand side:

$$\mathrm{rot180}(W)\,\widetilde{\otimes}\,X=\mathrm{rot180}(W)\otimes\widetilde{X}=\begin{pmatrix}d_1&c_1\\b_1&a_1\end{pmatrix}\otimes\begin{pmatrix}0&0&0&0&0\\0&a_2&b_2&c_2&0\\0&d_2&e_2&f_2&0\\0&g_2&h_2&i_2&0\\0&0&0&0&0\end{pmatrix}=\begin{pmatrix}a_1a_2&b_1a_2+a_1b_2&b_1b_2+a_1c_2&b_1c_2\\c_1a_2+a_1d_2&d_1a_2+c_1b_2+b_1d_2+a_1e_2&d_1b_2+c_1c_2+b_1e_2+a_1f_2&d_1c_2+b_1f_2\\c_1d_2+a_1g_2&d_1d_2+c_1e_2+b_1g_2+a_1h_2&d_1e_2+c_1f_2+b_1h_2+a_1i_2&d_1f_2+b_1i_2\\c_1g_2&d_1g_2+c_1h_2&d_1h_2+c_1i_2&d_1i_2\end{pmatrix}$$

Right-hand side:

$$\mathrm{rot180}(X)\,\widetilde{\otimes}\,W=\mathrm{rot180}(X)\otimes\widetilde{W}=\begin{pmatrix}i_2&h_2&g_2\\f_2&e_2&d_2\\c_2&b_2&a_2\end{pmatrix}\otimes\begin{pmatrix}0&0&0&0&0&0\\0&0&0&0&0&0\\0&0&a_1&b_1&0&0\\0&0&c_1&d_1&0&0\\0&0&0&0&0&0\\0&0&0&0&0&0\end{pmatrix}=\begin{pmatrix}a_1a_2&b_1a_2+a_1b_2&b_1b_2+a_1c_2&b_1c_2\\c_1a_2+a_1d_2&d_1a_2+c_1b_2+b_1d_2+a_1e_2&d_1b_2+c_1c_2+b_1e_2+a_1f_2&d_1c_2+b_1f_2\\c_1d_2+a_1g_2&d_1d_2+c_1e_2+b_1g_2+a_1h_2&d_1e_2+c_1f_2+b_1h_2+a_1i_2&d_1f_2+b_1i_2\\c_1g_2&d_1g_2+c_1h_2&d_1h_2+c_1i_2&d_1i_2\end{pmatrix}$$

The left-hand and right-hand sides agree entry by entry, so $\mathrm{rot180}(W)\,\widetilde{\otimes}\,X=\mathrm{rot180}(X)\,\widetilde{\otimes}\,W$ holds, i.e. wide convolution is commutative.
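The hand computation covers one size. A quick numerical check with random matrices suggests the identity also holds for other sizes, including non-square ones. This is a sketch assuming NumPy; `corr2d`, `wide_conv` and `rot180` are illustrative helper names, not library functions.

```python
import numpy as np

def corr2d(W, X):
    # Valid cross-correlation, the same ⊗ as in the derivation above.
    U, V = W.shape
    M, N = X.shape
    out = np.zeros((M - U + 1, N - V + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(W * X[i:i + U, j:j + V])
    return out

def wide_conv(W, X):
    # Pad X with (rows of W) - 1 and (cols of W) - 1 zeros on every side, then cross-correlate.
    U, V = W.shape
    return corr2d(W, np.pad(X, ((U - 1, U - 1), (V - 1, V - 1))))

def rot180(A):
    return np.rot90(A, 2)  # rotate by 180 degrees

rng = np.random.default_rng(0)
checks = []
for (M, N), (U, V) in [((3, 3), (2, 2)), ((5, 4), (3, 2))]:
    X = rng.standard_normal((M, N))
    W = rng.standard_normal((U, V))
    lhs = wide_conv(rot180(W), X)   # rot180(W) wide-conv X
    rhs = wide_conv(rot180(X), W)   # rot180(X) wide-conv W
    checks.append(np.allclose(lhs, rhs))
print(checks)
```

Both sides equal the full (flipped) convolution of $W$ and $X$, which is why the identity is not tied to the 3×3 / 2×2 example.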

5-3 Analyze the role of 1×1 convolution kernels in convolutional neural networks.

1. Cross-channel feature integration

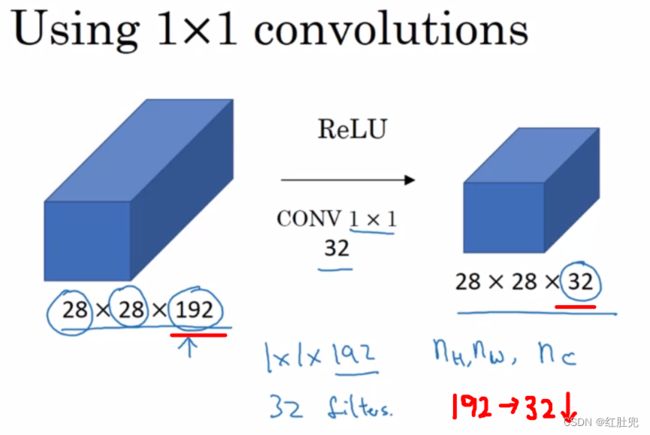

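If the current layer and the next layer each have only one channel, a 1×1 kernel does little (it merely rescales the input). But if they have m and n channels respectively, a 1×1 kernel aggregates information across channels, which in turn lets it reduce (or increase) the number of channels and thus cut the number of parameters.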

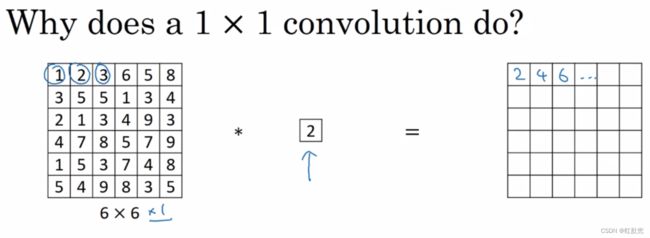

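Here is an example to build intuition for 1×1 convolution. The input is a 6×6×1 matrix and the 1×1 convolution has shape 1×1×1, namely the single element 2. The output is also a 6×6×1 matrix, each of whose elements is the corresponding input element multiplied by 2.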

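This case does not show what makes 1×1 convolution special, because the input has only one channel, so the 1×1 kernel also has only one channel. With a single input channel, a 1×1 convolution can only rescale the values or, with several kernels, increase the number of channels; it cannot reduce it. That is not where the appeal of 1×1 convolution lies.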
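The single-channel example amounts to multiplying every element by 2. A minimal NumPy sketch, assuming a channels-last layout (H, W, C) and representing the kernel as a hypothetical (C_in, C_out) weight matrix rather than any framework's API:

```python
import numpy as np

# A 6x6 input with a single channel, stored as (height, width, channels).
X = np.arange(36, dtype=float).reshape(6, 6, 1)

# A single 1x1x1 kernel whose only weight is 2 (no bias), as a (C_in, C_out) matrix.
K = np.array([[2.0]])

# A 1x1 convolution is a per-pixel matrix product over the channel axis.
Y = X @ K   # (6, 6, 1) @ (1, 1) -> (6, 6, 1)
print(Y.shape)
print(np.array_equal(Y, 2 * X))
```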

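Now consider an example where it really matters. When the input is 6×6×32, the 1×1 convolution has shape 1×1×32; with a single 1×1 kernel the output is 6×6×1. This reveals the essential role of 1×1 convolution: dimensionality reduction. Whenever the number of 1×1 kernels is smaller than the number of input channels, the channel count is reduced.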

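Note: in the figure, the yellow cube in the second image from the left in the second row is the 1×1×32 kernel, and the yellow cube in the first image from the left in the second row is the region of the input that is multiplied element-wise with that kernel and summed.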
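The 6×6×32 case can be sketched in NumPy as follows (channels-last layout assumed; random weights stand in for learned ones, and the 16-kernel variant is a hypothetical extra case added for illustration):

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.standard_normal((6, 6, 32))   # 6x6 input with 32 channels (H, W, C)

# One 1x1x32 kernel: 32 weights, one per input channel.
k = rng.standard_normal(32)
Y1 = np.einsum("hwc,c->hw", X, k)[..., None]   # -> (6, 6, 1)

# The value at each pixel is the weighted sum of that pixel's 32 channel values.
manual = sum(X[2, 3, c] * k[c] for c in range(32))
print(Y1.shape, np.isclose(Y1[2, 3, 0], manual))

# Hypothetical: 16 kernels (fewer than 32 input channels) shrink the channels 32 -> 16.
K = rng.standard_normal((32, 16))
Y16 = np.einsum("hwc,cd->hwd", X, K)
print(Y16.shape)
```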

In fact, a 1×1 convolution can be viewed as a fully connected layer.

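Suppose the first layer has 6 neurons, a1–a6, which are fully connected to 5 neurons, b1–b5. The figure only shows the connections from a1–a6 to b1. In a fully connected layer, b1 is simply a weighted sum of the 6 preceding neurons with weights w1–w6. The correspondence is now clear: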

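The 6 neurons of the first layer correspond to the number of input channels, 6, and the 5 neurons of the second layer correspond to the number of channels after the 1×1 convolution, 5. The weights w1–w6 are the coefficients of one kernel; computing b2–b5 requires 4 more kernels of the same size.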

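This fully connected analogy is not entirely rigorous, because a channel of an image differs from a neuron: a channel is a 2D matrix, while a neuron is a single number. Yet even for a 2D matrix (which can be seen as many neurons), each input/output channel pair still needs only one parameter (the 1×1 kernel weight), thanks to weight sharing.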

Note: a 1×1 convolution generally changes only the number of output channels, not the output width or height.
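The fully connected view can be checked directly. In this NumPy sketch (channels-last layout and random weights are assumptions), the same 6×5 weight matrix is applied at every pixel, so flattening the pixels into rows and applying one dense layer gives the same result:

```python
import numpy as np

rng = np.random.default_rng(1)
H, Wd, C_in, C_out = 4, 4, 6, 5
X = rng.standard_normal((H, Wd, C_in))   # 6 input channels (a1..a6 at each pixel)
K = rng.standard_normal((C_in, C_out))   # column d holds the weights of kernel d (w1..w6 for b1)

# 1x1 convolution: the same 6x5 weight matrix is applied at every pixel (weight sharing).
Y_conv = np.einsum("hwc,cd->hwd", X, K)

# Equivalent view: flatten pixels into rows and apply one fully connected layer.
Y_fc = (X.reshape(-1, C_in) @ K).reshape(H, Wd, C_out)

print(np.allclose(Y_conv, Y_fc))   # same result
print(Y_conv.shape)                # height and width unchanged, channels 6 -> 5
# b1 at pixel (0, 0) is the weighted sum of a1..a6 with weights w1..w6 = K[:, 0]
print(np.isclose(Y_conv[0, 0, 0], X[0, 0] @ K[:, 0]))
print(K.size)                      # 30 parameters, independent of H and W
```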

2. Dimensionality reduction / expansion

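Since a 1×1 convolution does not change height and width, the most immediate effect of changing the number of channels is to increase or decrease the amount of data. Other articles and blogs call this dimensionality expansion or reduction. In my view the number of dimensions does not actually change; only the size of one of them, the channels in height × width × channels, does.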

3. Adding nonlinearity

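A 1×1 kernel can add a great deal of nonlinearity (through the nonlinear activation that follows it) while keeping the feature map size unchanged, i.e. without losing resolution, which makes it possible to build very deep networks.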

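Remark: each filter yields one feature map after convolution. Different filters (different weights and biases) yield different feature maps, extract different features, and correspond to different specialized neurons.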
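Without an activation, two stacked 1×1 convolutions collapse into one (their weight matrices simply multiply); an activation in between breaks that collapse, which is where the extra nonlinearity comes from. A tiny hand-picked NumPy example, assuming ReLU as the activation and values chosen only for illustration (`conv1x1` and `relu` are hypothetical helpers):

```python
import numpy as np

def conv1x1(X, K):
    """1x1 convolution on channels-last X of shape (H, W, C_in) with K of shape (C_in, C_out)."""
    return np.einsum("hwc,cd->hwd", X, K)

def relu(Z):
    return np.maximum(Z, 0.0)

# One pixel with two channels, values 1 and -1 (a 1x1 "image").
X = np.array([[[1.0, -1.0]]])
K1 = np.eye(2)                 # first 1x1 conv: 2 -> 2 channels (identity)
K2 = np.array([[1.0], [1.0]])  # second 1x1 conv: 2 -> 1 channel (sum)

linear = conv1x1(conv1x1(X, K1), K2)           # no activation in between
collapsed = conv1x1(X, K1 @ K2)                # a single equivalent 1x1 conv
nonlinear = conv1x1(relu(conv1x1(X, K1)), K2)  # ReLU in between
print(linear.ravel(), collapsed.ravel(), nonlinear.ravel())

# The spatial size is preserved; only the channel count changes (3 -> 4 here).
rng = np.random.default_rng(2)
Z = relu(conv1x1(rng.standard_normal((8, 8, 3)), rng.standard_normal((3, 4))))
print(Z.shape)
```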

4. Cross-channel information interaction (channel transformation)

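Example: using 1×1 kernels to reduce or increase the number of channels is really a linear recombination of information across channels. If a 3×3 convolution with 64 output channels is followed by a 1×1 convolution with 28 output channels (with no activation in between), the result is equivalent to a single 3×3 convolution with 28 output channels: the original 64 channels are linearly combined across channels into 28 channels. This is the information interaction between channels.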

Note: the linear combination happens only along the channel dimension; along W and H the same weights are applied as a sliding window.

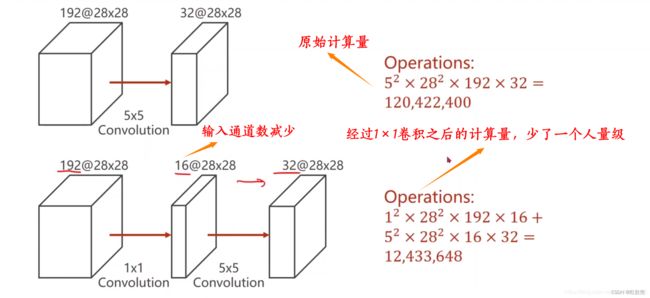
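The 64 → 28 example can be checked numerically by folding the 1×1 weights into the 3×3 kernel. This is a sketch with illustrative sizes (an 8×8×3 input, random weights), assuming NumPy ≥ 1.20 for `sliding_window_view`; `conv2d` is a hypothetical helper implementing valid cross-correlation:

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv2d(X, K):
    """Valid cross-correlation. X: (H, W, C_in); K: (kh, kw, C_in, C_out) -> (H-kh+1, W-kw+1, C_out)."""
    kh, kw = K.shape[:2]
    patches = sliding_window_view(X, (kh, kw), axis=(0, 1))  # (H', W', C_in, kh, kw)
    return np.einsum("hwcij,ijcd->hwd", patches, K)

rng = np.random.default_rng(3)
X = rng.standard_normal((8, 8, 3))        # input: 8x8, 3 channels (illustrative sizes)
K3 = rng.standard_normal((3, 3, 3, 64))   # 3x3 conv, 64 output channels
K1 = rng.standard_normal((64, 28))        # 1x1 conv, 64 -> 28 channels

two_step = np.einsum("hwc,cd->hwd", conv2d(X, K3), K1)  # 3x3 then 1x1, no activation
K_merged = np.einsum("ijcm,md->ijcd", K3, K1)           # fold the 1x1 weights into the 3x3 kernel
one_step = conv2d(X, K_merged)                          # a single 3x3 conv with 28 outputs

print(two_step.shape, K_merged.shape, np.allclose(two_step, one_step))
```

The equivalence only holds because nothing nonlinear sits between the two layers; inserting an activation would break it.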

5. Reducing computation

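5-4 For a convolutional layer whose input is a 100×100×256 feature map group, which uses 3×3 kernels and outputs a 100×100×256 feature map group, compute its time and space complexity. If a 1×1 convolution is first used to obtain a 100×100×64 feature map, followed by a 3×3 convolution that produces a 100×100×256 feature map group, compute the time and space complexity.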

Time complexity: the number of operations (multiply-accumulates) the model performs.

Formula: $\mathrm{Time}\sim O(M^2\cdot K^2\cdot C_{in}\cdot C_{out})$

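Notes:

- $M$: side length of the output feature map (input and kernel are assumed to be square).

- $K$: side length of the kernel.

- $C_{in}$: number of input channels.

- $C_{out}$: number of output channels.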
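The formula counts one multiply-accumulate per (output channel, output position, input channel, kernel tap). A brute-force counter in plain Python agrees with it; this is a sketch assuming the output size $M$ is given and bias additions are not counted (`count_mults` and `time_formula` are illustrative names):

```python
def count_mults(M, K, C_in, C_out):
    """Count the multiplications of a direct convolution producing an M x M x C_out output."""
    count = 0
    for _out_ch in range(C_out):            # each output channel (one kernel)
        for _i in range(M):                 # each output row
            for _j in range(M):             # each output column
                for _in_ch in range(C_in):  # each input channel
                    for _u in range(K):     # kernel rows
                        for _v in range(K): # kernel columns
                            count += 1      # one multiply-accumulate
    return count

def time_formula(M, K, C_in, C_out):
    return M**2 * K**2 * C_in * C_out

for args in [(4, 3, 2, 5), (5, 1, 8, 3)]:
    print(args, count_mults(*args), time_formula(*args))
```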

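Space complexity: the storage the layer needs, counting both its parameters ($K^2\cdot C_{in}\cdot C_{out}$ weights) and its output feature maps ($M^2\cdot C_{out}$ values).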

Formula: $\mathrm{Space}\sim O(K^2\cdot C_{in}\cdot C_{out}+M^2\cdot C_{out})$

(1)

- Time complexity: 256×100×100×256×3×3 = 5,898,240,000

- Space complexity: weights 3×3×256×256 = 589,824; output feature maps 256×100×100 = 2,560,000; total 3,149,824

(2)

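- Time complexity: 64×100×100×256 + 256×100×100×64×3×3 = 1,638,400,000, i.e. 1/3.6 of case (1)

- Space complexity: weights 1×1×256×64 + 3×3×64×256 = 163,840; output feature maps 64×100×100 + 256×100×100 = 3,200,000; total 3,363,840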
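The arithmetic for both settings can be reproduced with the two formulas above. A small sketch; `conv_cost` is a hypothetical helper and biases are ignored:

```python
def conv_cost(M, K, C_in, C_out):
    """Return (mults, params, feature_map) for one conv layer with output M x M x C_out and kernel K x K."""
    mults = M**2 * K**2 * C_in * C_out   # multiply-accumulates
    params = K**2 * C_in * C_out         # weights (bias ignored)
    feature_map = M**2 * C_out           # values in the output feature maps
    return mults, params, feature_map

# (1) a single 3x3 conv: 100x100x256 -> 100x100x256
t1, p1, f1 = conv_cost(100, 3, 256, 256)

# (2) 1x1 conv down to 64 channels, then 3x3 conv back up to 256 channels
a = conv_cost(100, 1, 256, 64)
b = conv_cost(100, 3, 64, 256)
t2, p2, f2 = a[0] + b[0], a[1] + b[1], a[2] + b[2]

print(f"(1) time={t1:,} params={p1:,} feature maps={f1:,}")
print(f"(2) time={t2:,} params={p2:,} feature maps={f2:,}")
print(f"speed-up: {t1 / t2:.1f}x")
```

The 1×1 bottleneck trades a slightly larger feature-map footprint for far fewer weights and multiply-accumulates.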

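5-7 Ignoring activation functions, show that the forward computation and the backpropagation of a convolutional layer are related by a transpose.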

Take a 3×3 input, a 2×2 kernel and a 2×2 output:

$$X=\begin{pmatrix}x_{11}&x_{12}&x_{13}\\x_{21}&x_{22}&x_{23}\\x_{31}&x_{32}&x_{33}\end{pmatrix}$$

$$W=\begin{pmatrix}w_{11}&w_{12}\\w_{21}&w_{22}\end{pmatrix}$$

$$Y=\begin{pmatrix}y_{11}&y_{12}\\y_{21}&y_{22}\end{pmatrix}$$

1. Forward propagation of the convolution

(1) Convolution:

$$\begin{pmatrix}x_{11}&x_{12}&x_{13}\\x_{21}&x_{22}&x_{23}\\x_{31}&x_{32}&x_{33}\end{pmatrix}\otimes\begin{pmatrix}w_{11}&w_{12}\\w_{21}&w_{22}\end{pmatrix}=\begin{pmatrix}y_{11}&y_{12}\\y_{21}&y_{22}\end{pmatrix}$$

where

$$\begin{aligned}
y_{11}&=x_{11}w_{11}+x_{12}w_{12}+x_{21}w_{21}+x_{22}w_{22}\\
y_{12}&=x_{12}w_{11}+x_{13}w_{12}+x_{22}w_{21}+x_{23}w_{22}\\
y_{21}&=x_{21}w_{11}+x_{22}w_{12}+x_{31}w_{21}+x_{32}w_{22}\\
y_{22}&=x_{22}w_{11}+x_{23}w_{12}+x_{32}w_{21}+x_{33}w_{22}
\end{aligned}$$

Flattening $X$ row by row into a $9\times1$ vector and $Y$ into a $4\times1$ vector gives:

$$X=\begin{pmatrix}x_{11}\\x_{12}\\x_{13}\\x_{21}\\x_{22}\\x_{23}\\x_{31}\\x_{32}\\x_{33}\end{pmatrix},\qquad Y=\begin{pmatrix}y_{11}\\y_{12}\\y_{21}\\y_{22}\end{pmatrix}$$

Suppose the convolution can be written as the matrix-vector product $CX=Y$.

Then it is easy to see that

$$C=\begin{pmatrix}w_{11}&w_{12}&0&w_{21}&w_{22}&0&0&0&0\\0&w_{11}&w_{12}&0&w_{21}&w_{22}&0&0&0\\0&0&0&w_{11}&w_{12}&0&w_{21}&w_{22}&0\\0&0&0&0&w_{11}&w_{12}&0&w_{21}&w_{22}\end{pmatrix}$$

Writing $C$ column by column as $(C_1,C_2,\dots,C_9)$:

$$C=(C_1,C_2,\dots,C_9),\qquad C_1=\begin{pmatrix}w_{11}\\0\\0\\0\end{pmatrix},\ C_2=\begin{pmatrix}w_{12}\\w_{11}\\0\\0\end{pmatrix},\ \dots,\ C_9=\begin{pmatrix}0\\0\\0\\w_{22}\end{pmatrix}$$
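The matrix $C$ can be built mechanically: each row is the kernel placed at one output position, flattened row-major. A NumPy sketch with illustrative values ($w_{11},\dots,w_{22}=1,2,3,4$ and $x_{11},\dots,x_{33}=1,\dots,9$); `conv_matrix` is a hypothetical helper:

```python
import numpy as np

def conv_matrix(W, in_shape):
    """Build C so that C @ X.ravel() equals the valid cross-correlation of X with W, flattened row-major."""
    U, V = W.shape
    M, N = in_shape
    out_h, out_w = M - U + 1, N - V + 1
    C = np.zeros((out_h * out_w, M * N))
    for i in range(out_h):
        for j in range(out_w):
            row = i * out_w + j  # index of y_{i+1, j+1} in the flattened Y
            for u in range(U):
                for v in range(V):
                    # x_{i+u+1, j+v+1} is multiplied by w_{u+1, v+1}
                    C[row, (i + u) * N + (j + v)] = W[u, v]
    return C

W = np.array([[1.0, 2.0], [3.0, 4.0]])   # w11, w12, w21, w22
X = np.arange(1.0, 10.0).reshape(3, 3)   # x11 ... x33
C = conv_matrix(W, X.shape)

# Direct evaluation of y11, y12, y21, y22 from the formulas above.
Y_direct = np.array([[np.sum(W * X[i:i + 2, j:j + 2]) for j in range(2)] for i in range(2)])

print(C)
print(np.allclose(C @ X.ravel(), Y_direct.ravel()))
```

Column 5 of `C` (index 4, the column for $x_{22}$) holds $(w_{22},w_{21},w_{12},w_{11})^T$, matching the fact that $x_{22}$ appears in all four outputs.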

(2) Loss function:

Define the loss $L=\mathrm{loss}(y_{11},y_{12},y_{21},y_{22})$.

The path $X\rightarrow Y\rightarrow L$ is the forward propagation of the convolution; the bias $b$ and the activation function after the convolution are ignored here.

2. Backpropagation of the convolution

(1) Gradient with respect to $X$

$$\frac{\partial L}{\partial X}=\begin{pmatrix}\frac{\partial L}{\partial x_{11}}&\frac{\partial L}{\partial x_{12}}&\frac{\partial L}{\partial x_{13}}\\\frac{\partial L}{\partial x_{21}}&\frac{\partial L}{\partial x_{22}}&\frac{\partial L}{\partial x_{23}}\\\frac{\partial L}{\partial x_{31}}&\frac{\partial L}{\partial x_{32}}&\frac{\partial L}{\partial x_{33}}\end{pmatrix}$$

The gradient of each entry is:

$$\begin{aligned}
\frac{\partial L}{\partial x_{11}}&=\frac{\partial L}{\partial y_{11}}\frac{\partial y_{11}}{\partial x_{11}}+\frac{\partial L}{\partial y_{12}}\frac{\partial y_{12}}{\partial x_{11}}+\frac{\partial L}{\partial y_{21}}\frac{\partial y_{21}}{\partial x_{11}}+\frac{\partial L}{\partial y_{22}}\frac{\partial y_{22}}{\partial x_{11}}\\
\frac{\partial L}{\partial x_{12}}&=\frac{\partial L}{\partial y_{11}}\frac{\partial y_{11}}{\partial x_{12}}+\frac{\partial L}{\partial y_{12}}\frac{\partial y_{12}}{\partial x_{12}}+\frac{\partial L}{\partial y_{21}}\frac{\partial y_{21}}{\partial x_{12}}+\frac{\partial L}{\partial y_{22}}\frac{\partial y_{22}}{\partial x_{12}}\\
\frac{\partial L}{\partial x_{13}}&=\frac{\partial L}{\partial y_{11}}\frac{\partial y_{11}}{\partial x_{13}}+\frac{\partial L}{\partial y_{12}}\frac{\partial y_{12}}{\partial x_{13}}+\frac{\partial L}{\partial y_{21}}\frac{\partial y_{21}}{\partial x_{13}}+\frac{\partial L}{\partial y_{22}}\frac{\partial y_{22}}{\partial x_{13}}\\
\frac{\partial L}{\partial x_{21}}&=\frac{\partial L}{\partial y_{11}}\frac{\partial y_{11}}{\partial x_{21}}+\frac{\partial L}{\partial y_{12}}\frac{\partial y_{12}}{\partial x_{21}}+\frac{\partial L}{\partial y_{21}}\frac{\partial y_{21}}{\partial x_{21}}+\frac{\partial L}{\partial y_{22}}\frac{\partial y_{22}}{\partial x_{21}}\\
\frac{\partial L}{\partial x_{22}}&=\frac{\partial L}{\partial y_{11}}\frac{\partial y_{11}}{\partial x_{22}}+\frac{\partial L}{\partial y_{12}}\frac{\partial y_{12}}{\partial x_{22}}+\frac{\partial L}{\partial y_{21}}\frac{\partial y_{21}}{\partial x_{22}}+\frac{\partial L}{\partial y_{22}}\frac{\partial y_{22}}{\partial x_{22}}\\
\frac{\partial L}{\partial x_{23}}&=\frac{\partial L}{\partial y_{11}}\frac{\partial y_{11}}{\partial x_{23}}+\frac{\partial L}{\partial y_{12}}\frac{\partial y_{12}}{\partial x_{23}}+\frac{\partial L}{\partial y_{21}}\frac{\partial y_{21}}{\partial x_{23}}+\frac{\partial L}{\partial y_{22}}\frac{\partial y_{22}}{\partial x_{23}}\\
\frac{\partial L}{\partial x_{31}}&=\frac{\partial L}{\partial y_{11}}\frac{\partial y_{11}}{\partial x_{31}}+\frac{\partial L}{\partial y_{12}}\frac{\partial y_{12}}{\partial x_{31}}+\frac{\partial L}{\partial y_{21}}\frac{\partial y_{21}}{\partial x_{31}}+\frac{\partial L}{\partial y_{22}}\frac{\partial y_{22}}{\partial x_{31}}\\
\frac{\partial L}{\partial x_{32}}&=\frac{\partial L}{\partial y_{11}}\frac{\partial y_{11}}{\partial x_{32}}+\frac{\partial L}{\partial y_{12}}\frac{\partial y_{12}}{\partial x_{32}}+\frac{\partial L}{\partial y_{21}}\frac{\partial y_{21}}{\partial x_{32}}+\frac{\partial L}{\partial y_{22}}\frac{\partial y_{22}}{\partial x_{32}}\\
\frac{\partial L}{\partial x_{33}}&=\frac{\partial L}{\partial y_{11}}\frac{\partial y_{11}}{\partial x_{33}}+\frac{\partial L}{\partial y_{12}}\frac{\partial y_{12}}{\partial x_{33}}+\frac{\partial L}{\partial y_{21}}\frac{\partial y_{21}}{\partial x_{33}}+\frac{\partial L}{\partial y_{22}}\frac{\partial y_{22}}{\partial x_{33}}
\end{aligned}$$

Since

$$\frac{\partial L}{\partial x_{ab}}=\sum_{i=1}^{2}\sum_{j=1}^{2}\frac{\partial L}{\partial y_{ij}}\frac{\partial y_{ij}}{\partial x_{ab}}$$

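and

$$y_{ij}=\sum_{u=1}^{2}\sum_{v=1}^{2}w_{uv}\,x_{i+u-1,\,j+v-1},$$

so $\frac{\partial y_{ij}}{\partial x_{ab}}=w_{a-i+1,\,b-j+1}$ when $1\le a-i+1\le2$ and $1\le b-j+1\le2$, and $0$ otherwise. These are exactly the entries of the column of $C$ that multiplies $x_{ab}$.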

we obtain

$$\frac{\partial Y}{\partial x_{ab}}=C_{3a+b-3}^{T}$$

Therefore

$$\frac{\partial L}{\partial X}=\begin{pmatrix}\frac{\partial L}{\partial x_{11}}\\\frac{\partial L}{\partial x_{12}}\\\vdots\\\frac{\partial L}{\partial x_{33}}\end{pmatrix}=\begin{pmatrix}C_1^T\\C_2^T\\\vdots\\C_9^T\end{pmatrix}\frac{\partial L}{\partial Y}=C^T\frac{\partial L}{\partial Y}$$

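This analysis shows that, ignoring the activation function, forward propagation through a convolutional layer multiplies by $C$, while backpropagation multiplies by the transpose $C^T$.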

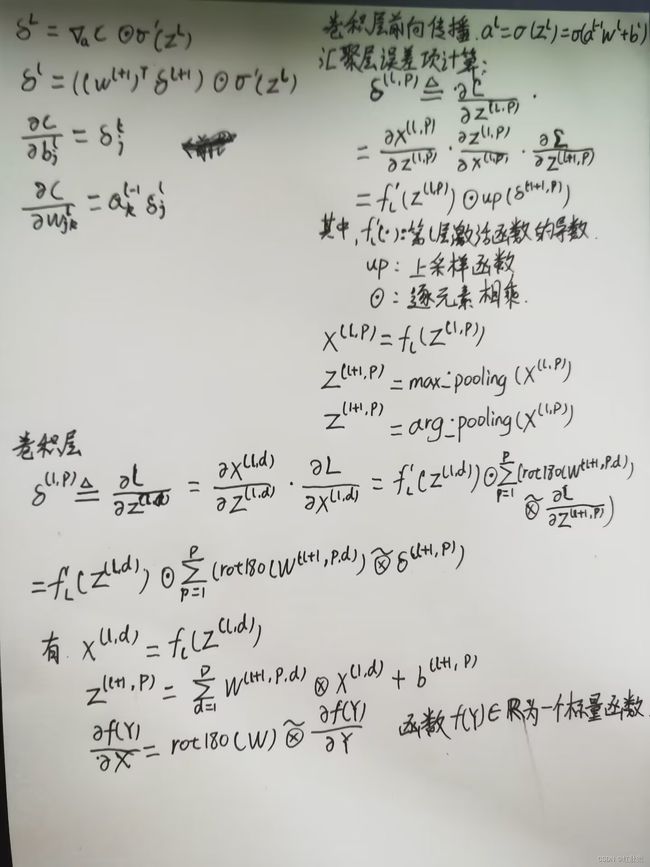
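A numerical check of the transpose relation, sketched in NumPy. It assumes the illustrative loss $L=\sum G\odot Y$ for a fixed random $G$, so that $\partial L/\partial Y=G$, and compares $C^T\,\partial L/\partial Y$ with central finite differences. It also checks that the same gradient is a wide convolution of $\partial L/\partial Y$ with $\mathrm{rot180}(W)$, the operation from exercise 5-2. Helper names are hypothetical.

```python
import numpy as np

def corr2d(W, X):
    # Valid cross-correlation (the forward pass).
    U, V = W.shape
    out = np.zeros((X.shape[0] - U + 1, X.shape[1] - V + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(W * X[i:i + U, j:j + V])
    return out

def conv_matrix(W, in_shape):
    # Each row of C is the kernel placed at one output position, flattened row-major.
    U, V = W.shape
    M, N = in_shape
    oh, ow = M - U + 1, N - V + 1
    C = np.zeros((oh * ow, M * N))
    for i in range(oh):
        for j in range(ow):
            for u in range(U):
                for v in range(V):
                    C[i * ow + j, (i + u) * N + (j + v)] = W[u, v]
    return C

rng = np.random.default_rng(0)
X = rng.standard_normal((3, 3))
W = rng.standard_normal((2, 2))
G = rng.standard_normal((2, 2))  # plays the role of dL/dY for the loss L = sum(G * Y)

def loss(X):
    return np.sum(G * corr2d(W, X))

# Backward pass as a transpose: dL/dX = C^T dL/dY
C = conv_matrix(W, X.shape)
grad_transpose = (C.T @ G.ravel()).reshape(X.shape)

# Same gradient as a wide convolution of dL/dY with the rotated kernel (pad U-1 = V-1 = 1)
grad_wide = corr2d(np.rot90(W, 2), np.pad(G, 1))

# Central finite differences as an independent check
eps = 1e-6
grad_fd = np.zeros_like(X)
for a in range(3):
    for b in range(3):
        E = np.zeros_like(X)
        E[a, b] = eps
        grad_fd[a, b] = (loss(X + E) - loss(X - E)) / (2 * eps)

print(np.allclose(grad_transpose, grad_fd, atol=1e-6), np.allclose(grad_wide, grad_transpose))
```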

Deriving the CNN backpropagation algorithm

References

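- The role of 1×1 convolution kernels

- Convolutional neural network (CNN) backpropagation algorithm - 刘建平Pinard - cnblogs (cnblogs.com)

- Derivation of the convolutional neural network (CNN) backpropagation algorithm

- https://blog.csdn.net/ABU366/article/details/127586130?spm=1001.2014.3001.5502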

Personal summary

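Typing the formulas took a long time: every formula in this assignment was typed by hand, which was tedious and hard on the eyes, so please point out any mistakes. I now have a better and more complete understanding of what 1×1 kernels do, which was eye-opening. The proof that the forward computation and backpropagation of a convolutional layer are related by a transpose was also typed out symbol by symbol. My handwriting in the derivation of the CNN backpropagation algorithm is not very neat, but there was too much content to type it all out, so I hope you will bear with me.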