Meta-learning algorithms for Few-Shot Computer Vision: paper walkthrough (Part 3)

This is the final part of the walkthrough of Meta-learning algorithms for Few-Shot Computer Vision.

MAML for few-shot object detection

Formal definition

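An N-way K-shot object detection task is defined by:

- A support set:
  - N class labels
  - for each class, K labeled images, each containing at least one object of that class
- Q query images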

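We can immediately spot a key difference from few-shot image classification: an image can contain several objects, which belong to one or more of the N classes. Therefore, when solving an N-way K-shot detection task, the algorithm trains on at least K example objects per class, whereas in an N-way K-shot classification task it sees exactly K examples per class. Note that this can become a challenge: in this configuration, the support set may be unbalanced between classes. This formalization of the few-shot object detection problem therefore leaves room for improvement. It was chosen because it is a fairly simple setup that is also easy to implement.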

YOLOMAML

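To solve the few-shot object detection problem, we had the idea of applying the Model-Agnostic Meta-Learning algorithm (MAML) [9] to the YOLOv3 detector [37]. We call it YOLOMAML, for lack of a better name.

Fu et al. proposed Meta-SSD [29], which applies Meta-SGD [10] (a variant of MAML that also meta-learns hyper-parameters of the base model) to SSD [25], and reported promising results. Although Meta-SSD and YOLOMAML are very similar, I believe it makes sense to keep working on YOLOMAML in order to:

- confirm or challenge the interesting results of Fu et al., using a similar algorithm on a wider dataset;

- expose the challenges of developing such an algorithm.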

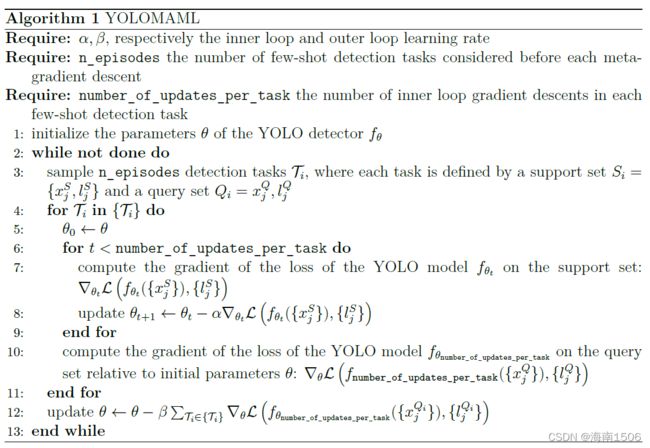

YOLOMAML is a straightforward application of the MAML algorithm to the YOLO detector. The algorithm is shown in the figure above.
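To make the inner and outer loops of MAML concrete, here is a minimal sketch on a toy problem. It is not the YOLOMAML code: the model is a single scalar weight w with a mean-squared-error loss, and the function names (mse, mse_grad, mse_hess, maml_meta_gradient, meta_step) are hypothetical. The toy was chosen because the second-order term that makes MAML memory-hungry can be written by hand.

```python
def mse(w, xs, ys):
    """Mean squared error of the toy model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def mse_grad(w, xs, ys):
    """First derivative of the loss with respect to w."""
    return 2.0 * sum(x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)


def mse_hess(xs):
    """Second derivative of the loss with respect to w (constant for this loss)."""
    return 2.0 * sum(x * x for x in xs) / len(xs)


def maml_meta_gradient(w, task, inner_lr):
    """Exact MAML gradient for one task with a single inner update."""
    (xs_s, ys_s), (xs_q, ys_q) = task
    # Inner loop: adapt the initialization on the support set.
    w_fast = w - inner_lr * mse_grad(w, xs_s, ys_s)
    # Chain rule: d(w_fast)/dw carries the second-order (Hessian) term.
    dfast_dw = 1.0 - inner_lr * mse_hess(xs_s)
    # Outer loop: gradient of the query loss with respect to the initialization.
    return mse_grad(w_fast, xs_q, ys_q) * dfast_dw


def meta_step(w, tasks, inner_lr, outer_lr):
    """One outer update on the average meta-gradient over a batch of tasks."""
    grads = [maml_meta_gradient(w, task, inner_lr) for task in tasks]
    return w - outer_lr * sum(grads) / len(grads)
```

For example, with task = (([1.0, 2.0], [2.0, 4.0]), ([3.0], [6.0])), calling meta_step(0.5, [task], 0.1, 0.01) moves w from 0.5 toward the target slope of 2. In a real network the Hessian term is obtained by differentiating through the inner update with automatic differentiation, which is where the memory cost comes from.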

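Implementation

I decided to reuse the structure of the MAML algorithm that I used in my image classification work. For the YOLO model, I used Erik Linder-Norén's implementation (https://github.com/eriklindernoren/PyTorch-YOLOv3), which is essentially a PyTorch re-implementation of Joseph Redmon's original C implementation. It contains two main parts:

- Data processing, from raw images and labels to an iterable data loader. The ListDataset class handles this.

- The definition, training and inference of the YOLO algorithm, mostly handled by the Darknet class:

  - It creates the YOLO algorithm as a sequence of PyTorch module objects from a user-customizable configuration file.

  - It allows loading pre-trained parameters for part or all of the network.

  - It defines the forward pass of the model and the loss computation.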

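Few-shot object detection was run on the COCO 2014 dataset [38]. To make YOLO and MAML work together, I had to work at three main levels:

- model initialization

- fast adaptation of the weights in the convolutional layers

- data processing in the form of few-shot detection episodes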

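Model initialization. YOLOv3 in its standard form contains more than 8 million parameters. A full meta-training of it with MAML (which involves second-order gradient computation) is therefore prohibitive in terms of memory. Consequently:

- Instead of the standard YOLOv3 network, I used a custom Deep Tiny YOLO. The backbone of the model is Tiny Darknet. On top of it, I added two output blocks (instead of three in regular YOLOv3). The full configuration file of this network can be found in the repository at detect /configs/deep-tiny-yolo-5-way.cfg.

- I initialized the backbone with parameters trained on ImageNet, then froze those layers.

This leaves only five trainable convolutional blocks in the network, which makes it possible to train YOLOMAML on a standard GPU in a few hours. Note that a Tiny YOLO exists, but no backbone pre-trained on ImageNet is available for that network, which motivated my choice of a new custom network.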

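Fast adaptation. The core idea of MAML is to update the trainable parameters on each new task while training the initialization parameters across tasks. To do this, we need to store the updated parameters during a task as well as the initialization parameters. The solution is to add to each parameter a field, fast, that stores the updated parameters. In our implementation (inherited from [34]), this is handled by Linear_fw, Conv2d_fw and BatchNorm2d_fw, layers that respectively extend the PyTorch objects nn.Linear, nn.Conv2d and nn.BatchNorm2d. I modified the structure of the Darknet objects so that they use these custom layers instead of the regular ones.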
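The "fast weight" pattern can be illustrated with a small, framework-free sketch. This is only an analogue of what Linear_fw and its siblings do, not their actual code: Param and ToyLinearFw are hypothetical names, the layer is a one-dimensional y = w * x + b, and the gradients are passed in by hand instead of coming from autograd.

```python
class Param:
    """A scalar weight with an optional task-specific 'fast' value."""

    def __init__(self, value):
        self.value = value  # meta-learned initialization, shared across tasks
        self.fast = None    # task-adapted value, filled in during the inner loop

    def current(self):
        """Return the fast value when one is set, else the initialization."""
        return self.value if self.fast is None else self.fast


class ToyLinearFw:
    """Toy layer y = w * x + b that uses fast weights whenever they exist."""

    def __init__(self, w, b):
        self.w = Param(w)
        self.b = Param(b)

    def forward(self, x):
        return self.w.current() * x + self.b.current()

    def adapt(self, grad_w, grad_b, lr):
        """Inner-loop step: write the update into 'fast', leave 'value' intact."""
        self.w.fast = self.w.current() - lr * grad_w
        self.b.fast = self.b.current() - lr * grad_b

    def reset(self):
        """Drop the task-specific weights before moving to the next task."""
        self.w.fast = None
        self.b.fast = None
```

The point of the design is that the initialization (value) is never overwritten while a task is being solved, so the outer loop can still update it after the query loss has been computed with the fast weights.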

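Key point, reusable

Data processing. As in few-shot image classification, we can sample an N-way K-shot detection task with Q queries per class by first sampling N classes. Then, for each class, we sample K + Q images, each containing at least one box of that class. The difference in detection is that we then need to remove from the labels the boxes that belong to classes outside the detection task. The same problem would arise in multi-label classification.

To handle this, I created an extension of the standard PyTorch Sampler object: DetectionTaskSampler. In addition to returning the indices of the data instances to the DataLoader, it also returns the indices of the sampled classes. ListDataset uses this information to feed the model proper few-shot detection tasks that contain no reference to classes outside the task.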
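The sampling and label-filtering logic described above can be sketched without PyTorch. This is not the DetectionTaskSampler code: sample_detection_task is a hypothetical function, the annotation format (image id mapped to a list of (class_id, x, y, w, h) tuples) is an assumption, and so is the relabelling of the sampled classes to 0..N-1 inside the episode.

```python
import random


def sample_detection_task(annotations, n_way, k_shot, n_query, rng):
    """Sample one N-way K-shot detection episode.

    annotations maps image_id -> list of (class_id, x, y, w, h) boxes.
    Returns (task_classes, support, query); support and query are lists of
    (image_id, boxes) pairs whose boxes only reference the sampled classes.
    """
    # Index the images by the classes that appear in them.
    images_by_class = {}
    for image_id, boxes in annotations.items():
        for class_id in {box[0] for box in boxes}:
            images_by_class.setdefault(class_id, []).append(image_id)

    # Only classes with enough images can be part of an episode.
    eligible = sorted(c for c, ids in images_by_class.items()
                      if len(ids) >= k_shot + n_query)
    task_classes = rng.sample(eligible, n_way)
    # Assumption: classes are relabelled 0..N-1 inside the episode.
    episode_label = {c: i for i, c in enumerate(task_classes)}

    def keep_task_boxes(image_id):
        # Drop every box whose class is not part of this task.
        return [(episode_label[b[0]],) + tuple(b[1:])
                for b in annotations[image_id] if b[0] in episode_label]

    support, query = [], []
    for c in task_classes:
        picked = rng.sample(sorted(images_by_class[c]), k_shot + n_query)
        support += [(i, keep_task_boxes(i)) for i in picked[:k_shot]]
        query += [(i, keep_task_boxes(i)) for i in picked[k_shot:]]
    return task_classes, support, query
```

Note that this sketch does not prevent the same image from being drawn for two different classes, which is one way the class imbalance mentioned in the formal definition shows up in practice.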

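Problem analysis

The author's approach did not succeed, so the author analyzes the reasons:

In the first experiment, a Deep Tiny YOLO was initialized as described in the previous section. It was trained on 3-way 5-shot object detection tasks on the COCO dataset, using the Adam optimizer with a learning rate of $10^{-3}$ (in both the inner loop and the outer loop). It was trained for 10,000 epochs, each epoch corresponding to one gradient descent step on the average loss over 4 episodes. In each episode, the model was allowed two updates on the support set before performing detection on the query set.

The loss converges quickly (see figure), but at inference time the model is unable to perform successful detections (F1-score below $10^{-3}$). Extensive hyper-parameter tuning was carried out, with no notable improvement in the results.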
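To give a sense of what an F1-score below $10^{-3}$ means for a detector, here is one common way to compute it. The text does not say how the F1-score was obtained, so everything here is an assumption: boxes are (x1, y1, x2, y2) corners, a prediction counts as a true positive when it has the right class and an IoU of at least 0.5 with a ground-truth box that has not been matched yet, and the names iou and detection_f1 are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def detection_f1(predictions, ground_truth, iou_threshold=0.5):
    """F1-score for one image.

    predictions: list of (class_id, confidence, box)
    ground_truth: list of (class_id, box)
    """
    matched = set()
    true_pos = 0
    # Greedy matching, most confident predictions first.
    for cls, _, box in sorted(predictions, key=lambda p: -p[1]):
        best, best_iou = None, iou_threshold
        for j, (gt_cls, gt_box) in enumerate(ground_truth):
            if j in matched or gt_cls != cls:
                continue
            overlap = iou(box, gt_box)
            if overlap >= best_iou:
                best, best_iou = j, overlap
        if best is not None:
            matched.add(best)
            true_pos += 1
    false_pos = len(predictions) - true_pos
    false_neg = len(ground_truth) - true_pos
    denom = 2 * true_pos + false_pos + false_neg
    return 2 * true_pos / denom if denom else 0.0
```

Under these assumptions, a score below $10^{-3}$ means that fewer than roughly one in a thousand predicted or expected boxes ends up as a correct match, that is, the model is essentially not detecting anything.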

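To make sure that this disappointing performance was not due to my re-implementation of YOLO, I trained the Deep Tiny YOLO without MAML, in the same setting, for 40 epochs. Although this training is far from optimal, the model is still able to perform relevant detections, which is not the case for YOLOMAML (see Figure 15).

The YOLOv3 algorithm aggregates three losses over three different parts of the prediction:

- the shape and position of the bounding box of the predicted object, using the mean squared error;

- the object confidence (how sure the model is that there is an object in the predicted bounding box), using binary cross-entropy;

- the classification accuracy on each predicted box, using cross-entropy.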
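The three-part loss listed above can be sketched on plain Python values. This is a simplified illustration, not the loss of the Darknet class: the dictionary format of the predictions and targets, the unweighted sum, the averaging per group and the name yolo_style_loss are all assumptions. The confidence term is split into an object part and a no-object part because the analysis that follows tracks them separately.

```python
import math


def bce(p, target):
    """Binary cross-entropy for one probability p and a 0/1 target."""
    p = min(max(p, 1e-12), 1.0 - 1e-12)  # avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def yolo_style_loss(preds, targets):
    """Return the four loss components and their sum.

    preds:   list of {"box": (x, y, w, h), "conf": float, "class_probs": [...]}
    targets: list of {"has_object": bool, "box": (x, y, w, h), "class_id": int}
             ("box" and "class_id" are only read when has_object is True)
    """
    parts = {"box": 0.0, "obj_conf": 0.0, "noobj_conf": 0.0, "cls": 0.0}
    n_obj = max(sum(1 for t in targets if t["has_object"]), 1)
    n_noobj = max(sum(1 for t in targets if not t["has_object"]), 1)
    for p, t in zip(preds, targets):
        if t["has_object"]:
            # Mean squared error on box shape and position.
            parts["box"] += sum((a - b) ** 2 for a, b in zip(p["box"], t["box"])) / n_obj
            # The box should be confident that it contains an object.
            parts["obj_conf"] += bce(p["conf"], 1.0) / n_obj
            # Cross-entropy on the predicted class distribution.
            parts["cls"] += -math.log(max(p["class_probs"][t["class_id"]], 1e-12)) / n_obj
        else:
            # The box should be confident that it contains nothing.
            parts["noobj_conf"] += bce(p["conf"], 0.0) / n_noobj
    parts["total"] = sum(parts.values())
    return parts
```

Since most predicted boxes contain no object, the no-object term usually covers far more boxes than the other three, which is worth keeping in mind when reading the loss curves.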

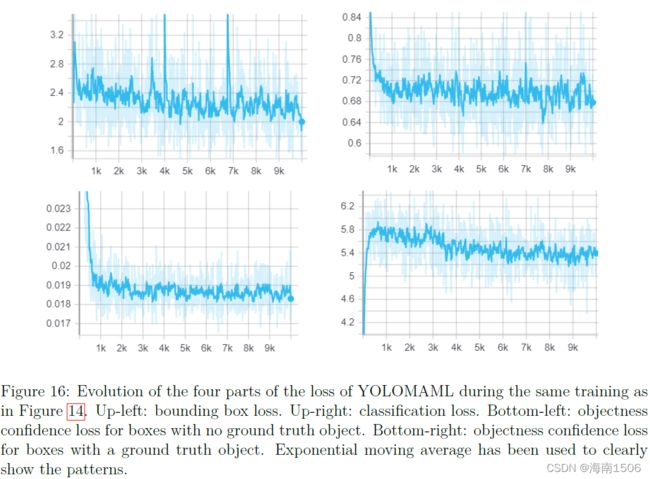

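Figure 16 shows the evolution of these different parts of the loss. The loss due to object confidence is further split into two parts: the loss on boxes that contain an object in the ground truth, and the loss on boxes that do not.

We can see that the losses due to classification and to the shape and position of the bounding box barely evolve during training. The no-object-confidence loss drops during the first 1,000 epochs before stagnating, while the yes-object-confidence loss rises to a critical level before stagnating.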

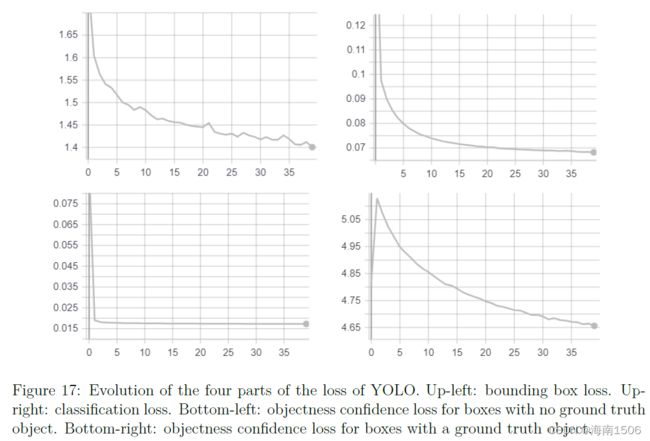

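Figure 17 shows the same data for the training of YOLO. In this case, the yes-object-confidence loss decreases after peaking during the first epochs. All parts of the loss decrease during training, except the no-object-confidence loss, which reaches a floor that is small relative to the other parts.

With this in mind, we can hypothesize that the bottleneck in training YOLOMAML is the prediction of the object confidence.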

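Future work

Unfortunately, the author did not have enough time to develop a working version of YOLOMAML. At this point, I believe the answer lies in the prediction of the object confidence, but other issues will most likely appear once this one is solved.

Another direction for future work is to build a dataset adapted to few-shot detection. Other works [28] [29] propose a split of the PASCAL VOC dataset adapted to few-shot detection. However, PASCAL VOC contains only 20 classes, while COCO contains 80. I believe this makes COCO better suited to meta-learning, which is intertwined with the idea of learning to generalize to new classes.

Finally, a drawback of a (working) YOLOMAML is that it does not allow way change: a model trained on N-way few-shot detection tasks cannot be applied to an N'-way few-shot detection task. Solving this would be a useful improvement to YOLOMAML.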
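One way to see why the number of ways is baked into the model: in YOLOv3, each anchor of an output layer predicts 4 box coordinates, 1 objectness score and one score per class, so the width of the output layer depends on N. The helper below is a hypothetical illustration that assumes 3 anchors per output scale, as in the standard YOLOv3 configuration. The anchor count used by Deep Tiny YOLO is not given in the text.

```python
def yolo_head_channels(n_classes, anchors_per_scale=3):
    """Number of output channels of one YOLOv3 detection layer.

    Each anchor predicts 4 box coordinates, 1 objectness score
    and n_classes class scores.
    """
    return anchors_per_scale * (5 + n_classes)


# A 3-way head and a 5-way head have different shapes, so weights
# meta-trained for one cannot be loaded directly into the other.
three_way = yolo_head_channels(3)   # 24 channels
five_way = yolo_head_channels(5)    # 30 channels
coco_full = yolo_head_channels(80)  # 255 channels
```

Supporting way change would therefore require either a head whose shape does not depend on N or a procedure that re-initializes the class-specific part of the head for each new task.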

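Conclusion

Advanced research on few-shot learning is still young. So far, only a few works have tackled few-shot object detection, and there is not yet an agreed-upon benchmark for it (like mini-ImageNet for few-shot classification). Yet solving this problem would be a very important step in computer vision. With meta-learning algorithms, we could learn to detect new, unseen objects from a few examples and within a few minutes.

I am disappointed that I could not get YOLOMAML to work during my internship at Sicara. However, I strongly believe that it is important to keep looking for new ways to solve few-shot object detection, and I intend to keep working on it.

References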

[1] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

[2] Adam Santoro, Sergey Bartunov, Matthew Botvinick, Daan Wierstra, and Timothy Lillicrap. Meta-learning with memory-augmented neural networks. In International conference on machine learning, pages 1842–1850, 2016.

[3] Tsendsuren Munkhdalai and Hong Yu. Meta networks. In Proceedings of the 34th International Conference on Machine Learning-Volume 70, pages 2554–2563. JMLR.org, 2017.

[4] Pablo Sprechmann, Siddhant M Jayakumar, Jack W Rae, Alexander Pritzel, Adria Puigdomenech Badia, Benigno Uria, Oriol Vinyals, Demis Hassabis, Razvan Pascanu, and Charles Blundell. Memory-based parameter adaptation. arXiv preprint arXiv:1802.10542, 2018.

[5] Gregory Koch, Richard Zemel, and Ruslan Salakhutdinov. Siamese neural networks for one-shot image recognition. In ICML deep learning workshop, volume 2, 2015.

[6] Oriol Vinyals, Charles Blundell, Timothy Lillicrap, Koray Kavukcuoglu, and Daan Wierstra. Matching networks for one shot learning. In D. D. Lee, M. Sugiyama, U. V. Luxburg, I. Guyon, and R. Garnett, editors, Advances in Neural Information Processing Systems 29, pages 3630–3638. Curran Associates, Inc., 2016.

[7] Jake Snell, Kevin Swersky, and Richard Zemel. Prototypical networks for few-shot learning. In I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett, editors, Advances in Neural Information Processing Systems 30, pages 4077 ff. Curran Associates, Inc., 2017.

[8] Flood Sung, Yongxin Yang, Li Zhang, Tao Xiang, Philip H.S. Torr, and Timothy M. Hospedales. Learning to compare: Relation network for few-shot learning. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2018.

[9] Chelsea Finn, Pieter Abbeel, and Sergey Levine. Model-agnostic meta-learning for fast adaptation of deep networks. In Doina Precup and Yee Whye Teh, editors, Proceedings of the 34th International Conference on Machine Learning, volume 70 of Proceedings of Machine Learning Research, pages 1126–1135, International Convention Centre, Sydney, Australia, 06–11 Aug 2017. PMLR.

[10] Zhenguo Li, Fengwei Zhou, Fei Chen, and Hang Li. Meta-sgd: Learning to learn quickly for few-shot learning. arXiv preprint arXiv:1707.09835, 2017.

[11] Sachin Ravi and Hugo Larochelle. Optimization as a model for few-shot learning. 2016.

[12] Nikhil Mishra, Mostafa Rohaninejad, Xi Chen, and Pieter Abbeel. A simple neural attentive meta-learner. In International Conference on Learning Representations, 2018.

[13] Victor Garcia Satorras and Joan Bruna Estrach. Few-shot learning with graph neural networks. In International Conference on Learning Representations, 2018.

[14] Bharath Hariharan and Ross Girshick. Low-shot visual recognition by shrinking and hallucinating features. In Proceedings of the IEEE International Conference on Computer Vision, pages 3018–3027, 2017.

[15] Antreas Antoniou, Amos Storkey, and Harrison Edwards. Data augmentation generative adversarial networks. arXiv preprint arXiv:1711.04340, 2017.

[16] Yu-Xiong Wang, Ross Girshick, Martial Hebert, and Bharath Hariharan. Low-shot learning from imaginary data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 7278–7286, 2018.

[17] Sebastian Thrun and Lorien Pratt. Learning to learn. 1998.

[18] Sepp Hochreiter and Jürgen Schmidhuber. Long short-term memory. Neural computation, 9(8):1735–1780, 1997.

[19] Brenden Lake, Ruslan Salakhutdinov, Jason Gross, and Joshua Tenenbaum. One shot learning of simple visual concepts. In Proceedings of the annual meeting of the cognitive science society, volume 33, 2011.

[20] Brenden M Lake, Ruslan Salakhutdinov, and Joshua B Tenenbaum. Human-level concept learning through probabilistic program induction. Science, 350(6266):1332–1338, 2015.

[21] R. Girshick, J. Donahue, T. Darrell, and J. Malik. Rich feature hierarchies for accurate object detection and semantic segmentation. In 2014 IEEE Conference on Computer Vision and Pattern Recognition, pages 580–587, June 2014.

[22] Ross Girshick. Fast r-cnn. In Proceedings of the IEEE international conference on computer vision, pages 1440–1448, 2015.

[23] Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun. Faster r-cnn: Towards real-time object detection with region proposal networks. In Advances in neural information processing systems, pages 91–99, 2015.

[24] Kaiming He, Georgia Gkioxari, Piotr Dollár, and Ross Girshick. Mask r-cnn. In Proceedings of the IEEE international conference on computer vision, pages 2961–2969, 2017.

[25] Wei Liu, Dragomir Anguelov, Dumitru Erhan, Christian Szegedy, Scott Reed, Cheng-Yang Fu, and Alexander C Berg. Ssd: Single shot multibox detector. In European conference on computer vision, pages 21–37. Springer, 2016.

[26] Tsung-Yi Lin, Priya Goyal, Ross Girshick, Kaiming He, and Piotr Dollár. Focal loss for dense object detection. In Proceedings of the IEEE international conference on computer vision, pages 2980–2988, 2017.

[27] Joseph Redmon, Santosh Divvala, Ross Girshick, and Ali Farhadi. You only look once: Unified, real-time object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 779–788, 2016.

[28] Bingyi Kang, Zhuang Liu, Xin Wang, Fisher Yu, Jiashi Feng, and Trevor Darrell. Few-shot object detection via feature reweighting. arXiv preprint arXiv:1812.01866, 2018.

[29] Kun Fu, Tengfei Zhang, Yue Zhang, Menglong Yan, Zhonghan Chang, Zhengyuan Zhang, and Xian Sun. Meta-ssd: Towards fast adaptation for few-shot object detection with meta-learning. IEEE Access, 7:77597–77606, 2019.

[30] Tao Wang, Xiaopeng Zhang, Li Yuan, and Jiashi Feng. Few-shot adaptive faster R-CNN. CoRR, abs/1903.09372, 2019.

[31] Hao Chen, Yali Wang, Guoyou Wang, and Yu Qiao. Lstd: A low-shot transfer detector for object detection. In Thirty-Second AAAI Conference on Artificial Intelligence, 2018.

[32] Xuanyi Dong, Liang Zheng, Fan Ma, Yi Yang, and Deyu Meng. Few-shot object detection. ArXiv, abs/1706.08249, 2017.

[33] P. Welinder, S. Branson, T. Mita, C. Wah, F. Schroff, S. Belongie, and P. Perona. Caltech-UCSD Birds 200. Technical Report CNS-TR-2010-001, California Institute of Technology, 2010.

[34] Wei-Yu Chen, Yen-Cheng Liu, Zsolt Kira, Yu-Chiang Frank Wang, and Jia-Bin Huang. A closer look at few-shot classification. arXiv preprint arXiv:1904.04232, 2019.

[35] Gregory Cohen, Saeed Afshar, Jonathan Tapson, and André van Schaik. Emnist: an extension of mnist to handwritten letters. arXiv preprint arXiv:1702.05373, 2017.

[36] Luca Bertinetto, Joao F Henriques, Philip HS Torr, and Andrea Vedaldi. Meta-learning with differentiable closed-form solvers. arXiv preprint arXiv:1805.08136, 2018.

[37] Joseph Redmon and Ali Farhadi. Yolov3: An incremental improvement. arXiv preprint arXiv:1804.02767, 2018.

[38] Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár, and C Lawrence Zitnick. Microsoft coco: Common objects in context. In European conference on computer vision, pages 740–755. Springer, 2014.