Hung-yi Lee Machine Learning HW3

Homework 3 - Convolutional Neural Network

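This post reproduces the example code for the course assignment. It also records my own understanding and the problems I ran into along the way. If anything is wrong, corrections are very welcome. Solutions to the problems and reference materials are given as links in the text.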

The main reference is this post by iteapoy: ⭐ Hung-yi Lee 2020 Machine Learning Homework 3 - CNN: Food Image Classification

1 Assignment Overview

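The food-11 folder contains three subfolders: training, validation and testing. The images in training and validation cover 11 kinds of food, with class labels 0-10. Each label is written at the start of the file name, before the underscore. The images in testing have no labels.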

The task is to classify the food images with a convolutional neural network.
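Because the labels live in the file names, you can check the class balance before any training. The sketch below is a minimal version of that check. It assumes names of the form <label>_<index>.jpg. The example names are made up, and parse_label and count_per_class are hypothetical helpers, not part of the assignment code.

```python
import os
from collections import Counter


def parse_label(filename: str) -> int:
    """Return the class label encoded before the first '_' of a file name."""
    return int(os.path.basename(filename).split("_")[0])


def count_per_class(filenames):
    """Count how many images belong to each class label."""
    return Counter(parse_label(name) for name in filenames)


# Hypothetical file names following the "<label>_<index>.jpg" pattern
names = ["0_0.jpg", "0_1.jpg", "3_10.jpg", "10_7.jpg"]
print(parse_label("10_7.jpg"))                  # 10
print(sorted(count_per_class(names).items()))   # [(0, 2), (3, 1), (10, 1)]
```

Note that the label can have two digits (10). Splitting on the underscore handles this, while taking only the first character would not.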

2 Import the Required Libraries

- torch

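This took me quite a while. A plain pip install kept timing out, and installing from a mirror led to OSError: [WinError 126]. The most reliable approach was to download the torch and torchvision files from the official website, which tells you which versions to download. If the browser download is too slow, try the Xunlei (Thunder) download manager. Then install the files offline.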

See here for the detailed steps. If you use Anaconda, it seems you don't need to install CUDA and cuDNN separately.

- opencv-python

import cv2 requires the opencv-python package. I installed it offline as well: first download the matching file from https://pypi.org/project/opencv-python/#files, then install it.
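After an offline install, it helps to confirm that Python actually finds the packages before you run the rest of the code. The sketch below uses only the standard library (importlib.util.find_spec). check_packages is a hypothetical helper name. The import name can differ from the pip package name: opencv-python is imported as cv2.

```python
import importlib.util


def check_packages(module_names):
    """Map each top-level import name to True if Python can find it, else False."""
    return {name: importlib.util.find_spec(name) is not None for name in module_names}


# Use import names, not pip names: opencv-python is imported as cv2
print(check_packages(["torch", "torchvision", "cv2", "numpy", "pandas"]))
```

Once torch imports, torch.cuda.is_available() tells you whether the installed build can actually use the GPU.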

```python
import os
import time

import numpy as np
import cv2
import pandas as pd
import torch
import torch.nn as nn
import torchvision.transforms as transforms
from torch.utils.data import DataLoader, Dataset
```

3 Read the Images

```python
def readfile(path, label):
    # The training and validation sets need y (the labels), the test set does not;
    # `label` tells the function which case it is in
    image_dir = sorted(os.listdir(path))
    x = np.zeros((len(image_dir), 128, 128, 3), dtype=np.uint8)
    y = np.zeros((len(image_dir)), dtype=np.uint8)
    for i, file in enumerate(image_dir):
        # Read the image (OpenCV returns an H x W x 3 array in BGR order)
        img = cv2.imread(os.path.join(path, file))
        # Resize images of different sizes to 128 (height) x 128 (width)
        x[i, :, :] = cv2.resize(img, (128, 128))
        if label:
            # The class label is the part of the file name before the first '_'
            y[i] = int(file.split('_')[0])
    # Return y only when labels are needed
    if label:
        return x, y
    else:
        return x


workspace_dir = 'C:/Users/ASUS/hwdata/hw3/food-11'
print('Reading data')
train_x, train_y = readfile(os.path.join(workspace_dir, 'training'), True)
print("Size of training data = {}".format(len(train_x)))
val_x, val_y = readfile(os.path.join(workspace_dir, 'validation'), True)
print("Size of validation data = {}".format(len(val_x)))
test_x = readfile(os.path.join(workspace_dir, "testing"), False)
print("Size of Testing data = {}".format(len(test_x)))
```

Reading data

Size of training data = 9866

Size of validation data = 3430

Size of Testing data = 3347

4 Data Augmentation

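How the image data is prepared has a large effect on training neural networks and convolutional neural networks. If there are too few samples, or they cover too little variety, training suffers: the model generalizes poorly and its recognition accuracy is low. Data augmentation increases the diversity of the samples, which gives training a better starting point.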
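To make two of the transforms below concrete, here is a plain-Python sketch on nested lists. It shows a horizontal flip, which RandomHorizontalFlip applies with probability 0.5 by default. It also shows the layout change and scaling that ToTensor performs on uint8 input: H × W × C values in 0-255 become C × H × W floats in [0, 1]. The function names are hypothetical. The sketch leaves out RandomRotation and is not torchvision's implementation.

```python
import random


def horizontal_flip(img):
    """Mirror an H x W x C image (nested lists) left to right."""
    return [row[::-1] for row in img]


def random_horizontal_flip(img, p=0.5, rng=random):
    """Flip with probability p, like RandomHorizontalFlip's default p=0.5."""
    return horizontal_flip(img) if rng.random() < p else img


def to_tensor_like(img):
    """Turn H x W x C values in 0..255 into C x H x W floats in [0, 1]."""
    height, width, channels = len(img), len(img[0]), len(img[0][0])
    return [[[img[h][w][c] / 255.0 for w in range(width)]
             for h in range(height)]
            for c in range(channels)]


# A 2 x 2 image with 3 channels
img = [[[255, 0, 0], [0, 0, 255]],
       [[0, 255, 0], [51, 51, 51]]]
print(horizontal_flip(img)[0])                 # [[0, 0, 255], [255, 0, 0]]
chw = to_tensor_like(img)
print(len(chw), len(chw[0]), len(chw[0][0]))   # 3 2 2
print(chw[0][1][1])                            # 0.2
```

Because the flip is random, each epoch sees a slightly different version of each training image. The test transform skips this step so that predictions are deterministic.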

```python
# For training, augment the data by randomly flipping and rotating the images
train_transform = transforms.Compose([
    transforms.ToPILImage(),
    transforms.RandomHorizontalFlip(),  # randomly flip the image horizontally (p=0.5)
    transforms.RandomRotation(15.0),    # randomly rotate by an angle in [-15, 15] degrees
    transforms.ToTensor(),              # convert to a C x H x W tensor and scale the values to [0, 1]
])
# For testing, no data augmentation is needed
test_transform = transforms.Compose([
    transforms.ToPILImage(),
    transforms.ToTensor(),
])
```

5 Dataset and DataLoader

For details, see:
- PyTorch Hands-on Introduction (3): Dataset and DataLoader
- PyTorch Source Code Analysis: the torch.utils.data.Dataset and torch.utils.data.DataLoader Classes
- Understanding Python's __getitem__() Method

Wrap the data with Dataset and DataLoader.

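Dataset packs the data into pairs [X, Y]. Each X is one image with shape 3 (RGB channels) × 128 × 128 (pixels), and each X has one label Y. For example, train_set[0] is [data of the first image, class label of the first image], and train_set[1] is [data of the second image, class label of the second image].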

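DataLoader groups the packed data into batches of 128 images. A batch has the form [X, Y]:
- X has shape 128 (images) × 3 (RGB channels) × 128 × 128 (pixels).
- Y holds the 128 corresponding labels, so the i-th image in X goes with the i-th label in Y.

Because drop_last defaults to False, the last batch can be smaller. The 9866 training images give 77 full batches plus one batch of 10.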
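The batching rule can be sketched in plain Python with sample indices. make_batches is a hypothetical helper. The real DataLoader uses samplers and also stacks the samples into tensors.

```python
import math
import random


def make_batches(num_samples, batch_size, shuffle=False, seed=None):
    """Split sample indices into batches; the last batch may be smaller."""
    indices = list(range(num_samples))
    if shuffle:
        # Reorder the indices before batching, as DataLoader does each epoch with shuffle=True
        random.Random(seed).shuffle(indices)
    return [indices[start:start + batch_size]
            for start in range(0, num_samples, batch_size)]


batches = make_batches(9866, 128, shuffle=True, seed=0)
print(len(batches), math.ceil(9866 / 128))   # 78 78
print(len(batches[0]), len(batches[-1]))     # 128 10
```

Shuffling only changes which images share a batch. Every image still appears exactly once per epoch.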

```python
# Wrap the data with Dataset and DataLoader
# DataLoader packs every 128 samples into one batch: batch_size = 128
class ImgDataset(Dataset):
    def __init__(self, x, y=None, transform=None):
        self.x = x
        self.y = y
        if y is not None:
            # CrossEntropyLoss expects integer (int64) class labels
            self.y = torch.LongTensor(y)
        self.transform = transform

    def __len__(self):
        return len(self.x)

    def __getitem__(self, index):
        # Return ONE sample (X, Y); DataLoader later stacks 128 of them into a batch
        X = self.x[index]
        if self.transform is not None:
            X = self.transform(X)
        if self.y is not None:
            Y = self.y[index]
            return X, Y
        else:
            return X


batch_size = 128
# Wrap the training set: each item is [X, Y] with X of shape 3 x 128 x 128
train_set = ImgDataset(train_x, train_y, train_transform)
# Wrap the validation set; no augmentation during evaluation, so use test_transform
val_set = ImgDataset(val_x, val_y, test_transform)
# Each batch is [X, Y] with X of shape 128 (images) x 3 (RGB) x 128 x 128 (pixels)
# shuffle=True reshuffles the data at the start of every epoch
train_loader = DataLoader(train_set, batch_size=batch_size, shuffle=True)
val_loader = DataLoader(val_set, batch_size=batch_size, shuffle=False)
```

6 Define the Model

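The model is a convolutional neural network followed by a fully connected feed-forward network. It outputs one score for each of the 11 classes.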
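The 11 outputs are raw scores (logits), not probabilities. nn.CrossEntropyLoss, used in the training section, applies log-softmax to them internally. For one sample the loss is -log(softmax(logits)[target]), which the plain-Python sketch below computes. cross_entropy and softmax are hypothetical helpers. For a batch, the default reduction='mean' averages these per-sample values.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy for one sample: -log(softmax(logits)[target])."""
    # Subtract the largest logit before exponentiating, for numerical stability
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def softmax(logits):
    """Turn logits into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


print(round(cross_entropy([0.0, 0.0], 0), 4))   # 0.6931 (= ln 2 for two equal scores)
```

A confident, correct prediction gives a loss close to 0. A confident, wrong prediction gives a large loss.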

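During training you don't call forward directly. Instead, you call the model object itself with the input, and forward is invoked automatically. This works because nn.Module implements __call__, which calls forward and also runs any registered hooks.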
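A toy class can illustrate the dispatch mechanism. ToyModule below is a hypothetical, heavily simplified stand-in for nn.Module. The real __call__ also handles hooks and other bookkeeping.

```python
class ToyModule:
    """Hypothetical stand-in for nn.Module, showing only how a call reaches forward."""

    def __call__(self, *args, **kwargs):
        # nn.Module.__call__ also runs hooks here; this sketch skips that
        return self.forward(*args, **kwargs)

    def forward(self, *args, **kwargs):
        raise NotImplementedError


class Doubler(ToyModule):
    def forward(self, x):
        return 2 * x


model = Doubler()
print(model(21))   # 42, the same as model.forward(21)
```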

References:
- The Detailed Flow of How PyTorch Calls forward
- The Use and Explanation of forward in PyTorch

For example, in the code further down:

```python
train_pred = model(data[0].cuda())  # get the class scores (logits); this actually calls the model's forward function
```

nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0)

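- in_channels: number of channels of the input (3 for an RGB image)
- out_channels: number of channels of the output, chosen when designing the model
- kernel_size: size of the convolution kernel (the shape of the filter shown in the lecture slides)
- stride: step size, i.e. how far the kernel moves each time
- padding: number of zeros padded on each side of the input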

A convolution produces one output channel per filter, so out_channels is simply the number of filters.
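The shape comments in the model below follow from the output-size rule for Conv2d and MaxPool2d, assuming dilation 1 and the default floor rounding:

out = floor((in + 2 × padding - kernel_size) / stride) + 1

The sketch below traces the Classifier's feature-map sizes with this rule and checks where the 512*4*4 input of the first linear layer comes from. conv_out and trace_classifier_shapes are hypothetical helper names.

```python
def conv_out(size, kernel_size, stride=1, padding=0):
    """Output height/width of Conv2d or MaxPool2d (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel_size) // stride + 1


def trace_classifier_shapes(size=128):
    """Follow [C, H, W] through the five conv + pool stages of the Classifier."""
    shapes = []
    for out_channels in [64, 128, 256, 512, 512]:
        size = conv_out(size, kernel_size=3, stride=1, padding=1)  # 3x3 conv, padding 1: size kept
        size = conv_out(size, kernel_size=2, stride=2, padding=0)  # 2x2 max pool, stride 2: size halved
        shapes.append((out_channels, size, size))
    return shapes


shapes = trace_classifier_shapes()
print(shapes)   # [(64, 64, 64), (128, 32, 32), (256, 16, 16), (512, 8, 8), (512, 4, 4)]
c, h, w = shapes[-1]
print(c * h * w)   # 8192 = 512*4*4
```

With kernel_size 3 and padding 1, every convolution keeps the spatial size, so only the pooling layers shrink it.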

```python
# Define the model: first a convolutional network, then a fully connected
# feed-forward network, finally one output score for each of the 11 classes
class Classifier(nn.Module):
    def __init__(self):
        super(Classifier, self).__init__()
        # torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride, padding)
        # torch.nn.MaxPool2d(kernel_size, stride, padding)
        # input shape: [3, 128, 128]
        self.cnn = nn.Sequential(
            nn.Conv2d(3, 64, 3, 1, 1),      # output [64, 128, 128]
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.MaxPool2d(2, 2, 0),          # output [64, 64, 64]

            nn.Conv2d(64, 128, 3, 1, 1),    # output [128, 64, 64]
            nn.BatchNorm2d(128),
            nn.ReLU(),
            nn.MaxPool2d(2, 2, 0),          # output [128, 32, 32]

            nn.Conv2d(128, 256, 3, 1, 1),   # output [256, 32, 32]
            nn.BatchNorm2d(256),
            nn.ReLU(),
            nn.MaxPool2d(2, 2, 0),          # output [256, 16, 16]

            nn.Conv2d(256, 512, 3, 1, 1),   # output [512, 16, 16]
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.MaxPool2d(2, 2, 0),          # output [512, 8, 8]

            nn.Conv2d(512, 512, 3, 1, 1),   # output [512, 8, 8]
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.MaxPool2d(2, 2, 0),          # output [512, 4, 4]
        )
        # Fully connected feed-forward network
        self.fc = nn.Sequential(
            nn.Linear(512 * 4 * 4, 1024),
            nn.ReLU(),
            nn.Linear(1024, 512),
            nn.ReLU(),
            nn.Linear(512, 11),
        )

    def forward(self, x):
        # x is one batch: 128 (images) x 3 (RGB) x 128 x 128 (pixels)
        out = self.cnn(x)                   # out: [128, 512, 4, 4]
        # Flatten each [512, 4, 4] feature map: out becomes [128, 512*4*4]
        out = out.view(out.size()[0], -1)
        return self.fc(out)
```

7 Training

Reference: Torch Code Explained: Why Use optimizer.zero_grad()

optimizer.zero_grad()

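Sets the gradients to zero, i.e. resets the derivatives of the loss with respect to the weights to 0. This is needed because PyTorch accumulates gradients: every call to backward() adds the new gradients to the ones already stored in each parameter's .grad. Without zeroing, the update for the current batch would also include the gradients of all previous batches.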
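A toy scalar parameter shows the accumulation behaviour. Param, backward and zero_grad below are hypothetical stand-ins that mimic how PyTorch adds into .grad. The loss is (w·x - y)², whose derivative with respect to w is 2(w·x - y)·x.

```python
class Param:
    """Hypothetical scalar parameter that, like a PyTorch tensor, adds into .grad."""

    def __init__(self, value):
        self.value = value
        self.grad = 0.0


def backward(param, x, y):
    """Add d/dw of (w*x - y)**2 into param.grad, the way loss.backward() accumulates."""
    param.grad += 2 * (param.value * x - y) * x


def zero_grad(param):
    param.grad = 0.0


w = Param(1.0)
backward(w, x=2.0, y=1.0)   # gradient from batch 1: 2*(2-1)*2 = 4
backward(w, x=1.0, y=0.0)   # gradient from batch 2: 2*(1-0)*1 = 2
print(w.grad)               # 6.0, both batches summed because nothing was zeroed

zero_grad(w)
backward(w, x=1.0, y=0.0)
print(w.grad)               # 2.0, only the current batch
```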

model.train()

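Switches to training mode. BatchNorm normalizes with the statistics of the current batch and updates its running averages, and Dropout is active. Use this for training.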

model.eval()

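Switches to evaluation mode. BatchNorm is still applied, but it uses the running averages collected during training, and Dropout is turned off. Use this for validation and testing.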
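Below is a simplified sketch of what the mode switch changes for batch normalization. It handles a single feature and leaves out the learnable scale and shift. It assumes PyTorch's defaults: momentum 0.1, eps 1e-5, and a running mean of 0 and running variance of 1 at the start. Unlike PyTorch, it stores the biased variance in running_var. ToyBatchNorm1d is a hypothetical name. Dropout is not sketched, since the Classifier in this post has no Dropout layers.

```python
class ToyBatchNorm1d:
    """Simplified batch norm for one feature, without learnable scale/shift.

    Training mode normalizes with the current batch's statistics and updates
    running averages; eval mode normalizes with the running averages.
    """

    def __init__(self, momentum=0.1, eps=1e-5):
        self.momentum, self.eps = momentum, eps
        self.running_mean, self.running_var = 0.0, 1.0   # PyTorch's initial values
        self.training = True

    def __call__(self, batch):
        if self.training:
            mean = sum(batch) / len(batch)
            var = sum((v - mean) ** 2 for v in batch) / len(batch)
            # Simplification: PyTorch stores the unbiased variance in running_var
            m = self.momentum
            self.running_mean = (1 - m) * self.running_mean + m * mean
            self.running_var = (1 - m) * self.running_var + m * var
        else:
            mean, var = self.running_mean, self.running_var
        return [(v - mean) / (var + self.eps) ** 0.5 for v in batch]


bn = ToyBatchNorm1d()
print(bn([1.0, 2.0, 3.0]))          # roughly [-1.22, 0.0, 1.22]
print(round(bn.running_mean, 6))    # 0.2 = 0.9*0 + 0.1*2
bn.training = False                 # the flag that model.eval() switches
print(bn([2.0]))                    # uses the running statistics, so one sample is fine
```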

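with torch.no_grad() or @torch.no_grad()

Operations inside are not tracked by autograd, so no gradients are computed and you cannot backpropagate through them. This saves memory and time during validation and testing. It does not switch the model to evaluation mode; that is what model.eval() is for.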

.item()

Returns the value of a one-element tensor as a plain Python number. For details, see Fragments: The Usage of .item() in PyTorch.
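The training loop below counts correct predictions with np.argmax and adds up batch_loss.item(). By default, nn.CrossEntropyLoss returns the mean loss of a batch. To get a per-sample average, you therefore weight each batch by its size. The plain-Python sketch below does that bookkeeping. epoch_metrics is a hypothetical helper, not the post's code.

```python
def epoch_metrics(batches):
    """Average accuracy and loss over an epoch.

    Each batch is (logits, labels, mean_loss): logits is a list of per-class
    score lists, and mean_loss is the batch's mean loss, i.e. what
    batch_loss.item() returns with the default reduction='mean'.
    """
    correct, loss_sum, seen = 0, 0.0, 0
    for logits, labels, mean_loss in batches:
        preds = [max(range(len(row)), key=row.__getitem__) for row in logits]   # argmax
        correct += sum(p == t for p, t in zip(preds, labels))
        loss_sum += mean_loss * len(labels)   # undo the per-batch mean
        seen += len(labels)
    return correct / seen, loss_sum / seen


batches = [
    ([[2.0, 0.1], [0.3, 1.0]], [0, 0], 0.9),   # 1 of 2 correct
    ([[0.0, 5.0]], [1], 0.1),                  # 1 of 1 correct
]
acc, loss = epoch_metrics(batches)
print(round(acc, 4), round(loss, 4))   # 0.6667 0.6333
```

Alternatively, divide the sum of batch means by the number of batches. That is only slightly off when the last batch is smaller.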

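A problem I ran into: training sometimes fails with "CUDA out of memory", which means the GPU memory is full. Two things to try:
- Call torch.cuda.empty_cache(). It only releases cached memory that no tensor is using, so it helps less than the next option.
- Reduce batch_size.

As a last resort, you can kill the process in Task Manager. The downside is that you have to restart the program and run everything again.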
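A rough estimate shows why lowering batch_size helps: activation memory grows linearly with it. The sketch below only counts the float32 input batch and the output of the Classifier's first convolution ([batch, 64, 128, 128]). The real peak is several times higher. Autograd keeps many more activations for the backward pass, and the weights, gradients and Adam state also need memory. tensor_mib is a hypothetical helper.

```python
def tensor_mib(shape, bytes_per_element=4):
    """Size in MiB of a tensor with the given shape (float32 by default)."""
    count = 1
    for dim in shape:
        count *= dim
    return count * bytes_per_element / 2**20


for batch_size in (128, 64, 32):
    batch = tensor_mib((batch_size, 3, 128, 128))
    first_conv = tensor_mib((batch_size, 64, 128, 128))
    print(batch_size, batch, first_conv)
# 128 24.0 512.0
# 64 12.0 256.0
# 32 6.0 128.0
```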

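Another problem: my GPU is a laptop RTX 2060. The first three epochs ran at normal speed, but from the 4th epoch on training slowed down and settled at about 130 s per epoch. I didn't think a 2060 was that weak, so I searched and found this article: Summary and Analysis of Low GPU Utilization, Very Low CPU Utilization and Very Slow Model Training in Deep Learning with PyTorch and TensorFlow. I suspected the CPU. My laptop usually runs in silent mode, because the fans roar when I play games, and silent mode limits CPU performance. After I switched the laptop to performance mode, training speed was back to normal.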

```python
model = Classifier().cuda()
loss = nn.CrossEntropyLoss()  # classification task, so use the cross-entropy loss
optimizer = torch.optim.Adam(model.parameters(), lr=0.001)
num_epoch = 30

for epoch in range(num_epoch):
    epoch_start_time = time.time()
    train_acc = 0.0
    train_loss = 0.0
    val_acc = 0.0
    val_loss = 0.0

    model.train()  # training mode: BatchNorm uses batch statistics, Dropout is on
    for i, data in enumerate(train_loader):
        optimizer.zero_grad()  # reset the gradients of the model parameters to zero
        train_pred = model(data[0].cuda())  # class scores (logits); this actually calls the model's forward function
        batch_loss = loss(train_pred, data[1].cuda())  # prediction and label must both be on the CPU or both on the GPU
        batch_loss.backward()  # back propagation: compute the gradient of every parameter
        optimizer.step()  # let the optimizer update the parameters using the gradients
        train_acc += np.sum(np.argmax(train_pred.cpu().data.numpy(), axis=1) == data[1].numpy())
        train_loss += batch_loss.item()  # accumulate; .item() gets the value of a one-element tensor

    print(train_acc)  # number of correct training predictions in this epoch

    # Validation
    model.eval()
    with torch.no_grad():
        for i, data in enumerate(val_loader):
            val_pred = model(data[0].cuda())
            batch_loss = loss(val_pred, data[1].cuda())
            val_acc += np.sum(np.argmax(val_pred.cpu().data.numpy(), axis=1) == data[1].numpy())
            val_loss += batch_loss.item()

    # Print the results. Note: batch_loss is already a batch mean, so dividing the summed
    # losses by the number of samples gives values about batch_size times smaller than the mean loss
    print('[%03d/%03d] %2.2f sec(s) Train Acc: %3.6f Loss: %3.6f | Val Acc: %3.6f loss: %3.6f' %
          (epoch + 1, num_epoch, time.time() - epoch_start_time,
           train_acc / train_set.__len__(), train_loss / train_set.__len__(),
           val_acc / val_set.__len__(), val_loss / val_set.__len__()))

torch.cuda.empty_cache()
```

Output. This log was produced with train_loss = batch_loss.item(), which overwrote the running total instead of adding to it. As a result, the Train Loss column reflects only the last batch of each epoch, divided by the number of training images.

2455.0

[001/030] 24.32 sec(s) Train Acc: 0.248834 Loss: 0.000205 | Val Acc: 0.281050 loss: 0.015768

3481.0

[002/030] 25.70 sec(s) Train Acc: 0.352828 Loss: 0.000234 | Val Acc: 0.367638 loss: 0.014540

4006.0

[003/030] 25.83 sec(s) Train Acc: 0.406041 Loss: 0.000176 | Val Acc: 0.358309 loss: 0.014507

4594.0

[004/030] 25.80 sec(s) Train Acc: 0.465640 Loss: 0.000113 | Val Acc: 0.389213 loss: 0.014277

4818.0

[005/030] 25.92 sec(s) Train Acc: 0.488344 Loss: 0.000229 | Val Acc: 0.399417 loss: 0.014102

5138.0

[006/030] 25.89 sec(s) Train Acc: 0.520778 Loss: 0.000108 | Val Acc: 0.362974 loss: 0.015778

5342.0

[007/030] 25.86 sec(s) Train Acc: 0.541456 Loss: 0.000127 | Val Acc: 0.342857 loss: 0.018288

5543.0

[008/030] 25.91 sec(s) Train Acc: 0.561829 Loss: 0.000075 | Val Acc: 0.528863 loss: 0.011208

5916.0

[009/030] 25.89 sec(s) Train Acc: 0.599635 Loss: 0.000109 | Val Acc: 0.470554 loss: 0.012759

6026.0

[010/030] 25.98 sec(s) Train Acc: 0.610785 Loss: 0.000172 | Val Acc: 0.485423 loss: 0.012941

6277.0

[011/030] 26.01 sec(s) Train Acc: 0.636225 Loss: 0.000116 | Val Acc: 0.516910 loss: 0.011776

6396.0

[012/030] 25.93 sec(s) Train Acc: 0.648287 Loss: 0.000129 | Val Acc: 0.535860 loss: 0.012350

6451.0

[013/030] 25.88 sec(s) Train Acc: 0.653862 Loss: 0.000191 | Val Acc: 0.602624 loss: 0.009563

6787.0

[014/030] 26.04 sec(s) Train Acc: 0.687918 Loss: 0.000075 | Val Acc: 0.546356 loss: 0.011047

6952.0

[015/030] 26.04 sec(s) Train Acc: 0.704642 Loss: 0.000086 | Val Acc: 0.616035 loss: 0.009330

7088.0

[016/030] 26.14 sec(s) Train Acc: 0.718427 Loss: 0.000079 | Val Acc: 0.541983 loss: 0.012012

7158.0

[017/030] 25.91 sec(s) Train Acc: 0.725522 Loss: 0.000078 | Val Acc: 0.588921 loss: 0.010723

7317.0

[018/030] 25.96 sec(s) Train Acc: 0.741638 Loss: 0.000124 | Val Acc: 0.604665 loss: 0.010033

7372.0

[019/030] 25.94 sec(s) Train Acc: 0.747213 Loss: 0.000100 | Val Acc: 0.660350 loss: 0.008743

7532.0

[020/030] 26.01 sec(s) Train Acc: 0.763430 Loss: 0.000161 | Val Acc: 0.460350 loss: 0.019849

7461.0

[021/030] 25.93 sec(s) Train Acc: 0.756234 Loss: 0.000169 | Val Acc: 0.608746 loss: 0.010954

7492.0

[022/030] 25.95 sec(s) Train Acc: 0.759376 Loss: 0.000032 | Val Acc: 0.559767 loss: 0.011882

7932.0

[023/030] 25.93 sec(s) Train Acc: 0.803973 Loss: 0.000111 | Val Acc: 0.661808 loss: 0.008978

8065.0

[024/030] 26.03 sec(s) Train Acc: 0.817454 Loss: 0.000053 | Val Acc: 0.642857 loss: 0.009964

8211.0

[025/030] 26.11 sec(s) Train Acc: 0.832252 Loss: 0.000069 | Val Acc: 0.590379 loss: 0.012406

8168.0

[026/030] 26.02 sec(s) Train Acc: 0.827894 Loss: 0.000073 | Val Acc: 0.606706 loss: 0.011236

8251.0

[027/030] 26.12 sec(s) Train Acc: 0.836307 Loss: 0.000070 | Val Acc: 0.679592 loss: 0.009355

8382.0

[028/030] 26.03 sec(s) Train Acc: 0.849584 Loss: 0.000058 | Val Acc: 0.625073 loss: 0.011136

8519.0

[029/030] 26.03 sec(s) Train Acc: 0.863471 Loss: 0.000090 | Val Acc: 0.614577 loss: 0.012530

8422.0

[030/030] 26.03 sec(s) Train Acc: 0.853639 Loss: 0.000026 | Val Acc: 0.658892 loss: 0.010937

Merging the Training and Validation Sets

Once we have found good hyperparameters, we train on the training set and the validation set together. More data usually gives a better model.

```python
train_val_x = np.concatenate((train_x, val_x), axis=0)
train_val_y = np.concatenate((train_y, val_y), axis=0)
train_val_set = ImgDataset(train_val_x, train_val_y, train_transform)
train_val_loader = DataLoader(train_val_set, batch_size=batch_size, shuffle=True)

model_best = Classifier().cuda()
loss = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model_best.parameters(), lr=0.001)
num_epoch = 30

for epoch in range(num_epoch):
    epoch_start_time = time.time()
    train_acc = 0.0
    train_loss = 0.0

    model_best.train()
    for i, data in enumerate(train_val_loader):
        optimizer.zero_grad()
        train_pred = model_best(data[0].cuda())
        batch_loss = loss(train_pred, data[1].cuda())
        batch_loss.backward()
        optimizer.step()
        train_acc += np.sum(np.argmax(train_pred.cpu().data.numpy(), axis=1) == data[1].numpy())
        train_loss += batch_loss.item()  # .item() gets the value of a one-element tensor

    print('[%03d/%03d] %2.2f sec(s) Train Acc: %3.6f Loss: %3.6f' %
          (epoch + 1, num_epoch, time.time() - epoch_start_time,
           train_acc / train_val_set.__len__(), train_loss / train_val_set.__len__()))
```

Output

[001/030] 34.21 sec(s) Train Acc: 0.245713 Loss: 0.017318

[002/030] 31.55 sec(s) Train Acc: 0.375000 Loss: 0.014000

[003/030] 31.73 sec(s) Train Acc: 0.442013 Loss: 0.012501

[004/030] 31.76 sec(s) Train Acc: 0.490523 Loss: 0.011377

[005/030] 31.83 sec(s) Train Acc: 0.535725 Loss: 0.010500

[006/030] 31.94 sec(s) Train Acc: 0.572879 Loss: 0.009732

[007/030] 31.99 sec(s) Train Acc: 0.601835 Loss: 0.008936

[008/030] 32.26 sec(s) Train Acc: 0.623947 Loss: 0.008496

[009/030] 32.14 sec(s) Train Acc: 0.659221 Loss: 0.007754

[010/030] 32.80 sec(s) Train Acc: 0.675015 Loss: 0.007382

[011/030] 33.86 sec(s) Train Acc: 0.695924 Loss: 0.006893

[012/030] 33.37 sec(s) Train Acc: 0.714275 Loss: 0.006430

[013/030] 33.70 sec(s) Train Acc: 0.721570 Loss: 0.006217

[014/030] 33.10 sec(s) Train Acc: 0.738643 Loss: 0.005756

[015/030] 32.40 sec(s) Train Acc: 0.755641 Loss: 0.005431

[016/030] 34.19 sec(s) Train Acc: 0.768878 Loss: 0.005226

[017/030] 33.39 sec(s) Train Acc: 0.779558 Loss: 0.004895

[018/030] 33.78 sec(s) Train Acc: 0.796330 Loss: 0.004622

[019/030] 33.73 sec(s) Train Acc: 0.814004 Loss: 0.004258

[020/030] 33.77 sec(s) Train Acc: 0.820548 Loss: 0.004053

[021/030] 32.88 sec(s) Train Acc: 0.835966 Loss: 0.003707

[022/030] 32.53 sec(s) Train Acc: 0.840779 Loss: 0.003544

[023/030] 32.62 sec(s) Train Acc: 0.848676 Loss: 0.003353

[024/030] 32.86 sec(s) Train Acc: 0.857852 Loss: 0.003135

[025/030] 33.20 sec(s) Train Acc: 0.876053 Loss: 0.002795

[026/030] 32.79 sec(s) Train Acc: 0.883875 Loss: 0.002611

[027/030] 32.62 sec(s) Train Acc: 0.891847 Loss: 0.002404

[028/030] 33.24 sec(s) Train Acc: 0.895984 Loss: 0.002307

[029/030] 32.83 sec(s) Train Acc: 0.910951 Loss: 0.002022

[030/030] 32.60 sec(s) Train Acc: 0.902151 Loss: 0.002227

8 Testing

test_set = ImgDataset(test_x, transform = test_transform)

test_loader = DataLoader(test_set, batch_size = batch_size, shuffle = False)

model_best.eval()#不启动BatchNormalization 和 Dropout

prediction = []

with torch.no_grad():

for i , data in enumerate(test_loader):

test_pred = model_best(data.cuda())

# 预测值中概率最大的下标即为模型预测的食物标签

test_lable = np.argmax(test_pred.cpu().data.numpy(), axis = 1)

for y in test_lable:

prediction.append(y)

with open('precdict.csv','w') as f:

f.write('Id,Category\n')

for i, pred in enumerate(prediction):

f.write('{},{}\n'.format(i,pred))

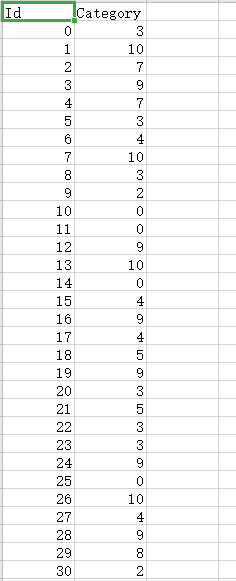

torch.cuda.empty_cache()结果