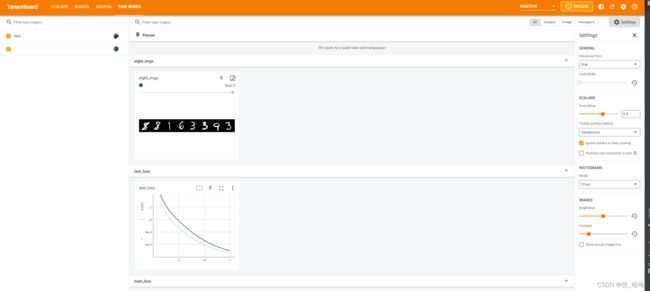

Visualizing a Handwritten-Digit Model in PyTorch with TensorBoard

文章目录

- 一、TensorBoard简介与安装

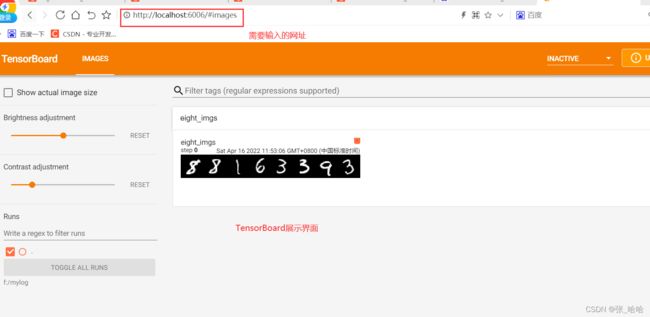

- 二、TensorBoard数据(图片)可视化

-

- 1.创建writer对象,指定数据存入磁盘路径

- 2.通过writer将数据(例如图片)写入到磁盘

- 3.打开tensorboard界面

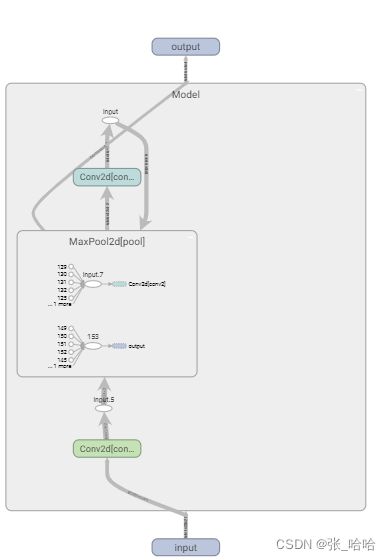

- 三、TensorBoard网络模型可视化

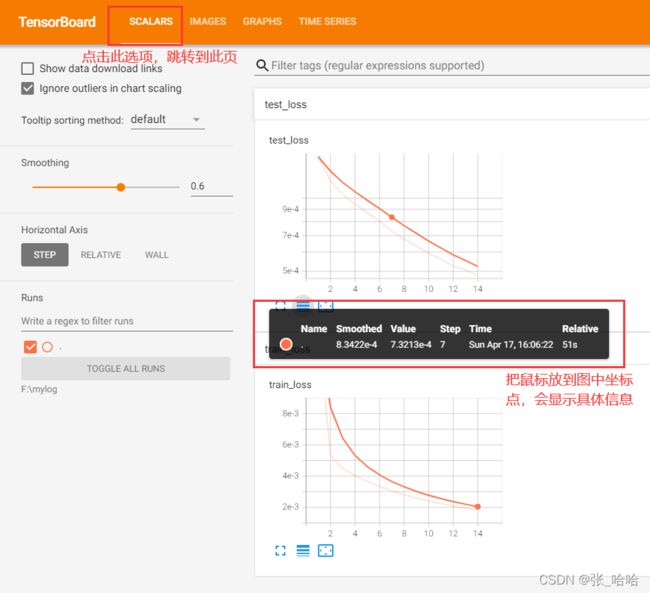

- 四、标量数据可视化(例如动态显示loss、acc值)

- 总结(附本文全部代码)

-

- 1.TensorBoard展示数据(图片)和网络模型代码

- 2.TensorBoard展示标量数据代码

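I. Introduction to TensorBoard and Installation

TensorBoard is the visualization tool that ships with TensorFlow. It makes the computation involved in training large neural networks easier to inspect, and it can display the images you plot during training, the network structure, and more.

It can be installed with

pip install tensorboard

or a similar command. If the download is slow, a mirror closer to you (for example, one hosted in mainland China) can be used instead.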
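A quick way to confirm that the installation worked is to check whether Python can locate the packages. The snippet below is a minimal sketch; the helper name `is_installed` is hypothetical and not part of PyTorch or TensorBoard.

```python
import importlib.util


def is_installed(package_name):
    """Return True if the top-level package can be found in this environment."""
    return importlib.util.find_spec(package_name) is not None


# Report on the two packages this article relies on.
for name in ("torch", "tensorboard"):
    status = "found" if is_installed(name) else "missing"
    print(f"{name}: {status}")
```

If either package is reported as missing, rerunning the pip command above in the same environment is the usual fix.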

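II. Visualizing Data (Images) with TensorBoard

Visualization takes two steps:

1. In code, write the data you want to visualize to disk.

2. On the command line, start tensorboard and point it at the location the data was written to.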
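The two steps can be sketched end to end as follows. This is only an illustration under stated assumptions: a temporary directory stands in for the real log directory so the snippet runs on any machine, and `add_text` is used merely as a small payload so that something is written.

```python
import tempfile
from pathlib import Path

from torch.utils.tensorboard import SummaryWriter

# Step 1: write something to disk from code.
# The temporary directory is an assumption made for this demo only.
log_dir = Path(tempfile.mkdtemp()) / "mylog"
writer = SummaryWriter(str(log_dir))
writer.add_text("note", "hello TensorBoard")
writer.close()

# The writer stores its data in event files inside the log directory.
event_files = list(log_dir.glob("events.out.tfevents.*"))
print("event files written:", len(event_files))

# Step 2 happens in a terminal, not in Python:
print(f"tensorboard --logdir {log_dir}")
```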

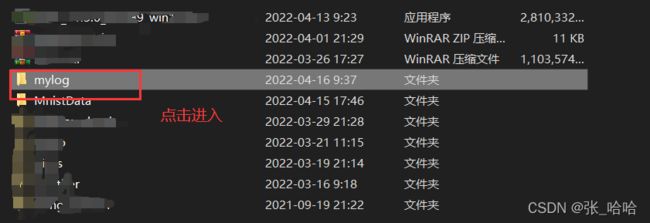

1. Create a writer object and specify where the data is stored on disk

Example code:

import matplotlib.pyplot as plt

import torch.utils.data

import torchvision.utils

import torch.nn as nn

import torch.nn.functional as F

from torch.utils.tensorboard import SummaryWriter

from torchvision import datasets

from torchvision.transforms import transforms

writer = SummaryWriter(r'F:\mylog')

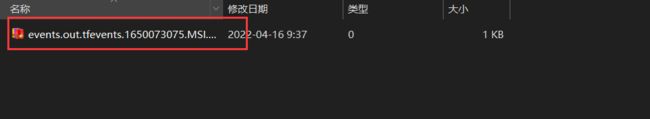

2. Use the writer to write data (e.g. images) to disk

Example code:

import matplotlib.pyplot as plt

import numpy as np

import torch.nn as nn

import torch.nn.functional as F

import torch.utils.data

import torchvision.utils

from torch.utils.tensorboard import SummaryWriter

from torchvision import datasets, transforms

writer = SummaryWriter(r'F:\mylog')

transformation = transforms.Compose([

    transforms.ToTensor(),  # 1. converts to a Tensor 2. scales values to [0, 1] 3. moves the channel to the first dimension

])

train_ds = datasets.MNIST(

    'F:/MnistData/',  # where the data is stored

    train=True,

    transform=transformation,

    download=True  # download if not already present

)

print(train_ds)

test_ds = datasets.MNIST(

    'F:/MnistData/',

    train=False,  # note: False here

    transform=transformation,

    download=True

)

print(test_ds)

train_dl = torch.utils.data.DataLoader(train_ds, batch_size=64, shuffle=True)

test_dl = torch.utils.data.DataLoader(test_ds, batch_size=256)  # the test set needs less computation, so the batch can be larger

print(train_dl)

print(test_dl)

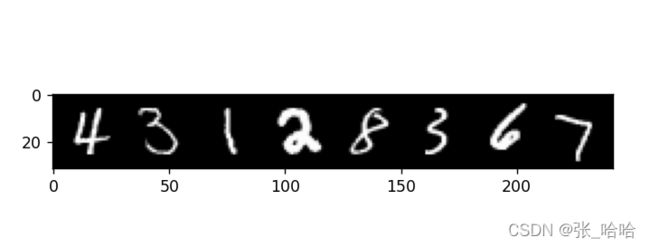

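# ------------ the code above loads the data ------------

# ------------ display the images ------------

imgs, labels = next(iter(train_dl))

# combine 8 images into one grid

img_grid = torchvision.utils.make_grid(imgs[:8])

npimg = img_grid.permute(1, 2, 0).numpy()  # HWC layout, as matplotlib expects

plt.imshow(npimg)

plt.show()

writer.add_image('eight_imgs', img_grid)  # first argument: tag name; second: the image, in the CHW layout add_image expects by default

writer.close()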

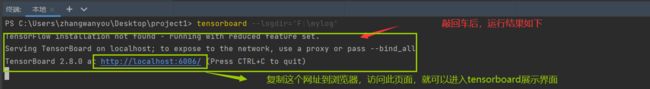
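The two image layouts involved here are easy to mix up: `make_grid` returns a channel-first (C, H, W) tensor, which is what `add_image` expects by default, while `plt.imshow` wants channel-last (H, W, C). The NumPy-only sketch below mimics the shapes. The (3, 32, 242) grid shape assumes `make_grid`'s default padding of 2 and eight 28×28 images in a single row, and the array is filled with zeros purely for illustration.

```python
import numpy as np

# Stand-in for the tensor returned by make_grid(imgs[:8]):
# 3 channels, height 28 + 2*2 = 32, width 8*(28 + 2) + 2 = 242.
grid_chw = np.zeros((3, 32, 242), dtype=np.float32)

# Equivalent of img_grid.permute(1, 2, 0): move the channel axis to the end.
grid_hwc = np.transpose(grid_chw, (1, 2, 0))

print("for add_image (CHW):", grid_chw.shape)
print("for plt.imshow (HWC):", grid_hwc.shape)
```

If only the channel-last array is at hand, `add_image` also accepts `dataformats='HWC'`.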

3. Open the TensorBoard interface
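Once the data is on disk, TensorBoard is started from a terminal with the `tensorboard` command, with `--logdir` pointing at the directory that was passed to `SummaryWriter`. By default the dashboard is then served at http://localhost:6006. The sketch below only assembles that command line in Python so it can be printed or handed to `subprocess`; the helper name `tensorboard_command` is hypothetical.

```python
def tensorboard_command(logdir, port=6006):
    """Build the argument list for launching TensorBoard on `logdir`."""
    return ["tensorboard", f"--logdir={logdir}", f"--port={port}"]


cmd = tensorboard_command(r"F:\mylog")
print(" ".join(cmd))  # tensorboard --logdir=F:\mylog --port=6006

# To start the server from Python instead of a terminal, one option is:
#   import subprocess; subprocess.run(cmd)
```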

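III. Visualizing a Network Model with TensorBoard

Steps:

(1) Create or import the network model you want to display

(2) Write the network model to disk

(3) Close the writer

Example code: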

# Create a model

class Model(nn.Module):

    def __init__(self):

        super().__init__()

        self.conv1 = nn.Conv2d(1, 6, 5)

        self.pool = nn.MaxPool2d((2, 2))

        self.conv2 = nn.Conv2d(6, 16, 5)

        self.liner_1 = nn.Linear(16*4*4, 256)

        self.liner_2 = nn.Linear(256, 10)

    def forward(self, input):

        x = F.relu(self.conv1(input))

        x = self.pool(x)

        x = F.relu(self.conv2(x))

        x = self.pool(x)

        x = x.view(-1, 16*4*4)

        x = F.relu(self.liner_1(x))

        x = self.liner_2(x)

        return x

model = Model()

writer.add_graph(model, imgs)  # first argument: your model; second: a sample input to feed through it

writer.close()
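The `16*4*4` in the first linear layer is the flattened size of the feature map after the two convolution and pooling stages. The arithmetic can be checked with a few lines of plain Python. The helper names below are hypothetical, and the sketch assumes stride 1 and no padding for the convolutions and a 2×2 pool with stride 2, which are the PyTorch defaults for the layers above.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial size after a convolution (floor division, as in PyTorch)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2):
    """Spatial size after max pooling with stride equal to the kernel size."""
    return size // kernel


size = 28                            # MNIST images are 28x28
size = pool_out(conv_out(size, 5))   # conv1 (5x5) -> 24, pool -> 12
size = pool_out(conv_out(size, 5))   # conv2 (5x5) -> 8,  pool -> 4
flat_features = 16 * size * size     # conv2 produces 16 channels

print(size, flat_features)  # 4 256
```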

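IV. Visualizing Scalar Data (e.g. live loss and accuracy)

This uses the add_scalar() method:

First argument: the tag (label) of the value being logged

Second argument: the value itself

Third argument: the step the value belongs to, here the epoch; epoch+1 is typically used so that counting starts from 1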

# Example

writer.add_scalar('train_loss', epoch_loss, epoch+1)

writer.add_scalar('test_loss', epoch_test_loss, epoch+1)
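To see the call in isolation, the sketch below logs a made-up, steadily decreasing loss for five epochs. The values, the tag name `demo/train_loss`, and the temporary log directory are all assumptions for illustration; they are not results from the model in this article.

```python
import os
import tempfile

from torch.utils.tensorboard import SummaryWriter

log_dir = tempfile.mkdtemp()  # throwaway directory for the demo
writer = SummaryWriter(log_dir)

fake_losses = [1.0 / (epoch + 1) for epoch in range(5)]  # invented values
for epoch, loss in enumerate(fake_losses):
    # tag, scalar value, global step (epoch + 1 so the x-axis starts at 1)
    writer.add_scalar("demo/train_loss", loss, epoch + 1)

writer.close()
print("logged", len(fake_losses), "points; files:", os.listdir(log_dir))
```

Pointing `tensorboard --logdir` at the printed directory should show a single curve with five points under the Scalars tab.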

Summary (with the full code from this article)

1. Code for displaying data (images) and the network model in TensorBoard

import matplotlib.pyplot as plt

import torch.utils.data

import torchvision.utils

import torch.nn as nn

import torch.nn.functional as F

from torch.utils.tensorboard import SummaryWriter

from torchvision import datasets

from torchvision.transforms import transforms

writer = SummaryWriter(r'F:\mylog')

transformation = transforms.Compose([

    transforms.ToTensor(),  # 1. converts to a Tensor 2. scales values to [0, 1] 3. moves the channel to the first dimension

])

train_ds = datasets.MNIST(

    'F:/MnistData/',  # where the data is stored

    train=True,

    transform=transformation,

    download=True  # download if not already present

)

print(train_ds)

test_ds = datasets.MNIST(

    'F:/MnistData/',

    train=False,  # note: False here

    transform=transformation,

    download=True

)

print(test_ds)

train_dl = torch.utils.data.DataLoader(train_ds, batch_size=64, shuffle=True)

test_dl = torch.utils.data.DataLoader(test_ds, batch_size=256)  # the test set needs less computation, so the batch can be larger

print(train_dl)

print(test_dl)

imgs, labels = next(iter(train_dl))

# combine 8 images into one grid

img_grid = torchvision.utils.make_grid(imgs[:8])

npimg = img_grid.permute(1, 2, 0).numpy()

plt.imshow(npimg)

plt.show()

writer.add_image('eight_imgs', img_grid)  # pass the torchvision result directly to TensorBoard

# Create a model

class Model(nn.Module):

    def __init__(self):

        super().__init__()

        self.conv1 = nn.Conv2d(1, 6, 5)

        self.pool = nn.MaxPool2d((2, 2))

        self.conv2 = nn.Conv2d(6, 16, 5)

        self.liner_1 = nn.Linear(16*4*4, 256)

        self.liner_2 = nn.Linear(256, 10)

    def forward(self, input):

        x = F.relu(self.conv1(input))

        x = self.pool(x)

        x = F.relu(self.conv2(x))

        x = self.pool(x)

        x = x.view(-1, 16*4*4)

        x = F.relu(self.liner_1(x))

        x = self.liner_2(x)

        return x

model = Model()

writer.add_graph(model, imgs)  # first argument: your model; second: a sample input to feed through it

writer.close()

2. Code for displaying scalar data in TensorBoard

import torch.nn as nn

import torch.nn.functional as F

import torch.utils.data

from torch.utils.tensorboard import SummaryWriter

from torchvision import datasets, transforms

writer = SummaryWriter(r'F:\mylog')

transformation = transforms.Compose([

    transforms.ToTensor(),  # 1. converts to a Tensor 2. scales values to [0, 1] 3. moves the channel to the first dimension

])

train_ds = datasets.MNIST(

    'F:/MnistData/',  # where the data is stored

    train=True,

    transform=transformation,

    download=True  # download if not already present

)

test_ds = datasets.MNIST(

    'F:/MnistData/',

    train=False,  # note: False here

    transform=transformation,

    download=True

)

train_dl = torch.utils.data.DataLoader(train_ds, batch_size=64, shuffle=True)

test_dl = torch.utils.data.DataLoader(test_ds, batch_size=256)  # the test set needs less computation, so the batch can be larger

# Create the model

class Model(nn.Module):

    def __init__(self):

        super().__init__()

        self.liner_1 = nn.Linear(28*28, 120)  # input feature dimension is 28*28

        self.liner_2 = nn.Linear(120, 84)

        self.liner_3 = nn.Linear(84, 10)  # 10 classes, so 10 outputs

    def forward(self, input):

        x = input.view(-1, 28*28)

        x = F.relu(self.liner_1(x))

        x = F.relu(self.liner_2(x))

        x = self.liner_3(x)

        return x

model = Model()

print(model)

# Define the loss function

loss_fn = torch.nn.CrossEntropyLoss()

# Create the optimizer

optim = torch.optim.Adam(model.parameters(), lr=0.0001)

# fit function: takes the model and the data (train_dl, test_dl), trains the model for one epoch, and returns the loss and accuracy

def fit(epoch, model, trainloader, testloader):

    correct = 0

    total = 0

    running_loss = 0

    for x, y in trainloader:

        y_pred = model(x)

        loss = loss_fn(y_pred, y)

        optim.zero_grad()

        loss.backward()

        optim.step()

        with torch.no_grad():

            y_pred = torch.argmax(y_pred, dim=1)

            correct += (y_pred == y).sum().item()

            total += y.size(0)

            running_loss += loss.item() * y.size(0)  # loss is the batch mean, so weight it by the batch size

    epoch_acc = correct / total

    epoch_loss = running_loss / total  # mean loss per training sample

    # write to disk for TensorBoard

    writer.add_scalar('train_loss', epoch_loss, epoch+1)

    test_correct = 0

    test_total = 0

    test_running_loss = 0

    with torch.no_grad():

        for x, y in testloader:

            y_pred = model(x)

            loss = loss_fn(y_pred, y)

            y_pred = torch.argmax(y_pred, dim=1)

            test_correct += (y_pred == y).sum().item()

            test_total += y.size(0)

            test_running_loss += loss.item() * y.size(0)

    epoch_test_acc = test_correct / test_total  # use the test counters, not the training ones

    epoch_test_loss = test_running_loss / test_total  # mean loss per test sample

    # write to disk for TensorBoard

    writer.add_scalar('test_loss', epoch_test_loss, epoch+1)

    print('epoch:', epoch,

          'loss:', round(epoch_loss, 3),

          'accuracy:', round(epoch_acc, 3),

          'test_loss:', round(epoch_test_loss, 3),

          'test_accuracy:', round(epoch_test_acc, 3)

          )

    return epoch_loss, epoch_acc, epoch_test_loss, epoch_test_acc

# Run the training loop

train_loss = []

train_acc = []

test_loss = []

test_acc = []

epochs = 15

for epoch in range(epochs):

    epoch_loss, epoch_acc, epoch_test_loss, epoch_test_acc = fit(epoch, model, train_dl, test_dl)

    train_loss.append(epoch_loss)

    train_acc.append(epoch_acc)

    test_loss.append(epoch_test_loss)

    test_acc.append(epoch_test_acc)

writer.close()
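One detail worth checking in any loop of this kind is how per-batch losses are combined into an epoch loss. `CrossEntropyLoss` returns the mean over the batch by default, so weighting each batch loss by its batch size and dividing by the number of samples gives the per-sample average, even when the last batch is smaller than the rest. The plain-Python sketch below uses invented batch losses and sizes to show the difference; the helper name `epoch_mean_loss` is hypothetical.

```python
def epoch_mean_loss(batch_losses, batch_sizes):
    """Per-sample mean loss computed from per-batch mean losses."""
    total = sum(batch_sizes)
    weighted = sum(loss * n for loss, n in zip(batch_losses, batch_sizes))
    return weighted / total


# Invented numbers: two full batches of 64 and a final batch of 32.
batch_losses = [0.5, 0.25, 1.0]
batch_sizes = [64, 64, 32]

weighted_mean = epoch_mean_loss(batch_losses, batch_sizes)
unweighted_mean = sum(batch_losses) / len(batch_losses)

print("weighted by batch size:", weighted_mean)
print("plain mean of batch means:", unweighted_mean)
```

The two numbers differ because the small final batch counts as much as a full batch in the plain mean.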