《PyTorch深度学习实战》学习小结

前言

PyTorch是Facebook发布的一款非常具有个性的深度学习框架,它和Tensorflow,Keras,Theano等其他深度学习框架都不同,它是动态计算图模式,其应用模型支持在运行过程中根据运行参数动态改变,而其他几种框架都是静态计算图模式,其模型在运行之前就已经确定。

PyTorch安装

简易安装

pip install numpy

pip install scipy

pip install http://download.pytorch.org/whl/cu75/torch-0.1.12.post2-cp27-none-linux_x86_64.whl

指定版本安装

基础变量

Tensor 基本的数据结构

- pytorch 中的数据都是封装成 Tensor 来引用的,

- Tensor 实际上就类似于 numpy 中的数组,两者可以自由转换。

import torch

x = torch.Tensor(3,4)

print("x Tensor: ",x)

Variable 基本的变量结构

- Variable变量包含了数据和数据的导数两个部分

- x.data 是数据,这里 x.data 就是 Tensor。x.grad 是计算过程中动态变化的导数。

import torch

from torch.autograd import Variable

x=Variable(torch.Tensor(2,2))

print("x variable: ",x)

求导

- 数学上求导简单来说就是求取方程式相对于输入参数的变化率

- 求导的作用是用导数对神经网络的权重参数进行调整

- Pytorch 中为求导提供了专门的包,包名叫 autograd。如果用 autograd.Variable 来定义参数,则 Variable 自动定义了两个变量,data 代表原始权重数据;而 grad 代表求导后的数据,也就是梯度。每次迭代过程就用这个 grad 对权重数据进行修正。

import torch

from torch.autograd import Variable

x = Variable(torch.ones(2, 2), requires_grad=True)

print(x)

y=x+2

print(y)

z = y * y * 3

out = z.mean()

print(z, out)

out.backward() # backward 就是求导的意思

print(x.grad)

z=(x+2)*(x+2)*3,它的导数是 3*(x+2)/2,

当 x=1 时导数的值就是 3*(1+2)/2=4.5

如何用导数来修正梯度

权值更新方法:learning_rate 是学习速率,多数时候就叫做 lr,是学习步长,用步长 * 导数就是每次权重修正的 delta 值,lr 越大表示学习的速度越快,相应的精度就会降低。

weight = weight + learning_rate * gradient

learning_rate = 0.01

for f in model.parameters():

f.data.sub_(f.grad.data * learning_rate)

损失函数

- 损失函数是指用于计算标签值和预测值之间差异的函数,在机器学习过程中,有多种损失函数可供选择,典型的有距离向量,绝对值向量等。

- pytorch 中定义了很多类型的预定义损失函数,

- 也支持自己自定义loss function

import torch

from torch.autograd import Variable

import torch.nn as nn

import torch.nn.functional as F

sample = Variable(torch.ones(2,2))

a=torch.Tensor(2,2)

a[0,0]=0

a[0,1]=1

a[1,0]=2

a[1,1]=3

target = Variable (a)

sample 的值为:[[1,1],[1,1]]

target 的值为:[[0,1],[2,3]]

nn.L1Loss

criterion = nn.L1Loss()

loss = criterion(sample, target)

print(loss)

loss = (|0-1|+|1-1|+|2-1|+|3-1|) / 4 = 1

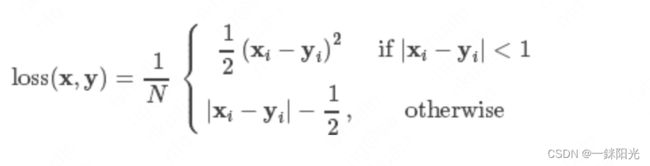

nn.SmoothL1Loss

criterion = nn.SmoothL1Loss()

loss = criterion(sample, target)

print(loss)

loss = 0.625

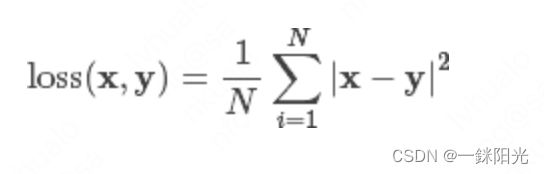

nn.MSELoss

criterion = nn.MSELoss()

loss = criterion(sample, target)

print(loss)

loss = 1.5

nn.BCELoss

criterion = nn.BCELoss()

loss = criterion(sample, target)

print(loss)

loss = -13.8155

nn.CrossEntropyLoss

优化器

TODO

- PyTorch的动态计算图模式指的是什么,与其他框架的静态计算图模式有什么差异?