Sklearn学习记录之CountVectorizer

在文本处理过程中,先挖坑,有时间再填。

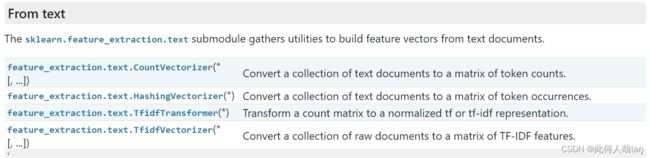

在sklearn.feature_extraction.text中有四个用来从文本中提出特征向量的子模块,其中以CountVectorizer为基础模块。其模块的主要函数是fit 和transformer。以下截图是具体的源码。

Type: CountVectorizer

String form: CountVectorizer()

File: /opt/conda/lib/python3.9/site-packages/sklearn/feature_extraction/text.py

Source:

class CountVectorizer(_VectorizerMixin, BaseEstimator):

r"""Convert a collection of text documents to a matrix of token counts

This implementation produces a sparse representation of the counts using

scipy.sparse.csr_matrix.

If you do not provide an a-priori dictionary and you do not use an analyzer

that does some kind of feature selection then the number of features will

be equal to the vocabulary size found by analyzing the data.

Read more in the :ref:`User Guide `. File: /opt/conda/lib/python3.9/site-packages/scipy/sparse/csr.py

Source:

class csr_matrix(_cs_matrix):

"""

Compressed Sparse Row matrix

This can be instantiated in several ways:

csr_matrix(D)

with a dense matrix or rank-2 ndarray D

csr_matrix(S)

with another sparse matrix S (equivalent to S.tocsr())

csr_matrix((M, N), [dtype])

to construct an empty matrix with shape (M, N)

dtype is optional, defaulting to dtype='d'.

csr_matrix((data, (row_ind, col_ind)), [shape=(M, N)])

where ``data``, ``row_ind`` and ``col_ind`` satisfy the

relationship ``a[row_ind[k], col_ind[k]] = data[k]``.

csr_matrix((data, indices, indptr), [shape=(M, N)])

is the standard CSR representation where the column indices for

row i are stored in ``indices[indptr[i]:indptr[i+1]]`` and their

corresponding values are stored in ``data[indptr[i]:indptr[i+1]]``.

If the shape parameter is not supplied, the matrix dimensions

are inferred from the index arrays.

Attributes

----------

dtype : dtype

Data type of the matrix

shape : 2-tuple

Shape of the matrix

ndim : int

Number of dimensions (this is always 2)

nnz

Number of stored values, including explicit zeros

data

CSR format data array of the matrix

indices

CSR format index array of the matrix

indptr

CSR format index pointer array of the matrix

has_sorted_indices

Whether indices are sorted

Notes

-----

Sparse matrices can be used in arithmetic operations: they support

addition, subtraction, multiplication, division, and matrix power.

Advantages of the CSR format

- efficient arithmetic operations CSR + CSR, CSR * CSR, etc.

- efficient row slicing

- fast matrix vector products

Disadvantages of the CSR format

- slow column slicing operations (consider CSC)

- changes to the sparsity structure are expensive (consider LIL or DOK)

Examples def transform(self, raw_X):

"""Transform a sequence of instances to a scipy.sparse matrix.

Parameters

----------

raw_X : iterable over iterable over raw features, length = n_samples

Samples. Each sample must be iterable an (e.g., a list or tuple)

containing/generating feature names (and optionally values, see

the input_type constructor argument) which will be hashed.

raw_X need not support the len function, so it can be the result

of a generator; n_samples is determined on the fly.

Returns

-------

X : sparse matrix of shape (n_samples, n_features)

Feature matrix, for use with estimators or further transformers.

"""

self._validate_params(self.n_features, self.input_type)

raw_X = iter(raw_X)

if self.input_type == "dict":

raw_X = (_iteritems(d) for d in raw_X)

elif self.input_type == "string":

raw_X = (((f, 1) for f in x) for x in raw_X)

indices, indptr, values = _hashing_transform(

raw_X, self.n_features, self.dtype, self.alternate_sign, seed=0

)

n_samples = indptr.shape[0] - 1

if n_samples == 0:

raise ValueError("Cannot vectorize empty sequence.")

X = sp.csr_matrix(

(values, indices, indptr),

dtype=self.dtype,

shape=(n_samples, self.n_features),

)

X.sum_duplicates() # also sorts the indices

return X def fit(self, X, y=None):

"""No-op: this transformer is stateless.

Parameters

----------

X : ndarray of shape [n_samples, n_features]

Training data.

y : Ignored

Not used, present for API consistency by convention.

Returns

-------

self : object

HashingVectorizer instance.

"""

# triggers a parameter validation

if isinstance(X, str):

raise ValueError(

"Iterable over raw text documents expected, string object received."

)

self._warn_for_unused_params()

self._validate_params()

self._get_hasher().fit(X, y=y)

return self

def transform(self, X):

"""Transform a sequence of documents to a document-term matrix.

Parameters

----------

X : iterable over raw text documents, length = n_samples

Samples. Each sample must be a text document (either bytes or

unicode strings, file name or file object depending on the

constructor argument) which will be tokenized and hashed.

Returns

-------

X : sparse matrix of shape (n_samples, n_features)

Document-term matrix.

"""

if isinstance(X, str):

raise ValueError(

"Iterable over raw text documents expected, string object received."

)

self._validate_params()

analyzer = self.build_analyzer()

X = self._get_hasher().transform(analyzer(doc) for doc in X)

if self.binary:

X.data.fill(1)

if self.norm is not None:

X = normalize(X, norm=self.norm, copy=False)

return X

def fit_transform(self, X, y=None):

"""Transform a sequence of documents to a document-term matrix.

Parameters

----------

X : iterable over raw text documents, length = n_samples

Samples. Each sample must be a text document (either bytes or

unicode strings, file name or file object depending on the

constructor argument) which will be tokenized and hashed.

y : any

Ignored. This parameter exists only for compatibility with

sklearn.pipeline.Pipeline.

Returns

-------

X : sparse matrix of shape (n_samples, n_features)

Document-term matrix.

"""

return self.fit(X, y).transform(X)参考:

1、【sklearn文本特征提取】词袋模型/稀疏表示/停用词/TF-IDF模型_Yanqiang_CS的博客-CSDN博客

2、sklearn——CountVectorizer详解_九点澡堂子的博客-CSDN博客_countvectorizer

3、文本预处理:词袋模型(bag of words,BOW)、TF-IDF_天泽28的博客-CSDN博客_词袋模型和tfidf