python pca主成分

Data is the fuel of big data era, and we can get insightful information from data. However, tons of data in a high number of dimensions may cover valuable knowledge. Therefore, data mining and feature engineering become essential skills to uncover valuable information underneath the data.

数据是大数据时代的推动力,我们可以从数据中获得有见地的信息。 但是,大量维度的大量数据可能涵盖了宝贵的知识。 因此,数据挖掘和特征工程成为发现数据下有价值信息的基本技能。

1.什么是PCA (1. What is PCA)

For the dimensionality reduction, Principle Component Analysis (PCA) is the most popular algorithm. PCA is an algorithm encoding the original features into a compact representation and we can drop the “unimportant” features while still retaining most of useful information. PCA does not simply select the useful features and discard the others from original data set, the principal components resulting from PCA are linear combinations of original features and these components are good alternative to represent the original data.

对于降维,主成分分析(PCA)是最流行的算法。 PCA是一种将原始特征编码为紧凑表示形式的算法,我们可以删除“不重要”的特征,同时仍保留大多数有用的信息。 PCA不会简单地选择有用的功能并从原始数据集中丢弃其他功能,PCA产生的主要成分是原始特征的线性组合,而这些成分是表示原始数据的良好选择。

The reason why PCA becomes a popular algorithm in data science field is that PCA help us:

PCA之所以成为数据科学领域流行的算法,是因为PCA帮助我们:

1. Dimensionality reduction and speed up the model training time of a learning algorithm.

1.降维并加快学习算法的模型训练时间。

2. Use new combined features representation can enhance model’s performance.

2.使用新的组合特征表示法可以增强模型的性能。

3. Reduced dimensionality can be easily visualized.

3.降低的尺寸可以很容易地看到。

2. PCA的本质 (2. Essence of PCA)

The principle of PCA algorithm is to create a set of new features based on raw data, and rank the order of variance of new feature, finally create a set of principle components. Why the variance is considered as the most important index, it is because more variance in feature values can provide better predicting ability for machine learning model. For example, predicting car price with two features: color and mileage. If all the cars have same color, but with different mileage, then we can not predict car’s price with color (feature without variance). We can only rely on mileage to predict car’s price, the more mileage, the lower car’s price.

PCA算法的原理是基于原始数据创建一组新特征,并对新特征的方差排序,最后创建一组主成分。 为什么将方差视为最重要的指标,这是因为特征值的更多方差可以为机器学习模型提供更好的预测能力。 例如,预测汽车价格具有两个功能:颜色和里程。 如果所有汽车都具有相同的颜色,但里程数不同,那么我们就无法用颜色预测汽车的价格(功能无差异)。 我们只能依靠里程来预测汽车的价格,里程越多,汽车的价格越低。

Fig 1 showed us the basic principle of PCA. The basic principle for PCA is to remain as much as possible of the variance, and it also means to discard as little as possible. After combining the two original features (x1 and x2), new feature of U becomes the first principle component of dataset, and V is the second principle component. The principal component transform the original data into a new dimension space, in this space, U explains most of the data variance and V explains small part of data variance.

图1向我们展示了PCA的基本原理。 PCA的基本原理是保持尽可能多的差异,这也意味着丢弃尽可能少的差异。 在将两个原始特征(x1和x2)组合之后,U的新特征成为数据集的第一个主成分,而V是第二个主成分。 主成分将原始数据转换为新的维空间,在该空间中,U解释大多数数据方差,V解释小部分数据方差。

3. PCA的实施 (3. Implement of PCA)

From above description, we have a high level understanding about PCA algorithm. It is time to dive into the detailed implementation of PCA.

通过以上描述,我们对PCA算法有较高的了解。 现在该深入探讨PCA的详细实施了。

- Organize all independent features into matrix X, and centralize each feature by minus the mean of the feature so then make each feature with zero mean. If different features on different scales, standardize the feature by diving the standard deviation of feature. 将所有独立特征整理到矩阵X中,并通过减去特征均值来集中每个特征,然后使每个特征均值为零。 如果不同比例上的要素不同,请通过减除要素的标准偏差来标准化要素。

2. Secondly, try to obtain the new vectors (for example, U and V in fig 1). The specific procedure to implement the calculation is compute covariance matrix:

2.其次,尝试获得新的向量(例如,图1中的U和V)。 实现计算的具体过程是计算协方差矩阵:

3. Thirdly, solve the eigen decomposition problem. The major idea for eigen decomposition is to solve the equation:

3.第三,解决本征分解问题。 特征分解的主要思想是求解方程:

C is covariance matrix; x is eigenvector, is corresponding is eigenvalue to explained variance.

C是协方差矩阵; x是特征向量,对应于特征值以解释方差。

4. Rank the eigenvalues obtained from the step 3, select the number of eigenvectors that correspond to the number of largest eigenvalues.

4.排列从步骤3获得的特征值,选择与最大特征值数量相对应的特征向量数量。

5. Transform the original dataset onto the selected the number of new feature subspace.

5.将原始数据集转换为选定数量的新要素子空间。

In reality, the implementation of PCA need to compute the full covariance matrix which require extensive usage of memory. There is another beautiful algorithm can achieve the same purpose as PCA based on raw dataset without calculating covariance matrix. The new algorithm is Singular Value Decomposition (SVD). When implementing PCA eigen decomposition, a squared covariance matrix is required, however, SVD can decompose an arbitrary matrix with m rows and n columns into a set of vectors. In addition it is possible to implement truncated SVD, this makes the computing procedure of much faster and numerically stable, and actually the popular machine learning library scikit-learn use SVD for PCA package. As for singular decomposition:

实际上,PCA的实现需要计算需要大量使用内存的完整协方差矩阵。 在不计算协方差矩阵的情况下,还有另一种漂亮的算法可以基于原始数据集达到与PCA相同的目的。 新算法是奇异值分解(SVD)。 在执行PCA本征分解时,需要平方协方差矩阵,但是,SVD可以将m行和n列的任意矩阵分解为一组向量。 另外,可以实现截断的SVD,这使得计算过程更加快捷且数值稳定,实际上流行的机器学习库scikit-learn将SVD用于PCA软件包。 至于奇异分解:

Orthogonal matrices U composed by orthonormal eigenvectors of XX^T, and orthogonal matrices composed by orthonormal eigenvectors of X^TX, diagonal matrix is the root of positive eigenvalues of or (two matrices should have the same positive eigenvalues).

由XX ^ T的正交特征向量组成的正交矩阵U和由X ^ TX的正交特征向量组成的正交矩阵,对角矩阵是或的正特征值的根(两个矩阵应具有相同的正特征值)。

According to the equation

根据等式

the following equation can be attained

可以得到以下等式

Based on above equation, principal component scores can be computed.

基于以上等式,可以计算主成分得分。

4.案例研究 (4. Case Study)

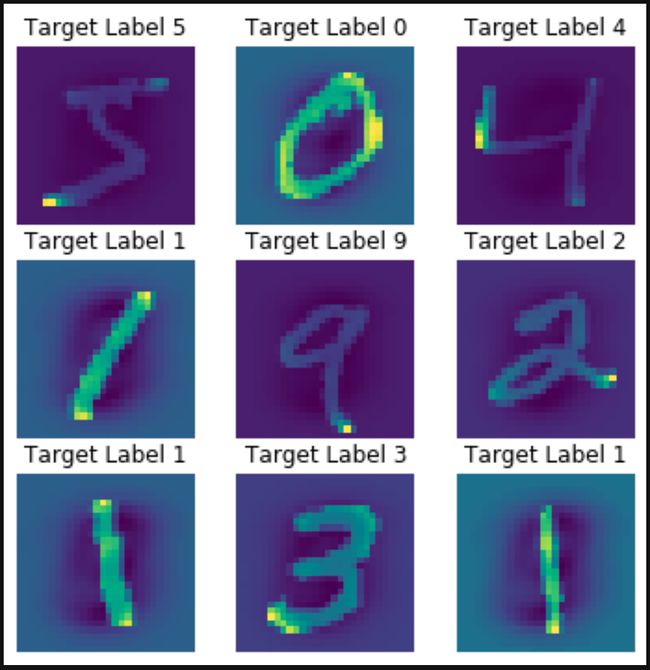

MNIST data set is used for this project to demonstrate the implement of PCA, meanwhile to illustrate the effects of PCA on high dimension data sets. The data set contains 70000 images of handwritten digits. For each image, the data contains 28x28 = 784 features which represent grayscale level.

该项目使用MNIST数据集来演示PCA的实现,同时说明PCA对高维数据集的影响。 数据集包含70000个手写数字图像。 对于每个图像,数据包含代表灰度级的28x28 = 784个特征。

- Import all packages 导入所有包

import pandas as pd

from sklearn.datasets import fetch_openml

from sklearn.preprocessing import StandardScaler

from sklearn.model_selection import train_test_split

from sklearn.decomposition import PCA2. Load and understand data set

2.加载并了解数据集

mnist = fetch_openml('mnist_784')

for key in mnist:

print (key)data

target

frame

feature_names

target_names

DESCR

details

categories

url‘data’, ‘target’ et al. nine different keys are included in the MNIST data set. ‘data’, ‘target’ are independent variables and dependent variable.

“数据”,“目标”等。 MNIST数据集中包含九种不同的密钥。 “数据”,“目标”是自变量和因变量。

3. Data structure

3.数据结构

X, y = mnist['data'], mnist['target']

print(X.shape, y.shape)(70000, 784) (70000,)4. Illustrate the first nine data point

4.说明前九个数据点

import matplotlib.pyplot as pltfig = plt.figure( figsize=(6,6) )

for i in range(9):

image = X[i]

image_pixels = image.reshape(28,28)

plt.subplot(3,3,i+1)

plt.imshow(image_pixels)

plt.title(f'Target Label {y[i]}', fontsize=12)

plt.axis('off')5. Standardize the data set

5.标准化数据集

PCA is sensitive to the magnitude of data set, it is a good habit to scale the features of data set before implementing PCA algorithm.

PCA对数据集的大小敏感,这是在实施PCA算法之前扩展数据集特征的好习惯。

scaler_train = StandardScaler()

scaler_train.fit(X)

X = scaler_train.transform(X)6. PCA decomposition

6. PCA分解

pca = PCA(0.95)

pca.fit(X)

X_pca_reduceddimension = pca.transform(X)

pca.n_components_332X_pca_reduceddimension.shape(70000, 332)This decomposition showed PCA is a powerful tool to properly reduce high dimension data set, and it showed that although we keep 95% original data set information, the data dimension reduced around 58% (1- 332/784).

这种分解表明PCA是适当减少高维数据集的强大工具,并且表明尽管我们保留了95%的原始数据集信息,但数据维数却减少了58%(1-332 / 784)。

7. Compare image which keeps different original information

7.比较保留不同原始信息的图像

The most interesting thing is that PCA has an inverse function, and through this function, the compressed representation can approximately inverse back to original high dimension data set(784 features). We will compare to keep 95%, 85% and 75% original information image to raw image.

最有趣的是PCA具有逆函数,通过此函数,压缩表示可以近似逆回到原始的高维数据集(784个特征)。 我们将比较保留95%,85%和75%的原始信息图像与原始图像。

import matplotlib.pyplot as plt

fig = plt.figure(figsize=(15,9))

m = 0

for i in range(3):

for j in [1, 0.95, 0.85, 0.75]:

m += 1

pca = PCA(j)

pca.fit(X)

X_pca = pca.transform(X)

components = pca.n_components_

X_approx = pca.inverse_transform(X_pca)

plt.subplot(3,4,m)

if j == 1:

image = X[i]

plt.title(f'Original Data; Components 784')

else:

image = X_approx[i]

plt.title(f'Variance {j}; Components {components}')

image_pixels = image.reshape(28,28)

plt.axis('off')

plt.imshow(image_pixels)5.总结 (5. Summary)

This post mainly explained the principal of PCA, meanwhile demonstrated the implements and application of PCA. PCA is one powerful tool to reduce high dimension data set while still keep the majority of data information. If we can properly utilize PCA, it will save us computing resources and time.

本文主要介绍了PCA的原理,同时演示了PCA的实现和应用。 PCA是一种功能强大的工具,可以减少高维数据集,同时仍保留大多数数据信息。 如果我们能够正确利用PCA,它将节省我们的计算资源和时间。

翻译自: https://medium.com/@songxia.sophia/principle-components-analysis-pca-essence-and-case-study-with-python-43556234d321

python pca主成分