mmdetect2d训练自己的数据集(一)—— labelme数据处理

前言

近期在学习mmdetect,总体来说mmlab这个框架感觉上手难度还挺大的,自己也是结合b站各位up主(up主名称:我是土堆、OneShotLove、比飞鸟贵重的多_HKL)以及知乎mmlab官方边看边学,真的是保姆级教程,强烈推荐。但是为了防止以后忘记,记录一下,如果有不对的地方,欢迎大家批评指正。

mmdetect2d训练自己的数据集(二)——模型训练

一、 数据集准备

数据集标注使用的是labelme,关于labelme的使用教程网上有很多,也都很详细,就不过多赘述了。最终获得的文件应该是有一个.png(或.jpg)以及对应的.json文件(标注内容),图片以及对应的annotation名称要一样。如下图所示:

如果标注的时候没有注意的话,可以写段代码重命名一下:

import os

import re

# 运行时将该文件放到和json文件同一目录下,或者直接修改dir_path路径

dir_path = "./"

# 定义正则化规则

formula = re.compile('"imagePath": "(.+?png)",')

for file in os.listdir(dir_path):

# 判定是否是json文件

if os.path.splitext(file)[-1] != '.json':

continue

# 读取json文件

with open(os.path.join(dir_path, file), encoding='utf-8') as f:

content = f.read()

# 根据正则规则找到json文件里的imagePath

imagePath = formula.findall(content)[0]

print('imagePath ', imagePath)

new_content = content.replace(imagePath, os.path.splitext(file)[0] + '.png')

#重命名并保存

with open(os.path.join(dir_path, file), 'w', encoding='utf-8') as nf:

nf.write(new_content)

最终目的是确保在数据处理前,对应json文件的名称能和图片一致。

二、 coco数据集结构形式:

coco数据集对应的文件夹结构树如下所示:

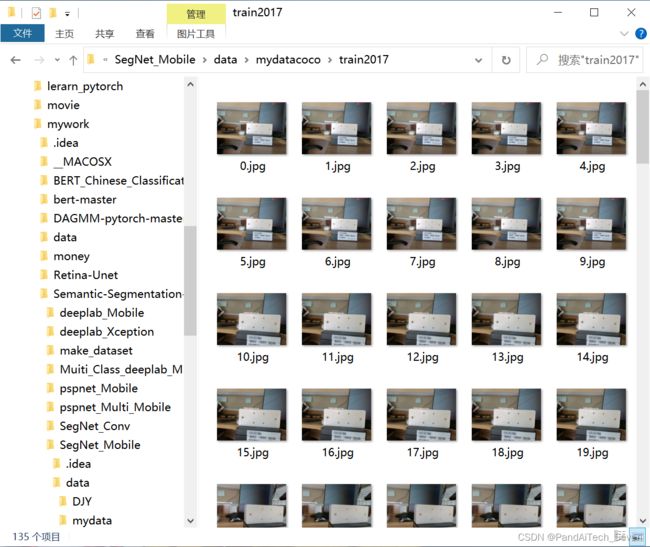

其中,train2017里面堆存训练集图片:

val2017里存放验证集图像:

annotation文件夹里存放对应的标签的json文件:

三、 生成标准的coco数据集格式

labelme生成的json文件是每个图片对应一个,但是coco数据集里是集合起来的,因此需要将其写入同一个json文件。另外,标注时并没有区分训练集和验证集,所以在生成数据的时候需要划分出来,按照自己需要的比例去调节。代码如下:

import os

import json

import numpy as np

import glob

import cv2

from sklearn.model_selection import train_test_split

from tqdm import tqdm

from labelme import utils

# 背景值为0,因此标签对应的mask值应从1开始,根据自己需要识别的种类来。

classname_to_id = {

"hole": 1,

}

class Lableme2CoCo:

def __init__(self):

self.images = []

self.annotations = []

self.categories = []

self.img_id = 0

self.ann_id = 0

# 定义写入json文件的函数

def save_coco_json(self, instance, save_path):

json.dump(instance, open(save_path, 'w', encoding='utf-8'), ensure_ascii=False, indent=1)

# 由json文件构建COCO

def to_coco(self, json_path_list):

self._init_categories()

for json_path in json_path_list:

obj = self.read_jsonfile(json_path)

self.images.append(self._image(obj, json_path))

shapes = obj['shapes']

for shape in shapes:

annotation = self._annotation(shape)

self.annotations.append(annotation)

self.ann_id += 1

self.img_id += 1

instance = {}

instance['info'] = 'spytensor created'

instance['license'] = ['license']

instance['images'] = self.images

instance['annotations'] = self.annotations

instance['categories'] = self.categories

return instance

# 构建类别

def _init_categories(self):

for k, v in classname_to_id.items():

category = {}

category['id'] = v

category['name'] = k

self.categories.append(category)

# 构建COCO的image字段

def _image(self, obj, path):

image = {}

img_x = utils.img_b64_to_arr(obj['imageData'])

h, w = img_x.shape[:-1]

image['height'] = h

image['width'] = w

image['id'] = self.img_id

image['file_name'] = os.path.basename(path).replace(".json", ".jpg")

return image

# 构建COCO的annotation字段

def _annotation(self, shape):

# print('shape', shape)

label = shape['label']

points = shape['points']

annotation = {}

annotation['id'] = self.ann_id

annotation['image_id'] = self.img_id

annotation['category_id'] = int(classname_to_id[label])

annotation['segmentation'] = [np.asarray(points).flatten().tolist()]

annotation['bbox'] = self._get_box(points)

annotation['iscrowd'] = 0

annotation['area'] = 1.0

return annotation

# 读取json文件,返回一个json对象

def read_jsonfile(self, path):

with open(path, "r", encoding='utf-8') as f:

return json.load(f)

# COCO的格式: [x1,y1,w,h] 对应COCO的bbox格式

def _get_box(self, points):

min_x = min_y = np.inf

max_x = max_y = 0

for x, y in points:

min_x = min(min_x, x)

min_y = min(min_y, y)

max_x = max(max_x, x)

max_y = max(max_y, y)

return [min_x, min_y, max_x - min_x, max_y - min_y]

if __name__ == '__main__':

# 训练前准备

# labelme文件路径

labelme_path = r"F:\mywork\Semantic-Segmentation-master\SegNet_Mobile\data\mydata"

# 储存文件路径

saved_coco_path = r"F:\mywork\Semantic-Segmentation-master\SegNet_Mobile\data\mydatacoco"

# annotation文件路径

annotation_path = os.path.join(saved_coco_path, 'annotations')

# train_img文件路径

train_img_path = os.path.join(saved_coco_path, 'train2017')

# val_img文件路径

val_img_path = os.path.join(saved_coco_path, 'val2017')

print('reading...')

# 创建文件夹

if not os.path.exists(annotation_path):

os.makedirs(annotation_path)

if not os.path.exists(train_img_path):

os.makedirs(train_img_path)

if not os.path.exists(val_img_path):

os.makedirs(val_img_path)

# 获取images目录下所有的json文件列表

print(labelme_path + "/*.json")

json_list_path = glob.glob(labelme_path + "/*.json")

print('json_list_path: ', len(json_list_path))

# 划分训练集和验证集,这里没有区分val2017和tran2017目录,所有图片都放在images目录下

# 验证集和训练集比例为1:9,可以根据自己需要更改

train_path, val_path = train_test_split(json_list_path, test_size=0.1, train_size=0.9)

print("train_n:", len(train_path), 'val_n:', len(val_path))

# 把训练集转化为COCO的json格式

# 将训练集对应annotation的json文件写入同一个

l2c_train = Lableme2CoCo()

train_instance = l2c_train.to_coco(train_path)

l2c_train.save_coco_json(train_instance, '%s/annotations/instances_train2017.json' % saved_coco_path)

# 将训练对应的图片放入train2017文件夹

for file in tqdm(train_path):

# 根据划分的json文件来索引训练集

img_name = file.replace('json', 'png')

temp_img = cv2.imread(img_name)

try:

# 分离文件名与路径

(filepath, tempfilename) = os.path.split(img_name)

# 将文件改为jpg格式,因为coco数据集中都是使用jpg格式

tmp_new_name = tempfilename.replace('png', 'jpg')

# 获取新文件的保存路径

train_img = os.path.join(train_img_path, tmp_new_name)

# 写入图片

cv2.imwrite(train_img, temp_img)

except Exception as e:

print(e)

print('Wrong Image:', img_name)

continue

# 把验证集转化为COCO的json格式

# 将验证集对应annotation的json文件写入同一个

l2c_val = Lableme2CoCo()

val_instance = l2c_val.to_coco(val_path)

l2c_val.save_coco_json(val_instance, '%s/annotations/instances_val2017.json' % saved_coco_path)

# 将验证集对应的图片放入val2017文件夹

for file in tqdm(val_path):

# shutil.copy(file.replace("json", "jpg"), "%scoco/images/val2017/" % saved_coco_path)

img_name = file.replace('json', 'png')

temp_img = cv2.imread(img_name)

try:

# 分离文件名与路径

(filepath, tempfilename) = os.path.split(img_name)

# 将文件改为jpg格式,因为coco数据集中都是使用jpg格式

tmp_new_name = tempfilename.replace('png', 'jpg')

# 获取新文件的保存路径

val_img = os.path.join(val_img_path, tmp_new_name)

# 写入图片

cv2.imwrite(val_img, temp_img)

except Exception as e:

print(e)

print('Wrong Image:', img_name)

continue

至此,数据处理结束。