[mobilenetv2 pytorch->onnx] Exporting a PyTorch classification model to ONNX and validating it

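Contents

- 1 Preparation
  - 1.1 Introduction to the MobileNetV2 network
  - 1.2 Training MobileNetV2 on a classification dataset
- 2 Why convert
- 3 Installing dependencies
- 4 Conversion process
- 5 Validating the exported ONNX model
  - 5.1 onnx.checker check
  - 5.2 np.testing.assert_allclose check
  - 5.3 Eliminating the warning
  - 5.4 Testing a single image with the ONNX model
- 6 The combined code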

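1 Preparation

1.1 Introduction to the MobileNetV2 network

See the reference link "MobileNetV2 network structure explained, with its computation cost and parameter count".

1.2 Training MobileNetV2 on a classification dataset

See the reference link "Training MobileNetV2 on a custom classification dataset".

2 Why convert

To deploy the model on a development board, it usually has to be converted to ONNX format first.

3 Installing dependencies

The other dependencies are usually already in place from training the network. The packages needed for the conversion are installed with: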

pip install onnxruntime

pip install onnx
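To confirm that both packages installed correctly, a quick check can print their versions. This is a minimal sketch that uses only the standard library; the helper name installed_version is made up for illustration and is not part of onnx or onnxruntime.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of `package`, or None if it is missing.

    `installed_version` is a hypothetical helper, not an onnx/onnxruntime API.
    """
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Report the two packages this article installs.
    for name in ("onnx", "onnxruntime"):
        print(f"{name}: {installed_version(name) or 'not installed'}")
```

If either line reports "not installed", rerun the matching pip command in the same Python environment that will run the conversion script.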

4 Conversion process

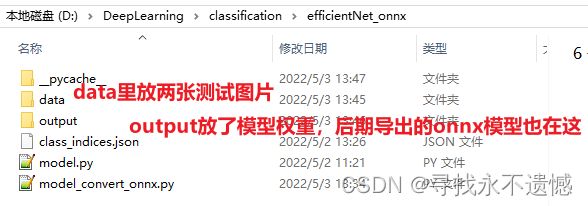

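Very little needs to be prepared: the network definition (model.py) and the trained weights (./output/model-29.pth).

model_convert_onnx.py is listed below; running it produces the converted ONNX model: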

    import onnxruntime
    import torch
    import numpy as np
    import onnx

    # Import the network definition
    from model import mobilenet_v2 as create_model


    def model_convert_onnx(model, input_shape, output_path):
        # The dummy input must live on the same device as the model
        device = next(model.parameters()).device
        dummy_input = torch.randn(1, 3, input_shape[0], input_shape[1], device=device)
        input_names = ["input1"]
        output_names = ["output1"]

        torch.onnx.export(
            model,
            dummy_input,
            output_path,
            verbose=True,
            keep_initializers_as_inputs=True,
            opset_version=11,  # usually 10 or 11
            input_names=input_names,
            output_names=output_names,
        )


    if __name__ == '__main__':
        # create model
        device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
        model = create_model(num_classes=5).to(device)

        # load model weights
        model_weight_path = "./output/model-29.pth"
        model.load_state_dict(torch.load(model_weight_path, map_location=device))

        # Switch to eval() mode so that BatchNorm uses its running statistics
        # and Dropout is disabled during export
        model.eval()

        # Input size of the exported ONNX model; must match the PyTorch model's input size
        input_shape = (224, 224)

        # Where to write the ONNX model
        output_path = './output/mobilenetv2.onnx'

        # ------------------------------------------#
        # Convert the .pth model to ONNX; this call can be
        # commented out once the conversion is done
        # ------------------------------------------#
        model_convert_onnx(model, input_shape, output_path)
        print("model convert onnx finish.")

5 Validating the exported ONNX model

5.1 onnx.checker check

This step usually passes without trouble (if it fails for you, forget I said that).

    import onnx

    # Path written by the conversion step
    output_path = './output/mobilenetv2.onnx'

    # -------------------------#
    # First check
    # -------------------------#
    onnx_model = onnx.load(output_path)
    onnx.checker.check_model(onnx_model)
    print("onnx model check_1 finish.")

5.2 np.testing.assert_allclose check

The code comments explain each step.
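np.testing.assert_allclose raises an AssertionError unless |actual - desired| <= atol + rtol * |desired| holds for every element, which makes it a convenient way to confirm that the PyTorch and ONNX Runtime outputs agree up to floating-point noise. The sketch below runs that check on plain NumPy arrays. The helper name outputs_match and the tolerances rtol=1e-3 and atol=1e-5 are assumptions for illustration, not values taken from this article; the logits are the sample output shown in the listing's comments.

```python
import numpy as np


def outputs_match(torch_out, ort_out, rtol=1e-3, atol=1e-5):
    """Return True if the two output arrays agree within tolerance.

    Hypothetical helper: in the real check, `torch_out` would be the PyTorch
    output converted to NumPy and `ort_out` the first ONNX Runtime output.
    The tolerances are illustrative assumptions.
    """
    try:
        np.testing.assert_allclose(torch_out, ort_out, rtol=rtol, atol=atol)
    except AssertionError:
        return False
    return True


# Sample logits for a 5-class model.
reference = np.array([[-0.5728, 0.1695, -0.3256, 1.1357, -0.4081]], dtype=np.float32)
close = reference + np.float32(1e-6)  # tiny floating-point noise
far = reference + np.float32(0.1)     # a genuine mismatch

print(outputs_match(reference, close))  # True
print(outputs_match(reference, far))    # False
```

Calling np.testing.assert_allclose directly, without the try/except wrapper, is the usual choice inside a validation script, because the raised error reports how many elements differ and by how much.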

    import onnxruntime
    import torch
    import numpy as np
    import onnx

    # Import the network definition
    from model import mobilenet_v2 as create_model


    def check_onnx_2(model, ort_session, input_shape, device):
        # -----------------------------------#
        # Build a model input at the right resolution
        # -----------------------------------#
        x = torch.randn(size=(1, 3, input_shape[0], input_shape[1]), dtype=torch.float32)

        # -----------------------------------#
        # PyTorch inference
        # -----------------------------------#
        with torch.no_grad():
            torch_out = model(x.to(device))
        # print(torch_out)  # tensor([[-0.5728, 0.1695, -0.3256, 1.1357, -0.4081]])
        # print(type(torch_out))  # <class 'torch.Tensor'>
        # ort_outs is a list that holds a NumPy array
        # print(type(ort_outs[0]))  # <class 'numpy.ndarray'>

5.3 Eliminating the warning

[W:onnxruntime:, graph.cc:1237 onnxruntime::Graph::Graph] Initializer 893 appears in graph inputs and will not be treated as constant value/weight. This may prevent some of the graph optimizations, like const folding. Move it out of graph inputs if there is no need to override it, by either re-generating the model with latest exporter/converter or with the tool onnxruntime/tools/python/remove_initializer_from_input.py.

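Cause: the export above passed keep_initializers_as_inputs=True, so every weight is also listed as a graph input.

Solution:

In the folder that contains the exported ONNX model, create remove_initializer_from_input.py with the following content: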

    import onnx
    import argparse


    def get_args():
        parser = argparse.ArgumentParser()
        parser.add_argument("--input", required=True, help="input model")
        parser.add_argument("--output", required=True, help="output model")
        args = parser.parse_args()
        return args


    def remove_initializer_from_input():
        args = get_args()

        model = onnx.load(args.input)
        if model.ir_version < 4:
            print(
                'Model with ir_version below 4 requires to include initializer in graph input'
            )
            return

        # Map each graph input name to its entry
        inputs = model.graph.input
        name_to_input = {}
        for graph_input in inputs:
            name_to_input[graph_input.name] = graph_input

        # Drop every graph input that is actually an initializer (a weight)
        for initializer in model.graph.initializer:
            if initializer.name in name_to_input:
                inputs.remove(name_to_input[initializer.name])

        onnx.save(model, args.output)


    if __name__ == '__main__':
        remove_initializer_from_input()

Open a terminal and run python remove_initializer_from_input.py --input mobilenetv2.onnx --output mobilenetv2.onnx. Because both paths are the same, the model file is overwritten in place.

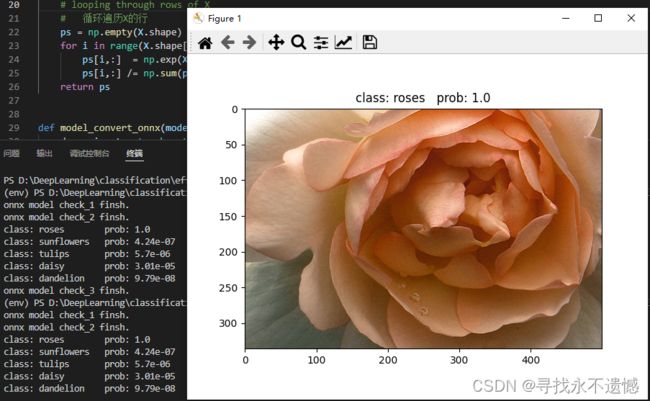

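5.4 Testing a single image with the ONNX model

The code for testing a single image with the ONNX model lives in predict_origin_onnx.py. Note: the test code must no longer depend on packages such as torch or torchvision.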
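With torchvision off limits, the preprocessing has to be done by hand in NumPy. As a warm-up to the full script, the sketch below runs the same normalization steps on a synthetic image and checks the resulting shape and dtype; ONNX Runtime expects a float32 array for a model exported from float32 weights. The function name preprocess and the random test image are assumptions for illustration, and the resize step is left out; the mean and standard deviation are the values the script uses.

```python
import numpy as np

MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def preprocess(img_hwc_uint8):
    """Turn an HxWx3 uint8 RGB image into a 1x3xHxW float32 batch.

    Hypothetical helper mirroring the script's steps; the input is assumed
    to be at the target resolution already, so no resize happens here.
    """
    x = img_hwc_uint8.astype(np.float32) / 255.0  # scale to [0, 1]
    x = (x - MEAN) / STD                          # per-channel normalization
    x = np.transpose(x, (2, 0, 1))                # HWC -> CHW
    return np.expand_dims(x, 0)                   # add the batch dimension


# Synthetic 224x224 RGB image standing in for a real photo.
rng = np.random.default_rng(0)
fake_img = rng.integers(0, 256, size=(224, 224, 3), dtype=np.uint8)

batch = preprocess(fake_img)
print(batch.shape, batch.dtype)  # (1, 3, 224, 224) float32
```

Keeping MEAN and STD as float32 arrays avoids a silent promotion to float64, which ONNX Runtime would reject for a float32 input.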

    import onnxruntime
    import numpy as np
    import onnx
    import os
    from PIL import Image
    import matplotlib.pyplot as plt
    import json


    def softmax_2D(X):
        """
        Apply softmax to each row of a 2D NumPy array.
        X: np.array. Probably should be floats.
        return: 2D array
        """
        # Loop through the rows of X
        ps = np.empty(X.shape)
        for i in range(X.shape[0]):
            ps[i, :] = np.exp(X[i, :])
            ps[i, :] /= np.sum(ps[i, :])
        return ps


    def check_onnx_3(ort_session, img, json_path, input_shape):
        # ----------------------------------------------------------------#
        # Image preprocessing: resize, scale to [0, 1], subtract the mean,
        # divide by the standard deviation, HWC to CHW, add the batch dimension
        # ----------------------------------------------------------------#
        img = img.convert('RGB')
        img_resize = img.resize(input_shape, Image.BICUBIC)  # PIL.Image
        # A PIL.Image cannot be divided by 255 directly; convert it to an array first
        img_resize = np.array(img_resize, dtype='float32') / 255.0
        img_resize -= [0.485, 0.456, 0.406]
        img_resize /= [0.229, 0.224, 0.225]
        img_CHW = np.transpose(img_resize, (2, 0, 1))

        # ---------------------------------------------------------#
        # Add the batch dimension; the network cannot predict without it
        # ---------------------------------------------------------#
        img = np.expand_dims(img_CHW, 0)

        # -----------------------------------#
        # class_indict maps class indices to names for display
        # -----------------------------------#
        with open(json_path, "r") as f:
            class_indict = json.load(f)

        # -----------------------------------#
        # ONNX inference
        # Build the input dict; note that img is a NumPy array here
        # -----------------------------------#
        ort_inputs = {ort_session.get_inputs()[0].name: img}
        ort_outs = ort_session.run(None, ort_inputs)  # run inference
        # print(ort_outs)  # [array([[-4.290639 , -2.267056 , 7.666328 , -1.4162455 , 0.57391334]], dtype=float32)]

        # -----------------------------------#
        # Convert to probabilities with softmax
        # softmax_2D works row by row, one sample per row
        # -----------------------------------#
        predict_probability = softmax_2D(ort_outs[0])
        # print(predict_probability)  # array([[0.1, 0.2, 0.3, 0.3, 0.1]])

        # -----------------------------------#
        # argmax gives the index of the highest probability,
        # which is the predicted class index
        # -----------------------------------#
        predict_cla = np.argmax(predict_probability, axis=-1)
        # print(predict_cla)  # array([2])

        print_res = "class: {} prob: {:.3}".format(class_indict[str(predict_cla[0])],
                                                   predict_probability[0][predict_cla[0]])
        plt.title(print_res)
        for i in range(len(predict_probability[0])):
            print("class: {:10} prob: {:.3}".format(class_indict[str(i)],
                                                    predict_probability[0][i]))
        plt.show()


    if __name__ == '__main__':
        output_path = './output/mobilenetv2.onnx'
        # Must match the input size used when exporting the model
        input_shape = (224, 224)

        # -------------------------#
        # Third check
        # -------------------------#
        # load image
        img_path = "./data/rose.jpg"
        assert os.path.exists(img_path), "file: '{}' does not exist.".format(img_path)
        img = Image.open(img_path)
        plt.imshow(img)

        # read class_indict
        json_path = './class_indices.json'

        # Load the ONNX model
        ort_session_2 = onnxruntime.InferenceSession(output_path)
        check_onnx_3(ort_session_2, img, json_path, input_shape)
        print("onnx model check_3 finish.")
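softmax_2D above exponentiates the raw logits, which can overflow to inf when a logit is large. A common alternative, sketched here as an assumption rather than as part of the original script, subtracts each row's maximum before exponentiating; the result is mathematically unchanged and the Python loop disappears. The name softmax_rows is made up for illustration.

```python
import numpy as np


def softmax_rows(logits):
    """Row-wise softmax of a 2D array, shifted by the row maximum for stability."""
    shifted = logits - np.max(logits, axis=1, keepdims=True)
    exps = np.exp(shifted)
    return exps / np.sum(exps, axis=1, keepdims=True)


# Sample logits copied from the commented output in the script.
logits = np.array([[-4.290639, -2.267056, 7.666328, -1.4162455, 0.57391334]],
                  dtype=np.float32)
probs = softmax_rows(logits)
print(probs.sum(axis=1))      # each row sums to 1, up to rounding
print(int(np.argmax(probs)))  # 2

# Large logits would overflow a naive np.exp but stay finite here.
print(bool(np.isfinite(softmax_rows(np.array([[1000.0, 1001.0]]))).all()))  # True
```

For the five-class outputs in this article either version gives the same class, so the swap only matters if the logits can grow large.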

6 The combined code

model_convert_onnx.py is as follows:

    import onnxruntime
    import torch
    import numpy as np
    import onnx

    # Import the network definition
    from model import mobilenet_v2 as create_model


    def model_convert_onnx(model, input_shape, output_path):
        # The dummy input must live on the same device as the model
        device = next(model.parameters()).device
        dummy_input = torch.randn(1, 3, input_shape[0], input_shape[1], device=device)
        input_names = ["input1"]
        output_names = ["output1"]

        torch.onnx.export(
            model,
            dummy_input,
            output_path,
            verbose=True,
            keep_initializers_as_inputs=True,
            opset_version=11,  # usually 10 or 11
            input_names=input_names,
            output_names=output_names,
        )


    def check_onnx_2(model, ort_session, input_shape, device):
        # -----------------------------------#
        # Build a model input at the right resolution
        # -----------------------------------#
        x = torch.randn(size=(1, 3, input_shape[0], input_shape[1]), dtype=torch.float32)

        # -----------------------------------#
        # PyTorch inference
        # -----------------------------------#
        with torch.no_grad():
            torch_out = model(x.to(device))
        # print(torch_out)  # tensor([[-0.5728, 0.1695, -0.3256, 1.1357, -0.4081]])
        # print(type(torch_out))  # <class 'torch.Tensor'>
        # ort_outs is a list that holds a NumPy array
        # print(type(ort_outs[0]))  # <class 'numpy.ndarray'>