MapReduce: Partitioning


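Pattern Description

The partitioning pattern moves records into categories (partitions), but it does not care about the order of the records within them.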

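Intent

The goal of this pattern is to split the records of a dataset into distinct, smaller datasets, each of which holds similar records.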

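Applicability

The main requirement for using this pattern is that you must know in advance how many partitions there will be. For example, if you partition a week's data by day, there will be seven partitions.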
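To make the "known number of partitions" requirement concrete, here is a minimal sketch of the day-of-week example in plain Java. It assumes dates are available as java.time.LocalDate. DayOfWeekPartitions and partitionFor are hypothetical names, not part of Hadoop.

```java
import java.time.DayOfWeek;
import java.time.LocalDate;

// Hypothetical helper: maps a date to one of seven partitions (Monday = 0 ... Sunday = 6).
final class DayOfWeekPartitions {
    // The partition count is fixed before the job runs, as the pattern requires.
    static final int NUM_PARTITIONS = DayOfWeek.values().length; // 7

    private DayOfWeekPartitions() {}

    static int partitionFor(LocalDate date) {
        // DayOfWeek.getValue() returns 1 (Monday) through 7 (Sunday).
        return date.getDayOfWeek().getValue() - 1;
    }
}
```

In a Hadoop job, the same arithmetic would live inside getPartition, and the driver would call job.setNumReduceTasks(7) so that every partition has a reducer.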

Typical use cases:

- Pruning partitions by continuous value

- Pruning partitions by category

- Sharding

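Performance Analysis

The main performance concern with this pattern is whether each resulting partition holds a similar number of records. A single partition might contain roughly 50% of the entire dataset. If the pattern is applied naively, all of that partition's data is sent to a single reducer, and processing slows down significantly.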
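One way to spot this kind of skew before running the full job is to sample records, compute each record's partition, and look at the largest partition's share. The following is a sketch under that assumption. PartitionSkew and its methods are hypothetical names. With n partitions, a perfectly balanced split gives each partition a share of 1/n.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch: estimate partition skew from a sample of partition numbers.
final class PartitionSkew {
    private PartitionSkew() {}

    // Counts how many sampled records fall into each partition.
    static Map<Integer, Long> countPerPartition(int[] sampledPartitions) {
        Map<Integer, Long> counts = new TreeMap<>();
        for (int p : sampledPartitions) {
            counts.merge(p, 1L, Long::sum);
        }
        return counts;
    }

    // Fraction of all sampled records held by the largest partition.
    static double largestShare(Map<Integer, Long> counts) {
        long total = 0;
        long max = 0;
        for (long c : counts.values()) {
            total += c;
            max = Math.max(max, c);
        }
        return total == 0 ? 0.0 : (double) max / total;
    }
}
```

A share close to 0.5, as in the scenario above, means one reducer would process about half of the data.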

Problem Description

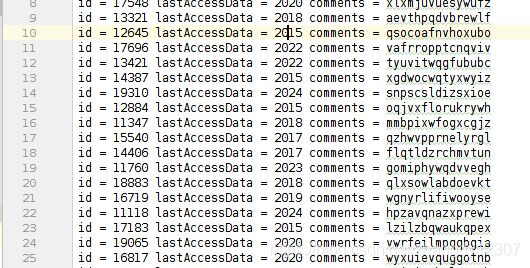

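Given a set of user records, partition them by the year of each user's most recent access date, with one partition per year.

Sample Input

The dataset is generated with the following code: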

```java
import java.io.BufferedWriter;
import java.io.File;
import java.io.FileWriter;
import java.io.IOException;
import java.util.Random;

public class create {
    private static final Random RANDOM = new Random();

    // Returns a random lowercase string of the given length.
    public static String getRandomChar(int length) {
        StringBuilder buffer = new StringBuilder();
        for (int i = 0; i < length; i++) {
            buffer.append((char) ('a' + RANDOM.nextInt(26)));
        }
        return buffer.toString();
    }

    public static void main(String[] args) throws IOException {
        File file = new File("input/file.txt");
        file.getParentFile().mkdirs(); // create input/ if it does not exist
        // Appends 1000 lines of the form "id = <id> lastAccessData = <year> comments = <text>".
        try (BufferedWriter bw = new BufferedWriter(new FileWriter(file, true))) {
            for (int i = 0; i < 1000; i++) {
                int id = (int) (Math.random() * 10000 + 10000);   // 10000..19999
                int lastData = (int) (Math.random() * 10 + 2015); // 2015..2024
                bw.write("id = " + id + " lastAccessData = " + lastData
                        + " comments = " + getRandomChar(15) + '\n');
            }
        }
    }
}
```

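Mapper Stage

The mapper extracts the last access date from each input record. It emits the year as the key and the entire record as the value.

The mapper code is as follows: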

```java
public static class LastAccessDateMapper extends Mapper<Object, Text, IntWritable, Text> {
    private final SimpleDateFormat frmt = new SimpleDateFormat("yyyy");
    private final IntWritable outkey = new IntWritable();

    @Override
    public void map(Object key, Text value, Context context) throws IOException, InterruptedException {
        String line = value.toString();
        int idx = line.indexOf("lastAccessData");
        if (idx < 0) {
            return; // not a generated record
        }
        // "lastAccessData = " is 17 characters long; the year is the next 4 characters.
        String strDate = line.substring(idx + 17, idx + 21);
        // Get the year from the parsed date
        Calendar cal = Calendar.getInstance();
        try {
            cal.setTime(frmt.parse(strDate));
            outkey.set(cal.get(Calendar.YEAR));
        } catch (ParseException e) {
            // Count and skip unparseable records instead of emitting a stale key.
            context.getCounter("LastPartition", "BAD_RECORDS").increment(1);
            return;
        }
        context.write(outkey, value);
    }
}
```
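The year-extraction step can be checked without a Hadoop cluster. The following standalone sketch swaps Integer.parseInt in for SimpleDateFormat, which is enough for the four-digit years produced by the generator. YearExtractor is a hypothetical name.

```java
// Hypothetical standalone check of the mapper's parsing logic, without Hadoop.
final class YearExtractor {
    private static final String FIELD = "lastAccessData = ";

    private YearExtractor() {}

    // Returns the 4-digit year that follows "lastAccessData = " in a generated line.
    static int extractYear(String line) {
        int start = line.indexOf(FIELD);
        if (start < 0) {
            throw new IllegalArgumentException("missing lastAccessData field: " + line);
        }
        start += FIELD.length(); // same offset as indexOf("lastAccessData") + 17
        return Integer.parseInt(line.substring(start, start + 4));
    }
}
```

For example, extractYear("id = 12345 lastAccessData = 2019 comments = abcdefghijklmno") returns 2019.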

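Partitioner Stage

The partitioner examines every key/value pair emitted by the mapper and assigns it to the corresponding partition. During the reduce phase, each numbered partition is then copied by the matching reduce task. When the task is initialized, Hadoop configures the partitioner by calling its setConf method. During job configuration, the driver calls LastAccessDatePartitioner.setMinLastAccessDate to store the minimum year in the job configuration, and setConf reads that value back. The partitioner subtracts this year from each key to decide which partition the key is sent to.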

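```java
// Sends each year to its own reducer: partition = year - minimum year.
public static class LastAccessDatePartitioner extends Partitioner<IntWritable, Text> implements Configurable {
    private static final String MIN_LAST_ACCESS_DATE_YEAR = "min.last.access.date.year";
    private Configuration conf = null;
    private int minLastAccessDateYear = 0;

    @Override
    public int getPartition(IntWritable key, Text value, int numPartitions) {
        // Must lie in [0, numPartitions); otherwise the map task fails with "Illegal partition".
        return key.get() - minLastAccessDateYear;
    }

    @Override
    public Configuration getConf() {
        return conf;
    }

    // Called when Hadoop instantiates the partitioner; reads the minimum year set by the driver.
    @Override
    public void setConf(Configuration conf) {
        this.conf = conf;
        minLastAccessDateYear = conf.getInt(MIN_LAST_ACCESS_DATE_YEAR, 0);
    }

    // Driver-side helper: stores the minimum year in the job configuration.
    public static void setMinLastAccessDate(Job job, int minLastAccessDateYear) {
        job.getConfiguration().setInt(MIN_LAST_ACCESS_DATE_YEAR, minLastAccessDateYear);
    }
}
```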
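The partitioner's arithmetic, together with the range check that Hadoop's map-side output collector applies, can be sketched in plain Java. YearPartitionCheck is a hypothetical name. The isLegal method mirrors the condition behind the "Illegal partition" error: a partition must lie in [0, numReduceTasks).

```java
// Hypothetical sketch of the partitioner's arithmetic plus Hadoop's range check.
final class YearPartitionCheck {
    private YearPartitionCheck() {}

    // Same formula as LastAccessDatePartitioner.getPartition: year - minYear.
    static int partitionFor(int year, int minYear) {
        return year - minYear;
    }

    // A partition is accepted only if 0 <= partition < numReduceTasks.
    static boolean isLegal(int partition, int numReduceTasks) {
        return partition >= 0 && partition < numReduceTasks;
    }
}
```

With a minimum year of 2012, the year 2022 maps to partition 10. That partition is rejected with 10 reduce tasks but accepted with 20.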

Reducer Stage

This stage simply writes out every value it receives, so it is very short.

The reducer code is as follows:

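```java
// Writes every record unchanged; the key (year) is dropped from the output.
public static class ValueReducer extends Reducer<IntWritable, Text, Text, NullWritable> {
    @Override
    public void reduce(IntWritable key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
        for (Text t : values) {
            context.write(t, NullWritable.get());
        }
    }
}
```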
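Each reduce task receives exactly one year, so each output file holds one year's records. Reduce task k writes a file named part-r-0000k (five-digit, zero-padded) in the output directory. The hypothetical helper below shows the mapping. With a minimum year of 2012 and the generator's years 2015 to 2024, files part-r-00003 through part-r-00012 contain data, and the remaining reducers out of 20 write empty files.

```java
// Hypothetical helper: which reducer output file holds a given year's records.
final class OutputFileForYear {
    private OutputFileForYear() {}

    // Reduce task k writes part-r-<k as five zero-padded digits>.
    static String fileFor(int year, int minYear) {
        return String.format("part-r-%05d", year - minYear);
    }
}
```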

The complete code is as follows:

```java
import org.apache.hadoop.conf.Configurable;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Partitioner;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.Calendar;

public class LastPartition {

    // Emits (year of last access, whole record) for every input line.
    public static class LastAccessDateMapper extends Mapper<Object, Text, IntWritable, Text> {
        private final SimpleDateFormat frmt = new SimpleDateFormat("yyyy");
        private final IntWritable outkey = new IntWritable();

        @Override
        public void map(Object key, Text value, Context context) throws IOException, InterruptedException {
            String line = value.toString();
            int idx = line.indexOf("lastAccessData");
            if (idx < 0) {
                return; // not a generated record
            }
            // "lastAccessData = " is 17 characters long; the year is the next 4 characters.
            String strDate = line.substring(idx + 17, idx + 21);
            Calendar cal = Calendar.getInstance();
            try {
                cal.setTime(frmt.parse(strDate));
                outkey.set(cal.get(Calendar.YEAR));
            } catch (ParseException e) {
                // Count and skip unparseable records instead of emitting a stale key.
                context.getCounter("LastPartition", "BAD_RECORDS").increment(1);
                return;
            }
            context.write(outkey, value);
        }
    }

    // Sends each year to its own reducer: partition = year - minimum year.
    public static class LastAccessDatePartitioner extends Partitioner<IntWritable, Text> implements Configurable {
        private static final String MIN_LAST_ACCESS_DATE_YEAR = "min.last.access.date.year";
        private Configuration conf = null;
        private int minLastAccessDateYear = 0;

        @Override
        public int getPartition(IntWritable key, Text value, int numPartitions) {
            // Must lie in [0, numPartitions); otherwise the map task fails with "Illegal partition".
            return key.get() - minLastAccessDateYear;
        }

        @Override
        public Configuration getConf() {
            return conf;
        }

        @Override
        public void setConf(Configuration conf) {
            this.conf = conf;
            minLastAccessDateYear = conf.getInt(MIN_LAST_ACCESS_DATE_YEAR, 0);
        }

        public static void setMinLastAccessDate(Job job, int minLastAccessDateYear) {
            job.getConfiguration().setInt(MIN_LAST_ACCESS_DATE_YEAR, minLastAccessDateYear);
        }
    }

    // Writes every record unchanged; the key (year) is dropped from the output.
    public static class ValueReducer extends Reducer<IntWritable, Text, Text, NullWritable> {
        @Override
        public void reduce(IntWritable key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
            for (Text t : values) {
                context.write(t, NullWritable.get());
            }
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration configuration = new Configuration();
        String[] otherArgs = new String[]{"input/file.txt", "output"};
        if (otherArgs.length != 2) {
            System.err.println("Invalid arguments");
            System.exit(2);
        }
        // Delete the previous output directory; FileOutputFormat refuses to overwrite it.
        FileSystem.get(configuration).delete(new Path(otherArgs[1]), true);

        Job job = Job.getInstance(configuration, "Layered");
        job.setJarByClass(LastPartition.class);
        job.setMapperClass(LastAccessDateMapper.class);
        job.setReducerClass(ValueReducer.class);
        job.setPartitionerClass(LastAccessDatePartitioner.class);
        // Years 2015-2024 map to partitions 3-12, so 20 reduce tasks are enough.
        LastAccessDatePartitioner.setMinLastAccessDate(job, 2012);
        job.setNumReduceTasks(20);

        // The map output types differ from the final output types, so declare both.
        job.setMapOutputKeyClass(IntWritable.class);
        job.setMapOutputValueClass(Text.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(NullWritable.class);

        FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
        FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```

Problems Encountered

While working through this example, I ran into the following error:

```
java.lang.Exception: java.io.IOException: Illegal partition for 2022 (10)
    at org.apache.hadoop.mapred.LocalJobRunner$Job.runTasks(LocalJobRunner.java:491)
    at org.apache.hadoop.mapred.LocalJobRunner$Job.run(LocalJobRunner.java:551)
Caused by: java.io.IOException: Illegal partition for 2022 (10)
    at org.apache.hadoop.mapred.MapTask$MapOutputBuffer.collect(MapTask.java:1089)
    at org.apache.hadoop.mapred.MapTask$NewOutputCollector.write(MapTask.java:721)
    at org.apache.hadoop.mapreduce.task.TaskInputOutputContextImpl.write(TaskInputOutputContextImpl.java:89)
    at org.apache.hadoop.mapreduce.lib.map.WrappedMapper$Context.write(WrappedMapper.java:112)
    at LastPartition$LastAccessDateMapper.map(LastPartition.java:35)
    at LastPartition$LastAccessDateMapper.map(LastPartition.java:19)
    at org.apache.hadoop.mapreduce.Mapper.run(Mapper.java:146)
    at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:793)
    at org.apache.hadoop.mapred.MapTask.run(MapTask.java:341)
    at org.apache.hadoop.mapred.LocalJobRunner$Job$MapTaskRunnable.run(LocalJobRunner.java:270)
    at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
    at java.util.concurrent.FutureTask.run(FutureTask.java:266)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)
```

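After some research, I found that the partition numbers did not match the number of reduce tasks. The partitioner returns year - 2012, so records from 2022 went to partition 10. A job with N reduce tasks, however, only accepts partitions 0 through N-1. The generated years run from 2015 to 2024 (partitions 3 to 12), so the job needs at least 13 reduce tasks. Raising the reduce-task count fixes the error.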
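To avoid guessing, the minimum reduce-task count can be derived from the year range. The following is a small sketch that assumes the driver knows the smallest and largest years in the data. ReduceTaskCount and requiredReduceTasks are hypothetical names.

```java
// Hypothetical helper: smallest reduce-task count that keeps year - minYear in range.
final class ReduceTaskCount {
    private ReduceTaskCount() {}

    static int requiredReduceTasks(int minYear, int maxYear) {
        if (maxYear < minYear) {
            throw new IllegalArgumentException("maxYear must be >= minYear");
        }
        // Partitions run from 0 (minYear) to maxYear - minYear, so one more task than the top index.
        return maxYear - minYear + 1;
    }
}
```

With a minimum year of 2012 and a maximum of 2024, this gives 13. Setting the minimum year to 2015 instead would need exactly 10 reduce tasks and leave no empty output files.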

Final Notes

I'm looking for a dataset suited to the examples in *MapReduce Design Patterns*, as well as a good text-processing program...