Hand Gesture Recognition with OpenCV and MediaPipe

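This article is only a demo of gesture recognition. For higher accuracy you would need to add further constraints; here, each finger is judged as stretched or bent based only on the distances from its landmarks to the base of the palm. For an introduction to MediaPipe, see the official site (Home - mediapipe). It provides ready-made demo programs; copying one is enough to detect the 21 landmarks of a hand, which are laid out as follows: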

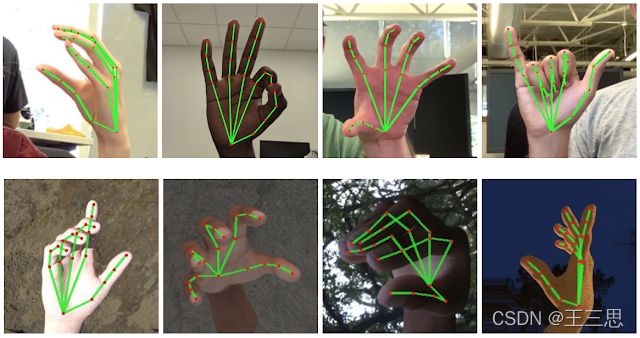
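The rest of the demo addresses landmarks purely by index, so it helps to have the numbering in code form. The sketch below lists the 21 names in index order (they mirror MediaPipe's `mp.solutions.hands.HandLandmark` enum, copied here so the snippet runs without MediaPipe installed) and shows which two indices the finger check later uses for finger `k`. The helper `finger_points` is hypothetical, written only for this illustration.

```python
# Names of MediaPipe Hands' 21 landmarks, in index order (0 = WRIST ... 20 = PINKY_TIP).
# They mirror mp.solutions.hands.HandLandmark; hard-coded so no MediaPipe import is needed.
HAND_LANDMARKS = [
    "WRIST",
    "THUMB_CMC", "THUMB_MCP", "THUMB_IP", "THUMB_TIP",
    "INDEX_FINGER_MCP", "INDEX_FINGER_PIP", "INDEX_FINGER_DIP", "INDEX_FINGER_TIP",
    "MIDDLE_FINGER_MCP", "MIDDLE_FINGER_PIP", "MIDDLE_FINGER_DIP", "MIDDLE_FINGER_TIP",
    "RING_FINGER_MCP", "RING_FINGER_PIP", "RING_FINGER_DIP", "RING_FINGER_TIP",
    "PINKY_MCP", "PINKY_PIP", "PINKY_DIP", "PINKY_TIP",
]

FINGER_NAMES = ["thumb", "index", "middle", "ring", "pinky"]


def finger_points(k):
    """Hypothetical helper: (joint index, tip index) used for finger k (0 = thumb .. 4 = pinky).

    For k = 1..4 the joint 4k + 2 is the PIP joint; for the thumb the same
    formula gives landmark 2 (THUMB_MCP).
    """
    return 4 * k + 2, 4 * k + 4


for k, name in enumerate(FINGER_NAMES):
    joint, tip = finger_points(k)
    print(f"{name:6s} joint={joint:2d} ({HAND_LANDMARKS[joint]}), tip={tip:2d} ({HAND_LANDMARKS[tip]})")
```

Point 17 (`PINKY_MCP`) is the reference point the demo uses for the thumb instead of point 0.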

Detection also runs well in real time:

And MediaPipe does more than hands: it also handles face detection and pose detection:

Here is the general approach of this gesture-recognition demo.

First, import the required libraries and set up the functions we need:

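```python
import cv2 as cv
import numpy as np
import mediapipe as mp

# Hand detector
mpHands = mp.solutions.hands
hands = mpHands.Hands()

# Utilities for drawing the landmarks and the lines connecting them
mpDraw = mp.solutions.drawing_utils
handLmsStyle = mpDraw.DrawingSpec(color=(0, 0, 255), thickness=5)
handConStyle = mpDraw.DrawingSpec(color=(0, 255, 0), thickness=10)
```

Here, `handLmsStyle` and `handConStyle` set the appearance of the landmarks and of the connecting lines respectively: their color and the thickness of the points (lines). Since they are drawn onto the BGR frame from OpenCV, (0, 0, 255) shows up as red and (0, 255, 0) as green.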

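If there is a hand in the frame, its landmarks and connecting lines can be drawn as follows (`result` is the output of `hands.process()` on the RGB frame, and `frame` is the image being displayed):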

```python
if result.multi_hand_landmarks:
    # Both hands are handled when two appear at once
    # (Hands() tracks up to two hands by default, max_num_hands=2)
    for i, handLms in enumerate(result.multi_hand_landmarks):
        mpDraw.draw_landmarks(frame, handLms, mpHands.HAND_CONNECTIONS,
                              landmark_drawing_spec=handLmsStyle,
                              connection_drawing_spec=handConStyle)
```
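The drawing call works directly on MediaPipe's landmark objects, whose `x` and `y` are normalized by the image width and height. The distance checks later in the demo use pixel coordinates instead, which the full program obtains with `int(lm.x * frame_width)` and `int(lm.y * frame_height)`. A minimal numpy sketch of that conversion for a whole hand at once, assuming the input is a list of `(lm.x, lm.y)` pairs; the helper name `landmarks_to_pixels` is hypothetical:

```python
import numpy as np


def landmarks_to_pixels(normalized_xy, frame_width, frame_height):
    """Hypothetical helper: normalized (x, y) pairs -> (N, 2) int array of pixel coordinates.

    normalized_xy could be built as [(lm.x, lm.y) for lm in handLms.landmark].
    """
    pts = np.asarray(normalized_xy, dtype=float)
    scale = np.array([frame_width, frame_height], dtype=float)
    # astype(int) truncates toward zero, like int(lm.x * frame_width) in the full program
    return (pts * scale).astype(int)


demo = [(0.5, 0.5), (0.25, 0.75), (0.999, 0.001)]
print(landmarks_to_pixels(demo, 640, 480))
```

Vectorizing the conversion avoids the per-landmark Python loop, but either form produces the same `landmark` array.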

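With these 21 landmarks there is a lot you can do: control the computer's volume, mouse, or keyboard, and, given a complete library of hand poses, even recognize sign language. In practice a hand is not always held with the palm facing the camera, so the stricter the constraints, the higher the accuracy. This demo does not go that far. It only relies on the fact that the L2 norm of a vector (its length; see any linear algebra or machine learning text if you need a refresher) differs between finger poses, which gives a rough check.

Take the index finger: when it is straight, the distance from the fingertip (point 8) to the base of the palm (point 0) is greater than the distance from point 6 to point 0; when it is bent, the fingertip is closer to point 0 than point 6 is. The index, middle, ring, and little fingers are all checked this way; only for the thumb is point 17 used in place of point 0. The code: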

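```python
for k in range(5):
    if k == 0:
        # Thumb: measure from point 17 instead of point 0
        figure_ = finger_stretch_detect(landmark[17], landmark[4*k+2],
                                        landmark[4*k+4])
    else:
        figure_ = finger_stretch_detect(landmark[0], landmark[4*k+2],
                                        landmark[4*k+4])
    figure[k] = figure_  # 1 = stretched, 0 = bent
```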
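To see the criterion in numbers, here is the check applied to one index finger, first straight and then curled. The function follows the demo's `finger_stretch_detect`, with the parameters documented the way the loop calls it (`point2` is joint `4k+2`, `point3` is tip `4k+4`); the pixel coordinates are invented for illustration, not taken from a real frame.

```python
import numpy as np


def finger_stretch_detect(point1, point2, point3):
    """Return 1 if the finger looks stretched, else 0.

    point1: reference point (landmark 0, or landmark 17 for the thumb)
    point2: finger joint (landmark 4k + 2)
    point3: fingertip (landmark 4k + 4)
    """
    dist1 = np.linalg.norm(point2 - point1, ord=2)  # reference -> joint
    dist2 = np.linalg.norm(point3 - point1, ord=2)  # reference -> tip
    return 1 if dist2 > dist1 else 0


# Made-up pixel coordinates (image y grows downward)
wrist = np.array([320.0, 400.0])         # landmark 0
pip_joint = np.array([330.0, 250.0])     # landmark 6
tip_straight = np.array([335.0, 150.0])  # landmark 8, finger extended
tip_curled = np.array([328.0, 330.0])    # landmark 8, finger folded toward the palm

print(finger_stretch_detect(wrist, pip_joint, tip_straight))  # 1
print(finger_stretch_detect(wrist, pip_joint, tip_curled))    # 0
```

Here the joint is about 150 px from the wrist; the straight tip is about 250 px away and the curled tip about 70 px, which is what flips the result.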

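The current gesture is then determined from the states of the five fingers (ordered thumb, index, middle, ring, little finger; 1 = stretched, 0 = bent). I've listed a few; it is crude but simple: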

```python
def detect_hands_gesture(result):
    if (result[0] == 1) and (result[1] == 0) and (result[2] == 0) and (result[3] == 0) and (result[4] == 0):
        gesture = "good"
    elif (result[0] == 0) and (result[1] == 1) and (result[2] == 0) and (result[3] == 0) and (result[4] == 0):
        gesture = "one"
    elif (result[0] == 0) and (result[1] == 0) and (result[2] == 1) and (result[3] == 0) and (result[4] == 0):
        gesture = "please civilization in testing"
    elif (result[0] == 0) and (result[1] == 1) and (result[2] == 1) and (result[3] == 0) and (result[4] == 0):
        gesture = "two"
    elif (result[0] == 0) and (result[1] == 1) and (result[2] == 1) and (result[3] == 1) and (result[4] == 0):
        gesture = "three"
    elif (result[0] == 0) and (result[1] == 1) and (result[2] == 1) and (result[3] == 1) and (result[4] == 1):
        gesture = "four"
    elif (result[0] == 1) and (result[1] == 1) and (result[2] == 1) and (result[3] == 1) and (result[4] == 1):
        gesture = "five"
    elif (result[0] == 1) and (result[1] == 0) and (result[2] == 0) and (result[3] == 0) and (result[4] == 1):
        gesture = "six"
    elif (result[0] == 0) and (result[1] == 0) and (result[2] == 1) and (result[3] == 1) and (result[4] == 1):
        gesture = "OK"
    elif (result[0] == 0) and (result[1] == 0) and (result[2] == 0) and (result[3] == 0) and (result[4] == 0):
        gesture = "stone"
    else:
        gesture = "not in detect range..."
    return gesture
```

Then simply output the recognized gesture. Here is how it looks:
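The if/elif chain in `detect_hands_gesture` can also be expressed as a lookup table keyed by the five finger states, which makes adding or editing gestures a one-line change. This is a sketch of an alternative layout, not the author's code; the names `GESTURES` and `detect_hands_gesture_table` are hypothetical, while the patterns and labels are copied from the function above. Values are converted with `int()` so the float array produced by `np.zeros(5)` works as input.

```python
# Hypothetical table-driven variant: same patterns and labels as detect_hands_gesture,
# keyed by (thumb, index, middle, ring, pinky), 1 = stretched, 0 = bent.
GESTURES = {
    (1, 0, 0, 0, 0): "good",
    (0, 1, 0, 0, 0): "one",
    (0, 0, 1, 0, 0): "please civilization in testing",
    (0, 1, 1, 0, 0): "two",
    (0, 1, 1, 1, 0): "three",
    (0, 1, 1, 1, 1): "four",
    (1, 1, 1, 1, 1): "five",
    (1, 0, 0, 0, 1): "six",
    (0, 0, 1, 1, 1): "OK",
    (0, 0, 0, 0, 0): "stone",
}


def detect_hands_gesture_table(result):
    """result: five 0/1 values (ints, or floats such as the np.zeros(5) array)."""
    key = tuple(int(v) for v in result)
    return GESTURES.get(key, "not in detect range...")


print(detect_hands_gesture_table([0, 1, 1, 0, 0]))            # two
print(detect_hands_gesture_table([1.0, 1.0, 0.0, 0.0, 0.0]))  # not in detect range...
```

Because each pattern is a distinct key, the table also makes it impossible for two rules to overlap silently, something an if/elif chain resolves only by order.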

The complete code:

```python
import cv2 as cv
import numpy as np
import mediapipe as mp

# Video device index
DEVICE_NUM = 0


# Finger check
# point1: reference point (landmark 0; landmark 17 for the thumb)
# point2: finger joint (landmark 4k+2), point3: fingertip (landmark 4k+4)
def finger_stretch_detect(point1, point2, point3):
    result = 0
    # L2 norms (lengths) of the two vectors
    dist1 = np.linalg.norm((point2 - point1), ord=2)
    dist2 = np.linalg.norm((point3 - point1), ord=2)
    # Tip farther from the reference point than the joint -> finger stretched
    if dist2 > dist1:
        result = 1
    return result


# Gesture detection
def detect_hands_gesture(result):
    if (result[0] == 1) and (result[1] == 0) and (result[2] == 0) and (result[3] == 0) and (result[4] == 0):
        gesture = "good"
    elif (result[0] == 0) and (result[1] == 1) and (result[2] == 0) and (result[3] == 0) and (result[4] == 0):
        gesture = "one"
    elif (result[0] == 0) and (result[1] == 0) and (result[2] == 1) and (result[3] == 0) and (result[4] == 0):
        gesture = "please civilization in testing"
    elif (result[0] == 0) and (result[1] == 1) and (result[2] == 1) and (result[3] == 0) and (result[4] == 0):
        gesture = "two"
    elif (result[0] == 0) and (result[1] == 1) and (result[2] == 1) and (result[3] == 1) and (result[4] == 0):
        gesture = "three"
    elif (result[0] == 0) and (result[1] == 1) and (result[2] == 1) and (result[3] == 1) and (result[4] == 1):
        gesture = "four"
    elif (result[0] == 1) and (result[1] == 1) and (result[2] == 1) and (result[3] == 1) and (result[4] == 1):
        gesture = "five"
    elif (result[0] == 1) and (result[1] == 0) and (result[2] == 0) and (result[3] == 0) and (result[4] == 1):
        gesture = "six"
    elif (result[0] == 0) and (result[1] == 0) and (result[2] == 1) and (result[3] == 1) and (result[4] == 1):
        gesture = "OK"
    elif (result[0] == 0) and (result[1] == 0) and (result[2] == 0) and (result[3] == 0) and (result[4] == 0):
        gesture = "stone"
    else:
        gesture = "not in detect range..."
    return gesture


def detect():
    # When using a USB camera, adjust the capture device index
    cap = cv.VideoCapture(DEVICE_NUM)
    # Load the hand detector
    mpHands = mp.solutions.hands
    hands = mpHands.Hands()
    # Load the drawing utilities and set the color and size of landmarks and connections
    mpDraw = mp.solutions.drawing_utils
    handLmsStyle = mpDraw.DrawingSpec(color=(0, 0, 255), thickness=5)
    handConStyle = mpDraw.DrawingSpec(color=(0, 255, 0), thickness=10)
    figure = np.zeros(5)           # finger states: thumb, index, middle, ring, pinky
    landmark = np.empty((21, 2))   # pixel coordinates of the 21 landmarks
    if not cap.isOpened():
        print("Can not open camera.")
        return
    while True:
        ret, frame = cap.read()
        if not ret:
            print("Can not receive frame (stream end?). Exiting...")
            break
        # MediaPipe expects RGB images, so convert from OpenCV's BGR
        frame_RGB = cv.cvtColor(frame, cv.COLOR_BGR2RGB)
        result = hands.process(frame_RGB)
        # Height and width of the video frame
        frame_height = frame.shape[0]
        frame_width = frame.shape[1]
        # print(result.multi_hand_landmarks)
        # If a hand was detected
        if result.multi_hand_landmarks:
            # Draw landmarks and connections for each hand
            for i, handLms in enumerate(result.multi_hand_landmarks):
                mpDraw.draw_landmarks(frame,
                                      handLms,
                                      mpHands.HAND_CONNECTIONS,
                                      landmark_drawing_spec=handLmsStyle,
                                      connection_drawing_spec=handConStyle)
                for j, lm in enumerate(handLms.landmark):
                    # Landmark coordinates are normalized; convert them to pixels
                    xPos = int(lm.x * frame_width)
                    yPos = int(lm.y * frame_height)
                    landmark[j, :] = [xPos, yPos]
                # A finger is stretched if its tip is farther from point 0 than its
                # joint is (for the thumb, distances are measured to point 17)
                for k in range(5):
                    if k == 0:
                        figure_ = finger_stretch_detect(landmark[17], landmark[4*k+2], landmark[4*k+4])
                    else:
                        figure_ = finger_stretch_detect(landmark[0], landmark[4*k+2], landmark[4*k+4])
                    figure[k] = figure_
                print(figure, '\n')
                gesture_result = detect_hands_gesture(figure)
                cv.putText(frame, f"{gesture_result}", (30, 60*(i+1)), cv.FONT_HERSHEY_COMPLEX, 2, (255, 255, 0), 5)
        cv.imshow('frame', frame)
        if cv.waitKey(1) == ord('q'):
            break
    cap.release()
    cv.destroyAllWindows()


if __name__ == '__main__':
    detect()
```
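The full program converts every frame with `cv.cvtColor(frame, cv.COLOR_BGR2RGB)` because MediaPipe expects RGB while OpenCV delivers BGR. For a 3-channel frame that conversion is simply a swap of the first and last channels, as this numpy-only sketch shows; the helper name `bgr_to_rgb` is hypothetical and is meant to illustrate what the OpenCV call does, not to replace it.

```python
import numpy as np


def bgr_to_rgb(frame_bgr):
    """Hypothetical helper: reverse the channel axis of an H x W x 3 image (BGR -> RGB).

    The [..., ::-1] slice is only a strided view, so np.ascontiguousarray
    makes a C-contiguous copy, as libraries consuming raw buffers usually expect.
    """
    return np.ascontiguousarray(frame_bgr[..., ::-1])


pixel = np.zeros((1, 1, 3), dtype=np.uint8)
pixel[0, 0] = (255, 0, 0)       # pure blue in OpenCV's BGR order
print(bgr_to_rgb(pixel)[0, 0])  # blue now sits in the last (R, G, B) slot
```

In the demo itself, `cv.cvtColor` remains the clearer choice; the slice version just makes the channel order explicit.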