Pytorch基础学习(第七章-Pytorch训练技巧)

课程一览表:

![]()

目录

一、模型保存与加载

1.序列化与反序列化

2.模型保存与加载的两种方式

3.模型断点续训练

二、模型finetune

1.Transfer Learning & Model Finetune

2.Pytorch中的Finetune

三、GPU的使用

1.CPU 与GPU

2.数据迁移至GPU

3.多GPU并行运算

四、Pytorch常见报错

1.Pytorch常见报错

2.Pytorch课程总结

一、模型保存与加载

1.序列化与反序列化

我们训练好一个模型,是要在以后使用它,但是训练的时候我们的模型是存储在内存当中的,而内存中的数据不具备长久的存储功能,所以需要将模型从内存中搬到硬盘中进行长久的存储,以备以后的使用。

序列化与反序列化主要讲的是硬盘与内存之间的数据转换关系

模型在内存中是以一个对象的形式被存储的,且不具备长久存储的功能;而在硬盘中,模型是以二进制数序列进行存储,所以

序列化是指将内存中的某一个对象保存在硬盘当中,以二进制序列的方式存储下来。

反序列化是指将存储的这些二进制数反序列化的放到内存当中作为一个对象

Pytorch中序列化与反序列的函数

(1)torch.save

功能:序列化

主要参数:

- obj:对象,想要保存的数据,模型、张量等

- f:输出路径

(2)torch.load

功能:反序列化

主要参数:

- f:文件路径

- map_location:指定存放位置,cpu or gpu

2.模型保存与加载的两种方式

(1)保存模型

法一:保存整个Module

torch.save(net,path)

法二:保存模型参数

state_dict = net.state_dict()

torch.save(state_dict,path)

法一是直接保存整个模型,但是这个方法比较耗时,占内存。官方推荐用第二种方法,只保存模型参数

法二的保存方法:Module中有八个有序字典来管理它的一系列参数,我们保存模型是为了在下一次继续使用,而什么是在模型训练之后才得到的呢?是一系列的可学习参数。所以有一种方法就是只保存模型的可学习参数parameters。将这些模型训练的可学习参数保存下来,在下一次构建模型再把这些参数放回到模型中。

(2)加载模型

法一:加载整个网络

net_load = torch.load(model_path)

法二:加载模型的参数,并应用新模型

state_dict_load = torch.load(model_path)

net_new.load_state_dict(state_dict_load)

代码:

模型的保存

class LeNet2(nn.Module):

def __init__(self, classes):

super(LeNet2, self).__init__()

self.features = nn.Sequential(

nn.Conv2d(3, 6, 5),

nn.ReLU(),

nn.MaxPool2d(2, 2),

nn.Conv2d(6, 16, 5),

nn.ReLU(),

nn.MaxPool2d(2, 2)

)

self.classifier = nn.Sequential(

nn.Linear(16*5*5, 120),

nn.ReLU(),

nn.Linear(120, 84),

nn.ReLU(),

nn.Linear(84, classes)

)

def forward(self, x):

x = self.features(x)

x = x.view(x.size()[0], -1)

x = self.classifier(x)

return x

def initialize(self):

for p in self.parameters():

p.data.fill_(20191104)

net = LeNet2(classes=2019)

# "训练"

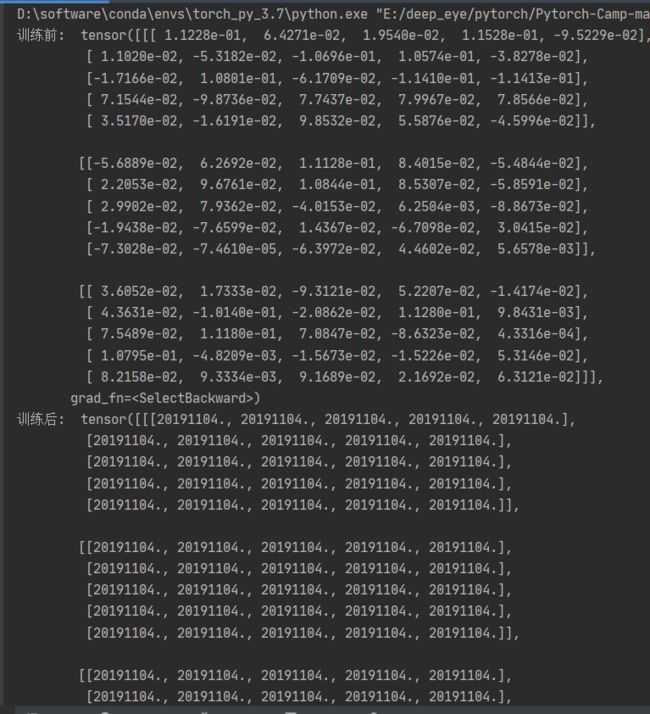

print("训练前: ", net.features[0].weight[0, ...])

net.initialize()

print("训练后: ", net.features[0].weight[0, ...])

path_model = "./model.pkl"

path_state_dict = "./model_state_dict.pkl"

# 保存整个模型

torch.save(net, path_model)

# 保存模型参数

net_state_dict = net.state_dict()

torch.save(net_state_dict, path_state_dict)输出结果:

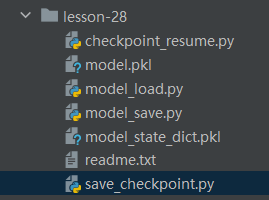

在目录下就可以看到保存的模型和保存的参数

模型的加载:

加载整个模型

# ================================== load net ===========================

flag = 1

# flag = 0

if flag:

path_model = "./model.pkl"

net_load = torch.load(path_model)

print(net_load)看加载的模型的参数,可以看到,加载到了保存的模型

加载模型参数

# ================================== load state_dict ===========================

flag = 1

# flag = 0

if flag:

path_state_dict = "./model_state_dict.pkl"

state_dict_load = torch.load(path_state_dict)

print(state_dict_load.keys())

# ================================== update state_dict ===========================

flag = 1

# flag = 0

if flag:

net_new = LeNet2(classes=2019)

print("加载前: ", net_new.features[0].weight[0, ...])

net_new.load_state_dict(state_dict_load)

print("加载后: ", net_new.features[0].weight[0, ...])输出结果:

保存的参数是以字典的形式进行存储,我们输出一下键,看看都是什么,可以发现键都是每个网络层的weight和bias。

我们通过torch.load(path)的方式加载到的是模型的参数,所以我们需要将这些模型参数放到我们新的网络中去。net_new.load_state_dict(state_dict_load)

这样才嫩更完整的将参数加载到模型

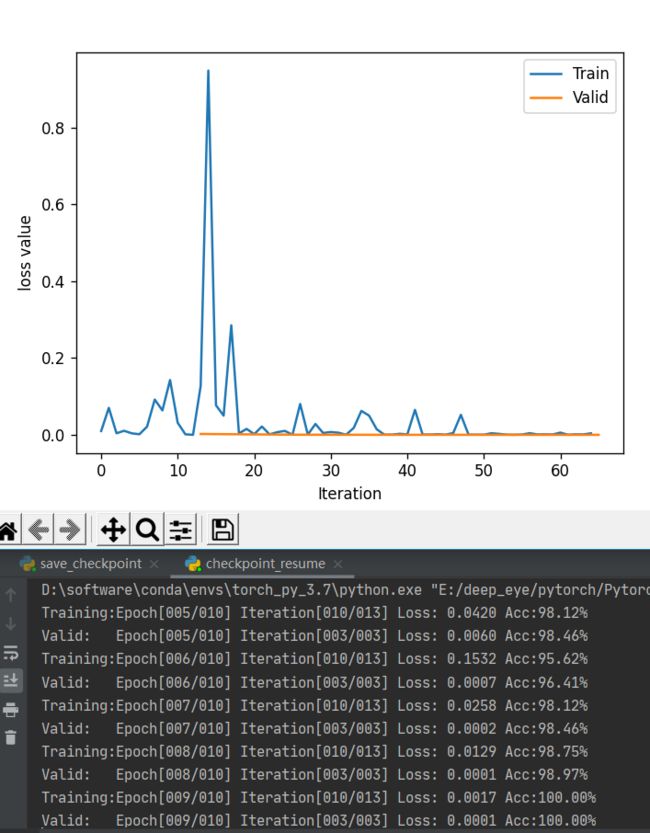

3.模型断点续训练

断点续训练

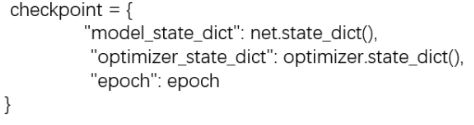

需要保存的数据:checkpoint

应用:模拟意外中断,然后续训练

在epoch迭代中写入

打断训练

if (epoch+1) % checkpoint_interval == 0:

checkpoint = {"model_state_dict": net.state_dict(),

"optimizer_state_dict": optimizer.state_dict(),

"epoch": epoch}

path_checkpoint = "./checkpoint_{}_epoch.pkl".format(epoch)

torch.save(checkpoint, path_checkpoint)

if epoch > 5:

print("训练意外中断...")

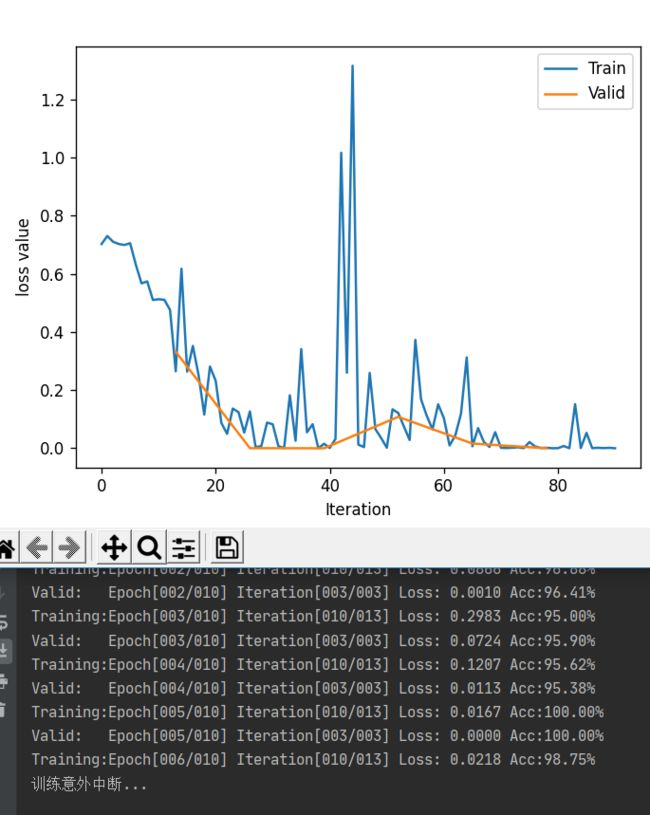

break输出结果:

可以看到,在第四个epoch训练被打断,并保存了模型

续训练:在哪里操作呢?

在训练之前写入

# ============================ step 5+/5 断点恢复 ============================

path_checkpoint = "./checkpoint_4_epoch.pkl"

checkpoint = torch.load(path_checkpoint)

net.load_state_dict(checkpoint['model_state_dict'])

optimizer.load_state_dict(checkpoint['optimizer_state_dict'])

start_epoch = checkpoint['epoch']

scheduler.last_epoch = start_epoch输出结果:

可以看到,模型是从epoch5开始训练的

全部代码:

打断:

hello_pytorch_DIR = os.path.abspath(os.path.dirname(__file__)+os.path.sep+".."+os.path.sep+"..")

sys.path.append(hello_pytorch_DIR)

from model.lenet import LeNet

from tools.my_dataset import RMBDataset

from tools.common_tools import set_seed

import torchvision

set_seed(1) # 设置随机种子

rmb_label = {"1": 0, "100": 1}

# 参数设置

checkpoint_interval = 5

MAX_EPOCH = 10

BATCH_SIZE = 16

LR = 0.01

log_interval = 10

val_interval = 1

# ============================ step 1/5 数据 ============================

BASE_DIR = os.path.dirname(os.path.abspath(__file__))

split_dir = os.path.abspath(os.path.join(BASE_DIR, "..", "..", "data", "RMB_data","rmb_split"))

train_dir = os.path.join(split_dir, "train")

valid_dir = os.path.join(split_dir, "valid")

if not os.path.exists(split_dir):

raise Exception(r"数据 {} 不存在, 回到lesson-06\1_split_dataset.py生成数据".format(split_dir))

norm_mean = [0.485, 0.456, 0.406]

norm_std = [0.229, 0.224, 0.225]

train_transform = transforms.Compose([

transforms.Resize((32, 32)),

transforms.RandomCrop(32, padding=4),

transforms.RandomGrayscale(p=0.8),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std),

])

valid_transform = transforms.Compose([

transforms.Resize((32, 32)),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std),

])

# 构建MyDataset实例

train_data = RMBDataset(data_dir=train_dir, transform=train_transform)

valid_data = RMBDataset(data_dir=valid_dir, transform=valid_transform)

# 构建DataLoder

train_loader = DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

valid_loader = DataLoader(dataset=valid_data, batch_size=BATCH_SIZE)

# ============================ step 2/5 模型 ============================

net = LeNet(classes=2)

net.initialize_weights()

# ============================ step 3/5 损失函数 ============================

criterion = nn.CrossEntropyLoss() # 选择损失函数

# ============================ step 4/5 优化器 ============================

optimizer = optim.SGD(net.parameters(), lr=LR, momentum=0.9) # 选择优化器

scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=6, gamma=0.1) # 设置学习率下降策略

# ============================ step 5/5 训练 ============================

train_curve = list()

valid_curve = list()

start_epoch = -1

for epoch in range(start_epoch+1, MAX_EPOCH):

loss_mean = 0.

correct = 0.

total = 0.

net.train()

for i, data in enumerate(train_loader):

# forward

inputs, labels = data

outputs = net(inputs)

# backward

optimizer.zero_grad()

loss = criterion(outputs, labels)

loss.backward()

# update weights

optimizer.step()

# 统计分类情况

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).squeeze().sum().numpy()

# 打印训练信息

loss_mean += loss.item()

train_curve.append(loss.item())

if (i+1) % log_interval == 0:

loss_mean = loss_mean / log_interval

print("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(

epoch, MAX_EPOCH, i+1, len(train_loader), loss_mean, correct / total))

loss_mean = 0.

scheduler.step() # 更新学习率

if (epoch+1) % checkpoint_interval == 0:

checkpoint = {"model_state_dict": net.state_dict(),

"optimizer_state_dict": optimizer.state_dict(),

"epoch": epoch}

path_checkpoint = "./checkpoint_{}_epoch.pkl".format(epoch)

torch.save(checkpoint, path_checkpoint)

if epoch > 5:

print("训练意外中断...")

break

# validate the model

if (epoch+1) % val_interval == 0:

correct_val = 0.

total_val = 0.

loss_val = 0.

net.eval()

with torch.no_grad():

for j, data in enumerate(valid_loader):

inputs, labels = data

outputs = net(inputs)

loss = criterion(outputs, labels)

_, predicted = torch.max(outputs.data, 1)

total_val += labels.size(0)

correct_val += (predicted == labels).squeeze().sum().numpy()

loss_val += loss.item()

valid_curve.append(loss.item())

print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(

epoch, MAX_EPOCH, j+1, len(valid_loader), loss_val/len(valid_loader), correct / total))

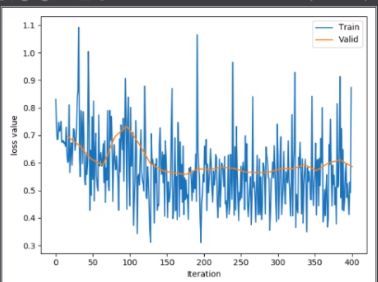

train_x = range(len(train_curve))

train_y = train_curve

train_iters = len(train_loader)

valid_x = np.arange(1, len(valid_curve)+1) * train_iters*val_interval # 由于valid中记录的是epochloss,需要对记录点进行转换到iterations

valid_y = valid_curve

plt.plot(train_x, train_y, label='Train')

plt.plot(valid_x, valid_y, label='Valid')

plt.legend(loc='upper right')

plt.ylabel('loss value')

plt.xlabel('Iteration')

plt.show()续训练:

hello_pytorch_DIR = os.path.abspath(os.path.dirname(__file__)+os.path.sep+".."+os.path.sep+"..")

sys.path.append(hello_pytorch_DIR)

from model.lenet import LeNet

from tools.my_dataset import RMBDataset

from tools.common_tools import set_seed

import torchvision

set_seed(1) # 设置随机种子

rmb_label = {"1": 0, "100": 1}

# 参数设置

checkpoint_interval = 5

MAX_EPOCH = 10

BATCH_SIZE = 16

LR = 0.01

log_interval = 10

val_interval = 1

# ============================ step 1/5 数据 ============================

BASE_DIR = os.path.dirname(os.path.abspath(__file__))

split_dir = os.path.abspath(os.path.join(BASE_DIR, "..", "..", "data","RMB_data", "rmb_split"))

train_dir = os.path.join(split_dir, "train")

valid_dir = os.path.join(split_dir, "valid")

if not os.path.exists(split_dir):

raise Exception(r"数据 {} 不存在, 回到lesson-06\1_split_dataset.py生成数据".format(split_dir))

norm_mean = [0.485, 0.456, 0.406]

norm_std = [0.229, 0.224, 0.225]

train_transform = transforms.Compose([

transforms.Resize((32, 32)),

transforms.RandomCrop(32, padding=4),

transforms.RandomGrayscale(p=0.8),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std),

])

valid_transform = transforms.Compose([

transforms.Resize((32, 32)),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std),

])

# 构建MyDataset实例

train_data = RMBDataset(data_dir=train_dir, transform=train_transform)

valid_data = RMBDataset(data_dir=valid_dir, transform=valid_transform)

# 构建DataLoder

train_loader = DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

valid_loader = DataLoader(dataset=valid_data, batch_size=BATCH_SIZE)

# ============================ step 2/5 模型 ============================

net = LeNet(classes=2)

net.initialize_weights()

# ============================ step 3/5 损失函数 ============================

criterion = nn.CrossEntropyLoss() # 选择损失函数

# ============================ step 4/5 优化器 ============================

optimizer = optim.SGD(net.parameters(), lr=LR, momentum=0.9) # 选择优化器

scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=6, gamma=0.1) # 设置学习率下降策略

# ============================ step 5+/5 断点恢复 ============================

path_checkpoint = "./checkpoint_4_epoch.pkl"

checkpoint = torch.load(path_checkpoint)

net.load_state_dict(checkpoint['model_state_dict'])

optimizer.load_state_dict(checkpoint['optimizer_state_dict'])

start_epoch = checkpoint['epoch']

scheduler.last_epoch = start_epoch

# ============================ step 5/5 训练 ============================

train_curve = list()

valid_curve = list()

for epoch in range(start_epoch + 1, MAX_EPOCH):

loss_mean = 0.

correct = 0.

total = 0.

net.train()

for i, data in enumerate(train_loader):

# forward

inputs, labels = data

outputs = net(inputs)

# backward

optimizer.zero_grad()

loss = criterion(outputs, labels)

loss.backward()

# update weights

optimizer.step()

# 统计分类情况

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).squeeze().sum().numpy()

# 打印训练信息

loss_mean += loss.item()

train_curve.append(loss.item())

if (i+1) % log_interval == 0:

loss_mean = loss_mean / log_interval

print("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(

epoch, MAX_EPOCH, i+1, len(train_loader), loss_mean, correct / total))

loss_mean = 0.

scheduler.step() # 更新学习率

if (epoch+1) % checkpoint_interval == 0:

checkpoint = {"model_state_dict": net.state_dict(),

"optimizer_state_dic": optimizer.state_dict(),

"loss": loss,

"epoch": epoch}

path_checkpoint = "./checkpint_{}_epoch.pkl".format(epoch)

torch.save(checkpoint, path_checkpoint)

# if epoch > 5:

# print("训练意外中断...")

# break

# validate the model

if (epoch+1) % val_interval == 0:

correct_val = 0.

total_val = 0.

loss_val = 0.

net.eval()

with torch.no_grad():

for j, data in enumerate(valid_loader):

inputs, labels = data

outputs = net(inputs)

loss = criterion(outputs, labels)

_, predicted = torch.max(outputs.data, 1)

total_val += labels.size(0)

correct_val += (predicted == labels).squeeze().sum().numpy()

loss_val += loss.item()

valid_curve.append(loss.item())

print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(

epoch, MAX_EPOCH, j+1, len(valid_loader), loss_val/len(valid_loader), correct / total))

train_x = range(len(train_curve))

train_y = train_curve

train_iters = len(train_loader)

valid_x = np.arange(1, len(valid_curve)+1) * train_iters*val_interval # 由于valid中记录的是epochloss,需要对记录点进行转换到iterations

valid_y = valid_curve

plt.plot(train_x, train_y, label='Train')

plt.plot(valid_x, valid_y, label='Valid')

plt.legend(loc='upper right')

plt.ylabel('loss value')

plt.xlabel('Iteration')

plt.show()

二、模型finetune

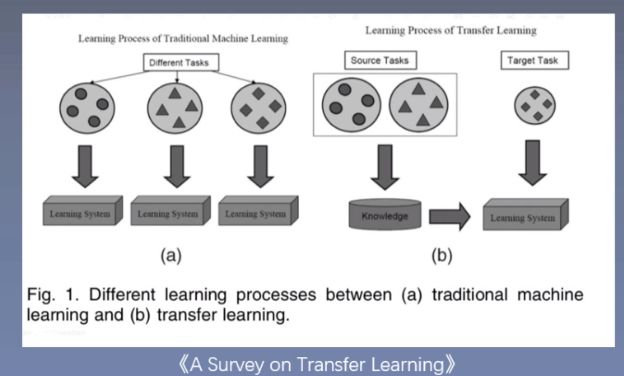

1.Transfer Learning & Model Finetune

Transfer Learning:机器学习分支,研究源域(source domain)的知识如何应用到目标域(target domain)

将在其他任务中学习到的知识,应用到新任务中

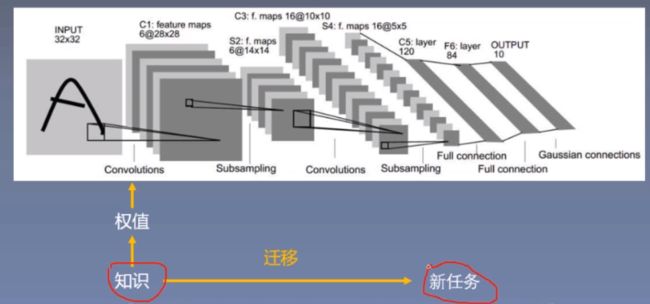

Model Finetune:模型微调

应用时一般根据任务修改最后一个全连接层的输出神经元个数,分几类就几个神经元

模型微调步骤:

- 获取预训练模型参数

- 加载模型(load_state_dict)

- 修改输出层

由于新任务的数据集比较小,不足以训练这么多参数,同时认为特征提取部分的参数是有共性的,可以不进行更新,对于该问题,pytorch有两种方法

法一:对预训练的参数不进行训练,或lr = 0则不更新参数

法二:前面特征提取部分设置较小的学习率,全连接部分学习率设置较大

模型微调训练方法:

- 固定预训练的参数(requires_grad = False;lr = 0)

- Features Extractor较小学习率(params_group)

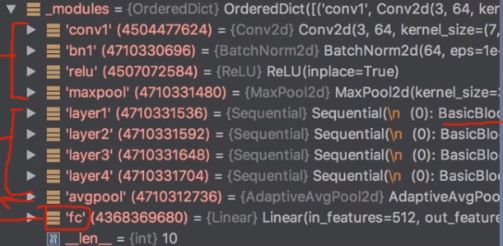

2.Pytorch中的Finetune

应用实例:蚂蚁蜜蜂二分类

Finetune Resnet-18 用于二分类

蚂蚁蜜蜂二分类数据

训练集:各120张 验证集:各70张

不使用finetune,只进行简单的初始化

输出结果:

可以看到准确率是非常低的,loss也很动荡

那我们如何进行model finetune呢?详见代码,有明确标注

数据加载:

class AntsDataset(Dataset):

def __init__(self, data_dir, transform=None):

self.label_name = {"ants": 0, "bees": 1}

self.data_info = self.get_img_info(data_dir)

self.transform = transform

def __getitem__(self, index):

path_img, label = self.data_info[index]

img = Image.open(path_img).convert('RGB')

if self.transform is not None:

img = self.transform(img)

return img, label

def __len__(self):

return len(self.data_info)

def get_img_info(self, data_dir):

data_info = list()

for root, dirs, _ in os.walk(data_dir):

# 遍历类别

for sub_dir in dirs:

img_names = os.listdir(os.path.join(root, sub_dir))

img_names = list(filter(lambda x: x.endswith('.jpg'), img_names))

# 遍历图片

for i in range(len(img_names)):

img_name = img_names[i]

path_img = os.path.join(root, sub_dir, img_name)

label = self.label_name[sub_dir]

data_info.append((path_img, int(label)))

if len(data_info) == 0:

raise Exception("\ndata_dir:{} is a empty dir! Please checkout your path to images!".format(data_dir))

return data_infoimport os

import numpy as np

import torch

import torch.nn as nn

from torch.utils.data import DataLoader

import torchvision.transforms as transforms

import torch.optim as optim

from matplotlib import pyplot as plt

import sys

hello_pytorch_DIR = os.path.abspath(os.path.dirname(__file__)+os.path.sep+".."+os.path.sep+"..")

sys.path.append(hello_pytorch_DIR)

from tools.my_dataset import AntsDataset

from tools.common_tools import set_seed

import torchvision.models as models

import torchvision

BASEDIR = os.path.dirname(os.path.abspath(__file__))

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print("use device :{}".format(device))

set_seed(1) # 设置随机种子

label_name = {"ants": 0, "bees": 1}

# 参数设置

MAX_EPOCH = 25

BATCH_SIZE = 16

LR = 0.001

log_interval = 10

val_interval = 1

classes = 2

start_epoch = -1

lr_decay_step = 7

# ============================ step 1/5 数据 ============================

data_dir = os.path.abspath(os.path.join(BASEDIR, "..", "..", "data", "07-02-数据-模型finetune"))

if not os.path.exists(data_dir):

raise Exception("\n{} 不存在,请下载 07-02-数据-模型finetune.zip 放到\n{} 下,并解压即可".format(

data_dir, os.path.dirname(data_dir)))

train_dir = os.path.join(data_dir, "train")

valid_dir = os.path.join(data_dir, "val")

norm_mean = [0.485, 0.456, 0.406]

norm_std = [0.229, 0.224, 0.225]

train_transform = transforms.Compose([

transforms.RandomResizedCrop(224),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std),

])

valid_transform = transforms.Compose([

transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std),

])

# 构建MyDataset实例

train_data = AntsDataset(data_dir=train_dir, transform=train_transform)

valid_data = AntsDataset(data_dir=valid_dir, transform=valid_transform)

# 构建DataLoder

train_loader = DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

valid_loader = DataLoader(dataset=valid_data, batch_size=BATCH_SIZE)

# ============================ step 2/5 模型 ============================

# 1/3 构建模型

resnet18_ft = models.resnet18()

# 2/3 加载参数

# flag = 0

flag = 1

if flag:

path_pretrained_model = os.path.join(BASEDIR, "..", "..", "data", "finetune_resnet18-5c106cde.pth")

if not os.path.exists(path_pretrained_model):

raise Exception("\n{} 不存在,请下载 07-02-数据-模型finetune.zip\n放到 {}下,并解压即可".format(

path_pretrained_model, os.path.dirname(path_pretrained_model)))

#加载与训练权重

state_dict_load = torch.load(path_pretrained_model)

resnet18_ft.load_state_dict(state_dict_load)

# 法1 : 冻结卷积层

flag_m1 = 0

# flag_m1 = 1

if flag_m1:

for param in resnet18_ft.parameters():

param.requires_grad = False

print("conv1.weights[0, 0, ...]:\n {}".format(resnet18_ft.conv1.weight[0, 0, ...]))

# 3/3 替换fc层

num_ftrs = resnet18_ft.fc.in_features

resnet18_ft.fc = nn.Linear(num_ftrs, classes)

resnet18_ft.to(device)

# ============================ step 3/5 损失函数 ============================

criterion = nn.CrossEntropyLoss() # 选择损失函数

# ============================ step 4/5 优化器 ============================

# 法2 : conv 小学习率

# flag = 0

flag = 1

if flag:

fc_params_id = list(map(id, resnet18_ft.fc.parameters())) # 返回的是parameters的 内存地址

base_params = filter(lambda p: id(p) not in fc_params_id, resnet18_ft.parameters())

optimizer = optim.SGD([

{'params': base_params, 'lr': LR*0}, # 0

{'params': resnet18_ft.fc.parameters(), 'lr': LR}], momentum=0.9)

else:

optimizer = optim.SGD(resnet18_ft.parameters(), lr=LR, momentum=0.9) # 选择优化器

scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=lr_decay_step, gamma=0.1) # 设置学习率下降策略

# ============================ step 5/5 训练 ============================

train_curve = list()

valid_curve = list()

for epoch in range(start_epoch + 1, MAX_EPOCH):

loss_mean = 0.

correct = 0.

total = 0.

resnet18_ft.train()

for i, data in enumerate(train_loader):

# forward

inputs, labels = data

inputs, labels = inputs.to(device), labels.to(device)

outputs = resnet18_ft(inputs)

# backward

optimizer.zero_grad()

loss = criterion(outputs, labels)

loss.backward()

# update weights

optimizer.step()

# 统计分类情况

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).squeeze().cpu().sum().numpy()

# 打印训练信息

loss_mean += loss.item()

train_curve.append(loss.item())

if (i+1) % log_interval == 0:

loss_mean = loss_mean / log_interval

print("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(

epoch, MAX_EPOCH, i+1, len(train_loader), loss_mean, correct / total))

loss_mean = 0.

# if flag_m1:

print("epoch:{} conv1.weights[0, 0, ...] :\n {}".format(epoch, resnet18_ft.conv1.weight[0, 0, ...]))

scheduler.step() # 更新学习率

# validate the model

if (epoch+1) % val_interval == 0:

correct_val = 0.

total_val = 0.

loss_val = 0.

resnet18_ft.eval()

with torch.no_grad():

for j, data in enumerate(valid_loader):

inputs, labels = data

inputs, labels = inputs.to(device), labels.to(device)

outputs = resnet18_ft(inputs)

loss = criterion(outputs, labels)

_, predicted = torch.max(outputs.data, 1)

total_val += labels.size(0)

correct_val += (predicted == labels).squeeze().cpu().sum().numpy()

loss_val += loss.item()

loss_val_mean = loss_val/len(valid_loader)

valid_curve.append(loss_val_mean)

print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(

epoch, MAX_EPOCH, j+1, len(valid_loader), loss_val_mean, correct_val / total_val))

train_x = range(len(train_curve))

train_y = train_curve

train_iters = len(train_loader)

valid_x = np.arange(1, len(valid_curve)+1) * train_iters*val_interval # 由于valid中记录的是epochloss,需要对记录点进行转换到iterations

valid_y = valid_curve

plt.plot(train_x, train_y, label='Train')

plt.plot(valid_x, valid_y, label='Valid')

plt.legend(loc='upper right')

plt.ylabel('loss value')

plt.xlabel('Iteration')

plt.show()三、GPU的使用

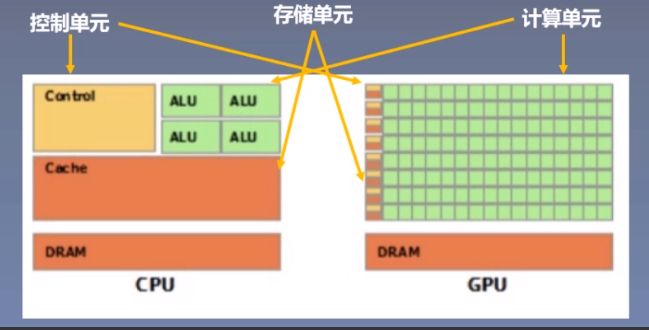

1.CPU 与GPU

CPU(Central Processing Unit,中央处理器):主要包括控制器和运算器

GPU(Graphics Processing Unit,图形处理单元):处理统一的,无依赖的大规模数据运算

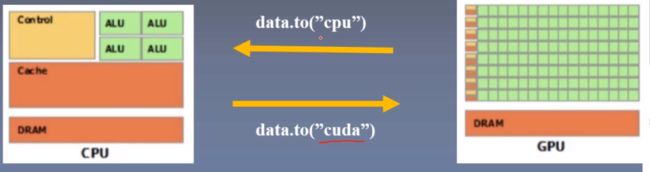

2.数据迁移至GPU

数据迁移:通过to函数实现数据迁移

两种数据类型data:Tensor、Module

to函数:转换数据类型/设备

- tensor.to(*args,**kwargs):可以转换数据类型,也可以转换设备

- module.to(*args,**kwargs):可以转换数据类型,也可以转换设备

区别:张量不执行inplace,模型执行inplace

代码:

定义device

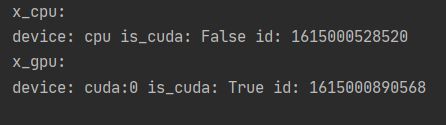

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")(1)tensor to cuda

# ========================== tensor to cuda

# flag = 0

flag = 1

if flag:

x_cpu = torch.ones((3, 3))

print("x_cpu:\ndevice: {} is_cuda: {} id: {}".format(x_cpu.device, x_cpu.is_cuda, id(x_cpu)))

x_gpu = x_cpu.to(device)

print("x_gpu:\ndevice: {} is_cuda: {} id: {}".format(x_gpu.device, x_gpu.is_cuda, id(x_gpu)))

# 弃用pytorch0.4.0之前的用法

# x_gpu = x_cpu.cuda()输出结果:

没有进行inplace操作

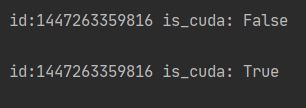

(2)module to cuda

# ========================== module to cuda

# flag = 0

flag = 1

if flag:

net = nn.Sequential(nn.Linear(3, 3))

print("\nid:{} is_cuda: {}".format(id(net), next(net.parameters()).is_cuda))

net.to(device)

print("\nid:{} is_cuda: {}".format(id(net), next(net.parameters()).is_cuda))输出结果:

进行了inplace操作

(3)gpu的数据用在gpu的模型上

# ========================== forward in cuda

# flag = 0

flag = 1

if flag:

output = net(x_gpu)

print("output is_cuda: {}".format(output.is_cuda))

# output = net(x_cpu)cpu的数据不能用在gpu的模型上

torch.cuda常用方法

- torch.cuda.device_count():计算当前可见可用gpu数

- torch.cuda.get_device_name():获取gpu名称

- toch.cuda.manual_seed():为当前gpu设置随机种子

- torch.cuda.manual_seed_all():为所有可见可用gpu设置随机种子

- torch.cuda.set_device():设置主gpu为哪一个物理gpu(不推荐)

代码:

# ========================== 选择 gpu

# flag = 0

flag = 1

if flag:

gpu_id = 0

gpu_str = "cuda:{}".format(gpu_id)

device = torch.device(gpu_str if torch.cuda.is_available() else "cpu")

x_cpu = torch.ones((3, 3))

x_gpu = x_cpu.to(device)

print("x_gpu:\ndevice: {} is_cuda: {} id: {}".format(x_gpu.device, x_gpu.is_cuda, id(x_gpu)))

# ========================== 查看 gpu数量/名称

# flag = 0

flag = 1

if flag:

device_count = torch.cuda.device_count()

print("\ndevice_count: {}".format(device_count))

device_name = torch.cuda.get_device_name(0)

print("\ndevice_name: {}".format(device_name))推荐(设置系统环境变量):os.environ.setdefault("CUDA_VISIBLE_DEVICES","2,3") #设置可见逻辑gpu 2,3

物理gpu是在电脑上的显卡

逻辑gpu是python脚本中可见的数量

逻辑gpu<=物理gpu

设置可见gpu2,3,在逻辑gpu中,gpu0用的是物理gpu2,gpu2用的是物理gpu3

如果可见gpu设置为0,3,2呢?

默认第0个gpu为主gpu

为什么要分主gpu这个概念呢?

这与多gpu运算的分发并行机制有关

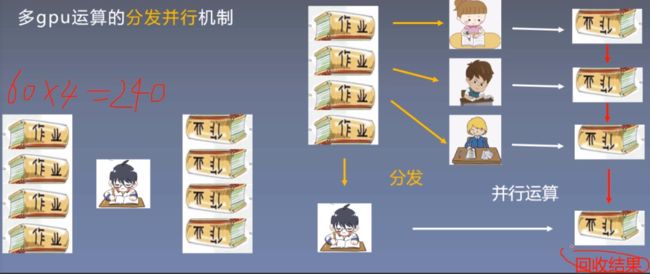

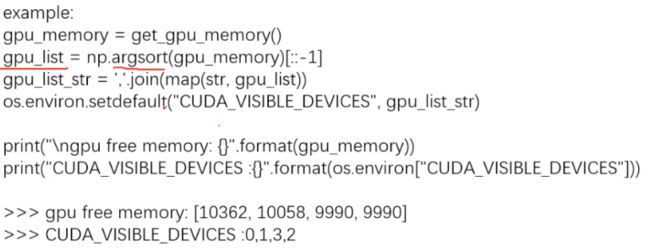

3.多GPU并行运算

多gpu运算的分发并行机制

分发→并行运算→结果回收

例:做一份作业需要60分钟,做四份作业需要4*60 = 240分钟

而4个人一起做,一人做一份,分发作业三分钟,做作业60分钟,回收作业3分钟,只需要66分钟,极大增加了运算速度

将回收结果存储到主gpu中即gpu0

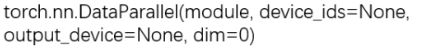

torch.nn.DataParallel

功能:包装模型,实现分发并行机制

主要参数:

- module:需要包装分发的模型

- device_ids:可分发的gpu,默认分发到所有可见可用gpu

- output_device:结果输出设备,主gpu

代码:

# -*- coding: utf-8 -*-

import os

import numpy as np

import torch

import torch.nn as nn

# ============================ 手动选择gpu

# flag = 0

flag = 1

if flag:

gpu_list = [0,1]

gpu_list_str = ','.join(map(str, gpu_list))

os.environ.setdefault("CUDA_VISIBLE_DEVICES", gpu_list_str)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# ============================ 依内存情况自动选择主gpu

# flag = 0

flag = 1

if flag:

def get_gpu_memory():

import platform

if 'Windows' != platform.system():

import os

os.system('nvidia-smi -q -d Memory | grep -A4 GPU | grep Free > tmp.txt')

memory_gpu = [int(x.split()[2]) for x in open('tmp.txt', 'r').readlines()]

os.system('rm tmp.txt')

else:

memory_gpu = False

print("显存计算功能暂不支持windows操作系统")

return memory_gpu

gpu_memory = get_gpu_memory()

if gpu_memory:

print("\ngpu free memory: {}".format(gpu_memory))

gpu_list = np.argsort(gpu_memory)[::-1]

gpu_list_str = ','.join(map(str, gpu_list))

os.environ.setdefault("CUDA_VISIBLE_DEVICES", gpu_list_str)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

class FooNet(nn.Module):

def __init__(self, neural_num, layers=3):

super(FooNet, self).__init__()

self.linears = nn.ModuleList([nn.Linear(neural_num, neural_num, bias=False) for i in range(layers)])

def forward(self, x):

print("\nbatch size in forward: {}".format(x.size()[0]))

for (i, linear) in enumerate(self.linears):

x = linear(x)

x = torch.relu(x)

return x

if __name__ == "__main__":

batch_size = 16

# data

inputs = torch.randn(batch_size, 3)

labels = torch.randn(batch_size, 3)

inputs, labels = inputs.to(device), labels.to(device)

# model

net = FooNet(neural_num=3, layers=3)

net = nn.DataParallel(net)

net.to(device)

# training

for epoch in range(1):

outputs = net(inputs)

print("model outputs.size: {}".format(outputs.size()))

print("CUDA_VISIBLE_DEVICES :{}".format(os.environ["CUDA_VISIBLE_DEVICES"]))

print("device_count :{}".format(torch.cuda.device_count()))

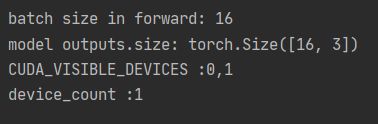

输出结果:

我们设置了可见gpu为0,1

但是计算的gpu个数却为0,是因为我的电脑只有一个gpu

如果有多个gpu就可以进行并行运算

下面看一下多gpu是怎样的

batchsize会除以gpu的个数

查询当前gpu内存剩余

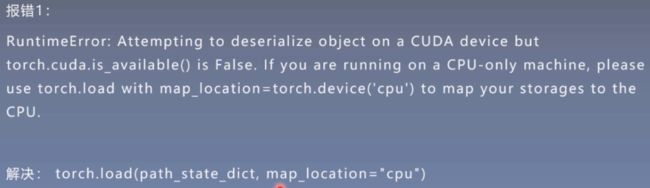

gpu模型加载

模型加载到了一个不可用gpu上,仅能在cpu上加载

class FooNet(nn.Module):

def __init__(self, neural_num, layers=3):

super(FooNet, self).__init__()

self.linears = nn.ModuleList([nn.Linear(neural_num, neural_num, bias=False) for i in range(layers)])

def forward(self, x):

print("\nbatch size in forward: {}".format(x.size()[0]))

for (i, linear) in enumerate(self.linears):

x = linear(x)

x = torch.relu(x)

return x# =================================== 加载至cpu

flag = 0

# flag = 1

if flag:

gpu_list = [0]

gpu_list_str = ','.join(map(str, gpu_list))

os.environ.setdefault("CUDA_VISIBLE_DEVICES", gpu_list_str)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

net = FooNet(neural_num=3, layers=3)

net.to(device)

# save

net_state_dict = net.state_dict()

path_state_dict = "./model_in_gpu_0.pkl"

torch.save(net_state_dict, path_state_dict)

# load

# state_dict_load = torch.load(path_state_dict)

state_dict_load = torch.load(path_state_dict, map_location="cpu")

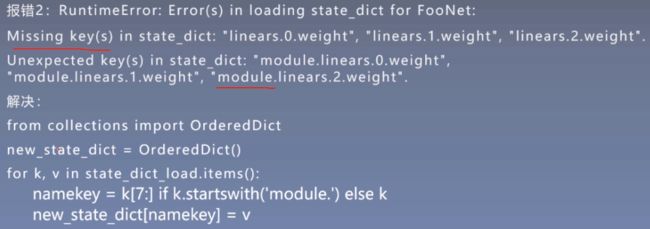

print("state_dict_load:\n{}".format(state_dict_load))训练时采用多gpu训练,导致module字典命名不匹配

代码:

# =================================== 多gpu 保存

flag = 0

# flag = 1

if flag:

if torch.cuda.device_count() < 2:

print("gpu数量不足,请到多gpu环境下运行")

import sys

sys.exit(0)

gpu_list = [0, 1, 2, 3]

gpu_list_str = ','.join(map(str, gpu_list))

os.environ.setdefault("CUDA_VISIBLE_DEVICES", gpu_list_str)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

net = FooNet(neural_num=3, layers=3)

net = nn.DataParallel(net)

net.to(device)

# save

net_state_dict = net.state_dict()

path_state_dict = "./model_in_multi_gpu.pkl"

torch.save(net_state_dict, path_state_dict)

# =================================== 多gpu 加载

# flag = 0

flag = 1

if flag:

net = FooNet(neural_num=3, layers=3)

path_state_dict = "./model_in_multi_gpu.pkl"

state_dict_load = torch.load(path_state_dict, map_location="cpu")

print("state_dict_load:\n{}".format(state_dict_load))

# net.load_state_dict(state_dict_load)

# remove module.

from collections import OrderedDict

new_state_dict = OrderedDict()

for k, v in state_dict_load.items():

namekey = k[7:] if k.startswith('module.') else k

new_state_dict[namekey] = v

print("new_state_dict:\n{}".format(new_state_dict))

net.load_state_dict(new_state_dict)四、Pytorch常见报错

1.Pytorch常见报错

报错代码:

# ========================== 1 num_samples=0

flag = 0

# flag = 1

if flag:

# train_dir = os.path.join("..", "data", "rmb_split", "train")

train_dir = os.path.join("..", "..", "data", "rmb_split", "train")

train_data = RMBDataset(data_dir=train_dir)

# 构建DataLoder

train_loader = DataLoader(dataset=train_data, batch_size=16, shuffle=True)

# ========================== 2

# TypeError: pic should be PIL Image or ndarray. Got

flag = 0

# flag = 1

if flag:

train_transform = transforms.Compose([

transforms.Resize((224, 224)),

transforms.FiveCrop(200),

transforms.Lambda(lambda crops: torch.stack([(transforms.ToTensor()(crop)) for crop in crops])),

# transforms.ToTensor(),

# transforms.ToTensor(),

])

train_dir = os.path.join("..", "..", "data", "rmb_split", "train")

train_data = RMBDataset(data_dir=train_dir, transform=train_transform)

train_loader = DataLoader(dataset=train_data, batch_size=16, shuffle=True)

data, label = next(iter(train_loader))

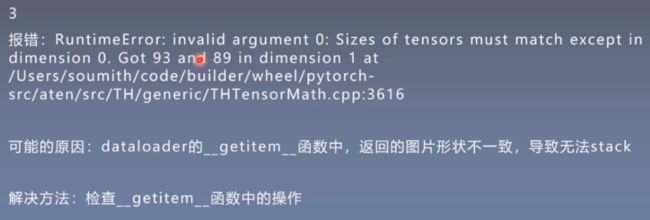

# ========================== 3

# RuntimeError: invalid argument 0: Sizes of tensors must match except in dimension 0

flag = 0

# flag = 1

if flag:

class FooDataset(Dataset):

def __init__(self, num_data, data_dir=None, transform=None):

self.foo = data_dir

self.transform = transform

self.num_data = num_data

def __getitem__(self, item):

size = torch.randint(63, 64, size=(1, ))

fake_data = torch.zeros((3, size, size))

fake_label = torch.randint(0, 10, size=(1, ))

return fake_data, fake_label

def __len__(self):

return self.num_data

foo_dataset = FooDataset(num_data=10)

foo_dataloader = DataLoader(dataset=foo_dataset, batch_size=4)

data, label = next(iter(foo_dataloader))

# ========================== 4

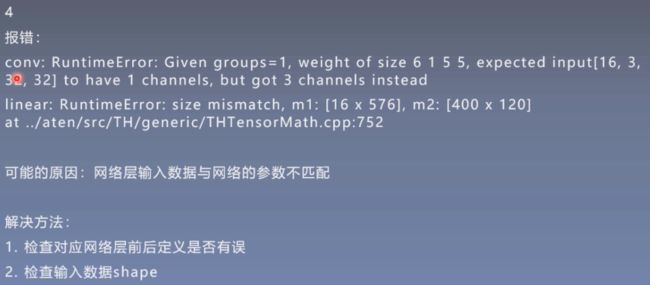

# Given groups=1, weight of size 6 3 5 5, expected input[16, 1, 32, 32] to have 3 channels, but got 1 channels instead

# RuntimeError: size mismatch, m1: [16 x 576], m2: [400 x 120] at ../aten/src/TH/generic/THTensorMath.cpp:752

flag = 0

# flag = 1

if flag:

class FooDataset(Dataset):

def __init__(self, num_data, shape, data_dir=None, transform=None):

self.foo = data_dir

self.transform = transform

self.num_data = num_data

self.shape = shape

def __getitem__(self, item):

fake_data = torch.zeros(self.shape)

fake_label = torch.randint(0, 10, size=(1, ))

if self.transform is not None:

fake_data = self.transform(fake_data)

return fake_data, fake_label

def __len__(self):

return self.num_data

# ============================ step 1/5 数据 ============================

channel = 3 # 1 3

img_size = 32 # 36 32

train_data = FooDataset(num_data=32, shape=(channel, img_size, img_size))

train_loader = DataLoader(dataset=train_data, batch_size=16, shuffle=True)

# ============================ step 2/5 模型 ============================

net = LeNet(classes=2)

# ============================ step 3/5 损失函数 ============================

criterion = nn.CrossEntropyLoss()

# ============================ step 4/5 优化器 ============================

optimizer = optim.SGD(net.parameters(), lr=0.01, momentum=0.9) # 选择优化器

scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10)

# ============================ step 5/5 训练 ============================

data, label = next(iter(train_loader))

outputs = net(data)

# ========================== 5

# AttributeError: 'DataParallel' object has no attribute 'linear'

flag = 0

# flag = 1

if flag:

class FooNet(nn.Module):

def __init__(self):

super(FooNet, self).__init__()

self.linear = nn.Linear(3, 3, bias=True)

self.conv1 = nn.Conv2d(3, 6, 5)

self.pool1 = nn.MaxPool2d(5)

def forward(self, x):

return 1234567890

net = FooNet()

for layer_name, layer in net.named_modules():

print(layer_name)

net = nn.DataParallel(net)

for layer_name, layer in net.named_modules():

print(layer_name)

print(net.module.linear)

# ========================== 6

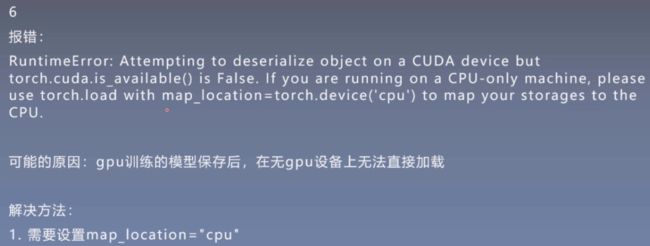

# RuntimeError: Attempting to deserialize object on a CUDA device but torch.cuda.is_available() is False.

# If you are running on a CPU-only machine, please use torch.load with map_location=torch.device('cpu')

# to map your storages to the CPU.

flag = 0

# flag = 1

if flag:

path_state_dict = "./model_in_multi_gpu.pkl"

state_dict_load = torch.load(path_state_dict)

# state_dict_load = torch.load(path_state_dict, map_location="cpu")

# ========================== 7

# AttributeError: Can't get attribute 'FooNet2' on = 0 && cur_target < n_classes' failed.

flag = 0

# flag = 1

if flag:

inputs = torch.tensor([[1, 2], [1, 3], [1, 3]], dtype=torch.float)

target = torch.tensor([0, 0, 1], dtype=torch.long)

criterion = nn.CrossEntropyLoss()

loss = criterion(inputs, target)

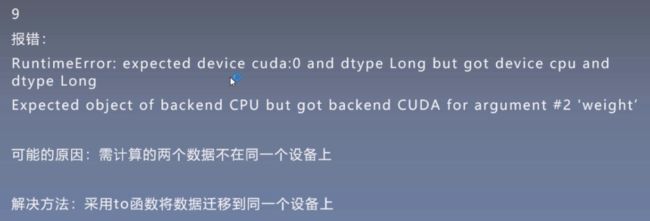

# ========================== 9

# RuntimeError: expected device cuda:0 and dtype Long but got device cpu and dtype Long

flag = 0

# flag = 1

if flag:

x = torch.tensor([1])

w = torch.tensor([2]).to(device)

# y = w * x

x = x.to(device)

y = w * x

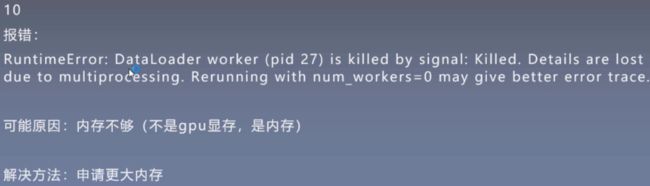

# ========================== 10

# RuntimeError: Expected object of backend CPU but got backend CUDA for argument #2 'weight'

# flag = 0

flag = 1

if flag:

def data_loader(num_data):

for _ in range(num_data):

img_ = torch.randn(1, 3, 224, 224)

label_ = torch.randint(0, 10, size=(1,))

yield img_, label_

resnet18 = models.resnet18()

resnet18.to(device)

for inputs, labels in data_loader(2):

# inputs.to(device)

# labels.to(device)

# outputs = resnet18(inputs)

inputs = inputs.to(device)

labels = labels.to(device)

outputs = resnet18(inputs)

print("outputs device:{}".format(outputs.device))