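- [python] 11. Input and Output

lmk565

python, programming language

11. Input and output. Python has two ways of outputting values: expression statements and the print() function. A third way is the write() method of file objects; the standard output file can be referenced as sys.stdout. If you want more varied output formatting, you can use the str.format() method to format output values. If you want to convert output values to strings, you can use the repr() or str() function. str(): returns a human-readable representation. repr(): produces an interpreter-readable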
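
A minimal sketch of the output options described above, using only the standard library; the variable names and sample values are illustrative.

```python
import sys

value = "hello\n"

# print() converts its arguments with str() and writes them to sys.stdout.
print("Value:", 42)

# The write() method of a file object; sys.stdout is the standard output file.
sys.stdout.write("written via sys.stdout.write\n")

# str.format() for formatted output: {1:.2f} rounds to two decimal places.
line = "{0} has {1:.2f} points".format("Alice", 3.14159)
print(line)  # Alice has 3.14 points

# str() gives the human-readable form (a real newline at the end);
# repr() gives the interpreter-readable form (quotes and a visible \n escape).
readable = str(value)
literal = repr(value)
print(readable, end="")
print(literal)
```

Feeding the repr() output back to `eval()` recreates the original string, which is what "interpreter-readable" means in practice.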

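- Building a Multilingual Blog Website with Python and Django

程序员~小强

python, django, sqlite

As the internet has grown, blogs have become an important platform for obtaining information and sharing ideas. However, users in different countries and regions speak different languages, which makes internationalizing a blog challenging. This article describes how to use Python and the Django web framework to build a blog website that supports multiple languages. Django framework overview: Django is an open-source Web application framework written in Python. It encourages rapid development and clean design. By providing a large number of commonly used components, Django lets you build high-quality Web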

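- python pip and Common Mirror Sources in China

sunny05296

python, pip, programming language

Common pip mirrors in China. By default pip downloads packages from PyPI servers abroad, which can be very slow, so using a mirror inside China is recommended. Common mirrors include: Tsinghua mirror https://pypi.tuna.tsinghua.edu.cn/simple; USTC mirror https://pypi.mirrors.ustc.edu.cn/simple; Aliyun mirror https://mirrors.aliyun.com/pypi/simple. When pip installs components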
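
A small sketch, assuming you want to drive pip from Python with one of the mirrors listed above; pip's `-i` option (long form `--index-url`) selects the package index. The helper name `pip_install_cmd` is hypothetical, and it only builds the command list so it can be inspected before being passed to `subprocess.run`.

```python
import sys

MIRRORS = {
    "tsinghua": "https://pypi.tuna.tsinghua.edu.cn/simple",
    "ustc": "https://pypi.mirrors.ustc.edu.cn/simple",
    "aliyun": "https://mirrors.aliyun.com/pypi/simple",
}

def pip_install_cmd(package: str, mirror: str = "tsinghua") -> list[str]:
    """Build `python -m pip install -i <mirror URL> <package>` (hypothetical helper)."""
    index_url = MIRRORS[mirror]
    # sys.executable makes pip install into the current interpreter's environment.
    return [sys.executable, "-m", "pip", "install", "-i", index_url, package]

# Usage (not executed here):
#   import subprocess
#   subprocess.run(pip_install_cmd("requests"), check=True)
```

To make a mirror the default instead of passing it each time, `pip config set global.index-url <URL>` writes it to pip's configuration file.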

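- Python Data Analysis from Scratch (7): Getting Started with Object-Oriented Programming in Python

kakaZhui

python, data analysis, excel

Preface. To recap, we have already covered Python's basic syntax, data types, common data structures, file operations, exception handling, and more. So far we have mainly used procedural programming, writing code step by step to solve a problem. That is sufficient for simple tasks, but as programs grow more complex and the amount of code increases, procedural programming starts to struggle: the code becomes hard to organize, reuse, and maintain. Challenges posed by code complexity: procedural v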
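
A minimal sketch contrasting the two styles on a toy task; the `Account` class and its methods are invented for illustration and are not taken from the original series.

```python
# Procedural style: the data (a balance) and the function acting on it are separate.
def deposit(balance: float, amount: float) -> float:
    return balance + amount


# Object-oriented style: the class bundles data (attributes) with behavior (methods).
class Account:
    def __init__(self, owner: str, balance: float = 0.0):
        self.owner = owner
        self.balance = balance

    def deposit(self, amount: float) -> None:
        # The object can enforce its own rules instead of trusting every caller.
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.balance += amount

    def __repr__(self) -> str:
        return f"Account(owner={self.owner!r}, balance={self.balance})"


acct = Account("Alice")
acct.deposit(100)
print(acct)  # Account(owner='Alice', balance=100.0)
```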

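- Nginx + CertBot: Configuring an HTTPS Wildcard Certificate (Rocky Linux 9.4)

Installing nginx is omitted here; the example assumes nginx is installed in the '/usr/local/nginx-1.23.3' directory. 1. Install certbot. Update the package list: `sudo dnf update`. Install the EPEL repository, which provides many useful packages including certbot: `sudo dnf install -y epel-release`. Install Certbot and the Nginx plugin: dnf install -y certbot python3-certbot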

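- OCR Extraction + Recognition Approach

ocr

1. Content extraction: use YOLO to extract the regions to be recognized. 1.1 Install ultralytics. Create a virtual environment (optional): `python -m venv .venv`. Activate the virtual environment. Activating it changes your shell prompt to show which virtual environment you are using, and modifies the environment so that running `python` gets that particular version and installation of Python. For example: `source .venv/bin/activate`. Show all packages installed in the virtual environment: python -m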
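
The same setup can be driven from Python's standard-library `venv` module, which is what `python -m venv` runs. This sketch creates an environment in a temporary directory (an assumption so it cleans up after itself) and shows one common way to tell whether the current interpreter is running inside a virtual environment.

```python
import sys
import tempfile
import venv
from pathlib import Path


def in_virtualenv() -> bool:
    # Inside a venv, sys.prefix points at the environment, while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


with tempfile.TemporaryDirectory() as tmp:
    env_dir = Path(tmp) / ".venv"
    # with_pip=False keeps the sketch fast; with_pip=True also bootstraps pip.
    venv.create(env_dir, with_pip=False)
    # Every virtual environment has a pyvenv.cfg file at its root.
    created = (env_dir / "pyvenv.cfg").exists()
    print("created:", created)

print("running inside a venv:", in_virtualenv())
```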

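- OpenAI Agents SDK Chinese Documentation and Tutorial (7)

wtsolutions

openai, agents, sdk, python, openai sdk, Chinese documentation

For the original English documentation, see OpenAI Agents SDK at https://openai.github.io/openai-agents-python/. This article is a Chinese version of the OpenAI-agents-sdk-python documentation/tutorial produced with translation software. It is published as a series of posts with the following contents: (1) OpenAI Agents SDK: introduction and quick start (2) OpenAI Agents SDK: agents, running agents, results, streaming, tools, handoffs (3)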

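- Oracle Time Formatting to datetime; Mastering Oracle+Python, Part 2: Working with Times and Dates

照月鱼yoyi

oracle, time formatting, to datetime

Author: Przemyslaw Piotrowski. An introduction to date handling in Oracle and Python. Published September 2007. Starting with Python 2.4, cx_Oracle handles the DATE and TIMESTAMP data types natively, mapping the values of such columns to datetime objects from Python's datetime module. Because datetime objects support arithmetic operations in place, this brings certain advantages. Built-in time zone support and several dedicated modules make Python a real-time machine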
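
To illustrate the arithmetic that `datetime` objects support, here is a sketch using standard-library objects only; no Oracle connection is involved, and the dates are made up.

```python
from datetime import datetime, timedelta, timezone

published = datetime(2007, 9, 1, 12, 0)

# datetime + timedelta -> a new datetime.
later = published + timedelta(days=30)
print(later)  # 2007-10-01 12:00:00

# datetime - datetime -> a timedelta.
gap = datetime(2007, 12, 31) - datetime(2007, 9, 1)
print(gap.days)  # 121

# Built-in time zone support: mark the value as UTC, then convert to UTC+08:00.
utc_time = published.replace(tzinfo=timezone.utc)
local = utc_time.astimezone(timezone(timedelta(hours=8)))
print(local.isoformat())  # 2007-09-01T20:00:00+08:00
```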

- Python --**kwargs

潇湘馆记

python

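In Python, **kwargs is a special syntax used in a function definition to accept any number of keyword arguments (that is, key-value arguments) and store them as a dictionary. It is a powerful tool for handling dynamic arguments and suits situations where arguments need to be passed flexibly. 1. Basic syntax. Definition: use **kwargs in the function's parameter list (the name can be anything, but kwargs is the usual convention). Argument type: kwargs is a dictionary whose keys are the parameter names and whose values are the corresponding argument values. Example: def print_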
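
A minimal sketch of the syntax just described; `describe` is a hypothetical function name, since the original example's name is cut off.

```python
def describe(name: str, **kwargs) -> str:
    # kwargs is an ordinary dict: keyword names -> values, in call order.
    extras = ", ".join(f"{key}={value}" for key, value in kwargs.items())
    return f"{name}({extras})"


print(describe("user", age=30, city="Beijing"))  # user(age=30, city=Beijing)

# At the call site, ** unpacks an existing dict into keyword arguments.
options = {"debug": True, "level": 2}
print(describe("config", **options))  # config(debug=True, level=2)

# With no keyword arguments, kwargs is simply an empty dict.
print(describe("empty"))  # empty()
```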

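- Python Data Analysis in Practice: Analyzing the Development of the Cross-Border E-Commerce Industry

萧十一郎@

python, data analysis, programming language

Contents: 1. Case background; 2. Code implementation: 2.1 Data collection, 2.2 Exploratory data analysis, 2.3 Data cleaning, 2.4 Data analysis (2.4.1 Geographic distribution of cross-border e-commerce consumers, 2.4.2 Relationship between product sales and price, 2.4.3 Forecasting the industry's future development); 3. Key difficulties in the code: 3.1 Data collection, 3.2 Data cleaning: processing sales data, 3.3 Data analysis: geographic distribution of consumers, 3.4 Data analysis: relationship between product sales and price, 3.5 Data visualization; 4. Possible improvements to the code: 4.1 Data collection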

- A Complete Guide to Web Crawlers and Network Security

Hacker_LaoYi

crawler, web security, network

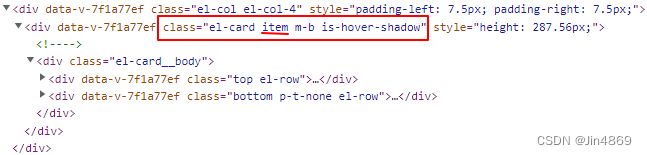

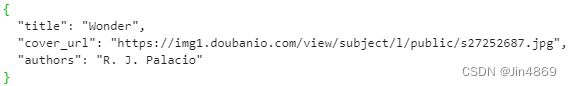

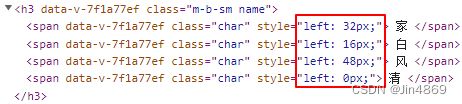

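1. Understanding web crawlers. A web crawler is a program that automatically collects information from the internet, either targeted or untargeted. There are many kinds of web crawlers; common ones are batch crawlers, incremental crawlers (general-purpose crawlers), and vertical crawlers (focused crawlers). 2. How web crawlers work. General-purpose crawler: given an initial URL, the crawler automatically fetches all URLs on that page and stores the addresses already crawled in a crawled list. New URLs are placed in a queue and read in turn, each time checking whether the configured stop condition has been met. Focused crawler: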
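
The general-purpose crawling loop described above (seed URL, queue of new URLs, crawled list, stop condition) can be sketched as a breadth-first traversal. To stay self-contained, the sketch walks a hypothetical in-memory link graph instead of fetching real pages, and uses a page limit as the stop condition.

```python
from collections import deque

# Hypothetical link graph standing in for real pages: URL -> links on that page.
LINKS = {
    "https://example.com/": ["https://example.com/a", "https://example.com/b"],
    "https://example.com/a": ["https://example.com/b", "https://example.com/c"],
    "https://example.com/b": ["https://example.com/"],
    "https://example.com/c": [],
}


def crawl(seed: str, max_pages: int = 10) -> list[str]:
    queue = deque([seed])  # URLs waiting to be fetched
    crawled = []           # the "crawled list", in visiting order
    seen = {seed}          # every URL ever queued, so none is queued twice
    while queue and len(crawled) < max_pages:  # stop condition
        url = queue.popleft()
        crawled.append(url)
        # A real crawler would download `url` and parse its links here.
        for link in LINKS.get(url, []):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return crawled


print(crawl("https://example.com/"))
```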

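- Fetching AliExpress Product Information with a Python Crawler: A Practical Guide to the item_search API

JelenaAPI小小爬虫

Python, API, python crawler, programming language

Introduction. In the wave of global e-commerce, the power of data should not be underestimated. For e-commerce analysts, market researchers, and online merchants, being able to obtain product information quickly is essential. AliExpress, a well-known global cross-border e-commerce platform, offers rich product data. This article describes how to use a Python crawler together with the item_search API to search for and retrieve AliExpress product information by keyword. 1. Why choose a Python crawler. Thanks to its concise syntax and powerful library support, Python has become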

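- Writing Python Quantitative Trading Code for Xuntou QMT with the DeepSeek R1 Model

wtsolutions

qmt, quantitative trading, python, deepseek, quantitative trading code generation

With the rapid development of AI, using AI tools to improve productivity has become an important technique for modern developers. When I generated Xuntou QMT code on the official DeepSeek website, DeepSeek produced xtquant code, that is, miniQMT code, rather than code usable in what we traditionally call the full ("big") QMT. Therefore we need to build our own knowledge base so that DeepSeek generates trading code usable in the full QMT based on the knowledge in it. 1. Building a Xuntou QMT knowledge base. To build a Xuntou QM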

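- Qt Host-Computer Programming Naming Conventions (Working Version)

有追求的菜鸟

qt, programming language

The main principles follow the Qt host-computer programming naming conventions. 1. File/folder case. For cross-platform compatibility, all-lowercase names are a widely adopted convention used in many large open-source projects (such as the Linux kernel and the Python standard library). 1.1 Matching file extensions: file names are usually lowercase and paired with lowercase extensions (such as .h, .cpp, .json) for a consistent overall style: main.cpp, config.json, utils.h. 1.2 Folder and .pri file naming: usually lowercase, separated by underscores: control_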
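
The lowercase, underscore-separated convention can be checked mechanically. This sketch is an illustration under assumptions, not part of the original guideline: the regular expression accepts lowercase letters and digits joined by underscores, with optional lowercase extensions, and `serial_port.pri` is a hypothetical example name.

```python
import re

# Assumed rule: lowercase words joined by underscores, then optional lowercase extension(s).
NAME_RE = re.compile(r"[a-z0-9]+(?:_[a-z0-9]+)*(?:\.[a-z0-9]+)*")


def follows_convention(name: str) -> bool:
    # fullmatch requires the whole name to match, not just a prefix.
    return NAME_RE.fullmatch(name) is not None


for name in ["main.cpp", "config.json", "utils.h", "serial_port.pri", "MainWindow.cpp", "my-utils.h"]:
    print(name, follows_convention(name))
```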

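- Best Practices for Large-Scale Data Visualization with Bokeh [From Static Charts to Real-Time Updates]

步入烟尘

algorithm guide, information visualization, Bokeh, python

This article is included in the column "Python Super Beginner's Guide (Complete Edition)". The column is a complete course for readers starting from zero and for those who want to advance, progressing step by step from the basics to mastery, with hands-on projects to follow so you can handle interviews with ease. Column subscription URL: https://blog.csdn.net/mrdeam/category_12647587.html. Benefits: the paid column is available at a limited-time subscription price of 19.9; message the author to join the full-stack VIP Q&A group and get priority answers from the author (code guidance, remote service); the group has many experts who can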

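- An Easy Guide to the Python Web Scraping + Data Visualization Workflow

liuhaoran___

python

The Python scraping plus data visualization workflow consists mainly of crawling the required data from the web and then using suitable libraries to present the analysis results graphically, helping users understand the information behind the data more intuitively. Python scraping + data visualization steps: 1. Fetch data from the target website: use the `requests` or `selenium` library to scrape information from web pages; for pages whose content is loaded dynamically, consider pairing with a JavaScript rendering engine. 2. Parse the HTML and extract useful information: common tools such as BeautifulSo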
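
BeautifulSoup is a third-party package; to keep this sketch self-contained it uses the standard library's `html.parser.HTMLParser` instead, which is a swapped-in technique rather than the article's. It extracts link targets and text from a hard-coded HTML snippet (an assumption standing in for a page fetched with `requests`).

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect (href, text) pairs for every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None  # href of the <a> currently open, if any
        self._text = []    # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


html = '<ul><li><a href="/p/1">Item <b>one</b></a></li><li><a href="/p/2">Item two</a></li></ul>'
parser = LinkExtractor()
parser.feed(html)
print(parser.links)  # [('/p/1', 'Item one'), ('/p/2', 'Item two')]
```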

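- Real-Time Data Visualization in Python with the Bokeh Library

Oona_01

python, information visualization, data analysis

Python is known for its rich data science ecosystem, and Bokeh, a powerful visualization tool, provides excellent support for visualizing real-time data. This article describes how to use Bokeh to visualize real-time data and includes code examples for reference. Contents: Real-time data visualization with Python's Bokeh library; Introduction to Bokeh; Requirements for real-time data visualization; Steps to implement real-time visualization with Bokeh; Code example; Advanced Bokeh applications; Summary. Using Python's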
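
Bokeh's `ColumnDataSource.stream()` accepts a `rollover` argument that caps how many points are kept as new data arrives. The sketch below reproduces only that sliding-window idea with the standard library's `collections.deque`, so it runs without Bokeh installed; it is an illustration, not Bokeh's implementation, and the `RollingSeries` name is hypothetical.

```python
from collections import deque


class RollingSeries:
    """Keep only the newest `rollover` points, like a streaming plot window."""

    def __init__(self, rollover: int):
        self.x = deque(maxlen=rollover)
        self.y = deque(maxlen=rollover)

    def stream(self, new_x, new_y) -> None:
        # A deque with maxlen silently drops its oldest items once full.
        self.x.extend(new_x)
        self.y.extend(new_y)

    def data(self) -> dict:
        return {"x": list(self.x), "y": list(self.y)}


series = RollingSeries(rollover=3)
for t in range(5):
    series.stream([t], [t * t])  # one new sample per "tick"
print(series.data())  # {'x': [2, 3, 4], 'y': [4, 9, 16]}
```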

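- Solving the "Reverse Concatenation of a Specific Array" Problem in Python

啥都鼓捣的小yao

classic algorithm practice, python, algorithm, programming language

Solving the "reverse concatenation of a specific array" problem in Python: problem description, test cases, approach, code. Problem description: Xiao U is given a number n, and his task is to construct a specific array. The construction rule is: for each i from 1 to n, append the numbers from n down to i in descending order, until i equals n. Finally, output the concatenated array. For example, when n is 3, the concatenated array is [3,2,1,3,2,3]. Test cases: Sample 1: input n=3, output [3,2,1,3,2,3]. Sample 2: input n=4, output [4,3,2,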
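
The construction rule translates directly into a loop; this is a sketch of one straightforward approach, and `build_array` is a hypothetical name rather than the function signature the original exercise expects.

```python
def build_array(n: int) -> list[int]:
    """Concatenate n..1, then n..2, ..., then n..n (hypothetical function name)."""
    result = []
    for i in range(1, n + 1):
        # Append n, n-1, ..., i in descending order.
        result.extend(range(n, i - 1, -1))
    return result


print(build_array(3))  # [3, 2, 1, 3, 2, 3]
```

The output has n + (n-1) + ... + 1 = n(n+1)/2 elements, so the running time is O(n²), which is unavoidable since that is the size of the answer.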

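- Best Practices for Large-Scale Data Visualization with Bokeh in Python

一键难忘

Bokeh, python, programming language

Best practices for large-scale data visualization with Bokeh. In large-scale data processing and analysis, data visualization is a crucial step. Bokeh is an interactive data visualization library widely used in the Python ecosystem, with strong scalability and flexibility. This article describes how to use Bokeh for large-scale data visualization and provides best practices and code examples to help you present the important information in large datasets efficiently. 1. Why choose Bokeh? Bokeh is a visualization library designed for rendering in the browser, and it supports efficient rend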
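
A common tactic for large datasets is to reduce the number of points before sending them to the browser. The sketch below shows min/max decimation per bucket in plain Python, chosen here for illustration rather than taken from the article or from a Bokeh API: each bucket keeps its lowest and highest sample, so peaks stay visible while the point count drops to at most twice the number of buckets.

```python
def minmax_downsample(ys: list[float], buckets: int) -> list[tuple[int, float]]:
    """Return (index, value) pairs: the min and max of each bucket, in index order."""
    if buckets <= 0:
        raise ValueError("buckets must be positive")
    n = len(ys)
    size = max(1, -(-n // buckets))  # ceiling division: samples per bucket
    out = []
    for start in range(0, n, size):
        chunk = range(start, min(start + size, n))
        lo = min(chunk, key=ys.__getitem__)
        hi = max(chunk, key=ys.__getitem__)
        # Keep the two extremes in their original order (one point if they coincide).
        for i in sorted({lo, hi}):
            out.append((i, ys[i]))
    return out


data = [0, 5, 1, 1, 9, 2, 3, 3, 0, 7]
print(minmax_downsample(data, 3))
```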

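- Python Web Scraping in Practice: Collecting Brand Feedback from Social Media and Analyzing Public Sentiment

西攻城狮北

python, crawler, media

1. Introduction. In today's digital age, social media has become an important channel for the public to express opinions and share information. A brand's reputation and market performance are often shaped by consumers' feedback and reviews on social platforms, which makes brand sentiment analysis essential. This article describes how to use web scraping to collect brand feedback from social media and how to analyze the brand's public sentiment with data analysis techniques. 2. Environment setup. Before you begin, make sure the following Python libraries are installed in your development environment: requests: for sending HTTP requests. beautiful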
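
Once feedback text has been scraped, a very simple way to get a first sentiment signal is lexicon-based scoring. The word lists and posts below are tiny, hypothetical examples; real analyses usually rely on a proper sentiment lexicon or a trained model.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny sentiment lexicons.
POSITIVE = {"great", "love", "fast", "reliable"}
NEGATIVE = {"bad", "slow", "broken", "refund"}


def score(text: str) -> int:
    # Lowercase, split into words, then count positive minus negative hits.
    words = re.findall(r"[a-z']+", text.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def label(text: str) -> str:
    s = score(text)
    return "positive" if s > 0 else "negative" if s < 0 else "neutral"


posts = [
    "Love this brand, shipping was fast!",
    "Product arrived broken, want a refund",
    "It is a phone.",
]
summary = Counter(label(p) for p in posts)
print(summary)
```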

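- Russian Speech-to-Text in Python with a Pretrained Model

啥都鼓捣的小yao

artificial intelligence, python, audio/video

Russian speech-to-text in Python with a pretrained model. The model is facebook/wav2vec2-xls-r-1b fine-tuned on Russian using the train and validation splits of Common Voice 8.0, Golos, and Multilingual TEDx. When using this model, make sure your speech input is sampled at 16kHz. You only need to install three packages and fill in your file path to use it: import torch, import librosa, from transformers import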
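
Because the model expects 16 kHz input, it can help to check a file's sample rate before transcribing. This sketch uses the standard library's `wave` module, so it only handles uncompressed WAV files (an assumption); for other formats or for resampling, the `librosa` import mentioned above is the natural tool, for example `librosa.load(path, sr=16000)` resamples on load. The files written here are generated silent clips, not real speech.

```python
import tempfile
import wave
from pathlib import Path

TARGET_RATE = 16_000  # sampling rate the fine-tuned model expects


def sample_rate(path: str) -> int:
    with wave.open(path, "rb") as wf:
        return wf.getframerate()


def write_silence(path: str, rate: int, seconds: float = 0.1) -> None:
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)   # mono
        wf.setsampwidth(2)   # 16-bit samples
        wf.setframerate(rate)
        wf.writeframes(b"\x00\x00" * int(rate * seconds))


with tempfile.TemporaryDirectory() as tmp:
    ok_file = str(Path(tmp) / "ok.wav")
    bad_file = str(Path(tmp) / "bad.wav")
    write_silence(ok_file, 16_000)
    write_silence(bad_file, 44_100)
    results = {name: sample_rate(p) == TARGET_RATE for name, p in [("ok", ok_file), ("bad", bad_file)]}
    print(results)  # {'ok': True, 'bad': False}
```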

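- Implementing "Machine Learning" in Python for a License Plate Recognition Project

我的sun&shine

Python, machine learning, computer vision

Research and implementation of Python-based CAPTCHA recognition. 1. Abstract. The main purpose of a CAPTCHA is to tell humans and computers apart, preventing automated scripts from carrying out malicious actions on websites; today the vast majority of websites use CAPTCHAs to block malicious scripts. Automatic CAPTCHA recognition is important for reducing automated login time and for reading CAPTCHA images that are hard to recognize. It can be achieved by processing the CAPTCHA image through grayscale conversion, binarization, removal of isolated noise pixels, character segmentation, normalization, feature extraction, training, and character recognition. First, the original image is converted to grayscale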
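
The first two preprocessing steps named above, grayscale conversion and binarization, can be sketched on a tiny hand-made RGB grid in pure Python. The ITU-R BT.601 luma weights (0.299, 0.587, 0.114) and the fixed threshold of 128 are common choices assumed here, not values taken from the original study.

```python
def to_gray(pixels):
    """RGB rows -> grayscale rows using ITU-R BT.601 luma weights."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row] for row in pixels]


def binarize(gray, threshold=128):
    """1 for dark (ink) pixels, 0 for light (background) pixels."""
    return [[1 if v < threshold else 0 for v in row] for row in gray]


# A 2x3 "image": dark strokes on a light background.
image = [
    [(255, 255, 255), (20, 20, 20), (250, 250, 250)],
    [(30, 30, 30), (10, 10, 10), (200, 210, 220)],
]
gray = to_gray(image)
print(gray)            # [[255, 20, 250], [30, 10, 208]]
print(binarize(gray))  # [[0, 1, 0], [1, 1, 0]]
```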

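- 6.8: How Does Python Handle Errors When Writing Files?

小兔子平安

Python complete learning Q&A, java, windows, html

Python is a powerful, easy-to-learn programming language and has become one of the most popular programming languages today. As Python's application areas keep expanding, more and more people are learning it, hoping to master this useful tool and turn more ideas into reality. File operations are an indispensable part of Python programming, and handling errors during file writes is an essential skill. This article explains how to handle errors when writing files in Python. We will cover in detail how to use try-except statements,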
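
A minimal sketch of the try-except approach, assuming the caller prefers a True/False result over an exception; `safe_write` is a hypothetical helper name. The more specific `PermissionError` is caught before its parent class `OSError`.

```python
import os
import tempfile


def safe_write(path: str, text: str) -> bool:
    """Write text to path; return False instead of raising if the OS refuses."""
    try:
        with open(path, "w", encoding="utf-8") as f:
            f.write(text)
    except PermissionError as exc:
        print(f"no permission to write {path}: {exc}")
        return False
    except OSError as exc:  # e.g. missing directory, disk full, invalid file name
        print(f"could not write {path}: {exc}")
        return False
    return True


with tempfile.TemporaryDirectory() as tmp:
    good = safe_write(os.path.join(tmp, "out.txt"), "hello")
    bad = safe_write(os.path.join(tmp, "missing_dir", "out.txt"), "hello")
    print(good, bad)  # True False
```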

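- An Efficient Cookiecutter Template for Python 3 Package Development: python-package-template

一只爪子

Summary: This article introduces a Cookiecutter template named python-package-template that simplifies Python package development. The template follows Python best practices and automatically creates the project structure, including setup.py, MANIFEST.in, LICENSE, README.md, .gitignore, requirements.txt, test configuration files, CI configuration files, a test directory, and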

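- Python List Operations: Computing and Printing a List's Length; Python Basics 2: Lists

想吃草莓干

1. Lists. A list is an ordered collection, much like a sequence in mathematics: its elements have a definite order. In Python a list is written with square brackets [], for example: `>>> a = ['Python', 'C', 'Java']`. 1. Accessing list elements. Indexing starts at 0. To print Python from the list above, we need to access the first element of the list. In Python, list access starts at 0, so an element's index is its position minus 1, and the index of the element to access goes inside the square brackets. If we want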
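
A short sketch of the indexing rules just described, using the same list; the negative index and the out-of-range case are added for illustration.

```python
a = ['Python', 'C', 'Java']

print(a[0])    # Python  (the first element is at index 0)
print(a[2])    # Java    (index = position - 1)
print(a[-1])   # Java    (negative indices count from the end)
print(len(a))  # 3       (the list's length)

try:
    a[3]  # one past the last valid index
except IndexError as exc:
    print("IndexError:", exc)  # IndexError: list index out of range
```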

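- Automated Template Construction for Python Projects: Understanding Cookiecutter in Depth

TEDDYYW

Summary: A standardized build process for Python projects is essential for clean, maintainable code. This article takes an in-depth look at how to use the Python command-line tool "cookiecutter" to automate project initialization. Cookiecutter reads a predefined template and automatically generates the project structure from user input, simplifying project setup. We will examine the composition of the "cookiecutter-python-master" template in detail, including the standard project structure, initialization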

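- Speeding Up Docker and Shrinking Images with Multi-Stage Builds: Building a FastAPI Application Image with File Viewing, Upload, and Copy Features

九不多

Docker, fastapi, python, YOLO

This article covers building a FastAPI application image with Docker, focusing on writing a multi-stage Dockerfile and the related operations. A multi-stage build not only speeds up the Docker build but also effectively reduces the image size. 1. Dockerfile contents. Here is the Dockerfile we will use: `FROM docker.1ms.run/python:3.9 AS builder` (stage 1: build the application), `WORKDIR /app` (set the working directory), then copy dep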

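- Creating Python Lists

只是没遇到

python

Python 3 lists. The sequence is the most basic data structure in Python. Every value in a sequence has a position, called its index: the first index is 0, the second is 1, and so on. Python has 6 built-in sequence types, but the most common are lists and tuples. Operations available on every list include indexing, slicing, addition, multiplication, and membership checks. In addition, Python has built-in ways to get the length of a sequence and to find its largest and smallest elements. The list is the most commonly used Python data type; it is written as comma-separated values inside square brackets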
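
A short sketch of the sequence operations listed above (slicing, addition, multiplication, membership, length, maximum, minimum); the sample numbers are arbitrary.

```python
nums = [3, 1, 4, 1, 5]

print(nums[1:3])      # [1, 4]                  slicing: start included, stop excluded
print(nums + [9, 2])  # [3, 1, 4, 1, 5, 9, 2]   addition concatenates
print([0] * 3)        # [0, 0, 0]               multiplication repeats
print(4 in nums)      # True                    membership check
print(len(nums), max(nums), min(nums))  # 5 5 1
```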

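- Solutions to Common Problems with the Python Best Practices Cookiecutter Project

柯茵沙

Solutions to common problems with the Python best practices Cookiecutter project. python-best-practices-cookiecutter: Python best practices project cookiecutter. Project URL: https://gitcode.com/gh_mirrors/py/python-best-practices-cookiecutter. Project overview: this project is a Python best practices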

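- Vision Mamba (mamba_ssm) Installation Pitfalls Guide

ggitjcg

deep learning, python

In this blog post I'll share some of the problems I ran into, and how I solved them, while installing and using Vision Mamba (mamba_ssm) on Linux. Pre-check: PyTorch and Python versions. Before installing mamba_ssm, make sure your PyTorch and Python versions are correct. The following code can be used to check the environment: import torch; print("PyTorch Version: {}".format(torch.__version__)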

- Initial Values of Primitive Types and Reference Types

3213213333332132

java basics

package com.array;

/**

* @Description Tests initial values

* @author FuJianyong

* 2015-1-22 10:31:53 AM

*/

public class ArrayTest {

ArrayTest at;

String str;

byte bt;

short s;

int i;

long

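- Excerpt Notes: "Writing High-Quality Code: 151 Suggestions for Improving Java Programs"

白糖_

high-quality code

About three years ago, when I had just joined the company, the colleague at the next desk saw that I had nothing to do and lent me "Writing High-Quality Code: 151 Suggestions for Improving Java Programs". I read a few pages at the time but didn't take it seriously and never studied it. Last month I happened to see it at the company again and picked it up once more, and wow, it is packed with practical content, a huge help for someone like me who struggles to settle down and focus.

After finishing the whole book, I also took quite a few notes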

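- [Memo] Common Django Commands and Best Practices

dongwei_6688

django

Note: this article is based on Django 1.8.2

Generate a database migration script (a Python script)

python manage.py makemigrations polls

Note: polls is the name of your app; adjust it to your own app name when running this command

View the SQL statements this migration will execute (this only shows the statements; it does not apply them to the database):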

python manage.p

- Factorial Algorithms, Part 1: How Many Trailing Zeros Does N! Have?

周凡杨

java, algorithm, factorial, interview, efficiency
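
The usual approach counts factors of 5: each trailing zero of N! comes from a factor 10 = 2 × 5, and factors of 2 are always more plentiful, so the count is floor(N/5) + floor(N/25) + floor(N/125) + .... The entry's own code is not preserved, so this is a sketch of that standard technique in Python rather than the author's Java solution.

```python
def trailing_zeros_of_factorial(n: int) -> int:
    """Count trailing zeros of n! as the sum of n // 5**k for k >= 1."""
    if n < 0:
        raise ValueError("n must be non-negative")
    count = 0
    power = 5
    while power <= n:
        # Multiples of 5 contribute one factor of 5; multiples of 25 one more, etc.
        count += n // power
        power *= 5
    return count


print(trailing_zeros_of_factorial(100))  # 24
```

This runs in O(log N) steps, whereas computing N! itself and counting zeros quickly becomes impractical for large N.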

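- Spring: Injecting Beans into a Servlet

g21121

Spring, injection

With the traditional configuration approach, beans or properties cannot be injected directly into a servlet, and configuring a proxy servlet is rather cumbersome. There is actually a fairly simple method: add the injection logic in the servlet's init() method: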

ServletContext application = getServletContext();

WebApplicationContext wac = WebApplicationContextUtil

- Jenkins Command-Line Operation Guide

510888780

centos

Assume the Jenkins URL is http://22.11.140.38:9080/jenkins/

The basic format is

java -jar jenkins-cli.jar [-s JENKINS_URL] command [options][args]

The purpose and basic usage of each command are described below

1.

- Detecting Chinese Characters with UnicodeBlock

布衣凌宇

UnicodeBlock

/** * Determines whether the input is a Chinese character */ public static boolean isChinese(char c) { Character.UnicodeBlock ub = Character.UnicodeBlock.of(c);
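
Python's standard library has no direct counterpart to Java's `Character.UnicodeBlock`, so this sketch approximates the check with code-point ranges. Treating the CJK Unified Ideographs block (U+4E00 to U+9FFF) and Extension A (U+3400 to U+4DBF) as "Chinese" is an assumption; the Java original, which is truncated here, may test additional blocks such as CJK punctuation.

```python
# Code-point ranges assumed to count as Chinese characters.
CJK_RANGES = (
    (0x4E00, 0x9FFF),  # CJK Unified Ideographs
    (0x3400, 0x4DBF),  # CJK Unified Ideographs Extension A
)


def is_chinese(c: str) -> bool:
    if len(c) != 1:
        raise ValueError("expected a single character")
    cp = ord(c)
    return any(lo <= cp <= hi for lo, hi in CJK_RANGES)


print([is_chinese(ch) for ch in "汉a字1"])  # [True, False, True, False]
```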

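- Calling Oracle Stored Procedures and Functions from Java

aijuans

java, oracle

1. Create the table: STOCK_PRICES

2. Insert test data:

3. Create a cursor for returning results:

PKG_PUB_UTILS

4. Create the stored procedure: P_GET_PRICE

5. Create the function:

6. Call the stored procedure from Java and return a result set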

JDBCoracle10G_INVO

- Velocity Toolbox

antlove

template, toolbox, velocity

velocity.VelocityUtil

package velocity;

import org.apache.velocity.Template;

import org.apache.velocity.app.Velocity;

import org.apache.velocity.app.VelocityEngine;

import org.apache.velocity.c

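- Java Regular Expression Matching Basics

百合不是茶

java, regular expression matching

Regular expressions: improve program performance, simplify code, improve readability, and simplify string operations

Uses of regular expressions:

String matching

String splitting

String searching

String replacement

Regular expression validation syntax

[a] // [] matches exactly one character from the brackets; [a] means a occurs only once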
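
The four uses listed above map onto functions in Python's `re` module; this sketch shows them side by side, with illustrative patterns and strings. Like Java's `[a]`, a character class such as `[bcr]` matches exactly one character from the set.

```python
import re

text = "cat bat rat"

# Matching: does the whole string fit the pattern?
matched = bool(re.fullmatch(r"[bcr]at", "bat"))
print(matched)   # True

# Splitting: break the string on runs of whitespace.
parts = re.split(r"\s+", text)
print(parts)     # ['cat', 'bat', 'rat']

# Searching: find every word that starts with b or r.
found = re.findall(r"\b[br]at\b", text)
print(found)     # ['bat', 'rat']

# Replacing: substitute every match.
replaced = re.sub(r"[bc]at", "dog", text)
print(replaced)  # dog dog rat
```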

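- Configuring Whether to Use EL Expressions

bijian1013

jsp, web.xml, EL, EasyTemplate

Today during development I ran into a subtle problem: the front end uses EasyTemplate templates to display data, but the data never displayed correctly. It turned out that EL was interpreting and executing the ${...} expressions in my EasyTemplate templates, so they could not display the backend data properly.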

网

- Mastering Oracle10 Programming SQL (1-3): PL/SQL Basics

bijian1013

oracle, database, plsql

-- A PL/SQL block containing only an execution section

--set serveroutput off

begin

dbms_output.put_line('Hello,everyone!');

end;

select * from emp;

-- A PL/SQL block containing both a declaration section and an execution section

declare

v_ename varchar2(5);

begin

select

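- [Nginx 3] Nginx as a Reverse Proxy Server

bit1129

nginx

A common use of Nginx is as a proxy server. A proxy server typically does the following:

Accepts client requests

Forwards the requests to the proxied server

Gets the responses from the proxied server

Returns the responses to the client

Example

This article configures Nginx as a simple proxy server

Static HTML and images are served directly by Nginx

For dynamic pages, such as JSP or Servlet, Nginx forwards the request to Res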

- Plugin execution not covered by lifecycle configuration: org.apache.maven.plugin

blackproof

maven error

Reposted from: http://stackoverflow.com/questions/6352208/how-to-solve-plugin-execution-not-covered-by-lifecycle-configuration-for-sprin

Maven error:

Plugin execution not covered by lifecycle configuration:

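- Deploying Docker Applications to Marathon

ronin47

docker, deploying applications

1 Deploying Docker applications to Marathon

1.1 Setting up a private Docker registry

1.1.1 Installing docker registry

docker pull docker-registry

docker run -t -p 5000:5000 docker-registry

Pull a Docker image and publish it to the private registry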

docker pull consol/tomcat-8.0

- java-57: Implement a Queue with Two Stacks && Implement a Stack with Two Queues

bylijinnan

java

import java.util.ArrayList;

import java.util.List;

import java.util.Stack;

/*

* Q 57 Implement a queue with two stacks

*/

public class QueueImplementByTwoStacks {

private Stack<Integer> stack1;

pr
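
The Java class above is cut off after its first field. As a sketch of the same idea in Python (lists used as stacks; the class and method names are hypothetical), one stack receives new elements and the other serves removals, refilled only when it is empty, so each element is moved at most once and both operations are amortized O(1).

```python
class QueueWithTwoStacks:
    def __init__(self):
        self._in = []   # stack that receives new elements
        self._out = []  # stack whose top is the oldest element

    def enqueue(self, item) -> None:
        self._in.append(item)

    def dequeue(self):
        if not self._out:
            # Popping everything from _in reverses it, putting the oldest on top of _out.
            while self._in:
                self._out.append(self._in.pop())
        if not self._out:
            raise IndexError("dequeue from empty queue")
        return self._out.pop()


q = QueueWithTwoStacks()
for x in (1, 2, 3):
    q.enqueue(x)
first = q.dequeue()
q.enqueue(4)
rest = [q.dequeue() for _ in range(3)]
print(first, rest)  # 1 [2, 3, 4]
```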

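- Nginx Configuration Performance Tuning

cfyme

nginx

Reposted from: http://blog.csdn.net/xifeijian/article/details/20956605

Most Nginx installation guides tell you the basics: install it with apt-get, change a few lines of configuration here and there, and you have a web server. In most cases, a standard nginx installation already works well for your site. However, if you really want to squeeze the most performance out of Nginx, you must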

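- [JAVA Graphics and Imaging] The Java Ecosystem Needs to Advance Graphics and Image Processing Steadily, Step by Step

comsci

java

Precise graphics and image processing requires a large number of mathematical tools; even a design that starts from the low-level hardware simulation layer cannot do without extensive math toolkits. I therefore believe that R&D on the graphics and image processing modules of the Java language ecosystem should begin by developing basic packages, such as real-time mathematical function constructors and parsers, rather than rushing to use third-party code tools to build a non-rigorous graphics and image processing application......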

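- Using MonkeyRunner

dai_lm

android, MonkeyRunner

To use MonkeyRunner you have to learn Python, sigh

First, here is a snippet copied from the official docs

It launches an application (presumably its default Activity), then presses the MENU key and takes a screenshot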

# Imports the monkeyrunner modules used by this program

from com.android.monkeyrunner import MonkeyRun

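- Hadoop: A Distributed Computing Solution for Processing Massive Numbers of Files

datamachine

mapreduce, hadoop, distributed computing

A Hadoop distributed processing solution from CSDN, archived here.

Original post: http://blog.csdn.net/calvinxiu/article/details/1506112.

Hadoop is a Java implementation of Google's MapReduce. MapReduce is a simplified distributed programming model that lets programs be automatically distributed across a very large cluster of commodity machines and executed in parallel. Just as ja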
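
The MapReduce model can be sketched in a single Python process: a map step emits (key, value) pairs, a shuffle groups them by key, and a reduce step aggregates each group. Word count is the customary example; this in-memory version only illustrates the data flow and has none of Hadoop's distribution or fault tolerance.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line: str):
    # Emit (word, 1) for every word in one input record.
    return [(word, 1) for word in line.lower().split()]


def shuffle(pairs):
    # Group all values by key, as the framework does between map and reduce.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    return key, sum(values)


lines = ["the quick fox", "the lazy dog", "The fox"]
mapped = chain.from_iterable(map_phase(line) for line in lines)
counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(counts)  # {'the': 3, 'quick': 1, 'fox': 2, 'lazy': 1, 'dog': 1}
```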

- Authenticating Login Against a Database

dcj3sjt126com

yii

Because Yii's default scaffold code authenticates against demo and admin username/password pairs hard-coded in UserIdentity.php: public function authenticate() { $users=array(

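- GitHub Webhooks [2]: Automatically Triggering Updates (PHP Version)

dcj3sjt126com

github, git, webhooks

Last time I covered how to view the response returned by the URL in the GitHub dashboard. This time it's time to post the hook code itself.

Tools/materials

git

github

Steps

In the webhooks section of the GitHub settings, enter our URL.

The hook update code is as follows: error_reportin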

- Common Expressions in Eos Development

蕃薯耀

Eos development, Eos getting started, common Eos development expressions

>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

蕃薯耀 August 18, 2014, 15:03:35, Monday

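- SpringSecurity 3.X: SpEL Expressions

hanqunfeng

SpringSecurity

The direct way to configure access control with the Spring Expression Language is to add the use-expressions attribute to the <http> configuration element:

<http auto-config="true" use-expressions="true">

This automatically adds a voter to the access decision voters: org.springframework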

- Redis vs Memcache

IXHONG

redis

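1. In Redis, not all data has to stay in memory at all times; this is the biggest difference from Memcached.

2. Redis supports not only simple k/v data but also data structures such as list, set, and hash.

3. Redis supports data backup, i.e. master-slave replication.

4. Redis supports persistence: it can save in-memory data to disk and load it again after a restart.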

Red

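- Python: Common Misconceptions When Using Decorators

kvhur

JavaScript, jquery, html5, css

As is well known, the decorator is a famous design pattern, often used in AOP (aspect-oriented programming) scenarios; classic uses include inserting logging, performance testing, transaction handling, Web permission checks, caching, and so on.

Original link: http://www.gbtags.com/gb/share/5563.htm

Python itself provides decorator syntax (@); a typical decorator implementation looks like this: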

@function_wrapper

de
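
The excerpt stops before its example, so the sketch below illustrates one widely known decorator pitfall rather than the article's own code: a plain wrapper hides the decorated function's metadata, such as `__name__` and the docstring. The decorator names are hypothetical; the standard fix is `functools.wraps`.

```python
import functools


def naive_decorator(func):
    def wrapper(*args, **kwargs):
        return func(*args, **kwargs)
    return wrapper  # func's metadata is lost: the result is named "wrapper"


def log_calls(func):
    @functools.wraps(func)  # copies __name__, __doc__, etc. onto the wrapper
    def wrapper(*args, **kwargs):
        print(f"calling {func.__name__}")
        return func(*args, **kwargs)
    return wrapper


@naive_decorator
def add(a, b):
    """Return a + b."""
    return a + b


@log_calls
def mul(a, b):
    """Return a * b."""
    return a * b


print(add.__name__, add.__doc__)  # wrapper None
print(mul.__name__, mul.__doc__)  # mul Return a * b.
print(mul(3, 4))                  # prints "calling mul", then 12
```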

- Architect Notes on MyBatis: UPDATE with CASE WHEN for Multiple Cases

nannan408

case when

1. Preface.

As the title says.

2. Code.

<update id="batchUpdate" parameterType="java.util.List">

<foreach collection="list" item="list" index=&

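- Algorithm Video Tutorial

栏目记者

Algorithm, algorithms

Course: Algorithm video tutorial

Baidu Netdisk download link: http://pan.baidu.com/s/1qWFjjQW Password: 2mji

Whether a program is well written depends on how good its algorithms are! Algorithms are a broad and deep subject.

1. Course contents:

Lesson 1: Basic concepts of algorithms + Sequential search

Lesson 2: Binary search

Lesson 3: Hash table

Lesson 4: Algor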

- Algorithms in C: Bubble Sort

qiufeihu

c, algorithm

Enter any 10 numbers and sort them in ascending order.

Code:

#include <stdio.h>

int main()

{

int i,j,t,a[11]; /* declare the variables and an array of a basic type */

for(i = 1;i < 11;i++){

scanf("%d",&a[i]); /* read 10 numbers from the keyboard */

}

for
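
The C program is cut off before its sorting loops. For reference, here is a sketch of bubble sort in Python (a different language from the original, sorting a list rather than reading input with `scanf`); it stops early when a pass makes no swaps.

```python
def bubble_sort(values: list[int]) -> list[int]:
    a = list(values)  # sort a copy; the input list is left unchanged
    n = len(a)
    for i in range(n - 1):
        swapped = False
        # After pass i, the largest i + 1 elements are in their final places.
        for j in range(n - 1 - i):
            if a[j] > a[j + 1]:
                a[j], a[j + 1] = a[j + 1], a[j]
                swapped = True
        if not swapped:
            break  # already sorted
    return a


print(bubble_sort([5, 2, 9, 1, 5, 6, 0, 3, 8, 7]))  # [0, 1, 2, 3, 5, 5, 6, 7, 8, 9]
```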

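- JSP Exception Handling

wyzuomumu

Web, jsp

1. In a page where an exception may occur, use a directive to forward the HTTP request to a separate page dedicated to handling exceptions:

<%@ page errorPage="errors.jsp"%>

2. In the exception-handling page, make the following declaration:

errors.jsp:

<%@ page isErrorPage="true"%>. With this setting in place, the page can directly access exc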
