Python网络爬虫实验二:模拟登陆和数据持久化

实验二:模拟登陆和数据持久化

实验目的

- 熟悉两种常见的登录模式:基于 Session 与 Cookie 的登录,基于 JWT 登录

- 掌握使用MySQL 数据库基本操作,持久化爬取数据

环境

- 安装Mysql和相应的 python 库:pymysql

- Selenium库,PyQuery库,Chrome 和对应的 ChromeDriver

基本要求

实现基于 JWT 登录模式,实现对 https://login3.scrape.center/login 数据的爬取,并把数据持久化到 MySQL,存储的表名为:spider_books,字段名称自定义,存储的字段信息包含:书名、作者、封面图像本地路径、评分、简介、标签、定价、出版社、出版时间、页数、ISBM

实验过程

分析过程

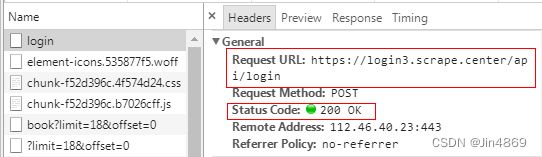

这里我们发现登录时其请求的 URL 为 https://login3.scrape.center/api/login ,是通过 Ajax 请求的,返回状态码为 200。

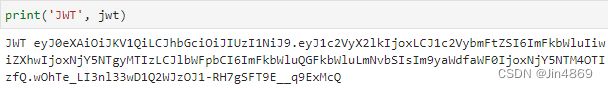

可以看到返回结果是一个 JSON 格式的数据,包含一个 token 字段,其结果为:

token:“eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJ1c2VyX2lkIjoxLCJ1c2VybmFtZSI6ImFkbWluIiwiZXhwIjoxNjY3MjQ3NTcyLCJlbWFpbCI6ImFkbWluQGFkbWluLmNvbSIsIm9yaWdfaWF0IjoxNjY3MjA0MzcyfQ.sk-QJ7U5qezyn9bzr_l7naDHfHaKmYbmuNhjsi5VGy4”

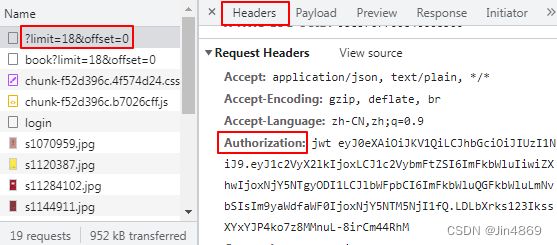

那么有了这个 JWT 之后,后续的数据怎么获取呢?下面我们再来观察下后续的请求内容,如图所示。

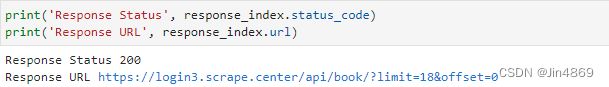

这里我们可以发现,后续获取数据的 Ajax 请求中的 Request Headers 里面就多了一个 Authorization 字段,其结果为 jwt 然后加上刚才的 JWT 的内容,返回结果就是 JSON 格式的数据。

模拟请求登录结果,带上必要的登录信息,获取 JWT 的结果。

后续的请求在 Request Headers 里面加上 Authorization 字段,值就是 JWT 对应的内容。

定义了登录接口和获取数据的接口,分别为 LOGIN_URL 和 INDEX_URL,接着通过 post 请求进行了模拟登录,这里提交的数据由于是 JSON 格式,所以这里使用 json 参数来传递。接着获取了返回结果中包含的 JWT 的结果。第二步就可以构造 Request Headers,然后设置 Authorization 字段并传入 JWT 即可,这样就能成功获取数据了

导入的包

import json

import urllib.request

import pymysql

import requests

from urllib.parse import urljoin # 连接url

固定参数

BASE_URL = 'https://login3.scrape.center/'

LOGIN_URL = urljoin(BASE_URL, '/api/login')

INDEX_URL = urljoin(BASE_URL, '/api/book')

USERNAME = 'admin'

PASSWORD = 'admin'

requests 常用方法之 post 请求

- 作用: 提交资源,新增资源

- 调用:

r = requests.post(url, json/data, headers)#r: 响应对象 - 获取响应对象:

- 响应状态码: 响应对象.status_code

- 响应信息: 响应对象.json()/响应对象.text

response_login = requests.post(LOGIN_URL, json={

'username': USERNAME,

'password': PASSWORD

})

data = response_login.json()

# print('Response JSON', data)

jwt = data.get('token')

# print('JWT', jwt)

jwt 登录的基本框架

headers = {

'Authorization': f'jwt {jwt}'

}

response_index = requests.get(INDEX_URL, params={

'limit': 18,

'offset': 0

}, headers=headers)

# print('Response Status', response_index.status_code)

# print('Response URL', response_index.url)

# print('Response Data', response_index.json())

获得书本在主页的信息

response_index.json() 中书籍的信息存在’results’下

books = response_index.json()['results']

保存书籍图片和保存路径

- urlopen()方法: 利用 Request 类来构造请求

- 使用方法:

urllib.request.Request(url, data=None, headers={}) - 基本用法: (例)

request = urllib.request.Request('https://python.org') response = urllib.request.urlopen(request) print(response.read().decode('urf-8'))

# save image

def save_image(url, name):

if url is not None:

req_image = urllib.request.Request(url)

image = urllib.request.urlopen(req_image).read()

'''保存路径:image/name.jpg'''

f_image = open("image\\" + name + ".jpg", "wb")

f_image.write(image)

f_image.close()

path = "image\\" + name + ".jpg"

else:

path = "null"

return path

连接 mysql

- port: 数据库端口,默认 3306

- 游标:

- 连接完数据库接着就该获取游标,之后才能进行执行、提交等操作

- 游标(cursor)是系统为用户开设的一个数据缓冲区,存放 SQL 语句的执行结果

- 语法:

db.cursor() - 默认返回的数据类型为元组

db = pymysql.connect(host='localhost', user='root', password='****', port=3306, db='spiders')

cursor = db.cursor()

将每一本书的数据存入数据库

爬取一本书的信息

-

tag

tag = "" tag_list = html['tags'] if tag_list is not None: for j in range(len(tag_list)): tag += tag_list[j] + " " else: tag = "null"`

for i in range(len(books)):

book_id = books[i]['id']

book_name = books[i]['name'] if books[i]['name'] is not None else "null" + str(i + 1)

author = ""

author_list = books[i]['authors']

if author_list is not None:

for j in range(len(author_list)):

# .strip()方法删除多余空格

author += author_list[j].strip() + "/"

else:

author = "null"

image_url = books[i]['cover']

image_path = save_image(image_url, book_name)

score = books[i]['score']

# 书本详细信息的路径

req = requests.get("https://login3.scrape.center/api/book/" + book_id + "/", headers=headers)

html = req.json()

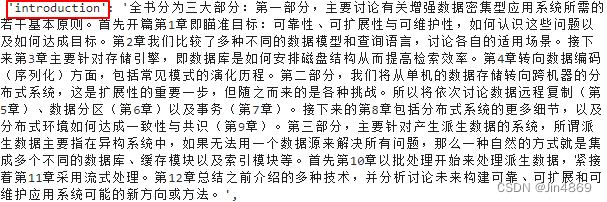

introduce = html['introduction']

tag = ""

tag_list = html['tags']

if tag_list is not None:

for j in range(len(tag_list)):

tag += tag_list[j] + " "

else:

tag = "null"

price = html['price']

publisher = html['publisher']

published_time = html['published_at']

page_num = html['page_number']

isbn_num = html['isbn']

# 插入数据的语句

sql = 'INSERT INTO spi_book(b_name,author,cover,score,intro,label,price,publish_house,publish_date,page,ISBM) ' \

'values (%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)'

# print(book_name, author, image_path, score, introduce, tag, price)

# print(publisher, published_time, page_num, isbn_num)

try:

# 执行sql语句

cursor.execute(sql, (

book_name, author, image_path, score, introduce, tag, price, publisher, published_time, page_num, isbn_num))

# 提交

db.commit()

print('Insert successfully')

except Exception as err:

# 事件回滚

db.rollback()

print(err)

print('Insert failure')

db.close()