Laser Stripe Centerline Extraction Algorithms: Summary and Reproduction

Preprocessing steps such as filtering and segmentation are omitted.

The input image is grayscale, and the laser stripe runs horizontally.

Contents

- Geometric Center Method

- Extremum Method

- Thinning Method

- Gray Centroid Method

- Normal Centroid Method

- Steger Algorithm

Geometric Center Method

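After detecting the stripe boundaries l and h on each cross-section (each column, since the stripe runs horizontally), take the midline between them, (l + h)/2, as the centerline of the laser stripe.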
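A minimal C++ sketch of the geometric center method. It assumes the image is stored as a plain vector of rows (a hypothetical `Image` alias standing in for `cv::Mat`, so the example needs no OpenCV). It also assumes the boundaries l and h are simply the first and last rows in each column whose gray value exceeds a hypothetical `threshold` parameter:

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for a grayscale cv::Mat: img[row][col].
using Image = std::vector<std::vector<unsigned char>>;

// Geometric center method: for each column (a cross-section of a horizontal
// stripe), find the first row l and the last row h whose gray value exceeds
// `threshold`, and return (l + h) / 2. Columns with no stripe pixel get -1.
std::vector<double> geometricCenter(const Image& img, unsigned char threshold) {
    assert(!img.empty());
    const int rows = static_cast<int>(img.size());
    const int cols = static_cast<int>(img[0].size());
    std::vector<double> centers(cols, -1.0);
    for (int c = 0; c < cols; ++c) {
        int l = -1, h = -1;
        for (int r = 0; r < rows; ++r) {
            if (img[r][c] > threshold) {
                if (l < 0) l = r;  // first stripe pixel: boundary l
                h = r;             // last stripe pixel seen so far: boundary h
            }
        }
        if (l >= 0) centers[c] = (l + h) / 2.0;
    }
    return centers;
}
```

Because l and h are integer row indices, the result has at most half-pixel resolution. It also ignores how intensity is distributed inside the stripe.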

Extremum Method

The extremum method takes the point with the maximum gray value on each cross-section of the laser stripe as the stripe center.
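For a horizontal stripe, the extremum method reduces to a per-column argmax. The sketch below makes the following assumptions. The image is a vector-of-rows `Image` type, a hypothetical stand-in for `cv::Mat`. A hypothetical `threshold` parameter rejects columns that contain no stripe.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for a grayscale cv::Mat: img[row][col].
using Image = std::vector<std::vector<unsigned char>>;

// Extremum method: in each column, the row with the maximum gray value is
// taken as the stripe center. Ties resolve to the first (smallest) row.
// Columns whose maximum does not exceed `threshold` are marked -1.
std::vector<int> extremumCenter(const Image& img, unsigned char threshold) {
    assert(!img.empty());
    const int rows = static_cast<int>(img.size());
    const int cols = static_cast<int>(img[0].size());
    std::vector<int> centers(cols, -1);
    for (int c = 0; c < cols; ++c) {
        int best = -1;
        int bestVal = threshold;  // a candidate must strictly exceed this
        for (int r = 0; r < rows; ++r) {
            if (img[r][c] > bestVal) {
                bestVal = img[r][c];
                best = r;
            }
        }
        centers[c] = best;
    }
    return centers;
}
```

The result is only pixel-accurate, and noise can easily throw it off. The same is true of a saturated stripe with a flat top, where many rows share the maximum value; this sketch simply keeps the first such row.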

Thinning Method

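Skeleton thinning repeatedly peels off the boundary pixels of a binary image until only the image skeleton remains. The connectivity of the object must be preserved throughout the peeling.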

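For a detailed explanation of the principle, see the article "Principle and OpenCV Implementation of the Zhang-Suen Image Skeleton Extraction Algorithm".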
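A compact, dependency-free sketch of Zhang-Suen thinning on a 0/1 image. It makes the following assumptions:

- The binary image is a `std::vector<std::vector<int>>` with 1 for stripe pixels.
- The object does not touch the image border, because border pixels are never examined.
- The function names `zhangSuenPass` and `zhangSuenThin` are hypothetical.

Each sub-iteration first marks every deletable pixel and only then deletes them, as the algorithm requires.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Hypothetical binary image type: 1 = foreground (stripe), 0 = background.
using Binary = std::vector<std::vector<int>>;

// One Zhang-Suen sub-iteration (step 0 or 1). Returns true if any pixel was
// removed. Border pixels are skipped (assumes a background margin).
static bool zhangSuenPass(Binary& img, int step) {
    const int rows = static_cast<int>(img.size());
    const int cols = static_cast<int>(img[0].size());
    std::vector<std::pair<int, int>> toDelete;
    for (int r = 1; r < rows - 1; ++r) {
        for (int c = 1; c < cols - 1; ++c) {
            if (img[r][c] != 1) continue;
            // Neighbors P2..P9, clockwise starting from the pixel above.
            const int p[8] = {img[r - 1][c],     img[r - 1][c + 1],
                              img[r][c + 1],     img[r + 1][c + 1],
                              img[r + 1][c],     img[r + 1][c - 1],
                              img[r][c - 1],     img[r - 1][c - 1]};
            int B = 0;  // number of foreground neighbors
            int A = 0;  // number of 0->1 transitions in P2, P3, ..., P9, P2
            for (int k = 0; k < 8; ++k) {
                B += p[k];
                if (p[k] == 0 && p[(k + 1) % 8] == 1) ++A;
            }
            // p[0] = P2, p[2] = P4, p[4] = P6, p[6] = P8.
            const bool m1 = (step == 0) ? p[0] * p[2] * p[4] == 0   // P2*P4*P6
                                        : p[0] * p[2] * p[6] == 0;  // P2*P4*P8
            const bool m2 = (step == 0) ? p[2] * p[4] * p[6] == 0   // P4*P6*P8
                                        : p[0] * p[4] * p[6] == 0;  // P2*P6*P8
            if (B >= 2 && B <= 6 && A == 1 && m1 && m2) toDelete.emplace_back(r, c);
        }
    }
    // Delete only after the whole scan so every decision sees the same image.
    for (const auto& rc : toDelete) img[rc.first][rc.second] = 0;
    return !toDelete.empty();
}

// Alternate both sub-iterations until no pixel changes.
void zhangSuenThin(Binary& img) {
    assert(!img.empty());
    bool changed = true;
    while (changed) {
        changed = zhangSuenPass(img, 0);
        changed = zhangSuenPass(img, 1) || changed;
    }
}
```

After thinning, the centerline of a horizontal stripe is the set of remaining foreground pixels. Like the extremum method, its precision is limited to whole pixels.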

Gray Centroid Method

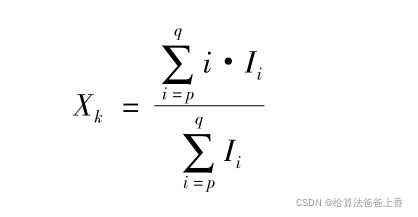

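The gray centroid method takes the intensity-weighted centroid of each column (or row) of the image as the position of the laser stripe center. For a horizontal stripe the cross-section is a column. If the nonzero interval of a column is [p, q], its gray centroid position is:

$$v = \frac{\sum_{i=p}^{q} i \, I_i}{\sum_{i=p}^{q} I_i}$$

where $I_i$ is the gray value of the $i$-th pixel and $v$ is the resulting (sub-pixel) center position.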

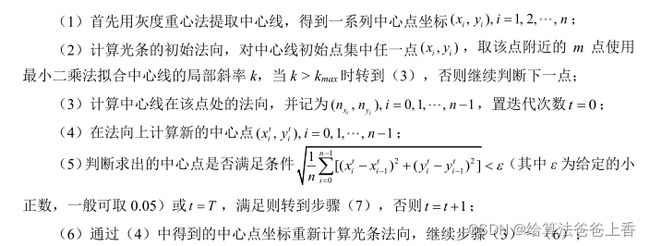
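A sketch of the per-column computation Σ i·I_i / Σ I_i over the nonzero interval [p, q]. It makes the following assumptions:

- Preprocessing has already set background pixels to 0.
- Each column contains a single stripe segment. With two segments, [p, q] would span both and the centroid would land between them.
- The `Image` alias is a hypothetical vector-of-rows stand-in for `cv::Mat`.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for a grayscale cv::Mat: img[row][col].
using Image = std::vector<std::vector<unsigned char>>;

// Gray centroid method: for each column, locate the nonzero interval [p, q]
// and return sum(i * I_i) / sum(I_i) over i = p..q, a sub-pixel row position.
// Columns without any nonzero pixel are marked -1.
std::vector<double> grayCentroid(const Image& img) {
    assert(!img.empty());
    const int rows = static_cast<int>(img.size());
    const int cols = static_cast<int>(img[0].size());
    std::vector<double> centers(cols, -1.0);
    for (int c = 0; c < cols; ++c) {
        int p = -1, q = -1;
        for (int r = 0; r < rows; ++r) {
            if (img[r][c] > 0) {
                if (p < 0) p = r;  // start of the nonzero interval
                q = r;             // end of the nonzero interval
            }
        }
        if (p < 0) continue;  // no stripe in this column
        double num = 0.0, den = 0.0;
        for (int i = p; i <= q; ++i) {
            num += static_cast<double>(i) * img[i][c];  // i * I_i
            den += img[i][c];                           // I_i
        }
        centers[c] = num / den;  // den > 0 because img[p][c] > 0
    }
    return centers;
}
```

Unlike the extremum and geometric center methods, every pixel in the interval contributes according to its intensity, so the result is not restricted to integer or half-integer rows.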

Normal Centroid Method

Steger Algorithm

Follow-up article: Centerline Extraction with GPU Acceleration