Deep Learning - Convolutional Neural Networks (Basics), Basic CNN - Personal Notes 8

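The previous lecture introduced fully connected neural networks.

"Fully connected" means the network is built entirely from linear layers: when every layer is a linear layer and the layers are chained in series, we call the result a fully connected network.

Next we introduce convolutional neural networks, which are designed for processing images. A convolutional layer preserves the spatial structure of the image.

A brief overview: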

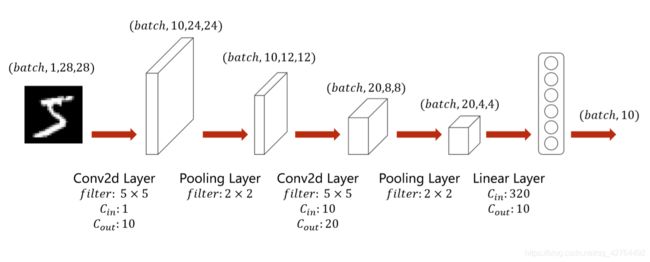

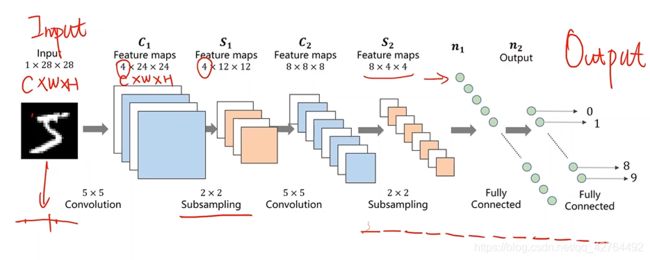

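The input image first goes through a convolution, then pooling (downsampling), then a second convolution and a second pooling step. The result is flattened with view into a one-dimensional tensor per sample and passed to a fully connected layer, which outputs a 10-dimensional tensor.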
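The spatial sizes along this pipeline can be traced by hand. The following is a minimal sketch, assuming the layer sizes used in the code later in these notes (28x28 MNIST input, 5x5 kernels, 2x2 max pooling, 20 output channels from the second convolution); the helper name `conv_out` is made up for illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial size after a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


size = 28                          # MNIST images are 28x28
size = conv_out(size, 5)           # first 5x5 convolution  -> 24
size = conv_out(size, 2, stride=2) # 2x2 max pooling         -> 12
size = conv_out(size, 5)           # second 5x5 convolution -> 8
size = conv_out(size, 2, stride=2) # 2x2 max pooling         -> 4

flat_features = 20 * size * size   # 20 channels of 4x4 after flattening
print(size, flat_features)         # 4 320
```

This is where the 320 input features of the final linear layer come from.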

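Each value in the convolution output is a weighted sum of all the pixels inside the window covered by the kernel, so the output carries information from every pixel in that window.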

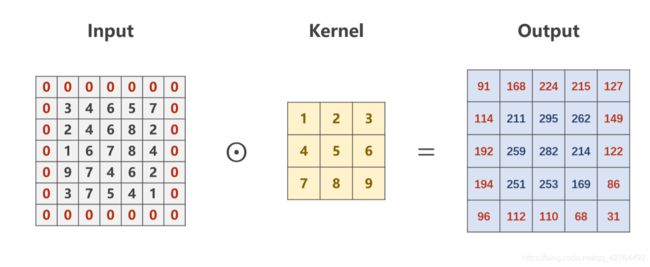

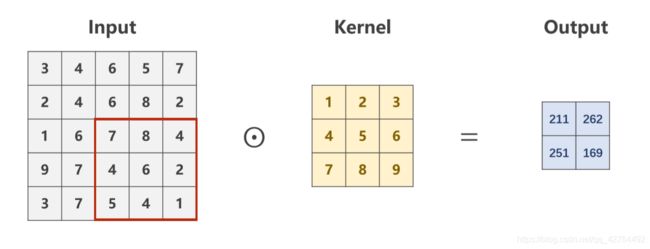

How convolution works with a single input channel

Convolution – Single Input Channel

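Input: a black-and-white (grayscale) image. It has one channel, so its size is 1x5x5.

Kernel: the convolution kernel is 3x3.

Output: 3x3.

The convolution proceeds as follows: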

……

……

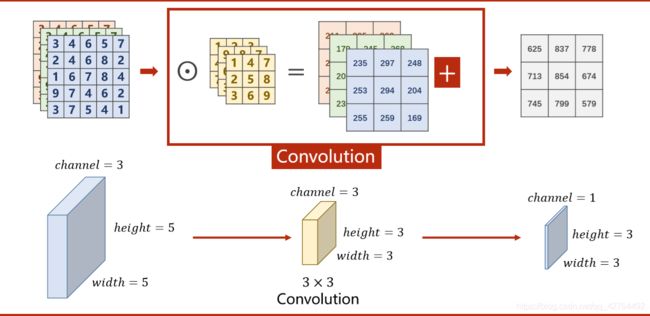
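The sliding-window computation for a single channel can be written out in plain Python. This is only a sketch of the idea, not how PyTorch implements it; the function name `conv2d_single`, the input values, and the kernel are all made up for illustration.

```python
def conv2d_single(image, kernel):
    """Convolve one channel with one kernel (no padding, stride 1)."""
    k = len(kernel)
    out_size = len(image) - k + 1
    output = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            # Multiply the k x k patch by the kernel element-wise, then sum.
            total = sum(image[i + a][j + b] * kernel[a][b]
                        for a in range(k) for b in range(k))
            row.append(total)
        output.append(row)
    return output


# Hypothetical 5x5 input holding the values 0..24, row by row.
image = [[r * 5 + c for c in range(5)] for r in range(5)]
# Hypothetical 3x3 kernel that adds up the diagonal of each patch.
kernel = [[1, 0, 0],
          [0, 1, 0],
          [0, 0, 1]]

result = conv2d_single(image, kernel)
for row in result:
    print(row)
```

A 5x5 input with a 3x3 kernel gives a 3x3 output because the kernel fits in 5 - 3 + 1 = 3 positions along each axis.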

Convolution – 3 Input Channel-1 Output Channel

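Input: an RGB image. It has three channels, so its size is 3x5x5.

Kernel: each kernel is 3x3, and there is one kernel per input channel, so three channels need three kernels.

Output: the convolution results of the three channels are added together to form a single 3x3 matrix.

The process is shown below; it turns three channels into one.

The red block in the figure below represents the convolution operation.

The input is 3x5x5; after convolution with a 3x3x3 kernel, the output is 1x3x3.

To summarize: if the input has n channels, the kernel must also have n channels.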

Convolution – n Input Channel – m Output Channel

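To produce m output channels we need m groups of kernels, each made up of n kernels (one per input channel). The layer's weight therefore has shape m x n x kernel_height x kernel_width.

Example: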

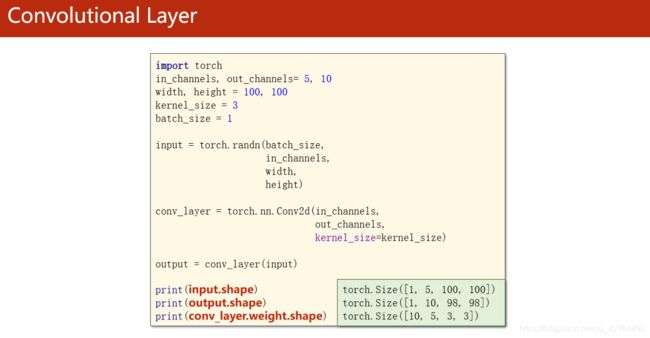
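The relationship between n input channels, m output channels, and the weight shape can be checked with `torch.nn.Conv2d`. The channel counts and the 100x100 input size below are illustrative values, not taken from these notes.

```python
import torch

in_channels, out_channels = 5, 10   # n and m (illustrative values)
kernel_size = 3
batch_size = 1

# PyTorch expects the layout (batch, channels, height, width).
x = torch.randn(batch_size, in_channels, 100, 100)
conv_layer = torch.nn.Conv2d(in_channels, out_channels, kernel_size=kernel_size)
y = conv_layer(x)

print(x.shape)                  # torch.Size([1, 5, 100, 100])
print(y.shape)                  # torch.Size([1, 10, 98, 98])
print(conv_layer.weight.shape)  # torch.Size([10, 5, 3, 3])
```

The weight has m = 10 groups, each holding n = 5 kernels of size 3x3.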

Convolutional Layer – padding=1
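With a 3x3 kernel, padding=1 adds a one-pixel border of zeros around the input so that the output keeps the input's height and width. A small sketch, assuming a hypothetical 5x5 single-channel input:

```python
import torch

# Hypothetical input: one sample, one channel, 5x5.
x = torch.arange(25, dtype=torch.float32).view(1, 1, 5, 5)

conv_no_pad = torch.nn.Conv2d(1, 1, kernel_size=3, bias=False)
conv_pad = torch.nn.Conv2d(1, 1, kernel_size=3, padding=1, bias=False)

y_no_pad = conv_no_pad(x)
y_pad = conv_pad(x)

print(y_no_pad.shape)  # torch.Size([1, 1, 3, 3])
print(y_pad.shape)     # torch.Size([1, 1, 5, 5])
```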

Convolutional Layer – stride=2

stride=2 means the kernel slides two pixels at a time.

Example:

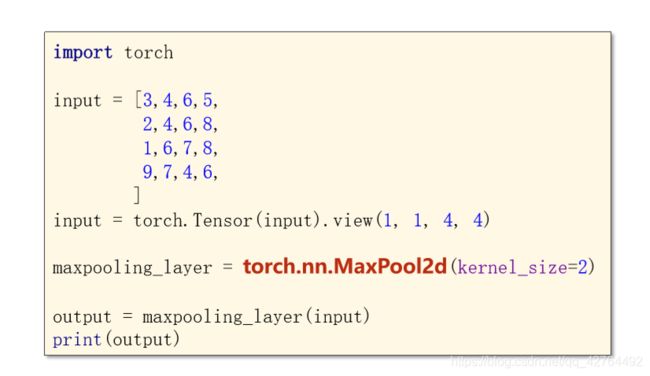
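The effect of the stride on the output size can be seen with a small sketch, again assuming a hypothetical 5x5 single-channel input. With a 3x3 kernel and stride 2 the kernel fits in (5 - 3) // 2 + 1 = 2 positions along each axis.

```python
import torch

# Hypothetical input: one sample, one channel, 5x5.
x = torch.arange(25, dtype=torch.float32).view(1, 1, 5, 5)

conv_stride1 = torch.nn.Conv2d(1, 1, kernel_size=3, stride=1, bias=False)
conv_stride2 = torch.nn.Conv2d(1, 1, kernel_size=3, stride=2, bias=False)

y1 = conv_stride1(x)
y2 = conv_stride2(x)

print(y1.shape)  # torch.Size([1, 1, 3, 3])
print(y2.shape)  # torch.Size([1, 1, 2, 2])
```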

Max Pooling Layer
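Max pooling keeps the largest value in each window and has no learnable weights. A minimal sketch with `torch.nn.MaxPool2d`, using made-up input values:

```python
import torch

# Hypothetical 4x4 single-channel input, shaped (batch, channel, H, W).
x = torch.tensor([[3., 4., 6., 5.],
                  [2., 4., 6., 8.],
                  [1., 6., 7., 8.],
                  [9., 7., 4., 6.]]).view(1, 1, 4, 4)

# kernel_size=2 uses 2x2 windows; the stride defaults to the kernel size,
# so the windows do not overlap and height and width are halved.
pool = torch.nn.MaxPool2d(kernel_size=2)
y = pool(x)

print(y.shape)  # torch.Size([1, 1, 2, 2])
print(y)        # values: [[4., 8.], [9., 8.]]
```

The number of channels is unchanged; only the spatial size shrinks.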

A Simple Convolutional Neural Network

How to use GPU

- Move Model to GPU

Define device as the first visible cuda device if we have CUDA available.

Convert parameters and buffers of all modules to CUDA Tensor.

- Move Tensors to GPU

For the training set

Send the inputs and targets at every step to the GPU.

For the test set

Send the inputs and targets at every step to the GPU.
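The two steps above can be sketched in isolation. The small linear model and the random tensors here are hypothetical stand-ins, used only to show the device handling; the code falls back to the CPU when CUDA is not available.

```python
import torch

# Use the first visible CUDA device if available, otherwise the CPU.
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

model = torch.nn.Linear(4, 2)   # hypothetical stand-in model
model.to(device)                # moves parameters and buffers in place

inputs = torch.randn(8, 4)
target = torch.randint(0, 2, (8,))
# Tensor.to returns a new tensor, so the result must be assigned back.
inputs, target = inputs.to(device), target.to(device)

outputs = model(inputs)
print(outputs.device)
```

The model and every batch must be on the same device, otherwise the forward pass raises an error.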

Code


import torch
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F
import torch.optim as optim

batch_size = 64

transform = transforms.Compose([
    transforms.ToTensor(),  # convert an image with pixel values 0-255 to a float tensor scaled to [0, 1]
    transforms.Normalize((0.1307, ), (0.3081, ))  # standardize: 0.1307 is the mean, 0.3081 the standard deviation
])

# Raw strings keep the backslashes in the Windows path from being read as escapes.
train_dataset = datasets.MNIST(root=r'F:\实验室\刘二老师\dataset\mnist',
                               train=True,
                               download=True,
                               transform=transform)
train_loader = DataLoader(train_dataset,
                          shuffle=True,
                          batch_size=batch_size)

test_dataset = datasets.MNIST(root=r'F:\实验室\刘二老师\dataset\mnist',
                              train=False,
                              download=True,
                              transform=transform)
test_loader = DataLoader(test_dataset,
                         shuffle=False,
                         batch_size=batch_size)

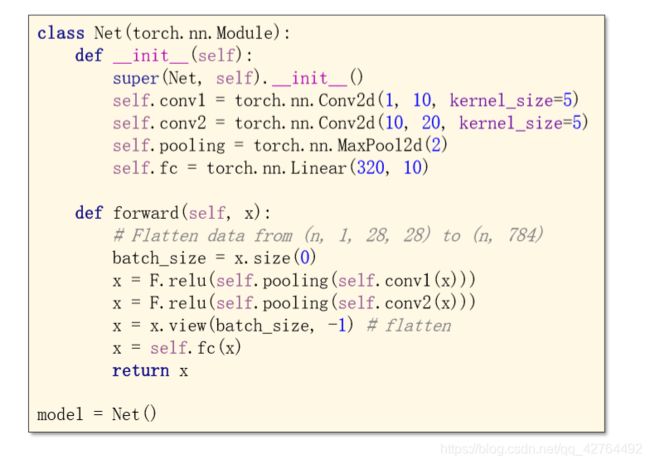

class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)
        self.pooling = torch.nn.MaxPool2d(2)
        # 320 = 20 channels x 4 x 4; work it out by hand or print the shape to check
        self.fc = torch.nn.Linear(320, 10)

    def forward(self, x):
        # x: (n, 1, 28, 28) -> conv + pool twice -> (n, 20, 4, 4) -> flatten to (n, 320)
        batch_size = x.size(0)
        x = F.relu(self.pooling(self.conv1(x)))
        x = F.relu(self.pooling(self.conv2(x)))
        x = x.view(batch_size, -1)
        x = self.fc(x)
        # print(x.shape)
        return x

model = Net()
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
model.to(device)

criterion = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

def train(epoch):
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        inputs, target = inputs.to(device), target.to(device)
        optimizer.zero_grad()

        # forward + backward + update
        outputs = model(inputs)
        loss = criterion(outputs, target)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        if batch_idx % 300 == 299:
            print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0

def test():
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            images, labels = images.to(device), labels.to(device)
            outputs = model(images)
            _, predicted = torch.max(outputs.data, dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print('Accuracy on test set: %d %% [%d/%d]' % (100 * correct / total, correct, total))

if __name__ == '__main__':
    for epoch in range(10):
        train(epoch)
        # if epoch % 10 == 9:
        test()