Sklearn_EarlyStop

S k l e a r n E a r l y S t o p SklearnEarlyStop SklearnEarlyStop

# 运行 xgboost安装包中的示例程序

import xgboost as xgb

from xgboost import XGBClassifier

# 加载LibSVM格式数据模块

from sklearn.datasets import load_svmlight_file

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

from matplotlib import pyplot

# read in data,数据在xgboost安装的路径下的demo目录,现在copy到代码目录下的data目录

my_workpath = './data/'

X_train,y_train = load_svmlight_file(my_workpath + 'agaricus.txt.train')

X_test,y_test = load_svmlight_file(my_workpath + 'agaricus.txt.test')

训练集-校验集分离

假设我们取1/3的训练数据做为校验数据

ps: 为什么要校验?

# split data into train and test sets, 1/3的训练数据作为校验数据

seed = 7

test_size = 0.33

X_train_part, X_validate, y_train_part, y_validate= train_test_split(X_train, y_train, test_size=test_size,

random_state=seed)

X_train_part.shape

X_validate.shape

训练参数设置

# specify parameters via map

param = {'max_depth':2, 'eta':1, 'silent':0, 'objective':'binary:logistic' }

print(param)

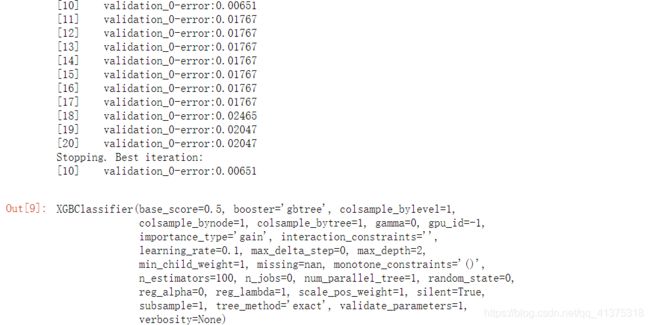

训练模型

# 设置boosting迭代计算次数

num_round = 100

#bst = XGBClassifier(param)

#bst = XGBClassifier()

bst =XGBClassifier(max_depth=2, learning_rate=0.1, n_estimators=num_round, silent=True, objective='binary:logistic')

#eval_set = [(X_train_part, y_train_part), (X_validation, y_validation)]

#bst.fit(X_train_part, y_train_part, eval_metric=["error", "logloss"], eval_set=eval_set, verbose=False)

eval_set =[(X_validate, y_validate)]

bst.fit(X_train_part, y_train_part, early_stopping_rounds=10, eval_metric="error",

eval_set=eval_set, verbose=True)

模型在每次校验集上的性能存在模型中,可用来进一步进行分析 model.evals result() 返回一个字典:评估数据集和分数

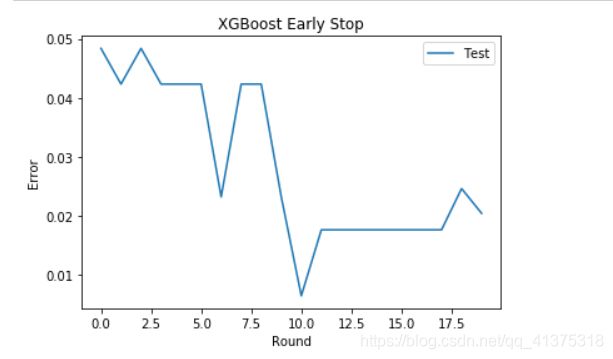

显示学习曲线

# retrieve performance metrics

results = bst.evals_result()

#print(results)

epochs = len(results['validation_0']['error'])

x_axis = range(0, epochs)

# plot log loss

fig, ax = pyplot.subplots()

ax.plot(x_axis, results['validation_0']['error'], label='Test')

ax.legend()

pyplot.ylabel('Error')

pyplot.xlabel('Round')

pyplot.title('XGBoost Early Stop')

pyplot.show()

测试

模型训练好后,可以用训练好的模型对测试数据进行预测

XGBoost预测的输出是概率,输出值是样本为第一类的概率。我们需要将概率值转换为0或1。

# make prediction

preds = bst.predict(X_test)

predictions = [round(value) for value in preds]

test_accuracy = accuracy_score(y_test, predictions)

print("Test Accuracy: %.2f%%" % (test_accuracy * 100.0))

![]()