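Using the YOLOAir Library (Part 5)

YOLOAir: a beginner-friendly object detection library; a faster, more convenient, and more complete YOLO library

Diverse models: different detection models are built from different network modules.

Componentized modules: users can quickly customize and combine the Backbone, Neck, and Head. This diversifies the available network models and supports research on improving detection algorithms and models by recombining modules into stronger networks.

Unified model code framework, unified usage, unified hyperparameter tuning, unified improvements, and easy module combination for building stronger models. Built-in architectures include YOLOv5, YOLOv7, YOLOX, YOLOR, Transformer, Scaled_YOLOv4, YOLOv3, YOLOv4, YOLO-Facev2, TPH-YOLO, YOLOv5Lite, SPD-YOLO, SlimNeck-YOLO, PicoDet, and others.

Built on the YOLOv5 codebase and kept in sync with the stable YOLOv5 v6.1 release and its deployment ecosystem. Before using this project, it helps to get familiar with the YOLOv5 repository.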

YOLOv5 repository: https://github.com/ultralytics/yolov5

YOLOAir project: https://github.com/iscyy/yoloair

YOLOAir improvement tutorials: https://github.com/iscyy/yoloair/wiki/Improved-tutorial-presentation

YOLOAir CSDN blog: https://blog.csdn.net/qq_38668236

YOLOAir usage guide

1. Download the source code

```bash
git clone https://github.com/iscyy/yoloair.git
```

Alternatively, open the GitHub page, click Code, and choose Download ZIP.

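2. Set up the environment

First install Anaconda. In this article, YOLOAir is installed in a conda virtual environment.

2.1 Create a Python 3.8 conda environment named yoloair

```bash
conda create -n yoloair python=3.8
conda activate yoloair  # switch into the new environment before installing packages
```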

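2.2 Install PyTorch and torchvision

There are two ways to install PyTorch: use the install command from the [official website](https://pytorch.org/), or download the whl packages and install them locally.

PyTorch whl downloads: https://download.pytorch.org/whl/torch/

torchvision whl downloads: https://download.pytorch.org/whl/torchvision/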

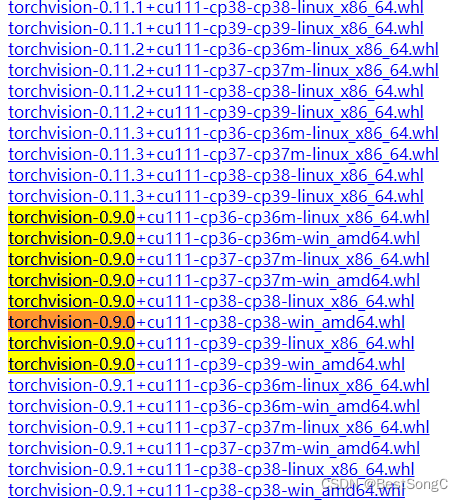

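This article uses PyTorch 1.8.0 with the matching torchvision 0.9.0.

Note: in the wheel filename, cp38 is the Python version (CPython 3.8), cu111 is the CUDA version (CUDA 11.1), linux marks a Linux build, and win marks a Windows build.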

Once the whl files are downloaded, change into the download directory and install each one with pip install <filename>:

```bash
pip install torch-1.8.0+cu111-cp38-cp38-win_amd64.whl
pip install torchvision-0.9.0+cu111-cp38-cp38-win_amd64.whl
```
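To make the tags in these filenames concrete, the sketch below splits a wheel name into its fields following the PEP 427 layout `{name}-{version}(-{build})?-{python tag}-{abi tag}-{platform tag}.whl`. `describe_wheel` is a hypothetical helper written for this illustration, not part of pip or PyTorch, and it assumes PyTorch's convention of a `+cuXXX` local version label on CUDA builds.

```python
import re

# Wheel filename layout (PEP 427):
# {distribution}-{version}(-{build tag})?-{python tag}-{abi tag}-{platform tag}.whl
WHEEL_RE = re.compile(
    r"^(?P<name>[^-]+)-(?P<version>[^-]+)(?:-(?P<build>\d[^-]*))?"
    r"-(?P<python>[^-]+)-(?P<abi>[^-]+)-(?P<platform>[^-]+)\.whl$"
)


def describe_wheel(filename: str) -> dict:
    """Split a wheel filename into its tags (hypothetical helper)."""
    match = WHEEL_RE.match(filename)
    if match is None:
        raise ValueError(f"not a wheel filename: {filename}")
    info = match.groupdict()
    # PyTorch marks CUDA builds with a local version label such as "+cu111"
    version, _, local = info["version"].partition("+")
    info["version"] = version
    info["cuda"] = local if local.startswith("cu") else None
    return info


print(describe_wheel("torch-1.8.0+cu111-cp38-cp38-win_amd64.whl"))
```

Pick the file whose python tag matches your interpreter, whose platform tag matches your OS, and whose CUDA tag your GPU driver supports.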

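2.3 Install the other dependencies

YOLOAir's dependencies are grouped as follows: Base contains the core project dependencies; Logging and Plotting are the logging and plotting packages used during training; Export is used for model conversion (e.g. .pt to ONNX or TensorRT); and thop is used to compute parameter counts and FLOPs.

From the downloaded YOLOAir project directory, install the dependencies with:

```bash
pip install -r requirements.txt  # install dependencies
```

If installation is too slow, you can download the packages from https://pypi.org/ and install them locally.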

2.4 Train out of the box

train.py exposes many configurable arguments; they will be explained in detail in later hands-on posts.

```bash
python train.py --data coco128.yaml --cfg configs/yolov5/yolov5s.yaml
```

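2.5 Model inference

detect.py runs inference on a variety of sources and saves the results to runs/detect:

```bash
python detect.py --source 0             # webcam
python detect.py --source img.jpg       # image
python detect.py --source vid.mp4       # video
python detect.py --source path/         # folder
python detect.py --source 'path/*.jpg'  # glob
```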
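The `--source` string alone tells detect.py what kind of input to open. The following is a simplified, hypothetical sketch of that dispatch for the five forms above; detect.py's real logic also handles URLs, streams, and many more file suffixes, and the suffix sets here are assumptions made for the example.

```python
from pathlib import Path

# Assumed suffix sets, for this sketch only
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}
VIDEO_SUFFIXES = {".mp4", ".avi", ".mov", ".mkv"}


def classify_source(source: str) -> str:
    """Rough, hypothetical classification of a --source value."""
    if source.isnumeric():
        return "webcam"  # a bare number is treated as a camera index
    if any(ch in source for ch in "*?["):
        return "glob"  # wildcard pattern over files
    suffix = Path(source).suffix.lower()
    if suffix in IMAGE_SUFFIXES:
        return "image"
    if suffix in VIDEO_SUFFIXES:
        return "video"
    if source.endswith(("/", "\\")) or Path(source).is_dir():
        return "folder"
    return "unknown"


for s in ["0", "img.jpg", "vid.mp4", "path/", "path/*.jpg"]:
    print(s, "->", classify_source(s))
```

The glob check runs before the suffix check so that a pattern such as `path/*.jpg` is not mistaken for a single image.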

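2.6 Ensemble fusion

If you run inference on a dataset with several different models, wbf.py can ensemble their results with weighted boxes fusion; just set the img path and the txt path in wbf.py.

```bash
python wbf.py
```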
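Weighted boxes fusion merges overlapping same-class boxes from several models into one box whose coordinates are the score-weighted average of its members, instead of keeping only the top box as NMS does. The sketch below is a minimal from-scratch illustration with equal model weights and an assumed IoU threshold of 0.55; it is not the code in YOLOAir's wbf.py, and the function names are hypothetical.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), normalized coordinates
Pred = Tuple[Box, float, int]  # (box, score, class label)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def weighted_boxes_fusion(preds_per_model: List[List[Pred]], iou_thr: float = 0.55) -> List[Pred]:
    n_models = len(preds_per_model)
    # Pool every model's predictions and process them from highest to lowest score
    pooled = sorted((p for preds in preds_per_model for p in preds),
                    key=lambda p: p[1], reverse=True)
    clusters: List[Dict] = []
    for box, score, label in pooled:
        # Find the same-class cluster whose fused box overlaps this box the most
        best, best_iou = None, iou_thr
        for cluster in clusters:
            if cluster["label"] != label:
                continue
            overlap = iou(cluster["fused"], box)
            if overlap > best_iou:
                best, best_iou = cluster, overlap
        if best is None:
            clusters.append({"label": label, "members": [(box, score)], "fused": box})
            continue
        best["members"].append((box, score))
        # Fused coordinates are the score-weighted mean of the member boxes
        total = sum(s for _, s in best["members"])
        best["fused"] = tuple(
            sum(b[k] * s for b, s in best["members"]) / total for k in range(4))
    fused = []
    for cluster in clusters:
        scores = [s for _, s in cluster["members"]]
        # Mean score, scaled down when fewer boxes than models support the cluster
        score = sum(scores) / len(scores) * min(len(scores), n_models) / n_models
        fused.append((cluster["fused"], score, cluster["label"]))
    return fused
```

For example, if one model predicts `(0.1, 0.1, 0.5, 0.5)` with score 0.9 and another predicts `(0.12, 0.1, 0.52, 0.5)` with score 0.6 for the same class, they fuse into one box starting at x1 = 0.108 with score 0.75, while a box that only one of the two models found keeps half its score.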

Using YOLOAir out of the box

1. Module selection

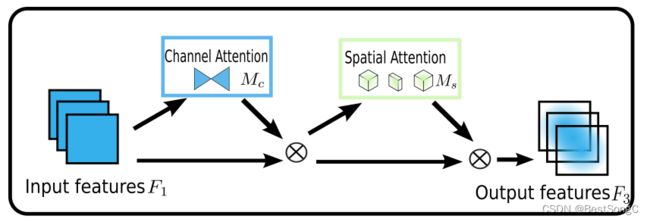

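This article uses the Global Attention Mechanism (GAM), whose overall structure is shown in the figure below:

- Channel attention submodule: a 3D permutation moves the channels to the last axis, so that a two-layer MLP (multilayer perceptron) can amplify cross-dimension channel-spatial dependencies while spatial information is retained. The MLP is an encoder-decoder structure with reduction ratio r, the same as in BAM. The channel attention submodule is shown in the figure below.

- Spatial attention submodule: to focus on spatial information, two convolutional layers fuse spatial information, using the same reduction ratio r as the channel attention submodule (and as BAM). Because max pooling discards information and has a negative effect, pooling is removed to further preserve the feature maps. As a result, the spatial attention module can significantly increase the number of parameters; to prevent this, group convolution with channel shuffle is used in ResNet50. The spatial attention submodule without group convolution is shown in the figure below.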
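The parameter cost of the spatial branch, and what grouping saves, can be checked by hand. The sketch below counts the learnable parameters of the layers used in the GAM_Attention code (two Linear layers for channel attention; two 7×7 convolutions, each followed by BatchNorm, for spatial attention), assuming PyTorch's defaults of bias=True for Conv2d and Linear and two learnable parameters per BatchNorm channel. The helper names are hypothetical.

```python
def conv2d_params(c_in: int, c_out: int, k: int, groups: int = 1, bias: bool = True) -> int:
    # Each output channel convolves only c_in / groups input channels
    return k * k * (c_in // groups) * c_out + (c_out if bias else 0)


def linear_params(c_in: int, c_out: int) -> int:
    return c_in * c_out + c_out  # weight + bias


def batchnorm_params(c: int) -> int:
    return 2 * c  # learnable weight and bias; running statistics are buffers, not parameters


def gam_params(c1: int, c2: int, rate: int = 4, group: bool = True):
    """(channel-branch, spatial-branch) parameter counts for a GAM_Attention layer."""
    g = rate if group else 1
    mid = c1 // rate
    channel = linear_params(c1, mid) + linear_params(mid, c1)
    spatial = (conv2d_params(c1, mid, 7, g) + batchnorm_params(mid)
               + conv2d_params(mid, c2, 7, g) + batchnorm_params(c2))
    return channel, spatial


for group in (False, True):
    print("group =", group, "->", gam_params(512, 512, group=group))
```

For c1 = c2 = 512 and rate = 4, grouping the two convolutions with groups=4 cuts the spatial branch from 6,424,448 to 1,607,552 parameters, roughly a quarter, while the channel MLP stays at 131,712.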

```python
import torch
from torch import nn

# Reference paper: https://arxiv.org/pdf/2112.05561v1.pdf


def channel_shuffle(x, groups=2):
    # ShuffleNet-style channel shuffle used by forward(); defined here so the snippet runs standalone
    b, c, h, w = x.shape
    x = x.view(b, groups, c // groups, h, w).transpose(1, 2).contiguous()
    return x.view(b, c, h, w)


class GAM_Attention(nn.Module):
    # https://paperswithcode.com/paper/global-attention-mechanism-retain-information
    def __init__(self, c1, c2, group=True, rate=4):
        super(GAM_Attention, self).__init__()

        # Channel attention: a two-layer MLP with reduction ratio `rate`
        self.channel_attention = nn.Sequential(
            nn.Linear(c1, int(c1 / rate)),
            nn.ReLU(inplace=True),
            nn.Linear(int(c1 / rate), c1)
        )

        # Spatial attention: two 7x7 convolutions (grouped when group=True), no pooling
        self.spatial_attention = nn.Sequential(
            nn.Conv2d(c1, c1 // rate, kernel_size=7, padding=3, groups=rate) if group
            else nn.Conv2d(c1, int(c1 / rate), kernel_size=7, padding=3),
            nn.BatchNorm2d(int(c1 / rate)),
            nn.ReLU(inplace=True),
            nn.Conv2d(c1 // rate, c2, kernel_size=7, padding=3, groups=rate) if group
            else nn.Conv2d(int(c1 / rate), c2, kernel_size=7, padding=3),
            nn.BatchNorm2d(c2)
        )

    def forward(self, x):
        b, c, h, w = x.shape
        # (B, C, H, W) -> (B, H*W, C) so that nn.Linear acts on the channel dimension
        x_permute = x.permute(0, 2, 3, 1).view(b, -1, c)
        x_att_permute = self.channel_attention(x_permute).view(b, h, w, c)
        x_channel_att = x_att_permute.permute(0, 3, 1, 2)
        # x_channel_att=channel_shuffle(x_channel_att,4) #last shuffle

        x = x * x_channel_att

        x_spatial_att = self.spatial_attention(x).sigmoid()
        x_spatial_att = channel_shuffle(x_spatial_att, 4)  # last shuffle
        out = x * x_spatial_att  # element-wise product, so c2 must equal c1
        # out=channel_shuffle(out,4) #last shuffle
        return out
```
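The `channel_shuffle(x_spatial_att, 4)` call in forward() applies a ShuffleNet-style shuffle: reshape the channels into (groups, channels/groups), swap the two axes, and flatten. The hypothetical helper below reproduces that index mapping in plain Python, listing which input channel ends up at each output position, to show that after the shuffle neighbouring channels come from different convolution groups.

```python
from typing import List


def shuffle_order(channels: int, groups: int) -> List[int]:
    """Input channel index found at each output position after a channel shuffle."""
    if channels % groups:
        raise ValueError("channels must be divisible by groups")
    per_group = channels // groups
    # view(groups, per_group) -> transpose -> flatten:
    # output position i * groups + g holds input channel g * per_group + i
    return [g * per_group + i for i in range(per_group) for g in range(groups)]


print(shuffle_order(8, 4))
```

With 8 channels and 4 groups the order is [0, 2, 4, 6, 1, 3, 5, 7], so each run of four consecutive output channels draws one channel from every group.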

2. Model configuration

```yaml
# parameters
nc: 10  # number of classes
depth_multiple: 0.33  # model depth multiple
width_multiple: 0.50  # layer channel multiple

# anchors
anchors:
  #- [5,6, 7,9, 12,10]  # P2/4
  - [10,13, 16,30, 33,23]  # P3/8
  - [30,61, 62,45, 59,119]  # P4/16
  - [116,90, 156,198, 373,326]  # P5/32

# YOLOv5 backbone
backbone:
  # [from, number, module, args]  # [c=channels, module, kernel_size, strides]
  [[-1, 1, Conv, [64, 6, 2, 2]],  # 0-P1/2 [c=3,64*0.5=32,3]
   [-1, 1, Conv, [128, 3, 2]],  # 1-P2/4
   [-1, 3, C3, [128]],
   [-1, 1, Conv, [256, 3, 2]],  # 3-P3/8
   [-1, 6, C3, [256]],
   [-1, 1, Conv, [512, 3, 2]],  # 5-P4/16
   [-1, 9, C3, [512]],
   [-1, 1, Conv, [1024, 3, 2]],  # 7-P5/32
   [-1, 3, C3, [1024]],
   [-1, 1, GAM_Attention, [512,512]],  # 9
   [-1, 1, SPPF, [1024,5]],  # 10
  ]

# YOLOv5 head
head:
  [[-1, 1, Conv, [512, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 6], 1, Concat, [1]],  # cat backbone P4
   [-1, 3, C3, [512, False]],  # 14
   [-1, 1, Conv, [256, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 4], 1, Concat, [1]],  # cat backbone P3
   [-1, 3, C3, [256, False]],  # 18 (P3/8-small)
   [-1, 1, GAM_Attention, [128,128]],  # 19
   [-1, 1, Conv, [256, 3, 2]],
   [[-1, 15], 1, Concat, [1]],  # cat head P4
   [-1, 3, C3, [512, False]],  # 22 (P4/16-medium) [256, 256, 1, False]
   [-1, 1, GAM_Attention, [256,256]],  # 23
   [-1, 1, Conv, [512, 3, 2]],  # [256, 256, 3, 2]
   [[-1, 11], 1, Concat, [1]],  # cat head P5
   [-1, 3, C3, [1024, False]],  # 26 (P5/32-large) [512, 512, 1, False]
   [-1, 1, GAM_Attention, [512,512]],  # 27
   [[19, 23, 27], 1, Detect, [nc, anchors]],  # Detect(P3, P4, P5)
  ]
```

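- nc: number of classes to detect

- depth_multiple: scales the number of blocks (repeats) in each layer

- width_multiple: scales the number of channels (feature-map depth) in each layer

- Parameter count: YOLOv5s_gam summary: 314 layers, 11069767 parameters, 11069767 gradients, 21.6 GFLOPs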
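depth_multiple and width_multiple are applied when the model is parsed. Assuming YOLOAir keeps YOLOv5 v6.1's rules in parse_model() (repeat counts n > 1 become max(round(n * depth_multiple), 1); channel counts become make_divisible(c * width_multiple, 8), i.e. rounded up to a multiple of 8), the sketch below reproduces them in plain Python. `scale_repeats` and `scale_channels` are hypothetical names.

```python
import math


def make_divisible(x: float, divisor: int = 8) -> int:
    # Round up to the nearest multiple of divisor
    return math.ceil(x / divisor) * divisor


def scale_repeats(n: int, depth_multiple: float) -> int:
    # Only blocks repeated more than once are scaled, and never below one repeat
    return max(round(n * depth_multiple), 1) if n > 1 else n


def scale_channels(c: int, width_multiple: float) -> int:
    return make_divisible(c * width_multiple, 8)


print([scale_repeats(n, 0.33) for n in (3, 6, 9)])
print([scale_channels(c, 0.50) for c in (64, 128, 256, 512, 1024)])
```

With depth_multiple 0.33 the backbone's C3 repeats 3, 6, 9 become 1, 2, 3, and with width_multiple 0.50 the 1024-channel C3 at layer 8 becomes 512 channels, which is the width the GAM_Attention entry at layer 9 receives.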

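3. Module configuration

First add the GAM_Attention class to common.py, then register it in parse_model() in yolo.py so that its arguments are set up. The branch below assumes parse_model() follows YOLOv5 v6.1 and appends c2 to ch after each layer:

```python
elif m in [GAM_Attention]:
    c1 = ch[f]  # input channels from the previous layer
    # GAM rescales x element-wise, so it outputs as many channels as it receives.
    # c2 is appended to ch at the end of the loop and becomes the next layer's c1,
    # so it must equal the module's real output width (not args[0] * width_multiple).
    c2 = c1
    args = [c1, c2, *args[2:]]  # extra YAML args, if any, become group and rate
```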

4.开箱训练

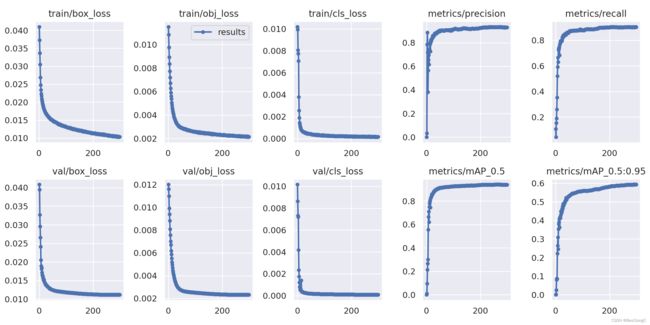

笔者对一个三类别目标检测任务进行训练,得到最终的结果图如下:

训练超参数:hyp.yaml

```yaml
loss_category: CIoU
mode: yolo
use_aux: false
weights: weights/yolov5s.pt
cfg: configs/yolov5/attention/yolov5s_gam.yaml
data: data/fire_smoke.yaml
hyp: data/hyps/hyp.VOC.yaml
epochs: 300
batch_size: 64
imgsz: 640
rect: false
resume: false
nosave: false
noval: false
noautoanchor: false
noplots: false
evolve: null
bucket: ''
cache: null
image_weights: false
device: 0,1
multi_scale: false
single_cls: false
optimizer: SGD
sync_bn: false
workers: 8
project: runs/train
name: 0808
exist_ok: false
quad: false
cos_lr: false
label_smoothing: 0.0
patience: 100
freeze:
- 0
save_period: -1
local_rank: -1
entity: null
upload_dataset: false
bbox_interval: -1
artifact_alias: latest
swin_float: false
save_dir: runs/train/08083
```
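The same run can be launched from the command line. The sketch below rebuilds a train.py command from a subset of the options above, assuming YOLOAir's train.py keeps YOLOv5's argparse convention that a flag such as `--batch-size` is stored under the key `batch_size`. Only the keys in the dict are covered, and `to_cli` is a hypothetical helper.

```python
import shlex

# A subset of the run options listed above
opts = {
    "weights": "weights/yolov5s.pt",
    "cfg": "configs/yolov5/attention/yolov5s_gam.yaml",
    "data": "data/fire_smoke.yaml",
    "hyp": "data/hyps/hyp.VOC.yaml",
    "epochs": 300,
    "batch_size": 64,
    "imgsz": 640,
    "device": "0,1",
    "project": "runs/train",
    "name": "0808",
}


def to_cli(options: dict) -> list:
    """Turn option keys into flags, e.g. batch_size -> --batch-size (hypothetical helper)."""
    cmd = ["python", "train.py"]
    for key, value in options.items():
        cmd += ["--" + key.replace("_", "-"), str(value)]
    return cmd


print(" ".join(shlex.quote(part) for part in to_cli(opts)))
```

Boolean switches such as `noautoanchor` are left out on purpose: in argparse they are flags without a value, so they would need separate handling.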

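- All experiments are initialized from yolov5s, loading the yolov5s pretrained weights

- batch_size=64, hyp=hyp.VOC.yaml, epochs=300, IoU loss=CIoU, imgsz=640

- Compared with the plain YOLOv5 model, the model with GAM_Attention trains more stably: training and validation loss drop quickly and smoothly. Compared with a YOLOv5 model with an SENet module, however, it fluctuates more early in training and has more parameters, so the author recommends removing GAM_Attention from the Neck and keeping it only at the final prediction layer.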

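Summary

Using or customizing a module with YOLOAir out of the box comes down to the following steps:

- Module selection

- Model configuration

- Module configuration

- Out-of-the-box training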

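Bonus

This article has described setting up the YOLOAir environment in detail. The author will regularly share other modules of the project and related techniques, and has also created an object detection discussion group: 781334731. Everyone is welcome to join and learn together!

The author also maintains a WeChat official account, Nuist计算机视觉与模式识别; please give it a follow, and reply "YOLOAir(五)" in the account to get a PDF of this article.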