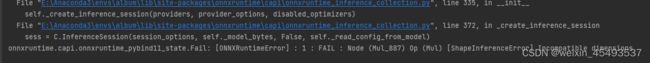

After exporting a YOLOv5 model to ONNX, validating the exported model raises an error

Reference source code:

https://github.com/ultralytics/yolov5/tree/v5.0

First, look at the specific error.

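The cause is in the `Detect` layer shown here: in v5.0 its inference branch uses in-place operations by default, and that is what triggers the problem.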

```python
class Detect(nn.Module):
    stride = None  # strides computed during build
    export = False  # onnx export

    def __init__(self, nc=80, anchors=(), ch=()):  # detection layer
        super(Detect, self).__init__()
        self.nc = nc  # number of classes
        self.no = nc + 5  # number of outputs per anchor
        self.nl = len(anchors)  # number of detection layers
        self.na = len(anchors[0]) // 2  # number of anchors
        self.grid = [torch.zeros(1)] * self.nl  # init grid
        a = torch.tensor(anchors).float().view(self.nl, -1, 2)
        self.register_buffer('anchors', a)  # shape(nl,na,2)
        self.register_buffer('anchor_grid', a.clone().view(self.nl, 1, -1, 1, 1, 2))  # shape(nl,1,na,1,1,2)
        self.m = nn.ModuleList(nn.Conv2d(x, self.no * self.na, 1) for x in ch)  # output conv

    def forward(self, x):
        # x = x.copy()  # for profiling
        z = []  # inference output
        self.training |= self.export
        for i in range(self.nl):
            x[i] = self.m[i](x[i])  # conv
            bs, _, ny, nx = x[i].shape  # x(bs,255,20,20) to x(bs,3,20,20,85)
            x[i] = x[i].view(bs, self.na, self.no, ny, nx).permute(0, 1, 3, 4, 2).contiguous()

            if not self.training:  # inference
                if self.grid[i].shape[2:4] != x[i].shape[2:4]:
                    self.grid[i] = self._make_grid(nx, ny).to(x[i].device)

                y = x[i].sigmoid()
                y[..., 0:2] = (y[..., 0:2] * 2. - 0.5 + self.grid[i]) * self.stride[i]  # xy
                y[..., 2:4] = (y[..., 2:4] * 2) ** 2 * self.anchor_grid[i]  # wh
                z.append(y.view(bs, -1, self.no))

        return x if self.training else (torch.cat(z, 1), x)
```
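To make the two decode lines in the inference branch concrete, here is a minimal pure-Python sketch of the same arithmetic for a single prediction. It is only an illustration: `decode_box` is a hypothetical helper, and the grid cell, stride and anchor size are made-up example values, not taken from the model above. It returns a new tuple and leaves its input untouched, which is the out-of-place style the fix relies on.

```python
import math


def sigmoid(v: float) -> float:
    """Plain logistic function, standing in for Tensor.sigmoid()."""
    return 1.0 / (1.0 + math.exp(-v))


def decode_box(raw_xywh, grid_xy, stride, anchor_wh):
    """Decode one raw (x, y, w, h) prediction into pixel units.

    Hypothetical helper for illustration only. The input list is
    not modified; a new tuple is returned instead.
    """
    sx, sy, sw, sh = (sigmoid(v) for v in raw_xywh)
    x = (sx * 2.0 - 0.5 + grid_xy[0]) * stride  # same formula as the xy line
    y = (sy * 2.0 - 0.5 + grid_xy[1]) * stride
    w = (sw * 2.0) ** 2 * anchor_wh[0]          # same formula as the wh line
    h = (sh * 2.0) ** 2 * anchor_wh[1]
    return (x, y, w, h)


# Assumed example values: raw logits of 0, grid cell (3, 5), stride 8, anchor 10x13.
raw = [0.0, 0.0, 0.0, 0.0]
box = decode_box(raw, grid_xy=(3, 5), stride=8, anchor_wh=(10.0, 13.0))
print(box)  # (28.0, 44.0, 10.0, 13.0)
```

With all raw values at 0 the sigmoid is 0.5, so the box centre sits in the middle of its grid cell and the box size equals the anchor size.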

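Change the `Detect` class as follows so that it builds new tensors instead of writing into slices of the existing one, and the error goes away. As far as I can tell, releases after v5.0 (not earlier ones) add an `inplace` argument to `Detect` that controls this behavior.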

```python
class Detect(nn.Module):
    stride = None  # strides computed during build
    # export = False  # onnx export

    def __init__(self, nc=80, anchors=(), ch=()):  # detection layer
        super(Detect, self).__init__()
        self.nc = nc  # number of classes
        self.no = nc + 5  # number of outputs per anchor
        self.nl = len(anchors)  # number of detection layers
        self.na = len(anchors[0]) // 2  # number of anchors
        self.grid = [torch.zeros(1)] * self.nl  # init grid
        a = torch.tensor(anchors).float().view(self.nl, -1, 2)
        self.register_buffer('anchors', a)  # shape(nl,na,2)
        self.register_buffer('anchor_grid', a.clone().view(self.nl, 1, -1, 1, 1, 2))  # shape(nl,1,na,1,1,2)
        self.m = nn.ModuleList(nn.Conv2d(x, self.no * self.na, 1) for x in ch)  # output conv

    # Note: _make_grid is not shown in this excerpt; forward() still calls it.

    def forward(self, x):
        # x = x.copy()  # for profiling
        z = []  # inference output
        # self.training |= self.export
        for i in range(self.nl):
            x[i] = self.m[i](x[i])  # conv
            # Change 1: cast the shape values to plain Python ints
            bs, _, ny, nx = map(int, x[i].shape)  # x(bs,255,20,20) to x(bs,3,20,20,85)
            # Change 2: use -1 for the batch dimension instead of bs
            x[i] = x[i].view(-1, self.na, self.no, ny, nx).permute(0, 1, 3, 4, 2).contiguous()

            if not self.training:  # inference
                self.grid[i] = self._make_grid(nx, ny).to(x[i].device)

                y = x[i].sigmoid()
                # Change 3: compute xy and wh as new tensors and concatenate,
                # instead of assigning into slices of y in place
                xy = (y[..., 0:2] * 2. - 0.5 + self.grid[i]) * self.stride[i]  # xy
                wh = (y[..., 2:4] * 2) ** 2 * self.anchor_grid[i].view(1, self.na, 1, 1, 2)  # wh
                y = torch.cat((xy, wh, y[..., 4:]), -1)
                # Change 4: flatten with an explicit na * ny * nx size; batch dimension is -1
                z.append(y.view(-1, int(y.size(1) * y.size(2) * y.size(3)), self.no))

        # Only the concatenated predictions are returned at inference time
        return x if self.training else torch.cat(z, 1)
```
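The last `view` call in the modified `forward` spells out the middle dimension as `na * ny * nx` for each detection layer, so the concatenated output has a fixed number of rows. As a rough sketch of that count, assuming a 640x640 input and strides of 8, 16 and 32 (neither is stated in this post, and `num_predictions` is a hypothetical helper):

```python
def num_predictions(img_size: int, strides, num_anchors: int) -> int:
    """Total rows after torch.cat(z, 1), one row per anchor per grid cell.

    Hypothetical helper for illustration; assumes a square input whose
    side is divisible by every stride.
    """
    total = 0
    for s in strides:
        ny = nx = img_size // s          # feature-map height and width at this stride
        total += num_anchors * ny * nx   # matches y.size(1) * y.size(2) * y.size(3)
    return total


# Assumed values: 640x640 input, strides (8, 16, 32), 3 anchors per layer.
rows = num_predictions(640, (8, 16, 32), 3)
print(rows)  # 25200
```

With `nc=80` each row has `no = 85` values, so under these assumptions the exported output would have shape `(batch, 25200, 85)`.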

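You can also refer to a video by an expert on Bilibili that explains how to export an ONNX model correctly. After watching it and following the steps, you will find that the exported model graph looks much cleaner.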

https://www.bilibili.com/video/BV1Xw411f7FW/?spm_id_from=333.337