Principles of the MobileNet V3 Network Structure and Its TensorFlow 2.0 Implementation

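Contents

- Introduction
- Innovations in MobileNet V3
  - 1. Adding the SE module
  - 2. Redesigning the tail of the network
  - 3. Changing the number of channels
  - 4. Changing the activation functions
    - In the SE module
    - In the BottleNeck module
- MobileNet V3 network structure
  - 1. MobileNet V3 Large
  - 2. MobileNet V3 Small
- Experimental results
  - ImageNet classification results
  - Accuracy with the SSDLite object detector
- Code implementation
- References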

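Introduction

MobileNet V3 is an improvement on MobileNet V2 and, like it, is a lightweight convolutional neural network. To suit settings with different resource budgets, MobileNet V3 comes in two versions: MobileNet V3 Large and MobileNet V3 Small.

Innovations in MobileNet V3

1. Adding the SE module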

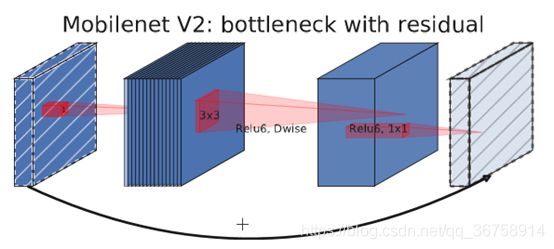

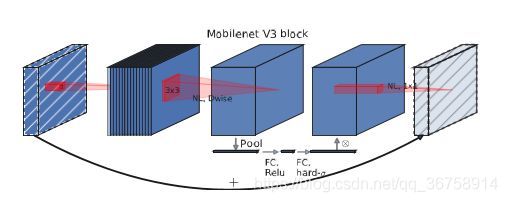

Following MnasNet, MobileNet V3 adds an SE (Squeeze-and-Excitation) module after the depthwise convolution in the MobileNet V2 BottleNeck block, as shown in the figure below:
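The following minimal NumPy sketch makes the three SE steps concrete: squeeze, excitation and scale. It runs on a single H x W x C feature map.

Several details are illustrative assumptions and do not come from the code later in this post:

- the function name `squeeze_excite`;
- the random weights;
- the 1/4 channel reduction.

The gate uses the h-sigmoid that MobileNet V3 substitutes for sigmoid (see section 4).

```python
import numpy as np

def hard_sigmoid(x):
    # h-sigmoid: ReLU6(x + 3) / 6, always in [0, 1]
    return np.clip(x + 3.0, 0.0, 6.0) / 6.0

def squeeze_excite(feature_map, w1, b1, w2, b2):
    """SE forward pass on one feature map of shape (H, W, C)."""
    # Squeeze: global average pooling gives one descriptor per channel, shape (C,)
    z = feature_map.mean(axis=(0, 1))
    # Excitation: FC down to C_r units + ReLU, then FC back to C units + h-sigmoid
    s = np.maximum(z @ w1 + b1, 0.0)
    gate = hard_sigmoid(s @ w2 + b2)  # shape (C,), one weight per channel
    # Scale: broadcast the per-channel gate over every spatial position
    return feature_map * gate, gate

rng = np.random.default_rng(0)
H, W, C = 14, 14, 72
C_r = C // 4  # illustrative 1/4 reduction (assumption)
x = rng.standard_normal((H, W, C))
w1 = rng.standard_normal((C, C_r)) * 0.1
b1 = np.zeros(C_r)
w2 = rng.standard_normal((C_r, C)) * 0.1
b2 = np.zeros(C)

y, gate = squeeze_excite(x, w1, b1, w2, b2)
print(y.shape, bool(gate.min() >= 0.0), bool(gate.max() <= 1.0))  # (14, 14, 72) True True
```

The gate is a single number per channel. SE therefore only adds two small fully connected layers on top of the depthwise output, which keeps it cheap enough for a mobile network.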

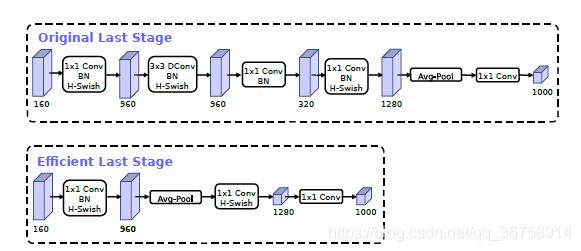

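2. Redesigning the tail of the network

In MobileNet V2, a 1x1 convolution is placed before GlobalAveragePooling to raise the dimension of the feature map, which inevitably adds a noticeable amount of computation. In MobileNet V3 the authors therefore move it after GlobalAveragePooling: pooling first shrinks the feature map from 7x7 to 1x1, and the 1x1 convolution then raises the dimension, which saves a large amount of computation. To cut the cost further, the authors also remove the 3x3 depthwise convolution and the 1x1 projection convolution of the preceding spindle-shaped (expand, depthwise, project) block. The result is the structure shown in the second row of the figure below.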
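Moving the 1x1 convolution behind the pooling layer saves a predictable amount. A 1x1 convolution costs H x W x C_in x C_out multiply-adds, so running it on a 1x1 map instead of a 7x7 map divides its cost by 49.

The sketch below plugs in the 960-to-1280 widths of the Large model's head, taken from the structure table and code in this post. The helper `conv1x1_madds` is hypothetical and ignores bias terms.

```python
def conv1x1_madds(h, w, c_in, c_out):
    """Multiply-adds of a 1x1 convolution on an h x w feature map (bias ignored)."""
    return h * w * c_in * c_out

# Widths from the MobileNet V3 Large head: 960 -> 1280 channels
before_pool = conv1x1_madds(7, 7, 960, 1280)  # conv applied on the 7x7 map
after_pool = conv1x1_madds(1, 1, 960, 1280)   # same conv applied after pooling to 1x1
print(before_pool, after_pool, before_pool // after_pool)  # 60211200 1228800 49
```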

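3. Changing the number of channels

The number of filters in the network's first convolution is reduced. MobileNet V2 uses 32 filters, while MobileNet V3, with the h-swish nonlinearity, reaches the same accuracy with only 16.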
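A quick count shows what halving the stem filters saves in the first layer alone. The hypothetical helper `conv_madds` counts the multiply-adds of a standard convolution.

The sketch makes three assumptions:

- the input is 224x224x3;
- the convolution is 3x3 with stride 2, giving a 112x112 output;
- only the stem is counted, not the knock-on saving in the next layer, whose input channels also shrink.

```python
def conv_madds(out_h, out_w, k, c_in, c_out):
    """Multiply-adds of a standard k x k convolution (bias ignored)."""
    return out_h * out_w * k * k * c_in * c_out

# 224x224x3 input, 3x3 convolution with stride 2 -> 112x112 output
v2_stem = conv_madds(112, 112, 3, 3, 32)  # MobileNet V2: 32 filters
v3_stem = conv_madds(112, 112, 3, 3, 16)  # MobileNet V3: 16 filters
print(v2_stem, v3_stem)  # 10838016 5419008
```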

4. Changing the activation functions

In the SE module

MobileNet V3 replaces the sigmoid activation in the original SE module with h-sigmoid, a piecewise-linear approximation of sigmoid:

$$f(x) = \frac{\mathrm{ReLU6}(x+3)}{6} = \max\left(0, \min\left(1, \frac{x+3}{6}\right)\right)$$
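A small pure-Python sketch can show how closely h-sigmoid tracks the real sigmoid. The sample points are arbitrary. h-sigmoid is exactly 0 for x ≤ -3, exactly 1 for x ≥ 3, and linear with slope 1/6 in between.

```python
import math

def relu6(x):
    return min(max(x, 0.0), 6.0)

def h_sigmoid(x):
    """Piecewise-linear approximation of sigmoid: ReLU6(x + 3) / 6."""
    return relu6(x + 3.0) / 6.0

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

for x in (-4.0, -3.0, -1.0, 0.0, 1.0, 3.0, 4.0):
    print(f"{x:5.1f}  sigmoid={sigmoid(x):.4f}  h_sigmoid={h_sigmoid(x):.4f}")
```

The approximation needs no exponential, only an add, a clip and a division by a constant.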

In the BottleNeck module

In the deeper layers, MobileNet V3 replaces the ReLU6 activation of the BottleNeck module with h-swish, while the early layers keep ReLU. h-swish is defined as:

$$f(x) = x\,\frac{\mathrm{ReLU6}(x+3)}{6}$$
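h-swish approximates swish, x * sigmoid(x). The pure-Python sketch below compares the two at a few sample points, which are arbitrary. h-swish is zero for x ≤ -3 and equals x for x ≥ 3.

```python
import math

def relu6(x):
    return min(max(x, 0.0), 6.0)

def h_swish(x):
    """Hard swish: x * ReLU6(x + 3) / 6."""
    return x * relu6(x + 3.0) / 6.0

def swish(x):
    """Swish with beta = 1: x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))

for x in (-4.0, -3.0, -1.0, 0.0, 1.0, 3.0, 4.0):
    print(f"{x:5.1f}  swish={swish(x):.4f}  h_swish={h_swish(x):.4f}")
```

Only ReLU6, an add and a multiply are needed, so h-swish avoids the exponential in sigmoid.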

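MobileNet V3 network structure

1. MobileNet V3 Large

2. MobileNet V3 Small

[Note] In the two figures above:

- exp size is the number of channels after expansion inside the BottleNeck module;
- #out is the number of output channels;
- SE indicates whether the BottleNeck module uses an SE module;
- NL is the activation function used (RE = ReLU, HS = h-swish);
- s is the stride.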
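The sketch below checks that the table entries fit together. It encodes the Large configuration as rows of (kernel, exp size, #out, SE, NL, s), copied from the `BottleNeck` calls in `MobileNetV3Large()` in the code section of this post.

It then traces the spatial size block by block and flags which blocks can take a residual shortcut, that is, stride 1 with unchanged channels. The names `LARGE_BNECK` and `walk` are hypothetical.

```python
# Each row: (kernel, exp_size, out_size, use_se, nonlinearity, stride)
LARGE_BNECK = [
    (3, 16, 16, False, "RE", 1),
    (3, 64, 24, False, "RE", 2),
    (3, 72, 24, False, "RE", 1),
    (5, 72, 40, True, "RE", 2),
    (5, 120, 40, True, "RE", 1),
    (5, 120, 40, True, "RE", 1),
    (3, 240, 80, False, "HS", 2),
    (3, 200, 80, False, "HS", 1),
    (3, 184, 80, False, "HS", 1),
    (3, 184, 80, False, "HS", 1),
    (3, 480, 112, True, "HS", 1),
    (3, 672, 112, True, "HS", 1),
    (5, 672, 160, True, "HS", 2),
    (5, 960, 160, True, "HS", 1),
    (5, 960, 160, True, "HS", 1),
]

def walk(config, resolution=224, stem_channels=16):
    """Trace spatial size and channel count; flag blocks that get a shortcut."""
    resolution //= 2  # the 3x3 stem convolution has stride 2
    channels = stem_channels
    residual = []
    for _kernel, _exp, out, _se, _nl, stride in config:
        # A shortcut needs identical input and output shapes
        residual.append(stride == 1 and channels == out)
        resolution //= stride
        channels = out
    return resolution, channels, residual

res, ch, residual = walk(LARGE_BNECK)
print(res, ch, sum(residual))  # 7 160 10
```

The final 7x7 resolution is what the 7x7 average pooling at the end of the network expects for a 224x224 input.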

实验结果

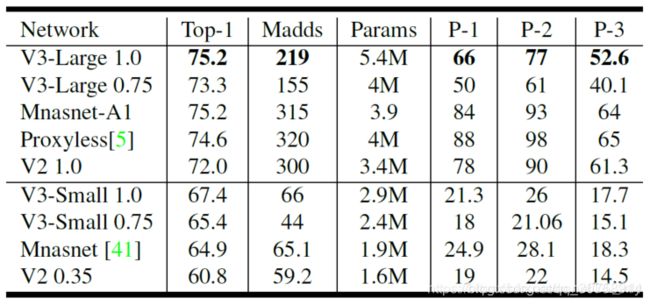

ImageNet 分类实验结果

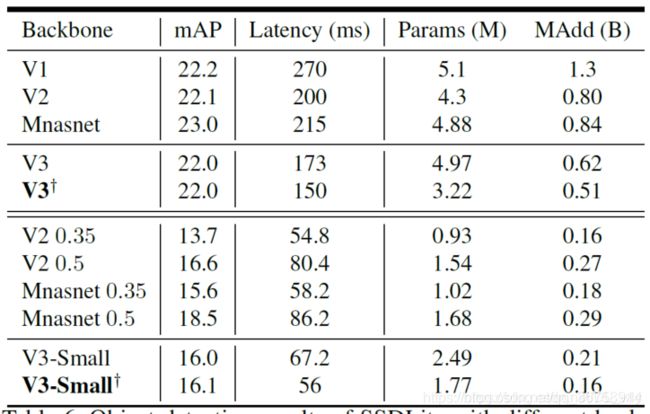

在 SSDLite 目标检测算法中精度

代码实现

```python
import numpy as np
import tensorflow as tf


def h_sigmoid(x):
    # h-sigmoid(x) = ReLU6(x + 3) / 6, as defined in the MobileNet V3 paper.
    # Written out explicitly because older Keras versions define the built-in
    # 'hard_sigmoid' as clip(0.2 * x + 0.5, 0, 1), which has a different slope.
    return tf.keras.layers.Lambda(lambda t: tf.nn.relu6(t + 3.0) / 6.0)(x)


def h_swish(x):
    # h-swish(x) = x * ReLU6(x + 3) / 6
    return tf.keras.layers.Multiply()([x, h_sigmoid(x)])


def Squeeze_excitation_layer(x):
    inputs = x
    excitation = inputs.shape[-1]
    # The paper fixes the SE bottleneck at 1/4 of the expanded channels;
    # integer division keeps the Dense unit count an int.
    squeeze = max(1, excitation // 4)

    x = tf.keras.layers.GlobalAveragePooling2D()(x)   # squeeze: (B, C)
    x = tf.keras.layers.Dense(squeeze)(x)
    x = tf.keras.layers.Activation('relu')(x)
    x = tf.keras.layers.Dense(excitation)(x)
    x = h_sigmoid(x)                                  # per-channel gates in [0, 1]
    x = tf.keras.layers.Reshape((1, 1, excitation))(x)
    # Scale: the (B, 1, 1, C) gates broadcast over the spatial dimensions
    x = tf.keras.layers.Multiply()([inputs, x])
    return x


def BottleNeck(inputs, exp_size, out_size, kernel_size, strides, is_se_existing, activation):
    def nonlinearity(t):
        # "HS" = h-swish, "RE" = ReLU (implemented as ReLU6)
        if activation == "HS":
            return h_swish(t)
        if activation == "RE":
            return tf.keras.layers.ReLU(max_value=6.0)(t)
        return t

    # 1x1 expansion convolution
    x = tf.keras.layers.Conv2D(filters=exp_size, kernel_size=(1, 1),
                               strides=1, padding="same")(inputs)
    x = tf.keras.layers.BatchNormalization()(x)
    x = nonlinearity(x)

    # Depthwise convolution; its stride controls downsampling
    x = tf.keras.layers.DepthwiseConv2D(kernel_size=kernel_size,
                                        strides=strides, padding="same")(x)
    x = tf.keras.layers.BatchNormalization()(x)
    x = nonlinearity(x)

    # Optional SE module after the depthwise convolution
    if is_se_existing:
        x = Squeeze_excitation_layer(x)

    # 1x1 linear projection (no activation: linear bottleneck)
    x = tf.keras.layers.Conv2D(filters=out_size, kernel_size=(1, 1),
                               strides=1, padding="same")(x)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.Activation(tf.keras.activations.linear)(x)

    # Residual shortcut only when input and output shapes match
    if strides == 1 and inputs.shape[-1] == out_size:
        x = tf.keras.layers.add([x, inputs])
    return x


def MobileNetV3Large(inputs, classes=1000):
    # Stem: 3x3 conv, 16 filters, stride 2
    x = tf.keras.layers.Conv2D(filters=16, kernel_size=(3, 3),
                               strides=2, padding="same")(inputs)
    x = tf.keras.layers.BatchNormalization()(x)
    x = h_swish(x)

    x = BottleNeck(x, exp_size=16, out_size=16, kernel_size=3, strides=1, is_se_existing=False, activation="RE")
    x = BottleNeck(x, exp_size=64, out_size=24, kernel_size=3, strides=2, is_se_existing=False, activation="RE")
    x = BottleNeck(x, exp_size=72, out_size=24, kernel_size=3, strides=1, is_se_existing=False, activation="RE")
    x = BottleNeck(x, exp_size=72, out_size=40, kernel_size=5, strides=2, is_se_existing=True, activation="RE")
    x = BottleNeck(x, exp_size=120, out_size=40, kernel_size=5, strides=1, is_se_existing=True, activation="RE")
    x = BottleNeck(x, exp_size=120, out_size=40, kernel_size=5, strides=1, is_se_existing=True, activation="RE")
    x = BottleNeck(x, exp_size=240, out_size=80, kernel_size=3, strides=2, is_se_existing=False, activation="HS")
    x = BottleNeck(x, exp_size=200, out_size=80, kernel_size=3, strides=1, is_se_existing=False, activation="HS")
    x = BottleNeck(x, exp_size=184, out_size=80, kernel_size=3, strides=1, is_se_existing=False, activation="HS")
    x = BottleNeck(x, exp_size=184, out_size=80, kernel_size=3, strides=1, is_se_existing=False, activation="HS")
    x = BottleNeck(x, exp_size=480, out_size=112, kernel_size=3, strides=1, is_se_existing=True, activation="HS")
    x = BottleNeck(x, exp_size=672, out_size=112, kernel_size=3, strides=1, is_se_existing=True, activation="HS")
    x = BottleNeck(x, exp_size=672, out_size=160, kernel_size=5, strides=2, is_se_existing=True, activation="HS")
    x = BottleNeck(x, exp_size=960, out_size=160, kernel_size=5, strides=1, is_se_existing=True, activation="HS")
    x = BottleNeck(x, exp_size=960, out_size=160, kernel_size=5, strides=1, is_se_existing=True, activation="HS")

    # Last stage: 1x1 conv to 960 channels on the 7x7 map
    x = tf.keras.layers.Conv2D(filters=960, kernel_size=(1, 1),
                               strides=1, padding="same")(x)
    x = tf.keras.layers.BatchNormalization()(x)
    x = h_swish(x)
    # 7x7 -> 1x1 (assumes a 224x224 input)
    x = tf.keras.layers.AveragePooling2D(pool_size=(7, 7), strides=1)(x)

    # The 1280-channel layer now runs on a 1x1 map (no BN, as in the paper's table)
    x = tf.keras.layers.Conv2D(filters=1280, kernel_size=(1, 1),
                               strides=1, padding="same")(x)
    x = h_swish(x)
    x = tf.keras.layers.Conv2D(filters=classes, kernel_size=(1, 1),
                               strides=1, padding="same",
                               activation=tf.keras.activations.softmax)(x)
    # (B, 1, 1, classes) -> (B, classes)
    x = tf.keras.layers.Flatten()(x)
    return x


def MobileNetV3Small(inputs, classes=1000):
    # Stem: 3x3 conv, 16 filters, stride 2
    x = tf.keras.layers.Conv2D(filters=16, kernel_size=(3, 3),
                               strides=2, padding="same")(inputs)
    x = tf.keras.layers.BatchNormalization()(x)
    x = h_swish(x)

    x = BottleNeck(x, exp_size=16, out_size=16, kernel_size=3, strides=2, is_se_existing=True, activation="RE")
    x = BottleNeck(x, exp_size=72, out_size=24, kernel_size=3, strides=2, is_se_existing=False, activation="RE")
    x = BottleNeck(x, exp_size=88, out_size=24, kernel_size=3, strides=1, is_se_existing=False, activation="RE")
    x = BottleNeck(x, exp_size=96, out_size=40, kernel_size=5, strides=2, is_se_existing=True, activation="HS")
    x = BottleNeck(x, exp_size=240, out_size=40, kernel_size=5, strides=1, is_se_existing=True, activation="HS")
    x = BottleNeck(x, exp_size=240, out_size=40, kernel_size=5, strides=1, is_se_existing=True, activation="HS")
    x = BottleNeck(x, exp_size=120, out_size=48, kernel_size=5, strides=1, is_se_existing=True, activation="HS")
    x = BottleNeck(x, exp_size=144, out_size=48, kernel_size=5, strides=1, is_se_existing=True, activation="HS")
    x = BottleNeck(x, exp_size=288, out_size=96, kernel_size=5, strides=2, is_se_existing=True, activation="HS")
    x = BottleNeck(x, exp_size=576, out_size=96, kernel_size=5, strides=1, is_se_existing=True, activation="HS")
    x = BottleNeck(x, exp_size=576, out_size=96, kernel_size=5, strides=1, is_se_existing=True, activation="HS")

    # Last stage: 1x1 conv to 576 channels on the 7x7 map
    x = tf.keras.layers.Conv2D(filters=576, kernel_size=(1, 1),
                               strides=1, padding="same")(x)
    x = tf.keras.layers.BatchNormalization()(x)
    x = h_swish(x)
    # 7x7 -> 1x1 (assumes a 224x224 input)
    x = tf.keras.layers.AveragePooling2D(pool_size=(7, 7), strides=1)(x)

    # The Small model uses 1024 channels here (1280 is the Large model's width)
    x = tf.keras.layers.Conv2D(filters=1024, kernel_size=(1, 1),
                               strides=1, padding="same")(x)
    x = h_swish(x)
    x = tf.keras.layers.Conv2D(filters=classes, kernel_size=(1, 1),
                               strides=1, padding="same",
                               activation=tf.keras.activations.softmax)(x)
    # (B, 1, 1, classes) -> (B, classes)
    x = tf.keras.layers.Flatten()(x)
    return x


# Build both models on a symbolic 224x224x3 input, then run a dummy batch
inputs = tf.keras.Input(shape=(224, 224, 3))
model_large = tf.keras.Model(inputs, MobileNetV3Large(inputs))
model_small = tf.keras.Model(inputs, MobileNetV3Small(inputs))

dummy = np.zeros((1, 224, 224, 3), np.float32)
output_large = model_large(dummy)   # shape (1, 1000)
output_small = model_small(dummy)   # shape (1, 1000)
```

References

1. Another new member of the MobileNet series: mobilenet-v3

2. [Paper study] Lightweight networks: MobileNetV3 is finally here (with open-source code)