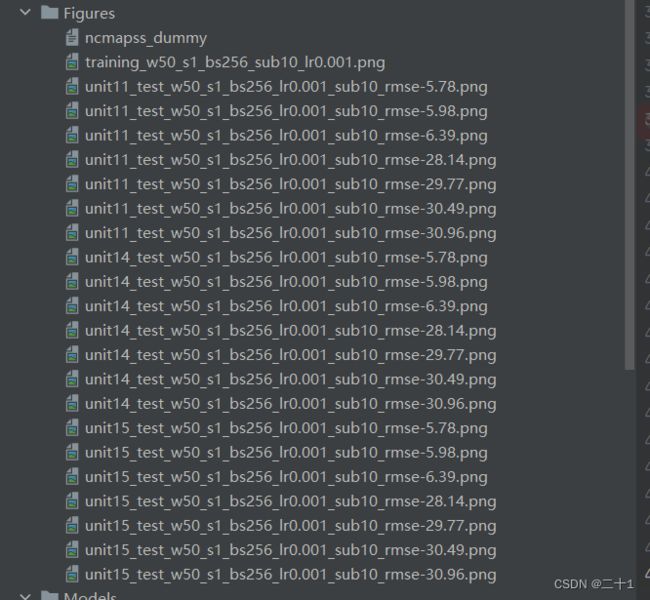

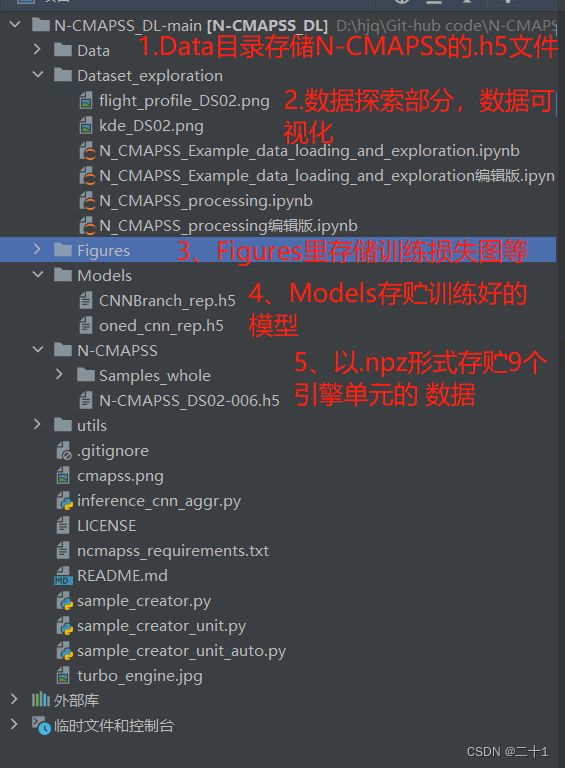

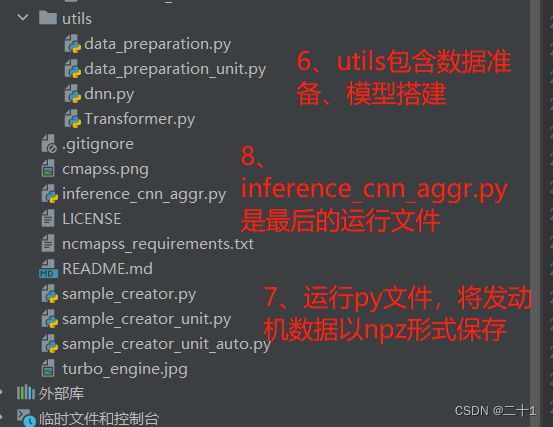

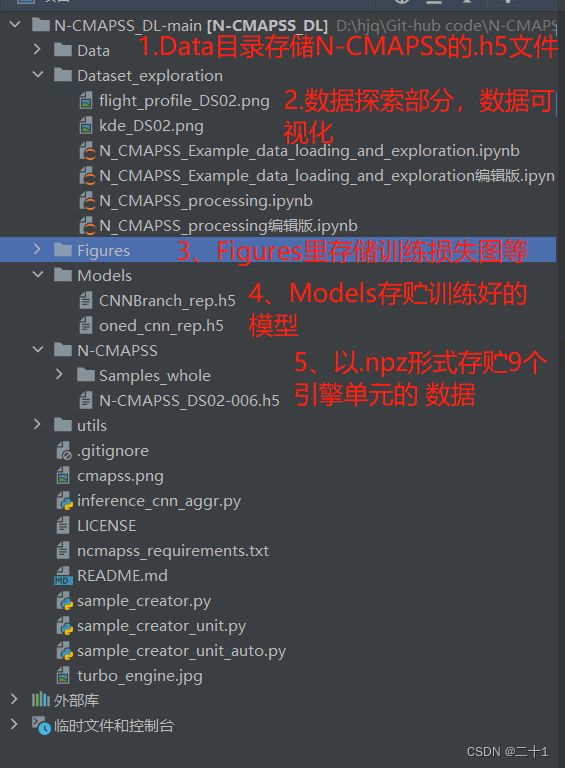

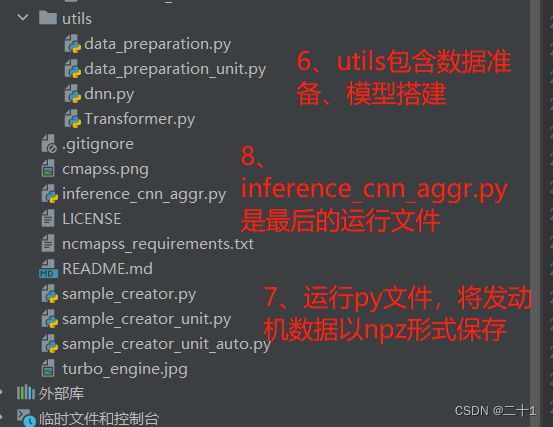

Code directory overview

Walkthrough of the key code

sample_creator_unit_auto.py

def main():

parser = argparse.ArgumentParser(description='sample creator')

parser.add_argument('-w', type=int, default=10, help='window length')

parser.add_argument('-s', type=int, default=10, help='stride of window')

parser.add_argument('--sampling', type=int, default=1, help='sub-sampling of the given data. If it is 10, we assume 0.1 Hz data collection')

parser.add_argument('--test', type=int, default=0, help='select train or test; if zero, extract samples from the engines used for training')

args = parser.parse_args()

sequence_length = args.w

stride = args.s

sampling = args.sampling

selector = args.test

'''

W: operative conditions (Scenario descriptors)

X_s: measured signals

X_v: virtual sensors

T(theta): engine health parameters

Y: RUL [in cycles]

A: auxiliary data

'''

df_all = df_all_creator(data_filepath, sampling)

'''

Split dataframe into Train and Test

Training units: 2, 5, 10, 16, 18, 20

Test units: 11, 14, 15

'''

units_index_train = [2.0, 5.0, 10.0, 16.0, 18.0, 20.0]

units_index_test = [11.0, 14.0, 15.0]

print("units_index_train", units_index_train)

print("units_index_test", units_index_test)

df_train = df_train_creator(df_all, units_index_train)

print(df_train)

print(df_train.columns)

print("num of inputs: ", len(df_train.columns) )

df_test = df_test_creator(df_all, units_index_test)

print(df_test)

print(df_test.columns)

print("num of inputs: ", len(df_test.columns))

del df_all

gc.collect()

df_all = pd.DataFrame()

sample_dir_path = os.path.join(data_filedir, 'Samples_whole')

sample_folder = os.path.isdir(sample_dir_path)

if not sample_folder:

os.makedirs(sample_dir_path)

print("created folder : ", sample_dir_path)

cols_normalize = df_train.columns.difference(['RUL', 'unit'])

sequence_cols = df_train.columns.difference(['RUL', 'unit'])

if selector == 0:

for unit_index in units_index_train:

data_class = Input_Gen (df_train, df_test, cols_normalize, sequence_length, sequence_cols, sample_dir_path,

unit_index, sampling, stride =stride)

data_class.seq_gen()

else:

for unit_index in units_index_test:

data_class = Input_Gen (df_train, df_test, cols_normalize, sequence_length, sequence_cols, sample_dir_path,

unit_index, sampling, stride =stride)

data_class.seq_gen()
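
As a usage illustration, the command-line options of `sample_creator_unit_auto.py` can be exercised without a shell by passing a list to `parse_args`. The sketch below rebuilds only a simplified copy of the parser; the helper name `build_parser` is hypothetical and not part of the original script.

```python
import argparse


def build_parser():
    # Hypothetical helper: a simplified copy of the four options defined in main().
    parser = argparse.ArgumentParser(description='sample creator')
    parser.add_argument('-w', type=int, default=10, help='window length')
    parser.add_argument('-s', type=int, default=10, help='stride of window')
    parser.add_argument('--sampling', type=int, default=1, help='sub-sampling factor')
    parser.add_argument('--test', type=int, default=0, help='0: training units, otherwise test units')
    return parser


# Equivalent to: python sample_creator_unit_auto.py -w 50 -s 1 --sampling 10 --test 0
args = build_parser().parse_args(['-w', '50', '-s', '1', '--sampling', '10', '--test', '0'])
print(args.w, args.s, args.sampling, args.test)
```

With `--test 0` the script loops over the training units; any other value makes it loop over the test units.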

data_preparation_unit.py

def df_all_creator(data_filepath, sampling):

"""

Load the N-CMAPSS HDF5 file, merge the dev and test splits, and return the sub-sampled DataFrame.

"""

t = time.process_time()

with h5py.File(data_filepath, 'r') as hdf:

W_dev = np.array(hdf.get('W_dev'))

X_s_dev = np.array(hdf.get('X_s_dev'))

X_v_dev = np.array(hdf.get('X_v_dev'))

T_dev = np.array(hdf.get('T_dev'))

Y_dev = np.array(hdf.get('Y_dev'))

A_dev = np.array(hdf.get('A_dev'))

W_test = np.array(hdf.get('W_test'))

X_s_test = np.array(hdf.get('X_s_test'))

X_v_test = np.array(hdf.get('X_v_test'))

T_test = np.array(hdf.get('T_test'))

Y_test = np.array(hdf.get('Y_test'))

A_test = np.array(hdf.get('A_test'))

W_var = np.array(hdf.get('W_var'))

X_s_var = np.array(hdf.get('X_s_var'))

X_v_var = np.array(hdf.get('X_v_var'))

T_var = np.array(hdf.get('T_var'))

A_var = np.array(hdf.get('A_var'))

W_var = list(np.array(W_var, dtype='U20'))

X_s_var = list(np.array(X_s_var, dtype='U20'))

X_v_var = list(np.array(X_v_var, dtype='U20'))

T_var = list(np.array(T_var, dtype='U20'))

A_var = list(np.array(A_var, dtype='U20'))

W = np.concatenate((W_dev, W_test), axis=0)

X_s = np.concatenate((X_s_dev, X_s_test), axis=0)

X_v = np.concatenate((X_v_dev, X_v_test), axis=0)

T = np.concatenate((T_dev, T_test), axis=0)

Y = np.concatenate((Y_dev, Y_test), axis=0)

A = np.concatenate((A_dev, A_test), axis=0)

print('')

print("Operation time (min): ", (time.process_time() - t) / 60)

print("number of training samples(timestamps): ", Y_dev.shape[0])

print("number of test samples(timestamps): ", Y_test.shape[0])

print('')

print("W shape: " + str(W.shape))

print("X_s shape: " + str(X_s.shape))

print("X_v shape: " + str(X_v.shape))

print("T shape: " + str(T.shape))

print("Y shape: " + str(Y.shape))

print("A shape: " + str(A.shape))

'''

Illustration of the multivariate time series of condition-monitoring sensor readings for Unit 5 (fifth engine)

W: operative conditions (Scenario descriptors) - ['alt', 'Mach', 'TRA', 'T2']

X_s: measured signals - ['T24', 'T30', 'T48', 'T50', 'P15', 'P2', 'P21', 'P24', 'Ps30', 'P40', 'P50', 'Nf', 'Nc', 'Wf']

X_v: virtual sensors - ['T40', 'P30', 'P45', 'W21', 'W22', 'W25', 'W31', 'W32', 'W48', 'W50', 'SmFan', 'SmLPC', 'SmHPC', 'phi']

T(theta): engine health parameters - ['fan_eff_mod', 'fan_flow_mod', 'LPC_eff_mod', 'LPC_flow_mod', 'HPC_eff_mod', 'HPC_flow_mod', 'HPT_eff_mod', 'HPT_flow_mod', 'LPT_eff_mod', 'LPT_flow_mod']

Y: RUL [in cycles]

A: auxiliary data - ['unit', 'cycle', 'Fc', 'hs']

'''

df_W = pd.DataFrame(data=W, columns=W_var)

df_Xs = pd.DataFrame(data=X_s, columns=X_s_var)

df_Xv = pd.DataFrame(data=X_v[:,0:2], columns=['T40', 'P30'])

df_Y = pd.DataFrame(data=Y, columns=['RUL'])

df_A = pd.DataFrame(data=A, columns=A_var).drop(columns=['cycle', 'Fc', 'hs'])

df_all = pd.concat([df_W, df_Xs, df_Xv, df_Y, df_A], axis=1)

print ("df_all", df_all)

print ("df_all.shape", df_all.shape)

df_all_smp = df_all[::sampling]

print ("df_all_sub", df_all_smp)

print ("df_all_sub.shape", df_all_smp.shape)

return df_all_smp
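
The sub-sampling step at the end of `df_all_creator` is a plain strided slice over the merged frame. A minimal sketch on a toy frame follows; the column values and the 1 Hz original rate are assumptions made only for illustration.

```python
import numpy as np
import pandas as pd

# Toy stand-in for df_all: 25 consecutive rows (1 Hz recording is an assumption).
df_all = pd.DataFrame({'T24': np.arange(25, dtype=float), 'unit': 2.0})

sampling = 10
df_all_smp = df_all[::sampling]  # keeps every 10th row: 0, 10, 20

print(df_all_smp.index.tolist())
```

Because the slice is taken over the concatenated frame, it does not restart at each unit boundary, and the original row index is kept until `df_train_creator` or `df_test_creator` resets it.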

def df_train_creator(df_all, units_index_train):

train_df_lst= []

for idx in units_index_train:

df_train_temp = df_all[df_all['unit'] == np.float64(idx)]

train_df_lst.append(df_train_temp)

df_train = pd.concat(train_df_lst)

df_train = df_train.reset_index(drop=True)

return df_train

def df_test_creator(df_all, units_index_test):

test_df_lst = []

for idx in units_index_test:

df_test_temp = df_all[df_all['unit'] == np.float64(idx)]

test_df_lst.append(df_test_temp)

df_test = pd.concat(test_df_lst)

df_test = df_test.reset_index(drop=True)

return df_test
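
`df_train_creator` and `df_test_creator` differ only in the list of unit ids they receive. As a sketch of a more compact alternative (not the original implementation), a single boolean mask with `isin` selects the same rows; `select_units` is a hypothetical helper name.

```python
import pandas as pd

df_all = pd.DataFrame({
    'unit': [2.0, 2.0, 11.0, 5.0, 14.0, 5.0],
    'RUL': [3, 2, 7, 4, 9, 3],
})


def select_units(df, unit_ids):
    # Hypothetical helper: one boolean mask instead of a loop plus concat.
    return df[df['unit'].isin(unit_ids)].reset_index(drop=True)


df_train = select_units(df_all, [2.0, 5.0])
df_test = select_units(df_all, [11.0, 14.0])
print(df_train)
print(df_test)
```

One difference to keep in mind: the loop version orders rows by the order of the id list, while `isin` keeps the original row order. The two agree when the data is already grouped by unit in that order.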

def gen_sequence(id_df, seq_length, seq_cols):

""" Only sequences that meet the window-length are considered, no padding is used. This means for testing

we need to drop those which are below the window-length. An alternative would be to pad sequences so that

we can use shorter ones """

data_matrix = id_df[seq_cols].values

num_elements = data_matrix.shape[0]

for start, stop in zip(range(0, num_elements - seq_length), range(seq_length, num_elements)):

yield data_matrix[start:stop, :]

def gen_labels(id_df, seq_length, label):

""" Only sequences that meet the window-length are considered, no padding is used. This means for testing

we need to drop those which are below the window-length. An alternative would be to pad sequences so that

we can use shorter ones """

data_matrix = id_df[label].values

num_elements = data_matrix.shape[0]

return data_matrix[seq_length:num_elements, :]
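
To make the pairing between `gen_sequence` and `gen_labels` concrete, the sketch below copies both functions and runs them on a toy unit with five time steps. The column name `s1` and the values are made up for illustration.

```python
import numpy as np
import pandas as pd


def gen_sequence(id_df, seq_length, seq_cols):
    data_matrix = id_df[seq_cols].values
    num_elements = data_matrix.shape[0]
    for start, stop in zip(range(0, num_elements - seq_length), range(seq_length, num_elements)):
        yield data_matrix[start:stop, :]


def gen_labels(id_df, seq_length, label):
    data_matrix = id_df[label].values
    return data_matrix[seq_length:data_matrix.shape[0], :]


# Toy unit: 5 time steps, one feature, decreasing RUL.
df = pd.DataFrame({'s1': [10., 11., 12., 13., 14.], 'RUL': [4., 3., 2., 1., 0.]})

windows = list(gen_sequence(df, 2, ['s1']))
labels = gen_labels(df, 2, ['RUL'])

print(len(windows), labels.ravel())
```

With 5 rows and a window length of 2 this yields 3 windows and 3 labels. The window covering rows 0 and 1 is paired with the RUL at row 2, and the last possible window (rows 3 and 4) is never emitted.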

def time_window_slicing (input_array, sequence_length, sequence_cols):

label_gen = [gen_labels(input_array[input_array['unit'] == id], sequence_length, ['RUL'])

for id in input_array['unit'].unique()]

label_array = np.concatenate(label_gen).astype(np.float32)

seq_gen = (list(gen_sequence(input_array[input_array['unit'] == id], sequence_length, sequence_cols))

for id in input_array['unit'].unique())

sample_array = np.concatenate(list(seq_gen)).astype(np.float32)

print("sample_array")

return sample_array, label_array

def time_window_slicing_label_save (input_array, sequence_length, stride, index, sample_dir_path, sequence_cols = 'RUL'):

'''

ref

for i in range(0, input_temp.shape[0] - sequence_length):

window = input_temp[i*stride:i*stride + sequence_length, :] # each individual window

window_lst.append(window)

# print (window.shape)

'''

window_lst = []

input_temp = input_array[input_array['unit'] == index][sequence_cols].values

num_samples = int((input_temp.shape[0] - sequence_length)/stride) + 1

for i in range(num_samples):

window = input_temp[i*stride:i*stride + sequence_length]

window_lst.append(window)

label_array = np.asarray(window_lst).astype(np.float32)

return label_array[:,-1]

def time_window_slicing_sample_save (input_array, sequence_length, stride, index, sample_dir_path, sequence_cols):

'''

'''

window_lst = []

input_temp = input_array[input_array['unit'] == index][sequence_cols].values

print ("Unit%s input array shape: " %index, input_temp.shape)

num_samples = int((input_temp.shape[0] - sequence_length)/stride) + 1

for i in range(num_samples):

window = input_temp[i*stride:i*stride + sequence_length,:]

window_lst.append(window)

sample_array = np.dstack(window_lst).astype(np.float32)

print ("sample_array.shape", sample_array.shape)

return sample_array
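
The two `*_save` functions share the same strided-window arithmetic. The sketch below reproduces it on toy arrays to show the number of windows, the stacked sample shape, and which RUL value becomes the label; all array contents are invented for illustration.

```python
import numpy as np

sequence_length, stride = 4, 2
features = np.arange(30, dtype=np.float32).reshape(10, 3)  # 10 time steps, 3 signals
rul = np.arange(9, -1, -1, dtype=np.float32)               # 9, 8, ..., 0

num_samples = int((features.shape[0] - sequence_length) / stride) + 1

sample_windows = [features[i * stride:i * stride + sequence_length, :] for i in range(num_samples)]
label_windows = [rul[i * stride:i * stride + sequence_length] for i in range(num_samples)]

sample_array = np.dstack(sample_windows)        # (sequence_length, n_signals, n_windows)
label_array = np.asarray(label_windows)[:, -1]  # RUL at the last step of each window

print(num_samples, sample_array.shape, label_array)
```

Note that `np.dstack` puts the window index on the last axis, which is why the loader later transposes the array before training.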

class Input_Gen(object):

'''

class for data preparation (sequence generator)

'''

def __init__(self, df_train, df_test, cols_normalize, sequence_length, sequence_cols, sample_dir_path,

unit_index, sampling, stride):

'''

'''

print("the number of input signals: ", len(cols_normalize))

min_max_scaler = preprocessing.MinMaxScaler(feature_range=(-1, 1))

norm_df = pd.DataFrame(min_max_scaler.fit_transform(df_train[cols_normalize]),

columns=cols_normalize,

index=df_train.index)

join_df = df_train[df_train.columns.difference(cols_normalize)].join(norm_df)

df_train = join_df.reindex(columns=df_train.columns)

norm_test_df = pd.DataFrame(min_max_scaler.transform(df_test[cols_normalize]), columns=cols_normalize,

index=df_test.index)

test_join_df = df_test[df_test.columns.difference(cols_normalize)].join(norm_test_df)

df_test = test_join_df.reindex(columns=df_test.columns)

df_test = df_test.reset_index(drop=True)

self.df_train = df_train

self.df_test = df_test

print (self.df_train)

print (self.df_test)

self.cols_normalize = cols_normalize

self.sequence_length = sequence_length

self.sequence_cols = sequence_cols

self.sample_dir_path = sample_dir_path

self.unit_index = np.float64(unit_index)

self.sampling = sampling

self.stride = stride

def seq_gen(self):

'''

Slice the selected unit into windows and save the samples and labels as a compressed .npz file.

:return: None

'''

if any(index == self.unit_index for index in self.df_train['unit'].unique()):

print ("Unit for Train")

label_array = time_window_slicing_label_save(self.df_train, self.sequence_length,

self.stride, self.unit_index, self.sample_dir_path, sequence_cols='RUL')

sample_array = time_window_slicing_sample_save(self.df_train, self.sequence_length,

self.stride, self.unit_index, self.sample_dir_path, sequence_cols=self.cols_normalize)

else:

print("Unit for Test")

label_array = time_window_slicing_label_save(self.df_test, self.sequence_length,

self.stride, self.unit_index, self.sample_dir_path, sequence_cols='RUL')

sample_array = time_window_slicing_sample_save(self.df_test, self.sequence_length,

self.stride, self.unit_index, self.sample_dir_path, sequence_cols=self.cols_normalize)

print("sample_array.shape", sample_array.shape)

print("label_array.shape", label_array.shape)

np.savez_compressed(os.path.join(self.sample_dir_path, 'Unit%s_win%s_str%s_smp%s' %(str(int(self.unit_index)), self.sequence_length, self.stride, self.sampling)),

sample=sample_array, label=label_array)

print ("unit saved")

return
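
`Input_Gen.__init__` fits a `MinMaxScaler` with `feature_range=(-1, 1)` on the training frame only and then applies it to the test frame. The sketch below reproduces that arithmetic with plain NumPy rather than scikit-learn, on made-up numbers, to show what happens to test values outside the training range.

```python
import numpy as np

train = np.array([[0.0, 100.0], [5.0, 200.0], [10.0, 300.0]])
test = np.array([[2.5, 350.0]])

# Statistics come from the training data only.
col_min = train.min(axis=0)
col_max = train.max(axis=0)


def scale(x):
    # Map [col_min, col_max] of the training data onto [-1, 1].
    return (x - col_min) / (col_max - col_min) * 2.0 - 1.0


train_scaled = scale(train)
test_scaled = scale(test)
print(train_scaled)
print(test_scaled)
```

Training columns land exactly in [-1, 1], while a test value above the training maximum (350 here) maps beyond 1. This is expected when the scaler is not refit on test data.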

inference_cnn_aggr.py

'''

DL models (FNN, 1D CNN and CNN-LSTM) evaluation on N-CMAPSS

12.07.2021

Hyunho Mo

'''

import gc

import argparse

import os

import json

import logging

import sys

import h5py

import time

import matplotlib

import numpy as np

import pandas as pd

import seaborn as sns

from pandas import DataFrame

import matplotlib.pyplot as plt

from matplotlib import gridspec

import math

import random

from random import shuffle

from tqdm.keras import TqdmCallback

seed = 0

random.seed(0)

np.random.seed(seed)

import importlib

from scipy.stats import randint, expon, uniform

import sklearn as sk

from sklearn import svm

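from sklearn.utils import shuffle  # overrides random.shuffle imported above; shuffle_array() relies on this one returning a shuffled copy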

from sklearn import metrics

from sklearn import preprocessing

from sklearn import pipeline

from sklearn.metrics import mean_squared_error

from math import sqrt

from tqdm import tqdm

import scipy.stats as stats

import tensorflow as tf

print(tf.__version__)

import tensorflow.keras.backend as K

from tensorflow.keras import backend

from tensorflow.keras import optimizers

from tensorflow.keras.models import Sequential, load_model, Model

from tensorflow.keras.layers import Input, Dense, Flatten, Dropout, Embedding

from tensorflow.keras.layers import BatchNormalization, Activation, LSTM, TimeDistributed, Bidirectional

from tensorflow.keras.layers import Conv1D

from tensorflow.keras.layers import MaxPooling1D

from tensorflow.keras.layers import concatenate

from tensorflow.keras.callbacks import EarlyStopping, ModelCheckpoint, LearningRateScheduler

from tensorflow.python.framework.convert_to_constants import convert_variables_to_constants_v2_as_graph

from tensorflow.keras.initializers import GlorotNormal, GlorotUniform

initializer = GlorotNormal(seed=0)

from utils.data_preparation_unit import df_all_creator, df_train_creator, df_test_creator, Input_Gen

from utils.dnn import one_dcnn,CNNBranch

pd.options.mode.chained_assignment = None

current_dir = os.path.dirname(os.path.abspath(__file__))

print(current_dir)

data_filedir = os.path.join(current_dir, 'N-CMAPSS')

data_filepath = os.path.join(current_dir, 'N-CMAPSS', 'N-CMAPSS_DS02-006.h5')

sample_dir_path = os.path.join(data_filedir, 'Samples_whole')

model_temp_path = os.path.join(current_dir, 'Models', 'CNNBranch_rep.h5')

tf_temp_path = os.path.join(current_dir, 'TF_Model_tf')

pic_dir = os.path.join(current_dir, 'Figures')

def load_part_array (sample_dir_path, unit_num, win_len, stride, part_num):

filename = 'Unit%s_win%s_str%s_part%s.npz' %(str(int(unit_num)), win_len, stride, part_num)

filepath = os.path.join(sample_dir_path, filename)

loaded = np.load(filepath)

return loaded['sample'], loaded['label']

'''

Collect the samples and labels and reshape them into a trainable shape

'''

def load_part_array_merge (sample_dir_path, unit_num, win_len, win_stride, partition):

sample_array_lst = []

label_array_lst = []

print ("Unit: ", unit_num)

for part in range(partition):

print ("Part.", part+1)

sample_array, label_array = load_part_array (sample_dir_path, unit_num, win_len, win_stride, part+1)

sample_array_lst.append(sample_array)

label_array_lst.append(label_array)

sample_array = np.dstack(sample_array_lst)

label_array = np.concatenate(label_array_lst)

sample_array = sample_array.transpose(2, 0, 1)

print ("sample_array.shape", sample_array.shape)

print ("label_array.shape", label_array.shape)

return sample_array, label_array

def load_array (sample_dir_path, unit_num, win_len, stride):

filename = 'Unit%s_win%s_str%s_smp10.npz' %(str(int(unit_num)), win_len, stride)

filepath = os.path.join(sample_dir_path, filename)

loaded = np.load(filepath)

return loaded['sample'].transpose(2, 0, 1), loaded['label']
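
The file written by `seq_gen` and read by `load_array` can be checked with a small round trip. The sketch below uses a temporary directory and zero-filled arrays as stand-ins; the shapes are assumptions chosen only to show the axis order.

```python
import os
import tempfile

import numpy as np

sample_array = np.zeros((50, 20, 7), dtype=np.float32)  # (win_len, n_signals, n_windows)
label_array = np.arange(7, dtype=np.float32)

with tempfile.TemporaryDirectory() as tmp_dir:
    # savez_compressed appends ".npz" when the name lacks it.
    stem = os.path.join(tmp_dir, 'Unit%s_win%s_str%s_smp%s' % (2, 50, 1, 10))
    np.savez_compressed(stem, sample=sample_array, label=label_array)

    with np.load(stem + '.npz') as loaded:
        samples = loaded['sample'].transpose(2, 0, 1)  # (n_windows, win_len, n_signals)
        labels = loaded['label']

print(samples.shape, labels.shape)
```

Since `load_array` hardcodes `smp10` in the filename, the sample files need to have been created with `--sampling 10` for the names to match.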

def rmse(y_true, y_pred):

return backend.sqrt(backend.mean(backend.square(y_pred - y_true), axis=-1))
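
One detail of the `rmse` metric is worth checking numerically: it reduces over the last axis only. Assuming the model output has shape `(batch, 1)` and that Keras averages the per-sample metric values over the batch, the reported number coincides with the mean absolute error rather than with a batch-wide RMSE. A NumPy sketch with made-up values:

```python
import numpy as np

y_true = np.array([[10.0], [20.0], [30.0]])
y_pred = np.array([[12.0], [20.0], [26.0]])

# Same arithmetic as the metric: reduce over the last axis only.
per_sample = np.sqrt(np.mean(np.square(y_pred - y_true), axis=-1))
metric_value = per_sample.mean()  # averaged over the batch

mae = np.mean(np.abs(y_pred - y_true))
global_rmse = np.sqrt(np.mean(np.square(y_pred - y_true)))

print(metric_value, mae, global_rmse)
```

The RMSE reported at the end of the script is computed separately over all concatenated test predictions, so it is not affected by this.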

def shuffle_array(sample_array, label_array):

ind_list = list(range(len(sample_array)))

print("ind_list before: ", ind_list[:10])

print("ind_list before: ", ind_list[-10:])

ind_list = shuffle(ind_list)

print("ind_list after: ", ind_list[:10])

print("ind_list after: ", ind_list[-10:])

print("Shuffling in progress")

shuffle_sample = sample_array[ind_list, :, :]

shuffle_label = label_array[ind_list,]

return shuffle_sample, shuffle_label
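
The point of `shuffle_array` is that samples and labels are reordered with the same index list. A minimal NumPy sketch of the same idea follows, using `numpy.random.default_rng` instead of `sklearn.utils.shuffle` (a substitution made here for illustration) and toy arrays in which each label encodes its window.

```python
import numpy as np

rng = np.random.default_rng(0)

sample_array = np.arange(5 * 2 * 3).reshape(5, 2, 3)
label_array = sample_array[:, 0, 0].copy()  # label identifies the window it belongs to

perm = rng.permutation(len(sample_array))   # one permutation shared by both arrays
shuffle_sample = sample_array[perm]
shuffle_label = label_array[perm]

print(perm, shuffle_label)
```

Indexing both arrays with the same permutation keeps every window paired with its own label.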

def figsave(history, win_len, win_stride, bs, lr, sub):

fig_acc = plt.figure(figsize=(15, 8))

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('Training', fontsize=24)

plt.ylabel('loss', fontdict={'fontsize': 18})

plt.xlabel('epoch', fontdict={'fontsize': 18})

plt.legend(['Training loss', 'Validation loss'], loc='upper left', fontsize=18)

plt.show()

print ("saving file:training loss figure")

fig_acc.savefig(pic_dir + "/training_w%s_s%s_bs%s_sub%s_lr%s.png" %(int(win_len), int(win_stride), int(bs), int(sub), str(lr)))

return

def get_flops(model):

concrete = tf.function(lambda inputs: model(inputs))

concrete_func = concrete.get_concrete_function(

[tf.TensorSpec([1, *inputs.shape[1:]]) for inputs in model.inputs])

frozen_func, graph_def = convert_variables_to_constants_v2_as_graph(concrete_func)

with tf.Graph().as_default() as graph:

tf.graph_util.import_graph_def(graph_def, name='')

run_meta = tf.compat.v1.RunMetadata()

opts = tf.compat.v1.profiler.ProfileOptionBuilder.float_operation()

flops = tf.compat.v1.profiler.profile(graph=graph, run_meta=run_meta, cmd="op", options=opts)

return flops.total_float_ops

def scheduler(epoch, lr):

if epoch == 30:

print("lr decay by 10")

return lr * 0.1

elif epoch == 70:

print("lr decay by 10")

return lr * 0.1

else:

return lr
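
The effect of `scheduler` over a run can be traced without TensorFlow. The sketch below applies a condensed version of the same rule for 100 zero-indexed epochs, starting from the script's default learning rate of 0.001.

```python
def scheduler(epoch, lr):
    # Same rule as above: divide the rate by 10 at epochs 30 and 70.
    if epoch in (30, 70):
        return lr * 0.1
    return lr


lr = 0.001
history = {}
for epoch in range(100):
    lr = scheduler(epoch, lr)
    history[epoch] = lr

print(history[29], history[30], history[70])
```

Two observations from the listing itself: with the default `-ep 30` the epoch indices run from 0 to 29, so the first decay would not be reached, and the `fit` call shown only passes `EarlyStopping` and `ModelCheckpoint` as callbacks, so `lr_scheduler` appears to be unused there.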

def release_list(a):

del a[:]

del a

units_index_train = [2.0, 5.0, 10.0, 16.0, 18.0, 20.0]

units_index_test = [11.0, 14.0, 15.0]

def main():

parser = argparse.ArgumentParser(description='sample creator')

parser.add_argument('-w', type=int, default=50, help='sequence length', required=False)

parser.add_argument('-s', type=int, default=1, help='stride of filter')

parser.add_argument('-f', type=int, default=10, help='number of filter')

parser.add_argument('-k', type=int, default=10, help='size of kernel')

parser.add_argument('-bs', type=int, default=256, help='batch size')

parser.add_argument('-ep', type=int, default=30, help='max epoch')

parser.add_argument('-pt', type=int, default=20, help='patience')

parser.add_argument('-vs', type=float, default=0.1, help='validation split')

parser.add_argument('-lr', type=float, default=0.001, help='learning rate')

parser.add_argument('-sub', type=int, default=10, help='subsampling stride')

args = parser.parse_args()

win_len = args.w

win_stride = args.s

partition = 3

n_filters = args.f

kernel_size = args.k

lr = args.lr

bs = args.bs

ep = args.ep

pt = args.pt

vs = args.vs

sub = args.sub

amsgrad = optimizers.Adam(learning_rate=lr, beta_1=0.9, beta_2=0.999, epsilon=1e-07, amsgrad=True, name='Adam')

rmsop = optimizers.RMSprop(learning_rate=lr, rho=0.9, momentum=0.0, epsilon=1e-07, centered=False,

name='RMSprop')

train_units_samples_lst =[]

train_units_labels_lst = []

for index in units_index_train:

print("Load data index: ", index)

sample_array, label_array = load_array (sample_dir_path, index, win_len, win_stride)

print("sample_array.shape", sample_array.shape)

print("label_array.shape", label_array.shape)

sample_array = sample_array[::sub]

label_array = label_array[::sub]

print("sub sample_array.shape", sample_array.shape)

print("sub label_array.shape", label_array.shape)

train_units_samples_lst.append(sample_array)

train_units_labels_lst.append(label_array)

sample_array = np.concatenate(train_units_samples_lst)

label_array = np.concatenate(train_units_labels_lst)

print ("samples are aggregated")

release_list(train_units_samples_lst)

release_list(train_units_labels_lst)

train_units_samples_lst =[]

train_units_labels_lst = []

print("Memory released")

sample_array, label_array = shuffle_array(sample_array, label_array)

print("samples are shuffled")

print("sample_array.shape", sample_array.shape)

print("label_array.shape", label_array.shape)

print ("train sample dtype", sample_array.dtype)

print("train label dtype", label_array.dtype)

one_d_cnn_model = one_dcnn(n_filters, kernel_size, sample_array, initializer)

print(one_d_cnn_model.summary())

one_d_cnn_model.compile(loss='mean_squared_error', optimizer=amsgrad, metrics=[rmse, 'mae'])

start = time.time()

lr_scheduler = LearningRateScheduler(scheduler)

'''

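The best model is saved to the specified path here, with the .h5 file name chosen in advance.

save_best_only=True saves the model only when it is considered the best so far. If filepath contains no formatting options such as {epoch}, each newly saved better model overwrites the previously saved one.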

'''

one_d_cnn_model.compile(loss='mean_squared_error', optimizer=amsgrad, metrics=['mae'])

history = one_d_cnn_model.fit(sample_array, label_array, epochs=ep, batch_size=bs, validation_split=vs, verbose=2,

callbacks = [EarlyStopping(monitor='val_loss', min_delta=0, patience=pt, verbose=1, mode='min'),

ModelCheckpoint(model_temp_path, monitor='val_loss', save_best_only=True, mode='min', verbose=1)]

)

figsave(history, win_len, win_stride, bs, lr, sub)

print("The FLOPs is:{}".format(get_flops(one_d_cnn_model)), flush=True)

num_train = sample_array.shape[0]

end = time.time()

training_time = end - start

print("Training time: ", training_time)

start = time.time()

output_lst = []

truth_lst = []

for index in units_index_test:

print ("test idx: ", index)

sample_array, label_array = load_array(sample_dir_path, index, win_len, win_stride)

print("sample_array.shape", sample_array.shape)

print("label_array.shape", label_array.shape)

sample_array = sample_array[::sub]

label_array = label_array[::sub]

print("sub sample_array.shape", sample_array.shape)

print("sub label_array.shape", label_array.shape)

estimator = load_model(model_temp_path)

y_pred_test = estimator.predict(sample_array)

output_lst.append(y_pred_test)

truth_lst.append(label_array)

print(output_lst[0].shape)

print(truth_lst[0].shape)

print(np.concatenate(output_lst).shape)

print(np.concatenate(truth_lst).shape)

output_array = np.concatenate(output_lst)[:, 0]

trytg_array = np.concatenate(truth_lst)

print(output_array.shape)

print(trytg_array.shape)

rms = sqrt(mean_squared_error(output_array, trytg_array))

print(rms)

rms = round(rms, 2)

end = time.time()

inference_time = end - start

num_test = output_array.shape[0]

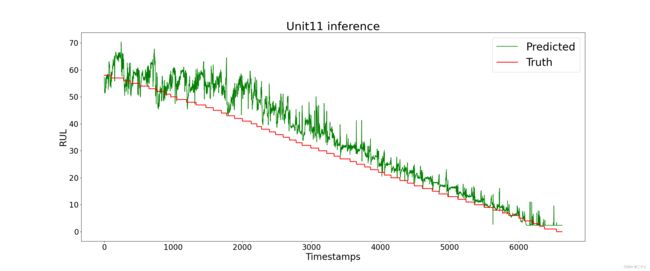

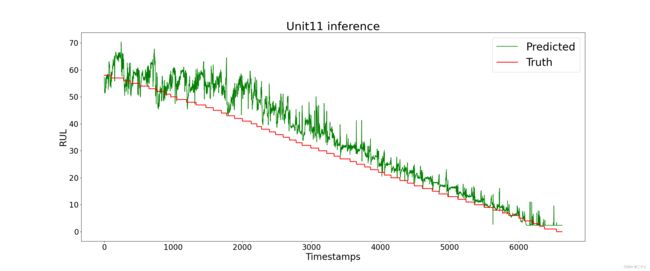

for idx in range(len(units_index_test)):

print(output_lst[idx])

print(truth_lst[idx])

fig_verify = plt.figure(figsize=(24, 10))

plt.plot(output_lst[idx], color="green")

plt.plot(truth_lst[idx], color="red", linewidth=2.0)

plt.title('Unit%s inference' %str(int(units_index_test[idx])), fontsize=30)

plt.yticks(fontsize=20)

plt.xticks(fontsize=20)

plt.ylabel('RUL', fontdict={'fontsize': 24})

plt.xlabel('Timestamps', fontdict={'fontsize': 24})

plt.legend(['Predicted', 'Truth'], loc='upper right', fontsize=28)

plt.show()

fig_verify.savefig(pic_dir + "/unit%s_test_w%s_s%s_bs%s_lr%s_sub%s_rmse-%s.png" %(str(int(units_index_test[idx])),

int(win_len), int(win_stride), int(bs),

str(lr), int(sub), str(rms)))

print("The FLOPs is:{}".format(get_flops(one_d_cnn_model)), flush=True)

print("win length_%s, win stride_%s" %(str(win_len), str(win_stride)))

print("# Training samples: ", num_train)

print("# Inference samples: ", num_test)

print("Training time: ", training_time)

print("Inference time: ", inference_time)

print("Result in RMSE: ", rms)

if __name__ == '__main__':

main()
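
The final score is a single RMSE over the predictions of all test units concatenated together. A NumPy sketch of that aggregation follows, using invented per-unit predictions and labels in place of real model output.

```python
import numpy as np

# Hypothetical per-unit predictions (shape (n, 1)) and ground truth (shape (n,)).
output_lst = [np.array([[10.0], [8.0]]), np.array([[5.0], [3.0], [1.0]])]
truth_lst = [np.array([9.0, 8.0]), np.array([5.0, 5.0, 1.0])]

output_array = np.concatenate(output_lst)[:, 0]  # drop the trailing axis of size 1
truth_array = np.concatenate(truth_lst)

rms = float(np.sqrt(np.mean((output_array - truth_array) ** 2)))
print(round(rms, 2))
```

Because the units are concatenated before averaging, units with more windows contribute more to the score than shorter ones.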

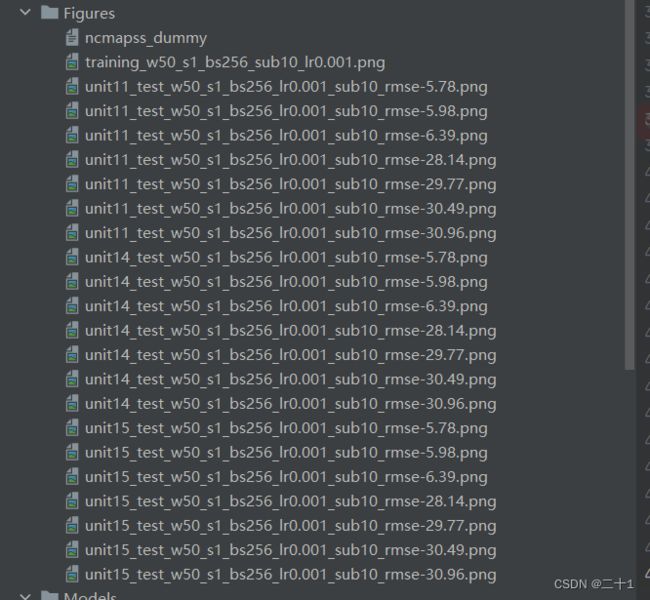

Experimental results figure