人工智能-强化学习-算法:PPO(Proximal Policy Optimization,改进版Policy Gradient)【PPO、PPO2、TRPO】

强化学习算法 { Policy-Based Approach:Policy Gradient算法:Learning an Actor/Policy π Value-based Approach:Critic { State value function V π ( s ) State-Action value function Q π ( s , a ) ⟹ Q-Learning算法 Actor+Critic \begin{aligned} \text{强化学习算法} \begin{cases} \text{Policy-Based Approach:Policy Gradient算法:Learning an Actor/Policy π} \\[2ex] \text{Value-based Approach:Critic} \begin{cases} \text{State value function $V^π(s)$}\\ \\ \text{State-Action value function $Q^π(s,a)$ $\implies$ Q-Learning算法} \end{cases} \\[2ex] \text{Actor+Critic} \end{cases} \end{aligned} 强化学习算法⎩⎪⎪⎪⎪⎪⎪⎪⎨⎪⎪⎪⎪⎪⎪⎪⎧Policy-Based Approach:Policy Gradient算法:Learning an Actor/Policy πValue-based Approach:Critic⎩⎪⎨⎪⎧State value function Vπ(s)State-Action value function Qπ(s,a) ⟹ Q-Learning算法Actor+Critic

![]()

OpenAI 把PPO当作他们默认强化学习算法

一、On-policy v.s. Off-policy

The on-policy approach in the preceding section is actually a compromise—it learns action values not for the optimal policy, but for a near-optimal policy that still explores. on-policy算法是在保证跟随最优策略的基础上同时保持着对其它动作的探索性,对于on-policy算法来说如要保持探索性则必然会牺牲一定的最优选择机会。

A more straightforward approach is to use two policies, one that is learned about and that becomes the optimal policy, and one that is more exploratory and is used to generate behavior. 有一个更加直接的办法就是在迭代过程中允许存在两个policy,一个用于生成学习过程的动作,具有很强的探索性,另外一个则是由值函数产生的最优策略,这个方法就被称作off-policy。

The policy being learned about is called the target policy, and the policy used to generate behavior is called the behavior policy.In this case we say that learning is from data “off” the target policy, and the overall process is termed off-policy learning.

1、On-policy Algorithm

- “要训练的Agent” 跟 “和环境互动的Agent” 是同一个的话,这个叫做 on-policy。即:要训练的Agent,它是一边跟环境互动,一边做学习这个叫 on-policy。比如:Policy Gradient。Policy Gradient 是一个会花很多时间来取样的算法。Policy Gradient 算法的大多数时间都在取样,Agent/Actor跟环境做互动取样后update参数一次(只能 update 参数一次),接下来就要重新再去环境里取样,然后才能再次 update 参数一次,这非常花时间。

2、Off-policy Algorithm

- “要训练的Agent” 跟 “和环境互动的Agent” 不是同一个的话,这个叫做 off-policy。如果它是在旁边看别人玩,透过看别人玩,来学习的话,这个叫做 Off-policy。

- 在迭代过程中允许存在两个policy, π θ π_θ πθ, π θ ′ π_{θ'} πθ′。其中 π θ ′ π_{θ'} πθ′ 一个用于生成学习过程的动作,具有很强的探索性,另外一个 π θ π_θ πθ 则是由值函数产生的最优策略,这个方法就被称作off-policy。

- 从 on-policy 变成 off-policy 的好处就是:用 π θ ′ π_{θ'} πθ′ 去跟环境做互动,用 π θ ′ π_{θ'} πθ′ 采样到的数据集去训练 π θ π_θ πθ

- 用 π θ ′ π_{θ'} πθ′ 从环境中取样去训练 π θ π_θ πθ 意味着 π θ ′ π_{θ'} πθ′ 从环境中取的样可以用非常多次, π θ π_θ πθ 在做梯度上升的时候,就可以执行梯度上升好几次,, π θ π_θ πθ 可以 update 参数好几次,都只要用同一笔取样数据。,这样就会比较有效率

二、Importance Sampling

- off-policy与重要性采样(Importance Sampling)密不可分

- Importance Sampling主要的作用在于通过一个简单的可预测的分布去估计一个服从另一个分布的随机变量的均值。

- 在实际应用off-policy时,迭代过程通常会有两个策略,一个是Behavior policy,用于生成学习过程所需要选择的动作,这一个简单的,探索性非常强的关于动作选择的分布;另一个是Target policy,这是我们最终希望得到的最优动作选择分布。

- 应用Importance Sampling之处在于通过Behavior policy去估计Target policy可能反馈回来的收益的均值,即用一个简单分布去估计服从另一个分布的随机变量的均值。

- 如果可以从 p ( x ) p(x) p(x) 分布取样时,

E x ∼ p [ f ( x ) ] ≈ 1 N ∑ i = 1 N f ( x i ) \begin{aligned} E_{x\sim p}[f(x)]≈\cfrac1N\sum^N_{i=1}f(x_i) \end{aligned} Ex∼p[f(x)]≈N1i=1∑Nf(xi) - 如果无法从 p ( x ) p(x) p(x) 分布取样,但是可以从 q ( x ) q(x) q(x) 分布取样时

E x ∼ p [ f ( x ) ] = ∫ f ( x ) p ( x ) d x = ∫ f ( x ) p ( x ) q ( x ) q ( x ) d x = E x ∼ q [ f ( x ) p ( x ) q ( x ) ] \begin{aligned} E_{x\sim p}[f(x)]=\int f(x)p(x)dx=\int f(x)\cfrac{p(x)}{q(x)}q(x)dx=E_{x\sim q}[f(x)\color{violet}{\cfrac{p(x)}{q(x)}}] \end{aligned} Ex∼p[f(x)]=∫f(x)p(x)dx=∫f(x)q(x)p(x)q(x)dx=Ex∼q[f(x)q(x)p(x)]

其中的 p ( x ) q ( x ) \color{violet}{\cfrac{p(x)}{q(x)}} q(x)p(x) 称为 Important Weight。 - 原则上, p ( x ) p(x) p(x) 与 q ( x ) q(x) q(x) 可以任意取,但是实践中, p ( x ) p(x) p(x) 与 q ( x ) q(x) q(x) 两分布要尽可能像,否则效果不好。因为 f ( x ) f(x) f(x) 与 f ( x ) p ( x ) q ( x ) f(x)\cfrac{p(x)}{q(x)} f(x)q(x)p(x) 的分布式不同的,如果取样无限次,则两者结果相同。但是在有限次取样后的结果是不同的。

三、On-policy算法(Policy Gradient) ⇒ I m p o r t a n c e S a m p l i n g \xRightarrow{Importance\ Sampling} Importance Sampling Off-policy算法

利用 Importance Sampling 将 Policy Gradient 这个 On-policy算法 转为 Off-policy算法

由Policy Gradient 可知:将 Policy Gradient 经过 “Add a Baseline”、“Assign Suitable Credit” 处理后的梯度为:

∇ θ R ‾ θ ≈ 1 N ∑ i = 1 N ∑ t = 1 T i [ A θ ( s t , a t ) ∇ θ l o g p θ ( a t i ∣ s t i ) ] = E ( s t , a t ) ∼ π θ [ A θ ( s t , a t ) ∇ θ l o g P θ ( a t ∣ s t ) ] = E ( s t , a t ) ∼ π θ ′ [ P θ ( s t , a t ) P θ ′ ( s t , a t ) A ( θ ′ ) ( s t , a t ) ∇ θ l o g P θ ( a t ∣ s t ) ] = E ( s t , a t ) ∼ π θ ′ [ P θ ( a t ∣ s t ) P θ ( s t ) P θ ′ ( a t ∣ s t ) P θ ′ ( s t ) A ( θ ′ ) ( s t , a t ) ∇ θ l o g P θ ( a t ∣ s t ) ] = 令 P θ ( s t ) = P θ ′ ( s t ) E ( s t , a t ) ∼ π θ ′ [ P θ ( a t ∣ s t ) P θ ′ ( a t ∣ s t ) A ( θ ′ ) ( s t , a t ) ∇ θ l o g P θ ( a t ∣ s t ) ] = E ( s t , a t ) ∼ π θ ′ { [ P θ ( a t ∣ s t ) P θ ′ ( a t ∣ s t ) A ( θ ′ ) ( s t , a t ) ] ∇ θ l o g [ P θ ( a t ∣ s t ) P θ ′ ( a t ∣ s t ) A ( θ ′ ) ( s t , a t ) ] } 紫色部分与θ无关 \begin{aligned} \nabla_θ\overline{R}_θ&≈\cfrac1N\sum^N_{i=1}\sum^{T_i}_{t=1}[A^θ(s_t,a_t)\nabla_θ logp_θ(a^i_t|s^i_t)]\\ &=E_{(s_t,a_t)\sim π_θ}[A^θ(s_t,a_t)\nabla_θ logP_θ(a_t|s_t)]\\ &=E_{(s_t,a_t)\sim π_{θ'}}\left[\cfrac{P_θ(s_t,a_t)}{P_{θ'}(s_t,a_t)}A^{(θ')}(s_t,a_t)\nabla_θ logP_θ(a_t|s_t)\right]\\ &=\color{black}{E_{(s_t,a_t)\sim π_{θ'}}\left[\cfrac{P_θ(a_t|s_t)\color{violet}{P_θ(s_t)}}{P_{θ'}(a_t|s_t)\color{violet}{P_{θ'}(s_t)}}A^{(θ')}(s_t,a_t)\nabla_θ logP_θ(a_t|s_t)\right]}\\ &\xlongequal{令P_θ(s_t)=P_{θ'}(s_t)} E_{(s_t,a_t)\sim π_{θ'}}\left[\cfrac{P_θ(a_t|s_t)}{P_{θ'}(a_t|s_t)}A^{(θ')}(s_t,a_t)\nabla_θ logP_θ(a_t|s_t)\right]\\ &=E_{(s_t,a_t)\sim π_{θ'}}\left\{\left[\cfrac{P_θ(a_t|s_t)}{P_{θ'}(a_t|s_t)}A^{(θ')}(s_t,a_t)\right]\nabla_θ log\left[\color{black}{\cfrac{P_θ(a_t|s_t)}{\color{violet}{P_{θ'}(a_t|s_t)}}\color{violet}{A^{(θ')}(s_t,a_t)}}\right]\right\} \text{紫色部分与θ无关}\\ \end{aligned} ∇θRθ≈N1i=1∑Nt=1∑Ti[Aθ(st,at)∇θlogpθ(ati∣sti)]=E(st,at)∼πθ[Aθ(st,at)∇θlogPθ(at∣st)]=E(st,at)∼πθ′[Pθ′(st,at)Pθ(st,at)A(θ′)(st,at)∇θlogPθ(at∣st)]=E(st,at)∼πθ′[Pθ′(at∣st)Pθ′(st)Pθ(at∣st)Pθ(st)A(θ′)(st,at)∇θlogPθ(at∣st)]令Pθ(st)=Pθ′(st)E(st,at)∼πθ′[Pθ′(at∣st)Pθ(at∣st)A(θ′)(st,at)∇θlogPθ(at∣st)]=E(st,at)∼πθ′{[Pθ′(at∣st)Pθ(at∣st)A(θ′)(st,at)]∇θlog[Pθ′(at∣st)Pθ(at∣st)A(θ′)(st,at)]}紫色部分与θ无关

∵ ∇ x l o g f ( x ) = 1 f ( x ) ∇ x f ( x ) ∴ ∇ x f ( x ) = f ( x ) ∇ x l o g f ( x ) \textbf{∵} \quad \nabla_xlogf(x)=\cfrac{1}{f(x)}\nabla_xf(x) \qquad \textbf{∴} \quad \nabla_xf(x)=f(x)\nabla_xlogf(x) ∵∇xlogf(x)=f(x)1∇xf(x)∴∇xf(x)=f(x)∇xlogf(x)

∴ \textbf{∴} ∴目标函数为:

J ( θ ′ ) ( θ ) = R ‾ ( θ ′ ) ( θ ) = E ( s t , a t ) ∼ π θ ′ [ P θ ( a t ∣ s t ) P θ ′ ( a t ∣ s t ) A ( θ ′ ) ( s t , a t ) ] \begin{aligned} \color{red}{J^{(θ')}(θ)=\overline{R}^{(θ')}(θ)=E_{(s_t,a_t)\sim π_{θ'}}\left[\cfrac{P_θ(a_t|s_t)}{P_{θ'}(a_t|s_t)}A^{(θ')}(s_t,a_t)\right]} \end{aligned} J(θ′)(θ)=R(θ′)(θ)=E(st,at)∼πθ′[Pθ′(at∣st)Pθ(at∣st)A(θ′)(st,at)]

其中:

- θ θ θ 表示待优化的参数; θ θ θ不与环境互动;

- θ ′ θ' θ′ 表示用 θ ′ θ' θ′ 去做demonstration, θ ′ θ' θ′ 与环境互动后取样 ( s t , a t ) (s_t,a_t) (st,at),计算 A ( θ ′ ) ( s t , a t ) A^{(θ')}(s_t,a_t) A(θ′)(st,at)

四、Off-policy算法 ⇒ A d d C o n s t r a i n t \xRightarrow{Add\ Constraint} Add Constraint PPO/TRPO

- 由 “Importance Sampling” 的讨论可知,实践中,在公式 E x ∼ p [ f ( x ) ] = E x ∼ q [ f ( x ) p ( x ) q ( x ) ] \begin{aligned}E_{x\sim p}[f(x)]=E_{x\sim q}[f(x)\color{violet}{\cfrac{p(x)}{q(x)}}]\end{aligned} Ex∼p[f(x)]=Ex∼q[f(x)q(x)p(x)] 中的 p ( x ) p(x) p(x) 与 q ( x ) q(x) q(x) 两分布要尽可能像,否则效果不好。为了实现这个约束,需要在目标函数上加上一个约束条件。

- PPO 与 TRPO 的效果差不多,但是在实操时, 使用PPO算法 比 使用 TRPO 算法更容易操作。

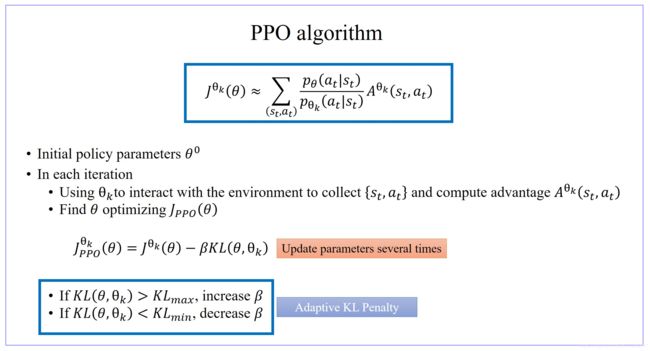

1、PPO (Proximal Policy Optimization)

J P P O ( θ ′ ) ( θ ) = J ( θ ′ ) ( θ ) − β K L ( θ , θ ′ ) = E ( s t , a t ) ∼ π θ ′ [ P θ ( a t ∣ s t ) P θ ′ ( a t ∣ s t ) A ( θ ′ ) ( s t , a t ) ] − β K L ( θ , θ ′ ) \begin{aligned} J^{(θ')}_{PPO}(θ)&=J^{(θ')}(θ)-βKL(θ,θ')\\ &=E_{(s_t,a_t)\sim π_{θ'}}\left[\cfrac{P_θ(a_t|s_t)}{P_{θ'}(a_t|s_t)}A^{(θ')}(s_t,a_t)\right]-βKL(θ,θ') \end{aligned} JPPO(θ′)(θ)=J(θ′)(θ)−βKL(θ,θ′)=E(st,at)∼πθ′[Pθ′(at∣st)Pθ(at∣st)A(θ′)(st,at)]−βKL(θ,θ′)

其中:

- K L ( θ , θ ′ ) KL(θ,θ') KL(θ,θ′) 用来衡量 θ θ θ 与 θ ′ θ' θ′ 的相似度。

- K L ( θ , θ ′ ) KL(θ,θ') KL(θ,θ′) 并不是表示 θ θ θ与 θ ′ θ' θ′分布的距离,而是表示采用 θ θ θ与 θ ′ θ' θ′这两个参数的两个Model的 Output 的 Action 的分布(即: π θ π_θ πθ与 π θ ′ π_{θ'} πθ′ 的分布) 的 KL-Divergence。

- 考虑的不是参数分布的KL-Divergence,而是利用各个参数产生的各个Action分布间的KL-Divergence,因为参数的变化与对应的Action的变化不一定完全一致。有时候你参数小小变了一下,它可能 output 的行为就差很多;或是参数变很多,但 output 的行为可能没什么改变。所以我们真正在意的是这个 actor 它的行为上的差距,而不是它们参数上的差距。

- 所以这里要注意一下,在做 PPO 的时候,所谓的 KL diversions 并不是参数的距离,而是 action 的距离。

2、TRPO (Trust Region Policy Optimization)

J T R P O ( θ ′ ) ( θ ) = E ( s t , a t ) ∼ π θ ′ [ P θ ( a t ∣ s t ) P θ ′ ( a t ∣ s t ) A ( θ ′ ) ( s t , a t ) ] K L ( π θ , π θ ′ ) < δ \begin{aligned} J^{(θ')}_{TRPO }(θ)=E_{(s_t,a_t)\sim π_{θ'}}\left[\cfrac{P_θ(a_t|s_t)}{P_{θ'}(a_t|s_t)}A^{(θ')}(s_t,a_t)\right]_{KL(π_θ,π_{θ'})<δ} \end{aligned} JTRPO(θ′)(θ)=E(st,at)∼πθ′[Pθ′(at∣st)Pθ(at∣st)A(θ′)(st,at)]KL(πθ,πθ′)<δ

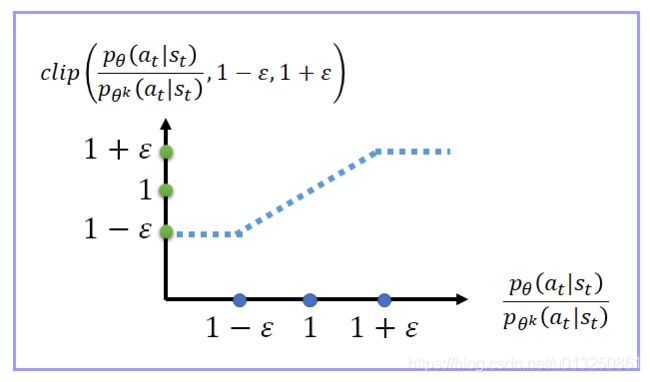

3、PPO2

J P P O 2 θ k ( θ ) ≈ ∑ ( s t , a t ) m i n { P θ ( a t ∣ s t ) P θ k ( a t ∣ s t ) A θ k ( s t , a t ) , c l i p [ P θ ( a t ∣ s t ) P θ k ( a t ∣ s t ) , 1 − ε , 1 + ε ] A θ k ( s t , a t ) } \begin{aligned} J^{θ_k}_{PPO2}(θ)≈\sum_{(s_t,a_t)}min\left\{\cfrac{P_θ(a_t|s_t)}{P_{θ_k}(a_t|s_t)}A^{θ_k}(s_t,a_t), clip\left[\cfrac{P_θ(a_t|s_t)}{P_{θ_k}(a_t|s_t)},1-ε,1+ε\right]A^{θ_k}(s_t,a_t)\right\} \end{aligned} JPPO2θk(θ)≈(st,at)∑min{Pθk(at∣st)Pθ(at∣st)Aθk(st,at),clip[Pθk(at∣st)Pθ(at∣st),1−ε,1+ε]Aθk(st,at)}

- PPO2算法的想法很直接,就是让 P θ P_θ Pθ 与 P θ k P_{θ_k} Pθk 不要差距太大。也就是你拿来与环境互动做 demonstration 的那个 model,跟实际上 learn 的 model 最后在 optimize 以后,不要差距太大。

- 如果 A ( θ ′ ) ( s t , a t ) > 0 A^{(θ')}(s_t,a_t)>0 A(θ′)(st,at)>0,也就意味着该 ( s t , a t ) (s_t,a_t) (st,at) 是好的,所以希望增加该 ( s t , a t ) (s_t,a_t) (st,at) 的机率,所以想让 p θ ( s t , a t ) p_θ(s_t,a_t) pθ(st,at) 越大越好。但是 p θ ( s t , a t ) p θ k ( s t , a t ) \cfrac{p_θ(s_t,a_t)}{p_{θ_k}(s_t,a_t)} pθk(st,at)pθ(st,at) 比值不可以超过 1 + ε 1+ ε 1+ε,如果超过 1 + ε 1+ ε 1+ε 的话, p θ ( s t , a t ) p_θ(s_t,a_t) pθ(st,at) 与 p θ k ( s t , a t ) p_{θ_k}(s_t,a_t) pθk(st,at) 的差距就太大,就没有 benefit 了。所以今天在 train 的时候, p θ ( s t , a t ) p_θ(s_t,a_t) pθ(st,at)只会被 train 到比 p θ k ( s t , a t ) p_{θ_k}(s_t,a_t) pθk(st,at) 大 1 + ε 1+ ε 1+ε 倍就会停止

- 如果 A ( θ ′ ) ( s t , a t ) < 0 A^{(θ')}(s_t,a_t)<0 A(θ′)(st,at)<0,也就意味着该 ( s t , a t ) (s_t,a_t) (st,at) 是坏的,所以希望减小该 ( s t , a t ) (s_t,a_t) (st,at) 的机率,所以想让 p θ ( s t , a t ) p_θ(s_t,a_t) pθ(st,at) 越小越好。但是 p θ ( s t , a t ) p θ k ( s t , a t ) \cfrac{p_θ(s_t,a_t)}{p_{θ_k}(s_t,a_t)} pθk(st,at)pθ(st,at) 比值不可以小于 1 − ε 1- ε 1−ε,如果超过 1 − ε 1- ε 1−ε 的话, p θ ( s t , a t ) p_θ(s_t,a_t) pθ(st,at) 与 p θ k ( s t , a t ) p_{θ_k}(s_t,a_t) pθk(st,at) 的差距就太大,就没有 benefit 了。所以今天在 train 的时候, p θ ( s t , a t ) p_θ(s_t,a_t) pθ(st,at)只会被 train 到比 p θ k ( s t , a t ) p_{θ_k}(s_t,a_t) pθk(st,at) 小 1 − ε 1- ε 1−ε 倍就会停止

4、PPO 跟其它方法的比較

在多数的 cases 里面,PPO 都是不错的,不是最好的,就是第二好的

参考资料:

深度增强学习PPO(Proximal Policy Optimization)算法源码走读

【RL系列】强化学习之On-Policy与Off-Policy

Sutton的Google硬盘

Proximal Policy Optimization Algorithms