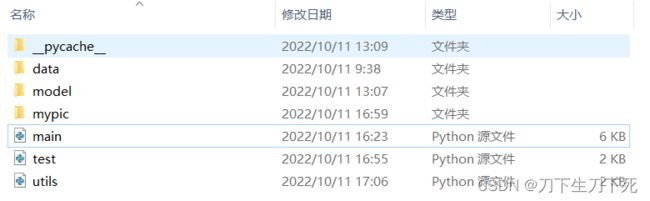

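Handwritten letter recognition: deep learning, PyTorch, linear layers, the EMNIST dataset, CUDA GPU training, testing with your own images, visualization, source code, detailed comments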

Adapted from the widely circulated training code for handwritten digit recognition.

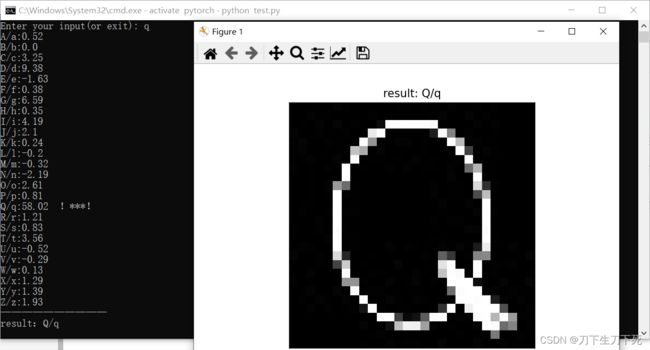

Result

main.py

# Neural-network packages
import torch
from torch import nn  # network building blocks
from torch.nn import functional as F  # common functions
from torch import optim  # optimizers
import torchvision  # computer vision
from torchvision import transforms

# Other packages
from matplotlib import pyplot as plt
from matplotlib.colors import Normalize
import random

# Local helper functions
from utils import plot_image, plot_curve, one_hot

# Hyperparameters
batch_size = 512  # number of images processed per batch
LR = 0.001  # learning rate

# 1) Load the EMNIST dataset
# The stored images were flipped horizontally and then rotated 90 degrees counter-clockwise (that is, transposed).
# To undo this, rotate 90 degrees clockwise and flip horizontally again.
# The transforms below reach the same result by rotating 90 degrees counter-clockwise and then flipping vertically.
train_loader = torch.utils.data.DataLoader(
    torchvision.datasets.EMNIST(
        './data',
        train=True,
        download=True,
        # ToTensor converts each image to a tensor in [0, 1];
        # Normalize then standardizes it so the values are spread around 0
        transform=torchvision.transforms.Compose([
            torchvision.transforms.ToTensor(),
            torchvision.transforms.Normalize((0.1307,), (0.3081,)),
            transforms.RandomRotation(degrees=(90, 90)),  # always rotate by exactly 90 degrees (counter-clockwise)
            transforms.RandomVerticalFlip(p=1),  # vertical flip (probability 1)
        ]),
        split="letters"  # use only the letters split
    ),
    batch_size=batch_size,  # batch size
    shuffle=True  # shuffle the data randomly
)

test_loader = torch.utils.data.DataLoader(
    torchvision.datasets.EMNIST(
        './data',
        train=False,
        download=True,
        transform=torchvision.transforms.Compose([
            torchvision.transforms.ToTensor(),
            torchvision.transforms.Normalize((0.1307,), (0.3081,)),
            transforms.RandomRotation(degrees=(90, 90)),
            transforms.RandomVerticalFlip(p=1)
        ]),
        split="letters"
    ),
    batch_size=batch_size,
    shuffle=True
)
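The orientation fix in the comments above can be checked without PyTorch. The following is a minimal sketch in plain Python, using nested lists as a stand-in for an image; the helper names (`rot90_ccw`, `rot90_cw`, `flip_vertical`, `flip_horizontal`, `transpose`) are made up for this illustration and are not torchvision functions. It suggests that both recipes, "rotate counter-clockwise, then flip vertically" and "rotate clockwise, then flip horizontally", amount to a transpose.

```python
def rot90_ccw(img):
    """Rotate a 2-D list of rows 90 degrees counter-clockwise."""
    n_cols = len(img[0])
    return [[row[n_cols - 1 - i] for row in img] for i in range(n_cols)]


def rot90_cw(img):
    """Rotate a 2-D list of rows 90 degrees clockwise."""
    n_rows, n_cols = len(img), len(img[0])
    return [[img[n_rows - 1 - j][i] for j in range(n_rows)] for i in range(n_cols)]


def flip_vertical(img):
    """Reverse the order of the rows (top becomes bottom)."""
    return [list(row) for row in img[::-1]]


def flip_horizontal(img):
    """Reverse each row (left becomes right)."""
    return [list(row[::-1]) for row in img]


def transpose(img):
    """Swap rows and columns."""
    return [list(col) for col in zip(*img)]


# A tiny non-square "image" so that orientation mistakes are visible
img = [[1, 2, 3],
       [4, 5, 6]]

via_ccw = flip_vertical(rot90_ccw(img))   # the order used by the transforms
via_cw = flip_horizontal(rot90_cw(img))   # the order described in the comment
print(via_ccw == via_cw == transpose(img))
```

Since a transpose is its own inverse, applying either recipe to a transposed image restores the original orientation.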

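# 2) Define the network
class Net(nn.Module):
    # Initialization
    def __init__(self):
        super(Net, self).__init__()
        # Linear layers; each one computes xw + b
        self.fc1 = nn.Linear(28*28, 256)  # the input size 28*28 is the number of pixels per image
        self.fc2 = nn.Linear(256, 64)
        self.fc3 = nn.Linear(64, 26)  # 26 outputs, one per letter class

    # Forward pass
    def forward(self, x):
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)  # no activation on the last layer; its output goes straight into the MSE loss
        return x

net = Net().cuda()  # create the network and move it to the GPU for training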
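To get a feel for the size of this network, the number of trainable parameters can be worked out by hand: a linear layer with `in_features` inputs and `out_features` outputs holds one weight per input-output pair plus one bias per output. The snippet below is a small sketch of that arithmetic; `linear_params` is a hypothetical helper written for this post, not a PyTorch function.

```python
def linear_params(in_features, out_features):
    """Weights (in * out) plus biases (out) of one fully connected layer."""
    return in_features * out_features + out_features


# Layer shapes taken from Net: 784 -> 256 -> 64 -> 26
layer_sizes = [(28 * 28, 256), (256, 64), (64, 26)]
per_layer = [linear_params(i, o) for i, o in layer_sizes]
total = sum(per_layer)

print(per_layer)  # [200960, 16448, 1690]
print(total)      # 219098
```

Almost all of the parameters sit in the first layer, because it connects every one of the 784 pixels to 256 hidden units.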

# General-purpose Adam optimizer; it updates the six parameter tensors [w1, b1, w2, b2, w3, b3]
optimizer = optim.Adam(net.parameters(), lr=LR, betas=(0.9, 0.999), eps=1e-08, weight_decay=0, amsgrad=False)

train_loss = []

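# 3) Train on the dataset (compute gradients, update parameters)
for epoch in range(25):  # 25 passes over the whole training set
    for batch_idx, (x, y) in enumerate(train_loader):  # fetch one batch at a time (512 images)
        # Input: x: [b, 1, 28, 28], y: [b]
        x = x.view(x.size(0), 28*28).cuda()  # flatten 4-D x to 2-D: [n, 1, 28, 28] -> [n, 1*28*28], same as reshape
        out = net(x)  # network output: [b, 26], one score per letter a-z
        y_onehot = one_hot(y).cuda()  # convert y to 26-dimensional one-hot vectors
        loss = F.mse_loss(out, y_onehot)  # loss: mean of (out - y_onehot)^2
        optimizer.zero_grad()  # clear the gradients (PyTorch accumulates them, so reset before every step)
        loss.backward()  # compute the gradients
        optimizer.step()  # update the parameters (the optimizer builds on gradient descent: w' = w - lr*grad)
        train_loss.append(loss.item())  # record the loss
        if batch_idx % 10 == 0:  # print progress every 10 batches
            print(epoch, batch_idx, loss.item())

    # 4) Measure accuracy on the test set
    total_correct = 0
    with torch.no_grad():  # no gradients are needed for evaluation
        for x, y in test_loader:
            x = x.view(x.size(0), 28*28).cuda()  # flatten and move to the GPU
            out = net(x)  # forward pass
            pred = out.argmax(dim=1) + 1  # out is [b, 26]; take the argmax along dim=1, +1 because labels run 1-26
            y = y.cuda()  # move y to the GPU as well
            correct = pred.eq(y).sum().float().item()  # number of correct predictions; item() turns the tensor into a Python number
            total_correct += correct  # accumulate the correct count
    total_num = len(test_loader.dataset)  # size of the test set
    acc = total_correct / total_num  # compute and print the accuracy
    print('test acc:', acc)

    # 5) Plot results and save the model (every 10 epochs)
    if epoch % 10 == 0 and epoch != 0:
        # Plot the loss curve
        plot_curve(train_loss)
        # Save the model (the ./model directory must already exist)
        filename = './model/model_' + str(epoch) + '.pkl'
        torch.save(net.state_dict(), filename)
        # Visualize predictions
        # Step through a random number (1-10) of batches and keep the last one
        a = iter(test_loader)
        for i in range(random.randint(1, 10)):
            x, y = next(a)
        # Run the batch through the network
        out = net(x.view(x.size(0), 28*28).cuda())
        pred = out.argmax(dim=1)  # 0-based index, matching the letters list in utils.py
        # Plot six consecutive samples starting at a random offset
        start = random.randint(1, 256)
        plot_image(x[start:start+6], pred[start:start+6], start)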
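One detail in the accuracy step is easy to miss: `argmax` returns a 0-based index, while the letter labels used here run from 1 to 26, hence the `+1`. Below is a minimal sketch of that bookkeeping in plain Python, with three classes instead of 26 to keep it short; `argmax` and `accuracy` are hypothetical helpers for this illustration, not the PyTorch calls used in main.py.

```python
def argmax(row):
    """Index of the largest value in a list (0-based)."""
    return max(range(len(row)), key=lambda i: row[i])


def accuracy(scores, labels):
    """Fraction of rows whose best-scoring class matches the 1-based label."""
    preds = [argmax(row) + 1 for row in scores]  # shift 0-based index to 1-based label
    correct = sum(p == y for p, y in zip(preds, labels))
    return correct / len(labels)


# Made-up scores for four samples and three classes
scores = [
    [0.9, 0.1, 0.0],
    [0.2, 0.7, 0.1],
    [0.1, 0.3, 0.6],
    [0.8, 0.1, 0.1],
]
labels = [1, 2, 3, 2]  # 1-based, like the letter labels

print(accuracy(scores, labels))  # 0.75
```

Without the shift, every prediction would be compared against a label one position too high and the reported accuracy would be close to zero.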

test.py

# Packages
import torch
from torch import nn  # network building blocks
from torch.nn import functional as F  # common functions
from torch import optim  # optimizers
import numpy as np
from matplotlib import pyplot as plt
from PIL import Image

# Network (same definition as in main.py)
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.fc1 = nn.Linear(28*28, 256)
        self.fc2 = nn.Linear(256, 64)
        self.fc3 = nn.Linear(64, 26)

    def forward(self, x):
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x

# Create the network and load the saved weights
net = Net().cuda()
net.load_state_dict(torch.load('./model/model_10.pkl'))

# Map class index to letter
letters = ["A/a","B/b","C/c","D/d","E/e","F/f","G/g","H/h","I/i","J/j","K/k","L/l","M/m","N/n","O/o","P/p","Q/q","R/r","S/s","T/t","U/u","V/v","W/w","X/x","Y/y","Z/z"]

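# Read images and classify them
while True:
    # Input: the file name (without extension) of an image in ./mypic
    getstr = input("Enter your input(or exit): ")
    if getstr == "exit":
        break
    # Read the image
    img = Image.open("./mypic/" + getstr + '.jpg')
    # Shrink the image to the network's input size
    img = img.resize((28, 28))
    img = img.convert('L')  # grayscale
    img_array = np.array(img)
    a = torch.FloatTensor(img_array.reshape(1, 784))  # convert to a tensor
    # Preprocess the image
    a = a / 255  # scale to [0, 1]
    a = (a - 0.1307) / 0.3081  # normalize with the same constants as in training
    # Run the network
    out = net(a.cuda())
    pred = out.argmax(dim=1)
    # Print the network output for every class (scaled by 100) and mark the prediction
    for i in range(26):
        print(str(letters[i]) + ":" + str(round(out.reshape(26)[i].item()*100, 2)) + (" !***! " if i == pred.item() else ""))
    print("-" * 10)
    print("result:" + letters[pred.item()])
    # Plot the image (normalization undone for display)
    plt.imshow(a.reshape(28, 28)*0.3081+0.1307, cmap='gray', interpolation='none')
    plt.title("{}: {}".format("result", letters[pred.item()]))
    plt.xticks([])
    plt.yticks([])
    plt.show()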
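The preprocessing in test.py and the `*0.3081+0.1307` in the plotting call are two halves of the same round trip: pixels are scaled to [0, 1] and standardized before they go into the network, and the standardization is undone before display. The following is a small sketch of that round trip in plain Python; `normalize` and `denormalize` are hypothetical helper names, and the two constants are the ones used throughout the code above.

```python
MEAN = 0.1307  # constants copied from the Normalize(...) call in main.py
STD = 0.3081


def normalize(pixel):
    """Map an 8-bit pixel value (0-255) to the standardized network input."""
    return (pixel / 255 - MEAN) / STD


def denormalize(value):
    """Undo the standardization, giving a value in [0, 1] for display."""
    return value * STD + MEAN


for pixel in (0, 128, 255):
    z = normalize(pixel)
    print(pixel, round(z, 4), round(denormalize(z), 4))
```

A black pixel ends up slightly below zero and a white pixel at roughly 2.8, which is the input range the network saw during training. An image that is not preprocessed this way will likely be classified poorly.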

utils.py

import torch
from matplotlib import pyplot as plt

# Plot the loss curve
def plot_curve(data):
    fig = plt.figure()
    plt.plot(range(len(data)), data, color='blue')
    plt.legend(['value'], loc='upper right')
    plt.xlabel('step')
    plt.ylabel('value')
    plt.show()

# Map class index to letter
letters = ["A/a","B/b","C/c","D/d","E/e","F/f","G/g","H/h","I/i","J/j","K/k","L/l","M/m","N/n","O/o","P/p","Q/q","R/r","S/s","T/t","U/u","V/v","W/w","X/x","Y/y","Z/z"]

# Plot six images from the test set
def plot_image(img, label, name):
    fig = plt.figure()
    for i in range(6):
        plt.subplot(2, 3, i + 1)
        plt.tight_layout()
        plt.imshow(img[i][0]*0.3081+0.1307, cmap='gray', interpolation='none')  # undo the normalization for display
        plt.title("{}: {}".format(str(int(name)+i), letters[label[i].item()]))
        plt.xticks([])
        plt.yticks([])
    plt.show()

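# One-hot encode the labels; here each label becomes a 26-dimensional vector
def one_hot(label, depth=26):
    out = torch.zeros(label.size(0), depth)
    idx = label.long().view(-1, 1) - 1  # labels run 1-26; shift them to column indices 0-25
    out.scatter_(dim=1, index=idx, value=1)
    return out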
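To make the encoding and the loss concrete, here is a plain-Python sketch of what `one_hot` and the MSE loss in main.py compute together. It assumes, as the code above does, that labels run from 1 to 26. `one_hot_rows` and `mse` are hypothetical names for this illustration, not the functions in utils.py or PyTorch.

```python
def one_hot_rows(labels, depth=26):
    """Turn 1-based labels into rows with a single 1.0 at column label - 1."""
    rows = []
    for y in labels:
        row = [0.0] * depth
        row[y - 1] = 1.0  # label 1 ('A/a') -> column 0, label 26 ('Z/z') -> column 25
        rows.append(row)
    return rows


def mse(pred, target):
    """Mean of the squared differences over every element of both tables."""
    diffs = [(p - t) ** 2
             for pred_row, target_row in zip(pred, target)
             for p, t in zip(pred_row, target_row)]
    return sum(diffs) / len(diffs)


target = one_hot_rows([1, 26])
print(target[0][:3], target[1][-3:])  # [1.0, 0.0, 0.0] [0.0, 0.0, 1.0]

# A network that outputs all zeros misses exactly one entry per row
all_zero = [[0.0] * 26 for _ in target]
print(mse(all_zero, target))  # 1/26, about 0.0385
```

Because the loss is averaged over all 26 outputs, even a network that predicts nothing starts at a small value (about 0.04), so the loss curve should be read relative to that baseline.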