Face Recognition Project

This project implements face recognition in three ways, combining OpenCV with deep learning.

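- 1. Face recognition with OpenCV
  - 1.1 Training the model
  - 1.2 Recognition from a USB camera
  - 1.3 Full code
- 2. OpenCV face detection + CNN face recognition
  - 2.1 Collecting faces to build the database
  - 2.2 Building a simple CNN to extract face features
  - 2.3 Face recognition from the camera
- 3. RetinaFace face detection + FaceNet face recognition
  - 3.1 RetinaFace face detection
  - 3.2 FaceNet face recognition
  - 3.3 Standardizing the network input format
  - 3.4 Face feature encoding
  - 3.5 Video recognition
  - 3.6 Face recognition
- 4. References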

1.使用opencv实现人脸识别

1.1训练模型

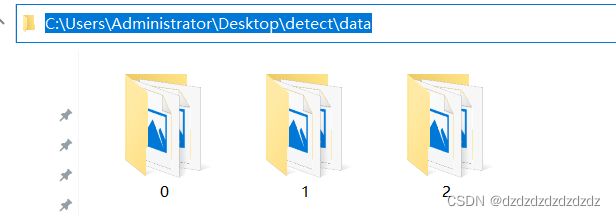

1.准备数据集,将不同人的照片放在不同的文件夹下,如下我在detect工程的目录下建了data文件夹,里面分别放了三个人的照片作为模型训练数据库。

2.利用opencv的人脸检测模型检测人脸,等下要把训练数据库里的人脸检出来,这里的xml文件要科学上网。

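def detect_face(img):
    # Convert to grayscale: the Haar detector and the LBPH recognizer both work on grayscale images
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # Load OpenCV's Haar cascade face detector
    face_cascade = cv2.CascadeClassifier('C:/Users/Administrator/Desktop/detect/haarcascade_frontalface_default.xml')
    # Multi-scale detection; returns a list of face rectangles (x, y, width, height)
    faces = face_cascade.detectMultiScale(gray, scaleFactor=1.2, minNeighbors=5)
    # If no face is detected, return (None, None)
    if len(faces) == 0:
        return None, None
    # Assume a single face for now; (x, y) is the top-left corner, w and h are the width and height
    (x, y, w, h) = faces[0]
    # Return the face crop (rows span the height, columns span the width) and its rectangle
    return gray[y:y+h, x:x+w], faces[0]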
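An image array is indexed as [row, column], so a detection rectangle (x, y, w, h) covers rows y to y+h and columns x to x+w. The sketch below shows this on a plain nested list; the helper name crop_rect is hypothetical, and with a NumPy image the same crop is written img[y:y+h, x:x+w].

```python
def crop_rect(image_rows, rect):
    """Crop a rectangle (x, y, w, h) from an image stored as a list of rows."""
    x, y, w, h = rect
    # Rows run along the height (y axis), columns along the width (x axis).
    return [row[x:x + w] for row in image_rows[y:y + h]]


# A 4-row by 6-column "image" whose pixel value encodes (row, column).
image = [[10 * r + c for c in range(6)] for r in range(4)]
face = crop_rect(image, (2, 1, 3, 2))  # x=2, y=1, width=3, height=2
print(face)  # [[12, 13, 14], [22, 23, 24]]
```

Swapping w and h in the slice goes unnoticed when the detector returns square boxes, but it crops the wrong region as soon as width and height differ.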

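3. Pair each face with its label to build the training data.

# Read every training image, detect the face in it, and return two lists of equal length: face crops and labels
def prepare_training_data(data_folder_path):
    # Each subdirectory of the data folder holds one person's images
    dirs = os.listdir(data_folder_path)
    # Two parallel lists: face crops and their labels
    faces = []
    labels = []
    # Walk each person's directory
    for dir_name in dirs:
        # The directory name (a str) is the label, so it must be an integer such as '0'
        label = int(dir_name)
        # Path of this person's image directory
        subject_dir_path = data_folder_path + '/' + dir_name
        # Names of the images in that directory
        subject_images_names = os.listdir(subject_dir_path)
        # Detect the face in each image and collect it
        for image_name in subject_images_names:
            image_path = subject_dir_path + '/' + image_name
            image = cv2.imread(image_path)
            # cv2.imread returns None for files it cannot decode; skip them
            if image is None:
                continue
            # Show the image for 50 ms
            cv2.imshow('Training on Image...', image)
            cv2.waitKey(50)
            # Detect the face
            face, rect = detect_face(image)
            # Skip images with no detected face; keep the rest
            if face is not None:
                faces.append(face)
                labels.append(label)
                print(str(image_path) + ' ' + str(label))
    cv2.waitKey(1)
    cv2.destroyAllWindows()
    # Return the face and label lists
    return faces, labels

4. Train OpenCV's LBPH recognizer on this data and save the model to face_recongnizer.xml.

# Build the training data
faces, labels = prepare_training_data('C:/Users/Administrator/Desktop/detect/data')
# Create the LBPH recognizer (cv2.face is part of the opencv-contrib-python package);
# the Eigen or Fisher recognizers can be used instead
face_recognizer = cv2.face.LBPHFaceRecognizer_create()
# Train once and save the result to an XML file so that later runs can simply load it.
# The XML file is not small: about 8 MB for 50-odd images that total about 6 MB,
# so expect it to grow with a larger training set
face_recognizer.train(faces, np.array(labels))
face_recognizer.save('C:/Users/Administrator/Desktop/detect/face_recongnizer.xml')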

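1.2 Recognition from a USB camera

1. Draw the bounding box and the label

# Draw a rectangle on the image from the given (x, y, w, h)
def draw_rect(img, rect):
    (x, y, w, h) = rect
    cv2.rectangle(img, (x, y), (x+w, y+h), (128, 128, 0), 2)

# Write the person's name at the given (x, y) position
def draw_text(img, text, x, y):
    cv2.putText(img, text, (x, y), cv2.FONT_HERSHEY_COMPLEX, 1, (128, 128, 0), 2)

# Map labels to names: the integer label is the index into this list
subjects = ['zgr', 'bjqs', 'klst']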

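2. Recognize and annotate an image

# Recognize the person in the image and draw a rectangle and the name around the detected face
def predict(test_img):
    # Work on a copy so the original image is not modified
    img = test_img.copy()
    # Detect the face
    face, rect = detect_face(img)
    # No face detected
    if face is None:
        return None
    # predict() returns a (label, confidence) pair; for LBPH a lower confidence value means a closer match
    label = face_recognizer.predict(face)
    print(label)
    # Look up the name for the predicted label
    label_text = subjects[label[0]]
    # Draw the rectangle and the recognized name
    draw_rect(img, rect)
    draw_text(img, label_text, rect[0], rect[1] - 5)
    # Return the annotated image
    return img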
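The LBPH recognizer always returns its nearest label, even for a face that is not in the training set, so the second value of the prediction (a distance, smaller is closer) can be used to reject weak matches. A minimal sketch, assuming a hypothetical helper name_for_prediction and an illustrative cut-off of 80.0 that would need tuning on real data:

```python
def name_for_prediction(prediction, subjects, max_distance=80.0):
    """Map an LBPH (label, distance) pair to a name, or 'unknown'.

    max_distance is an illustrative cut-off, not a recommended value.
    """
    label, distance = prediction
    # A smaller distance means a closer match to the training faces.
    if distance > max_distance:
        return 'unknown'
    # Guard against labels that have no entry in the name list.
    if not 0 <= label < len(subjects):
        return 'unknown'
    return subjects[label]


subjects = ['zgr', 'bjqs', 'klst']
print(name_for_prediction((1, 42.5), subjects))   # bjqs
print(name_for_prediction((1, 130.0), subjects))  # unknown
```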

3. Real-time recognition from the camera

#### Real-time recognition from the camera ####
def detect_Cap():
    # Open the camera
    cap = cv2.VideoCapture(0)
    # Grab a frame roughly every 0.5 s
    while True:
        ret, frame = cap.read()
        # Stop if the camera returns no frame
        if not ret:
            break
        print(frame.shape)
        # Run recognition; fall back to the raw frame when no face is found
        predicted_img1 = predict(frame)
        shown = predicted_img1 if predicted_img1 is not None else frame
        img_h, img_w = shown.shape[:2]
        # Fix the window height at 600 and keep the aspect ratio
        window_h = 600
        window_w = img_w * (600 / img_h)
        cv2.namedWindow('Image', cv2.WINDOW_NORMAL)
        # resizeWindow scales the displayed image up or down
        cv2.resizeWindow('Image', int(window_w), int(window_h))
        cv2.imshow('Image', shown)
        # Wait 0.5 s; press q to quit
        if cv2.waitKey(500) == ord('q'):
            break
    cap.release()
    cv2.destroyAllWindows()

4. Recognize a face in an image file

# Load a test image, e.g. 'f:/4.jpg'
def detect_Img(fileName):
    test_img1 = cv2.imread(fileName)
    # Run recognition
    predicted_img1 = predict(test_img1)
    # predict() returns None when no face is detected
    if predicted_img1 is None:
        print('No face detected in ' + fileName)
        return
    img_h, img_w = predicted_img1.shape[:2]
    # Fix the window height at 600 and keep the aspect ratio
    window_h = 600
    window_w = img_w * (600 / img_h)
    cv2.namedWindow('Image', cv2.WINDOW_NORMAL)
    # resizeWindow scales the displayed image up or down
    cv2.resizeWindow('Image', int(window_w), int(window_h))
    cv2.imshow('Image', predicted_img1)
    cv2.waitKey(0)
    cv2.destroyAllWindows()
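The window sizing used in both display functions fixes the height at 600 pixels and scales the width by the same factor. As a small sketch of that arithmetic (the function name fit_to_height is hypothetical):

```python
def fit_to_height(img_w, img_h, target_h=600):
    """Return (window_w, window_h) that keeps the image aspect ratio."""
    scale = target_h / img_h
    return int(img_w * scale), target_h


print(fit_to_height(640, 480))  # a 4:3 camera frame -> (800, 600)
```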

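5. Load the trained model and test it

face_recognizer = cv2.face.LBPHFaceRecognizer_create()
# Load the trained model; some OpenCV versions use .load() instead of .read()
face_recognizer.read('C:/Users/Administrator/Desktop/detect/face_recongnizer.xml')
# Recognize from the camera
detect_Cap()
# Recognize from an image file
# detect_Img('f:/13.jpg')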

1.3 Full code

import cv2
import os
import numpy as np

# Detect one face and return its grayscale crop and rectangle
def detect_face(img):
    # The Haar detector and the LBPH recognizer both work on grayscale images
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    face_cascade = cv2.CascadeClassifier('C:/Users/Administrator/Desktop/detect/haarcascade_frontalface_default.xml')
    # Returns a list of face rectangles (x, y, width, height)
    faces = face_cascade.detectMultiScale(gray, scaleFactor=1.2, minNeighbors=5)
    # If no face is detected, return (None, None)
    if len(faces) == 0:
        return None, None
    # Assume a single face; (x, y) is the top-left corner
    (x, y, w, h) = faces[0]
    return gray[y:y+h, x:x+w], faces[0]

# Read every training image and return two lists of equal length: face crops and labels
def prepare_training_data(data_folder_path):
    dirs = os.listdir(data_folder_path)
    faces = []
    labels = []
    for dir_name in dirs:
        # The directory name is the label, so it must be an integer such as '0'
        label = int(dir_name)
        subject_dir_path = data_folder_path + '/' + dir_name
        subject_images_names = os.listdir(subject_dir_path)
        for image_name in subject_images_names:
            image_path = subject_dir_path + '/' + image_name
            image = cv2.imread(image_path)
            # Skip files that cannot be decoded
            if image is None:
                continue
            # Show the image for 50 ms
            cv2.imshow('Training on Image...', image)
            cv2.waitKey(50)
            face, rect = detect_face(image)
            # Skip images with no detected face
            if face is not None:
                faces.append(face)
                labels.append(label)
                print(str(image_path) + ' ' + str(label))
    cv2.waitKey(1)
    cv2.destroyAllWindows()
    return faces, labels

# Draw a rectangle on the image from the given (x, y, w, h)
def draw_rect(img, rect):
    (x, y, w, h) = rect
    cv2.rectangle(img, (x, y), (x+w, y+h), (128, 128, 0), 2)

# Write the person's name at the given (x, y) position
def draw_text(img, text, x, y):
    cv2.putText(img, text, (x, y), cv2.FONT_HERSHEY_COMPLEX, 1, (128, 128, 0), 2)

# Map labels to names: the integer label is the index into this list
subjects = ['zgr', 'bjqs', 'klst']

# Recognize the person in the image and annotate the detected face
def predict(test_img):
    # Work on a copy so the original image is not modified
    img = test_img.copy()
    face, rect = detect_face(img)
    if face is None:
        return None
    # predict() returns (label, confidence); for LBPH a lower confidence value means a closer match
    label = face_recognizer.predict(face)
    print(label)
    label_text = subjects[label[0]]
    draw_rect(img, rect)
    draw_text(img, label_text, rect[0], rect[1] - 5)
    return img

# Show an image in a window 600 pixels high, keeping the aspect ratio
def show_image(img):
    img_h, img_w = img.shape[:2]
    window_h = 600
    window_w = img_w * (600 / img_h)
    cv2.namedWindow('Image', cv2.WINDOW_NORMAL)
    cv2.resizeWindow('Image', int(window_w), int(window_h))
    cv2.imshow('Image', img)

#### Real-time recognition from the camera ####
def detect_Cap():
    cap = cv2.VideoCapture(0)
    # Grab a frame roughly every 0.5 s
    while True:
        ret, frame = cap.read()
        if not ret:
            break
        print(frame.shape)
        # Fall back to the raw frame when no face is found
        predicted_img1 = predict(frame)
        show_image(predicted_img1 if predicted_img1 is not None else frame)
        # Wait 0.5 s; press q to quit
        if cv2.waitKey(500) == ord('q'):
            break
    cap.release()
    cv2.destroyAllWindows()

# Recognize a face in an image file, e.g. 'f:/4.jpg'
def detect_Img(fileName):
    test_img1 = cv2.imread(fileName)
    predicted_img1 = predict(test_img1)
    if predicted_img1 is None:
        print('No face detected in ' + fileName)
        return
    show_image(predicted_img1)
    cv2.waitKey(0)
    cv2.destroyAllWindows()

# Create the LBPH recognizer; the Eigen or Fisher recognizers can be used instead
face_recognizer = cv2.face.LBPHFaceRecognizer_create()
# First run: uncomment the next three lines to train and save the model.
# The XML file is about 8 MB for 50-odd images that total about 6 MB.
# faces, labels = prepare_training_data('C:/Users/Administrator/Desktop/detect/data')
# face_recognizer.train(faces, np.array(labels))
# face_recognizer.save('C:/Users/Administrator/Desktop/detect/face_recongnizer.xml')
# Later runs: load the saved model; some OpenCV versions use .load() instead of .read()
face_recognizer.read('C:/Users/Administrator/Desktop/detect/face_recongnizer.xml')
detect_Cap()
# detect_Img('f:/13.jpg')

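2. OpenCV face detection + CNN face recognition

2.1 Collecting faces to build the database

Use OpenCV's haarcascade_frontalface_default detector to find faces and save each person's face crops to that person's own folder.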

import cv2
import os

# 1. Create a folder for each person's face images
def CreateFolder(path):
    # Strip leading and trailing whitespace
    del_path_space = path.strip()
    # Strip a trailing '\'
    del_path_tail = del_path_space.rstrip('\\')
    # Create the folder only if it does not exist yet
    isexists = os.path.exists(del_path_tail)
    if not isexists:
        os.makedirs(del_path_tail)
        return True
    else:
        return False

# 2. Detect faces with OpenCV and save them to the person's folder
def CatchPICFromVideo(window_name, camera_idx, catch_pic_num, path_name):
    # Create the output folder if it does not exist
    CreateFolder(path_name)
    cv2.namedWindow(window_name)
    # The video source can be a saved video file or a USB camera
    cap = cv2.VideoCapture(camera_idx)
    # Load the face detection classifier
    classfier = cv2.CascadeClassifier("C:\\Users\\Administrator\\Desktop\\detect\\haarcascade_frontalface_default.xml")
    # Color of the box drawn around a detected face (OpenCV uses BGR order)
    color = (0, 255, 0)
    num = 0
    while cap.isOpened():
        ok, frame = cap.read()  # Read one frame
        if not ok:
            break
        grey = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)  # Convert the frame to grayscale
        # scaleFactor=1.2 is the scale step between detection passes; minNeighbors=2 is the
        # number of neighboring candidate rectangles a detection needs in order to be kept
        faceRects = classfier.detectMultiScale(grey, scaleFactor=1.2, minNeighbors=2, minSize=(32, 32))
        if len(faceRects) > 0:  # At least one face detected
            for faceRect in faceRects:  # Handle each face separately
                x, y, w, h = faceRect
                # Only save faces that are large enough in the frame
                if w > 200:
                    img_name = os.path.join(path_name, '%d.jpg' % num)
                    #image = frame[y - 10: y + h + 10, x - 10: x + w + 10]
                    image = grey[y:y+h, x:x+w]  # Save the grayscale face crop
                    cv2.imwrite(img_name, image)
                    num += 1
                    if num >= catch_pic_num:  # Stop once enough images are saved
                        break
                # Draw the box slightly larger than the detected face
                cv2.rectangle(frame, (x - 10, y - 10), (x + w + 10, y + h + 10), color, 2)
                # Show how many face images have been captured so far
                font = cv2.FONT_HERSHEY_SIMPLEX
                cv2.putText(frame, 'num:%d' % (num), (x + 30, y + 30), font, 1, (255, 0, 255), 4)
        # Stop once enough images are saved
        if num >= catch_pic_num:
            break
        # Show the frame
        cv2.imshow(window_name, frame)
        # Press q to stop capturing
        c = cv2.waitKey(10)
        if c & 0xFF == ord('q'):
            break
    # Release the camera and close all windows
    cap.release()
    cv2.destroyAllWindows()

# 3. Capture faces and save them to the database
while True:
    print("Enroll a new student? (y/n)")
    if input() == 'y':
        # Student name (use ASCII letters; Chinese characters tend to cause errors)
        new_user_name = input("Enter your name: ")
        print("Please look at the camera!")
        # More images per person improve accuracy but slow down training
        window_name = 'Face capture'  # Window title
        camera_id = 0  # Camera index
        images_num = 100  # Number of images to capture per person
        path = 'C:\\Users\\Administrator\\Desktop\\face_cnn\\pic\\' + new_user_name  # Where the images are saved
        CatchPICFromVideo(window_name, camera_id, images_num, path)
    else:
        break
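The capture code keeps a commented-out crop that adds a 10-pixel margin around the face. Near the image border that margin can produce a negative start index, which Python slicing interprets as counting from the end, so the crop comes out empty or wrong. A minimal sketch of clamping the enlarged box to the frame (the helper name expand_and_clamp is hypothetical):

```python
def expand_and_clamp(rect, margin, img_w, img_h):
    """Grow (x, y, w, h) by margin pixels and clamp it to the image bounds.

    Returns (x0, y0, x1, y1), suitable for image[y0:y1, x0:x1].
    """
    x, y, w, h = rect
    x0 = max(x - margin, 0)
    y0 = max(y - margin, 0)
    x1 = min(x + w + margin, img_w)
    y1 = min(y + h + margin, img_h)
    return x0, y0, x1, y1


# A face 5 pixels from the left edge of a 640x480 frame.
print(expand_and_clamp((5, 200, 220, 220), 10, 640, 480))  # (0, 190, 235, 430)
```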

2.2 Building a simple CNN to extract face features

Build a simple convolutional network to extract face features, train it, and save the trained model to an .h5 file.

import os
import random
import sys

import cv2
import numpy as np
from sklearn.model_selection import train_test_split
from keras.preprocessing.image import ImageDataGenerator
from keras.models import Sequential
from keras.layers import Dense, Dropout, Activation, Flatten
from keras.layers import Convolution2D, MaxPooling2D
# from keras.optimizers import SGD
from tensorflow.keras.optimizers import SGD
from keras.utils import np_utils
from keras.models import load_model
from keras import backend as K

################################################
# Read the face images to train on; only the image path needs to be given
################################################
IMAGE_SIZE = 64

# Pad the image to a square, then resize it to a fixed size
def resize_image(image, height=IMAGE_SIZE, width=IMAGE_SIZE):
    top, bottom, left, right = 0, 0, 0, 0
    # Image size
    h, w, _ = image.shape
    # The longer side sets the side length of the square
    longest_edge = max(h, w)
    # Work out how many pixels to add along the shorter side
    if h < longest_edge:
        dh = longest_edge - h
        top = dh // 2
        bottom = dh - top
    elif w < longest_edge:
        dw = longest_edge - w
        left = dw // 2
        right = dw - left
    # Padding color
    BLACK = [0, 0, 0]
    # Add a border so the image becomes square; cv2.BORDER_CONSTANT fills it with the color given in value
    constant_image = cv2.copyMakeBorder(image, top, bottom, left, right, cv2.BORDER_CONSTANT, value=BLACK)
    # cv2.resize takes the target size as (width, height)
    return cv2.resize(constant_image, (width, height))
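The padding step can be checked without OpenCV. The sketch below (the function name square_padding is hypothetical) computes the same four border widths: the shorter side is padded on both ends, with the odd pixel going to the bottom or right.

```python
def square_padding(h, w):
    """Return (top, bottom, left, right) padding that makes an h x w image square."""
    longest = max(h, w)
    dh, dw = longest - h, longest - w
    top, left = dh // 2, dw // 2
    return top, dh - top, left, dw - left


print(square_padding(100, 61))  # (0, 0, 19, 20)
```

Padding before resizing keeps the face's proportions; resizing a non-square crop straight to 64x64 would stretch it.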

# Read the data
images = []  # Images
labels = []  # Labels (the folder each image came from)

def read_path(path_name):
    for dir_item in os.listdir(path_name):
        full_path = os.path.join(path_name, dir_item)
        if os.path.isdir(full_path):
            read_path(full_path)
        else:
            # Only .jpg files are treated as face images
            if dir_item.endswith('.jpg'):
                image = cv2.imread(full_path)
                image = resize_image(image)
                images.append(image)
                labels.append(path_name)
    return images, labels

# Give each person's folder a unique integer label
def label_id(label, users, user_num):
    # Compare the folder's own name rather than a suffix, so 'ann' does not match 'joann'
    folder_name = os.path.basename(os.path.normpath(label))
    for i in range(user_num):
        if folder_name == users[i]:
            return i

# Load the data from the given location
def load_dataset(path_name):
    users = os.listdir(path_name)
    user_num = len(users)
    images, labels = read_path(path_name)
    images_np = np.array(images)
    # Every image folder gets one fixed, unique label
    labels_np = np.array([label_id(label, users, user_num) for label in labels])
    return images_np, labels_np
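Mapping a folder path to a label by suffix can pick the wrong person when one name ends with another. A small sketch of the pitfall and of matching on the last path component instead (the names 'ann' and 'joann' and the helper label_for_folder are made up for illustration):

```python
import os


def label_for_folder(folder_path, users):
    """Return the index of the folder's own name in users, or -1 if absent."""
    # normpath drops a trailing separator so basename is the last path component.
    name = os.path.basename(os.path.normpath(folder_path))
    return users.index(name) if name in users else -1


users = ['ann', 'joann']
folder = os.path.join('pic', 'joann')
print(folder.endswith(users[0]))        # True: suffix matching would pick 'ann'
print(label_for_folder(folder, users))  # 1
```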

class Dataset:
    def __init__(self, path_name):
        # Training set
        self.train_images = None
        self.train_labels = None
        # Validation set
        self.valid_images = None
        self.valid_labels = None
        # Test set (left as None: the test split below is disabled)
        self.test_images = None
        self.test_labels = None
        # Path the dataset is loaded from
        self.path_name = path_name
        # Number of classes (one folder per person)
        self.user_num = len(os.listdir(path_name))
        # Input shape in the dimension order used by the current backend
        self.input_shape = None

    # Load the dataset, split it into training and validation sets, and preprocess it
    def load(self, img_rows=IMAGE_SIZE, img_cols=IMAGE_SIZE, img_channels=3):
        # Number of classes
        nb_classes = self.user_num
        # Load the dataset into memory
        images, labels = load_dataset(self.path_name)
        # Hold out 30% of the images for validation
        train_images, valid_images, train_labels, valid_labels = train_test_split(
            images, labels, test_size=0.3, random_state=random.randint(0, 100))
        # _, test_images, _, test_labels = train_test_split(images, labels, test_size=0.5,
        #                                                   random_state=random.randint(0, 100))
        # With 'channels_first' the image data is ordered (channels, rows, cols), otherwise
        # (rows, cols, channels); reshape the data into the order the Keras backend expects
        if K.image_data_format() == 'channels_first':
            train_images = train_images.reshape(train_images.shape[0], img_channels, img_rows, img_cols)
            valid_images = valid_images.reshape(valid_images.shape[0], img_channels, img_rows, img_cols)
            self.input_shape = (img_channels, img_rows, img_cols)
        else:
            train_images = train_images.reshape(train_images.shape[0], img_rows, img_cols, img_channels)
            valid_images = valid_images.reshape(valid_images.shape[0], img_rows, img_cols, img_channels)
            self.input_shape = (img_rows, img_cols, img_channels)
        # Print the number of training and validation samples
        print(train_images.shape[0], 'train samples')
        print(valid_images.shape[0], 'valid samples')
        # The model uses categorical_crossentropy as its loss, so the integer labels are
        # one-hot encoded into vectors of length nb_classes
        train_labels = np_utils.to_categorical(train_labels, nb_classes)
        valid_labels = np_utils.to_categorical(valid_labels, nb_classes)
        # Convert the pixels to float so they can be normalized
        train_images = train_images.astype('float32')
        valid_images = valid_images.astype('float32')
        # Normalize pixel values to the range 0 to 1
        train_images /= 255
        valid_images /= 255
        self.train_images = train_images
        self.valid_images = valid_images
        self.train_labels = train_labels
        self.valid_labels = valid_labels
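The one-hot step turns each integer label into a vector with a single 1 at the label's index, which is the target format categorical_crossentropy expects. A plain-Python sketch of that encoding (to_one_hot is a hypothetical stand-in written for illustration, not the Keras function):

```python
def to_one_hot(labels, num_classes):
    """Turn integer class labels into one-hot rows."""
    rows = []
    for label in labels:
        row = [0.0] * num_classes
        row[label] = 1.0
        rows.append(row)
    return rows


print(to_one_hot([0, 2, 1], 3))
# [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
```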

# CNN model class
class Model:
    def __init__(self):
        self.model = None

    # Build the model
    def build_model(self, dataset, nb_classes=4):
        # Start from an empty Sequential model, to which layers are added in order
        self.model = Sequential()
        # Each add() appends one layer. The kernel size is passed as a tuple because the Keras 2
        # signature is Conv2D(filters, kernel_size, strides, ...), so a bare third 3 would be read
        # as the stride. The data format is left at the backend default to match dataset.input_shape.
        self.model.add(Convolution2D(32, (3, 3), padding='same',
                                     input_shape=dataset.input_shape))  # 1 2D convolution layer
        self.model.add(Activation('relu'))  # 2 activation layer
        self.model.add(Convolution2D(32, (3, 3), padding='same'))  # 3 2D convolution layer
        self.model.add(Activation('relu'))  # 4 activation layer
        self.model.add(MaxPooling2D(pool_size=(2, 2), padding='same'))  # 5 pooling layer
        self.model.add(Dropout(0.25))  # 6 Dropout layer
        self.model.add(Convolution2D(64, (3, 3), padding='same'))  # 7 2D convolution layer
        self.model.add(Activation('relu'))  # 8 activation layer
        self.model.add(Convolution2D(64, (3, 3), padding='same'))  # 9 2D convolution layer
        self.model.add(Activation('relu'))  # 10 activation layer
        self.model.add(MaxPooling2D(pool_size=(2, 2), padding='same'))  # 11 pooling layer
        self.model.add(Dropout(0.25))  # 12 Dropout layer
        self.model.add(Flatten())  # 13 Flatten layer
        self.model.add(Dense(512))  # 14 Dense (fully connected) layer
        self.model.add(Activation('relu'))  # 15 activation layer
        self.model.add(Dropout(0.5))  # 16 Dropout layer
        self.model.add(Dense(nb_classes))  # 17 Dense layer
        self.model.add(Activation('softmax'))  # 18 classification layer, outputs the final result
        # Print the model summary
        self.model.summary()

    # Train the model
    def train(self, dataset, batch_size=20, epochs=1000, data_augmentation=True):
        # Create an SGD optimizer with momentum
        sgd = SGD(lr=0.01, decay=1e-6,
                  momentum=0.9, nesterov=True)
        # Configure the model for training
        self.model.compile(loss='categorical_crossentropy',
                           optimizer=sgd,
                           metrics=['accuracy'])
        # Without data augmentation. Augmentation creates new training samples from the existing
        # ones by rotating, flipping, adding noise and so on, to enlarge the training set
        if not data_augmentation:
            self.model.fit(dataset.train_images,
                           dataset.train_labels,
                           batch_size=batch_size,
                           epochs=epochs,
                           validation_data=(dataset.valid_images, dataset.valid_labels),
                           shuffle=True)
        # With real-time data augmentation
        else:
            # datagen is a generator object: each call yields one batch of augmented data,
            # which saves memory
            datagen = ImageDataGenerator(
                featurewise_center=False,  # Whether to center the inputs over the dataset (mean 0)
                samplewise_center=False,  # Whether to set each sample's mean to 0
                featurewise_std_normalization=False,  # Whether to divide inputs by the dataset's standard deviation
                samplewise_std_normalization=False,  # Whether to divide each sample by its own standard deviation
                zca_whitening=False,  # Whether to apply ZCA whitening
                rotation_range=20,  # Random rotation range in degrees (0 to 180)
                width_shift_range=0.2,  # Random horizontal shift as a fraction of the image width (0 to 1)
                height_shift_range=0.2,  # Same, but vertical
                horizontal_flip=True,  # Whether to flip images horizontally at random
                vertical_flip=False)  # Whether to flip images vertically at random
            # Compute the dataset statistics needed for feature-wise normalization, ZCA whitening, etc.
            datagen.fit(dataset.train_images)
            # Train the model from the generator
            self.model.fit_generator(datagen.flow(dataset.train_images, dataset.train_labels,
                                                  batch_size=batch_size),
                                     epochs=epochs,
                                     validation_data=(dataset.valid_images, dataset.valid_labels))

    MODEL_PATH = './aggregate.face.model.h5'

    def save_model(self, file_path=MODEL_PATH):
        self.model.save(file_path)

    def load_model(self, file_path=MODEL_PATH):
        self.model = load_model(file_path)

    def evaluate(self, dataset):
        # Dataset.load() does not fill the test set, so evaluate on the validation set
        score = self.model.evaluate(dataset.valid_images, dataset.valid_labels, verbose=1)
        print("%s: %.2f%%" % (self.model.metrics_names[1], score[1] * 100))

    # Recognize a face
    def face_predict(self, image):
        # Again, the dimension order depends on the backend
        if K.image_data_format() == 'channels_first' and image.shape != (1, 3, IMAGE_SIZE, IMAGE_SIZE):
            image = resize_image(image)  # The size must match the training set: IMAGE_SIZE x IMAGE_SIZE
            image = image.reshape((1, 3, IMAGE_SIZE, IMAGE_SIZE))  # Unlike training, only one image is predicted here
        elif K.image_data_format() == 'channels_last' and image.shape != (1, IMAGE_SIZE, IMAGE_SIZE, 3):
            image = resize_image(image)
            image = image.reshape((1, IMAGE_SIZE, IMAGE_SIZE, 3))
        # Convert to float and normalize
        image = image.astype('float32')
        image /= 255
        # Probability of the input belonging to each class
        result_probability = self.model.predict(image)
        print(result_probability)
        # Accept the prediction only if the highest probability is at least 0.9
        if np.max(result_probability[0]) >= 0.9:
            return int(np.argmax(result_probability[0]))
        else:
            return -1

if __name__ == '__main__':
    user_num = len(os.listdir('C:\\Users\\Administrator\\Desktop\\face_cnn\\pic\\'))
    dataset = Dataset('C:\\Users\\Administrator\\Desktop\\face_cnn\\pic\\')
    dataset.load()
    model = Model()
    # Build the network with one output per person
    model.build_model(dataset, nb_classes=user_num)
    # Train and save the model
    model.train(dataset)
    os.makedirs('./model', exist_ok=True)
    model.save_model(file_path='./model/aggregate.face.model.h5')
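When gating a prediction on confidence, the threshold has to be compared with the highest class probability, not with the class index returned by argmax. A minimal sketch of that logic on plain lists (pick_class is a hypothetical helper; the 0.9 threshold is the one used in this section):

```python
def pick_class(probabilities, min_confidence=0.9):
    """Return the index of the most likely class, or -1 if it is not confident enough."""
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return best if probabilities[best] >= min_confidence else -1


print(pick_class([0.02, 0.95, 0.03]))  # 1
print(pick_class([0.40, 0.35, 0.25]))  # -1
print(pick_class([0.97, 0.02, 0.01]))  # 0
```

The last call is the case that goes wrong if the index is compared with 0.9: class 0 would always be rejected, and any other class would always be accepted.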

2.3 Face recognition from the camera

Open the camera, detect faces with OpenCV, feed each face to the model, and display the recognition result.

import cv2
import sys
import os
from face_train import Model

if __name__ == '__main__':
    # The script takes no arguments; the camera index is fixed to 0 below
    if len(sys.argv) != 1:
        print("Usage:%s\r\n" % (sys.argv[0]))
        sys.exit(0)
    # Load the model
    model = Model()
    model.load_model(file_path='./model/aggregate.face.model.h5')
    # Color of the box drawn around a face
    color = (0, 255, 0)
    # Capture the live video stream from the camera
    cap = cv2.VideoCapture(0)
    # Local path of the face detection classifier; load it once, outside the loop
    cascade_path = "C:\\Users\\Administrator\\Desktop\\detect\\haarcascade_frontalface_default.xml"
    cascade = cv2.CascadeClassifier(cascade_path)
    # Folder names are the people's names; the class index is the position in this list
    names = os.listdir('C:/Users/Administrator/Desktop/face_cnn/pic')
    # Detect and recognize faces in a loop
    while True:
        ret, frame = cap.read()  # Read one video frame
        if ret is True:
            # Convert to grayscale to reduce the computation
            frame_gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        else:
            continue
        # Find the regions that contain faces
        faceRects = cascade.detectMultiScale(frame_gray, scaleFactor=1.2, minNeighbors=2, minSize=(32, 32))
        if len(faceRects) > 0:
            for faceRect in faceRects:
                x, y, w, h = faceRect
                # Crop the face and ask the model who it is
                image = frame[y: y + h, x: x + w]
                faceID = model.face_predict(image)
                cv2.rectangle(frame, (x - 10, y - 10), (x + w + 10, y + h + 10), color, thickness=2)
                # Show the name, or 'None' when the model is not confident
                if 0 <= faceID < len(names):
                    text = names[faceID]
                else:
                    text = 'None '
                cv2.putText(frame, text,
                            (x + 30, y + 30),  # Position
                            cv2.FONT_HERSHEY_SIMPLEX,  # Font
                            1,  # Font scale
                            (255, 0, 255),  # Color
                            2)  # Line thickness
        cv2.imshow("login", frame)
        # Wait 10 ms for a key press
        k = cv2.waitKey(10)
        # Press q to leave the loop
        if k & 0xFF == ord('q'):
            break
    # Release the camera and close all windows
    cap.release()
    cv2.destroyAllWindows()

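3. RetinaFace face detection + FaceNet face recognition

The project files have been compressed and uploaded (https://download.csdn.net/download/weixin_38226321/82714303).

3.1 RetinaFace face detection

First create retinaface.py for face detection. It contains two backbone networks for feature extraction, ResNet50 and MobileNet, which can be selected with a parameter.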

#-------------------------------------------------------------#
# 1. ResNet50 backbone
#-------------------------------------------------------------#
from keras import layers
from keras.layers import (Activation, BatchNormalization, Conv2D, MaxPooling2D,
                          ZeroPadding2D)

# Residual block whose shortcut is the unchanged input
def identity_block(input_tensor, kernel_size, filters, stage, block):
    filters1, filters2, filters3 = filters
    conv_name_base = 'res' + str(stage) + block + '_branch'
    bn_name_base = 'bn' + str(stage) + block + '_branch'

    x = Conv2D(filters1, (1, 1), name=conv_name_base + '2a')(input_tensor)
    x = BatchNormalization(name=bn_name_base + '2a')(x)
    x = Activation('relu')(x)

    x = Conv2D(filters2, kernel_size, padding='same', name=conv_name_base + '2b')(x)
    x = BatchNormalization(name=bn_name_base + '2b')(x)
    x = Activation('relu')(x)

    x = Conv2D(filters3, (1, 1), name=conv_name_base + '2c')(x)
    x = BatchNormalization(name=bn_name_base + '2c')(x)

    x = layers.add([x, input_tensor])
    x = Activation('relu')(x)
    return x

# Residual block whose shortcut is a strided 1x1 convolution, used to change the shape
def conv_block(input_tensor, kernel_size, filters, stage, block, strides=(2, 2)):
    filters1, filters2, filters3 = filters
    conv_name_base = 'res' + str(stage) + block + '_branch'
    bn_name_base = 'bn' + str(stage) + block + '_branch'

    x = Conv2D(filters1, (1, 1), strides=strides,
               name=conv_name_base + '2a')(input_tensor)
    x = BatchNormalization(name=bn_name_base + '2a')(x)
    x = Activation('relu')(x)

    x = Conv2D(filters2, kernel_size, padding='same',
               name=conv_name_base + '2b')(x)
    x = BatchNormalization(name=bn_name_base + '2b')(x)
    x = Activation('relu')(x)

    x = Conv2D(filters3, (1, 1), name=conv_name_base + '2c')(x)
    x = BatchNormalization(name=bn_name_base + '2c')(x)

    shortcut = Conv2D(filters3, (1, 1), strides=strides,
                      name=conv_name_base + '1')(input_tensor)
    shortcut = BatchNormalization(name=bn_name_base + '1')(shortcut)

    x = layers.add([x, shortcut])
    x = Activation('relu')(x)
    return x

def ResNet50(inputs):
    img_input = inputs
    x = ZeroPadding2D((3, 3))(img_input)
    x = Conv2D(64, (7, 7), strides=(2, 2), name='conv1')(x)
    x = BatchNormalization(name='bn_conv1')(x)
    x = Activation('relu')(x)
    x = MaxPooling2D((3, 3), strides=(2, 2), padding="same")(x)

    x = conv_block(x, 3, [64, 64, 256], stage=2, block='a', strides=(1, 1))
    x = identity_block(x, 3, [64, 64, 256], stage=2, block='b')
    x = identity_block(x, 3, [64, 64, 256], stage=2, block='c')

    x = conv_block(x, 3, [128, 128, 512], stage=3, block='a')
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='b')
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='c')
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='d')
    feat1 = x

    x = conv_block(x, 3, [256, 256, 1024], stage=4, block='a')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='b')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='c')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='d')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='e')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='f')
    feat2 = x

    x = conv_block(x, 3, [512, 512, 2048], stage=5, block='a')
    x = identity_block(x, 3, [512, 512, 2048], stage=5, block='b')
    x = identity_block(x, 3, [512, 512, 2048], stage=5, block='c')
    feat3 = x

    return feat1, feat2, feat3

# 2. MobileNet backbone
from keras import backend as K
from keras.layers import (Activation, BatchNormalization, Conv2D,
                          DepthwiseConv2D)

def relu6(x):
    return K.relu(x, max_value=6)

#----------------------------------#
# Standard convolution block
#----------------------------------#
def _conv_block(inputs, filters, kernel=(3, 3), strides=(1, 1)):
    x = Conv2D(filters, kernel,
               padding='same',
               use_bias=False,
               strides=strides,
               name='conv1')(inputs)
    x = BatchNormalization(name='conv1_bn')(x)
    return Activation(relu6, name='conv1_relu')(x)

#----------------------------------#
# Depthwise separable convolution block
#----------------------------------#
def _depthwise_conv_block(inputs, pointwise_conv_filters,
                          depth_multiplier=1, strides=(1, 1), block_id=1):
    x = DepthwiseConv2D((3, 3),
                        padding='same',
                        depth_multiplier=depth_multiplier,
                        strides=strides,
                        use_bias=False,
                        name='conv_dw_%d' % block_id)(inputs)
    x = BatchNormalization(name='conv_dw_%d_bn' % block_id)(x)
    x = Activation(relu6, name='conv_dw_%d_relu' % block_id)(x)

    x = Conv2D(pointwise_conv_filters, (1, 1),
               padding='same',
               use_bias=False,
               strides=(1, 1),
               name='conv_pw_%d' % block_id)(x)
    x = BatchNormalization(name='conv_pw_%d_bn' % block_id)(x)
    return Activation(relu6, name='conv_pw_%d_relu' % block_id)(x)

def MobileNet(img_input, depth_multiplier=1):
    # 640,640,3 -> 320,320,8
    x = _conv_block(img_input, 8, strides=(2, 2))
    # 320,320,8 -> 320,320,16
    x = _depthwise_conv_block(x, 16, depth_multiplier, block_id=1)

    # 320,320,16 -> 160,160,32
    x = _depthwise_conv_block(x, 32, depth_multiplier, strides=(2, 2), block_id=2)
    x = _depthwise_conv_block(x, 32, depth_multiplier, block_id=3)

    # 160,160,32 -> 80,80,64
    x = _depthwise_conv_block(x, 64, depth_multiplier, strides=(2, 2), block_id=4)
    x = _depthwise_conv_block(x, 64, depth_multiplier, block_id=5)
    feat1 = x

    # 80,80,64 -> 40,40,128
    x = _depthwise_conv_block(x, 128, depth_multiplier, strides=(2, 2), block_id=6)
    x = _depthwise_conv_block(x, 128, depth_multiplier, block_id=7)
    x = _depthwise_conv_block(x, 128, depth_multiplier, block_id=8)
    x = _depthwise_conv_block(x, 128, depth_multiplier, block_id=9)
    x = _depthwise_conv_block(x, 128, depth_multiplier, block_id=10)
    x = _depthwise_conv_block(x, 128, depth_multiplier, block_id=11)
    feat2 = x

    # 40,40,128 -> 20,20,256
    x = _depthwise_conv_block(x, 256, depth_multiplier, strides=(2, 2), block_id=12)
    x = _depthwise_conv_block(x, 256, depth_multiplier, block_id=13)
    feat3 = x

    return feat1, feat2, feat3
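Both backbones return three feature maps taken after total downsampling factors of 8, 16 and 32, which is where the 80, 40 and 20 in the shape comments come from for a 640x640 input. A small sketch of that arithmetic, assuming a square input whose side is divisible by 32 (the function name is hypothetical):

```python
def feature_map_sizes(input_size, strides=(8, 16, 32)):
    """Side length of each backbone feature map for a square input."""
    return [input_size // s for s in strides]


print(feature_map_sizes(640))  # [80, 40, 20]
```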

# 3. RetinaFace network
import keras
import tensorflow as tf
from keras.layers import Activation, Add, Concatenate, Conv2D, Input, Reshape
from keras.layers.advanced_activations import LeakyReLU
from keras.layers.normalization import BatchNormalization
from keras.models import Model
from utils.utils import compose

# Resize the source feature map to the spatial size of the target (nearest neighbor)
class UpsampleLike(keras.layers.Layer):
    def call(self, inputs, **kwargs):
        source, target = inputs
        target_shape = keras.backend.shape(target)
        # tf.image.resize_images is the TensorFlow 1.x API
        return tf.image.resize_images(source, (target_shape[1], target_shape[2]), method=tf.image.ResizeMethod.NEAREST_NEIGHBOR, align_corners=False)

    def compute_output_shape(self, input_shape):
        return (input_shape[0][0],) + input_shape[1][1:3] + (input_shape[0][-1],)

#---------------------------------------------------#
#   Convolution block
#   Conv2D + BatchNormalization + LeakyReLU
#---------------------------------------------------#
def Conv2D_BN_Leaky(*args, **kwargs):
    # Take the optional 'leaky' keyword (LeakyReLU slope, default 0.1) out of kwargs
    # before the remaining arguments are passed to Conv2D
    leaky = kwargs.pop("leaky", 0.1)
    return compose(
        Conv2D(*args, **kwargs),
        BatchNormalization(),
        LeakyReLU(alpha=leaky))

#---------------------------------------------------#
#   Convolution block
#   Conv2D + BatchNormalization
#---------------------------------------------------#
def Conv2D_BN(*args, **kwargs):
    return compose(
        Conv2D(*args, **kwargs),
        BatchNormalization())

#---------------------------------------------------#
#   SSH module: enlarges the receptive field at multiple scales
#---------------------------------------------------#
def SSH(inputs, out_channel, leaky=0.1):
    # 3x3 convolution
    conv3X3 = Conv2D_BN(out_channel//2, kernel_size=3, strides=1, padding='same')(inputs)

    # Two stacked 3x3 convolutions stand in for a 5x5 convolution
    conv5X5_1 = Conv2D_BN_Leaky(out_channel//4, kernel_size=3, strides=1, padding='same', leaky=leaky)(inputs)
    conv5X5 = Conv2D_BN(out_channel//4, kernel_size=3, strides=1, padding='same')(conv5X5_1)

    # Three stacked 3x3 convolutions stand in for a 7x7 convolution
    conv7X7_2 = Conv2D_BN_Leaky(out_channel//4, kernel_size=3, strides=1, padding='same', leaky=leaky)(conv5X5_1)
    conv7X7 = Conv2D_BN(out_channel//4, kernel_size=3, strides=1, padding='same')(conv7X7_2)

    # Concatenate all branches along the channel axis
    out = Concatenate(axis=-1)([conv3X3, conv5X5, conv7X7])
    out = Activation("relu")(out)
    return out
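The three SSH branches split the output channels as one half plus two quarters, so the concatenated result has out_channel channels again. A quick check of that split, assuming out_channel is divisible by 4 (the helper name is hypothetical):

```python
def ssh_branch_channels(out_channel):
    """Channels of the 3x3, 5x5-equivalent and 7x7-equivalent SSH branches."""
    return out_channel // 2, out_channel // 4, out_channel // 4


branches = ssh_branch_channels(64)
print(branches, sum(branches))  # (32, 16, 16) 64
```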

#---------------------------------------------------#
#   Classification head (face or not)
#---------------------------------------------------#
def ClassHead(inputs, num_anchors=2):
    outputs = Conv2D(num_anchors*2, kernel_size=1, strides=1)(inputs)
    return Activation("softmax")(Reshape([-1,2])(outputs))

#---------------------------------------------------#
#   Bounding box regression head
#---------------------------------------------------#
def BboxHead(inputs, num_anchors=2):
    outputs = Conv2D(num_anchors*4, kernel_size=1, strides=1)(inputs)
    return Reshape([-1,4])(outputs)

#---------------------------------------------------#
#   Facial landmark head (5 points, x and y each)
#---------------------------------------------------#
def LandmarkHead(inputs, num_anchors=2):
    outputs = Conv2D(num_anchors*5*2, kernel_size=1, strides=1)(inputs)
    return Reshape([-1,10])(outputs)
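Each head predicts num_anchors results per feature-map cell and reshapes them into one row per anchor, so the number of rows after the three scales are joined is the total anchor count. A sketch of that count for a square input, assuming the 8, 16 and 32 strides of the backbones above and an input side divisible by 32 (the function name is hypothetical):

```python
def total_anchors(input_size, strides=(8, 16, 32), num_anchors=2):
    """Number of anchors over all feature maps for a square input."""
    return sum((input_size // s) ** 2 * num_anchors for s in strides)


n = total_anchors(640)
print(n)  # 16800
# Per-image output shapes: class (n, 2), box (n, 4), landmarks (n, 10)
```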

def RetinaFace(cfg, backbone="mobilenet"):
    inputs = Input(shape=(None, None, 3))
    #-------------------------------------------#
    #   Get three effective feature maps
    #   with shapes:  C3  80, 80, 64
    #                 C4  40, 40, 128
    #                 C5  20, 20, 256
    #-------------------------------------------#
    if backbone == "mobilenet":
        C3, C4, C5 = MobileNet(inputs)
    elif backbone == "resnet50":
        C3, C4, C5 = ResNet50(inputs)
    else:
        raise ValueError('Unsupported backbone - `{}`, Use mobilenet, resnet50.'.format(backbone))

    # LeakyReLU slope: 0.1 for narrow heads (out_channel <= 64), otherwise 0
    leaky = 0
    if (cfg['out_channel'] <= 64):
        leaky = 0.1
    #-------------------------------------------#
    #   Project the three feature maps to
    #   out_channel channels with 1x1 convolutions:
    #                 P3  80, 80, 64
    #                 P4  40, 40, 64
    #                 P5  20, 20, 64
    #-------------------------------------------#
    P3 = Conv2D_BN_Leaky(cfg['out_channel'], kernel_size=1, strides=1, padding='same', name='C3_reduced', leaky=leaky)(C3)
    P4 = Conv2D_BN_Leaky(cfg['out_channel'], kernel_size=1, strides=1, padding='same', name='C4_reduced', leaky=leaky)(C4)
    P5 = Conv2D_BN_Leaky(cfg['out_channel'], kernel_size=1, strides=1, padding='same', name='C5_reduced', leaky=leaky)(C5)

    #-------------------------------------------#
    #   Upsample P5 and fuse it with P4
    #   P4  40, 40, 64
    #-------------------------------------------#
    P5_upsampled = UpsampleLike(name='P5_upsampled')([P5, P4])
    P4 = Add(name='P4_merged')([P5_upsampled, P4])
    P4 = Conv2D_BN_Leaky(cfg['out_channel'], kernel_size=3, strides=1, padding='same', name='Conv_P4_merged', leaky=leaky)(P4)

    #-------------------------------------------#
    #   Upsample P4 and fuse it with P3
    #   P3  80, 80, 64
    #-------------------------------------------#
    P4_upsampled = UpsampleLike(name='P4_upsampled')([P4, P3])
    P3 = Add(name='P3_merged')([P4_upsampled, P3])
    P3 = Conv2D_BN_Leaky(cfg['out_channel'], kernel_size=3, strides=1, padding='same', name='Conv_P3_merged', leaky=leaky)(P3)

    SSH1 = SSH(P3, cfg['out_channel'], leaky=leaky)
    SSH2 = SSH(P4, cfg['out_channel'], leaky=leaky)
    SSH3 = SSH(P5, cfg['out_channel'], leaky=leaky)

    SSH_all = [SSH1,SSH2,SSH3]
    #-------------------------------------------#
    #   Concatenate the head outputs of all three scales
    #-------------------------------------------#
    bbox_regressions = Concatenate(axis=1,name="bbox_reg")([BboxHead(feature) for feature in SSH_all])
    classifications = Concatenate(axis=1,name="cls")([ClassHead(feature) for feature in SSH_all])
    ldm_regressions = Concatenate(axis=1,name="ldm_reg")([LandmarkHead(feature) for feature in SSH_all])

    output = [bbox_regressions, classifications, ldm_regressions]

    model = Model(inputs=inputs, outputs=output)
    return model
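As a sanity check on the head shapes, the short sketch below counts how many anchors the three scales produce in total. It assumes a 640x640 input, feature-map strides of 8, 16 and 32 (which match the 80/40/20 sizes in the comments) and `num_anchors=2`; the helper name `count_anchors` is made up for this illustration and is not part of the project.

```python
import math

def count_anchors(input_size=640, strides=(8, 16, 32), num_anchors=2):
    """Total number of anchors over all feature maps (hypothetical helper)."""
    total = 0
    for stride in strides:
        side = math.ceil(input_size / stride)   # square feature map: side x side
        total += side * side * num_anchors
    return total

total = count_anchors()
# Second dimension of each concatenated head output:
# bbox_reg -> (total, 4), cls -> (total, 2), ldm_reg -> (total, 10)
print(total)  # 16800
```

With these assumptions, each of the three outputs has 16800 rows per image, one per anchor.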

3.2 FaceNet face recognition

Create facenet.py, which predicts the face feature vectors used for recognition. Two backbones are available: mobilenet and inception_resnetv1.

#1. Inception-ResNet-v1 backbone
from functools import partial

from keras import backend as K
from keras.layers import (Activation, BatchNormalization, Concatenate, Conv2D,
                          Dense, Dropout, GlobalAveragePooling2D, Lambda,
                          MaxPooling2D, add)
from keras.models import Model


def scaling(x, scale):
    # Scale the residual branch before it is added back to the input
    return x * scale

def _generate_layer_name(name, branch_idx=None, prefix=None):
    # Build names such as "Block35_1_Branch_0_Conv2d_1x1"
    if prefix is None:
        return None
    if branch_idx is None:
        return '_'.join((prefix, name))
    return '_'.join((prefix, 'Branch', str(branch_idx), name))

def conv2d_bn(x,filters,kernel_size,strides=1,padding='same',activation='relu',use_bias=False,name=None):
    x = Conv2D(filters,
               kernel_size,
               strides=strides,
               padding=padding,
               use_bias=use_bias,
               name=name)(x)
    # Batch normalization is applied only when the convolution has no bias
    if not use_bias:
        x = BatchNormalization(axis=3, momentum=0.995, epsilon=0.001,
                               scale=False, name=_generate_layer_name('BatchNorm', prefix=name))(x)
    if activation is not None:
        x = Activation(activation, name=_generate_layer_name('Activation', prefix=name))(x)
    return x

def _inception_resnet_block(x, scale, block_type, block_idx, activation='relu'):
    channel_axis = 3
    if block_idx is None:
        prefix = None
    else:
        prefix = '_'.join((block_type, str(block_idx)))
    name_fmt = partial(_generate_layer_name, prefix=prefix)

    if block_type == 'Block35':
        branch_0 = conv2d_bn(x, 32, 1, name=name_fmt('Conv2d_1x1', 0))
        branch_1 = conv2d_bn(x, 32, 1, name=name_fmt('Conv2d_0a_1x1', 1))
        branch_1 = conv2d_bn(branch_1, 32, 3, name=name_fmt('Conv2d_0b_3x3', 1))
        branch_2 = conv2d_bn(x, 32, 1, name=name_fmt('Conv2d_0a_1x1', 2))
        branch_2 = conv2d_bn(branch_2, 32, 3, name=name_fmt('Conv2d_0b_3x3', 2))
        branch_2 = conv2d_bn(branch_2, 32, 3, name=name_fmt('Conv2d_0c_3x3', 2))
        branches = [branch_0, branch_1, branch_2]
    elif block_type == 'Block17':
        branch_0 = conv2d_bn(x, 128, 1, name=name_fmt('Conv2d_1x1', 0))
        branch_1 = conv2d_bn(x, 128, 1, name=name_fmt('Conv2d_0a_1x1', 1))
        branch_1 = conv2d_bn(branch_1, 128, [1, 7], name=name_fmt('Conv2d_0b_1x7', 1))
        branch_1 = conv2d_bn(branch_1, 128, [7, 1], name=name_fmt('Conv2d_0c_7x1', 1))
        branches = [branch_0, branch_1]
    elif block_type == 'Block8':
        branch_0 = conv2d_bn(x, 192, 1, name=name_fmt('Conv2d_1x1', 0))
        branch_1 = conv2d_bn(x, 192, 1, name=name_fmt('Conv2d_0a_1x1', 1))
        branch_1 = conv2d_bn(branch_1, 192, [1, 3], name=name_fmt('Conv2d_0b_1x3', 1))
        branch_1 = conv2d_bn(branch_1, 192, [3, 1], name=name_fmt('Conv2d_0c_3x1', 1))
        branches = [branch_0, branch_1]
    else:
        # Without this branch an unknown block_type fails later with a NameError on `branches`
        raise ValueError('Unknown block_type - `{}`, Use Block35, Block17, Block8.'.format(block_type))

    # Merge the branches, project back to the input depth, scale, and add the residual
    mixed = Concatenate(axis=channel_axis, name=name_fmt('Concatenate'))(branches)
    up = conv2d_bn(mixed,K.int_shape(x)[channel_axis],1,activation=None,use_bias=True,
                   name=name_fmt('Conv2d_1x1'))
    up = Lambda(scaling,
                output_shape=K.int_shape(up)[1:],
                arguments={'scale': scale})(up)
    x = add([x, up])
    if activation is not None:
        x = Activation(activation, name=name_fmt('Activation'))(x)
    return x

def InceptionResNetV1(inputs,
                      embedding_size=128,
                      dropout_keep_prob=0.4):
    channel_axis = 3
    # 160,160,3 -> 77,77,64
    x = conv2d_bn(inputs, 32, 3, strides=2, padding='valid', name='Conv2d_1a_3x3')
    x = conv2d_bn(x, 32, 3, padding='valid', name='Conv2d_2a_3x3')
    x = conv2d_bn(x, 64, 3, name='Conv2d_2b_3x3')
    # 77,77,64 -> 38,38,64
    x = MaxPooling2D(3, strides=2, name='MaxPool_3a_3x3')(x)

    # 38,38,64 -> 17,17,256
    x = conv2d_bn(x, 80, 1, padding='valid', name='Conv2d_3b_1x1')
    x = conv2d_bn(x, 192, 3, padding='valid', name='Conv2d_4a_3x3')
    x = conv2d_bn(x, 256, 3, strides=2, padding='valid', name='Conv2d_4b_3x3')

    # 5x Block35 (Inception-ResNet-A block):
    for block_idx in range(1, 6):
        x = _inception_resnet_block(x,scale=0.17,block_type='Block35',block_idx=block_idx)

    # Reduction-A block:
    # 17,17,256 -> 8,8,896
    name_fmt = partial(_generate_layer_name, prefix='Mixed_6a')
    branch_0 = conv2d_bn(x, 384, 3,strides=2,padding='valid',name=name_fmt('Conv2d_1a_3x3', 0))
    branch_1 = conv2d_bn(x, 192, 1, name=name_fmt('Conv2d_0a_1x1', 1))
    branch_1 = conv2d_bn(branch_1, 192, 3, name=name_fmt('Conv2d_0b_3x3', 1))
    branch_1 = conv2d_bn(branch_1, 256, 3,strides=2,padding='valid',name=name_fmt('Conv2d_1a_3x3', 1))
    branch_pool = MaxPooling2D(3,strides=2,padding='valid',name=name_fmt('MaxPool_1a_3x3', 2))(x)
    branches = [branch_0, branch_1, branch_pool]
    x = Concatenate(axis=channel_axis, name='Mixed_6a')(branches)

    # 10x Block17 (Inception-ResNet-B block):
    for block_idx in range(1, 11):
        x = _inception_resnet_block(x,
                                    scale=0.1,
                                    block_type='Block17',
                                    block_idx=block_idx)

    # Reduction-B block
    # 8,8,896 -> 3,3,1792
    name_fmt = partial(_generate_layer_name, prefix='Mixed_7a')
    branch_0 = conv2d_bn(x, 256, 1, name=name_fmt('Conv2d_0a_1x1', 0))
    branch_0 = conv2d_bn(branch_0,384,3,strides=2,padding='valid',name=name_fmt('Conv2d_1a_3x3', 0))
    branch_1 = conv2d_bn(x, 256, 1, name=name_fmt('Conv2d_0a_1x1', 1))
    branch_1 = conv2d_bn(branch_1,256,3,strides=2,padding='valid',name=name_fmt('Conv2d_1a_3x3', 1))
    branch_2 = conv2d_bn(x, 256, 1, name=name_fmt('Conv2d_0a_1x1', 2))
    branch_2 = conv2d_bn(branch_2, 256, 3, name=name_fmt('Conv2d_0b_3x3', 2))
    branch_2 = conv2d_bn(branch_2,256,3,strides=2,padding='valid',name=name_fmt('Conv2d_1a_3x3', 2))
    branch_pool = MaxPooling2D(3,strides=2,padding='valid',name=name_fmt('MaxPool_1a_3x3', 3))(x)
    branches = [branch_0, branch_1, branch_2, branch_pool]
    x = Concatenate(axis=channel_axis, name='Mixed_7a')(branches)

    # 5x Block8 (Inception-ResNet-C block):
    for block_idx in range(1, 6):
        x = _inception_resnet_block(x,
                                    scale=0.2,
                                    block_type='Block8',
                                    block_idx=block_idx)
    x = _inception_resnet_block(x,scale=1.,activation=None,block_type='Block8',block_idx=6)

    # Global average pooling
    x = GlobalAveragePooling2D(name='AvgPool')(x)
    x = Dropout(1.0 - dropout_keep_prob, name='Dropout')(x)
    # Fully connected layer down to embedding_size (128 by default)
    x = Dense(embedding_size, use_bias=False, name='Bottleneck')(x)
    bn_name = _generate_layer_name('BatchNorm', prefix='Bottleneck')
    x = BatchNormalization(momentum=0.995, epsilon=0.001, scale=False,
                           name=bn_name)(x)

    # Build the model
    model = Model(inputs, x, name='inception_resnet_v1')

    return model
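The shape comments in the Inception-ResNet-v1 listing (160 -> 77 -> 38 -> 17 -> 8 -> 3) can be checked with a few lines of arithmetic. The sketch below applies the usual Keras output-size rules for 'valid' and 'same' padding; `conv_out` and `trace_sizes` are hypothetical helpers written for this check, and the layer list is transcribed from the listing above.

```python
def conv_out(size, kernel, stride=1, padding="valid"):
    """Output height/width of a conv or pooling layer under Keras padding rules."""
    if padding == "same":
        return -(-size // stride)          # ceil(size / stride)
    return (size - kernel) // stride + 1   # "valid": no padding

def trace_sizes(size=160):
    """Follow the spatial size through the stem and both reduction blocks."""
    layers = [
        # (name, kernel, stride, padding)
        ("Conv2d_1a_3x3", 3, 2, "valid"),
        ("Conv2d_2a_3x3", 3, 1, "valid"),
        ("Conv2d_2b_3x3", 3, 1, "same"),
        ("MaxPool_3a_3x3", 3, 2, "valid"),
        ("Conv2d_3b_1x1", 1, 1, "valid"),
        ("Conv2d_4a_3x3", 3, 1, "valid"),
        ("Conv2d_4b_3x3", 3, 2, "valid"),
        ("Mixed_6a", 3, 2, "valid"),   # Reduction-A
        ("Mixed_7a", 3, 2, "valid"),   # Reduction-B
    ]
    sizes = []
    for name, kernel, stride, padding in layers:
        size = conv_out(size, kernel, stride, padding)
        sizes.append((name, size))
    return sizes

for name, size in trace_sizes():
    print(name, size)
```

The residual blocks (Block35, Block17, Block8) use 'same' padding with stride 1, so they leave the spatial size unchanged and are omitted from the trace.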

#2. MobileNet backbone
from keras import backend as K
# BatchNormalization is imported from keras.layers here; the
# keras.layers.normalization path fails to import on some Keras releases.
from keras.layers import (Activation, BatchNormalization, Conv2D, Dense,
                          DepthwiseConv2D, Dropout, GlobalAveragePooling2D)
from keras.models import Model


def _conv_block(inputs, filters, kernel=(3, 3), strides=(1, 1)):
    # Standard convolution + BatchNorm + ReLU6
    x = Conv2D(filters, kernel,
               padding='same',
               use_bias=False,
               strides=strides,
               name='conv1')(inputs)
    x = BatchNormalization(name='conv1_bn')(x)
    return Activation(relu6, name='conv1_relu')(x)

def _depthwise_conv_block(inputs, pointwise_conv_filters, depth_multiplier=1, strides=(1, 1), block_id=1):
    # Depthwise 3x3 convolution: one filter per input channel
    x = DepthwiseConv2D((3, 3),
                        padding='same',
                        depth_multiplier=depth_multiplier,
                        strides=strides,
                        use_bias=False,
                        name='conv_dw_%d' % block_id)(inputs)
    x = BatchNormalization(name='conv_dw_%d_bn' % block_id)(x)
    x = Activation(relu6, name='conv_dw_%d_relu' % block_id)(x)

    # Pointwise 1x1 convolution: mixes channels
    x = Conv2D(pointwise_conv_filters, (1, 1),
               padding='same',
               use_bias=False,
               strides=(1, 1),
               name='conv_pw_%d' % block_id)(x)
    x = BatchNormalization(name='conv_pw_%d_bn' % block_id)(x)
    return Activation(relu6, name='conv_pw_%d_relu' % block_id)(x)

def relu6(x):
    # ReLU clipped at 6
    return K.relu(x, max_value=6)

def MobileNet(inputs, embedding_size=128, dropout_keep_prob=0.4, alpha=1.0, depth_multiplier=1):
    # 160,160,3 -> 80,80,32
    x = _conv_block(inputs, 32, strides=(2, 2))

    # 80,80,32 -> 80,80,64
    x = _depthwise_conv_block(x, 64, depth_multiplier, block_id=1)

    # 80,80,64 -> 40,40,128
    x = _depthwise_conv_block(x, 128, depth_multiplier, strides=(2, 2), block_id=2)
    x = _depthwise_conv_block(x, 128, depth_multiplier, block_id=3)

    # 40,40,128 -> 20,20,256
    x = _depthwise_conv_block(x, 256, depth_multiplier, strides=(2, 2), block_id=4)
    x = _depthwise_conv_block(x, 256, depth_multiplier, block_id=5)

    # 20,20,256 -> 10,10,512
    x = _depthwise_conv_block(x, 512, depth_multiplier, strides=(2, 2), block_id=6)
    x = _depthwise_conv_block(x, 512, depth_multiplier, block_id=7)
    x = _depthwise_conv_block(x, 512, depth_multiplier, block_id=8)
    x = _depthwise_conv_block(x, 512, depth_multiplier, block_id=9)
    x = _depthwise_conv_block(x, 512, depth_multiplier, block_id=10)
    x = _depthwise_conv_block(x, 512, depth_multiplier, block_id=11)

    # 10,10,512 -> 5,5,1024
    x = _depthwise_conv_block(x, 1024, depth_multiplier, strides=(2, 2), block_id=12)
    x = _depthwise_conv_block(x, 1024, depth_multiplier, block_id=13)

    # Global average pooling -> 1024
    x = GlobalAveragePooling2D()(x)
    # Dropout reduces overfitting; it is only active during training
    x = Dropout(1.0 - dropout_keep_prob, name='Dropout')(x)
    # Fully connected layer down to embedding_size (128 by default)
    x = Dense(embedding_size, use_bias=False, name='Bottleneck')(x)
    x = BatchNormalization(momentum=0.995, epsilon=0.001, scale=False,
                           name='BatchNorm_Bottleneck')(x)

    # Build the model
    model = Model(inputs, x, name='mobilenet')

    return model
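To see why the MobileNet backbone is lighter than a plain convolutional stack, the sketch below compares the weight count of a standard 3x3 convolution with that of a depthwise 3x3 plus pointwise 1x1 pair, as used in `_depthwise_conv_block`. Biases are left out because the listing sets `use_bias=False`, and BatchNormalization parameters are ignored; both function names are illustrative only.

```python
def standard_conv_params(c_in, c_out, k=3):
    """Weights in a k x k convolution without bias."""
    return k * k * c_in * c_out

def separable_conv_params(c_in, c_out, k=3, depth_multiplier=1):
    """Weights in a depthwise k x k conv followed by a pointwise 1x1 conv (no bias)."""
    depthwise = k * k * c_in * depth_multiplier
    pointwise = c_in * depth_multiplier * c_out
    return depthwise + pointwise

# Channel pairs taken from block_id=1 (32 -> 64) and block_id=13 (1024 -> 1024)
for c_in, c_out in [(32, 64), (1024, 1024)]:
    std = standard_conv_params(c_in, c_out)
    sep = separable_conv_params(c_in, c_out)
    print(c_in, c_out, std, sep, round(std / sep, 2))
```

For wide layers the saving approaches a factor of nine, which is the 3x3 kernel area.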

#3. facenet
import keras.backend as K
from keras.layers import Activation, Dense, Input, Lambda
from keras.models import Model


def facenet(input_shape, num_classes=None, backbone="mobilenet", mode="train"):
    inputs = Input(shape=input_shape)
    if backbone=="mobilenet":
        model = MobileNet(inputs, dropout_keep_prob=0.4)
    elif backbone=="inception_resnetv1":
        model = InceptionResNetV1(inputs, dropout_keep_prob=0.4)
    else:
        raise ValueError('Unsupported backbone - `{}`, Use mobilenet, inception_resnetv1.'.format(backbone))

    if mode == "train":
        #-----------------------------------------#
        #   Training combines cross-entropy loss
        #   with triplet loss, so the model has a
        #   softmax output and an embedding output
        #-----------------------------------------#
        logits = Dense(num_classes)(model.output)
        softmax = Activation("softmax", name = "Softmax")(logits)

        normalize = Lambda(lambda x: K.l2_normalize(x, axis=1), name="Embedding")(model.output)
        combine_model = Model(inputs, [softmax, normalize])
        return combine_model
    elif mode == "predict":
        #--------------------------------------------#
        #   Prediction only needs the L2-normalized
        #   face feature vector
        #--------------------------------------------#
        x = Lambda(lambda x: K.l2_normalize(x, axis=1), name="Embedding")(model.output)
        model = Model(inputs,x)
        return model
    else:
        raise ValueError('Unsupported mode - `{}`, Use train, predict.'.format(mode))
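In predict mode the network returns L2-normalized embeddings, so two faces can be compared by the distance between their vectors. The NumPy sketch below illustrates that idea with toy 2-D vectors. It assumes Euclidean distance and a threshold of 0.9 (the value of `facenet_threhold` used later in this post); `l2_normalize` and `match_face` are hypothetical helpers, not the project's `compare_faces`, whose implementation is not shown in this section.

```python
import numpy as np

def l2_normalize(x, axis=1, eps=1e-12):
    """Scale each row to unit length (same idea as K.l2_normalize)."""
    squared = np.sum(np.square(x), axis=axis, keepdims=True)
    return x / np.sqrt(np.maximum(squared, eps))

def match_face(known, query, tolerance=0.9):
    """Return (index, distance) of the closest known embedding,
    or (None, distance) when even the closest one is beyond tolerance."""
    distances = np.linalg.norm(known - query, axis=1)
    best = int(np.argmin(distances))
    if distances[best] <= tolerance:
        return best, float(distances[best])
    return None, float(distances[best])

# Toy "database" of two identities
known = l2_normalize(np.array([[1.0, 0.0], [0.0, 1.0]]))
near = l2_normalize(np.array([[0.9, 0.1]]))[0]    # close to identity 0
far = l2_normalize(np.array([[-1.0, 0.0]]))[0]    # far from both identities

print(match_face(known, near))
print(match_face(known, far))
```

Because unit vectors are at most 2 apart, a threshold below 2 always leaves room for an "unknown" result.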

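3.3 Standardizing the network data format

Create retinaface.py and define the Retinaface class, which normalizes the input data before it is passed to the networks.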
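The class listed next relies on a letterbox resize: the image is scaled to fit the network input without distortion and the remainder is padded with gray bars. The project's own `letterbox_image` and `retinaface_correct_boxes` helpers are not shown in this section, so the sketch below only illustrates the geometry under the assumption of centered padding; both function names are hypothetical.

```python
def letterbox_geometry(image_size, target_size):
    """Return (new_w, new_h, pad_x, pad_y) for an undistorted, centered resize.

    image_size and target_size are (width, height) tuples.
    """
    iw, ih = image_size
    w, h = target_size
    scale = min(w / iw, h / ih)              # fit the tighter dimension
    nw, nh = int(iw * scale), int(ih * scale)
    return nw, nh, (w - nw) // 2, (h - nh) // 2

def unletterbox_point(x, y, image_size, target_size):
    """Map a normalized (0..1) point in the padded image back to
    normalized coordinates of the original image."""
    nw, nh, dx, dy = letterbox_geometry(image_size, target_size)
    w, h = target_size
    return (x * w - dx) / nw, (y * h - dy) / nh

# A 1280x720 frame letterboxed into a 640x640 network input
print(letterbox_geometry((1280, 720), (640, 640)))
print(unletterbox_point(0.5, 0.5, (1280, 720), (640, 640)))
```

Predicted boxes and landmarks need the inverse mapping before they are multiplied by the original width and height, which is the role of the box-correction step in the class.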

import time

import cv2
import numpy as np
from keras.applications.imagenet_utils import preprocess_input
from PIL import Image, ImageDraw, ImageFont
from tqdm import tqdm

from nets.facenet import facenet
from nets_retinaface.retinaface import RetinaFace
from utils.anchors import Anchors
from utils.config import cfg_mnet, cfg_re50
from utils.utils import (Alignment_1, BBoxUtility, compare_faces,
                         letterbox_image, retinaface_correct_boxes)


#--------------------------------------#
#   OpenCV cannot draw Chinese text,
#   so the label is drawn with PIL.
#--------------------------------------#
def cv2ImgAddText(img, label, left, top, textColor=(255, 255, 255)):
    img = Image.fromarray(np.uint8(img))
    #---------------#
    #   Load the font
    #---------------#
    font = ImageFont.truetype(font='model_data/simhei.ttf', size=20)

    draw = ImageDraw.Draw(img)
    # str() also covers names loaded from .npy files as NumPy strings
    draw.text((left, top), str(label), fill=textColor, font=font)
    return np.asarray(img)

#--------------------------------------#
#   Make sure each backbone matches its model_path.
#   After switching facenet_model,
#   re-encode the known faces.
#--------------------------------------#
class Retinaface(object):
    _defaults = {
        #----------------------------------------------------------------------#
        #   Path to the trained RetinaFace weights
        #----------------------------------------------------------------------#
        "retinaface_model_path" : 'model_data/retinaface_mobilenet025.h5',
        #----------------------------------------------------------------------#
        #   RetinaFace backbone: mobilenet or resnet50
        #----------------------------------------------------------------------#
        "retinaface_backbone"   : "mobilenet",
        #----------------------------------------------------------------------#
        #   Only predicted boxes scoring above this confidence are kept
        #----------------------------------------------------------------------#
        "confidence"            : 0.5,
        #----------------------------------------------------------------------#
        #   IoU threshold used by non-maximum suppression
        #----------------------------------------------------------------------#
        "nms_iou"               : 0.3,
        #----------------------------------------------------------------------#
        #   Input size used when the image size is constrained (see letterbox_image).
        #   The input size strongly affects FPS; reduce input_shape to speed up detection.
        #   The Keras code has a small bug with the mobilenet backbone: when the input
        #   width or height is not a multiple of 32, the detections are offset.
        #   The resnet50 backbone does not have this problem.
        #   Adjust input_shape to your images, keeping it a multiple of 32, e.g. [640, 640, 3]
        #----------------------------------------------------------------------#
        "retinaface_input_shape": [640, 640, 3],
        #----------------------------------------------------------------------#
        #   Whether to constrain the input size.
        #   When True, the image is letterboxed to retinaface_input_shape;
        #   otherwise the original image is used for prediction.
        #----------------------------------------------------------------------#
        "letterbox_image"       : True,

        #----------------------------------------------------------------------#
        #   Path to the trained FaceNet weights
        #----------------------------------------------------------------------#
        "facenet_model_path"    : 'model_data/facenet_mobilenet.h5',
        #----------------------------------------------------------------------#
        #   FaceNet backbone: mobilenet or inception_resnetv1
        #----------------------------------------------------------------------#
        "facenet_backbone"      : "mobilenet",
        #----------------------------------------------------------------------#
        #   Input image size used by FaceNet
        #----------------------------------------------------------------------#
        "facenet_input_shape"   : [160, 160, 3],
        #----------------------------------------------------------------------#
        #   Face distance threshold used by FaceNet matching
        #----------------------------------------------------------------------#
        "facenet_threhold"      : 0.9,
    }

    @classmethod
    def get_defaults(cls, n):
        if n in cls._defaults:
            return cls._defaults[n]
        else:
            return "Unrecognized attribute name '" + n + "'"

    #---------------------------------------------------#
    #   Initialize RetinaFace + FaceNet
    #---------------------------------------------------#
    def __init__(self, encoding=0, **kwargs):
        self.__dict__.update(self._defaults)
        for name, value in kwargs.items():
            setattr(self, name, value)

        #---------------------------------------------------#
        #   Config for the selected backbone
        #---------------------------------------------------#
        if self.retinaface_backbone == "mobilenet":
            self.cfg = cfg_mnet
        else:
            self.cfg = cfg_re50

        #---------------------------------------------------#
        #   Box utilities and anchor (prior box) generation
        #---------------------------------------------------#
        self.bbox_util = BBoxUtility(nms_thresh=self.nms_iou)
        self.anchors = Anchors(self.cfg, image_size=(self.retinaface_input_shape[0], self.retinaface_input_shape[1])).get_anchors()
        self.generate()

        # Load the stored face encodings; when encoding is truthy the files
        # are about to be (re)generated, so a failed load is not reported.
        try:
            self.known_face_encodings = np.load("model_data/{backbone}_face_encoding.npy".format(backbone=self.facenet_backbone))
            self.known_face_names = np.load("model_data/{backbone}_names.npy".format(backbone=self.facenet_backbone))
        except Exception:
            if not encoding:
                print("Failed to load existing face features. Check that the face feature files have been generated under model_data.")

    #---------------------------------------------------#
    #   Build both networks and load their weights
    #---------------------------------------------------#
    def generate(self):
        self.retinaface = RetinaFace(self.cfg, self.retinaface_backbone)
        self.facenet = facenet(self.facenet_input_shape, backbone=self.facenet_backbone, mode='predict')

        #-------------------------------#
        #   Load the models and weights
        #-------------------------------#
        print('Loading weights into state dict...')
        self.retinaface.load_weights(self.retinaface_model_path, by_name=True)
        self.facenet.load_weights(self.facenet_model_path, by_name=True)
        print('Finished!')

    def encode_face_dataset(self, image_paths, names):
        face_encodings = []
        # Names are collected alongside the encodings so that an image
        # without a detected face does not shift the name/encoding pairing.
        encoded_names = []
        for index, path in enumerate(tqdm(image_paths)):
            #---------------------------------------------------#
            #   Open the face image
            #---------------------------------------------------#
            image = np.array(Image.open(path), np.float32)
            #---------------------------------------------------#
            #   Keep a copy of the input image
            #---------------------------------------------------#
            old_image = image.copy()
            #---------------------------------------------------#
            #   Height and width of the input image
            #---------------------------------------------------#
            im_height, im_width, _ = np.shape(image)
            #---------------------------------------------------#
            #   Scale factors that map normalized predictions
            #   back to pixel coordinates of the original image
            #---------------------------------------------------#
            scale = [im_width, im_height, im_width, im_height]
            scale_for_landmarks = [im_width, im_height] * 5

            #---------------------------------------------------------#
            #   letterbox_image pads the image with gray bars so that
            #   it can be resized without distortion
            #---------------------------------------------------------#
            if self.letterbox_image:
                image = letterbox_image(image,[self.retinaface_input_shape[1], self.retinaface_input_shape[0]])
                anchors = self.anchors
            else:
                anchors = Anchors(self.cfg, image_size=(im_height, im_width)).get_anchors()

            #---------------------------------------------------#
            #   Preprocess (normalize) the image
            #---------------------------------------------------#
            photo = np.expand_dims(preprocess_input(image),0)

            #---------------------------------------------------#
            #   Run the RetinaFace network on the processed image
            #---------------------------------------------------#
            preds = self.retinaface.predict(photo)

            #---------------------------------------------------#
            #   Decode the predictions and apply
            #   non-maximum suppression to get the final boxes
            #---------------------------------------------------#
            results = self.bbox_util.detection_out(preds,anchors,confidence_threshold=self.confidence)

            if len(results)<=0:
                print(names[index], ": no face detected")
                continue

            results = np.array(results)
            #---------------------------------------------------------#
            #   If letterbox_image was used, remove the gray-bar offset
            #---------------------------------------------------------#
            if self.letterbox_image:
                results = retinaface_correct_boxes(results, np.array((self.retinaface_input_shape[0], self.retinaface_input_shape[1])), np.array([im_height, im_width]))

            results[:, :4] = results[:, :4] * scale
            results[:, 5:] = results[:, 5:] * scale_for_landmarks

            #---------------------------------------------------#
            #   Pick the largest face box
            #---------------------------------------------------#
            best_face_location = None
            biggest_area = 0
            for result in results:
                left, top, right, bottom = result[0:4]

                w = right - left
                h = bottom - top
                if w * h > biggest_area:
                    biggest_area = w * h
                    best_face_location = result

            #---------------------------------------------------#
            #   Crop and align the face
            #---------------------------------------------------#
            crop_img = old_image[int(best_face_location[1]):int(best_face_location[3]), int(best_face_location[0]):int(best_face_location[2])]
            landmark = np.reshape(best_face_location[5:], (5,2)) - np.array([int(best_face_location[0]), int(best_face_location[1])])
            crop_img, _ = Alignment_1(crop_img, landmark)

            crop_img = np.array(letterbox_image(np.uint8(crop_img), (self.facenet_input_shape[1],self.facenet_input_shape[0]))) / 255
            crop_img = np.expand_dims(crop_img, 0)
            #---------------------------------------------------#
            #   Compute the 128-dimensional feature vector
            #---------------------------------------------------#
            face_encoding = self.facenet.predict(crop_img)[0]
            face_encodings.append(face_encoding)
            encoded_names.append(names[index])

        np.save("model_data/{backbone}_face_encoding.npy".format(backbone=self.facenet_backbone),face_encodings)
        np.save("model_data/{backbone}_names.npy".format(backbone=self.facenet_backbone),encoded_names)

    #---------------------------------------------------#
    #   Detect and recognize faces in an image
    #---------------------------------------------------#
    def detect_image(self, image):
        #---------------------------------------------------#
        #   Keep a copy of the input image for drawing later
        #---------------------------------------------------#
        old_image = image.copy()
        #---------------------------------------------------#
        #   Convert the image to a NumPy array
        #---------------------------------------------------#
        image = np.array(image, np.float32)

        #---------------------------------------------------#
        #   RetinaFace detection: start
        #---------------------------------------------------#
        #---------------------------------------------------#
        #   Height and width of the input image
        #---------------------------------------------------#
        im_height, im_width, _ = np.shape(image)
        #---------------------------------------------------#
        #   Scale factors that map normalized predictions
        #   back to pixel coordinates of the original image
        #---------------------------------------------------#
        scale = [im_width, im_height, im_width, im_height]
        scale_for_landmarks = [im_width, im_height] * 5

        #---------------------------------------------------------#
        #   letterbox_image pads the image with gray bars so that
        #   it can be resized without distortion
        #---------------------------------------------------------#
        if self.letterbox_image:
            image = letterbox_image(image,[self.retinaface_input_shape[1], self.retinaface_input_shape[0]])
            anchors = self.anchors
        else:
            anchors = Anchors(self.cfg, image_size=(im_height, im_width)).get_anchors()

        #---------------------------------------------------#
        #   Preprocess (normalize) the image
        #---------------------------------------------------#
        photo = np.expand_dims(preprocess_input(image),0)

        #---------------------------------------------------#
        #   Run the RetinaFace network on the processed image
        #---------------------------------------------------#
        preds = self.retinaface.predict(photo)

        #---------------------------------------------------#
        #   Decode the predictions and apply
        #   non-maximum suppression to get the final boxes
        #---------------------------------------------------#
        results = self.bbox_util.detection_out(preds,anchors,confidence_threshold=self.confidence)

        #---------------------------------------------------#
        #   Return the original image if no box was predicted
        #---------------------------------------------------#
        if len(results) <= 0:
            return old_image

        results = np.array(results)
        #---------------------------------------------------------#
        #   If letterbox_image was used, remove the gray-bar offset
        #---------------------------------------------------------#
        if self.letterbox_image:
            results = retinaface_correct_boxes(results, np.array((self.retinaface_input_shape[0], self.retinaface_input_shape[1])), np.array([im_height, im_width]))

        #---------------------------------------------------#
        #   Column 4 is the box confidence
        #   Columns :4 are the box coordinates
        #   Columns 5: are the landmark coordinates
        #---------------------------------------------------#
        results[:, :4] = results[:, :4] * scale
        results[:, 5:] = results[:, 5:] * scale_for_landmarks
        #---------------------------------------------------#
        #   RetinaFace detection: end
        #---------------------------------------------------#

        #-----------------------------------------------#
        #   FaceNet encoding: start
        #-----------------------------------------------#
        face_encodings = []
        for result in results:
            #----------------------#
            #   Crop and align the face
            #----------------------#
            result = np.maximum(result, 0)
            crop_img = np.array(old_image)[int(result[1]):int(result[3]), int(result[0]):int(result[2])]
            landmark = np.reshape(result[5:], (5,2)) - np.array([int(result[0]), int(result[1])])
            crop_img, _ = Alignment_1(crop_img, landmark)

            #----------------------#
            #   Encode the face
            #----------------------#
            #-----------------------------------------------#
            #   Resize without distortion, then normalize
            #-----------------------------------------------#
            crop_img = np.array(letterbox_image(np.uint8(crop_img),(self.facenet_input_shape[1],self.facenet_input_shape[0])))/255
            crop_img = np.expand_dims(crop_img,0)
            #-----------------------------------------------#
            #   Compute the 128-dimensional feature vector
            #-----------------------------------------------#
            face_encoding = self.facenet.predict(crop_img)[0]
            face_encodings.append(face_encoding)
        #-----------------------------------------------#
        #   FaceNet encoding: end
        #-----------------------------------------------#

        #-----------------------------------------------#
        #   Face feature comparison: start
        #-----------------------------------------------#
        face_names = []
        for face_encoding in face_encodings:
            #-----------------------------------------------------#
            #   Compare this face against every face in the
            #   database and compute the distances
            #-----------------------------------------------------#
            matches, face_distances = compare_faces(self.known_face_encodings, face_encoding, tolerance = self.facenet_threhold)
            name = "Unknown"
            #-----------------------------------------------------#
            #   Index of the known face closest to this face
            #-----------------------------------------------------#
            best_match_index = np.argmin(face_distances)
            if matches[best_match_index]:
                name = self.known_face_names[best_match_index]
            face_names.append(name)
        #-----------------------------------------------#
        #   Face feature comparison: end
        #-----------------------------------------------#

        for i, b in enumerate(results):
            text = "{:.4f}".format(b[4])
            b = list(map(int, b))
            #---------------------------------------------------#
            #   b[0]-b[3] are the box coordinates, b[4] is the score
            #---------------------------------------------------#
            cv2.rectangle(old_image, (b[0], b[1]), (b[2], b[3]), (0, 0, 255), 2)
            cx = b[0]
            cy = b[1] + 12
            cv2.putText(old_image, text, (cx, cy),
                        cv2.FONT_HERSHEY_DUPLEX, 0.5, (255, 255, 255))

            #---------------------------------------------------#
            #   b[5]-b[14] are the landmark coordinates
            #---------------------------------------------------#
            cv2.circle(old_image, (b[5], b[6]), 1, (0, 0, 255), 4)
            cv2.circle(old_image, (b[7], b[8]), 1, (0, 255, 255), 4)
            cv2.circle(old_image, (b[9], b[10]), 1, (255, 0, 255), 4)
            cv2.circle(old_image, (b[11], b[12]), 1, (0, 255, 0), 4)
            cv2.circle(old_image, (b[13], b[14]), 1, (255, 0, 0), 4)

            name = face_names[i]
            # font = cv2.FONT_HERSHEY_SIMPLEX
            # cv2.putText(old_image, name, (b[0] , b[3] - 15), font, 0.75, (255, 255, 255), 2)
            #--------------------------------------------------------------#
            #   cv2 cannot draw Chinese text. The call below can, at some
            #   cost in detection speed. If Chinese is not required,
            #   switch to the cv2.putText call above (English only).
            #--------------------------------------------------------------#
            old_image = cv2ImgAddText(old_image, name, b[0]+5 , b[3] - 25)
        return old_image

def get_FPS(self, image, test_interval):

#---------------------------------------------------#

# 对输入图像进行一个备份,后面用于绘图

#---------------------------------------------------#

old_image = image.copy()

#---------------------------------------------------#

# 把图像转换成numpy的形式

#---------------------------------------------------#

image = np.array(image, np.float32)

#---------------------------------------------------#

# Retinaface检测部分-开始

#---------------------------------------------------#

#---------------------------------------------------#

# 计算输入图片的高和宽

#---------------------------------------------------#

im_height, im_width, _ = np.shape(image)

#---------------------------------------------------#

# 计算scale,用于将获得的预测框转换成原图的高宽

#---------------------------------------------------#

scale = [

np.shape(image)[1], np.shape(image)[0], np.shape(image)[1], np.shape(image)[0]

]

scale_for_landmarks = [

np.shape(image)[1], np.shape(image)[0], np.shape(image)[1], np.shape(image)[0],

np.shape(image)[1], np.shape(image)[0], np.shape(image)[1], np.shape(image)[0],

np.shape(image)[1], np.shape(image)[0]

]

#---------------------------------------------------------#

# letterbox_image可以给图像增加灰条,实现不失真的resize

#---------------------------------------------------------#

if self.letterbox_image:

image = letterbox_image(image,[self.retinaface_input_shape[1], self.retinaface_input_shape[0]])

anchors = self.anchors

else:

anchors = Anchors(self.cfg, image_size=(im_height, im_width)).get_anchors()

#---------------------------------------------------#

# 图片预处理,归一化

#---------------------------------------------------#

photo = np.expand_dims(preprocess_input(image),0)

#---------------------------------------------------#

# 将处理完的图片传入Retinaface网络当中进行预测

#---------------------------------------------------#

preds = self.retinaface.predict(photo)

#---------------------------------------------------#

# Retinaface网络的解码,最终我们会获得预测框

# 将预测结果进行解码和非极大抑制

#---------------------------------------------------#

results = self.bbox_util.detection_out(preds,anchors,confidence_threshold=self.confidence)

#---------------------------------------------------#

# 如果没有预测框则返回原图

#---------------------------------------------------#

if len(results) > 0:

results = np.array(results)

#---------------------------------------------------------#

# 如果使用了letterbox_image的话,要把灰条的部分去除掉。

#---------------------------------------------------------#

if self.letterbox_image:

results = retinaface_correct_boxes(results, np.array((self.retinaface_input_shape[0], self.retinaface_input_shape[1])), np.array([im_height, im_width]))

#---------------------------------------------------#

# 4人脸框置信度

# :4是框的坐标

# 5:是人脸关键点的坐标

#---------------------------------------------------#

results[:, :4] = results[:, :4] * scale

results[:, 5:] = results[:, 5:] * scale_for_landmarks

#---------------------------------------------------#

# Retinaface检测部分-结束

#---------------------------------------------------#

#-----------------------------------------------#

# Facenet编码部分-开始

#-----------------------------------------------#

face_encodings = []

for result in results:

#----------------------#

# 图像截取,人脸矫正

#----------------------#

result = np.maximum(result, 0)

crop_img = np.array(old_image)[int(result[1]):int(result[3]), int(result[0]):int(result[2])]

landmark = np.reshape(result[5:], (5,2)) - np.array([int(result[0]), int(result[1])])

crop_img, _ = Alignment_1(crop_img, landmark)

#----------------------#

# 人脸编码

#----------------------#

#-----------------------------------------------#

# 不失真的resize,然后进行归一化

#-----------------------------------------------#

crop_img = np.array(letterbox_image(np.uint8(crop_img),(self.facenet_input_shape[1],self.facenet_input_shape[0])))/255

crop_img = np.expand_dims(crop_img,0)

#-----------------------------------------------#

# Compute a feature vector of length 128 from the image

#-----------------------------------------------#

face_encoding = self.facenet.predict(crop_img)[0]

face_encodings.append(face_encoding)

#-----------------------------------------------#

# Facenet encoding: end

#-----------------------------------------------#

#-----------------------------------------------#

# Face feature comparison: start

#-----------------------------------------------#

face_names = []

for face_encoding in face_encodings:

#-----------------------------------------------------#

# Take one face, compare it with every face in the database, and compute the scores (distances)

#-----------------------------------------------------#

matches, face_distances = compare_faces(self.known_face_encodings, face_encoding, tolerance = self.facenet_threhold)

name = "Unknown"

#-----------------------------------------------------#

# Find the index of the known face closest to the current face

#-----------------------------------------------------#

best_match_index = np.argmin(face_distances)

if matches[best_match_index]:

name = self.known_face_names[best_match_index]

face_names.append(name)

#-----------------------------------------------#

# Face feature comparison: end

#-----------------------------------------------#

t1 = time.time()

for _ in range(test_interval):

#---------------------------------------------------#

# Feed the preprocessed image into the Retinaface network for prediction

#---------------------------------------------------#

preds = self.retinaface.predict(photo)

#---------------------------------------------------#

# Decode the Retinaface network output to obtain the predicted boxes:

# decode the raw predictions, then apply non-maximum suppression

#---------------------------------------------------#

results = self.bbox_util.detection_out(preds,anchors,confidence_threshold=self.confidence)

#---------------------------------------------------#

# Process the detections only if at least one box was predicted

#---------------------------------------------------#

if len(results) > 0:

results = np.array(results)

#---------------------------------------------------------#

# If letterbox_image was used, correct the boxes to remove the effect of the gray padding bars.

#---------------------------------------------------------#

if self.letterbox_image:

results = retinaface_correct_boxes(results, np.array((self.retinaface_input_shape[0], self.retinaface_input_shape[1])), np.array([im_height, im_width]))

#---------------------------------------------------#

# Column 4 is the face-box confidence

# Columns :4 are the box coordinates

# Columns 5: are the facial landmark coordinates

#---------------------------------------------------#

results[:, :4] = results[:, :4] * scale

results[:, 5:] = results[:, 5:] * scale_for_landmarks

#---------------------------------------------------#

# Retinaface detection: end

#---------------------------------------------------#

#-----------------------------------------------#

# Facenet encoding: start

#-----------------------------------------------#

face_encodings = []

for result in results:

#----------------------#

# Crop the face region and align the face

#----------------------#

result = np.maximum(result, 0)

crop_img = np.array(old_image)[int(result[1]):int(result[3]), int(result[0]):int(result[2])]

landmark = np.reshape(result[5:], (5,2)) - np.array([int(result[0]), int(result[1])])

crop_img, _ = Alignment_1(crop_img, landmark)

#----------------------#

# Face encoding

#----------------------#

#-----------------------------------------------#

# Resize without distortion (letterbox), then normalize to [0, 1]

#-----------------------------------------------#

crop_img = np.array(letterbox_image(np.uint8(crop_img),(self.facenet_input_shape[1],self.facenet_input_shape[0])))/255

crop_img = np.expand_dims(crop_img,0)

#-----------------------------------------------#

# Compute a feature vector of length 128 from the image

#-----------------------------------------------#

face_encoding = self.facenet.predict(crop_img)[0]

face_encodings.append(face_encoding)

#-----------------------------------------------#

# Facenet encoding: end

#-----------------------------------------------#

#-----------------------------------------------#

# Face feature comparison: start

#-----------------------------------------------#

face_names = []

for face_encoding in face_encodings:

#-----------------------------------------------------#

# Take one face, compare it with every face in the database, and compute the scores (distances)

#-----------------------------------------------------#

matches, face_distances = compare_faces(self.known_face_encodings, face_encoding, tolerance = self.facenet_threhold)

name = "Unknown"

#-----------------------------------------------------#

# Find the index of the known face closest to the current face

#-----------------------------------------------------#

best_match_index = np.argmin(face_distances)

if matches[best_match_index]:

name = self.known_face_names[best_match_index]

face_names.append(name)

#-----------------------------------------------#

# Face feature comparison: end

#-----------------------------------------------#

t2 = time.time()

tact_time = (t2 - t1) / test_interval

return tact_time
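The feature-comparison step in the code above (a call to compare_faces followed by np.argmin) can be pictured with a small NumPy sketch. This is only an illustration under stated assumptions: the function name match_face is hypothetical, the distance is assumed to be Euclidean, and the default tolerance of 0.9 is an arbitrary placeholder; the project's own compare_faces may differ in all three respects.

```python
import numpy as np

def match_face(known_encodings, known_names, encoding, tolerance=0.9):
    """Return the name of the closest known face, or "Unknown".

    Hypothetical helper: assumes Euclidean distance between embeddings.
    """
    # Guard against an empty database; np.argmin raises on an empty array.
    if len(known_encodings) == 0:
        return "Unknown"
    # Distance from the query embedding to every stored embedding.
    distances = np.linalg.norm(np.asarray(known_encodings) - encoding, axis=1)
    # Index of the nearest stored face.
    best = int(np.argmin(distances))
    # Accept the nearest neighbour only if it is within the tolerance.
    return known_names[best] if distances[best] <= tolerance else "Unknown"

# Toy 2-D "embeddings" purely for illustration (real ones have length 128).
known = np.array([[0.0, 0.0], [1.0, 1.0]])
names = ["alice", "bob"]
print(match_face(known, names, np.array([0.9, 1.1])))  # nearest is "bob"
print(match_face(known, names, np.array([5.0, 5.0])))  # too far from everyone
```

The empty-database guard is an addition of this sketch: calling np.argmin on an empty distance array raises an error, so it is worth running encoding.py (section 3.4) before attempting recognition.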

3.4 Face feature encoding

Create an encoding.py file to encode the faces. Every time the faces in the database (face_dataset) change, rerun encoding.py so that the encoding files under model_data are regenerated.

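import os

from retinaface import Retinaface

'''
After switching the facenet backbone, be sure to redo the face encoding by running encoding.py.
'''

retinaface = Retinaface(1)

# Collect every image in face_dataset together with the person's name.
list_dir = os.listdir("face_dataset")
image_paths = []
names = []
for name in list_dir:
    image_paths.append("face_dataset/"+name)
    # The name is the part of the file name before the first underscore.
    names.append(name.split("_")[0])

retinaface.encode_face_dataset(image_paths,names)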
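Because the script takes the person's name from the text before the first underscore, the file naming in face_dataset matters. The sketch below shows what that rule yields for a few made-up file names; the file names and both helper functions are hypothetical, and the second helper (which also strips the extension) is a suggested variant, not something the original script does.

```python
import os

def label_from_filename(filename):
    """Mirror the rule in encoding.py: text before the first underscore."""
    return filename.split("_")[0]

def label_without_extension(filename):
    """Hypothetical variant: drop the extension first, then split."""
    stem = os.path.splitext(filename)[0]
    return stem.split("_")[0]

# Made-up file names for illustration only.
for f in ["obama_1.jpg", "obama_2.jpg", "alice_1.png", "bob.jpg"]:
    print(f, "->", label_from_filename(f), "|", label_without_extension(f))
```

With the original rule, a file with no underscore, such as bob.jpg, keeps its extension in the label, so naming every image in the form name_index.jpg avoids surprises.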

3.5 Video recognition

Create a predict.py file to run face recognition.

#----------------------------------------------------#

# Single-image prediction, camera detection, and FPS testing

# are combined in one .py file; switch between them by setting mode.

#----------------------------------------------------#

import time

import cv2

import numpy as np

from retinaface import Retinaface

if __name__ == "__main__":

retinaface = Retinaface()

#----------------------------------------------------------------------------------------------------------#

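# mode selects the test mode:

# 'predict' runs prediction on a single image; to modify the prediction process (e.g. saving the image or cropping objects), see the detailed comments below.

# 'video' runs detection on video, from either a camera or a video file; see the comments below.

# 'fps' measures FPS using street.jpg in the img folder; see the comments below.

# 'dir_predict' runs detection on every image in a folder and saves the results; by default it reads the img folder and writes to the img_out folder; see the comments below.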

#----------------------------------------------------------------------------------------------------------#

mode = "video"  # alternative: "predict"

#----------------------------------------------------------------------------------------------------------#

# video_path sets the path of the video; video_path = 0 means use the camera.

# To run detection on a video file, set e.g. video_path = "xxx.mp4", which reads xxx.mp4 from the project root directory.