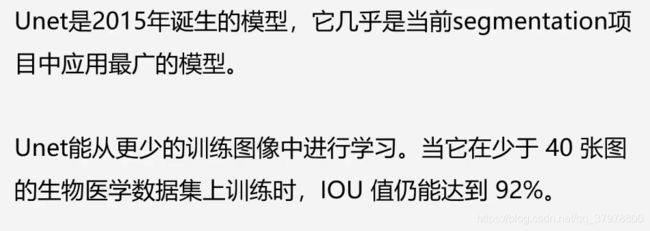

Introduction to Image Semantic Segmentation

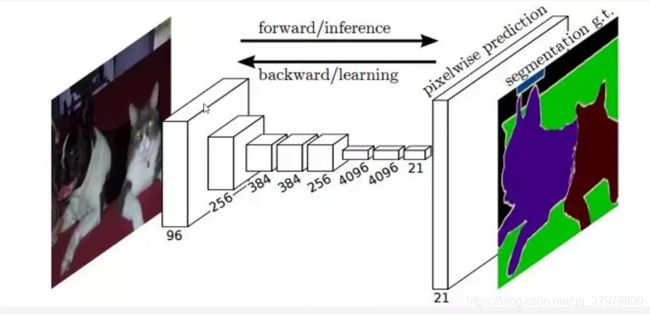

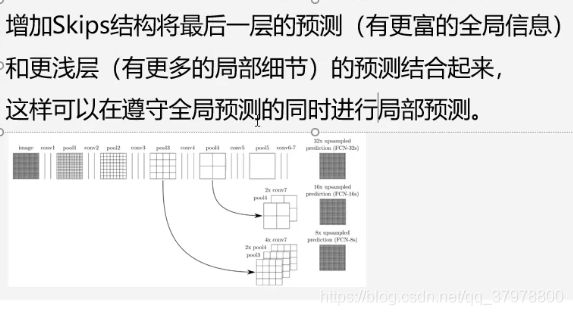

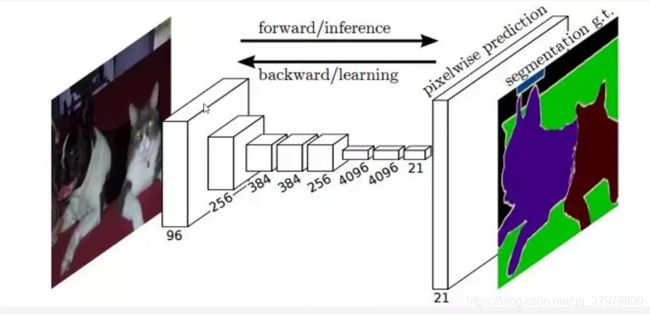
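Semantic segmentation classifies every pixel. The network outputs a score for each class at each pixel, and the predicted mask takes the highest-scoring class per pixel; this is what `tf.argmax(pred_mask, axis=-1)` does at the end of this article. A tiny NumPy illustration with made-up scores for a 2x3 image and 3 classes:

```python
import numpy as np

# Made-up per-pixel class scores, shaped (height, width, num_classes)
# like the softmax output of a segmentation network.
scores = np.array([
    [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.2, 0.2, 0.6]],
    [[0.6, 0.3, 0.1], [0.3, 0.3, 0.4], [0.1, 0.1, 0.8]],
])

# Every pixel gets the class with the highest score, giving a label map
# with the same height and width as the image.
label_map = np.argmax(scores, axis=-1)
print(label_map)
# [[0 1 2]
#  [0 2 2]]
```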

Semantic Segmentation Network Architecture: FCN

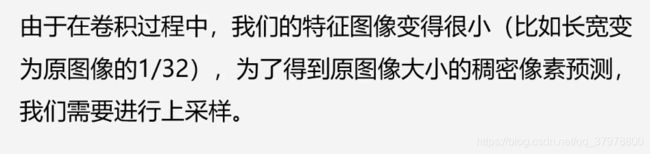

Upsampling
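Upsampling increases the spatial resolution of a feature map. The FCN built in this article learns its upsampling with `Conv2DTranspose` layers; for comparison, the simplest fixed scheme, nearest-neighbour upsampling, just copies each value into a `factor x factor` block. A minimal NumPy sketch (the helper name `upsample_nearest` is illustrative, not from the original code):

```python
import numpy as np

def upsample_nearest(feature_map, factor=2):
    """Nearest-neighbour upsampling: copy every value into a factor x factor block."""
    return np.repeat(np.repeat(feature_map, factor, axis=0), factor, axis=1)

x = np.array([[1, 2],
              [3, 4]])
up = upsample_nearest(x)
print(up)
# [[1 1 2 2]
#  [1 1 2 2]
#  [3 3 4 4]
#  [3 3 4 4]]
```

Unlike a transposed convolution, this has no learnable weights, so it cannot learn how to sharpen object boundaries.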

Code Implementation

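```python
import tensorflow as tf
import matplotlib.pyplot as plt
%matplotlib inline
# (the line above is Jupyter notebook magic; drop it when running a plain .py script)
import numpy as np
import glob
import os

# Let TensorFlow allocate GPU memory on demand instead of reserving all of it up front
gpus = tf.config.experimental.list_physical_devices(device_type='GPU')
for gpu in gpus:
    tf.config.experimental.set_memory_growth(gpu, True)

# tf.test.is_gpu_available() is deprecated; use the physical device list instead
gpu_ok = len(gpus) > 0

print("tf version:", tf.__version__)
print("use GPU", gpu_ok)
```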

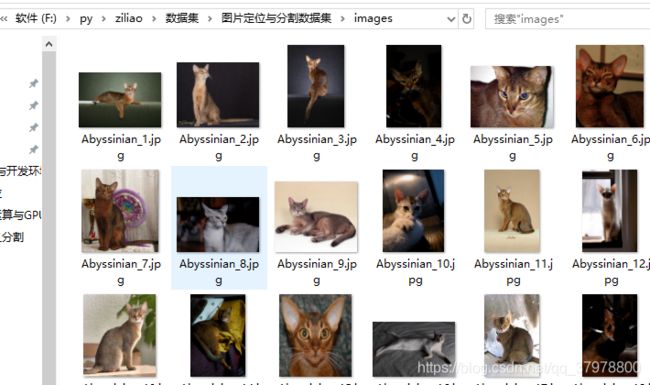

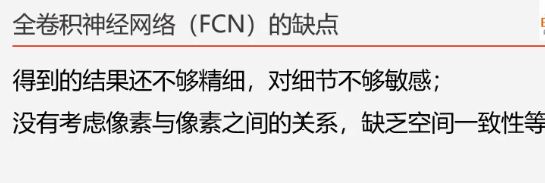

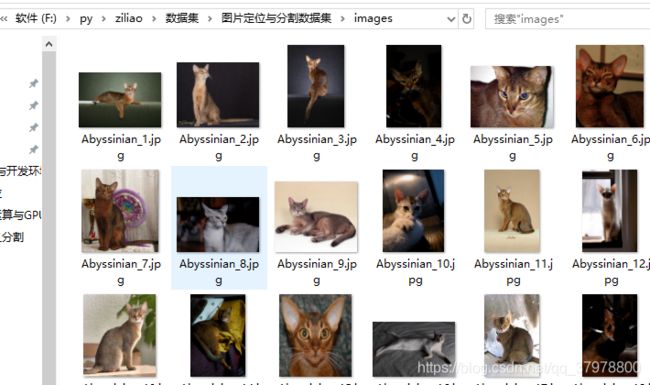

Example

```python
# Show the last few annotation (trimap) file names
print(os.listdir("F:/py/ziliao/数据集/图片定位与分割数据集/annotations/trimaps")[-5:])

# Read one trimap and the photo it annotates
img = tf.io.read_file(r"F:/py/ziliao/数据集/图片定位与分割数据集/annotations/trimaps/Abyssinian_2.png")
img2 = tf.io.read_file(r"F:/py/ziliao/数据集/图片定位与分割数据集/images/Abyssinian_2.jpg")

img = tf.image.decode_png(img)
img2 = tf.image.decode_jpeg(img2)
```

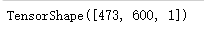

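```python
print(img.shape)        # the trimap decodes with a single channel: (height, width, 1)
img = tf.squeeze(img)   # drop the size-1 channel axis -> (height, width)
print(img.shape)
plt.imshow(img)
print(img.numpy())
```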

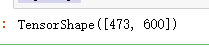

```python
# The trimap contains only a few distinct class values
print(np.unique(img.numpy()))
plt.imshow(img2)
```
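In the Oxford-IIIT Pet trimaps (the dataset these file names come from), each pixel holds 1 (pet), 2 (background) or 3 (border, not classified). The preprocessing later in this article subtracts 1 so the classes become 0, 1 and 2, the form `sparse_categorical_crossentropy` expects. A NumPy sketch on a made-up 3x4 patch:

```python
import numpy as np

# Made-up trimap patch using the dataset's label values 1, 2 and 3
trimap = np.array([[2, 2, 3, 1],
                   [2, 3, 1, 1],
                   [3, 1, 1, 1]], dtype=np.uint8)

# Shift to 0-based class ids, as normal_img does with `input_anno -= 1`
labels = trimap - 1

# Count how many pixels belong to each class
classes, counts = np.unique(labels, return_counts=True)
pixel_counts = dict(zip(classes.tolist(), counts.tolist()))
print(pixel_counts)  # {0: 6, 1: 3, 2: 3}
```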

Complete Code

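```python
# Sort both lists so that images[i] and anno[i] refer to the same pet:
# whether glob returns sorted results depends on the file system.
images = sorted(glob.glob(r"F:/py/ziliao/数据集/图片定位与分割数据集/images/*.jpg"))
anno = sorted(glob.glob(r"F:/py/ziliao/数据集/图片定位与分割数据集/annotations/trimaps/*.png"))

# Shuffle images and annotations with the same permutation so the pairs stay aligned
np.random.seed(2020)
index = np.random.permutation(len(images))
images = np.array(images)[index]
anno = np.array(anno)[index]

dataset = tf.data.Dataset.from_tensor_slices((images, anno))

# Hold out 20% of the samples for testing
test_count = int(len(images) * 0.2)
train_count = len(images) - test_count
```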
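Because photos and trimaps are paired purely by list position, a quick sanity check before building the dataset can catch misaligned pairs. The helper `check_pairs` below is hypothetical (not part of the original code); it compares file names with the folder and extension stripped, and the paths in the usage lines are made up in the dataset's naming scheme:

```python
import os

def check_pairs(image_paths, mask_paths):
    """Return (image, mask) pairs whose file names differ, ignoring folder and extension."""
    if len(image_paths) != len(mask_paths):
        raise ValueError(f"{len(image_paths)} images but {len(mask_paths)} masks")
    mismatched = []
    for img_path, mask_path in zip(image_paths, mask_paths):
        img_stem = os.path.splitext(os.path.basename(img_path))[0]
        mask_stem = os.path.splitext(os.path.basename(mask_path))[0]
        if img_stem != mask_stem:
            mismatched.append((img_path, mask_path))
    return mismatched

# Hypothetical paths following the dataset's naming scheme
image_list = ["images/Bombay_1.jpg", "images/Abyssinian_2.jpg"]
mask_list = ["trimaps/Abyssinian_2.png", "trimaps/Bombay_1.png"]

print(len(check_pairs(image_list, mask_list)))             # 2: unsorted lists are misaligned
print(check_pairs(sorted(image_list), sorted(mask_list)))  # []: sorted lists line up
```

In the full pipeline it could be called as `check_pairs(images, anno)` right after shuffling; an empty list means every photo is paired with its own trimap.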

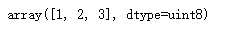

```python
print(test_count, train_count)

# The first test_count samples form the test set, the rest the training set
data_train = dataset.skip(test_count)
data_test = dataset.take(test_count)
```

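```python
def read_jpg(path):
    img = tf.io.read_file(path)
    img = tf.image.decode_jpeg(img, channels=3)
    return img

def read_png(path):
    img = tf.io.read_file(path)
    img = tf.image.decode_png(img, channels=1)
    return img

def normal_img(input_images, input_anno):
    # Scale pixel values from [0, 255] to [-1, 1] (the result must be assigned back)
    input_images = tf.cast(input_images, tf.float32)
    input_images = input_images / 127.5 - 1
    # Trimap labels 1, 2, 3 become integer class ids 0, 1, 2
    input_anno = tf.cast(input_anno, tf.int32) - 1
    return input_images, input_anno

def load_images(input_images_path, input_anno_path):
    input_image = read_jpg(input_images_path)
    input_anno = read_png(input_anno_path)
    input_image = tf.image.resize(input_image, (224, 224))
    # Nearest-neighbour resizing keeps mask values as valid class ids;
    # the default bilinear method would blend neighbouring labels
    input_anno = tf.image.resize(input_anno, (224, 224), method="nearest")
    return normal_img(input_image, input_anno)
```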
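`tf.image.resize` interpolates bilinearly by default. That suits photos but not masks: blending two class ids can produce a value that is a different, unrelated class, or not a class at all. The NumPy sketch below uses a made-up 1-D row of 0-based labels and `np.interp` as a stand-in for bilinear interpolation (the 2-D case behaves the same way along each axis):

```python
import numpy as np

# Made-up row of class ids: 0 = pet, 2 = border
row = np.array([0.0, 2.0, 2.0, 0.0])
src = np.arange(len(row))                # original pixel positions 0..3
dst = np.linspace(0, len(row) - 1, 7)    # 7 sample positions when enlarging the row

linear = np.interp(dst, src, row)        # linear blending between neighbours
nearest = row[np.rint(dst).astype(int)]  # copy the closest original pixel

print(linear)   # [0. 1. 2. 2. 2. 1. 0.]  -> class 1 (background) appears out of nowhere
print(nearest)  # [0. 0. 2. 2. 2. 2. 0.]  -> only labels that were really there
```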

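```python
data_train = data_train.map(load_images,
                            num_parallel_calls=tf.data.experimental.AUTOTUNE)
data_test = data_test.map(load_images,
                          num_parallel_calls=tf.data.experimental.AUTOTUNE)

BATCH_SIZE = 16

# Repeat the training data indefinitely (steps_per_epoch in model.fit bounds each epoch),
# shuffle with a 5912-element buffer, then batch
data_train = data_train.repeat().shuffle(5912).batch(BATCH_SIZE)
data_test = data_test.batch(BATCH_SIZE)
```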

```python
# Show the first photo of one training batch next to its mask
for img, anno in data_train.take(1):
    plt.subplot(1, 2, 1)
    plt.imshow(tf.keras.preprocessing.image.array_to_img(img[0]))
    plt.subplot(1, 2, 2)
    plt.imshow(tf.keras.preprocessing.image.array_to_img(anno[0]))
```

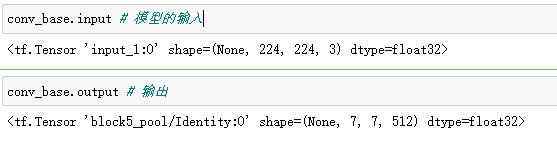

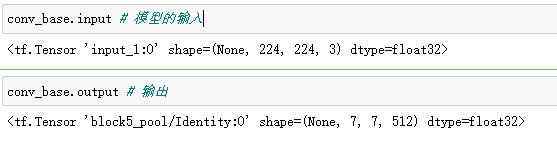

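```python
# VGG16 convolutional base pre-trained on ImageNet, without the fully connected classifier
conv_base = tf.keras.applications.VGG16(weights="imagenet",
                                        input_shape=(224, 224, 3),
                                        include_top=False)
conv_base.summary()

print(conv_base.get_layer("block5_conv3").output)

# A model that outputs the feature map of the last convolution layer
sub_model = tf.keras.models.Model(inputs=conv_base.input,
                                  outputs=conv_base.get_layer("block5_conv3").output)
```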

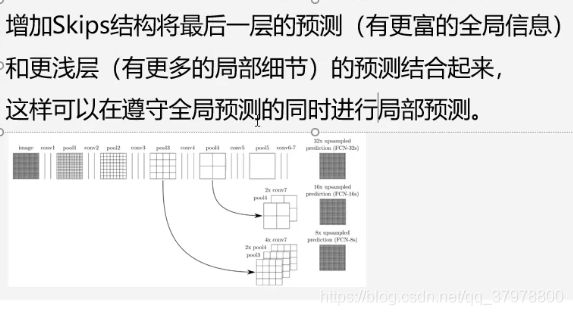
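The skip connections below need feature maps at several resolutions. In VGG16 the 3x3 convolutions use "same" padding and keep the spatial size, while the 2x2 max-pool with stride 2 at the end of each of the five blocks halves it. A small pure-Python sketch of that arithmetic for a 224x224 input (the helper `vgg16_feature_shapes` is illustrative; the layer names and filter counts are those of Keras' VGG16):

```python
# (block number, filters, name of the last conv layer in the block);
# blocks 1-2 have two conv layers, blocks 3-5 have three.
BLOCKS = [
    (1, 64, "block1_conv2"),
    (2, 128, "block2_conv2"),
    (3, 256, "block3_conv3"),
    (4, 512, "block4_conv3"),
    (5, 512, "block5_conv3"),
]

def vgg16_feature_shapes(input_size=224):
    """Return {layer_name: (height, width, channels)} for a square input."""
    shapes = {}
    size = input_size
    for block, filters, last_conv in BLOCKS:
        shapes[last_conv] = (size, size, filters)       # convs keep the size
        size //= 2                                      # 2x2 pool, stride 2
        shapes[f"block{block}_pool"] = (size, size, filters)
    return shapes

shapes = vgg16_feature_shapes(224)
for name in ["block3_conv3", "block4_conv3", "block5_conv3", "block5_pool"]:
    print(name, shapes[name])
# block3_conv3 (56, 56, 256)
# block4_conv3 (28, 28, 512)
# block5_conv3 (14, 14, 512)
# block5_pool (7, 7, 512)
```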

FCN Skip Connections: Getting the Outputs of Intermediate Layers

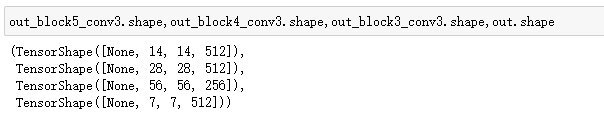

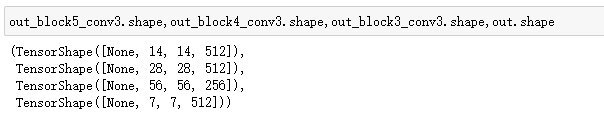

```python
# Feature maps used for the skip connections, plus the final pooled output
layer_names = [
    "block5_conv3",
    "block4_conv3",
    "block3_conv3",
    "block5_pool",
]

layers_output = [conv_base.get_layer(layer_name).output for layer_name in layer_names]

multi_out_model = tf.keras.models.Model(inputs=conv_base.input,
                                        outputs=layers_output)

# Freeze the pre-trained VGG16 weights
multi_out_model.trainable = False

inputs = tf.keras.layers.Input(shape=(224, 224, 3))
out_block5_conv3, out_block4_conv3, out_block3_conv3, out = multi_out_model(inputs)
```

Transposed Convolution (Deconvolution) Upsampling

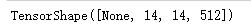
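A transposed convolution upsamples by scattering: each input value multiplies the whole kernel, and that scaled copy is added into the output at a position shifted by `stride`, with overlaps summed. The NumPy sketch below (single channel, no padding, hypothetical helper `conv2d_transpose`) shows only that mechanism. Without padding the output size is `(in - 1) * stride + kernel`; Keras' `Conv2DTranspose(..., padding="same")` adjusts the padding so the output is exactly `in * stride`, which is how each layer below doubles the spatial size (7 to 14, 14 to 28, and so on).

```python
import numpy as np

def conv2d_transpose(x, kernel, stride=2):
    """Single-channel transposed convolution without padding ("valid")."""
    h, w = x.shape
    k = kernel.shape[0]
    out = np.zeros(((h - 1) * stride + k, (w - 1) * stride + k))
    for i in range(h):
        for j in range(w):
            # Scatter the kernel, scaled by this input value, into the output
            out[i * stride:i * stride + k, j * stride:j * stride + k] += x[i, j] * kernel
    return out

x = np.array([[1.0, 2.0],
              [3.0, 4.0]])
kernel = np.ones((3, 3))
y = conv2d_transpose(x, kernel, stride=2)
print(y.shape)   # (5, 5)
print(y[2, 2])   # 10.0: the centre receives contributions from all four inputs
```

In the real layer the kernel values are learned, which is what lets the FCN learn how to upsample.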

```python
# Upsample the block5_pool output: 7x7 -> 14x14 for a 224x224 input
x1 = tf.keras.layers.Conv2DTranspose(512, 3,
                                     strides=2,
                                     padding="same",
                                     activation="relu")(out)
x1 = tf.keras.layers.Conv2D(512, 3,
                            padding="same",
                            activation="relu")(x1)
```

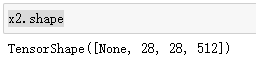

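```python
# Skip connection: add the 14x14x512 block5_conv3 features element-wise
# (a Keras Add layer works on symbolic Keras tensors in every Keras version)
x2 = tf.keras.layers.Add()([x1, out_block5_conv3])
print(x2.shape)
```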

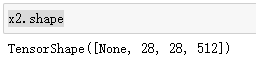

```python
x2 = tf.keras.layers.Conv2DTranspose(512, 3, strides=2, padding="same", activation="relu")(x2)  # 14x14 -> 28x28
x2 = tf.keras.layers.Conv2D(512, 3, padding="same", activation="relu")(x2)
```

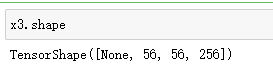

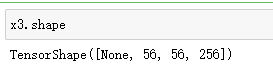

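```python
x3 = tf.keras.layers.Add()([x2, out_block4_conv3])   # skip connection at 28x28x512
print(x3.shape)

x3 = tf.keras.layers.Conv2DTranspose(256, 3, strides=2, padding="same", activation="relu")(x3)  # 28x28 -> 56x56
x3 = tf.keras.layers.Conv2D(256, 3, padding="same", activation="relu")(x3)

x4 = tf.keras.layers.Add()([x3, out_block3_conv3])   # skip connection at 56x56x256
print(x4.shape)
```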

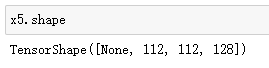

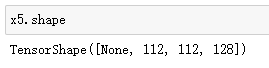

```python
x5 = tf.keras.layers.Conv2DTranspose(128, 3, strides=2, padding="same", activation="relu")(x4)  # 56x56 -> 112x112
x5 = tf.keras.layers.Conv2D(128, 3, padding="same", activation="relu")(x5)
```

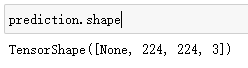

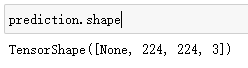

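```python
# Final upsampling to 224x224 with one output channel per class (3 trimap classes);
# softmax turns each pixel's scores into class probabilities
prediction = tf.keras.layers.Conv2DTranspose(3, 3,
                                             strides=2,
                                             padding="same",
                                             activation="softmax")(x5)

model = tf.keras.models.Model(inputs=inputs,
                              outputs=prediction)
```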
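Every `Add` in the decoder only works if the upsampled tensor has exactly the shape of the encoder feature map it is added to. The pure-Python walk below traces the shapes through the decoder for a 224x224 input; the helpers `conv_transpose_same` and `add` are illustrative stand-ins that compute shapes only, and the encoder shapes are those of the VGG16 layers in `layer_names`.

```python
# Encoder shapes (height, width, channels) for a 224x224x3 input
ENCODER = {
    "block3_conv3": (56, 56, 256),
    "block4_conv3": (28, 28, 512),
    "block5_conv3": (14, 14, 512),
    "block5_pool": (7, 7, 512),
}

def conv_transpose_same(shape, filters, stride=2):
    """Output shape of Conv2DTranspose(filters, 3, strides=stride, padding="same")."""
    height, width, _ = shape
    return (height * stride, width * stride, filters)

def add(a, b):
    """An element-wise Add only works when both inputs have the same shape."""
    if a != b:
        raise ValueError(f"cannot add {a} and {b}")
    return a

# The 3x3 "same" Conv2D layers between these steps keep the shape, so they are omitted.
x = conv_transpose_same(ENCODER["block5_pool"], 512)   # 7 -> 14
x = add(x, ENCODER["block5_conv3"])
x = conv_transpose_same(x, 512)                         # 14 -> 28
x = add(x, ENCODER["block4_conv3"])
x = conv_transpose_same(x, 256)                         # 28 -> 56
x = add(x, ENCODER["block3_conv3"])
x = conv_transpose_same(x, 128)                         # 56 -> 112
output_shape = conv_transpose_same(x, 3)                # 112 -> 224, one channel per class
print(output_shape)  # (224, 224, 3)
```

If any filter count were changed so that it no longer matched the encoder channels (for example 256 instead of 512 before the `block4_conv3` skip), the corresponding `add` would fail, just as the Keras `Add` layer would.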

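```python
# The masks hold integer class ids (0, 1, 2), so the sparse variant of cross-entropy is used
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["acc"])
```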
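`sparse_categorical_crossentropy` takes integer class ids rather than one-hot vectors. For segmentation it is applied at every pixel: the loss is the negative log of the probability the softmax assigns to the true class, averaged over all pixels. A NumPy sketch of that definition with made-up values (the helper name is illustrative; Keras' own implementation may differ in details such as numerical clipping and reduction):

```python
import numpy as np

def sparse_categorical_crossentropy(labels, probs, eps=1e-7):
    """Mean of -log(p[true class]) over all pixels.

    labels: integer class ids, shape (H, W)
    probs:  softmax outputs, shape (H, W, num_classes)
    """
    probs = np.clip(probs, eps, 1.0)
    rows, cols = np.indices(labels.shape)
    picked = probs[rows, cols, labels]   # probability given to the true class at each pixel
    return float(np.mean(-np.log(picked)))

labels = np.array([[0, 1],
                   [2, 2]])
probs = np.array([[[0.8, 0.1, 0.1], [0.2, 0.7, 0.1]],
                  [[0.1, 0.1, 0.8], [0.3, 0.3, 0.4]]])

loss = sparse_categorical_crossentropy(labels, probs)
print(round(loss, 4))  # 0.4298
```

The bottom-right pixel, where the true class only gets probability 0.4, contributes most of the loss.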

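```python
# data_train repeats indefinitely, so steps_per_epoch defines the length of an epoch
history = model.fit(data_train,
                    epochs=5,
                    steps_per_epoch=train_count // BATCH_SIZE,
                    validation_data=data_test,
                    validation_steps=test_count // BATCH_SIZE)
```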

```python
loss = history.history["loss"]
val_loss = history.history["val_loss"]

epochs = range(len(loss))  # one x value per trained epoch

plt.figure()
plt.plot(epochs, loss, "r", label="Training loss")
plt.plot(epochs, val_loss, "bo", label="Validation loss")
plt.title("Training and Validation Loss")
plt.xlabel("epochs")
plt.ylabel("Loss Value")
plt.legend()
plt.show()
```

```python
num = 3  # number of test samples to display

for image, mask in data_test.take(1):
    pred_mask = model.predict(image)
    # Pick the most probable class for each pixel and restore the channel axis
    pred_mask = tf.argmax(pred_mask, axis=-1)
    pred_mask = pred_mask[..., tf.newaxis]

    plt.figure(figsize=(10, 10))
    for i in range(num):
        # Row i: input photo, ground-truth mask, predicted mask
        plt.subplot(num, 3, i * 3 + 1)
        plt.imshow(tf.keras.preprocessing.image.array_to_img(image[i]))
        plt.subplot(num, 3, i * 3 + 2)
        plt.imshow(tf.keras.preprocessing.image.array_to_img(mask[i]))
        plt.subplot(num, 3, i * 3 + 3)
        plt.imshow(tf.keras.preprocessing.image.array_to_img(pred_mask[i]))
```

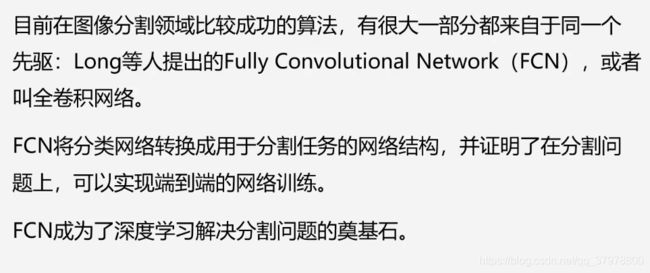
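The model is compiled with `metrics=["acc"]`, which measures pixel accuracy. When one class covers most of the pixels, accuracy can look high even for poor masks, so segmentation results are commonly also reported as intersection over union (IoU) per class and its mean. Keras provides `tf.keras.metrics.MeanIoU` for this; the NumPy sketch below (hypothetical helper `per_class_iou`, made-up masks) just spells out the definition:

```python
import numpy as np

def per_class_iou(y_true, y_pred, num_classes):
    """IoU = |true AND pred| / |true OR pred| per class; NaN if a class is absent from both."""
    ious = []
    for c in range(num_classes):
        true_c = y_true == c
        pred_c = y_pred == c
        union = np.logical_or(true_c, pred_c).sum()
        inter = np.logical_and(true_c, pred_c).sum()
        ious.append(inter / union if union > 0 else float("nan"))
    return np.array(ious)

y_true = np.array([[0, 0, 1],
                   [0, 2, 1]])
y_pred = np.array([[0, 1, 1],
                   [0, 2, 2]])

ious = per_class_iou(y_true, y_pred, num_classes=3)
pixel_acc = float(np.mean(y_true == y_pred))
mean_iou = float(np.nanmean(ious))
print(ious)                 # [0.66666667 0.33333333 0.5       ]
print(round(pixel_acc, 3))  # 0.667
print(mean_iou)             # 0.5
```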
